Bridging the Semantic Gap in vGOAL for Verifiable Autonomous Decision-Making

Yi Yang (a) and Tom Holvoet (b)

Imec-DistriNet, KU Leuven, 3001 Leuven, Belgium

Keywords:

vGOAL, Semantic Gap, Interpreter, Model Checking, Autonomous Decision-Making.

Abstract:

Verifiable autonomous decision-making requires bridging the semantic gap between the execution semantics

of an agent programming language (APL) and the formal model used for verification. In this paper, we address

this challenge for vGOAL, an APL derived from GOAL and designed for automated verification. We make

three contributions. First, we identify the semantic gap in vGOAL: while both its interpreter and its model-

checking framework implement the semantics of vGOAL, they differ in how they define the next program state

for vGOAL. Second, we bridge the semantic gap by developing an improved interpreter for vGOAL that aligns

with the model checker’s formal semantics, thus ensuring correct verification results. Third, we introduce a

stepwise refinement approach to address potential efficiency concerns arising from this semantic alignment.

Through a case study in autonomous logistics, we demonstrate that while our approach introduces additional

verification overhead, the efficient model-checking framework of vGOAL keeps this overhead manageable,

making our solution practical for real-world applications.

1 INTRODUCTION

Verifiable autonomous decision-making requires bridging the semantic gap between the execution semantics of agent programming languages (APLs) and their formal models used for verification. This challenge is particularly evident in the relationship between APL interpreters, which execute programs, and their associated model-checking frameworks, which verify program properties.

Model-checking frameworks for APLs can be broadly categorized into two types. The first type is interpreter-based model-checking frameworks, e.g., the interpreter-based model checker (IMC) for GOAL (Hindriks, 2009), (Jongmans et al., 2010), (Jongmans, 2010). The second type is non-interpreter-based model-checking frameworks, e.g., the model-checking framework for AgentSpeak (Bordini and Hübner, 2005), (Bordini et al., 2003). When a model-checking framework is built on the same interpreter that runs the program, both operate under the same operational semantics, which establishes a solid foundation for semantic equivalence. In this paper, we focus on vGOAL, an APL derived from GOAL with a specific emphasis on automated verification (Yang and Holvoet, 2023a). vGOAL is particularly advantageous due to its efficient model-checking framework, which is capable of validating complex autonomous systems involving multiple agents. For vGOAL, both its interpreter and its model-checking framework implement its operational semantics (Yang and Holvoet, 2023c), (Yang and Holvoet, 2024). However, they differ in their approach to defining the next program state for vGOAL, posing challenges for achieving a correct model for sound model-checking analyses.

(a) https://orcid.org/0000-0001-9565-1559

(b) https://orcid.org/0000-0003-1304-3467

To address this challenge, we make three main contributions. First, we identify and analyze the semantic gap between the vGOAL interpreter and its model-checking framework, providing a clear understanding of the discrepancies in their definitions of the next program state. Second, we develop an improved interpreter for vGOAL that aligns with the formal semantics of the model-checking framework, ensuring semantic consistency and correct verification results. Third, we introduce a stepwise refinement approach to address potential efficiency concerns arising from this semantic alignment. This approach mitigates the computational overhead introduced by the improved interpreter, ensuring that the solution remains practical for real-world applications.


Yang, Y. and Holvoet, T.

Bridging the Semantic Gap in vGOAL for Verifiable Autonomous Decision-Making.

DOI: 10.5220/0013262400003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 1, pages 580-587

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Our approach effectively bridges the semantic gap in vGOAL while maintaining manageable computational costs, thanks to the efficiency of its model-checking framework. We demonstrate the practicality and effectiveness of our solution through a case study in autonomous logistics, involving three autonomous robots. This case study highlights how our approach enables reliable verification without compromising system performance.

The remainder of this paper is organized as follows: Section 2 discusses related work. Section 3 provides the preliminaries for vGOAL. Section 4 details the semantic gap problem in vGOAL. Section 5 presents our approach to bridging the semantic gap in vGOAL through an improved interpreter and discusses how stepwise refinement addresses the potential performance overhead. Section 6 uses an autonomous logistic system to show how stepwise refinement of a vGOAL program improves overall system efficiency. Finally, Section 7 concludes our paper.

2 RELATED WORK

Model checking is widely used in verifying both robotic systems and agent programs (Luckcuck et al., 2019). This section reviews key model-checking approaches for APLs, focusing on the semantic gap between program execution and verification.

Interpreter-based approaches use the APL interpreter directly in the model generation process, potentially offering better semantic alignment between program execution and the generated model for model checking. The MCAPL (Model-checking Agent Programming Languages) framework (Dennis, 2018) represents a significant advancement in this direction, supporting various APLs including Gwendolen, GOAL, SAAPL (Winikoff, 2007), ORWELL (Dastani et al., 2009), AgentSpeak, and 3APL (Hindriks et al., 1999). While MCAPL ensures close semantic alignment through its Agent Infrastructure Layer (AIL), it faces efficiency challenges (Dennis et al., 2012). Attempts to address these limitations through translation to more efficient model checkers like SPIN (Holzmann, 1997) and PRISM (Kwiatkowska et al., 2011) (Dennis et al., 2018) have shown promise but introduce new semantic gap concerns during the translation process.

The interpreter-based model checker (IMC) for GOAL (Jongmans et al., 2010), (Jongmans, 2010) takes a similar approach, using the program interpreter directly for state space generation. While this ensures semantic consistency, its limited state-space reduction capabilities and restriction to single-agent systems constrain its practical application.

Non-interpreter-based approaches typically translate agent programs into established model-checking languages. Early work with AgentSpeak (Bordini et al., 2003) used Promela for verification with SPIN, introducing semantic gaps through translation. Recent work (Yang and Holvoet, 2023b) demonstrates automated model checking for GOAL programs without interpreter dependency, though limited to single-agent systems.

Both approaches face challenges in bridging the

semantic gap between program execution and model

checking in APLs, with interpreter-based approaches

generally offering better semantic alignment.

3 PRELIMINARIES: vGOAL

This section provides the preliminaries needed to understand the semantic gap in vGOAL. We provide a concise overview of a vGOAL program, its operational semantics, and the shared implementation of its interpreter and its model-checking framework. For more details on vGOAL, its interpreter, and its model-checking framework, please refer to (Yang and Holvoet, 2023a), (Yang and Holvoet, 2023c), and (Yang and Holvoet, 2024), respectively.

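Definition 1. (vGOAL Program) A vGOAL program is defined as:

\[
\begin{aligned}
P &::= (\mathit{MAS}, \mathit{RuleSets}, \mathit{Effects}, \mathit{Domain}, \mathit{Analyses}),\\
\mathit{MAS} &::= \mathit{Agent}^{*},\\
\mathit{Agent} &::= (\mathit{id}, \mathit{beliefs}, \mathit{goals}, \mathit{Msgs}),\\
\mathit{Msgs} &::= \mathit{sentMsgs}, \mathit{receivedMsgs},\\
\mathit{RuleSets} &::= \mathit{Knowledge}, \mathit{Constraints}, \mathit{Actions}, \mathit{Sent}, \mathit{Events},\\
\mathit{Analyses} &::= \mathit{Safety}, \mathit{Errors}, \mathit{FatalMsgs}.
\end{aligned}
\]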

A vGOAL program consists of the specification of the multi-agent system (MAS), five rule sets (RuleSets), the effects of actions (Effects), variable domains (Domain), and analyzed properties (Analyses). The syntax of vGOAL is based on first-order logic, with terms, predicates, and quantifiers. It imposes three key restrictions: finite domains for all variables, all variables must be quantified, and no negative recursion within each rule. These ensure a minimal model for each rule set.

Definition 2. (Interpretation) The interpretation of beliefs and goals is defined as follows:

\[
\begin{aligned}
\mathit{beliefs} &::= [b_1, \ldots, b_m]\\
I(\mathit{beliefs}) &::= \{b_1, \ldots, b_m\}\\
\mathit{goals} &::= [[g_{11}, \ldots, g_{1k}], \ldots, [g_n]]\\
I(\mathit{goals}) &::= \{\text{a-goal-}g_{11}, \ldots, \text{a-goal-}g_{1k}\}
\end{aligned}
\]


The interpretation of beliefs is a set of atoms. Notably, the interpretation of goals only pertains to the first goal of the agent. The a-goal prefix marks an atom as a desired belief. Because only the first goal is interpreted, program states that differ only in their later goals share the same interpretation, which is the key to merging the state space of vGOAL programs, especially for agents with multiple goals.
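As a rough illustration of Definition 2, the following Python sketch interprets beliefs as a set of atoms and goals as the a-goal-marked atoms of the first goal only. The string encoding of atoms and the function names are assumptions made for this example; they are not taken from the vGOAL implementation.

```python
from typing import List, Set


def interpret_beliefs(beliefs: List[str]) -> Set[str]:
    """I(beliefs): the belief list read as a set of atoms."""
    return set(beliefs)


def interpret_goals(goals: List[List[str]]) -> Set[str]:
    """I(goals): only the first goal counts; each atom gets the a-goal marker."""
    if not goals:
        return set()
    return {f"a-goal-{atom}" for atom in goals[0]}


# Hypothetical atoms, written as plain strings for illustration only.
goals_a = [["at(loc1)", "holding(wp1)"], ["at(loc0)"]]
goals_b = [["at(loc1)", "holding(wp1)"], ["at(loc2)"], ["at(loc0)"]]

print(interpret_beliefs(["at(loc3)", "at(loc3)", "battery(ok)"]))
# Goal lists that share their first goal have the same interpretation.
print(interpret_goals(goals_a) == interpret_goals(goals_b))
```

Under this reading, two agents whose goal lists differ only after the first goal fall into the same substate, which is one way to see why the interpretation helps merge states.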

Definition 3. (vGOAL States) A vGOAL state is defined as:

\[
\begin{aligned}
\mathit{state} &::= \{subS_1, \ldots, subS_n\},\\
subS_i &::= id_i : (I(\mathit{beliefs}_i), I(\mathit{goals}_i)),
\end{aligned}
\]

where $id_1, \ldots, id_n$ are unique identifiers for each substate.

A vGOAL state is composed of multiple substates.

Each substate represents an agent, identified by a

unique identifier, along with the interpretation of its

beliefs and goals.

Definition 4. (Operational Semantics of vGOAL) The operational semantics of vGOAL are defined by the transition:

\[
\mathit{state} \xrightarrow{\mathit{Act}} \mathit{state}',
\]

where:

• $\mathit{state}, \mathit{state}' ::= subS_1, \ldots, subS_n$, with $n \geq 1$

• $subS_i ::= \{i : (I(\mathit{beliefs}_i), I(\mathit{goals}_i))\}$, for $i \in \{1, \ldots, n\}$

• $\mathit{Act} ::= \mathit{events}, \mathit{actions}$

• $\mathit{events} ::= \{id_1 : event_1, \ldots, id_n : event_n\}$, with $n \geq 1$

• $\mathit{actions} ::= \{id_1 : action_1, \ldots, id_n : action_n\}$, with $n \geq 1$

• $id_i$ are unique identifiers for each involved agent

The operational semantics describe how each state

transition is influenced by actions and events, which

are specific to each agent.

For vGOAL, the interpreter and the model-checking framework share the same implementation of the substate updates outlined in Algorithm 1. This algorithm implements all possible state transitions $subS_i \xrightarrow{id_i : action_i,\; id_i : event_i} subS'_i$, where substates evolve through two mechanisms: actions that modify agent beliefs, and events that can affect both beliefs and goals. This shared implementation is fundamental to ensuring semantic consistency between program execution and the model checked by the model-checking framework, which we will explore in detail in the following sections.
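The shared substate update can be pictured with a small Python sketch of the Expansion function from Algorithm 1. This is only an illustration under stated assumptions: `reason` and `update` are hypothetical stand-ins for the reasoning and update steps, substates are encoded as pairs of frozen sets, and the inter-agent communication step is left out.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

Substate = Tuple[FrozenSet[str], FrozenSet[str]]  # (I(beliefs), I(goals))
SubTransition = Tuple[Substate, FrozenSet[str], str, Substate]


def expansion(
    state: Dict[str, Substate],
    reason: Callable[[str, Substate], Tuple[List[str], FrozenSet[str]]],
    update: Callable[[Substate, FrozenSet[str], str], Substate],
) -> Tuple[Dict[str, List[SubTransition]], Dict[str, List[str]]]:
    """Collect, per agent, every subtransition reachable from its substate."""
    subtransitions = {}
    actions = {}
    for agent_id, sub in state.items():
        agent_actions, events = reason(agent_id, sub)
        subtransitions[agent_id] = [
            (sub, events, action, update(sub, events, action))
            for action in agent_actions
        ]
        actions[agent_id] = agent_actions
    # The communication step of Algorithm 1 is omitted in this sketch.
    return subtransitions, actions


def toy_reason(agent_id, sub):
    """Hypothetical reasoning: propose one 'move' while a goal remains."""
    _, goals = sub
    return (["move"] if goals else []), frozenset()


def toy_update(sub, events, action):
    """Hypothetical update: the action achieves every desired belief."""
    beliefs, goals = sub
    achieved = frozenset(g[len("a-goal-"):] for g in goals)
    return (beliefs | achieved, frozenset())


state = {
    "robot1": (frozenset({"at(loc3)"}), frozenset({"a-goal-at(loc1)"})),
    "robot2": (frozenset({"at(loc4)"}), frozenset()),
}
subtrans, actions = expansion(state, toy_reason, toy_update)
print(actions)
```

Both the interpreter and the model checker would call the same function; what differs is how each of them turns the returned subtransitions into the next program state.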

4 SEMANTIC GAP

In this section, we analyze the fundamental difference between program executions in the vGOAL interpreter and its model-checking framework, illustrating this difference through a representative scenario.

Algorithm 1: Substate Update.

1   Function Expansion(state, P):
2       foreach subS_i ∈ state do
3           actions_i, events_i ← Reason(subS_i, P)
4           subtrans_i ← ∅
5           foreach action_i ∈ actions_i do
6               subS'_i ← Update(subS_i, events_i, action_i)
7               subT ← (subS_i, events_i, action_i, subS'_i)
8               subtrans_i ← subtrans_i ∪ {subT}
9       Communicate among all agents
10      subtransitions ← ⋃_{i=1}^{n} {(id_i : subtrans_i)}
11      actions ← ⋃_{i=1}^{n} {(id_i : actions_i)}
12      return subtransitions, actions

Both implementations follow the same formal transition rule: $\mathit{state} \xrightarrow{\mathit{Act}} \mathit{state}'$. However, the model-checking framework waits for all agents to complete their actions before generating the next program state, while the interpreter updates the state as soon as one agent completes its action. This difference leads to distinct execution behaviors in practice. The interpreter's approach allows for more dynamic and responsive interactions with the environment, as it can adapt to changes as soon as they occur. In contrast, the model-checking framework considers all possible outcomes before transitioning to the next program state. These differences illustrate the trade-offs between real-time adaptability and exhaustive state exploration in multi-agent systems.
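The two rules for when the next program state is generated can be contrasted in a few lines of Python. The function names and the dictionary of completion flags are assumptions made for this sketch; they only mirror the textual description above.

```python
from typing import Dict


def interpreter_ready(done: Dict[str, bool]) -> bool:
    """Original interpreter: move on as soon as one agent has finished."""
    return any(done.values())


def model_checker_ready(done: Dict[str, bool]) -> bool:
    """Model-checking framework: move on only when every agent has finished."""
    return all(done.values())


# Hypothetical snapshot: robot2 has finished its action, robot1 has not.
done = {"robot1": False, "robot2": True}
print(interpreter_ready(done), model_checker_ready(done))
```

For the snapshot above, the interpreter rule already allows a new decision while the model-checking rule does not, which is exactly where the two implementations start to diverge.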

Algorithm 2 describes the stepwise execution of the vGOAL interpreter. It processes one state at a time and interacts with actual agents in the environment. It takes a vGOAL program and real-time agent belief information as inputs. The execution starts from the initial state ($s_0$), which is built from the agents' initial beliefs and goals, and sets the current state $s$ and the execution trace $E$ to $s_0$. It then enters a loop that continues while any agent has remaining goals. The interpreter iteratively evaluates each agent's state to determine possible actions, allowing at most one action per substate. It identifies potential next substates by considering action outcomes and sends commands to agents with actions to perform. During the waiting phase, the interpreter updates each agent's substate using real-time information. The waiting phase ends as soon as one substate completes its action, after which the new state is set and the reasoning cycle begins again. This cycle continues until all agents have achieved their goals.

Algorithm 2: Operational Semantics Implementation in the vGOAL Interpreter.

Input: a vGOAL program: P, real-time agent information: Info
Output: a program execution s_0, (events_1, actions_1), s_1, ..., s_n

    // Initialization
1   s_0 ← {id_i : (I(beliefs_i), I(goals_i)) | i ∈ {1, ..., n}}
2   s ← s_0, E ← s_0
    // Continue until no goals
3   while ∃i. I(goals_i) ≠ ∅ do
4       subtrans, actions ← Expansion(s, P)
5       foreach (id_i : actions_i) ∈ actions do
6           Send to Agent_i: perform action_i.
7       wait ← True, s ← ∅, t ← ∅
8       while wait do
9           foreach subS_i ∈ s do
10              subS'_i ← Info
11              subT ← (subS_i, _, action_i, subS'_i)
12              t ← t ∪ {subT}, s ← s' ∪ {subS'_i}
13          foreach subT ∈ t do
14              if subT ∈ subtrans_i then
15                  wait ← False
16                  events ← events ∪ {id : event_i}
17                  actions ← actions ∪ {id : action_i}
18                  E ← E, (events, actions), s
19  return E

Algorithm 3 outlines the process of generating a transition system from a vGOAL program. The algorithm iteratively explores each state, generating possible transitions and updating the set of states and transitions accordingly. It captures the non-deterministic outcomes of agent actions by calculating all possible transitions from the current state, considering the Cartesian product of subtransitions for each agent. The loop continues until no new states are generated, at which point the algorithm terminates and returns the constructed transition system.
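The Cartesian-product step can be sketched in Python with `itertools.product`. The tuple layout of a subtransition and the string-valued substates below are simplifying assumptions for illustration, not the data structures of the vGOAL model checker.

```python
from itertools import product
from typing import Dict, List, Tuple

# (substate, events, action, next substate), all encoded as plain strings here.
SubTransition = Tuple[str, str, str, str]


def compose(subtrans: Dict[str, List[SubTransition]]):
    """Combine one subtransition per agent into each global transition."""
    agent_ids = sorted(subtrans)
    transitions = []
    for combo in product(*(subtrans[a] for a in agent_ids)):
        source = tuple((a, t[0]) for a, t in zip(agent_ids, combo))
        labels = tuple((a, t[1], t[2]) for a, t in zip(agent_ids, combo))
        target = tuple((a, t[3]) for a, t in zip(agent_ids, combo))
        transitions.append((source, labels, target))
    return transitions


# Hypothetical example: each move either succeeds or ends in a wrong location.
subtrans = {
    "robot1": [("at1", "", "move", "at2"), ("at1", "", "move", "wrong")],
    "robot2": [("at3", "", "move", "at4"), ("at3", "", "move", "wrong")],
}
transitions = compose(subtrans)
print(len(transitions))
```

With two possible outcomes per agent, the product yields one global transition per combination of outcomes, which is why the number of successor states grows multiplicatively with the number of agents.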

To illustrate the semantic gap in vGOAL, we present a representative multi-agent scenario depicted in Figure 1. The scenario involves two robots ($Robot_1$ and $Robot_2$) navigating through intermediate locations to reach a common destination ($Location_5$). $Robot_1$ starts from $Location_1$ and moves via $Location_2$, while $Robot_2$ begins at $Location_3$ and travels via $Location_4$. Access to locations is granted on a first-come-first-served basis, with $Robot_1$ having priority in simultaneous requests.

Algorithm 3: Operational Semantics Implementation in the vGOAL Model-Checking Framework.

Input: a vGOAL program: P
Output: a transition system: (S, T, s_0, F)

    // Initialization
1   s_0 ← {id_i : (I(beliefs_i), I(goals_i)) | i ∈ {1, ..., n}}
2   S ← {s_0}, F ← ∅, T ← ∅
    // Iterative state exploration
3   S_cur ← {s_0}
4   while S_cur ≠ ∅ do
5       S_next ← ∅
6       foreach s ∈ S_cur do
7           subtrans, _ ← Expansion(s, P)
8           transitions ← ∏_{i=1}^{n} subtrans_i, T ← T ∪ transitions
9           foreach (s, _, _, s') ∈ transitions do
10              S_next ← S_next ∪ {s'}
        // Terminal state identification
11      foreach s ∈ S_next do
12          if ∀i. I(goals_i) = ∅ then
13              F ← F ∪ {s}
        // State updates
14      S ← S ∪ S_next, S_next ← S_next \ F, S_cur ← S_next
15  return (S, T, s_0, F)

Figure 1: A Multi-Agent Scenario. [Diagram showing Robot_1 and Robot_2, Location_1 to Location_5, and a Wrong Location, with transitions labelled Initial, Success, and Failure.]

This scenario illustrates a fundamental difference between the interpreter and model-checking implementations. Initially, both implementations generate identical decisions, directing the robots toward their respective intermediate locations. However, their behaviors diverge in subsequent state transitions. The interpreter processes state changes asynchronously, updating the program state whenever either robot completes its movement. Consequently, the robot that reaches its intermediate location first gains access to $Location_5$. In contrast, the model-checking framework processes state changes synchronously, waiting for both robots to complete their movements before transitioning to the next state. Under this implementation, $Robot_1$ consistently secures access to $Location_5$ due to its priority status.
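The divergence in this scenario can be reproduced with a toy Python model. The arrival times are invented for illustration, and the two functions are a deliberate simplification of the first-come-first-served rule with priority for Robot1 described above; neither is part of vGOAL.

```python
from typing import Dict

# Lower value means higher priority when requests arrive in the same state.
PRIORITY = {"Robot1": 0, "Robot2": 1}


def winner_interpreter(arrival: Dict[str, float]) -> str:
    """Asynchronous rule: the earliest arrival requests Location5 first."""
    return min(arrival, key=lambda r: (arrival[r], PRIORITY[r]))


def winner_model_checker(arrival: Dict[str, float]) -> str:
    """Synchronous rule: both requests appear in one state, so priority decides."""
    return min(arrival, key=lambda r: PRIORITY[r])


# Hypothetical timings: Robot2 reaches its intermediate location first.
arrival = {"Robot1": 5.0, "Robot2": 3.0}
print(winner_interpreter(arrival))
print(winner_model_checker(arrival))
```

In this toy run the two rules grant Location5 to different robots, so an execution produced by the original interpreter has no counterpart in the model that was verified.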

5 BRIDGING SEMANTIC GAP

This section presents our approach to bridging the semantic gap in vGOAL. As discussed in Section 4, the vGOAL interpreter and model-checking framework differ in how they define the next program state. The interpreter updates the state as soon as one agent completes its action, while the model-checking framework waits for all agents to complete their actions. To address this gap, we propose two general approaches.

The first approach involves aligning both the vGOAL interpreter and the model-checking framework with the interpreter's definition of the next program state, where decisions are made as soon as any agent completes its action. This approach allows for more dynamic and responsive system behavior but exponentially increases the state space, thus worsening the state-space explosion problem in model checking.

The second approach aligns both components with the model-checking framework's definition, where decisions are made only after all agents complete their actions. Although this approach introduces potential efficiency concerns due to increased waiting times, it maintains a manageable state space and ensures consistency between the interpreter and model-checking framework.

Given that system efficiency can be improved through various software engineering techniques, we adopt the second approach and employ stepwise refinement of the original vGOAL program to mitigate the efficiency concerns. Our solution involves two sequential steps: (1) improving the vGOAL interpreter to ensure that autonomous decision-making occurs only after all agents have completed their current actions, and (2) applying stepwise refinement to the original vGOAL program to mitigate the increased execution time.

Algorithm 4 outlines the implementation of the operational semantics in the improved vGOAL interpreter. Compared with Algorithm 2, Algorithm 4 only revises the condition for generating the next decision for the autonomous system. Specifically, a new variable terminate is introduced in Algorithm 4 (see Line 13), which records whether all agents have completed their actions. This restriction aligns the implementation of the operational semantics in the vGOAL interpreter with the implementation of the operational semantics in the model-checking framework for vGOAL.

Algorithm 4: Operational Semantics Implementation in the Improved vGOAL Interpreter.

Input: a vGOAL program: P, real-time agent information: Info
Output: a program execution s_0, (events_1, actions_1), s_1, ..., s_n

    // Initialization
1   s_0 ← {id_i : (I(beliefs_i), I(goals_i)) | i ∈ {1, ..., n}}
2   s ← s_0, E ← s_0
    // Continue until no goals
3   while ∃i. I(goals_i) ≠ ∅ do
4       subtrans, actions ← Expansion(s, P)
5       foreach (id_i : actions_i) ∈ actions do
6           Send to Agent_i: perform action_i.
7       wait ← True, s ← ∅, t ← ∅
8       while wait do
9           foreach subS_i ∈ s do
10              subS'_i ← Info
11              subT ← (subS_i, _, action_i, subS'_i)
12              t ← t ∪ {subT}, s ← s' ∪ {subS'_i}
13          terminate ← True
14          foreach subT ∈ t do
15              if subT ∈ subtrans_i then
16                  events ← events ∪ {id : event_i}
17                  actions ← actions ∪ {id : action_i}
18                  E ← E, (events, actions), s
19              else
20                  terminate ← False
21          wait ← ¬terminate
22  return E
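The revised waiting condition of Algorithm 4 can be sketched in Python as a loop that keeps polling until every agent has reached one of its expected next substates. The `poll` callback, the string-valued substates, and the `expected` mapping are assumptions made for this example; a real interpreter would read agent information from the environment and would typically pause between polls.

```python
from typing import Callable, Dict, Set


def wait_for_all(
    expected: Dict[str, Set[str]],
    poll: Callable[[], Dict[str, str]],
) -> Dict[str, str]:
    """Return the first observation in which every agent has finished."""
    while True:
        observed = poll()
        terminate = True
        for agent_id, substate in observed.items():
            # An agent still in an unexpected substate keeps the loop waiting.
            if substate not in expected[agent_id]:
                terminate = False
        if terminate:
            return observed


# Hypothetical run: robot2 finishes one poll earlier than robot1.
expected = {"robot1": {"at2"}, "robot2": {"at4"}}
snapshots = iter([
    {"robot1": "at1", "robot2": "at3"},
    {"robot1": "at1", "robot2": "at4"},
    {"robot1": "at2", "robot2": "at4"},
])
result = wait_for_all(expected, lambda: next(snapshots))
print(result)
```

The second snapshot would have ended the waiting phase of Algorithm 2, since one agent had already finished; here the loop continues until the third snapshot, matching the synchronous rule of the model-checking framework.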

We acknowledge that the improved vGOAL interpreter may result in a long waiting time for some agents when each agent needs a different time to complete its action, which increases the overall execution time for the whole autonomous system.

However, this efficiency issue can be addressed by applying stepwise refinement to the vGOAL program. Specifically, we can refine actions that may take a long time into multiple actions that each take a shorter time, which shortens the waiting time and thereby improves system efficiency. For example, consider a robot's movement action from location A to location D, which takes a long time to complete. Instead of having a single "move to location D" action, we can refine it into multiple shorter actions that traverse intermediate locations: "move from A to B", "move from B to C", and "move from C to D". This way, other agents can continue their tasks after each intermediate movement is completed, rather than waiting for the entire movement from A to D to finish. This refinement significantly reduces overall waiting time and improves system efficiency.
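A small Python sketch can make the effect of this refinement concrete. It assumes that every synchronized step lasts as long as its slowest action and that an agent with no remaining action is idle; the agent names, plans, and durations are hypothetical.

```python
from itertools import zip_longest
from typing import Dict, List


def synchronized_makespan(plans: Dict[str, List[float]]) -> float:
    """Sum, over synchronized steps, the duration of the slowest action."""
    steps = zip_longest(*plans.values(), fillvalue=0.0)
    return sum(max(step) for step in steps)


# Before refinement: one long move (3 time units) next to three short tasks.
before = {"robot_a": [3.0], "robot_b": [1.0, 1.0, 1.0]}
# After refinement: the long move becomes three unit-length moves.
after = {"robot_a": [1.0, 1.0, 1.0], "robot_b": [1.0, 1.0, 1.0]}

print(synchronized_makespan(before))
print(synchronized_makespan(after))
```

In the unrefined plan the second robot sits idle for most of the first step, while in the refined plan both robots stay busy in every step, so the same work finishes earlier.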

While stepwise refinement may increase the state space, we hypothesize that this growth can be controlled to remain linear rather than exponential. This hypothesis is based on the observation that the additional states represent intermediate steps of existing actions rather than entirely new behavioral branches. Furthermore, the verification of properties, including safety and liveness, remains unchanged despite the refinement of actions. Future experiments will be conducted to validate this hypothesis and quantify the impact of stepwise refinement on state-space growth.

6 CASE STUDY

This section presents a case study to illustrate how stepwise refinement addresses efficiency issues introduced by the improved vGOAL interpreter. The case study involves three autonomous robots operating in a logistic system. We conduct a comparative analysis to demonstrate the impact of stepwise refinement on system efficiency and empirically evaluate the time costs for model checking. All experiments were conducted on a MacBook Air 2020 with an Apple M1 and 16GB of RAM. The complete vGOAL specifications are available at (Yang, 2024).

The autonomous logistic system is expected to deliver three workpieces from one of the two pick stations to the delivery destination. The autonomous logistic system consists of three autonomous robots: $Robot_1$, $Robot_2$, and $Robot_3$. Each robot can perform four actions: move, pick, drop, and charge. The execution of actions is uncertain: each action can either succeed or fail, leading to the desired state or to a system crash.

Figure 2: Environment before Refinement. [Layout diagram; labels: Destination, Pick 1, Pick 2, Charge 1 to Charge 3, and Location 0, 1, 3, 4, 5, 6.]

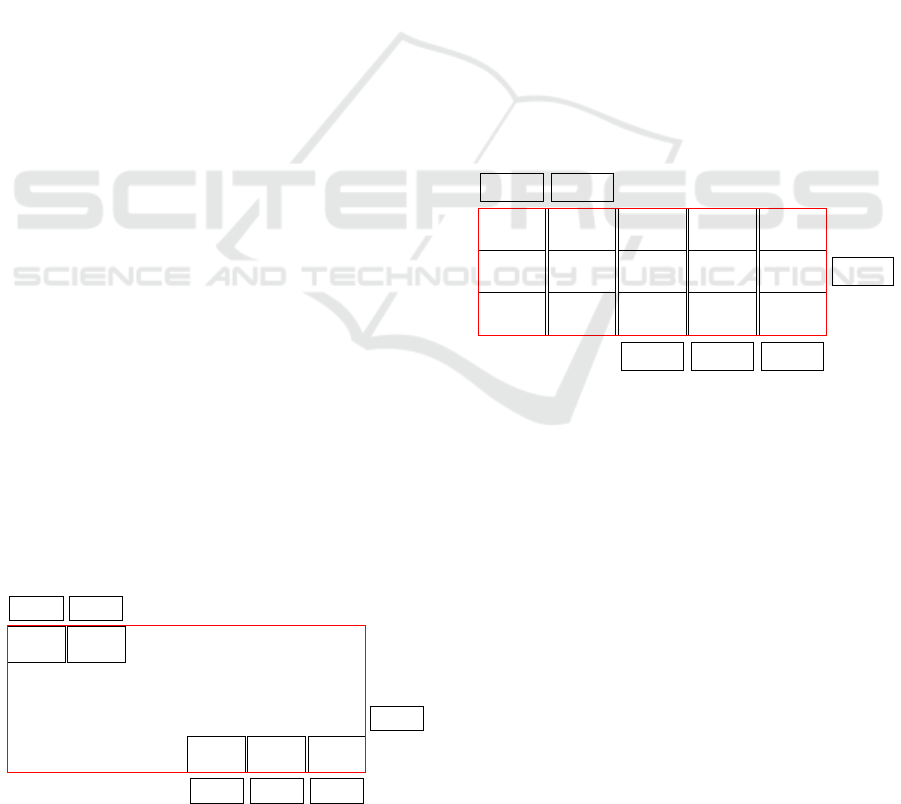

We first introduce the environment in which the autonomous system operates before the stepwise refinement. Figure 2 presents the layout, which is divided into seven areas, from $Location_0$ to $Location_6$: one delivery destination ($Location_0$); two pick stations ($Location_1$, $Location_2$); three charging stations ($Location_3$, $Location_4$, $Location_5$); and the rest area ($Location_6$). Initially, $Robot_1$ is located at $Location_3$, $Robot_2$ at $Location_4$, and $Robot_3$ at $Location_5$. Additionally, at most one robot can occupy each of the locations from $Location_0$ to $Location_6$.

In this autonomous logistic system, each robot can perform only four actions. The execution time of move can vary considerably, and this action requires location permission, whereas the execution times of the other three actions (pick, drop, and charge) are relatively stable, and these actions do not require any critical resources. Hence, move is the key factor affecting the overall execution time of this autonomous system. For example, the vGOAL interpreter initially makes a decision for $Robot_1$ and $Robot_2$: move to $Location_1$ and move to $Location_2$, respectively. $Robot_3$ has to wait for the location permission for $Location_1$ until both $Robot_1$ and $Robot_2$ achieve their goals.

Figure 3: Environment after Refinement. [Layout diagram; labels: Destination, Pick 1, Pick 2, Charge 1 to Charge 3, and Location 0 to Location 14.]

To address the inefficiency, we refined the environment into 15 areas, as shown in Figure 3. Specifically, we keep the original six locations from $Location_0$ to $Location_5$, and we refine one original location ($Location_6$) into nine areas (from $Location_6$ to $Location_{14}$). In the refined environment, the execution time of each move action is relatively stable, and robots do not have to wait for a move command until other robots complete a long journey.

We compare system efficiency before and after the refinement in two settings. Both settings use the improved vGOAL interpreter outlined in Algorithm 4 and the model-checking framework for vGOAL. In the first setting, the vGOAL program describes how the autonomous logistic system works in the environment shown in Figure 2. In the second setting, the vGOAL program describes how the autonomous logistic system works in the refined environment shown in Figure 3.

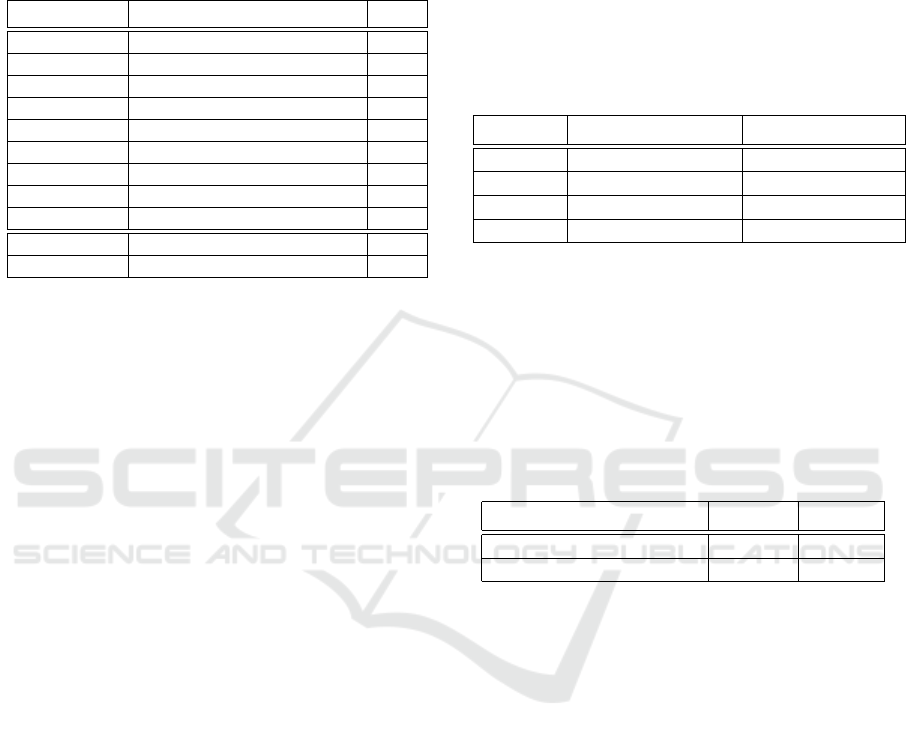

Table 1: Stepwise Refinement in vGOAL Program.

| Original Path | Refined Path | Time |
|---|---|---|
| 3 → 1 | 3 → 8 → 9 → 1 | $3t_1$ |
| 4 → 1 | 4 → 8 → 9 → 1 | $3t_1$ |
| 5 → 1 | 5 → 8 → 9 → 1 | $3t_1$ |
| 3 → 2 | 3 → 10 → 12 → 2 | $3t_1$ |
| 4 → 2 | 4 → 12 → 2 | $2t_1$ |
| 2 → 0 | 2 → 14 → 13 → 11 → 1 → 0 | $5t_1$ |
| 0 → 3 | 0 → 7 → 6 → 8 → 3 | $4t_1$ |
| 0 → 4 | 0 → 7 → 6 → 8 → 4 | $4t_1$ |
| 0 → 5 | 0 → 7 → 6 → 8 → 5 | $4t_1$ |
| 1 → 0 | 1 → 0 | $t_1$ |
| 5 → 2 | 5 → 2 | $t_1$ |

Table 1 illustrates the refinement of movement paths in our case study. Among the four possible robot actions, the move action exhibits significant variability in execution time, quantified using $t_1$. This variability is primarily influenced by the distance a robot must travel, leading to substantial waiting times for other robots and reducing overall system efficiency.

In our analysis, robots can traverse 11 different paths to achieve their delivery objectives. We identified nine paths that need to be refined, each requiring multiple $t_1$ units for a move action, while only two paths were efficient, taking approximately $t_1$. To optimize the system, we concentrated our refinement efforts on these nine longer paths. Our approach involved breaking down each long path into shorter segments, ensuring that each move action would take approximately $t_1$ to complete. This refinement approach reduces robot waiting times and improves overall system performance.

The total system execution time is calculated as the sum of sequential action steps, where multiple robots can operate simultaneously in each step. For example, when all three robots perform a charging action concurrently, the execution time for this step is simply $t_2$. Similarly, when $Robot_1$ moves from $Location_3$ to $Location_1$ (taking $3t_1$) while $Robot_2$ moves from $Location_4$ to $Location_2$ (taking $2t_1$), and $Robot_3$ remains idle, the execution time for this step is $\max(3t_1, 2t_1, 0) = 3t_1$. This calculation method ensures an accurate representation of both sequential and parallel robot operations.
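The calculation described above can be written compactly as follows. The symbols $K$ (number of sequential steps) and $d_{i,k}$ (duration of the action of robot $i$ in step $k$, with $d_{i,k} = 0$ for an idle robot) are notation introduced here for illustration and do not appear in the original formulation.

```latex
\[
T_{\mathrm{total}} = \sum_{k=1}^{K} \max_{i \in \{1,2,3\}} d_{i,k},
\qquad\text{e.g.}\quad
\max(t_2, t_2, t_2) = t_2,
\quad
\max(3t_1, 2t_1, 0) = 3t_1 .
\]
```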

We ran both the original and the refined vGOAL programs within the model-checking framework, generating the same longest execution that can be produced by the vGOAL interpreter. Table 2 shows the details of the execution time before and after refinement. The execution time before refinement includes $26t_1$ for seven unrefined move actions, $t_2$ for one charge action, $2t_3$ for two pick actions, and $3t_4$ for three drop actions. The execution time after refinement includes $17t_1$ for 17 refined move actions, $t_2$ for one charge action, $2t_3$ for two pick actions, and $2t_4$ for two drop actions.

Table 2: Execution Time before and after the Refinement.

| Action | Before Refinement | After Refinement |
|---|---|---|
| move | $26t_1$ | $17t_1$ |
| charge | $t_2$ | $t_2$ |
| pick | $2t_3$ | $2t_3$ |
| drop | $3t_4$ | $2t_4$ |

The refinement of the vGOAL program significantly improved the efficiency of robot actions, particularly in reducing the time spent on move actions. By breaking down longer paths into shorter segments, we achieved a reduction in the total execution time from $26t_1$ to $17t_1$ for move actions. This refinement of move minimizes waiting times for other robots and improves the overall system performance.
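As a quick check of the size of this reduction, the relative saving on move actions follows directly from the two totals in Table 2:

```latex
\[
\frac{26t_1 - 17t_1}{26t_1} = \frac{9}{26} \approx 0.346,
\]
```

that is, the time spent on move actions drops by roughly 34.6%.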

Table 3: Model-Checking Before and After Refinement.

| Indicators | Original | Refined |
|---|---|---|
| Number of States | 1186 | 2635 |
| Model-checking Time (s) | 40.88 | 218.84 |

We recognize that the refinement process expands the state space, which in turn increases the time required for model checking. Table 3 shows the model-checking results before and after the refinement. In this case study, we focused on verifying the safety and liveness properties. As expected, the state space grew, from 1186 to 2635 states (a factor of about 2.2), and the model-checking time increased by about 178 seconds, from 40.88 s to 218.84 s. We consider this trade-off acceptable for two reasons. First, the model-checking time remains within practical limits, adding only about three minutes to the verification process. Second, this one-time verification cost is outweighed by the long-term benefits of improved runtime efficiency in the actual robot system.


7 CONCLUSION AND FUTURE WORK

This paper addresses a critical challenge in verifiable autonomous decision-making: bridging the semantic gap between program execution and model checking in vGOAL. We have made three key contributions: (1) identifying and analyzing the semantic gap in vGOAL, (2) developing an improved interpreter that aligns the implementation of the next program state, and (3) demonstrating how stepwise refinement can effectively address potential efficiency issues.

Our case study of an autonomous logistics system with three mobile robots validates our approach. The results show that while our improved interpreter may introduce some execution overhead, the stepwise refinement successfully reduces the execution time for move actions by 34.6%. Although the refinement process increased the state space and model-checking time, the additional computational cost remained manageable, demonstrating the practicality of our approach.

Future work will focus on two directions: (1) extending experimental validation across a broader range of multi-agent scenarios and real-world environments, and (2) conducting comprehensive scalability analysis with increasing system complexity. These extensions will further validate and enhance our approach to developing reliable autonomous decision-making systems.

ACKNOWLEDGEMENTS

This research is partially funded by the Research

Fund KU Leuven.

REFERENCES

Bordini, R. H., Fisher, M., Pardavila, C., and Wooldridge,

M. (2003). Model checking AgentSpeak. In Pro-

ceedings of the second international joint conference

on Autonomous agents and multiagent systems, pages

409–416.

Bordini, R. H. and Hübner, J. F. (2005). BDI agent programming in AgentSpeak using Jason. In International

workshop on computational logic in multi-agent sys-

tems, pages 143–164. Springer.

Dastani, M., Tinnemeier, N. A., and Meyer, J.-J. C. (2009).

A programming language for normative multi-agent

systems. In Handbook of Research on Multi-Agent

Systems: semantics and dynamics of organizational

models, pages 397–417. IGI Global.

Dennis, L. A. (2018). The MCAPL framework including the

agent infrastructure layer and agent java pathfinder.

The Journal of Open Source Software.

Dennis, L. A., Fisher, M., and Webster, M. (2018). Two-

stage agent program verification. Journal of Logic and

Computation, 28(3):499–523.

Dennis, L. A., Fisher, M., Webster, M. P., and Bordini,

R. H. (2012). Model checking agent programming

languages. Automated software engineering, 19(1):5–

63.

Hindriks, K. V. (2009). Programming rational agents in

GOAL. In Multi-agent programming, pages 119–157.

Springer, Berlin, Heidelberg.

Hindriks, K. V., De Boer, F. S., Van der Hoek, W., and

Meyer, J.-J. C. (1999). Agent programming in 3APL.

Autonomous Agents and Multi-Agent Systems, 2:357–

401.

Holzmann, G. J. (1997). The model checker SPIN. IEEE

Transactions on software engineering, 23(5):279–

295.

Jongmans, S. (2010). Model checking GOAL agents.

Jongmans, S.-S. T., Hindriks, K. V., and Van Riemsdijk,

M. B. (2010). Model checking agent programs by us-

ing the program interpreter. In Computational Logic

in Multi-Agent Systems: 11th International Workshop,

CLIMA XI, Lisbon, Portugal, August 16-17, 2010.

Proceedings 11, pages 219–237. Springer.

Kwiatkowska, M., Norman, G., and Parker, D. (2011).

PRISM 4.0: Verification of probabilistic real-time systems. In Computer Aided Verification: 23rd International Conference, CAV 2011, Snowbird, UT, USA,

July 14-20, 2011. Proceedings 23, pages 585–591.

Springer.

Luckcuck, M., Farrell, M., Dennis, L. A., Dixon, C., and

Fisher, M. (2019). Formal specification and verifica-

tion of autonomous robotic systems: A survey. ACM

Computing Surveys (CSUR), 52(5):1–41.

Winikoff, M. (2007). Implementing commitment-based in-

teractions. In Proceedings of the 6th international

joint conference on Autonomous agents and multia-

gent systems, pages 1–8.

Yang, Y. (2024). Supplementary Documents. https://github.com/yiyangvGOAL/vGOALICAART2025.git.

Yang, Y. and Holvoet, T. (2023a). vGOAL: a GOAL-based

specification language for safe autonomous decision-

making. In Engineering Multi-Agent Systems: 11th

International Workshop, EMAS 2023, London, UK,

29-30 May 2023, Revised Selected Papers.

Yang, Y. and Holvoet, T. (2023b). Making model checking

feasible for GOAL. Annals of Mathematics and Artificial

Intelligence.

Yang, Y. and Holvoet, T. (2023c). Safe autonomous

decision-making with vGOAL. In Advances in

Practical Applications of Agents, Multi-Agent Systems, and Cognitive Mimetics. The PAAMS Collection. Guimarães, Portugal.

Yang, Y. and Holvoet, T. (2024). Model Checking of vGOAL. arXiv, Preprint.
