Recognition of Typical Highway Driving Scenarios for Intelligent Connected Vehicles Based on Long Short-Term Memory Network

Xinjie Feng¹, Shichun Yang¹, Zhaoxia Peng¹, Yuyi Chen¹, Bin Sun¹, Jiayi Lu¹, Rui Wang¹ and Yaoguang Cao²,³

¹ School of Transportation Science and Engineering, Beihang University, Beijing, China

² State Key Lab of Intelligent Transportation System, Beihang University, Beijing, China

³ Hangzhou International Innovation Institute, Beihang University, Hangzhou, China

Keywords:

Typical Driving Scenarios, Scenario Element Extraction, Scenario Recognition, Long Short-Term Memory Network.

Abstract:

In the complex traffic environment where intelligent connected vehicles (ICVs) and traditional vehicles coexist, accurately identifying a vehicle's driving scenario helps ICVs make safer and more efficient decisions, and it also enables performance evaluation across scenarios to further optimize system capabilities. This paper presents a recognition model for typical highway driving scenarios with extensive scenario coverage and high generalizability. The model first categorizes the constituent elements of driving scenarios and extracts the core elements of typical highway scenarios. Then, using a long short-term memory (LSTM) network architecture, it extracts features from the ego vehicle and surrounding vehicles to identify the typical driving scenario in which the ego vehicle is located. The model was tested and validated on the HighD dataset, achieving an overall accuracy of 96.74% for four typical highway scenarios: Lane-change, Car-following, Alongside vehicle cut-in, and Preceding vehicle cut-out. Compared to baseline models, the proposed model demonstrated superior performance.

1 INTRODUCTION

Driving scenario recognition is a fundamental and challenging task in autonomous driving systems, and it is also a key step in understanding traffic environments (Lee et al., 2020). The parameter settings and operational performance of ICVs vary across driving scenarios, so accurate scenario recognition is crucial for improving their operational efficiency. Additionally, the environmental and vehicular information contained in these scenarios supports the development, testing, and performance evaluation of ICVs. Specifically, the role of driving scenario recognition includes:

• Predicting the scenario in which the autonomous vehicle is located, which provides prior knowledge to subsequent decision-making systems, filters perception system data, supplies standardized data to the perception network, and enables targeted parameter training;

• Identifying the different driving scenarios experienced by the autonomous vehicle, thereby enhancing the efficiency and coverage of open-road testing for autonomous driving;

• Evaluating the performance of ICVs in various scenarios through scenario recognition, providing directions for the continuous optimization of autonomous driving systems.

The driving scenarios involved in ICVs typically encompass complex environmental factors, including interactions between dynamic and static traffic participants. A driving scenario refers to the dynamic interaction process between an ICV and surrounding participants over a period of time. For instance, a driving scenario may involve a vehicle rapidly changing lanes from the left lane into the main lane (Lu et al., 2023). Before recognizing this as a Lane-change scenario, the vehicle needs to continuously acquire information about the position, speed, and direction of itself and the surrounding traffic participants. The dynamic interaction among these participants during this period can then be defined as a Lane-change scenario.

Detecting real-world traffic conditions and scenarios is a critical area of research in ICVs (Rösener et al., 2016; Benmimoun and Eckstein, 2014). In many cases, scenario classification for simple situations can be adequately performed using maneuver-based detection, which considers the goals of the ego vehicle and classifies scenarios in a way that is understandable to humans, such as lane changing, turning, or following. For instance, the nuScenes dataset (Caesar et al., 2020) primarily classifies scenarios based on the behavior and status of the ego vehicle, such as "waiting at an intersection," "turning left," "turning right," and "approaching an intersection." However, this way of defining scenarios does not consider the dynamic behavior of other traffic participants and therefore ignores their influence on the ego vehicle. Analysis of the California autonomous vehicle dataset indicates that the behavior of other vehicles has a significant impact on the safety of autonomous vehicles (Ma et al., 2022). Classifying driving scenarios solely by the ego vehicle's maneuvers, without considering environmental factors, results in overly simplistic scenario segmentation and inaccurate classification.

Feng, X., Yang, S., Peng, Z., Chen, Y., Sun, B., Lu, J., Wang, R. and Cao, Y.
Recognition of Typical Highway Driving Scenarios for Intelligent Connected Vehicles Based on Long Short-Term Memory Network.
DOI: 10.5220/0013201700003941
In Proceedings of the 11th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2025), pages 25-33
ISBN: 978-989-758-745-0; ISSN: 2184-495X
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Merely identifying the ego vehicle's maneuvers (such as Lane-change or Turning) does not adequately account for scenario changes caused by other vehicles' behaviors, such as a vehicle cutting in ahead of the ego vehicle, which results in incomplete scenario classification and inaccurate recognition. To address these issues, this paper proposes a typical scenario recognition method based on Long Short-Term Memory (LSTM) networks. The proposed method divides driving scenarios into environmental, roadway, and dynamic driving behavior layers to decouple complex scenarios. By using LSTM networks to capture the interaction characteristics of surrounding vehicles, the method recognizes typical driving behaviors effectively and thereby achieves a comprehensive classification of typical driving scenarios for ICVs.

2 RELATED WORK

2.1 Scenario Definition

Early researchers abstracted the information surrounding a vehicle into a concept called a "scenario" (Ren et al., 2022). Depending on the environmental information and ego vehicle data considered, various definitions of scenarios have been proposed. (Go and Carroll, 2004) described a scenario as a comprehensive representation that includes participants, background information, environmental assumptions, participants' goals or intentions, and a sequence of operations and events; in certain applications, some of these elements may be partially omitted or simplified. (Ulbrich et al., 2015) provided a more general definition, describing a scenario as the temporal evolution of multiple situations, which starts from an initial state and evolves through changes in actions, events, goals, and values. (Zhu et al., 2019) viewed scenarios as a combination of the driving scene and the driving context of autonomous vehicles, proposing that a scenario is a dynamic depiction of the autonomous vehicle and the elements of its driving environment over time, with these elements determined by the autonomous driving function being tested.

In the field of autonomous driving, scholars primarily classify scenario elements based on the six-layer scenario model proposed by the German PEGASUS project (Menzel et al., 2018). The PEGASUS project, initiated by companies and research institutions in the German automotive industry, aims to establish testing standards for autonomous vehicles. From the perspective of deconstructing and reconstructing test scenarios, it proposed the following six layers:

• 1) Road Layer: Describes the road geometry, dimensions, topology, surface quality, and boundary information;

• 2) Traffic Infrastructure Layer: Describes various fixed facilities associated with the road layer, which constrain the behavior of autonomous vehicles and other traffic participants through traffic rules;

• 3) Temporary Operation Layer: Describes temporary sections of roads and related traffic facilities within the scenario;

• 4) Objects Layer: Describes various dynamic, static, and movable traffic participants within the scenario and their interaction behaviors;

• 5) Environmental Layer: Describes the environmental conditions within the scenario, such as weather and lighting;

• 6) Data Communication Layer: Covers V2X information, digital maps, and other related content.

A scenario therefore encompasses the external road, traffic infrastructure, weather conditions, and traffic participants, as well as the driving tasks and status of the vehicle itself. It is an organic combination and dynamic reflection of the driving environment, traffic participants, and driving behavior over time and space. To clearly delineate the scenario elements and simplify the task of scenario recognition, this paper analyzes and identifies typical driving scenarios of ICVs based on this six-layer element framework.

VEHITS 2025 - 11th International Conference on Vehicle Technology and Intelligent Transport Systems


2.2 Scenario Classification

The German PEGASUS project (Menzel et al., 2018) divides scenarios into three levels based on the degree of abstraction of their elements: functional scenarios, logical scenarios, and concrete scenarios. Functional scenarios describe the types of scenario elements and simple parameters, but they are not directly machine-readable. Logical scenarios represent a set of parameterized scenarios, including the types of elements and their value ranges. Concrete scenarios precisely describe a specific situation and the associated chain of events with fixed parameters, and they can be defined in detail using dedicated scenario description languages. Scenario recognition generally focuses on logical scenarios, because they make it possible to extract the parameter ranges of specific scenarios for testing and data mining, and to abstract functional scenarios that define the operational design domain (ODD) of ICV functions such as Lane Keeping Assist (LKA) and Adaptive Cruise Control (ACC). Therefore, this paper focuses on the extraction and recognition of logical scenarios.

In addition to the classifications above, there are also hazardous scenarios, edge scenarios, and accident scenarios. These scenarios cover particular regions of the scenario parameter space, such as extreme, hazardous, and rare parameter values. The typical driving scenario recognition in this study does not differentiate between these parameter ranges; rather, it focuses on recognizing scenarios characterized by typical driving behaviors, such as car-following scenarios.

2.3 Scenario Recognition

The recognition of typical driving scenarios for ICVs primarily focuses on detecting vehicle maneuvers, such as Car-following and Lane-change driving. Some methods extract similar scenarios through clustering to identify representative driving scenarios. For example, (Nitsche et al., 2017) proposed an algorithm to cluster vehicle collision data based on predefined scenario types, while (Kruber et al., 2018) discussed an unsupervised learning algorithm using random forests to group general traffic data. However, existing methods for obtaining scenario types from driving data often rely on manually crafted features, which may cause certain situations to be overlooked or insufficiently recognized. To address this, (Hauer et al., 2020) proposed a method for extracting scenario types from driving data that primarily extracts speed and distance features and uses Principal Component Analysis (PCA) for feature compression.

Neural network methods can also be used to distinguish and recognize driving scenarios. For instance, (Lu et al., 2023) proposed an unsupervised method operating from a bird's-eye perspective to categorize hazardous scenarios in intelligent driving. (Yang et al., 2022; Sun et al., 2020) extracted ego and surrounding vehicle information, along with the spatiotemporal features of the environment, to identify lane-changing behaviors and predict the distribution of Lane-change trajectories. (Epple et al., 2020) handled the temporal and spatial domains separately to extract and recognize vehicle scenario features.

However, methods based on fixed preset rules are typically limited to extracting a single specific scenario, making it difficult to adapt to increasingly complex real-world driving environments. Moreover, identifying only the ego vehicle's maneuvers cannot fully capture the dynamic changes of elements within typical driving scenarios. Therefore, in this study, we divide the scenario elements into different dimensions, focusing on the dynamic driving elements that change significantly over short periods, and develop a typical driving scenario recognition model to improve the comprehensiveness and accuracy of typical scenario recognition.

3 METHODOLOGY

3.1 Task Statement

According to the PEGASUS project, scenarios in intelligent driving systems can be described by six constituent elements, which can be further grouped into three dimensions: dynamic driving scenarios, road scenarios, and natural environment scenarios. Dynamic driving scenarios include behaviors such as car-following, lane-keeping, ego-vehicle lane changes, alongside vehicle cut-in, and preceding vehicle cut-out; the relevant parameters describe the motion state of the ego vehicle as well as its relative position and relative motion with respect to target objects. Road information describes road type, road class, pavement structure, and infrastructure, for example highways, urban expressways, road classifications (e.g., primary and secondary roads), road curvature, lane width, number of lanes, toll stations, main road entrances and exits, and intersections. These details are generally obtainable from map and positioning data. Natural environment information describes weather conditions, including weather type, time of day, and light intensity and direction, which can typically be obtained from meteorological data.

For the task of recognizing typical driving scenarios, dynamic driving scenarios change significantly over short time periods. Therefore, the focus is primarily on identifying the dynamic driving scenario within a given time frame and then overlaying road and weather scenarios to determine the typical driving scenario on the highway. By analyzing the constraint relationships between road scenario types and dynamic driving scenarios, a coupling relationship can be established between the two, which categorizes highway dynamic driving scenarios into 11 types, as shown in Table 1.

Table 1: Dynamic driving scenarios on highways.

| No. | Vehicle behavior | Behavior decomposition | Notes |
|---|---|---|---|
| 1 | Lane-keeping driving | Longitudinal (straight road); longitudinal + lateral (curved road) | Single-vehicle behavior |
| 2 | Car-following driving | Longitudinal (straight road); longitudinal + lateral (curved road) | Two-vehicle interaction |
| 3 | Alongside vehicle cut-in | Longitudinal | Two-vehicle interaction |
| 4 | Preceding vehicle cut-out | Longitudinal | Two-vehicle interaction |
| 5 | Free lane change | Longitudinal (straight road); longitudinal + lateral (curved road) | Single-vehicle behavior |
| 6 | Forced lane change | Longitudinal (straight road); longitudinal + lateral (curved road) | Two-vehicle interaction |
| 7 | Merging from acceleration lane/emergency lane into the mainline | Longitudinal (straight road); longitudinal + lateral (curved road) | Single-vehicle behavior |
| 8 | Merging from the mainline into the deceleration lane | Longitudinal (straight road); longitudinal + lateral (curved road) | Single-vehicle behavior |
| 9 | Lead vehicle emergency braking | Longitudinal | Two-vehicle interaction |
| 10 | Overtaking | Longitudinal (straight road); longitudinal + lateral (curved road) | Two-vehicle interaction |
| 11 | Avoiding speed-conflict vehicles | Longitudinal (straight road); longitudinal + lateral (curved road) | Two-vehicle interaction |

Excluding specific road types, the 11 dynamic driving scenarios above can be grouped into five fundamental driving behaviors: Lane-keeping, Car-following, Alongside vehicle cut-in, Preceding vehicle cut-out, and Lane-change. Among these, lane-keeping has the fewest distinct features, so once the other four scenarios have been identified in a highway driving trajectory, the remaining trajectory sequence is classified as lane-keeping. The typical driving scenario recognition in this paper therefore focuses on four scenarios: Car-following, Alongside vehicle cut-in, Preceding vehicle cut-out, and Lane-change. This approach considers not only scenario changes caused by ego-vehicle maneuvers but also those resulting from the maneuvers of surrounding vehicles.

3.2 Definition of Target Vehicle and Environmental Information

The objective of typical driving scenario recognition is to identify the driving scenarios experienced by the target vehicle based on the historical trajectories of both the target vehicle and its surrounding vehicles, since the target vehicle's scenario is influenced by the surrounding vehicles. As shown in Fig. 1, the area around the target vehicle is divided into eight positions: Left-Preceding (LP), Preceding (P), Right-Preceding (RP), Left-Alongside (LA), Right-Alongside (RA), Left-Following (LF), Following (F), and Right-Following (RF). The spatial information from each of these positions serves as an important feature input to the scenario recognition model and improves the accuracy of scenario recognition.

Figure 1: Vehicles at the eight positions adjacent to the target vehicle.
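The eight-position layout in Fig. 1 can be illustrated with a small geometric sketch. It assumes conventions that the paper does not state: lane indices increase towards the right, x increases in the driving direction, and a neighbour counts as "alongside" when the two vehicle bodies overlap longitudinally. The names `relative_position` and `assign_neighbours` are hypothetical, not the authors' code; datasets such as HighD may already provide these neighbour IDs directly.

```python
from typing import Dict, Optional, Tuple

# Hypothetical conventions (not from the paper): lane indices grow towards the right,
# and x grows in the driving direction.
POSITIONS = ("LP", "P", "RP", "LA", "RA", "LF", "F", "RF")
Vehicle = Tuple[float, int, float]  # (longitudinal position x, lane index, length)


def relative_position(ego: Vehicle, other: Vehicle) -> Optional[str]:
    """Return the slot of `other` relative to `ego`, or None if it is not adjacent."""
    ego_x, ego_lane, ego_len = ego
    x, lane, length = other
    lane_offset = lane - ego_lane
    if lane_offset not in (-1, 0, 1):
        return None  # two or more lanes away: outside the eight positions
    side = {-1: "L", 0: "", 1: "R"}[lane_offset]
    gap = x - ego_x
    if abs(gap) < (ego_len + length) / 2:  # bodies overlap longitudinally
        # Only side lanes can hold an "alongside" vehicle; same-lane overlap is invalid.
        return side + "A" if side else None
    return side + ("P" if gap > 0 else "F")


def assign_neighbours(ego: Vehicle, others: Dict[int, Vehicle]) -> Dict[str, Optional[int]]:
    """Keep the longitudinally nearest vehicle id in each slot (None if the slot is empty)."""
    best: Dict[str, Tuple[float, int]] = {}
    for vid, other in others.items():
        slot = relative_position(ego, other)
        if slot is None:
            continue
        dist = abs(other[0] - ego[0])
        if slot not in best or dist < best[slot][0]:
            best[slot] = (dist, vid)
    return {p: best[p][1] if p in best else None for p in POSITIONS}
```

For example, with the ego vehicle at x = 100 m in lane 2, a car 30 m ahead in lane 2 fills P, and a car beside it in lane 1 fills LA; a second car farther ahead in lane 2 is ignored because only the nearest vehicle per slot is kept.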

As mentioned above, it is necessary to use the spa-

tiotemporal features of the ego vehicle and surround-

ing vehicles as input sequences. We input a time se-

VEHITS 2025 - 11th International Conference on Vehicle Technology and Intelligent Transport Systems

28

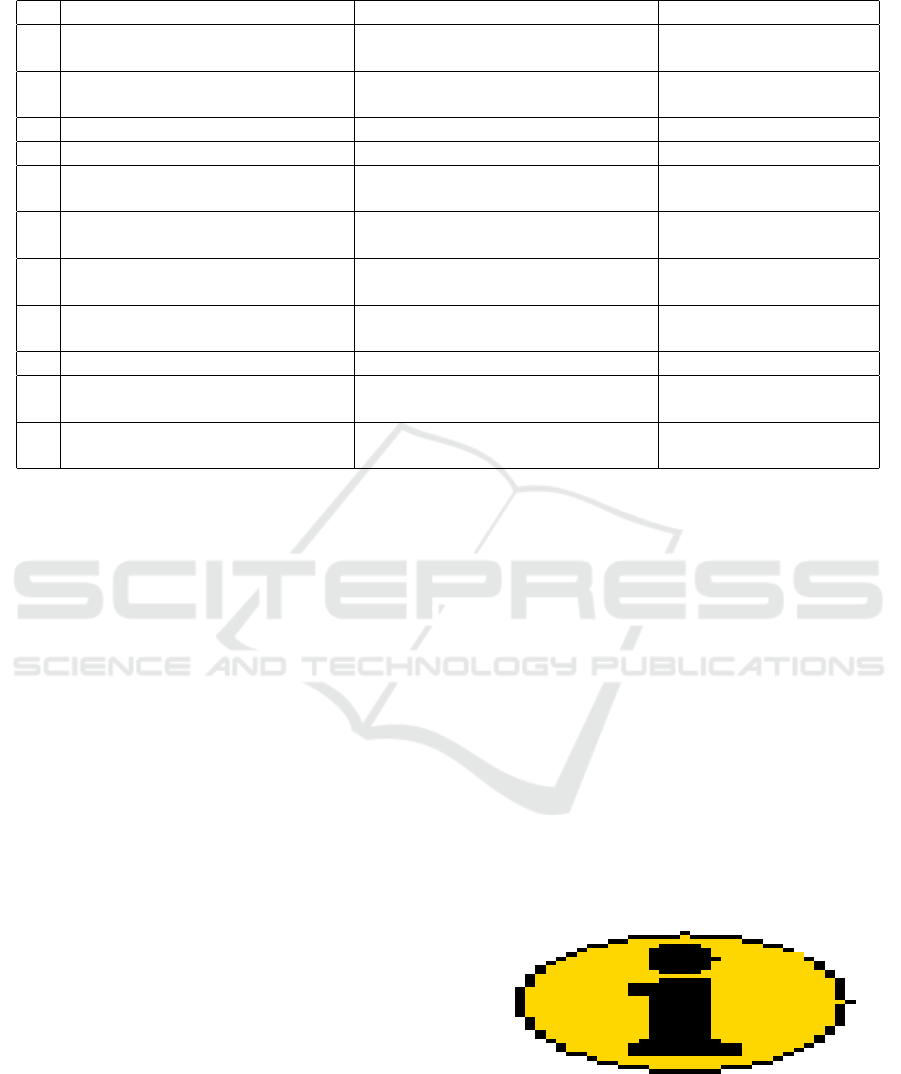

Figure 2: The structure of the proposed dynamic driving scenarios recognition model.

ries consisting of T time steps, as shown in Equation

1.

$$F = (F_1, F_2, \ldots, F_T) \tag{1}$$

where $F_t = (F_t^e, F_t^s)$ represents the trajectory features at time step $t$. $F_t^e$ represents the ego vehicle trajectory features at time step $t$, which include the ego vehicle's coordinates, lateral and longitudinal speeds, and lateral and longitudinal accelerations. $F_t^s$ represents the surrounding vehicle trajectory features at time step $t$, including the coordinates, lateral and longitudinal speeds, and lateral and longitudinal accelerations of the vehicles at the eight surrounding positions. If no vehicle exists at a given surrounding position, the corresponding values are set to 9999 so that all samples can be processed consistently in subsequent steps.
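As a minimal sketch of how one input step $F_t$ could be assembled, the code below concatenates six ego features with six features for each of the eight positions and fills empty positions with the 9999 placeholder. The feature names (`x`, `y`, `vx`, `vy`, `ax`, `ay`) and the slot order are assumptions made for illustration; the paper does not fix them.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Illustrative orderings (assumptions): the paper lists the features but not their order.
SLOTS = ("LP", "P", "RP", "LA", "RA", "LF", "F", "RF")
FEATURES = ("x", "y", "vx", "vy", "ax", "ay")  # coordinates, speeds, accelerations
MISSING = 9999.0  # placeholder for an empty surrounding position (Section 3.2)

State = Dict[str, float]


def vehicle_features(state: State) -> List[float]:
    return [float(state[k]) for k in FEATURES]


def frame_features(ego: State, neighbours: Dict[str, Optional[State]]) -> List[float]:
    """F_t = (F_t^e, F_t^s): 6 ego values, then 6 values for each of the 8 slots."""
    f_e = vehicle_features(ego)
    f_s: List[float] = []
    for slot in SLOTS:
        state = neighbours.get(slot)
        if state is None:
            f_s.extend([MISSING] * len(FEATURES))
        else:
            f_s.extend(vehicle_features(state))
    return f_e + f_s


def sequence_features(frames: Sequence[Tuple[State, Dict[str, Optional[State]]]]) -> List[List[float]]:
    """F = (F_1, ..., F_T) from a list of (ego_state, neighbour_states) pairs."""
    return [frame_features(ego, nbs) for ego, nbs in frames]
```

Each step therefore has 6 + 8 × 6 = 54 values under these assumptions.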

3.3 Dynamic Driving Scenario Recognition Model

In this study, we propose a two-stage LSTM-based dynamic driving scenario recognition model to extract hidden driving features from vehicle trajectories. As shown in Fig. 2, the proposed model consists of a trajectory feature extraction layer and a scenario recognition layer, and it outputs scenario classification scores as the recognition result.

The trajectory feature extraction layer uses a bidirectional Long Short-Term Memory (Bi-LSTM) network combined with an attention mechanism, allowing the model to consider both forward and backward trajectory information while focusing on key features. The LSTM cell comprises three gates: a forget gate, an input gate, and an output gate. The forget gate determines which information in the cell state is discarded or retained; the input gate controls how new information is written into the cell state; and the output gate determines the output of the cell. In trajectory sequences, the output at the current step may depend on both earlier and later information, which is why a Bi-LSTM, combining forward and backward LSTMs, is used to fully exploit the sequential information. The corresponding calculations are shown in Equations 2 and 3.

$$h_t^e = \mathrm{concat}\left(h_t^{e,L}, h_t^{e,R}\right) \tag{2}$$

$$h_t^s = \mathrm{concat}\left(h_t^{s,L}, h_t^{s,R}\right) \tag{3}$$

where $h_t^{L}$ and $h_t^{R}$ represent the hidden states of the forward LSTM and the backward LSTM, respectively, and the concat function concatenates the forward and backward hidden states. $h_t^e$ and $h_t^s$ represent the ego vehicle state information and the surrounding vehicle information, respectively.
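To show what Equations 2 and 3 compute, here is a plain-Python sketch of an LSTM cell with forget, input, and output gates, and of a bidirectional pass that concatenates the forward and backward hidden states at each step. The weights are random and untrained, the class and function names are hypothetical, and the gate equations are the standard LSTM formulation rather than details confirmed by the paper.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LSTMCell:
    """Plain-Python LSTM cell with forget, input and output gates (random weights)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size

        def mat(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # One (W, U, b) triple per gate: forget, input, output, and candidate content.
        self.params = {g: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                           [0.0] * hidden_size) for g in ("f", "i", "o", "g")}

    def _pre(self, gate: str, x: Sequence[float], h: Vector) -> Vector:
        w, u, b = self.params[gate]
        return [a + c + d for a, c, d in zip(matvec(w, x), matvec(u, h), b)]

    def step(self, x: Sequence[float], h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        f = [sigmoid(z) for z in self._pre("f", x, h)]    # forget gate: keep/discard old cell state
        i = [sigmoid(z) for z in self._pre("i", x, h)]    # input gate: how much new content to write
        o = [sigmoid(z) for z in self._pre("o", x, h)]    # output gate: what the cell exposes as h_t
        g = [math.tanh(z) for z in self._pre("g", x, h)]  # candidate cell content
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs: Sequence[Sequence[float]]) -> List[Vector]:
        h, c = [0.0] * self.hidden_size, [0.0] * self.hidden_size
        outputs = []
        for x in xs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


def bilstm(xs: Sequence[Sequence[float]], fwd: LSTMCell, bwd: LSTMCell) -> List[Vector]:
    """h_t = concat(h_t^L, h_t^R): a forward pass plus a backward pass re-aligned to t."""
    h_left = fwd.run(xs)
    h_right = list(reversed(bwd.run(list(reversed(xs)))))
    return [hl + hr for hl, hr in zip(h_left, h_right)]
```

In a PyTorch model such as the one described in Section 4.3, `torch.nn.LSTM(input_size, hidden_size, num_layers=..., bidirectional=True)` returns these concatenated forward and backward states for every time step, so the loop above is only meant to make the computation explicit. Under the paper's description, one such pass would process $F^e$ and another $F^s$.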

The attention weights $\alpha_t$ are obtained using the Softmax function, as shown in Equation 4:

$$\alpha_t = \frac{\exp(e_t)}{\sum_{k=1}^{T} \exp(e_k)} \tag{4}$$

where $e_t$ is the attention score at time step $t$.

The weighted sums of the outputs over all time steps can then be calculated as in Equations 5 and 6:

$$c^e = \sum_{t=1}^{T} \alpha_t^e h_t^e \tag{5}$$

$$c^s = \sum_{t=1}^{T} \alpha_t^e h_t^s \tag{6}$$


where $c^e$ and $c^s$ represent the weighted sums of the hidden states for the ego vehicle and the surrounding vehicles, respectively.
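The attention pooling in Equations 4 to 6 reduces to a softmax over per-step scores followed by a weighted sum. The paper does not say how the scores $e_t$ are computed, so the sketch below assumes a simple dot product with a vector `w` (in a trained model this vector would be learned). Following Equation 6 as written, the ego weights $\alpha_t^e$ are reused to pool the surrounding-vehicle states; separate weights for the surrounding vehicles would be a straightforward variant. Function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    m = max(scores)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_scores(hs: Sequence[Vector], w: Sequence[float]) -> Vector:
    """Assumed scoring e_t = w . h_t (the paper does not specify how e_t is computed)."""
    return [sum(a * b for a, b in zip(w, h)) for h in hs]


def weighted_sum(alphas: Sequence[float], hs: Sequence[Vector]) -> Vector:
    """c = sum_t alpha_t * h_t (Eqs. 5 and 6)."""
    dim = len(hs[0])
    return [sum(a * h[k] for a, h in zip(alphas, hs)) for k in range(dim)]


def attention_pool(h_e: Sequence[Vector], h_s: Sequence[Vector],
                   w: Sequence[float]) -> Tuple[Vector, Vector]:
    """Pool ego and surrounding sequences with the ego weights alpha^e, as in Eq. 6."""
    alpha_e = softmax(attention_scores(h_e, w))  # Eq. 4
    return weighted_sum(alpha_e, h_e), weighted_sum(alpha_e, h_s)
```

With all scores equal, the weights are uniform and each context vector is simply the mean of its hidden states.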

The scenario recognition layer applies dropout, which randomly deactivates hidden units during training, to prevent the model from overfitting. The context vectors of the ego vehicle and the surrounding vehicles are concatenated along the feature dimension to form a comprehensive feature vector, as shown in Equation 7.

$$c = [c^e; c^s] \tag{7}$$

The concatenated feature vector $c$ is fed into a fully connected layer to obtain the raw prediction scores $z$ for each class. Finally, the raw prediction scores $z$ are passed through the Softmax function to obtain the predicted probability of each class, as shown in Equation 8.

$$\hat{y}_i = \frac{\exp(z_i)}{\sum_{k=1}^{K} \exp(z_k)}, \quad i = 1, 2, 3, 4 \tag{8}$$

where $\hat{y}_i$ represents the predicted probability for class $i$, and $K = 4$ is the number of scenario classes.
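Equations 7 and 8 can be sketched as a concatenation, one fully connected layer, and a softmax. The class order in `CLASSES`, the weights, and the function names are illustrative assumptions; dropout is left out because it only acts during training.

```python
import math
from typing import List, Sequence, Tuple

# Assumed class order; the paper does not specify how the four classes are indexed.
CLASSES = ("Lane-change", "Car-following", "Alongside vehicle cut-in", "Preceding vehicle cut-out")


def linear(x: Sequence[float], weight: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """z = W x + b for a fully connected layer (one weight row per class)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def classify(c_e: Sequence[float], c_s: Sequence[float],
             weight: Sequence[Sequence[float]], bias: Sequence[float]) -> Tuple[str, List[float]]:
    c = list(c_e) + list(c_s)                 # Eq. 7: c = [c^e; c^s]
    y_hat = softmax(linear(c, weight, bias))  # Eq. 8
    best = max(range(len(y_hat)), key=y_hat.__getitem__)
    return CLASSES[best], y_hat
```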

4 EXPERIMENT

4.1 Dataset and Data Processing

The HighD dataset is a large-scale naturalistic vehicle trajectory dataset that can be used to validate scenarios on German highways. It contains approximately 110,000 post-processed trajectories of vehicles (including cars and trucks) extracted from drone videos recorded on German highways near Cologne in 2017 and 2018. A total of 60 recordings were made at six different locations, with an average duration of 17 minutes and a total of 16.5 hours, each covering a highway segment approximately 420 meters long, as shown in Fig. 3.

Figure 3: The HighD dataset collection diagram.

Each of the 60 recordings contains three files that record vehicle information for each frame, including vehicle ID, trajectory coordinates, speed, acceleration, car-following data, and vehicle type. Because the data were collected at different highway locations and on different dates, and include various vehicle types, the dataset is diverse and representative of typical vehicle characteristics in daily traffic, making it highly valuable for scenario recognition research on ICVs.
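As a sketch of how per-frame records might be read from a HighD recording, the code below indexes a tracks CSV by vehicle ID and frame and looks up the eight neighbour IDs that correspond to the positions in Fig. 1. The column names and the convention that an ID of 0 means "no vehicle" are assumptions based on our understanding of the HighD file format; check them against the dataset's documentation before use.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable, Optional

# Assumed HighD tracks-file columns for the eight neighbour slots; verify the names
# against the dataset's own format documentation before relying on them.
NEIGHBOUR_COLUMNS = {
    "LP": "leftPrecedingId", "P": "precedingId", "RP": "rightPrecedingId",
    "LA": "leftAlongsideId", "RA": "rightAlongsideId",
    "LF": "leftFollowingId", "F": "followingId", "RF": "rightFollowingId",
}


def load_tracks(lines: Iterable[str]) -> Dict[int, Dict[int, Dict[str, str]]]:
    """Index rows as tracks[vehicle_id][frame] -> row dict (values kept as strings)."""
    tracks: Dict[int, Dict[int, Dict[str, str]]] = defaultdict(dict)
    for row in csv.DictReader(lines):
        tracks[int(row["id"])][int(row["frame"])] = row
    return tracks


def neighbours_at(tracks, vehicle_id: int, frame: int) -> Dict[str, Optional[int]]:
    """Neighbour ids per slot; an id of 0 is treated as 'no vehicle' (assumption)."""
    row = tracks[vehicle_id][frame]
    result: Dict[str, Optional[int]] = {}
    for slot, column in NEIGHBOUR_COLUMNS.items():
        vid = int(row[column])
        result[slot] = vid if vid != 0 else None
    return result


if __name__ == "__main__":
    demo = ("frame,id,laneId,precedingId,followingId,leftPrecedingId,leftAlongsideId,"
            "leftFollowingId,rightPrecedingId,rightAlongsideId,rightFollowingId\n"
            "1,5,3,7,0,0,0,0,0,9,0\n")
    print(neighbours_at(load_tracks(io.StringIO(demo)), 5, 1))
```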

4.2 Sample Sequence Extraction

To extract the motion features of the ego vehicle and surrounding vehicles at each frame for distinguishing different scenarios, this study first extracts Lane-change data sequences based on the ego vehicle's lane-change behavior. For a lane-changing vehicle, the complete trajectory includes the preparation phase, the midpoint of the lane change, and the completion phase. To avoid overlapping scenario trajectories and changes in surrounding vehicle IDs, we extract only the preparation and midpoint phases of the lane change, label them as Lane-change scenarios, and collect the surrounding vehicle trajectories during this phase. The trajectory data extraction method is illustrated in Fig. 4.

Figure 4: Lane-change and Car-Following Trajectory Sequence Extraction.
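The Lane-change extraction step can be sketched as finding the frames where the ego vehicle's lane ID changes and keeping a preparation window that ends at the crossing frame. The paper does not give the preparation length, so the 3-second value (75 frames at HighD's 25 Hz frame rate) and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

FRAME_RATE = 25               # HighD frame rate (25 Hz)
PREP_FRAMES = 3 * FRAME_RATE  # hypothetical preparation length; not given in the paper


def lane_change_frames(lane_ids: Sequence[int]) -> List[int]:
    """Indices k where the lane id differs from the previous frame (the lane crossing)."""
    return [k for k in range(1, len(lane_ids)) if lane_ids[k] != lane_ids[k - 1]]


def lane_change_segments(lane_ids: Sequence[int],
                         prep_frames: int = PREP_FRAMES) -> List[Tuple[int, int]]:
    """Half-open (start, end) ranges covering the preparation phase up to and including
    the crossing frame; a segment never reaches back into the previous one."""
    segments = []
    previous_end = 0
    for k in lane_change_frames(lane_ids):
        start = max(previous_end, k - prep_frames)
        segments.append((start, k + 1))
        previous_end = k + 1
    return segments
```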

Next, we extract Alongside vehicle cut-in and Preceding vehicle cut-out trajectories from the ego vehicle's lane-keeping trajectory. First, we check whether the lead vehicle ID changes. If the ID remains unchanged, the trajectory sequence is labeled as Car-following. If the lead vehicle ID changes, we distinguish between Alongside vehicle cut-in and Preceding vehicle cut-out by comparing the vehicle coordinates before and after the change. To avoid confusion caused by changes in surrounding vehicle IDs, we extract the trajectory only up to the completion of a lane change, which ensures that the vehicle IDs at the surrounding positions remain consistent. The trajectory data extraction method is illustrated in Fig. 5 and Fig. 6.


Figure 5: Car-Following and Alongside vehicle cut-in Trajectory Sequence Extraction.

Figure 6: Car-Following and Preceding vehicle cut-out Trajectory Sequence Extraction.
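The lead-vehicle ID check used to separate Car-following, Alongside vehicle cut-in, and Preceding vehicle cut-out can be sketched as follows. As a simplification, the sketch compares lane IDs instead of raw coordinates and treats an ID of 0 as "no lead vehicle"; both choices, and the function name, are assumptions rather than the authors' implementation.

```python
from typing import Dict, Optional, Sequence

NO_VEHICLE = 0  # assumed "no lead vehicle" marker


def label_lane_keeping(preceding_ids: Sequence[int],
                       lanes: Dict[int, Sequence[int]],
                       ego_lane: int) -> Optional[str]:
    """Label an ego lane-keeping segment from its lead-vehicle id sequence.

    preceding_ids[k] is the lead vehicle id at frame k; lanes[v][k] is vehicle v's
    lane at frame k. Lane ids stand in for the coordinate comparison in the paper.
    """
    if not preceding_ids:
        return None
    change = next((k for k in range(1, len(preceding_ids))
                   if preceding_ids[k] != preceding_ids[k - 1]), None)
    if change is None:
        return "Car-following" if preceding_ids[0] != NO_VEHICLE else None
    old, new = preceding_ids[change - 1], preceding_ids[change]
    if new != NO_VEHICLE and lanes[new][change - 1] != ego_lane:
        return "Alongside vehicle cut-in"   # the new lead was beside us just before the change
    if old != NO_VEHICLE and lanes[old][change] != ego_lane:
        return "Preceding vehicle cut-out"  # the old lead has left the ego lane
    return None  # ambiguous (for example a tracking id switch); left unlabeled
```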

Finally, a sliding window with a length of 1 second is used to extract fixed-length sample sequences from all vehicle trajectory segments. In total, we extracted 11,721 Lane-change scenario trajectories, 7,166 Car-following trajectories, 3,976 Alongside vehicle cut-in trajectories, and 7,669 Preceding vehicle cut-out trajectories. To mitigate the impact of class imbalance on model performance, we applied oversampling to the underrepresented scenarios to balance the number of samples across classes. Since the dataset includes multiple types of data, such as position and speed, with varying scales and units, we applied min-max normalization to all data to reduce interference from differing magnitudes and to facilitate the training and convergence of the neural network. The normalization formula is given in Equation 9:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{9}$$

where $x$ represents the original data, $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the data, respectively, and $x'$ is the normalized data.
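A minimal sketch of the remaining preprocessing steps follows: 1-second sliding windows (25 frames at HighD's 25 Hz), random oversampling of minority classes by duplication, and per-feature min-max normalization as in Equation 9. The stride of one frame, the random seed, and the helper names are assumptions; the paper states only the window length and that oversampling and min-max normalization were applied.

```python
import random
from typing import Dict, List, Sequence, Tuple

FRAME_RATE = 25            # HighD is recorded at 25 Hz
WINDOW = 1 * FRAME_RATE    # 1 s window, as stated in the paper
STRIDE = 1                 # hypothetical step between windows; not stated in the paper


def sliding_windows(segment: Sequence, window: int = WINDOW, stride: int = STRIDE) -> List[Sequence]:
    """All fixed-length windows of a trajectory segment (shorter segments yield none)."""
    return [segment[s:s + window] for s in range(0, len(segment) - window + 1, stride)]


def oversample(samples: List[Tuple[object, str]], seed: int = 0) -> List[Tuple[object, str]]:
    """Random oversampling: duplicate minority-class samples until all classes are equal."""
    rng = random.Random(seed)
    by_class: Dict[str, List[Tuple[object, str]]] = {}
    for sample in samples:
        by_class.setdefault(sample[1], []).append(sample)
    target = max(len(items) for items in by_class.values())
    balanced: List[Tuple[object, str]] = []
    for items in by_class.values():
        balanced.extend(items)
        balanced.extend(rng.choice(items) for _ in range(target - len(items)))
    return balanced


def min_max_fit(rows: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-feature x_min and x_max over the given rows."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]


def min_max_apply(row: Sequence[float], x_min: Sequence[float], x_max: Sequence[float]) -> List[float]:
    """Eq. 9: x' = (x - x_min) / (x_max - x_min); constant features map to 0."""
    return [(x - lo) / (hi - lo) if hi > lo else 0.0 for x, lo, hi in zip(row, x_min, x_max)]
```

One design point worth checking: if the 9999 placeholder for empty positions (Section 3.2) is included when $x_{\min}$ and $x_{\max}$ are computed, it will dominate the range of the affected features and squeeze the real values towards 0.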

4.3 Training Parameters and Evaluation Metrics

In this study, the data were randomly divided into a training set and a test set, with the test set comprising 20% of the data. The proposed scenario recognition network was trained using the PyTorch framework. The model consists of a 4-layer stacked LSTM with 64 neurons in each hidden layer. Cross-entropy loss was used as the loss function, and the entire model was trained with the Adam optimizer.
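The 80/20 random split and the cross-entropy loss can be sketched in plain Python as below; the seed and function names are our own choices. In PyTorch, `torch.nn.CrossEntropyLoss` expects raw scores (the $z$ of Equation 8) and applies log-softmax internally, which is what `cross_entropy` reproduces here for a single sample.

```python
import math
import random
from typing import List, Sequence, Tuple


def train_test_split(samples: Sequence, test_fraction: float = 0.2, seed: int = 0) -> Tuple[list, list]:
    """Random split with the given test share (20% in the paper); the seed is an assumption."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_test = int(round(len(samples) * test_fraction))
    test = [samples[i] for i in idx[:n_test]]
    train = [samples[i] for i in idx[n_test:]]
    return train, test


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """-log softmax(logits)[label], computed with the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def mean_cross_entropy(batch: Sequence[Tuple[Sequence[float], int]]) -> float:
    return sum(cross_entropy(z, y) for z, y in batch) / len(batch)
```

With uniform logits over the four classes, the loss is $\ln 4$ regardless of the label, which is a quick sanity check for an untrained model.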

We employed precision, recall, and F1-score as evaluation metrics to assess the proposed LSTM-based typical driving scenario recognition model.

Precision is the proportion of samples correctly identified as a particular scenario out of all samples identified as belonging to that scenario category, as given in Equation 10.

$$P = \frac{TP}{TP + FP} \tag{10}$$

Recall is the proportion of samples correctly identified as a particular scenario out of all samples that actually belong to that scenario, as shown in Equation 11.

$$R = \frac{TP}{TP + FN} \tag{11}$$

The F1-score is the harmonic mean of Precision and Recall, providing a single measure that balances both, as given in Equation 12:

$$F1 = \frac{2 \cdot P \cdot R}{P + R} \tag{12}$$

In the above formulas, True Positives (TP) is the number of samples correctly identified as belonging to the target class, False Positives (FP) is the number of samples incorrectly identified as belonging to the target class, and False Negatives (FN) is the number of samples that belong to the target class but were incorrectly identified as not belonging to it.
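Equations 10 to 12 can be computed for every class from a confusion matrix such as the one in Fig. 7. The sketch below uses the usual one-vs-rest reading of TP, FP, and FN; the function names are illustrative, and overall accuracy is included because Table 2 reports it.

```python
from typing import Dict, List, Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """cm[i][j] counts samples of true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm: List[List[int]]) -> List[Dict[str, float]]:
    """Precision, recall and F1 per class (Eqs. 10 to 12); 0.0 where a denominator is 0."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted as another class
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        results.append({"precision": p, "recall": r, "f1": f1})
    return results


def accuracy(cm: List[List[int]]) -> float:
    total = sum(map(sum, cm))
    return sum(cm[k][k] for k in range(len(cm))) / total
```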

4.4 Result

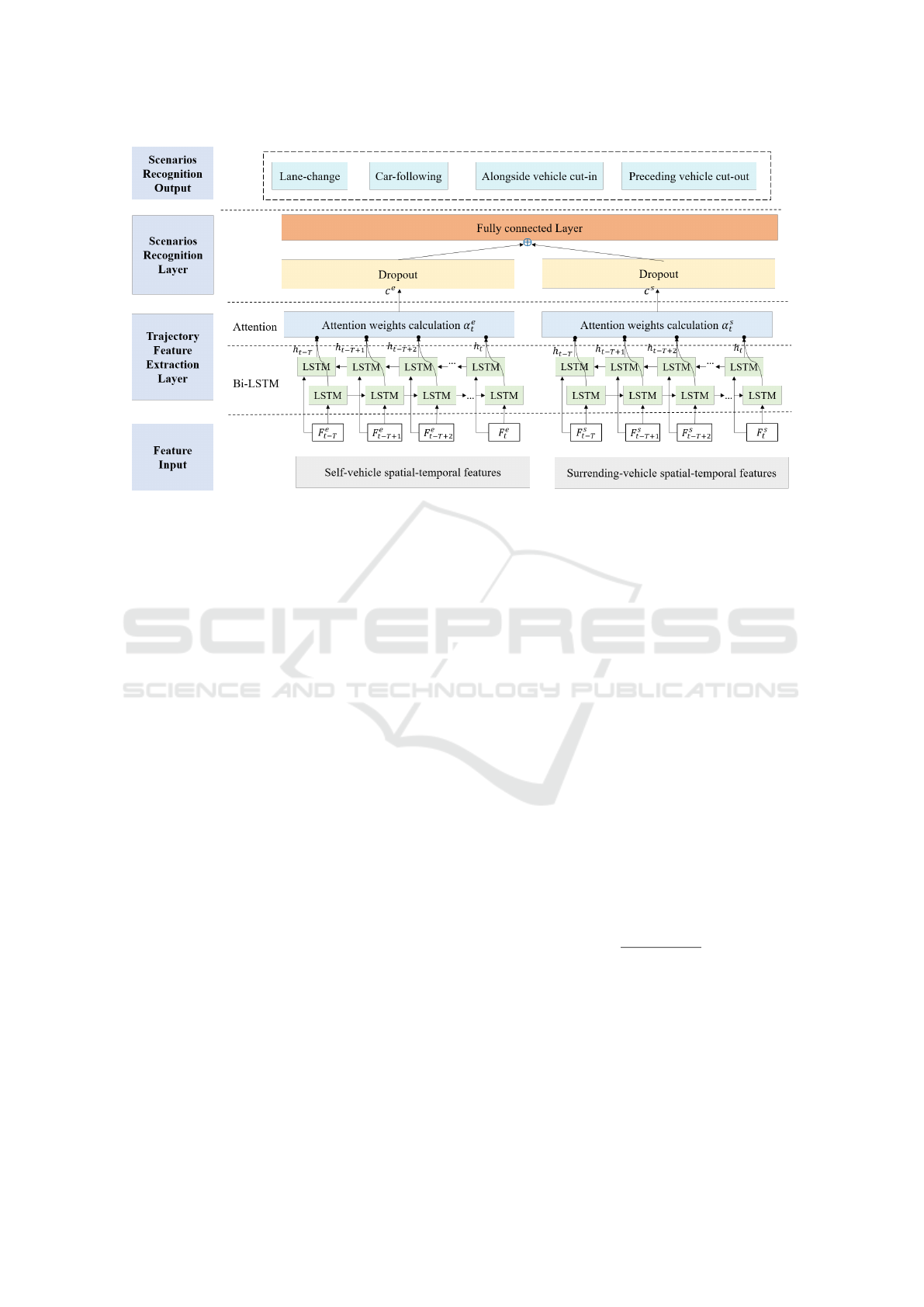

The model was trained for 300 epochs, during which the training and test losses decreased gradually. The validation set was used as the test set, and the model's predictions on it were compared with the actual labels to generate a confusion matrix, which describes the correspondence between the classifier's predictions and the actual labels, as shown in Fig. 7. The precision and recall of every class exceeded 95%, indicating that the model can accurately recognize vehicle driving scenarios.

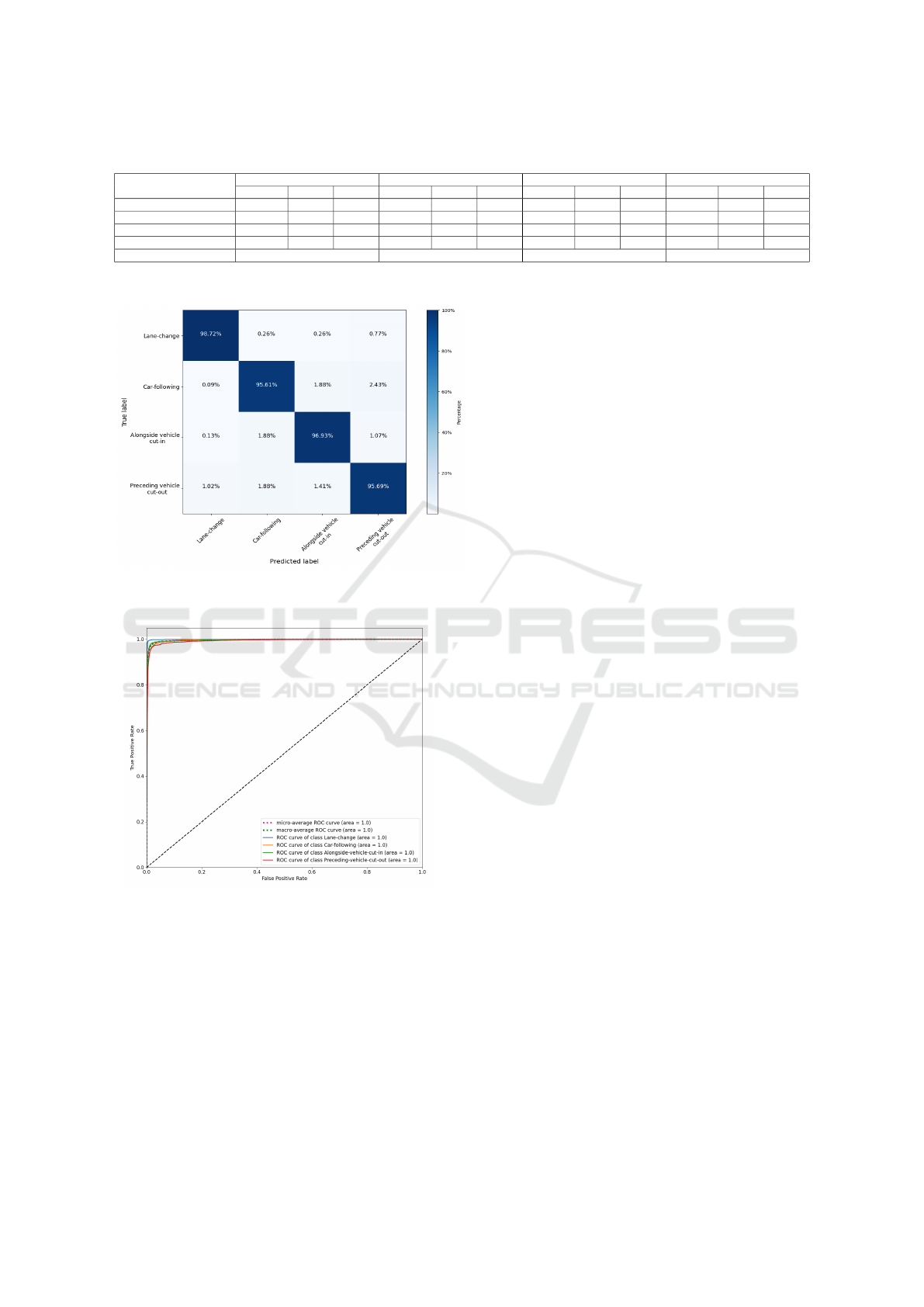

The ROC curves are shown in Fig. 8. The ROC curves for each class, as well as the macro-average and micro-average ROC curves, all have an AUC value of 1, indicating excellent classification performance.
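For reference, the one-vs-rest AUC of a class equals the probability that a randomly chosen sample of that class receives a higher score than a randomly chosen sample of another class (the normalized Mann-Whitney statistic). The quadratic-time sketch below computes it directly from predicted probabilities, and `macro_auc` averages the per-class values without weighting; both names are illustrative.

```python
from typing import Sequence


def auc_one_vs_rest(scores: Sequence[float], labels: Sequence[int], positive: int) -> float:
    """ROC AUC for one class: P(score of a positive > score of a negative), ties count 1/2.
    O(n_pos * n_neg), which is fine for a sketch."""
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(pos) * len(neg))


def macro_auc(prob_rows: Sequence[Sequence[float]], labels: Sequence[int], n_classes: int) -> float:
    """Unweighted mean of the per-class one-vs-rest AUCs."""
    return sum(auc_one_vs_rest([row[k] for row in prob_rows], labels, k)
               for k in range(n_classes)) / n_classes
```

An AUC of 1 for a class means every sample of that class is ranked above every sample of the other classes.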

Table 2 shows that the proposed typical dynamic driving scenario recognition model outperforms the baseline models: Support Vector Machine (SVM), Long Short-Term Memory (LSTM), and Bi-directional Long Short-Term Memory (Bi-LSTM). The proposed model exhibits clear advantages in precision, recall, and F1-score for the Lane-change, Car-following, Alongside vehicle cut-in, and Preceding vehicle cut-out scenarios, indicating that it recognizes dynamic driving scenarios effectively.

Table 2: Comparison of Scenario Recognition Performance Across Different Models.

| Scenario | SVM Precision | SVM Recall | SVM F1 | simple-LSTM Precision | simple-LSTM Recall | simple-LSTM F1 | Bi-LSTM Precision | Bi-LSTM Recall | Bi-LSTM F1 | Proposed Precision | Proposed Recall | Proposed F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Lane-change | 89.07% | 94.1% | 91.52% | 90.26% | 88.14% | 89.19% | 91.36% | 90.7% | 91.03% | 98.76% | 98.72% | 98.74% |
| Car-following | 87.8% | 94.11% | 90.86% | 92.79% | 92.8% | 92.79% | 93.48% | 94.07% | 93.78% | 95.97% | 95.61% | 95.80% |
| Alongside vehicle cut-in | 84.11% | 71.1% | 77.06% | 92.92% | 93% | 92.96% | 92.98% | 92.07% | 92.52% | 96.48% | 96.93% | 96.7% |
| Preceding vehicle cut-out | 86.48% | 80.3% | 83.28% | 85.95% | 87.89% | 86.91% | 88.61% | 89.56% | 89.08% | 95.73% | 95.69% | 95.71% |
| Overall accuracy | 87.59% | | | 90.45% | | | 91.6% | | | 96.74% | | |

Figure 7: The confusion matrix of the proposed model (AV: Alongside Vehicle, PV: Preceding Vehicle).

Figure 8: The ROC Curve of the proposed model.

5 CONCLUSIONS

This study addresses two issues in scenario recognition: overly simplistic scenario classification and the lack of consideration for changes in the overall environment. We propose an innovative highway typical driving scenario recognition model based on LSTM and attention mechanisms, which extracts features while accounting for scenario changes caused by vehicle interactions and surrounding vehicle maneuvers. Comparative experiments with baseline models demonstrate the accuracy and reliability of the proposed model and advance the understanding of driving scenario recognition.

In addition, the proposed recognition model can predict the driving scenarios of vehicles, provide prior knowledge for subsequent decision-making systems, and filter the perception data of ICVs to provide standardized data to neural networks, making subsequent parameter training more targeted and improving the safety of intelligent connected vehicles.

However, the proposed method is limited to typical highway driving scenarios and does not yet cover other road types. To achieve comprehensive recognition of typical driving scenarios, future work needs to integrate automatic recognition of road and environmental elements, improving the generality of the scenario definitions so that more scenario types can be covered and recognized accurately. Future research should therefore focus on developing a unified recognition framework that delivers more accurate and comprehensive recognition results.

ACKNOWLEDGEMENTS

This work was supported by the National Key Research and Development Program of China (2022YFB2503400) and the National Natural Science Foundation of China Regional Innovation and Development Joint Fund (U22A2042).

VEHITS 2025 - 11th International Conference on Vehicle Technology and Intelligent Transport Systems


REFERENCES

Benmimoun, M. and Eckstein, L. (2014). Detection of critical driving situations for naturalistic driving studies by means of an automated process. Journal of Intelligent Transportation and Urban Planning, 2(1):11–21.

Caesar, H., Bankiti, V., Lang, A. H., Vora, S., Liong, V. E., Xu, Q., Krishnan, A., Pan, Y., Baldan, G., and Beijbom, O. (2020). nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11621–11631.

Epple, N., Hankofer, T., and Riener, A. (2020). Scenario classes in naturalistic driving: Autoencoder-based spatial and time-sequential clustering of surrounding object trajectories. In 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), pages 1–6. IEEE.

Go, K. and Carroll, J. M. (2004). The blind men and the elephant: Views of scenario-based system design. Interactions, 11(6):44–53.

Hauer, F., Gerostathopoulos, I., Schmidt, T., and Pretschner, A. (2020). Clustering traffic scenarios using mental models as little as possible. In 2020 IEEE Intelligent Vehicles Symposium (IV), pages 1007–1012. IEEE.

Kruber, F., Wurst, J., and Botsch, M. (2018). An unsupervised random forest clustering technique for automatic traffic scenario categorization. In 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 2811–2818. IEEE.

Lee, Y., Jeon, J., Yu, J., and Jeon, M. (2020). Context-aware multi-task learning for traffic scene recognition in autonomous vehicles. In 2020 IEEE Intelligent Vehicles Symposium (IV), pages 723–730. IEEE.

Lu, J., Yang, S., Zhang, B., and Cao, Y. (2023). A BEV scene classification method based on historical location points and unsupervised learning. In 2023 7th CAA International Conference on Vehicular Control and Intelligence (CVCI), pages 1–6. IEEE.

Ma, Y., Yang, S., Lu, J., Feng, X., Yin, Y., and Cao, Y. (2022). Analysis of autonomous vehicles accidents based on DMV reports. In 2022 China Automation Congress (CAC), pages 623–628. IEEE.

Menzel, T., Bagschik, G., and Maurer, M. (2018). Scenarios for development, test and validation of automated vehicles. In 2018 IEEE Intelligent Vehicles Symposium (IV), pages 1821–1827. IEEE.

Nitsche, P., Thomas, P., Stuetz, R., and Welsh, R. (2017). Pre-crash scenarios at road junctions: A clustering method for car crash data. Accident Analysis & Prevention, 107:137–151.

Ren, H., Gao, H., Chen, H., and Liu, G. (2022). A survey of autonomous driving scenarios and scenario databases. In 2022 9th International Conference on Dependable Systems and Their Applications (DSA), pages 754–762. IEEE.

Rösener, C., Fahrenkrog, F., Uhlig, A., et al. (2016). A scenario-based assessment approach for automated driving by using time series classification of human-driving behaviour. In IEEE, pages 35–42.

Sun, C., ShangGuan, W., and Chai, L. (2020). Vehicle behavior recognition and prediction method for intelligent driving in highway scene. In 2020 Chinese Automation Congress (CAC), pages 555–560. IEEE.

Ulbrich, S., Menzel, T., Reschka, A., Schuldt, F., and Maurer, M. (2015). Defining and substantiating the terms scene, situation, and scenario for automated driving. In 2015 IEEE 18th International Conference on Intelligent Transportation Systems, pages 982–988. IEEE.

Yang, S., Chen, Y., Cao, Y., Wang, R., Shi, R., and Lu, J. (2022). Lane change trajectory prediction based on spatiotemporal attention mechanism. In 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), pages 2366–2371. IEEE.

Zhu, B., Zhang, P.-x., Zhao, J., Chen, H., Xu, Z., Zhao, X., and Deng, W. (2019). Review of scenario-based virtual validation methods for automated vehicles. China Journal of Highway and Transport, 32(6):1–19.
