Advancing Network Anomaly Detection Using Deep Learning and Federated Learning in an Interconnected Environment

Hanen Dhrir¹, Maha Charfeddine² and Habib M. Kammoun³

¹ Data Engineering and Semantics Research Unit, Faculty of Sciences of Sfax, Sfax, Tunisia

² REGIM-Lab: REsearch Groups in Intelligent Machines, National Engineering School of Sfax, Sfax, Tunisia

³ REGIM-Lab: REsearch Groups in Intelligent Machines, Faculty of Sciences of Sfax, Sfax, Tunisia

Keywords: Anomaly Detection, Federated Learning, Deep Learning, Network Security, Privacy.

Abstract: Network anomaly detection is a fundamental cybersecurity task that seeks to identify unusual patterns that could indicate security threats or system failures. Traditional centralized anomaly detection methods raise issues such as data privacy. Federated Learning has emerged as a promising solution that distributes model training across multiple devices or nodes. It improves anomaly detection by leveraging geographically distributed data sources while maintaining data privacy and security. This study presents a novel Federated Learning architecture designed specifically for network anomaly detection, addressing important information sensitivity issues in network environments. We compare three Deep Learning models, Long Short-Term Memory (LSTM), Convolutional Neural Networks (CNN), and Multilayer Perceptron (MLP), using XGBoost for feature selection and Stochastic Gradient Descent (SGD) as the optimizer. To address the problem of imbalanced data, we apply the Synthetic Minority Over-sampling Technique (SMOTE) to the UNSW-NB15 dataset. Our methodology is evaluated using standard evaluation metrics and compared to state-of-the-art approaches.

1 INTRODUCTION

Network anomaly detection is essential for securing digital infrastructure, preventing unauthorized access, and mitigating cyber threats. Traditional centralized methods struggle to handle large data volumes, adapt to evolving threats, and operate in real time, and they raise privacy concerns by aggregating sensitive data in a single location. This approach jeopardizes data confidentiality and demands extensive datasets to capture network behaviors effectively, and it often fails to scale and to respond to advanced risks (Garg et al., 2020). Federated Learning offers a promising alternative by decentralizing model training across multiple devices or nodes. It keeps data local and shares only model updates, which a central server aggregates into a global model. By leveraging geographically distributed data, Federated Learning enhances anomaly detection while maintaining data privacy and security. Each entity trains models locally and shares updates, reducing the risk of data breaches. This approach addresses privacy issues and improves the robustness and accuracy of detection systems through diverse data sources, enhancing model resilience (Aburomman and Reaz, 2016). The main contributions of this research work are as follows:

Introduction of a Novel Federated Learning Architecture: We propose a novel Federated Learning architecture specifically designed for network anomaly detection. This architecture ensures data privacy and security, addressing the important challenge of data sensitivity in network environments.

Evaluation of Various Deep Learning Algorithms and Machine Learning Methods: Our research thoroughly evaluates several Deep Learning (DL) models, including Long Short-Term Memory (LSTM), Convolutional Neural Networks (CNN), and Multilayer Perceptron (MLP), employs XGBoost for feature selection, and applies Stochastic Gradient Descent (SGD) as an optimizer. These models are assessed for their effectiveness in accurately detecting network anomalies.

Application of SMOTE for Data Balancing: To address the challenge of imbalanced data, we apply the Synthetic Minority Over-sampling Technique (SMOTE) to the UNSW-NB15 dataset. This technique improves the performance of our models by ensuring a more balanced representation of the dataset.

Dhrir, H., Charfeddine, M. and Kammoun, H. M.

Advancing Network Anomaly Detection Using Deep Learning and Federated Learning in an Interconnected Environment.

DOI: 10.5220/0013134100003928

In Proceedings of the 20th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2025), pages 343-350

ISBN: 978-989-758-742-9; ISSN: 2184-4895

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


Comprehensive Assessment Using Standard Evaluation Metrics: The proposed methodology is rigorously evaluated using the most common evaluation metrics. Our results are benchmarked against other state-of-the-art approaches, demonstrating the efficacy of our methods.

The structure of this paper is as follows: Section 2, Theoretical Background, covers the foundations of Federated Learning and relevant Deep Learning methods for network anomaly detection. Section 3, Related Work, reviews relevant research and findings. Section 4, Methodology, describes the proposed Federated Learning approach for network anomaly detection, with an emphasis on data privacy. Section 5, Experiments and Results, presents the experimental setup and results, including a comparison with previous related works. Finally, Section 6 concludes the paper.

2 THEORETICAL BACKGROUND

This section covers the key concepts of Machine Learning, Deep Learning, and Federated Learning for network anomaly detection. It highlights how Federated Learning addresses the limitations of traditional methods by preserving privacy and decentralizing data, leading to more reliable and secure anomaly detection solutions, and includes a description of the Deep Learning algorithms used in our system.

2.1 Network Anomaly Detection

Network anomaly detection is a critical technique for identifying unusual network activity that could indicate security threats or breaches. It involves monitoring network traffic for deviations from normal patterns, which may signal malicious actions or system failures. Historically, detection has relied on two approaches: signature-based and anomaly-based methods. Signature-based detection identifies known threats by comparing network activity to predefined patterns or signatures. While effective for known threats, it is limited in detecting new or unknown ones. Anomaly-based detection, on the other hand, monitors network behavior and identifies deviations from established norms. While capable of detecting novel threats, it often requires comprehensive training data (Hdaib et al., 2024).

To enhance these methods, Machine Learning (ML) and Deep Learning (DL) approaches are now widely used. These techniques improve detection by learning complex patterns and anomalies, overcoming the limitations of traditional methods. ML classifiers are trained on labeled datasets to separate data into categories such as normal and abnormal, and then classify new data points and recognize outliers (Rafique et al., 2024). Autoencoders, a type of neural network, compress input data and reconstruct it; they learn normal data patterns and detect anomalies by comparing the reconstructed output with the original (Torabi et al., 2023). Additionally, Deep Learning models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) automatically extract relevant features from large, unstructured datasets, improving anomaly detection by capturing intricate patterns and temporal dependencies (Lakey and Schlippe, 2024).

While DL techniques offer significant advancements in network anomaly detection, they also raise privacy concerns. These methods often require centralizing large datasets for model training, which can lead to privacy breaches if sensitive data is not adequately protected.

2.2 Federated Learning for Network Anomaly Detection

Federated Learning is a decentralized approach to machine learning that enables multiple entities to collaboratively train a model while keeping their data local. Unlike traditional centralized methods, Federated Learning improves data privacy and security by keeping sensitive information in its original environment. Rather than aggregating raw data, this method gathers and combines model updates, such as gradients or parameters, from each participant. This decentralized training reduces the risk of data breaches and supports compliance with stringent data protection regulations. Federated Learning's key principles include collaborative training across distributed nodes, secure aggregation of model updates, and robust communication protocols. Federated Learning improves anomaly detection across network environments by aggregating model updates from a variety of decentralized sources. This approach uses a broader set of data while maintaining individual privacy, resulting in more accurate and resilient detection systems that overcome the limitations of centralized methods (Bharati et al., 2022).

2.2.1 Architecture of Federated Learning for

Network Anomaly Detection

Federated Learning is intended to handle decentral-

ized data sources and strict privacy requirements. It

enables multiple entities, such as devices or servers,

to participate in model training without transmitting

ENASE 2025 - 20th International Conference on Evaluation of Novel Approaches to Software Engineering

344

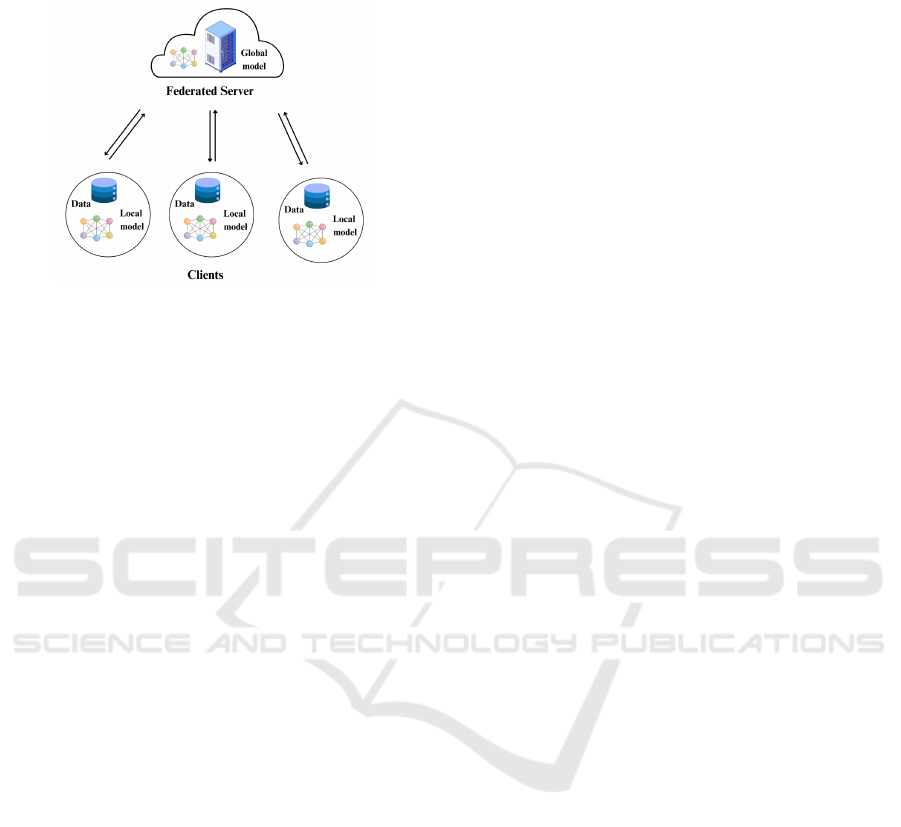

raw data. The architecture depicted in figure 1 has

three major components (Zhao et al., 2019):

Figure 1: Architecture of Federated Learning.

• Server as a Central Coordinator: The server manages the global model, initialized with parameters from a pre-existing dataset. It selects a subset of client devices for each training iteration based on performance or availability, ensuring diverse data sources contribute efficiently to the global model.

• Client Devices and Local Computation: Each client device uses its private dataset to compute model updates based on the global model. These updates, encrypted or anonymized for privacy, are transmitted to the server without exposing raw data.

• Secure Aggregation: The server aggregates encrypted updates using methods like Federated Averaging or secure multi-party computation (MPC). Weighted strategies may prioritize significant client contributions, improving model accuracy and robustness. The updated global model is then redistributed to clients, enabling iterative refinement for anomaly detection across diverse data.
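
The aggregation step can be illustrated with a minimal sketch of Federated Averaging, in which each client's parameters are weighted by its local sample count. This is not the authors' implementation: the function name `federated_average`, the flattened weight vectors, and the client sizes are assumptions made for illustration, and encryption of the updates is left out.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Return the sample-size-weighted mean of the clients' parameter vectors."""
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one weight vector per client size")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        share = size / total  # contribution proportional to local data size
        for j, w in enumerate(weights):
            global_weights[j] += share * w
    return global_weights


# Three hypothetical clients with flattened two-parameter models.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
new_global = federated_average(updates, sizes)
print(new_global)
```

Weighting by sample count is one common choice; a server could equally weight clients by another measure of contribution, as the text notes.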

2.2.2 Privacy Measures in Federated Learning

Federated Learning incorporates a number of privacy-preserving features throughout its decentralized framework. Data is distributed across client devices to avoid the centralization of sensitive information. Clients compute and send model updates in a secure, encrypted format, which the server aggregates using privacy-preserving methods. Furthermore, differential privacy techniques may be used to add noise to model updates, thereby protecting individual data points. The client selection procedure is randomized to guarantee diverse participation and avoid disproportionate disclosure of any single client's data (Lyu et al., 2022). The described Federated Learning architecture provides a strong foundation for collaborative, privacy-preserving anomaly detection in decentralized environments.
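
As a rough illustration of the noise-addition idea, the sketch below clips a client update to a bounded L2 norm and then adds Gaussian noise. The text does not state whether or how differential privacy was applied in the experiments, so the function name `clip_and_add_noise` and the values of `clip_norm` and `noise_std` are hypothetical; calibrating the noise to a formal privacy budget is outside the scope of this sketch.

```python
import math
import random
from typing import List, Sequence


def clip_and_add_noise(
    update: Sequence[float],
    clip_norm: float,
    noise_std: float,
    rng: random.Random,
) -> List[float]:
    """Scale the update so its L2 norm is at most clip_norm, then add Gaussian noise."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [u * scale for u in update]
    # Independent zero-mean noise per coordinate masks individual contributions.
    return [c + rng.gauss(0.0, noise_std) for c in clipped]


rng = random.Random(0)
raw_update = [3.0, 4.0]  # hypothetical update with L2 norm 5.0
private_update = clip_and_add_noise(raw_update, clip_norm=1.0, noise_std=0.1, rng=rng)
clipped_only = clip_and_add_noise(raw_update, clip_norm=1.0, noise_std=0.0, rng=rng)
print(private_update, clipped_only)
```

Clipping bounds how much any single client can move the global model, which is what makes a fixed noise scale meaningful.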

2.3 Deep Learning Algorithms

This section explores the three Deep Learning algorithms used in our system: Multilayer Perceptron (MLP), Long Short-Term Memory (LSTM) networks, and Convolutional Neural Networks (CNNs). Each algorithm is designed to handle different types of data, enhancing the detection and analysis of anomalies in network traffic, sequential data, and grid-like data structures. We detail how each is applied, focusing on their role in improving the accuracy and performance of our predictive models.

The Multilayer Perceptron (MLP): We use a multilayer perceptron (MLP), a type of artificial neural network (ANN) commonly applied to supervised learning tasks like network anomaly detection. The MLP consists of perceptrons that compute the dot product of input features with a weight matrix, which contains trainable parameters. Our architecture includes three fully connected layers: two hidden layers with 256 units each, activated by ReLU, and an output layer that uses a sigmoid activation function for binary classification, predicting class probabilities.
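
The layer structure described above can be sketched as a plain forward pass. The layer sizes (two hidden layers of 256 ReLU units and one sigmoid output) come from the text; the input width `n_features = 20`, the random initialization, and all function names are assumptions for illustration only, and no training is shown.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases): small random weights, zero biases."""
    limit = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, weights, biases):
    """Fully connected layer: one dot product plus bias per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(x, layers):
    """Two ReLU hidden layers followed by a single sigmoid output unit."""
    h1 = relu(dense(x, *layers[0]))
    h2 = relu(dense(h1, *layers[1]))
    logit = dense(h2, *layers[2])[0]
    return sigmoid(logit)


rng = random.Random(42)
n_features = 20  # hypothetical input width after feature selection
layers = [
    init_layer(n_features, 256, rng),
    init_layer(256, 256, rng),
    init_layer(256, 1, rng),
]
sample = [rng.uniform(-1.0, 1.0) for _ in range(n_features)]
probability = mlp_forward(sample, layers)
print(f"anomaly probability: {probability:.4f}")
```

The output is a single value between 0 and 1 that can be thresholded to separate normal from anomalous traffic.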

Long Short-Term Memory (LSTM): Long Short-Term Memory (LSTM) networks handle sequential data by capturing long-term dependencies through specialized LSTM cells with input, forget, and output gates. Our setup includes one LSTM layer, two dense layers, and a final sigmoid layer, enabling efficient processing of time series data and pattern recognition.
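
To make the gating mechanism concrete, here is a minimal sketch of one step of a standard LSTM cell. It follows the textbook formulation rather than the authors' code; the hidden size, the input vectors, and the parameter layout in `params` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step: the gates decide what to forget, what to write, what to emit."""
    concat = list(h_prev) + list(x)

    def gate(name, activation):
        weights, biases = params[name]
        return [
            activation(sum(w * v for w, v in zip(row, concat)) + b)
            for row, b in zip(weights, biases)
        ]

    i = gate("input", sigmoid)         # how much new information to write
    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    o = gate("output", sigmoid)        # how much of the cell state to expose
    g = gate("candidate", math.tanh)   # candidate values for the cell state
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c


def init_params(n_in, n_hidden, rng):
    """Small random weights and zero biases for each of the four gates."""
    def block():
        weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(n_hidden + n_in)]
            for _ in range(n_hidden)
        ]
        return weights, [0.0] * n_hidden
    return {name: block() for name in ("input", "forget", "output", "candidate")}


rng = random.Random(0)
params = init_params(n_in=3, n_hidden=4, rng=rng)
h, c = [0.0] * 4, [0.0] * 4
sequence = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.4], [0.5, 0.5, -0.2]]  # hypothetical inputs
for x in sequence:
    h, c = lstm_step(x, h, c, params)
print(h)
```

In the setup described above, the final hidden state would be passed to the dense layers and the sigmoid output.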

Convolutional Neural Network (CNN): Our system also employs Convolutional Neural Networks, which excel at processing and analyzing grid-like data structures, such as images. CNNs are composed of several layers, including convolutional layers that apply filters to the input data to extract salient features, such as edges and textures in images. These convolutional layers are followed by pooling layers that reduce dimensionality and computational complexity by downsampling the feature maps. The architecture also integrates fully connected layers that use the flattened output from the convolutional and pooling layers to make final predictions. Through the use of convolutional and pooling operations, CNNs effectively capture spatial hierarchies and patterns in the data, making them well suited to tasks like image classification and object detection.

Section 4 presents the detailed methodology, including the algorithms, datasets, and evaluation metrics used to implement and evaluate the effectiveness of this architecture in real-world network anomaly detection scenarios.
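
The convolution and pooling operations described for the CNN can be sketched on a one-dimensional feature vector. The text does not state the dimensionality of the convolutions used, so treating a flow's features as a 1-D signal is an assumption, as are the kernel, the input values, and the function names.

```python
def conv1d(signal, kernel):
    """Valid (no padding) 1-D cross-correlation, the operation used in CNN layers."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def max_pool1d(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


features = [0.0, 1.0, 3.0, 2.0, 0.0, 1.0, 4.0]  # hypothetical scaled flow features
difference_kernel = [-1.0, 1.0]                  # responds to increases between neighbours
feature_map = [max(0.0, v) for v in conv1d(features, difference_kernel)]  # ReLU
pooled = max_pool1d(feature_map, 2)
print(feature_map)
print(pooled)
```

Pooling halves the length of the feature map here, which is the dimensionality reduction the text refers to; a fully connected layer would then operate on the pooled values.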

3 RELATED WORK

This section reviews significant contributions in the field of Federated Learning as applied to network anomaly detection, highlighting innovative approaches and methodologies that address the unique challenges of this domain. The emphasis is on adaptive Federated Learning frameworks and hierarchical architectures that enhance system robustness and data privacy.

The authors of (Doriguzzi-Corin and Siracusa, 2024) introduced FLAD, an adaptive Federated Learning approach specifically designed for DDoS attack detection. FLAD aims to overcome the limitations of traditional Federated Learning algorithms such as FEDAVG by dynamically adjusting client selection and computational workloads during training, based on client performance, without requiring the exchange of training or validation data between clients and the central server. It is tailored for cybersecurity applications, with a particular focus on maintaining data privacy by ensuring that sensitive attack data is not shared between clients and the server. The method uses the CIC-DDoS2019 dataset (Saheb et al., 2021), which provides a robust framework for evaluating DDoS detection capabilities under a Federated Learning paradigm.

In (Marfo et al., 2022), the authors explore a Federated Learning architecture that incorporates a hierarchical setup involving clients, edge servers, and a global server. Clients retain their data locally and manage their models independently, thereby safeguarding data privacy by preventing direct data transfers to central servers. Edge servers play a critical role in this architecture by receiving model updates from clients, aggregating these updates, and forwarding the consolidated results to the global server. The global server then refines the global model using these aggregated updates and disseminates the improved model back to clients for local updates. This hierarchical structure, which introduces intermediate layers such as edge servers, distributes the computational load and enhances system reliability by mitigating the impact of potential failures. The tiered approach improves both the scalability and the resilience of Federated Learning systems. The application of a Multilayer Perceptron (MLP) within this framework is particularly effective for tasks like network anomaly detection, as demonstrated using the UNSW-NB15 dataset, which provides comprehensive coverage of network intrusion scenarios. These approaches underscore the importance of adaptive frameworks and hierarchical architectures in enhancing privacy, scalability, and system resilience.

In (Priyadarshini, 2024), to improve anomaly detection in IoT environments, the authors use the UNSW-NB15 dataset and the Split Learning (SL) model. Split Learning maintains privacy by enabling the model to be trained on several devices without transferring raw data. This technique divides the model into multiple components, each of which is trained on a separate device. This lowers the computational load while enhancing security. The UNSW-NB15 dataset, which is known for its extensive records of network traffic, facilitates the efficient identification of cyberthreats within the infrastructure of smart cities.

In the following section, we describe the proposed methodology and its implications for robust and efficient anomaly detection.

4 METHODOLOGY

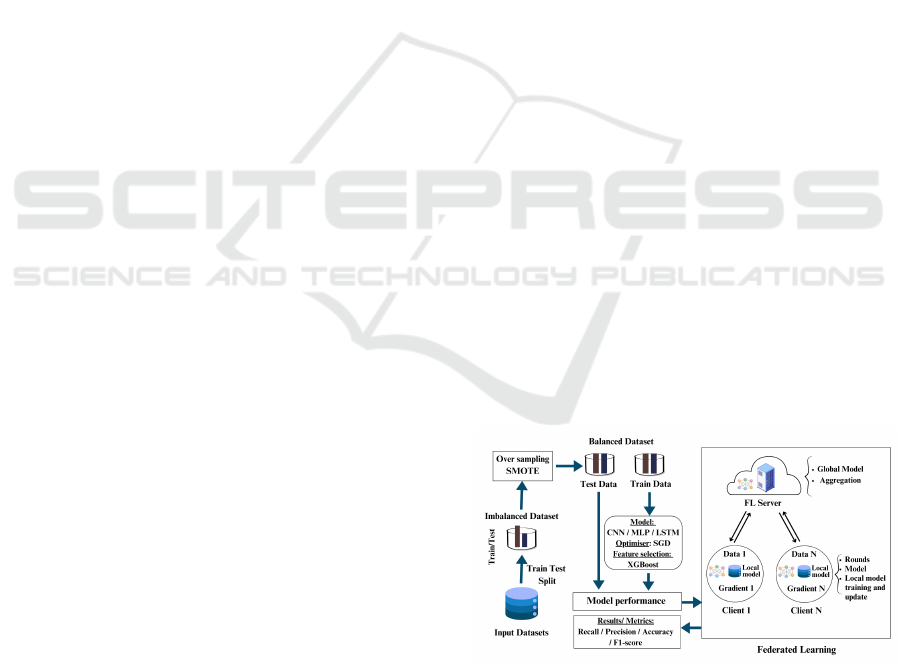

This section describes how we developed and evalu-

ated our Federated Learning-based network anomaly

detection system. Our methodology ensures a thor-

ough and rigorous assessment of various Deep Learn-

ing models, incorporating feature selection and bal-

ancing techniques to enhance model performance and

robustness, as illustrated in figure 2.

Figure 2: Overview of the proposed methodology.


4.1 Federated Network Anomaly Detection Framework

We employed Federated Learning to support collaborative model training in a decentralized manner across ten clients. Each client keeps its local data, ensuring privacy, and sends model updates to the central server over fifty communication rounds, as illustrated in Figure 2. The experiments were conducted on the UNSW-NB15 dataset, which was balanced using the SMOTE technique and then partitioned across the ten clients. We explored various scenarios by implementing and training CNN, LSTM, and MLP models for each client. To achieve optimal performance in our Federated Learning-based system, we used Stochastic Gradient Descent (SGD) as the optimizer and XGBoost for feature selection.
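
A minimal sketch of how a balanced dataset might be partitioned across the ten clients follows. The text does not specify the partitioning scheme, so the uniform random (IID) split, the function name `partition_indices`, and the placeholder dataset size are assumptions; only the client and round counts come from the text.

```python
import random
from typing import List


def partition_indices(n_samples: int, n_clients: int, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices and deal them into n_clients near-equal shards."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Striding over the shuffled list gives shards whose sizes differ by at most one.
    return [indices[c::n_clients] for c in range(n_clients)]


NUM_CLIENTS = 10  # from the text
NUM_ROUNDS = 50   # from the text
shards = partition_indices(n_samples=1000, n_clients=NUM_CLIENTS)  # 1000 is a placeholder
print([len(shard) for shard in shards])
```

Each shard would stay on its client; in every one of the `NUM_ROUNDS` rounds the client trains on its shard and returns only model updates.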

4.2 Dataset Description

The UNSW-NB15 dataset (Moustafa and Slay, 2015), developed by the Australian Centre for Cyber Security (ACCS) at UNSW, is used for training and evaluating network anomaly detection models. It covers nine attack types and includes 49 features extracted from network traffic. With around 2.5 million records, it provides labeled data for both normal and malicious activity. The dataset is publicly available for research to enhance network security and intrusion detection systems.

4.3 Preprocessing Steps

Preprocessing plays a vital role in enhancing the performance of Machine Learning models. The key steps include:

Handling Missing Values: Missing values, often resulting from data corruption or improper recording, are addressed by removing rows with NaN values, negative infinity (-inf), and duplicates.

Feature Scaling: Standardization is applied to rescale numerical features to have a mean of zero and a standard deviation of one, improving model accuracy and performance (Fki et al., 2024).

Label Encoding: Categorical features are converted into numerical values to make them suitable for Machine Learning models.

Class Imbalance Processing: The Synthetic Minority Over-sampling Technique (SMOTE) addresses class imbalance by generating synthetic examples for the minority class. It creates new instances in the feature space, improving the model's ability to classify minority classes (Ali et al., 2024a).
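
The core idea of SMOTE, interpolating between a minority sample and one of its nearest minority neighbours, can be sketched as follows. This is a simplified illustration rather than the implementation used in the experiments: the function name `smote`, the neighbour count `k`, and the sample points are assumptions.

```python
import math
import random


def smote(minority, n_new, k, rng):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest other minority samples by Euclidean distance.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


rng = random.Random(7)
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]  # hypothetical attack samples
synthetic = smote(minority, n_new=6, k=2, rng=rng)
print(synthetic)
```

Because every synthetic point lies on a segment between two real minority samples, the new instances stay within the region of feature space that the minority class already occupies.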

4.4 Tested Learning Algorithms

Using MLP, LSTM, and CNN architectures, we conducted 50 rounds with 10 local epochs for each of the 10 clients. Through extensive testing, we determined that 10 epochs yielded the best results. We split the dataset into 80% for training and 20% for testing. To optimize the proposed models, we use the Stochastic Gradient Descent (SGD) optimizer, a prominent algorithm in Machine Learning that is particularly effective for training models such as neural networks. Unlike traditional gradient descent, which calculates the gradient using the entire dataset, SGD updates model parameters iteratively using small, randomly selected batches of data. This approach significantly enhances computational efficiency and speed. The frequent updates facilitated by mini-batches enable faster convergence and improve the model's ability to generalize, thereby reducing the risk of overfitting. The stochastic nature of SGD also helps the optimizer escape local minima, and its low per-step cost makes it advantageous for large-scale datasets. Overall, SGD is a fundamental tool for scalable and robust model training (Sun et al., 2022).
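
The mini-batch update can be illustrated on a small logistic regression model. This sketch is not the training code used in the experiments: the toy data, the learning rate, the batch size, and the function name `sgd_epoch` are assumptions, and only the count of 10 local epochs is taken from the text.

```python
import math
import random


def sgd_epoch(weights, bias, data, labels, lr, batch_size, rng):
    """One pass over the data, updating parameters after every mini-batch."""
    order = list(range(len(data)))
    rng.shuffle(order)  # a fresh random order each epoch makes the batches stochastic
    for start in range(0, len(order), batch_size):
        batch = order[start:start + batch_size]
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for idx in batch:
            z = sum(w * x for w, x in zip(weights, data[idx])) + bias
            error = 1.0 / (1.0 + math.exp(-z)) - labels[idx]  # d(log loss)/dz
            for j, x in enumerate(data[idx]):
                grad_w[j] += error * x
            grad_b += error
        n = len(batch)
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


rng = random.Random(1)
data = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]  # hypothetical one-feature samples
labels = [0, 0, 0, 1, 1, 1]
weights, bias = [0.0], 0.0
for _ in range(10):  # 10 local epochs, as in the text
    weights, bias = sgd_epoch(weights, bias, data, labels, lr=0.5, batch_size=2, rng=rng)
print(weights, bias)
```

Each update uses only two samples, so the cost of a step does not grow with the dataset size, which is the efficiency argument made above.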

4.5 Tested Feature Selection

To retain only the most informative features, we use XGBoost-based feature selection to identify the most significant features within the dataset. XGBoost intrinsically evaluates feature importance by analyzing each feature's contribution to the model's predictive accuracy during training. It reports importance measures such as gain, coverage, and frequency. This process enables the identification of the most influential features, facilitating dimensionality reduction, enhancing model performance, and lowering computational costs by focusing on the features that significantly impact prediction outcomes (Chen and Guestrin, 2016).
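
For reference, the gain of a candidate split as defined by Chen and Guestrin (2016) is reproduced below. The symbols follow that paper's notation and are not used elsewhere in this text: G and H are the sums of first-order and second-order gradient statistics of the loss over the samples falling in the left (L) and right (R) child, λ is the L2 regularization weight, and γ is the penalty for adding a leaf. Gain-based importance for a feature aggregates this quantity over the splits that use the feature.

```latex
\mathcal{L}_{\text{split}}
  = \frac{1}{2}\left[
      \frac{G_L^{2}}{H_L + \lambda}
    + \frac{G_R^{2}}{H_R + \lambda}
    - \frac{(G_L + G_R)^{2}}{H_L + H_R + \lambda}
    \right] - \gamma
```

A feature that repeatedly yields splits with large gain reduces the training loss the most, which is why ranking by gain is a natural criterion for feature selection.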

4.6 Evaluation Metrics

In anomaly detection, evaluating a model involves metrics like recall, precision, accuracy, and the F1-score to assess its ability to identify anomalies. In the formulas below, TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives.

• Recall evaluates a model's ability to correctly identify true anomalies, minimizing false negatives. Recall is calculated using formula (1):

$$\text{Recall} = \frac{TP}{TP + FN} \tag{1}$$

• Accuracy measures the overall correctness of a model's predictions, considering both true positives and true negatives. It is given by formula (2):

$$\text{Accuracy} = \frac{TN + TP}{TP + FN + FP + TN} \tag{2}$$

• The F1-Score, the harmonic mean of precision and recall, provides a balanced evaluation of model performance, especially on imbalanced datasets, by balancing false alarms and anomaly detection. It is given by formula (3):

$$\text{F1-Score} = \frac{2 \times (\text{Recall} \times \text{Precision})}{\text{Recall} + \text{Precision}} \tag{3}$$

• Precision measures the proportion of flagged anomalies that are true anomalies, minimizing false positives (Ali et al., 2024b). It is given by formula (4):

$$\text{Precision} = \frac{TP}{TP + FP} \tag{4}$$
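
The four formulas translate directly into code. The sketch below computes them from confusion-matrix counts; the function name `classification_metrics` and the example counts are hypothetical and are not taken from the experiments.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Compute recall, precision, accuracy, and F1-score from confusion counts."""
    if tp + fn == 0 or tp + fp == 0:
        raise ValueError("recall or precision is undefined for these counts")
    recall = tp / (tp + fn)                               # formula (1)
    accuracy = (tn + tp) / (tp + fn + fp + tn)            # formula (2)
    precision = tp / (tp + fp)                            # formula (4)
    if recall + precision == 0:
        raise ValueError("F1-score is undefined when recall and precision are both zero")
    f1 = 2 * (recall * precision) / (recall + precision)  # formula (3)
    return {"recall": recall, "precision": precision, "accuracy": accuracy, "f1": f1}


# Hypothetical confusion counts for illustration only.
metrics = classification_metrics(tp=90, tn=80, fp=10, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Formula (3) can also be used on its own to check reported values: a recall of 0.9939 and a precision of 0.9998 give an F1-score of about 0.9968.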

5 EXPERIMENTS AND RESULTS

To validate the methodology described in the previous section, we conducted several experiments, using SMOTE to balance the dataset and the SGD optimizer to train the models. We investigated three DL models, MLP, LSTM, and CNN, and employed XGBoost for feature selection. For each of the three combinations, we ran 50 rounds with 10 epochs for each of the 10 clients. We first present our experiments on the UNSW-NB15 dataset (Moustafa and Slay, 2015) and then compare our findings with those of other studies.

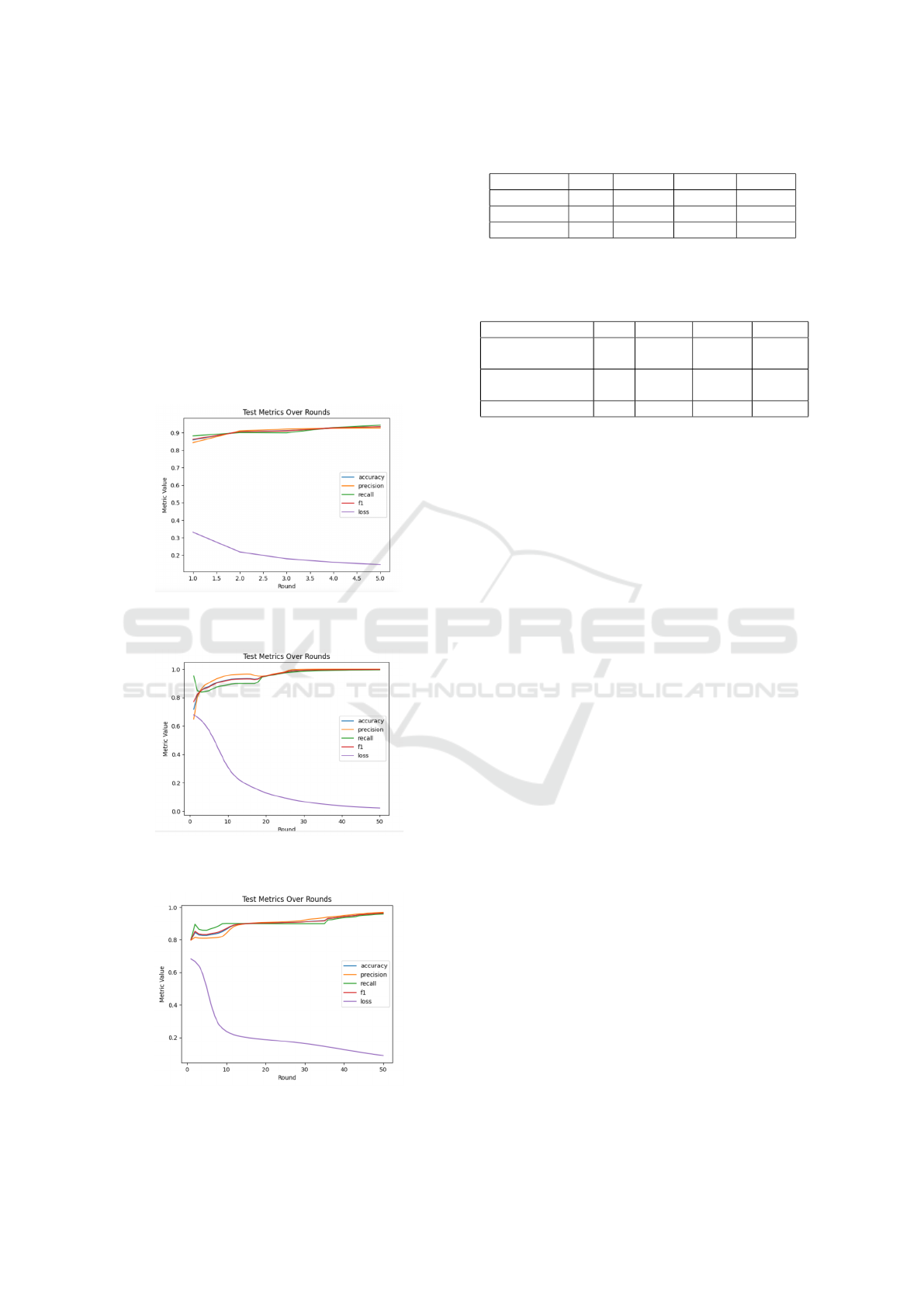

As shown in Table 1, within the proposed Federated Learning architecture, the CNN model with SGD outperforms the other models (MLP and LSTM) trained with the same optimizer in terms of recall, accuracy, precision, and F1-Score. This indicates that the CNN model with SGD identifies positive examples with low error rates. The CNN is superior to the LSTM model with SGD in terms of precision and recall, but both models still perform well. The MLP model with SGD, on the other hand, has the lowest recall, precision, accuracy, and F1-Score of the three models.

Figures 3, 4 and 5 plot the test metrics for the tested combinations. In our Federated Learning setup, a "round" is a full cycle in which each client performs local training on its data and sends updates to the central server. The process is repeated for 50 rounds, allowing each model to improve iteratively while learning from decentralized data sources. In Figures 3, 4 and 5, the X-axis represents the number of communication rounds (from 1 to 50) and the Y-axis represents the metric values (recall, precision, accuracy, F1-score, and loss). These metrics are plotted for each model to show how its performance changes with each subsequent round of training.

In general, across Figures 3, 4 and 5, recall improves as the rounds increase because the model becomes more adept at identifying true positives, learning from the decentralized data of multiple clients. The MLP's recall begins low and improves marginally, indicating a struggle to identify anomalies consistently. The CNN's recall increases significantly and rapidly reaches high values, indicating its effectiveness in detecting anomalies. The LSTM improves gradually, but not as quickly or as far as the CNN, most likely because its sequential processing is less suited to this specific problem.

Precision also tends to improve with the rounds as the model reduces false positives, enhancing its ability to classify anomalies correctly. Precision increases slightly for the MLP model but remains relatively low, indicating difficulty in reducing false positives. The CNN achieves high precision quickly and maintains it throughout the rounds, demonstrating its strength in accurate anomaly detection. The LSTM advances with each round but falls short of the CNN's precision, implying that some false positives remain.

Accuracy improves as the model becomes more effective at identifying both true positives and true negatives. The MLP's accuracy increases slightly but remains the lowest of the three models, indicating lower overall performance. The CNN achieves high accuracy early in the rounds and maintains it throughout, making it the most effective model. The LSTM improves steadily, achieving decent accuracy but still falling behind the CNN.

As precision and recall improve, the F1-score generally rises. The F1-score of the MLP is relatively low and increases only slightly, indicating that the model faces challenges in both recall and precision. The CNN quickly achieves a high F1-score, demonstrating a good balance between precision and recall. The LSTM's F1-score rises but does not match the CNN's, indicating unbalanced gains in precision and recall.

Loss typically decreases as the model becomes more accurate, reducing the difference between predicted and actual results. The MLP's loss decreases slightly but remains higher than that of the other models, showing poorer performance. The CNN promptly reaches low loss values, indicating effective learning and convergence. The LSTM's loss decreases steadily but does not reach the minimal values achieved by the CNN.

Among the three models, the CNN with SGD optimization performs best in anomaly detection within the proposed Federated Learning framework. It consistently achieves high recall, precision, accuracy, and F1-score while reducing loss. The LSTM performs reasonably well but is less effective than the CNN, most likely because its sequential processing nature is not fully utilized in this particular context. The MLP struggles the most, implying that it may not be appropriate for such complex anomaly detection tasks in a federated, interconnected setting.

Figure 3: Test metrics over rounds for MLP architecture and SGD optimizer.

Figure 4: Test metrics over rounds for CNN architecture and SGD optimizer.

Figure 5: Test metrics over rounds for LSTM architecture and SGD optimizer.

Table 1: Experiments on the UNSW-NB15 Dataset.

| Experiment | Recall | Precision | Accuracy | F1-Score |
|---|---|---|---|---|
| MLP+SGD | 0.9085 | 0.8939 | 0.8903 | 0.9012 |
| CNN+SGD | 0.9939 | 0.9998 | 0.9965 | 0.9968 |
| LSTM+SGD | 0.9492 | 0.9610 | 0.9508 | 0.9550 |

• Comparative Analysis with Related Works

Table 2: Comparative Analysis with Related Works.

| Experiment | Recall | Precision | Accuracy | F1-Score |
|---|---|---|---|---|
| SL (Priyadarshini, 2024) | 0.9802 | 0.9812 | 0.9802 | 0.9811 |
| MLP + SMOTE (Marfo et al., 2022) | 0.9718 | 0.9809 | 0.9721 | 0.9763 |
| CNN+SGD (ours) | 0.9939 | 0.9998 | 0.9965 | 0.9968 |

Table 2 compares our best Federated Learning-based network anomaly detection configuration (CNN+SGD) with related previous approaches on the four common metrics: recall, precision, accuracy, and F1-score. The SL solution (Priyadarshini, 2024) performs well and consistently across all metrics, with a recall of 0.9802, precision of 0.9812, accuracy of 0.9802, and F1-score of 0.9811. SMOTE combined with the MLP model (Marfo et al., 2022) yields a recall of 0.9718, precision of 0.9809, accuracy of 0.9721, and F1-score of 0.9763. Our best solution, which combines the CNN, SGD, and SMOTE with XGBoost for feature selection, outperforms the other related studies, with a recall of 0.9939, precision of 0.9998, accuracy of 0.9965, and F1-score of 0.9968. These findings indicate that combining a CNN with SGD, features selected using XGBoost, and SMOTE within a Federated Learning architecture is highly effective.

6 CONCLUSION

This study compared the performance of several Deep Learning models for detecting network anomalies within a secure Federated Learning environment. To enhance model performance, we used XGBoost for feature selection, SMOTE for dataset balancing, and SGD for model optimization. The findings show that, in terms of recall, precision, accuracy, and F1-score, the CNN combined with SGD, SMOTE, and XGBoost performs noticeably better than the other combinations tested. In particular, the CNN model achieved near-perfect classification performance, with a recall of 0.9939, precision of 0.9998, accuracy of 0.9965, and F1-score of 0.9968. The performance of the LSTM and MLP models improved with the addition of SMOTE, underscoring the importance of correcting the dataset’s class imbalance. However, the configuration combining feature selection with MLP, SGD, and SMOTE performed relatively poorly, indicating that this combination may be less useful for network anomaly detection specifically. Overall, the results indicate that a CNN trained with SGD, combined with SMOTE and XGBoost-based feature selection, is very effective for network anomaly detection in a Federated Learning environment. To improve the generalizability of these results, future research should investigate other optimization techniques for these models and their application to other domains. Furthermore, repeating our experiments on different datasets would provide a broader view of the models’ performance.
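To make the Federated Learning setting referred to above more concrete, the following minimal sketch shows how a server might combine locally trained model parameters. It assumes a FedAvg-style sample-size-weighted average, which this section does not confirm as the aggregation rule actually used; the function name `federated_average` and the toy client values are hypothetical.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Sample-size-weighted average of per-client parameter vectors.

    Assumption: FedAvg-style aggregation; only parameters and sample
    counts are shared with the server, never the raw traffic records.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must send vectors of the same length")

    averaged = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        share = n_samples / total  # this client's contribution to the average
        for i, value in enumerate(weights):
            averaged[i] += share * value
    return averaged


# Hypothetical toy round: three clients, two parameters each.
clients = [[0.2, -1.0], [0.4, 0.0], [1.0, 2.0]]
sizes = [100, 300, 600]
global_weights = federated_average(clients, sizes)
print(global_weights)
```

In a full training loop, the server would send `global_weights` back to the clients, each client would run further local SGD steps on its own data, and the averaging step would repeat over several rounds.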

ACKNOWLEDGEMENTS

The research leading to these results was supported

by the Ministry of Higher Education and Scientific

Research of Tunisia.

REFERENCES

Aburomman, A. A. and Reaz, M. B. I. (2016). A novel SVM-kNN-PSO ensemble method for intrusion detection system. Applied Soft Computing, 38:360–372.

Ali, A. H., Charfeddine, M., Ammar, B., and Hamed, B. B. (2024a). Intrusion detection schemes based on synthetic minority oversampling technique and machine learning models. In 2024 IEEE 27th International Symposium on Real-Time Distributed Computing (ISORC), pages 1–8. IEEE.

Ali, A. H., Charfeddine, M., Ammar, B., Hamed, B. B., Albalwy, F., Alqarafi, A., and Hussain, A. (2024b). Unveiling machine learning strategies and considerations in intrusion detection systems: a comprehensive survey. Frontiers in Computer Science, 6:1387354.

Bharati, S., Mondal, M., Podder, P., and Prasath, V. (2022). Federated learning: Applications, challenges and future directions. International Journal of Hybrid Intelligent Systems, 18(1-2):19–35.

Chen, T. and Guestrin, C. (2016). XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 785–794.

Doriguzzi-Corin, R. and Siracusa, D. (2024). FLAD: adaptive federated learning for DDoS attack detection. Computers & Security, 137:103597.

Fki, Z., Ammar, B., Fourati, R., Fendri, H., Hussain, A., and Ben Ayed, M. (2024). A novel IoT-based deep neural network for COVID-19 detection using a soft-attention mechanism. Multimedia Tools and Applications, 83(18):54989–55009.

Garg, S., Kaur, K., Batra, S., Kaddoum, G., Kumar, N., and Boukerche, A. (2020). A multi-stage anomaly detection scheme for augmenting the security in IoT-enabled applications. Future Generation Computer Systems, 104:105–118.

Hdaib, M., Rajasegarar, S., and Pan, L. (2024). Quantum deep learning-based anomaly detection for enhanced network security. Quantum Machine Intelligence, 6(1):26.

Lakey, D. and Schlippe, T. (2024). A comparison of deep learning architectures for spacecraft anomaly detection. In 2024 IEEE Aerospace Conference, pages 1–11. IEEE.

Lyu, L., Yu, H., Ma, X., Chen, C., Sun, L., Zhao, J., Yang, Q., and Yu, P. S. (2022). Privacy and robustness in federated learning: Attacks and defenses. IEEE Transactions on Neural Networks and Learning Systems.

Marfo, W., Tosh, D. K., and Moore, S. V. (2022). Network anomaly detection using federated learning. In MILCOM 2022-2022 IEEE Military Communications Conference (MILCOM), pages 484–489. IEEE.

Moustafa, N. and Slay, J. (2015). UNSW-NB15: a comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In 2015 Military Communications and Information Systems Conference (MilCIS), pages 1–6. IEEE.

Priyadarshini, I. (2024). Anomaly detection of IoT cyberattacks in smart cities using federated learning and split learning. Big Data and Cognitive Computing, 8(3):21.

Rafique, S. H., Abdallah, A., Musa, N. S., and Murugan, T. (2024). Machine learning and deep learning techniques for Internet of Things network anomaly detection—current research trends. Sensors, 24(6):1968.

Saheb, M. C. P., Yadav, M. S., Babu, S., Pujari, J. J., and Maddala, J. B. (2021). A review of DDoS evaluation dataset: CICDDoS2019 dataset. In International Conference on Energy Systems, Drives and Automations, pages 389–397. Springer.

Sun, Y., Que, H., Cai, Q., Zhao, J., Li, J., Kong, Z., and Wang, S. (2022). Borderline SMOTE algorithm and feature selection-based network anomalies detection strategy. Energies, 15(13):4751.

Torabi, H., Mirtaheri, S. L., and Greco, S. (2023). Practical autoencoder based anomaly detection by using vector reconstruction error. Cybersecurity, 6(1):1.

Zhao, Y., Chen, J., Wu, D., Teng, J., and Yu, S. (2019). Multi-task network anomaly detection using federated learning. In Proceedings of the 10th International Symposium on Information and Communication Technology, pages 273–279.

ENASE 2025 - 20th International Conference on Evaluation of Novel Approaches to Software Engineering
