Usage of Police Surveillance Drone for Public Safety

Donna Mariya David a, Abdul Adnan b and Abhishek H c

Department of Electrical and Electronics Engineering, Dayananda Sagar College of Engineering,

Bengaluru, Karnataka, India

Keywords: Autonomous, Public Safety, Law Enforcement, Emergency Response, Real Time Data Processing, Machine

Learning.

Abstract: This paper presents a framework for utilizing autonomous drones to enhance public safety. The proposed

system leverages advanced methodologies in autonomous navigation and real-time data processing to address

critical challenges in law enforcement and emergency response. The drone operates independently, capturing

high-resolution images of crime scenes, conducting surveillance over vast areas, and detecting suspicious

activities such as theft. By integrating machine learning algorithms, the drone is capable of identifying and

tracking individuals or objects of interest, providing actionable insights to authorities in real time. This work

outlines the system’s architecture, operational workflow, and key technologies, emphasizing its adaptability

to dynamic environments and diverse applications. The paper also discusses the societal implications,

potential challenges, and future directions for deploying autonomous drones in public safety scenarios.

Through rigorous analysis and experimental validation, this study demonstrates how autonomous drones can

serve as a transformative tool in modern law enforcement, promoting safety and security across communities.

1 INTRODUCTION

In an era where public safety concerns are
increasingly paramount in both urban and
rural settings, the need for rapid and effective
response mechanisms has never been more critical.

Traditional emergency response systems often face

challenges in reaching crime scenes promptly,

leaving communities vulnerable in times of crisis. To

address these challenges, our project introduces an

innovative solution: an autonomous drone designed

specifically for public safety applications. This drone

not only enhances surveillance capabilities but also

integrates seamlessly with a dedicated protection

application. The proposed drone system operates

autonomously to monitor and traverse various

environments, providing real-time aerial support to

law enforcement agencies. In situations where

individuals feel threatened or are in danger, the

accompanying mobile application allows users to

alert authorities with a simple press of a button. This

action triggers the drone to navigate directly to the
user’s location, ensuring a swift response.

a https://orcid.org/0009-0001-2469-8090
b https://orcid.org/0009-0006-7013-2481
c https://orcid.org/0009-0009-4457-3234

Upon arrival, the drone captures high-resolution imagery of
the scene, facilitating immediate assessment and

documentation of potential threats. A key feature of

our system is the integration of machine learning

techniques to analyse video feeds and sensor data for

identifying suspicious behaviours or activities. By

training algorithms on diverse datasets, the drone can

recognize patterns indicative of potential threats,

enabling proactive intervention and enhancing

situational awareness for law enforcement. This

capability not only aids in crime scene documentation

but also facilitates real-time decision-making by

providing actionable insights. In this paper, we will

explore the methodologies underpinning our

autonomous drone system, focusing on its operational

framework and the critical role of machine learning

in enhancing safety. Through this approach, we aim

to contribute to safer environments by harnessing the

power of technology and data-driven insights in

public safety initiatives.

Mariya David, D., Adnan, A. and H, A.

Usage of Police Surveillance Drone for Public Safety.

DOI: 10.5220/0013652700004639

In Proceedings of the 2nd International Conference on Intelligent and Sustainable Power and Energy Systems (ISPES 2024), pages 171-177

ISBN: 978-989-758-756-6

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


2 REVIEW OF EXISTING MODELS

The incorporation of drones into public safety

initiatives has significantly transformed the landscape

of law enforcement and emergency response. Models

such as the JOUAV CW Series and Height

Technologies SAMS exemplify the cutting-edge

advancements that enhance surveillance and

operational effectiveness. The JOUAV CW Series is

particularly notable for its extensive range and

impressive flight duration, making it well-suited for

monitoring large areas, such as borders or critical

infrastructure. In contrast, the SAMS drone system

operates autonomously, allowing for continuous

surveillance without requiring constant human

supervision. This capability not only improves

situational awareness but also facilitates quicker

responses to incidents, thereby bolstering community safety.

Skydio drones can be quickly launched from patrol

vehicles, providing immediate aerial perspectives on

developing situations. This real-time visibility is

crucial for law enforcement to assess incidents

effectively before arriving on the scene. Similarly,

GAO Tek’s drones utilize advanced sensors and

machine learning algorithms to monitor suspicious

activities and gather critical data efficiently,

showcasing how technology can enhance crime

prevention efforts.

3 GAP ANALYSIS

The current landscape of drones designed for public

safety includes a range of models, each offering

unique features tailored to specific operational needs.

While these established models excel in areas like

autonomous navigation, high-resolution imaging, and

real-time data transmission, they often lack

comprehensive user engagement mechanisms that

empower citizens to actively participate in their own

safety. Most existing systems are primarily designed

for law enforcement use and do not provide a direct

interface for the general public to alert authorities in

emergencies. In contrast, the proposed drone model

is paired with a mobile application that allows anyone

to utilize its capabilities for public safety. In situations

where individuals feel threatened or are in danger,

they can simply press a button on the app to send an

immediate alert along with their location to the drone.

Upon receiving an alert from the app, the drone can

autonomously navigate to the user’s location and

analyze the environment for potential threats. This

proactive approach allows law enforcement to assess

situations more effectively upon arrival, ultimately

improving public safety outcomes. By integrating

user engagement through our app with advanced

drone technology, our model represents a significant

advancement over current offerings in the public

safety drone market. This user-friendly feature

addresses a significant gap in existing models by

enabling rapid response from the drone without

requiring prior training or specialized knowledge.

The integration of this app not only enhances

community engagement but also ensures that help can

be dispatched quickly and efficiently.

4 METHODOLOGY

This section first outlines the design and assembly of a
drone system that integrates essential hardware
components, including motors, electronic speed
controllers, and a Raspberry Pi as the central
processing unit. The second part covers the
programming phase, which incorporates machine
learning algorithms and image processing
techniques using OpenCV and TensorFlow Lite.

4.1 Design and Assembly of Hardware

Once the frame is established, we proceed to assemble

the essential components, including motors,

electronic speed controllers (ESCs), propellers, and a

power distribution board. Each motor is paired with

a propeller to generate sufficient lift, while the ESCs

regulate power delivery based on input from the flight

controller. In addition to these core components,

several auxiliary elements are integrated into the

drone system. The Raspberry Pi acts as the central

processing unit, managing data from various sensors,

including the GPS module for location tracking and

ultrasonic sensors for obstacle detection. This

configuration allows for autonomous navigation and

real-time decision-making, critical for effective

operation in dynamic environments. Communication

is facilitated through a GSM module, which connects

the drone to a mobile application used by individuals

in distress, allowing them to send alerts with their

location. The telemetry module enables real-time data

transmission between the drone and ground control,

providing operators with live video feeds and sensor

information. The onboard camera captures high-resolution
images and video for surveillance purposes,

with image processing handled by OpenCV to

enhance data quality. Together, these components

create a cohesive system that enhances situational


awareness and enables rapid responses to

emergencies.
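The obstacle-detection role of the ultrasonic sensors can be illustrated with a short sketch. The paper does not state the sensor model or the avoidance threshold, so the speed of sound (343 m/s, dry air at about 20 °C), the 1.5 m safety margin, and the function names below are assumptions made purely for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0   # assumed: dry air at roughly 20 degrees C
SAFETY_MARGIN_M = 1.5        # assumed threshold, not taken from the paper


def echo_to_distance(echo_time_s: float) -> float:
    """Convert a round-trip echo time (seconds) to a one-way distance (metres)."""
    if echo_time_s < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_time_s * SPEED_OF_SOUND_M_S / 2.0


def obstacle_ahead(echo_time_s: float, margin_m: float = SAFETY_MARGIN_M) -> bool:
    """Return True when the measured distance is inside the safety margin."""
    return echo_to_distance(echo_time_s) < margin_m
```

In a real deployment the echo time would come from the sensor driver, and the result would be passed to the flight controller rather than used directly.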

4.2 Programming and Integration

The subsequent phase focuses on programming the

flight controller, which acts as the central processing

unit for the drone’s operations. The flight controller

is configured to interpret data from the GPS module

and various sensors, enabling autonomous navigation

and obstacle avoidance capabilities. Machine

learning algorithms are implemented to enhance the

drone’s ability to analyze sensor data in real time for

detecting suspicious activities. These algorithms are
trained on diverse datasets to improve their
accuracy in identifying potential threats. A crucial

aspect of this research is the development of a

dedicated mobile application that allows users to

engage with the drone system directly. In emergency

situations, individuals can alert authorities by

pressing a button within the app, which sends their

location to the drone. Upon receiving this alert, the

drone autonomously navigates to the user's location

while simultaneously analyzing its surroundings for

any signs of danger. The key software components
employed in the project are:

OpenCV: for image processing.
TensorFlow Lite: for optimized deep learning models.
YOLOv4-tiny or MobileNet: for real-time object detection.
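Object detectors of the kind listed above typically emit several overlapping candidate boxes for the same object, which are then pruned by non-maximum suppression (NMS). The following is a minimal, library-free sketch of greedy NMS. It is not the authors' code; the box format, the function names, and the thresholds (0.5 for confidence, 0.45 for overlap) are assumptions chosen for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        score_thresh: float = 0.5,
                        iou_thresh: float = 0.45) -> List[int]:
    """Return indices of the boxes kept, highest score first."""
    # Drop low-confidence candidates, then visit the rest by descending score.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a stronger kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in kept):
            kept.append(i)
    return kept
```

On a resource-constrained board such as the Raspberry Pi, this step would normally be delegated to the detection framework's optimized routine; the sketch only makes the logic explicit.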

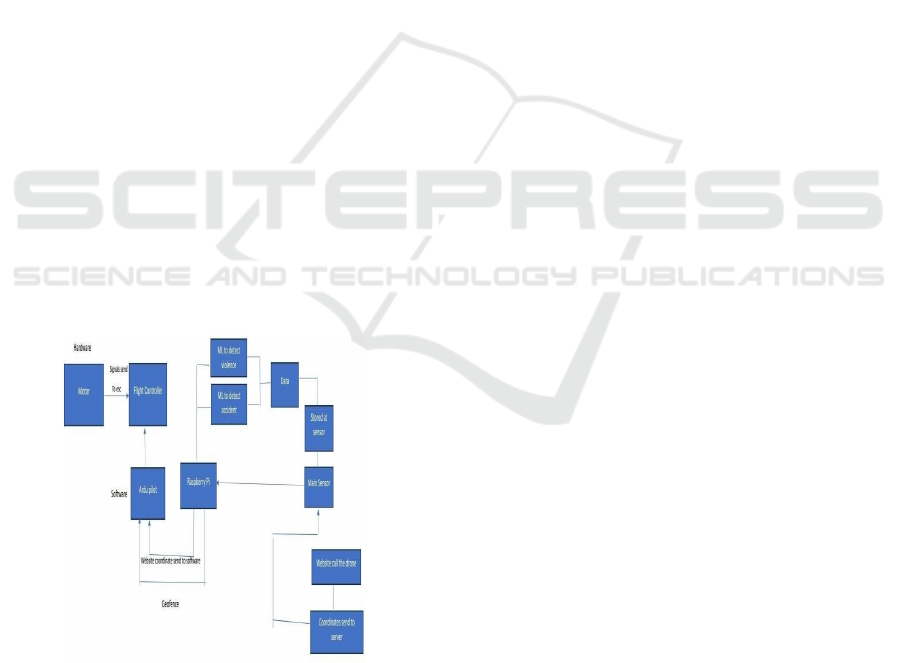

4.3 Block Diagram

Figure 1: Block Diagram of the drone system.

4.3.1 Code Used for Facial Recognition

This code provides a straightforward implementation

of a facial recognition system that leverages the

capabilities of the face_recognition library and

OpenCV. It captures live video from a webcam,

processes each frame to identify faces, and matches

these faces against a predefined set of known

encodings. This system can be applied in various

contexts, including security monitoring, user

verification, and automated attendance systems.

import cv2
import face_recognition

# Open the default webcam (device 0)
video_capture = cv2.VideoCapture(0)

known_face_encodings = []  # Add your known faces' encodings here
known_face_names = []      # Add the corresponding names for the known faces

while True:
    ret, frame = video_capture.read()
    if not ret:
        break  # Stop if no frame could be read

    # OpenCV delivers BGR frames; face_recognition expects RGB
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # Find all face locations and encodings in the current frame
    face_locations = face_recognition.face_locations(rgb_frame)
    face_encodings = face_recognition.face_encodings(rgb_frame, face_locations)

    for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
        # One boolean per known encoding; True marks a match
        matches = face_recognition.compare_faces(known_face_encodings, face_encoding)

video_capture.release()

The code above implements a basic facial recognition system
using the face_recognition library and OpenCV. It

initializes video capture from the webcam and

continuously reads frames in a loop. Each captured

frame is converted from BGR to RGB format, which

is necessary for face detection.

The code detects faces in the frame and computes

their encodings, which are numerical representations

of the faces. It then compares these encodings against

a predefined list of known face encodings to identify

matches.
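The listing stops at the boolean list returned by compare_faces. One plausible next step, sketched below, is to pick the closest known face by Euclidean distance and accept it only if it falls within a tolerance. The value 0.6 mirrors the default tolerance of compare_faces in the face_recognition library; the helper name best_match and the short toy vectors in the usage example are assumptions for illustration (real encodings from the library are 128-dimensional).

```python
import math
from typing import List, Optional, Sequence


def best_match(known_encodings: List[Sequence[float]],
               known_names: List[str],
               candidate: Sequence[float],
               tolerance: float = 0.6) -> Optional[str]:
    """Return the name of the closest known face, or None if none is close enough."""
    if not known_encodings:
        return None
    # Euclidean distance between the candidate and every known encoding.
    distances = [math.dist(enc, candidate) for enc in known_encodings]
    best = min(range(len(distances)), key=distances.__getitem__)
    return known_names[best] if distances[best] <= tolerance else None


# Toy usage with 3-dimensional vectors standing in for real encodings.
known = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
names = ["person_a", "person_b"]
print(best_match(known, names, [0.1, 0.0, 0.0]))
```

Choosing the nearest encoding, rather than the first True entry, avoids mislabeling when several known faces fall within the tolerance.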

4.3.2 Machine Learning Data

The dataset will comprise a diverse collection of

video clips and images that depict various scenarios,

including physical confrontations, aggressive

gestures, and peaceful interactions, enabling the

model to learn the key characteristics that

differentiate these categories. The dataset encompasses a
wide range of scenarios depicting violence,
non-violence, and accidents.
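The paper does not describe how the dataset is partitioned for training and evaluation. As one reasonable approach, the sketch below performs a stratified split so that each of the three classes is represented in the validation set. The 80/20 ratio, the label strings, and the file names are assumptions for illustration only.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (file path, class label)


def stratified_split(samples: List[Sample], val_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into train/validation sets, class by class."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train: List[Sample] = []
    val: List[Sample] = []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # Reserve at least one validation sample per class.
        n_val = max(1, round(len(items) * val_fraction))
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val
```

Splitting per class keeps a rare category, such as accidents, from being absent in the validation set by chance.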

4.3.3 Data for Violence

Figure 2: Machine Learning data for violence.

4.3.4 Data for Non-Violence

Figure 3: Machine Learning data for non-violence.

4.3.5 Data for Accidents

Figure 4: Machine Learning data for accidents.

4.3.6 Design of the App

i. User Login:

Upon launching the app, users must log in to their
accounts for security and personalization. After
successful login, users are presented with the main
interface of the app.

Figure 5: Main interface of the app.

ii. Initiating the Call:

Users will see a prominent button labeled "Call
Drone." When the button is pressed, the app sends
the user's current GPS location to the drone.

Figure 6: Emergency button to call the drone.

iii. Visual Feedback:

The app displays a notification indicating "Calling the

Drone," confirming that the request has been

successfully transmitted.

iv. Emergency Response:

The drone receives the location and begins its
autonomous navigation to assist the user in distress.

Figure 7: Feedback of the Emergency Response.
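The four steps above amount to a small message exchange between the app and the drone. The paper does not specify the message format, so the following sketch assumes a JSON payload; the field names, the "CALL_DRONE" type string, and both function names are hypothetical.

```python
import json
import time
from typing import Dict, Optional


def build_alert(user_id: str, lat: float, lon: float,
                timestamp: Optional[float] = None) -> str:
    """App side: serialise a 'Call Drone' alert as a JSON string."""
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinates out of range")
    payload = {
        "type": "CALL_DRONE",
        "user_id": user_id,
        "lat": lat,
        "lon": lon,
        "timestamp": time.time() if timestamp is None else timestamp,
    }
    return json.dumps(payload)


def parse_alert(message: str) -> Dict[str, object]:
    """Drone side: decode an alert and check the fields needed for navigation."""
    data = json.loads(message)
    if data.get("type") != "CALL_DRONE":
        raise ValueError("unexpected message type")
    lat, lon = float(data["lat"]), float(data["lon"])
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinates out of range")
    return {"user_id": data["user_id"], "lat": lat, "lon": lon,
            "timestamp": float(data["timestamp"])}
```

Validating the coordinates on both sides means a corrupted or malformed message is rejected before it can be used as a navigation target.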


5 WORKING AND WORKING MODEL

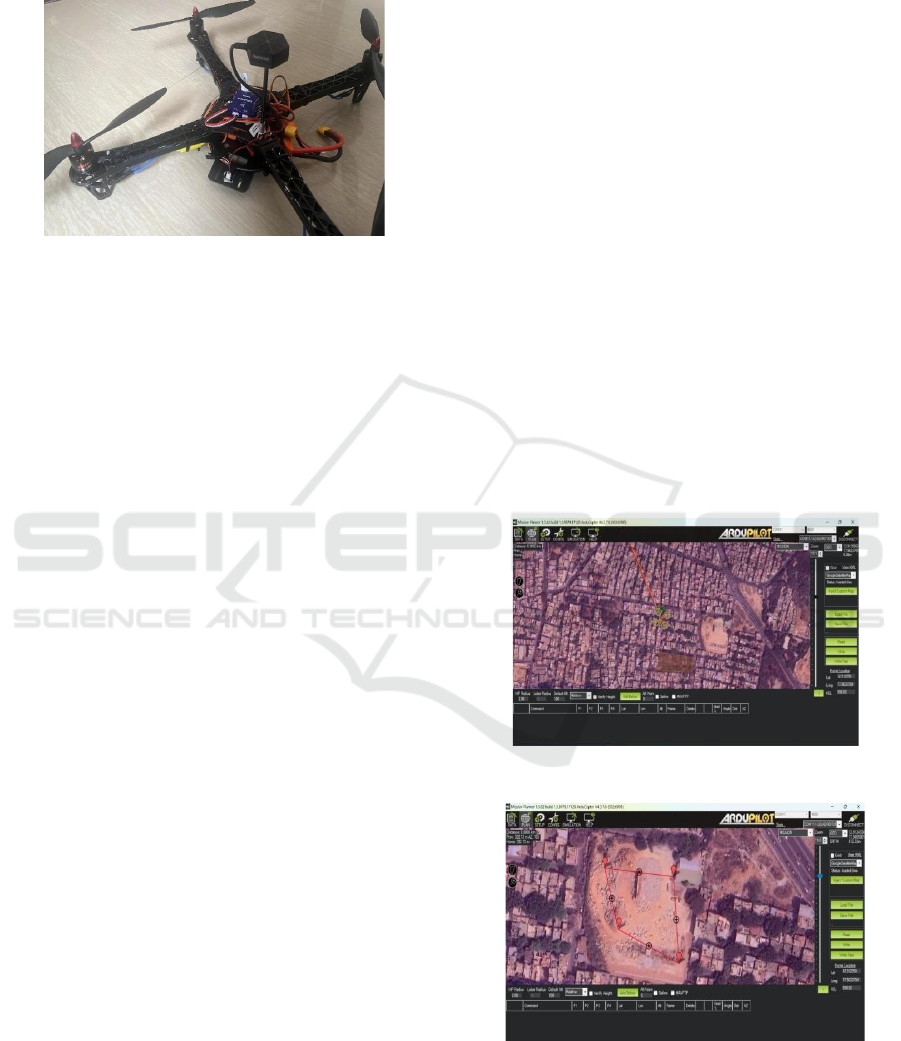

Figure 8: Working Police Surveillance Drone Model.

The process begins when a user interacts with the

mobile application designed specifically for public

safety. In the event of an emergency or when an

individual feels threatened, they can activate an alert

by pressing a button within the app. This action sends

a notification that includes the user’s current GPS

coordinates to the drone. The communication is

facilitated through the GSM module integrated into

the drone, ensuring swift and reliable transmission of

the alert. Once the drone receives the alert, its flight

controller initiates the autonomous navigation

process. The GPS module reports the drone's own
position to the Raspberry Pi, which combines it with
the user's coordinates from the alert to establish the
most efficient route to reach the user. The drone

employs pre-programmed flight algorithms to

navigate its environment while utilizing ultrasonic

sensors to detect and avoid obstacles in real time. This

capability allows the drone to maneuver safely

through complex urban or rural settings. As the drone

approaches the user's location, it activates its onboard

camera to capture high-resolution images and video

footage of the area. This data is crucial for

documenting any incidents or potential threats

present at the scene. Concurrently, machine learning

algorithms running on the Raspberry Pi analyze data

from various sensors, including video streams from

the camera and readings from ultrasonic sensors.

These algorithms are designed to identify suspicious

activities based on specific behavioural patterns,

enabling effective assessment of potential risks.

Throughout its mission, the drone maintains

continuous communication with ground control via

its telemetry module. This connection allows for real-time
transmission of video feeds and sensor data back

to monitoring personnel or law enforcement agencies.

Operators at ground control can evaluate live footage

and sensor information to make informed decisions

regarding further actions or resource allocation. If

suspicious behaviour is detected or if additional

assistance is deemed necessary, ground control can

initiate an appropriate response based on insights

provided by the drone. This may involve dispatching

law enforcement officers to investigate further or

implementing other emergency protocols as required.
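The routing step in this workflow needs, at a minimum, the straight-line distance and heading from the drone to the user. The paper does not give its route computation, so the sketch below uses the standard haversine formula and initial-bearing formula on a spherical Earth as one possible starting point. The function name and the mean-radius constant are illustrative choices, and obstacle-aware path planning is outside its scope.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def distance_and_bearing(lat1: float, lon1: float,
                         lat2: float, lon2: float) -> Tuple[float, float]:
    """Great-circle distance (m) and initial bearing (degrees from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)

    # Haversine formula for the central angle between the two points.
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    distance = 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))

    # Initial bearing, normalised to the range [0, 360).
    y = math.sin(dlmb) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb))
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing
```

Here the first coordinate pair would be the drone's GPS fix and the second the location carried in the user's alert.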

6 RESULTS

6.1 Real-Time Navigation and Obstacle Avoidance

The drone exhibited proficient autonomous

navigational abilities across diverse environmental

contexts. The ArduPilot framework facilitated

dependable flight regulation, permitting the drone to

sustain stability throughout its operational phases.

The incorporation of ultrasonic sensors markedly

improved the drone's capacity for obstacle detection.

These sensors furnished instantaneous distance

assessments, empowering the drone to identify

proximate objects and modify its trajectory as

necessary.

Figure 9: Visual output from ArduPilot 1.

Figure 10: Visual output from ArduPilot 2.

6.2 Facial Recognition

The integration of facial recognition technology into


the drone's operational framework markedly

improved its proficiency in recognizing individuals

instantaneously. The system underwent training

utilizing a comprehensive dataset comprising

identifiable facial images. This functionality is of

paramount importance for applications pertaining to

public safety, as it empowers the drone to swiftly

detect potential threats or individuals in urgent need.

6.3 Data Collection and Analysis

The drone's onboard camera successfully captured

high-resolution images and video footage during its

operations. These visual data were processed using

OpenCV for image enhancement and analysis,

allowing for effective monitoring of public spaces.

The integration of machine learning algorithms

enabled the drone to detect suspicious activities in

real-time, providing actionable insights for law

enforcement and emergency responders. The data

collected during test flights will serve as a valuable

resource for refining future models and improving

detection accuracy.

6.4 User Interaction and Application Functionality

The mobile application developed for user interaction

proved effective in facilitating communication

between individuals in distress and the drone system.

Users reported a positive experience with the app's

interface, which allowed them to easily send alerts

and track the drone's location in real time. The

notification feature indicating "Calling the Drone"

provided reassurance to users during emergencies,

confirming that their request for assistance had been

successfully transmitted.

7 CHALLENGES & FUTURE IMPROVEMENTS

Several challenges were identified during testing. The
accuracy of facial recognition decreased in low-light

conditions or when subjects wore accessories that

obscured facial features. Additionally, variations in

environmental factors such as wind speed affected

flight stability during certain operations. Future work

will focus on expanding the dataset used for training

facial recognition algorithms to include diverse

environmental conditions and enhancing navigation

algorithms to improve robustness against external

factors. One major constraint was budget limitations,

which impacted our ability to procure higher-quality

components that could enhance performance and

reliability. With a larger budget, we could have

integrated more advanced sensors, improved

processing units, and better cameras, ultimately

resulting in a more robust drone capable of

overcoming many of the challenges faced during this

project. In future iterations, we plan to invest in
higher-quality components that can

enhance the drone's capabilities significantly.

Upgrading hardware such as high-resolution cameras,

advanced obstacle detection sensors, and more

powerful processors will not only improve facial

recognition accuracy but also enhance navigation

stability under varying environmental conditions.

8 CONCLUSION

The development of the autonomous drone model
for public safety marks a significant step forward in
emergency response capabilities and situational
awareness. By combining machine learning algorithms,
real-time data analytics, and high-resolution imaging,
the drone is equipped to monitor diverse environments,
discern potential threats, and assist first responders
in critical scenarios. Its capacity to navigate complex
terrain autonomously while simultaneously collecting
and analyzing data in real time underscores its
potential as a valuable asset for law enforcement and
public safety agencies.

The deployment of drones in public safety operations

offers numerous advantages, including rapid

coverage of large areas and the provision of critical

information before personnel arrive on the scene.

This capability not only aids in informed decision-

making but also enhances the safety of responders by

allowing them to assess potentially hazardous

situations from a distance. The versatility of drones

enables their use in various applications, such as

surveillance, search and rescue missions, and

infrastructure assessments, demonstrating their

effectiveness across different public safety scenarios.

The autonomous drone model has the potential to

transform public safety operations by enhancing

situational awareness and improving response times

while minimizing risks to personnel.

