Few Shot Learning via Multiple Teacher Knowledge Distillation

Yuwen Jie

a

School of Cyber Science and Engineering, Southeast University, Nanjing, China

Keywords: Few Show Learning, Knowledge Distillation, Transfer Learning.

Abstract: Machine learning enables computers to discover patterns in large datasets and use them for recognition or

prediction. A model's performance typically depends on the amount of data available; sufficient training

samples ensure good generalization. However, in many applications, acquiring large amounts of labeled data

is costly and time-consuming, and in fields like healthcare or autonomous driving, labeled samples can be

scarce. This raises the important challenge of training effective models with only a few samples. Few-shot

learning addresses this challenge by aiming to perform tasks with very few training examples, learning and

generalizing from just a few or even a single sample. The paper designs a novel method that combines

Knowledge Distillation and Few-Shot Learning to improve model performance with limited data. By

leveraging intermediate features from the teacher model and applying Multiple Teacher-Student Architecture,

the paper’s approach enhances feature extraction and adaptation in few-shot scenarios. This method achieves

premier results on the various dataset, demonstrating the effectiveness of feature distillation in Few-Shot

Learning tasks.

1 INTRODUCTION

In applications which require intensive data, Machine

Learning is widely applied and achieved significant

success. However, when the available dataset is

small, model performance is often limited. In reality,

obtaining large amounts of training samples is both

difficult and expensive, making it crucial to study

how to learn effectively under limited data

conditions. Few-Shot Leaning (FSL), which is a new

learning method to train with limited supervised

example was proposed(Fei-Fei et al., 2006; Fink,

2004). FSL's objective is to make it possible for

models to fast adapt to new tasks using only a few

samples. Current FSL approaches can be categorized

into three types: non-episodic approaches that pre-

train on base classes and then fine-tune on new

classes (Dong et al., 2022), beta learning methods

based on meta-learning, where the model learns how

to learn (Finn et al., 2017), and metric-learning

methods that categorize by directly analyzing the

consistency of query images and support classes

(Snell et al., 2017).

Additionally, Transfer learning has been

extensively employed in image classification tasks as

a

https://orcid.org/ 0009-0000-0304-8622

a means of avoiding the need to train models from

scratch. By leveraging models pre-trained on large

datasets, transfer learning helps models significantly

enhances the performance of few-shot learning (Sung

et al., 2022; Mehrotra et al., 2020; Lotfollahi et al.,

2022).

The paper proposes a novel method that combines

Knowledge Distillation (KD) with few-shot learning.

While most previous KD research has focused on

logical distillation, which trains student models by

reducing any KL divergence in the output probability

distributions of the teacher and student models.

However, feature distillation where intermediate

teacher’s features are used to guide the student model

has been proved to outperform logical distillation in

several tasks (Romero et al., 2014). Despite its

superior performance, feature distillation has rarely

been applied to few-shot learning. Therefore, the

main contributions of the paper include:

1. Introducing feature distillation into few-shot

learning. By leveraging the teacher model's logic

distribution and intermediate features, the paper

further improves the performance of the few-shot

learning model.

Jie and Y.

Few Shot Learning via Multiple Teacher Knowledge Distillation.

DOI: 10.5220/0013515000004619

In Proceedings of the 2nd International Conference on Data Analysis and Machine Learning (DAML 2024), pages 239-245

ISBN: 978-989-758-754-2

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

239

2. This method introduces multi-teacher

distillation into few-shot learning tasks. By utilizing

multiple teacher models, the student model is able to

learn diverse and complementary information from

different teachers, resulting in more robust feature

representations. Unlike traditional single-teacher

distillation, the multi-teacher setup allows for better

capture of task diversity, thereby enhancing the

student model’s generalization ability.

3. The potential of combining feature distillation

with few-shot learning is demonstrated through the

best performance metrics in image classification tasks

achieved by this method on various datasets.

2 RELATED WORKS

2.1 Knowledge Distillation

KD was first introduced by in (Hinton, 2015), where

a complex and excellent model(also called teacher

model) trained to provide knowledge to a slim

model(also called student model) through distillation

training. It allows the knowledge of a complex

teacher model to be transferred to a simpler student

model with minimal performance loss. Hinton

proposed the class probability with parameter T, also

known as "soft targets." Soft targets carry more

generalized information than hard targets. Initially,

knowledge distillation only focused on learning from

the teacher model's soft labels to produce a

lightweight student model, but as teacher models

grew deeper and more complex, learning solely from

soft labels became insufficient.

Currently, knowledge distillation methods focus

on two aspects: logical distillation (Komodakis &

Zagoruyko, 2017; Benaim & Wolf, 2018) and feature

distillation (Romero et al., 2014; Komodakis &

Zagoruyko, 2017; Kim et al., 2018; Chen et al., 2021).

The teacher model's knowledge can include more

than just its logical output, features from intermediate

layers, parameters, and even the connections between

layers can be considered knowledge. Both final-layer

outputs and intermediate outputs can teach student

model to learn. Knowledge based on feature can

complement response-based knowledge and is useful

for training slim networks. FitNets introduced

intermediate representations (Romero et al., 2014),

selecting an intermediate layer from the teacher

model to guide the hidden layer output of the student

model. Inspired by (Romero et al., 2014), subsequent

research proposed various methods to use teacher

model intermediate layers (Komodakis & Zagoruyko,

2017; Kim et al., 2018; Chen et al., 2021). Zagoruyko

et al. proposed using attention maps to represent

knowledge; Kim et al. introduced convolutional

translators as more interpretable intermediate

representations (Kim et al., 2018). Chen et al.

proposed cross-layer KD, adaptively assigning layers

in the student network to layers in the teacher network

using attention mechanisms (Chen et al., 2021).

Additionally, feature extraction-based methods often

overlook the importance of logical distillation, which

Zhao et al. emphasized by rephrasing classical KD

loss to highlight logical distillation’s importance

(Zhao et al., 2022).

2.2 Few Shot Learning

Few-shot learning was proposed in (Fei-Fei et al.,

2006; Fink, 2004) as a new machine learning

paradigm for learning from limited supervised

examples. One approach to FSL is data augmentation

(Lake et al., 2015; Wang et al., 2019), which involves

transformations such as translation, flipping, and

shearing, or data synthesis and feature enhancement.

These methods enlarge the dataset, but their design

requires expert domain knowledge and high labor

costs. Another category is meta-learning-based

methods, where the model learns to learn. Finn et al.

proposed a model-agnostic meta-learning framework

(MAML) (Finn et al., 2017), which performs a few

gradient updates across multiple tasks to find the

optimal initialization parameters across all tasks.

These allow the model to adapt quickly to new tasks

with minimal gradient updates and achieve good

performance. Other meta-learning-inspired methods

like MetaDet use a meta-model to learn predict-class-

specific parameters from few-shot data (Wang et al.,

2019), enabling accurate object detection in new

classes. Zhang et al. designed a new associated meta-

learning strategy (Zhang et al., 2022), Meta-DETR,

which use the association aggregation module (CAM)

combined with task encoding to integrate the

supported category information into the query

features, and then encode and decode through the

Transformer to adapt to new classes in few-shot tasks

for efficient object detection.

Previous research has shown that transfer learning

make model avoid training from scratch possible by

adapting to new tasks fast (Sung et al., 2022;

Mehrotra et al., 2020; Lotfollahi et al., 2022).

Transferring learning has been widely proven to be

effective through KD, where the teacher model

instructs the student model. The core task of few-shot

learning is enabling the model to fit on very few

samples and generalize well. The dark knowledge

provided by the teacher model’s soft lable in KD are

DAML 2024 - International Conference on Data Analysis and Machine Learning

240

crucial for FSL models with only a few hard targets,

helping prevent overfitting and improving task

generalization. Therefore, some recent studies have

attempted to combine KD and FSL, typically by using

the teacher model to augment the data (Rashid et al.,

2020; Rajasegaran et al., 2020; Yoo et al., 2021). In

contrast, the paper applies feature based distillation to

FSL, fully utilizing the feature extraction capabilities

of powerful teacher models to improve student model

performance under few-shot conditions.

3 METHOD

3.1 Pre-Train

Typically, to perform few-shot learning, the model

needs to be pre-trained on a source domain dataset

𝑆

={(𝑥

,𝑦

),...,(𝑥

,𝑦

)}, and then trained on the

target domain dataset 𝑆

={(𝑥

,𝑦

),...,(𝑥

,𝑦

)},

(𝑥

、𝑦

) represents the i-th sample 𝑥

and label 𝑦

in

the dataset. If only 𝑆

is used for training, the model

will struggle to converge. The pre-training phase

involves mixing the source domain data with the

target domain data, which has limited samples, to

create a mixed domain dataset 𝑆

=𝑆

+𝑆

, and

train the teacher backbone using 𝑆

. This allows the

teacher model to better grasp the low-level feature

representations, such as edge information and texture

information, across different categories, preventing

the model from overfitting. The student's ability to

generalize well even with a few samples is greatly

enhanced by the presence of a well-trained teacher

backbone in the subsequent steps, which can transfer

its low-level feature extraction capability to the

student backbone through KD.

3.2 Attention-Weighted Distillation Loss

During the distillation process, the first step is to let

the teacher model perform inference on the data, save

the output prediction results (soft labels), and then

train the smaller model. Soft labels differ from the

hard labels typically used in model training and

contain similar information between different classes.

However, directly using soft labels to train the student

model can easily ignore the relationships between

different classes. This is because the output of the

teacher model is typically a probability distribution

calculated through the Softmax function. By default,

the Softmax function tends to concentrate the output

distribution on one or a few most likely categories,

resulting in a sharp probability distribution, where

most categories have probabilities close to zero.

However, by introducing a temperature coefficient

𝑇

to the Softmax, the output probability distribution of

Softmax becomes smoother (Hinton, 2015). The

Softmax with the temperature parameter T can be

represented as:

𝑝

=

exp

(

𝑧

/𝑇

)

∑

exp𝑧

/𝑇

(

1

)

Where 𝑧

is the unnormalized score (logit) for

class i.

When 𝑇 >1, the probability distribution becomes

smoother, and the differences between class

probabilities decrease. The student model is better

able to capture subtleties during training with a

smooth probability distribution that provides more

information about the similarities between different

classes. After introducing the temperature coefficient

𝑇 into the Softmax function, the output probability

distribution is 𝑃

={𝑃

,...,𝑃

}, and the output of

student’s is 𝑃

={𝑃

,...,𝑃

}, P

i

representing the

probability of class i. The key to training with soft

labels is the KL divergence . The loss is calculated to

determine the disparity between the probability

distribution of output in student and the soft labels

from the teacher model.

ℓ

=𝐷𝐾

(𝑃

||𝑃

)=(

𝑃

)(𝑙𝑜𝑔

𝑃

𝑃

)

(

2

)

In equation (2) C represents the number of

classes.

The final distillation loss is obtained by

combining KL divergence and task-specific loss:

ℓ

=𝛼ℓ

+𝛽ℓ

(

3

)

This method designed a feature loss to measure

the differences in intermediate layers between the

student and the teacher. Let the feature maps of the

teacher model be denoted as 𝐹

∈{𝐹

,𝐹

,...,𝐹

,𝑃

}

and the student model’s are 𝐹

∈{𝐹

,𝐹

,...,𝐹

,𝑃

}.

The one-to-one matching method is defined as:

ℓ

=𝐷

(𝐹

,𝐹

)

(

4

)

D is a function that calculate the distance used to

determine the difference between the student and

teacher models (such as Euclidean distance or other

metrics)

Specifically, equation (4) assumes that channels

in the feature maps that come from teacher and

student model are the same. To map features into

target representations, a transformation o is necessary

Few Shot Learning via Multiple Teacher Knowledge Distillation

241

for channels that differ, and equation (4) can be

expressed as:

ℓ

=𝐷

(𝑜(𝐹

),𝑜(𝐹

))

(

5

)

The paper’s goal is to minimize ℓ

. When the

architecture of the teacher and student models differ

significantly, or multi-layer information needs to be

considered, a simple one-to-one matching might not

be enough to effectively transfer knowledge. To

better achieve knowledge distillation, it is usually

necessary to combine multi-layer knowledge. After

introducing multi-layer distillation, the loss in multil-

layer can be defined as:

ℓ

=(

𝐷

(𝑜(𝐹

),𝑜(𝐹

)))

(

6

)

Different layers have different effects on the

student's learning based on the features of the teacher

model. Some layers' features are more important than

others, and simply accumulating the features from all

layers can lead to these important features being

overlooked. By introducing attention weights, the

model can adaptively assign different weights to the

features of each layer, allowing the important features

to receive more attention while the less important

features are appropriately suppressed. Therefore, the

paper designed Attention-Weighted Distillation

Loss (AWD), which balances multi-layer knowledge

with dynamic weighting instead of simply

accumulating it. AWD is defined as:

ℓ

=𝐷(

𝑜(𝐹

),𝛼

,

⋅𝑜(𝐹

))

(

7

)

where 𝛼

,

is the attention weight, and the

definition of 𝛼

,

is:

𝛼

,

=

𝑒𝑥𝑝 (𝐹

⋅𝐹

)

∑

𝑒𝑥𝑝

(𝐹

⋅𝐹

)

(

8

)

Finally, the paper define the overall loss function

as:

ℓ=𝛾ℓ

+(1−𝛾)ℓ

(

9

)

3.3 Multiple Teacher-Student

Architecture

Previous KD work has focused on the framework of

single teacher-single students (Komodakis &

Zagoruyko, 2017; Chen et al., 2021; Zhao et al.,

2022). This is because, under conditions of limited

computational resources and insufficient data, this

method has shown relatively good performance, and

it is easy to implement in practice. A single teacher

model can provide strong supervision by transferring

complex knowledge representations to a smaller

student model, thus improving the student model’s

learning ability. However, in FSL scenarios, the

single teacher distillation method has certain

limitations. Due to insufficient training data, the

teacher model often fails to fully capture the diversity

and fine-grained characteristics of the data, leading to

insufficient transfer of knowledge and limiting the

student’s ability to generalize.

This paper suggests a method called MTKD

(multiple teacher-student) to address this issue. The

student model can learn from diverse perspectives

and dimensions in FSL scenarios when the multiple

teacher models are combined. Each teacher model

can provide diverse feature representations, helping

the student model to achieve a more comprehensive

learning process. Multiple teacher models generate

various knowledge. Distilling with multiple teachers,

which is different from a single teacher, the student

model can better handle the diversity and complexity

of tasks under few-shot conditions. The multiple

teacher-student framework effectively enables the

student model to build a stronger learning foundation

in limited data settings, compensating for the

shortcomings of a single teacher.

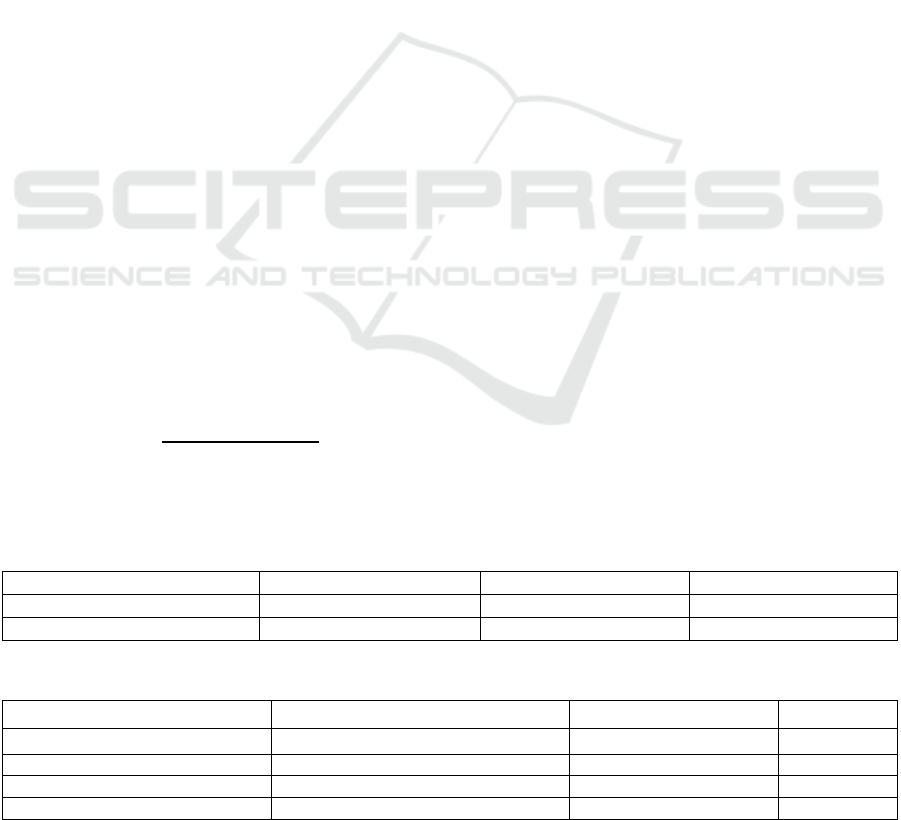

Table 1: Accuracy of teacher model on ImageNet Dataset.

Model Dataset Top-1(%) Tok-5(%)

ResNet-50 ImageNet 77.6 93.2

DenseNet-121 ImageNet 76.2 92.6

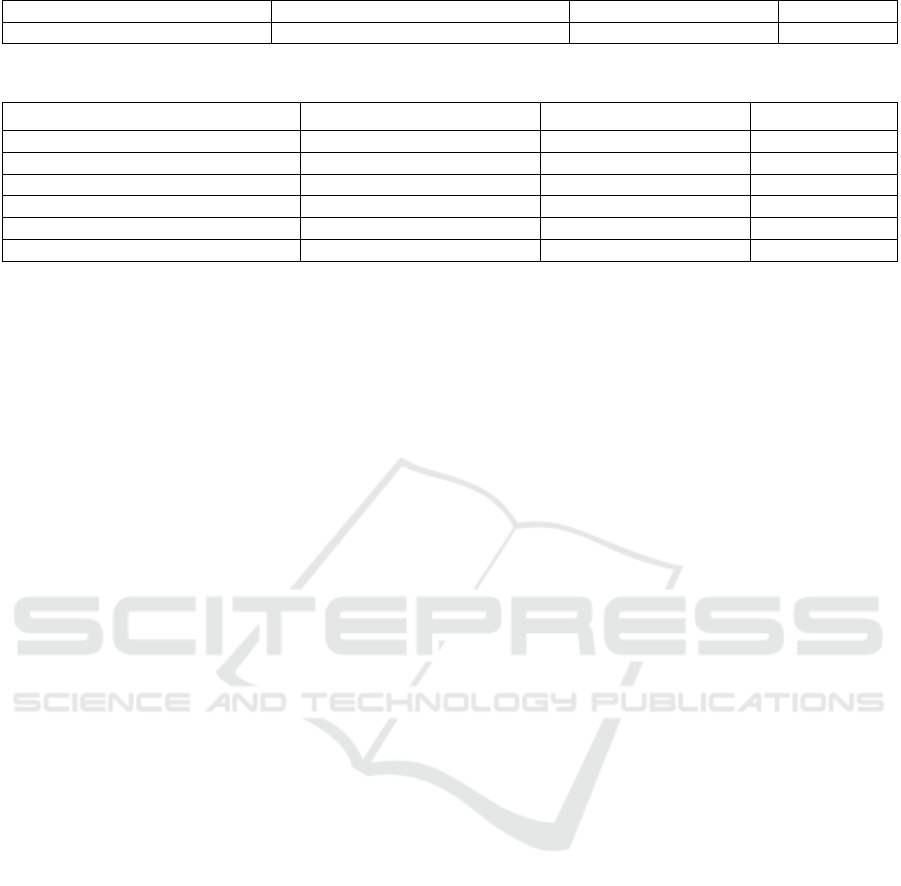

Table 2: Accuracy of few-shot learning tasks in the 1-shot settings.

Model Mini-ImageNet Omniglot CUB

MTKD(Ours) 67.40±0.18 97.74 ± 0.15 70.44 ± 0.25

MAML

(

Finn et al., 2017

)

56.72±0.22 96.50 ± 0.30 61.52 ± 0.20

ProtoNet

(

Snell et al., 2017

)

60.74±0.19 97.62 ± 0.20 65.31 ± 0.25

SAML

(

Hao et al., 2019

)

61.61±0.20 97.30 ± 0.15 66.07 ± 0.20

DAML 2024 - International Conference on Data Analysis and Machine Learning

242

MetaO

p

tNet

(

Gon

g

, 2023

)

62.79±0.20 97.53 ± 0.15 66.49 ± 0.20

Tranfer Learning+Finetune 65.95±0.20 98.45 ± 0.15 69.53 ± 0.20

Table 3: Accuracy of few-shot learning tasks in the 5-shot settings.

Model Mini-ImageNet Omniglot CUB

MTKD(Ours) 81.83±0.20 98.64 ± 0.15 82.50 ± 0.21

MAML

(

Finn et al., 2017

)

68.81±0.19 98.59 ± 0.22 74.21 ± 0.21

ProtoNet

(

Snell et al., 2017

)

79.19±0.22 98.82 ± 0.15 77.50 ± 0.19

SAML

(

Hao et al., 2019

)

78.91±0.17 98.72 ± 0.17 78.22 ± 0.22

MetaOptNet(Gong, 2023) 80.52±0.20 98.92 ± 0.17 79.39 ± 0.19

Tranfer Learning+Finetune 79.77±0.22 99.25 ± 0.16 80.26 ± 0.21

4 EXPERIMENTS

4.1 Implementation Details

In experiments, the paper adopted ResNet-50 and

DenseNet-121 as the teacher models, as these two

architectures possess different feature learning

abilities. ResNet-50, with its deeper hierarchical

structure, excels at capturing global features, while

DenseNet-121 enhances local feature extraction

through inter-layer feature reuse. Combining both

logics and features from these two teacher models, the

paper performed distillation training on the student

model, ResNet-18. The paper compared MTKD with

a baseline model that did not use distillation and was

directly trained on the small sample dataset, to

validate the effectiveness of MTKD in few-shot

learning scenarios.

In both the distillation and baseline training, the

paper conducted 1-shot and 5-shot learning tasks. The

teacher models were pre-trained on the full training

dataset, while the student model was trained under

one-shot and five-shot scenarios with guidance from

the teacher models. Paper’s method combined soft-

label distillation, hard-label supervision, and

attention-weighted feature distillation loss functions.

The student model was able to improve its feature

representation and generalization capabilities by

acquiring knowledge from both teacher models

during the distillation process.

The train process experiment employed a SGD

and use a baseline ResNet-18 model. Weight decay

occurs at a rate of 4e-4. Initially, the learning rate is

lowered to 0.01. Models were trained directly on the

one-shot and five-shot settings with different

methods. In the experiments, the paper used accuracy

as evaluation metrics.

4.2 Teacher Models on Source Dataset

The two teacher models performed exceptionally well

on the ImageNet dataset, as shown in Table 1,

indicating that they were able to capture key features

and class information in large-scale image

classification tasks. The Top-1 accuracy of ResNet-

50 was 77.6% and the Top-5 accuracy was 93.2,

while DenseNet-121 was 76.2% accurate in Top-1

and 92.6% in Top-5. Although ResNet-50 slightly

outperformed DenseNet-121, the difference in Top-5

accuracy between the two models is minimal,

indicating that both models have strong

generalization capabilities and can accurately identify

the correct class in most cases. This strong

classification performance makes these two models

well-suited as teacher models, providing effective

supervision signals for the student model in few-shot

learning tasks. Through these teacher models, the

student model can learn from rich feature

representations, overcoming the data scarcity issue in

few-shot learning and improving the overall

performance of the model.

4.3 Performance of Few Shot Learning

In Table 2, MTKD achieves higher accuracy across

all datasets compared to other methods in the 1-shot

learning tasks. On Mini-ImageNet and CUB, the

improvement is particularly evident when compared

to baseline methods like MAML and ProtoNet. This

demonstrates that MTKD can effectively extract key

features even with extremely limited data, regardless

of the small number of training samples. Unlike

MAML and ProtoNet, which tend to struggle with

distinguishing subtle inter-class differences when

sample sizes are small, MTKD, with the assistance of

teacher models, can extract features more efficiently

and generalize faster.

On the Omniglot dataset, MTKD also performs

exceptionally well, with accuracy significantly higher

Few Shot Learning via Multiple Teacher Knowledge Distillation

243

than other models. Although Omniglot is a relatively

simple dataset, the stable performance of MTKD on

this dataset further demonstrates its robustness. The

results indicate that MTKD not only excels at

learning from small amounts of data but is also

adaptable to different types of datasets, including

those with relatively simple or homogeneous classes.

However, it is also evident from the results that while

MTKD performs excellently on Omniglot, it does not

achieve the best performance among all methods. The

source dataset the teacher model used is ImageNet

and it is quite different from handwritten character

dataset, Omniglot. Obviously, there is a huge domain

gap.

According to Table 3, when performing 5-shot

learning tasks, the performance of all models

improves as the number of training samples increases.

However, MTKD continues to maintain its leading

position, especially on the Mini-ImageNet and CUB

datasets. This suggests that MTKD not only benefits

from having more samples but also consistently

maintains a significant performance gap compared to

other methods. The improved accuracy with more

samples further demonstrates MTKD's strong ability

to utilize additional information, improve

classification decisions, and reduce errors.

A key factor contributing to the enhanced

performance of MTKD is the use of distillation from

multiple teachers. By leveraging several teacher

models, the student model can assimilate diverse and

complementary insights from various sources,

leading to stronger feature representations. This

technique helps the student model improve its

generalization abilities, especially in situations where

the training data is limited or contains noise.

Although Transfer Learning + Finetune shows

competitive performance on the Omniglot dataset, its

performance is inconsistent across different datasets.

This inconsistency suggests that while transfer

learning helps leverage pre-trained knowledge, it may

struggle to adapt effectively to new categories and

tasks that differ from the source domain. In contrast,

MTKD is specifically designed for few-shot learning

tasks, making it more reliable and adaptable across

various scenarios.

Furthermore, the success of MTKD can also be

attributed to its ability to simultaneously learn both

local and global features. This allows the model to

capture fine details while also recognizing broader

patterns, giving it a distinct advantage over models

that focus predominantly on one aspect. This

balanced feature-learning approach ensures that the

model can adapt to a wide range of tasks, regardless

of the complexity of the dataset.

5 CONCLUSION

This study proposed and validated a FSL approach

based on KD. By utilizing ResNet50 and DenseNet-

121 as teacher models and ResNet18 as the student

model, the study effectively applied the principles of

knowledge distillation. This allowed the student

model, even with a limited amount of training data, to

achieve performance surpassing other few-shot

learning methods. Additionally, the paper designed a

comprehensive loss function, combining soft-label

loss, hard-label loss, and attention-weighted feature

distillation, which further enhances the student

model's feature learning capabilities while

maintaining prediction accuracy.

The experimental results show that after thorough

training of the teacher model on a large dataset,

distillation to the student model not only reduced the

model's parameter count and computational cost but

also significantly improved the student model's

generalization ability in few-shot tasks. Specifically,

in One-Shot and Few-Shot scenarios, the student

model achieved better performance after distillation

training compared to training independently on small

datasets and other few-shot learning methods. This

approach fully validated the effectiveness of

knowledge distillation in few-shot learning and

demonstrated its high practical value, especially in

resource-constrained applications. Future work will

further explore more sophisticated distillation

strategies, such as adaptive temperature parameter

adjustment, to achieve more robust model

performance across more tasks and datasets.

REFERENCES

Benaim, S., & Wolf, L. (2018). One-shot unsupervised

cross domain translation. Advances in neural

information processing systems, 31.

Chen, D., Mei, J. P., Zhang, Y., Wang, C., Wang, Z., Feng,

Y., & Chen, C. (2021, May). Cross-layer distillation

with semantic calibration. In Proceedings of the AAAI

conference on artificial intelligence (Vol. 35, No. 8, pp.

7028-7036).

Dong, B., Zhou, P., Yan, S., & Zuo, W. (2022, October).

Self-promoted supervision for few-shot transformer. In

European Conference on Computer Vision (pp. 329-

347). Cham: Springer Nature Switzerland.

Fei-Fei, L., Fergus, R., & Perona, P. (2006). One-shot

learning of object categories. IEEE transactions on

pattern analysis and machine intelligence, 28(4), 594-

611.

Finn, C., Abbeel, P., & Levine, S. (2017, July). Model-

agnostic meta-learning for fast adaptation of deep

DAML 2024 - International Conference on Data Analysis and Machine Learning

244

networks. In International conference on machine

learning (pp. 1126-1135). PMLR.

Fink, M. (2004). Object classification from a single

example utilizing class relevance metrics. Advances in

neural information processing systems, 17.

Gong, Y. (2023). Meta-learning with differentiable convex

optimization (No. 10372). EasyChair.

Hao, F., He, F., Cheng, J., Wang, L., Cao, J., & Tao, D.

(2019). Collect and select: Semantic alignment metric

learning for few-shot learning. In Proceedings of the

IEEE/CVF international Conference on Computer

Vision (pp. 8460-8469).

Hinton, G. (2015). Distilling the knowledge in a neural

network. arXiv preprint arXiv:1503.02531.

Kim, J., Park, S., & Kwak, N. (2018). Paraphrasing

complex network: Network compression via factor

transfer. Advances in neural information processing

systems, 31.

Komodakis, N., & Zagoruyko, S. (2017, June). Paying

more attention to attention: Improving the performance

of convolutional neural networks via attention transfer.

In ICLR.

Lake, B. M., Salakhutdinov, R., & Tenenbaum, J. B.

(2015). Human-level concept learning through

probabilistic program induction. Science, 350(6266),

1332-1338.

Lotfollahi, M., Naghipourfar, M., Luecken, M. D., Khajavi,

M., Büttner, M., Wagenstetter, M., ... & Theis, F. J.

(2022). Mapping single-cell data to reference atlases by

transfer learning. Nature biotechnology, 40(1), 121-

130.

Mehrotra, R., Ansari, M. A., Agrawal, R., & Anand, R. S.

(2020). A transfer learning approach for AI-based

classification of brain tumors. Machine Learning with

Applications, 2, 100003.

Rajasegaran, J., Khan, S., Hayat, M., Khan, F. S., & Shah,

M. (2020). Self-supervised knowledge distillation for

few-shot learning. arXiv 2020. arXiv preprint

arXiv:2006.09785.

Rashid, A., Lioutas, V., Ghaddar, A., & Rezagholizadeh,

M. (2020). Towards zero-shot knowledge distillation

for natural language processing. arXiv preprint

arXiv:2012.15495.

Romero, A., Ballas, N., Kahou, S. E., Chassang, A., Gatta,

C., & Bengio, Y. (2014). Fitnets: Hints for thin deep

nets. arXiv preprint arXiv:1412.6550.

Snell, J., Swersky, K., & Zemel, R. (2017). Prototypical

networks for few-shot learning. Advances in neural

information processing systems, 30.

Sung, Y. L., Cho, J., & Bansal, M. (2022). VL-Adapter:

Parameter-efficient transfer learning for vision-and-

language tasks. In Proceedings of the IEEE/CVF

conference on computer vision and pattern recognition

(pp. 5227-5237).

Wang, Y. X., Ramanan, D., & Hebert, M. (2019). Meta-

learning to detect rare objects. In Proceedings of the

IEEE/CVF International Conference on Computer

Vision (pp. 9925-9934).

Yoo, K. M., Park, D., Kang, J., Lee, S. W., & Park, W.

(2021). GPT3Mix: Leveraging large-scale language

models for text augmentation. arXiv preprint

arXiv:2104.08826.

Zhang, G., Luo, Z., Cui, K., Lu, S., & Xing, E. P. (2022).

Meta-DETR: Image-level few-shot detection with

inter-class correlation exploitation. IEEE transactions

on pattern analysis and machine intelligence, 45(11),

12832-12843.

Zhao, B., Cui, Q., Song, R., Qiu, Y., & Liang, J. (2022).

Decoupled knowledge distillation. In Proceedings of

the IEEE/CVF Conference on computer vision and

pattern recognition (pp. 11953-11962).

Few Shot Learning via Multiple Teacher Knowledge Distillation

245