Comparison of Music Composing Software: Nyquist IDE, Audacity, and Garage Band

Yuheng Lin

Sentinel Secondary, Vancouver, Canada

Keywords: Music Software, Sound Synthesis, Audio Editing, Machine Learning, Music Production.

Abstract: Music composition has advanced considerably with applications such as Nyquist IDE, Audacity, and GarageBand. This work compares the three and examines how each corresponds to different user needs. Nyquist IDE offers powerful, code-based control over sound synthesis, but it has a steep learning curve for beginners. Audacity has a simpler interface and is well suited to basic editing, though it lacks the range of advanced functions needed for professional production. GarageBand is the most accessible of the three and balances ease of use with capability, but it falls short of professional requirements because it lacks advanced tools for sound manipulation and mixing. Adding machine learning to these platforms could substantially increase their functionality, e.g., code autocompletion for Nyquist, AI-assisted editing and cleanup for Audacity, and adaptive instruments for GarageBand. These results underline that the choice of tool should be based on creative needs, and they point to future development in which the gap between casual and professional users is bridged and advanced music production tools become accessible to all.

1 INTRODUCTION

The origins of computer music reach back to the early 20th century, well before the widespread use of digital technologies. Early experiments in electronic sound prefigured more formal developments in the mid-century, especially in the post-war era (1945 to the 1970s). The first program for computer music was developed in the 1950s, marking a new era in creating sound digitally. Computer music made important progress in the 1960s and 1970s with the development of analog synthesizers and music programming systems. These early systems allowed composers to input instructions for sound synthesis that computers could follow, which laid the groundwork for later developments. They not only introduced the possibility of real-time sound synthesis but also form the basis of the digital audio tools used today by composers and sound designers. The next big jump came in the 1980s with the introduction of the MIDI standard, which allowed electronic instruments to communicate directly with computers. Musicians were then able to manipulate sounds to a much greater extent using digital audio workstations. As personal computers became powerful and widely available, musicians were able to both create and perform digital music without recourse to specialized studios (Lazzarini, 2013; Manning, 2013).

In recent years, evolutionary computation has introduced a new approach to computer music (Miranda & Biles, 2007; Gorbunova & Hiner, 2019). By using algorithms, composers can transform simple musical ideas into more complex compositions. This method has led to the creation of new music and a deeper understanding of how musical ideas grow and spread across different contexts and cultures (Burlet & Hindle, 2013). The historical foundations of computer music have developed into the advanced systems used today, while recent technological and educational innovations continue to expand the ways in which music is composed and taught.

Over the past several decades, professional and educational contexts have increasingly integrated computer music technologies. With the development of synthesizers, multimedia computers, and purpose-built software, traditional methods of music pedagogy were able to incorporate advanced technology. These digital tools have enhanced music education by making the learning process more interactive for students. They offer new ways of blending classical methodologies into digital platforms, thereby expanding the possibilities for teaching music in the digital era.

Lin, Y.

Comparison of Music Composing Software: Nyquist IDE, Audacity, and Garage Band.

DOI: 10.5220/0013512700004619

In Proceedings of the 2nd International Conference on Data Analysis and Machine Learning (DAML 2024), pages 208-213

ISBN: 978-989-758-754-2

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

At the same time, the number of musicians acting as "end-user programmers" has grown. These artists take advantage of visual programming languages such as Max/MSP and Pure Data, which support a different kind of process in music creation. Although computer musicians modify relatively little code, they still help improve the software by reporting bugs. Their focus tends to lean toward creative outcomes rather than efficiency (McPherson & Tahiroğlu, 2020).

Beyond technological developments, computer music languages also shape creative practice. Different programming languages influence the paths musicians take in composition, often according to how easily a given task can be accomplished: tasks that are easier to perform in one language than in another can steer musicians' creative choices. This interplay between the language and the resulting music gives the designers of programming languages used by digital composers considerable influence over the final artistic result.

Today, using digital software to create music has become increasingly popular. Thanks to advances in computer music, making music with software is convenient and flexible. The advantages of such tools include accessibility, ease of use, and the ability to create music anytime and anywhere without expensive equipment. It is therefore important to provide different groups of people with composing applications that fulfill their specific needs. This paper accordingly describes three popular music software applications: Nyquist IDE, Audacity, and GarageBand. Since each has functions the others lack, the comparison covers differences in user interface, sound editing, personalization, and hardware integration. By studying these features, the paper aims to show how the tools meet the demands of different types of users, so that musicians can select the most appropriate software for their creative aims.

2 DESCRIPTIONS OF MUSIC COMPOSING BASED ON SOFTWARE

Software-based music creation rests on digital sound synthesis, signal processing, and algorithmic composition. Such tools let composers create, modify, and manipulate sounds digitally, offering great flexibility and control over their musical ideas. Nyquist IDE is a code-based application that gives the user very fine control over sound synthesis. It enables users to program the behavior and transformations of a sound at its source, which suits composers working with algorithmic processes. Through code, the composer can specify the parameters of a sound and build complex, dynamic compositions with a high degree of customization. Nyquist is therefore well suited to those who want to work with the technical details of music creation, since they control both the generation and the manipulation of sounds.

Audacity is a lighter audio manipulation program that offers fewer functions for synthesizing sounds and focuses instead on manipulating existing audio. Its user interface allows musicians to slice and splice tracks and apply a variety of effects. Audacity has minimal technical overhead; it is even used in educational curricula and is ideal for those who want to edit audio files without getting mired in the details of sound creation.

GarageBand strikes a good balance between features and simplicity. With its straightforward interface, pre-made loops, and virtual instruments, it can be managed easily by anyone without broad knowledge of music theory or sound engineering. Users can create tracks by combining loops and instruments, which makes GarageBand an ideal choice for amateur musicians and a popular starting point for those learning music production. In contrast, applications such as Logic Pro and Ableton Live are designed for professional work and have specialized features for live performance, mixing, and mastering. Such platforms are developed for users who require a high level of control over sound production, and they are often used together with physical hardware such as MIDI controllers or synthesizers.


3 NYQUIST IDE

Nyquist IDE, created by Roger B. Dannenberg, is software for music composition and sound synthesis. It brings compositional structure and sound manipulation into a single uniform context (Dannenberg, 1996; Dannenberg, 1997). Whereas most systems maintain a firm separation between score and sound synthesis, Nyquist lets the composer work with both interchangeably. Based on the Lisp programming language, it offers a flexible environment in which sounds can easily be stored, modified, and reused within a composition, allowing for an efficient workflow. Nyquist is designed mainly around the concepts of functional programming. Composers have extensive control over key parameters, including pitch, duration, timbre, and other properties of the sound, through conceptually transparent means of abstraction. By directly coding the behavior and transformation of sounds, composers can make elaborate and dynamic compositions with a high degree of customization. Moreover, Nyquist supports real-time audio processing, so composers receive immediate auditory feedback, which makes it easier to find and fix bugs. The most characteristic feature of Nyquist is behavioral abstraction, which allows sounds to be transformed depending on their context in a composition. For example, time stretching can be applied in several ways to both synthesized and sampled sounds, since the mechanism is flexible and allows various types of manipulation. The possibility of dynamically changing the behavior of sounds opens a wide range of creative possibilities for composers.
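The idea of a sound whose rendering depends on its context can be illustrated with a small sketch. The code below is Python rather than Nyquist's own Lisp-based syntax, and the helper names (`tone`, `seq`, `stretch`) and the sample rate are assumptions chosen for illustration. It models a behavior as a function that produces samples only once a stretch factor from the surrounding context is known.

```python
import math

SAMPLE_RATE = 8000  # assumed low rate to keep the example small


def tone(freq_hz, base_dur_s):
    """Return a behavior: a function that renders samples for a given stretch."""
    def render(stretch_factor=1.0):
        n = int(SAMPLE_RATE * base_dur_s * stretch_factor)
        return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]
    return render


def seq(*behaviors):
    """Play behaviors one after another, passing the context down to each."""
    def render(stretch_factor=1.0):
        out = []
        for behavior in behaviors:
            out.extend(behavior(stretch_factor))
        return out
    return render


def stretch(factor, behavior):
    """Wrap a behavior so that it is rendered in a stretched time context."""
    def render(outer_factor=1.0):
        return behavior(outer_factor * factor)
    return render


phrase = seq(tone(440.0, 0.5), tone(660.0, 0.25))
normal = phrase()               # rendered at its written durations
slow = stretch(2.0, phrase)()   # the same phrase in a context twice as long
print(len(normal) / SAMPLE_RATE, len(slow) / SAMPLE_RATE)
```

The phrase is defined once and rendered differently depending on the context that encloses it, which is the essence of the abstraction described above; a real system would apply the same principle to many more parameters than duration.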

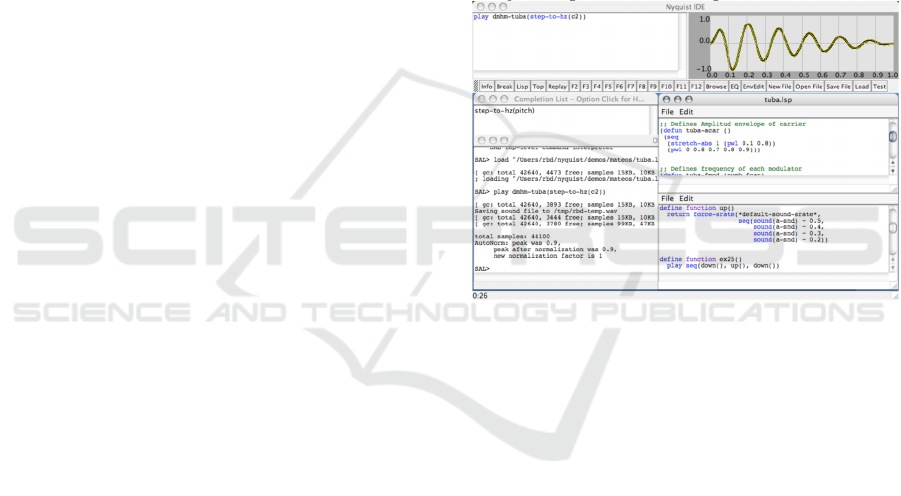

Fig. 1 gives an overview of how composers interact with Nyquist IDE. The user enters code that describes the behavior of sound parameters, while the platform provides real-time graphical feedback that helps users refine their work during composition. Nyquist is particularly effective for detailed sound manipulation, and it combines coding with visual representation to give the user a clearer view. Nyquist has also been used at institutions such as Carnegie Mellon University and in experimental music setups. In academic settings, Nyquist IDE can be a useful tool for students learning additive synthesis and frequency modulation synthesis, among other techniques. This focus allows students not only to learn about sound theory but also to apply their music and computing knowledge creatively, making it well suited to research and higher education, where students can experiment with the technical aspects of sound.
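For readers unfamiliar with the two synthesis techniques mentioned above, the following minimal Python sketch shows the arithmetic behind them. It is not Nyquist code; the function names, the sample rate, and the parameter values are assumptions for illustration only. Additive synthesis sums sine-wave partials at integer multiples of a fundamental, while frequency modulation varies the phase of a carrier sine with a second (modulator) sine.

```python
import math

SAMPLE_RATE = 8000  # assumed sample rate for the sketch


def additive_tone(freq_hz, partial_amps, duration_s):
    """Sum harmonics k * freq_hz (k = 1, 2, ...) weighted by partial_amps."""
    n = int(SAMPLE_RATE * duration_s)
    total = sum(partial_amps)  # normalise so the result stays within [-1, 1]
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        value = sum(
            amp * math.sin(2 * math.pi * freq_hz * (k + 1) * t)
            for k, amp in enumerate(partial_amps)
        )
        samples.append(value / total)
    return samples


def fm_tone(carrier_hz, modulator_hz, index, duration_s):
    """Simple FM: the modulator sine is added to the carrier's phase."""
    n = int(SAMPLE_RATE * duration_s)
    samples = []
    for i in range(n):
        t = i / SAMPLE_RATE
        phase = (2 * math.pi * carrier_hz * t
                 + index * math.sin(2 * math.pi * modulator_hz * t))
        samples.append(math.sin(phase))
    return samples


organ_like = additive_tone(220.0, [1.0, 0.5, 0.25], 0.25)
bell_like = fm_tone(220.0, 110.0, 2.0, 0.25)
print(len(organ_like), len(bell_like))
```

Changing the list of partial amplitudes or the modulation index changes the timbre, which is the kind of parameter-level control the text attributes to code-based synthesis.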

Nyquist lends itself well to experimentation, especially where composers rely heavily on algorithmic composition or real-time sound processing. In practice, it has been applied to a number of generative music projects in which compositions evolve algorithmically according to predefined rules. This use of Nyquist is particularly valuable for composing works that react to changing variables. Nyquist is therefore suitable for everything from experimental and algorithmic composition to traditional music creation. Its flexibility and interactive features make it a useful tool in sound design, composition, and live performance.

Figure 1: The Nyquist IDE (Roger B. Dannenberg, 2008).

4 AUDACITY

Audacity is free software that is widely used around the world for recording, editing, and analyzing audio material. Because it is simple and costs nothing, it has been applied in education, research, and music making (Azalia et al., 2022; Washnik et al., 2023). One of the main reasons for its popularity is probably how easy it is to install and use: its techniques can be grasped by users ranging from novices to professionals. Audacity has modest hardware requirements and comes with a friendly interface in which any user can see and edit sound waves. It supports a range of audio formats, and users can import and export files in formats such as WAV, MP3, and AIFF. Once audio has been imported, the user can edit it by cutting, copying, and pasting, or by applying effects such as noise reduction, equalization, and reverb. The visual representation of sound waves allows users to edit and adjust audio without much technical knowledge. For instance, the user can zoom in on a section of a waveform and make very small changes to improve either the quality or the timing of a recording.

Furthermore, Audacity can normally be used for

sound analysis, both in scientific and educational

settings. The strong points feature the display of

sound spectrograms showing the audio frequency

content versus time and this offers a lot in terms of

experiments dealing with waves of sound. In this

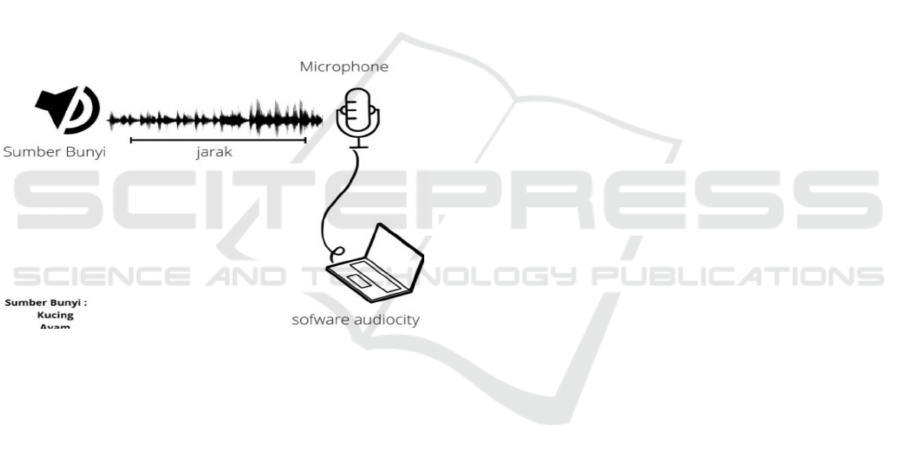

setup, as shown in Fig. 2, a microphone is connected

to Audacity and records sound from a source, such as

a cat’s meow. Then, the sound visually appears in

Audacity and can be analyzed in characteristics such

as frequency, amplitude, and time. Thus, Audacity

can help students and researchers in finding important

concepts of physics and acoustics about how sound

waves behave, interact with one another, and take part

in yielding different frequencies to result in an overall

sound.

Figure 2: Implementation Process for Audacity (Aisha Azalia, Desi Ramadhanti, Hestiana, Heru Kuswanto, 2022).

Audacity has also been used in actual scientific research. For example, one study on the sound frequencies of a variety of animals used Audacity to record and later analyze the vocalizations of several cats. The software plotted the recorded sounds as spectrograms, which allowed the researchers to see variations in frequency and amplitude between the vocalizations. This example shows how readily Audacity can process and visualize complex sound data and thereby support scientific experiments in acoustics and sound analysis.
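To make the notion of a spectrogram concrete, the sketch below computes one in plain Python: the signal is cut into frames, and a discrete Fourier transform gives the magnitude of each frequency in each frame. This only illustrates the general technique and is not a description of how Audacity computes its display; the function names, the frame size, and the synthetic test signal are assumptions for the example.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for the bins below the Nyquist frequency."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * cmath.pi * k * i / n)
                for i, x in enumerate(frame)))
        for k in range(n // 2)
    ]


def spectrogram(samples, frame_size):
    """One magnitude spectrum per non-overlapping frame (time runs along the list)."""
    return [
        magnitude_spectrum(samples[start:start + frame_size])
        for start in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def peak_frequencies(samples, sample_rate, frame_size):
    """Strongest frequency in each frame, in Hz."""
    peaks = []
    for spectrum in spectrogram(samples, frame_size):
        k = max(range(len(spectrum)), key=spectrum.__getitem__)
        peaks.append(k * sample_rate / frame_size)
    return peaks


SAMPLE_RATE = 4096  # assumed; gives 8 Hz per bin with 512-sample frames
FRAME = 512
# Synthetic stand-in for a recording: 440 Hz in the first frame, 880 Hz in the second.
signal = [
    math.sin(2 * math.pi * (440.0 if i < FRAME else 880.0) * i / SAMPLE_RATE)
    for i in range(2 * FRAME)
]
print(peak_frequencies(signal, SAMPLE_RATE, FRAME))
```

A practical analysis would use overlapping, windowed frames and a fast Fourier transform, but the frame-by-frame structure is the same one a spectrogram view presents visually.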

In addition to its applications in education and research, Audacity is most commonly used by musicians and podcasters. The software provides multitrack editing features that allow the user to layer multiple audio recordings. Although Audacity is lightweight, its functionality is comparable, for many tasks, to that of more expensive audio production programs. Musicians will find it easy to record instruments or vocals, and podcasters can record and edit shows without much hassle. Another useful feature is the ability to extend Audacity with third-party plugins for specific needs in music creation, podcast production, or scientific experiments.
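Layering recordings, as described above, amounts to adding the tracks sample by sample. The following Python sketch illustrates that idea under simple assumptions (tracks as lists of floats in the range -1 to 1, a hypothetical `mix` helper, and peak normalisation to avoid clipping); it is not Audacity's implementation.

```python
def mix(tracks, gains=None):
    """Sum tracks sample by sample, then scale down if the peak exceeds 1.0."""
    if gains is None:
        gains = [1.0] * len(tracks)
    length = max((len(track) for track in tracks), default=0)
    mixed = [0.0] * length
    for track, gain in zip(tracks, gains):
        for i, sample in enumerate(track):
            mixed[i] += gain * sample  # shorter tracks simply end earlier
    peak = max((abs(s) for s in mixed), default=0.0)
    if peak > 1.0:
        mixed = [s / peak for s in mixed]  # avoid clipping
    return mixed


vocals = [0.5, 0.5]
guitar = [0.25]
print(mix([vocals, guitar]))
```

Per-track gains play the role of the volume sliders in a multitrack editor; a louder backing track could be tamed with, for example, `mix([vocals, guitar], gains=[1.0, 0.5])`.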

5 GARAGEBAND

GarageBand is a digital audio workstation designed to be simple and straightforward for beginners while remaining capable enough for more advanced users. As part of the Apple ecosystem, GarageBand is available on Mac, iPhone, and iPad. It is widely used by people looking to create music with minimal effort, making it a popular tool for beginners and hobbyists. One characteristic of GarageBand is its intuitive interface, with controls that allow people to make professional-sounding audio. GarageBand simplifies the music production process, whether users are recording live instruments, using virtual ones, or arranging pre-recorded loops.

GarageBand provides the basic functions of music production. Users can create and layer multiple tracks using its preset virtual instruments, which range from pianos to guitars and drums. It also includes a large library of loops, which are pre-made musical phrases that can easily be combined to create whole songs. For a beginning composer, this can be a very helpful entry point that does not require a deep understanding of music theory. For those who want to record live, GarageBand can take input from a microphone or from instruments such as guitars and keyboards and record it directly into the project.

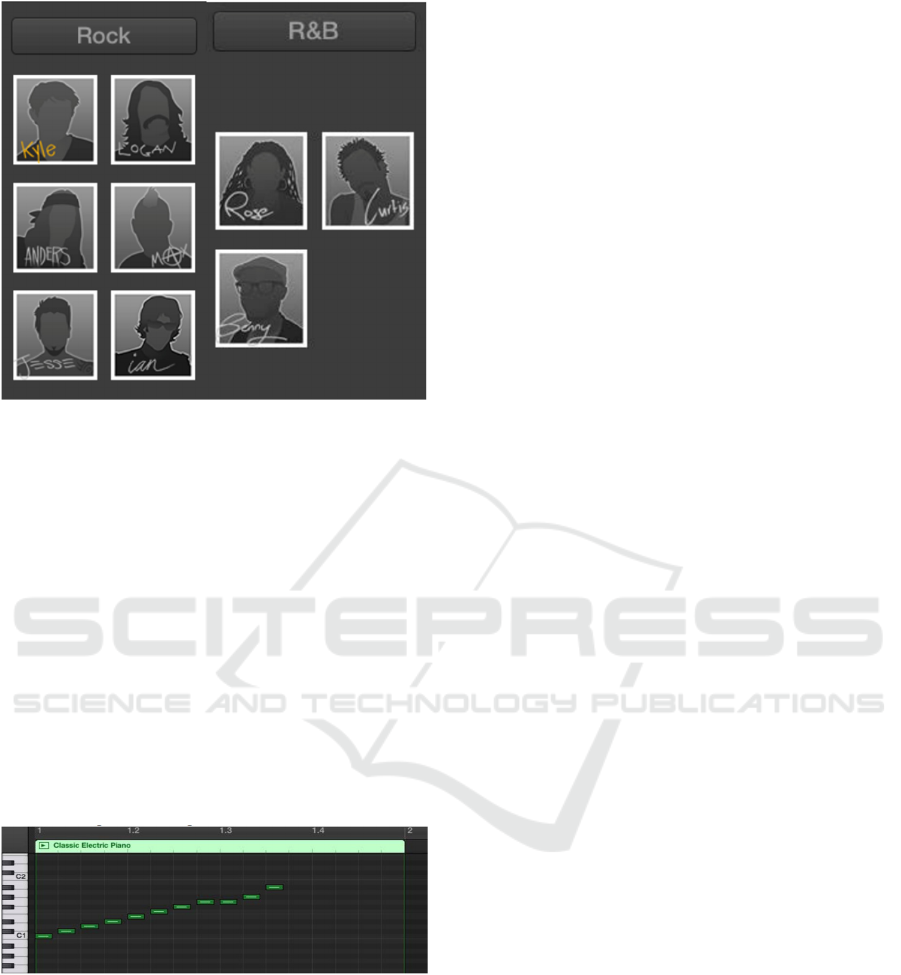

In addition to its basic features, GarageBand offers tools that provide creative flexibility. One of these is the Smart Instruments feature, which includes tools like the Smart Drummer. This function allows users to add drum tracks to their compositions by selecting from a range of virtual drummers, each with a different playing style (see Fig. 3). These drummers can be customized to fit the needs of the composition by adjusting aspects such as tempo and rhythm patterns. The feature simplifies the process of creating rhythm sections, even for users with no prior experience in programming drums (Bell, 2015; LeVitus, 2021).


Figure 3: The GarageBand drummers (Vincent C. Bates, Brent C. Talbot, 2015).

Another notable tool in GarageBand is its multitrack editing capability. The user can layer various sounds, apply effects, and mix tracks. This enables more complex compositions, in which each instrument is recorded separately and the recordings are then combined. For musicians in particular, this feature allows recordings to be edited to a professional quality. Related functionality includes the Piano Roll Editor, which edits MIDI tracks by changing individual notes, as shown in Fig. 4. The editor is especially appealing to people working with virtual instruments because it gives precise control over the timing, pitch, and duration of every note for exact editing and arrangement.

Figure 4: The piano roll editor view in GarageBand (Vincent C. Bates, Brent C. Talbot, 2015).
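The kind of note-level editing a piano roll offers can be pictured as operations on a list of notes, each with a pitch, a start time, and a duration. The Python sketch below is a hypothetical data model for illustration, not GarageBand's internal format; the `Note` class and the `transpose` and `quantize` helpers are assumed names. Pitches use MIDI note numbers (0 to 127, with 60 as middle C), and times are measured in beats.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number, 0-127 (60 = middle C)
    start: float     # position in beats
    duration: float  # length in beats


def transpose(notes, semitones):
    """Shift every pitch, clamping to the valid MIDI range."""
    return [
        replace(n, pitch=min(127, max(0, n.pitch + semitones)))
        for n in notes
    ]


def quantize(notes, grid=0.25):
    """Snap each start time to the nearest grid line (0.25 beat by default)."""
    return [replace(n, start=round(n.start / grid) * grid) for n in notes]


# Two slightly mistimed notes, as might come from a live MIDI recording.
melody = [Note(60, 0.02, 1.0), Note(64, 0.98, 0.5)]
tidy = quantize(melody)
higher = transpose(tidy, 2)
print(higher)
```

Dragging a note vertically in a piano roll corresponds to changing `pitch`, dragging it horizontally to changing `start`, and resizing it to changing `duration`.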

GarageBand has also found a place in both professional and semi-professional music production. Many people use the software for recording demos or even producing finished tracks. Composers sometimes choose GarageBand over professional music software such as Logic Pro because of its simplicity and strong editing features. While it may not have all the advanced features of more expensive DAWs, GarageBand's versatility allows users to produce high-quality music with relatively little effort.

6 LIMITATIONS AND PROSPECTS

Each of the reviewed music software platforms (Nyquist IDE, Audacity, and GarageBand) has limitations associated with its scope of use and design. A major problem facing most of these tools is the trade-off between accessibility and depth. While they serve some user groups well, they often struggle to meet broader or more advanced needs. For example, Nyquist IDE provides an extremely customizable environment for sound synthesis and music composition, but it requires substantial coding. This can be discouraging to casual users or people with no programming experience, and it increases the time needed to use the tool. The flexibility Nyquist offers with respect to real-time sound processing and behavioral abstraction is laudable but comes at the price of a steep learning curve. The lack of a more intuitive graphical interface also restricts its use in live performance and in educational settings, where non-programmers need an easier tool.

Audacity is widely praised for its simplicity but falls short on advanced features. It is very good at basic audio editing and recording but reaches its limits when real-time processing, complex multitrack editing, or sound synthesis comes into play. For more advanced users who want to do music production or sound design, its capabilities are somewhat limiting, and they need to supplement their workflow with additional software or plugins. Its reliance on third-party plugins also introduces complications, since integration is not always smooth and the software can feel fragmented.

Compared to professional DAWs such as Logic Pro or Ableton Live, GarageBand lacks more sophisticated features, for example detailed mixing and mastering capabilities or more developed sound manipulation. It is well suited to hobbyists, but its limitations become apparent when professionals push the boundaries of sound production in live performances or more intricate compositions.

Going forward, some of the most promising features for music software platforms involve machine learning. AI could address many of the deficiencies of the status quo: it can automate mundane tasks and make intelligent suggestions, and thus provide tools for all levels of user expertise. For example, Nyquist IDE could use machine learning to offer smart suggestions and autocompletion, which would help beginners write code in the tool more easily. Audacity could embed AI audio enhancement capabilities, such as instant noise reduction or auto-mastering, within the application, giving users better sound quality without requiring them to learn all the technical details involved. GarageBand might introduce adaptive virtual instruments or smart loops that adapt to user input, enabling interactive creation rather than manual adjustment.

Integrating machine learning into such platforms could also foster creativity more generally and open new frontiers. It would allow users to try out new genres, styles, and techniques through AI-driven algorithms that automatically suggest harmonies, rhythms, or even full compositions. By making music creation more accessible and providing advanced tools to all skill levels, this would narrow the gap between casual users and professionals.

7 CONCLUSIONS

To sum up, a comparison of Nyquist IDE, Audacity, and GarageBand reveals how each suits different types of users, from those who need highly sophisticated sound synthesis to those who need a simple audio editor. Nyquist IDE gives users granular control over sound through code; however, this complexity makes it difficult for beginners. Audacity is simpler, but it does not support all the advanced features needed for professional and complex music creation. GarageBand sits between the two, providing a platform that is easy for casual creators to use but that falls short in professional settings. These limitations point toward the integration of machine learning, which could improve the user experience through automation and intelligent suggestions. In the meantime, this study stresses choosing the right tool based on the creative needs of the user, and it points to future technological developments that could make music production more accessible and efficient regardless of skill level.

REFERENCES

Azalia, A., Ramadhanti, D., Hestiana, H., Kuswanto, H., 2022. Audacity Software Analysis in Analyzing the Frequency and Character of the Sound Spectrum. Journal Penelitian Pendidikan IPA, 2-6.

Bell, A. P., 2015. Can We Afford These Affordances? GarageBand and the Double-Edged Sword of the Digital Audio Workstation. Action, Criticism, and Theory for Music Education, 44-47, 57-61.

Burlet, G., Hindle, A., 2013. An Empirical Study of End-User Programmers in the Computer Music Community. Journal of New Music Research, 1-2.

Dannenberg, R. B., 1996. Nyquist: A Language for Composition and Sound Synthesis. Proceedings of the International Computer Music Conference, 1-6.

Dannenberg, R. B., 1997. Real-Time Processing in Nyquist. Proceedings of the International Computer Music Conference, 1-12.

Gorbunova, I., Hiner, H., 2019. Music Computer Technologies and Interactive Systems of Education in Digital Age School. Atlantis Press, 1-2, 9-10.

Lazzarini, V., 2013. The development of computer music programming systems. Journal of New Music Research, 1(1), 7, 13.

LeVitus, B., 2021. GarageBand For Dummies. John Wiley & Sons, Inc., 15-25.

Manning, P., 2013. Electronic and computer music. Oxford University Press, 14-16.

McPherson, A., Tahiroğlu, K., 2020. Idiomatic Patterns and Aesthetic Influence in Computer Music Languages. Cambridge University Press, 65-67.

Miranda, E. R., Biles, A., 2007. Evolutionary computer music. Springer-Verlag, 28-35.

Washnik, N., Suresh, C., Lee, C. Y., 2023. Using Audacity Software to Enhance Teaching and Learning of Hearing Science Course: A Tutorial. Teaching and Learning in Communication Sciences & Disorders, 2, 6-7.
