Car Price Prediction Based on Multiple Machine Learning Models

Hangzhi Chen

ORCID: https://orcid.org/0009-0006-6242-0598

Wuhan Experimental Foreign Languages School, Wuhan, Hubei, China

Keywords: Car Price Prediction, Linear Regression, Random Forest, XGBoost.

Abstract: This article compares three machine learning models for car price prediction. In recent decades, cars have become increasingly necessary for companies and families around the world. As the demand for cars grows, buyers face more concerns when purchasing vehicles, and price is a key factor in these decisions. Car price prediction is therefore a meaningful topic. In this paper, three machine learning models, Linear Regression, Random Forest and XGBoost, are compared. The models are applied to a train dataset and a test dataset, and their performance is evaluated by Root Mean Squared Error (RMSE) and accuracy. For the train dataset, the RMSE values for Linear Regression, Random Forest and XGBoost are 534,865.15, 155,706.68 and 302,861.88, and the accuracies are 61.64%, 96.75% and 87.70%. For the test dataset, the RMSE values are 555,802.12, 338,065.9 and 337,698.16, and the accuracies are 58.44%, 84.62% and 84.66%. The overall conclusion is that Random Forest learnt the train dataset better than XGBoost, but the two performed almost the same in predicting car prices on the test dataset. Linear Regression performed the worst on both datasets.

1 INTRODUCTION

Humans have made use of a great many means of transportation, and cars are among the most popular. Invented in 1886, cars have become an increasingly indispensable part of people's lives. More companies and families now own vehicles of different types, and many own two or more. Cars are often regarded as dependable tools for work and as reliable companions for travellers. Their use has greatly increased mobility and expanded the range of human activity on land. In recent years, the demand for cars has surged. When purchasing a car, price is a vital factor, and it can differ greatly depending on a variety of aspects. After the pandemic and dramatic changes in energy resources, wide swings are widely expected to be common in the car market in the near future. Therefore, more advanced methods are needed to predict car prices, which can contribute to

making vehicle purchase decisions. Furthermore, this will also help vehicle companies price their automobiles and define their future development direction.

With the popularization of Artificial Intelligence (AI) and related technologies (Roll, 2016; Chen, 2022; Patil, 1988), algorithms are playing vital roles on many occasions. Many representative algorithms have appeared, including random forest, decision tree and logistic regression. Machine learning algorithms have been widely applied in fields such as chemistry, biomedicine and especially business analytics. For instance, Diamantaras et al. used eight machine learning models to predict airfare prices (Diamantaras, 2017). Karthikeyan et al. used ensemble-based machine learning techniques to predict gold prices (Karthikeyan, 2019). Alipanahi et al. discussed how machine learning can determine how variations in the Deoxyribonucleic Acid (DNA) of individuals affect the risk of different diseases (Alipanahi, 2015). Car price prediction is another important application. For instance, Gegic et al. used artificial neural network, support vector

Chen, H.

Car Price Prediction Based on Multiple Machine Learning Models.

DOI: 10.5220/0013509000004619

In Proceedings of the 2nd International Conference on Data Analysis and Machine Learning (DAML 2024), pages 92-95

ISBN: 978-989-758-754-2

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

machine and random forest to predict car prices (Gegic, 2019). AI has thus been shown to produce accurate predictions. Therefore, this paper uses machine learning models to predict car prices from various features of the cars and analyzes the importance of the contributing factors.

The dataset used in the study is taken from Kaggle (Kaggle, 2021). The study uses three machine learning models to predict car prices: Linear Regression, Random Forest and XGBoost. The code implementation follows a notebook published on Kaggle (Kaggle, 2019). The models are compared by Root Mean Squared Error (RMSE) and accuracy. The feature importance function of Random Forest was also used to evaluate the importance of the contributing factors.

2 METHOD

2.1 Dataset Preparation

The dataset used in this study is taken from Kaggle (Kaggle, 2021). The original dataset includes information on 6, 1500 vehicles and 22 variables. Before the data were fed to the models, 11 of the variables, such as 'url' and 'city', were dropped because of NaN values. In addition, the 'Year' and 'Odometer' features were converted to int type.

The dataset was then split into a train dataset and a test dataset. The train dataset included 525,839 rows and the test dataset included 34,440 rows.
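
The preparation steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the code used in the study: the rows are made-up placeholders, only the column names 'url', 'city', 'Year' and 'Odometer' come from the text, and the `prepare` and `train_test_split` helpers, the 'price' column and the 20% test fraction are hypothetical choices.

```python
import random

# Made-up rows standing in for the Kaggle listings (assumption, not real data).
rows = [
    {"url": f"u{i}", "city": "x", "Year": f"{2010 + i}.0",
     "Odometer": f"{50000 + 1000 * i}.0", "price": 5000 + 500 * i}
    for i in range(10)
]

# The text drops 11 columns; only these two are named there.
DROPPED = {"url", "city"}

def prepare(row):
    """Drop unused columns and convert 'Year' and 'Odometer' to int."""
    kept = {key: value for key, value in row.items() if key not in DROPPED}
    kept["Year"] = int(float(kept["Year"]))
    kept["Odometer"] = int(float(kept["Odometer"]))
    return kept

def train_test_split(items, test_fraction, seed=0):
    """Shuffle a copy of the items and cut it into train and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

clean = [prepare(row) for row in rows]
train, test = train_test_split(clean, test_fraction=0.2)  # fraction is a placeholder
```

Fixing the random seed keeps the split reproducible, which matters when several models are compared on the same train and test rows.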

2.2 Machine Learning Model-based Prediction

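This study used Linear Regression, Random Forest and XGBoost for car price prediction. The models were implemented with scikit-learn (sklearn); XGBoost itself is distributed as the separate xgboost package, which offers a scikit-learn-compatible interface. The evaluation metrics applied in this study were RMSE and accuracy.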
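
The two metrics can be sketched as follows. The text does not define "accuracy" for a regression task; the sketch assumes it is the coefficient of determination (R²) expressed as a percentage, which is one common choice and an assumption here rather than the stated definition. The function names and sample values are illustrative only.

```python
import math

def rmse(y_true, y_pred):
    """Root Mean Squared Error: square root of the mean squared residual."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r2_score(y_true, y_pred):
    """Coefficient of determination (assumed meaning of 'accuracy')."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Illustrative prices and predictions, each off by exactly 1000.
y_true = [10000.0, 15000.0, 20000.0, 25000.0]
y_pred = [11000.0, 14000.0, 21000.0, 24000.0]

error = rmse(y_true, y_pred)                 # 1000.0
accuracy = 100.0 * r2_score(y_true, y_pred)  # about 96.8 (percent)
```

RMSE is expressed in the same unit as the price, so it depends on the price scale, while R² is scale-free; reporting both, as the study does, gives complementary views.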

2.2.1 Linear Regression

Linear Regression is a widely used machine learning model, commonly employed in statistical analysis to explore and predict relationships between variables. It fits a straight line to a set of data points so as to best capture the underlying relationship between two variables, and it is typically used for continuous target variables.

In practice, Linear Regression analyzes paired data to estimate a linear relationship, which makes it especially valuable when the relationship between the independent variable (or predictor) and the dependent variable (or outcome) is expected to be linear. The model constructs a linear equation from the input dataset; with a single predictor this is a line in a two-dimensional coordinate system, and with several predictors it generalizes to a hyperplane. This linear representation enables predictions of future outcomes by projecting the dependent variable's values from the observed trend.

However, the real-world application of Linear

Regression acknowledges that the model cannot

perfectly fit every data point. There will invariably be

deviations between the actual values and the values

predicted by the model, which are termed

residuals. The presence of these residuals indicates

the error inherent in the model predictions, reflecting

the nuances and variability in real-world data that

cannot be fully captured by a linear model.
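
The fitting of a line and the resulting residuals can be illustrated with ordinary least squares on a single predictor. This is a minimal sketch with made-up numbers (car age in years against price in thousands); the `fit_line` helper is hypothetical and is not the implementation used in the study.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up data: age of the car (years) and price (thousands).
ages = [1.0, 2.0, 3.0, 4.0, 5.0]
prices = [19.0, 18.5, 16.0, 15.5, 13.0]

slope, intercept = fit_line(ages, prices)

# Residual = actual value minus the value predicted by the fitted line.
residuals = [y - (slope * x + intercept) for x, y in zip(ages, prices)]
```

For these numbers the fitted line is approximately price = 20.9 - 1.5 × age, and the residuals sum to zero up to rounding, which is a general property of least squares with an intercept.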

To evaluate the performance of a Linear Regression model, a metric known as Root Mean Squared Error (RMSE) is used. RMSE is the square root of the mean of the squared residuals, providing insight into how much error is typically involved in the model's predictions. A lower RMSE value indicates a better fit of the model to the data, suggesting that the linear equation captures the primary trends and keeps the prediction errors small.

Despite its simplicity, Linear Regression has

profound implications across various fields, from

economics and finance to healthcare and social

sciences. It aids in understanding and quantifying the

strength of relationships between variables,

facilitating decision-making processes by providing a

clear, quantifiable link between different variables.

For instance, businesses often use Linear Regression

to predict sales based on advertising spend, or

healthcare providers might use it to predict patient

outcomes based on treatment protocols.

2.2.2 Random Forest

Random Forest is an ensemble learning method that

builds on the simplicity of decision trees by

combining multiple such trees to form a more robust

and accurate model. This technique leverages the

strength of numerous decision trees, each serving as

an estimator, to improve predictive accuracy and

control over-fitting, which is a common problem in

single decision tree models. The core idea behind

Random Forest is to create a 'forest' of trees where

each tree is slightly different from the others.

The model begins by using a technique called Bootstrap Aggregating, or bagging, to create different subsets of the original dataset by sampling with replacement. Each subset is used to train a separate decision tree. Because each subset is a different random sample, the trees in the forest are not trained identically, which makes the ensemble less sensitive to the specifics of the training data and thereby enhances the generalization capability of the model.

During prediction, Random Forest takes an

average of the outputs from all the decision trees for

regression problems, or a majority vote for

classification, to improve predictive accuracy. By

aggregating the results, the model not only reduces

the variance but also combines the individual trees’

predictions to produce a more accurate and stable

estimation than any single tree could provide.
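
Bagging and averaging for regression can be sketched in a few lines. This is a simplified illustration, not the scikit-learn implementation: each "tree" is a one-split stump on a single feature, the random feature sub-sampling that a real Random Forest performs at each split is omitted, and the data and helper names are made up.

```python
import random

def fit_stump(xs, ys):
    """Fit a one-split regression tree (a stump) by minimizing squared error."""
    best_error, best_stump = None, None
    for threshold in sorted(set(xs))[:-1]:  # keeps the right side non-empty
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((y - left_mean) ** 2 for y in left)
                 + sum((y - right_mean) ** 2 for y in right))
        if best_error is None or error < best_error:
            best_error, best_stump = error, (threshold, left_mean, right_mean)
    if best_stump is None:  # every x in the sample is identical
        mean = sum(ys) / len(ys)
        return (xs[0], mean, mean)
    return best_stump

def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def fit_forest(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest

def predict_forest(forest, x):
    """Regression output: the average of all individual tree predictions."""
    return sum(predict_stump(stump, x) for stump in forest) / len(forest)

# Made-up data: mileage (thousands of km) and price (thousands).
mileage = [10, 20, 30, 40, 50, 60, 70, 80]
price = [30, 29, 27, 26, 15, 14, 12, 11]

forest = fit_forest(mileage, price)
low_mileage_price = predict_forest(forest, 10)
high_mileage_price = predict_forest(forest, 80)
```

Because every stump sees a slightly different sample, the split thresholds vary from tree to tree, and the averaged prediction is smoother than that of any single stump.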

Despite its many benefits, such as high accuracy,

the ability to handle a large number of input variables,

and robustness to overfitting, the Random Forest

model does have some drawbacks. One significant

limitation is the loss of the interpretability that a single decision tree offers. The complex

structure of multiple trees makes it difficult to

visualize and understand the specific reasons behind

predictions, thus complicating the explanation of

model decisions to stakeholders who are not technical

experts.

Furthermore, while Random Forest is generally

good at handling overfitting, it can still perform

poorly in the presence of noisy classification or

regression problems. This occurs because noise can

lead to overly complex trees, which, even when

averaged, result in an overfitted model. Therefore,

while Random Forest is a powerful tool in the arsenal

of machine learning techniques, it requires careful

tuning of parameters like the number of trees in the

forest and the depth of each tree to optimize its

performance and prevent overfitting. This ensemble

method, hence, offers a balance between accuracy

and complexity, providing a reliable predictive tool

across various applications in fields ranging from

finance to healthcare.

2.2.3 XGBoost

XGBoost is an abbreviation for eXtreme Gradient Boosting. It is a machine learning model based on boosted trees with support for massively parallel computation. The model is additive, made up of multiple base models. When growing a tree, an exact greedy algorithm can be used to find the best split, and an approximate algorithm is a practical alternative for massive data. The advantages of XGBoost are high accuracy and flexibility, shrinkage (a learning rate applied to each new tree), built-in regularization, column sampling and support for parallel computation. Disadvantages also exist: when searching for splits, all data have to be traversed. Furthermore, the pre-sorting process has relatively high space complexity, because the index of the gradient statistics must be stored for the corresponding sample.
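
The additive structure and the role of shrinkage can be illustrated with a bare-bones gradient boosting loop for squared error. This sketch is not XGBoost itself: it leaves out the second-order gradient statistics, regularization, column sampling and parallelism mentioned above, uses one-split stumps as base models, and all names and data are made up.

```python
def fit_stump(xs, ys):
    """One-split regression tree; assumes at least two distinct x values."""
    best_error, best_stump = None, None
    for threshold in sorted(set(xs))[:-1]:  # exact greedy scan over all splits
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((y - left_mean) ** 2 for y in left)
                 + sum((y - right_mean) ** 2 for y in right))
        if best_error is None or error < best_error:
            best_error, best_stump = error, (threshold, left_mean, right_mean)
    return best_stump

def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def fit_boosting(xs, ys, n_rounds=50, learning_rate=0.1):
    """Additive model: a constant plus shrunken stumps fitted to residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps

def predict_boosting(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

def sse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds))

# Made-up data: mileage (thousands of km) and price (thousands).
mileage = [10, 20, 30, 40, 50, 60, 70, 80]
price = [30, 29, 27, 26, 15, 14, 12, 11]

model = fit_boosting(mileage, price)
sse_base = sse(price, [sum(price) / len(price)] * len(price))
sse_boosted = sse(price, [predict_boosting(model, x) for x in mileage])
```

Each round corrects only a fraction (`learning_rate`) of the remaining error, which is the shrinkage idea: many small steps tend to generalize better than a few large ones, at the cost of more rounds.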

3 RESULTS AND DISCUSSION

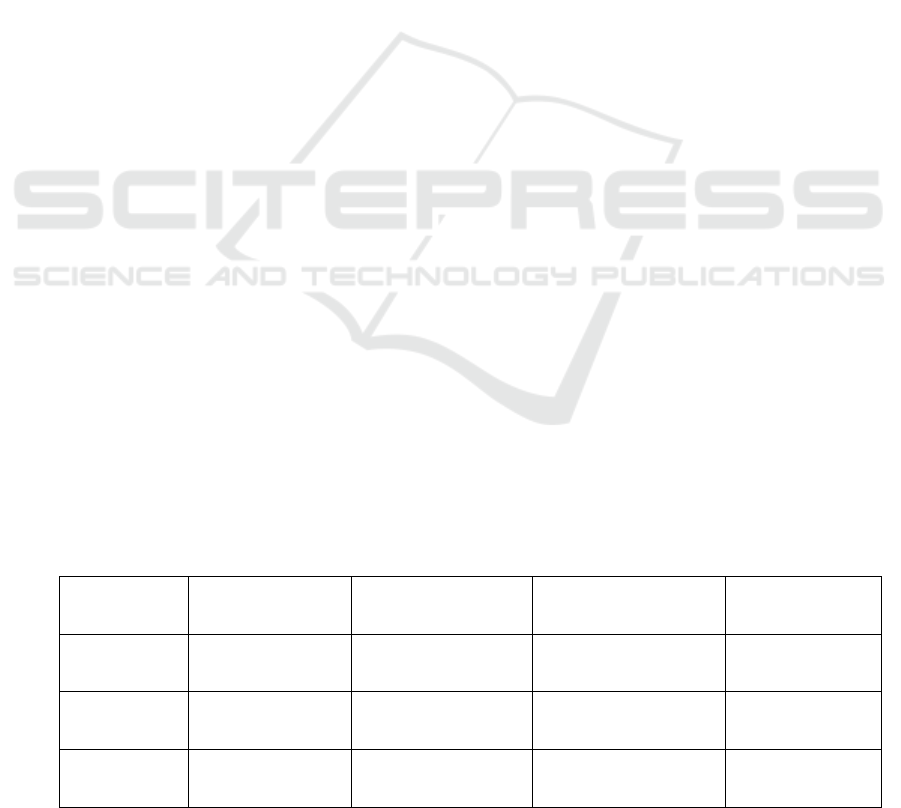

The results of the predictions are shown in Table 1. As the table illustrates, for the train dataset Random Forest reached the highest accuracy of 96.75%, XGBoost performed fairly well with 87.70%, and Linear Regression was the worst of the three with an accuracy of 61.64%. The ranking is the same for train RMSE. Random Forest had the lowest RMSE of 155,706.68, followed by XGBoost with 302,861.88 and Linear Regression with the highest RMSE of 534,865.15.

Table 1. The performance of different models

| Model name | Train Accuracy | Train RMSE | Test Accuracy | Test RMSE |
|---|---|---|---|---|
| Linear Regression | 61.64% | 534,865.15 | 58.44% | 555,802.12 |
| Random Forest | 96.75% | 155,706.68 | 84.62% | 338,065.9 |
| XGBoost | 87.70% | 302,861.88 | 84.66% | 337,698.16 |

However, when the models were applied to the test dataset, the accuracy of Random Forest was almost the same as that of XGBoost, at 84.62% and 84.66% respectively, while Linear Regression again performed the worst with an accuracy of 58.44%. The same pattern emerged for RMSE. The RMSE values of Random Forest and XGBoost were about the same, 338,065.9 and 337,698.16 respectively, and the largest remained that of Linear Regression at 555,802.12, close to its train RMSE.

Overall, Random Forest learnt the train dataset better than XGBoost, as it performed evidently better on that dataset. However, Random Forest predicted about as accurately as XGBoost did on the test dataset. Linear Regression performed the worst on both datasets.
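
The difference between train and test accuracy for each model can be made explicit with a short calculation on the values reported in Table 1. The snippet only restates those reported numbers; the variable names are illustrative.

```python
# (train accuracy %, test accuracy %) as reported in Table 1.
results = {
    "Linear Regression": (61.64, 58.44),
    "Random Forest": (96.75, 84.62),
    "XGBoost": (87.70, 84.66),
}

# Gap between train and test accuracy, in percentage points.
gaps = {name: round(train - test, 2) for name, (train, test) in results.items()}

largest_gap_model = max(gaps, key=gaps.get)
```

The gaps come out at about 3.2 points for Linear Regression, 12.13 for Random Forest and 3.04 for XGBoost, so the advantage Random Forest shows on the train dataset largely disappears on the test dataset.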

A possible reason is that Linear Regression fits a single linear model in one pass, while the other two models combine multiple trees. Random Forest and XGBoost each generate many intermediate results and aggregate them, whereas Linear Regression generates only one.

Notably, some of these explanations are based only on an understanding of the algorithms and lack further supporting data. However, in a study by Vishal Khandare and Manish Pandey, who used the same three models to predict electric car prices, the results were almost the same (Khandare, 2022). In that study, Random Forest performed the best, reaching an accuracy of 90.38%, while XGBoost reached 89% and Linear Regression 67%. The result is therefore credible to a certain extent. However, the reasons and logic behind it still need further work and research.

4 CONCLUSIONS

This paper compared the performance of three machine learning models: Linear Regression, Random Forest and XGBoost. The standards used to evaluate the results were Root Mean Squared Error (RMSE) and accuracy. The final results show that Random Forest learnt the train dataset better than XGBoost but performed almost the same in predicting car prices on the test dataset. Linear Regression performed the worst on both datasets. A possible reason is that Linear Regression fits a single model in one pass and produces only one result, far fewer than the other two models.

This article supports the feasibility of machine learning models for car price prediction and analyzed the reasons why their performances differed. The reasons given above reflect the author's own perspective and understanding, and they require more accurate data to support them. However, they can still serve as a clue for analyzing different machine learning models and selecting the best one for car price prediction.

REFERENCES

Alipanahi, B., Leung, M. K., Delong, A., & Frey, B. J.

2015. Machine learning in genomic medicine: a review

of computational problems and data sets. Proceedings

of the IEEE, 104(1), 176-197.

Chen, X., Zou, D., Xie, H., Cheng, G., & Liu, C. 2022. Two

decades of artificial intelligence in education.

Educational Technology & Society, 25(1), 28-47.

Diamantaras, K. I., Tziridis, K., Kalampokas, T., &

Papakostas, G. A. 2017. Airfare prices prediction using

machine learning techniques. 2017 25th European

Signal Processing Conference (EUSIPCO), 1036-1039.

IEEE.

Gegic, E., Isakovic, B., Keco, D., Masetic, Z., & Kevric, J.

2019. Car price prediction using machine learning

techniques. TEM Journal, 8(1), 113.

Kaggle. 2019. Used cars price prediction by 15 models.

Retrieved from https://www.kaggle.com/code/vbmokin/used-cars-price-prediction-by-15-models/notebook

Kaggle. 2021. Craigslist carstrucks data. Retrieved from https://www.kaggle.com/datasets/austinreese/craigslist-carstrucks-data

Karthikeyan, P., & Manjula, K. A. 2019. Gold price

prediction using ensemble based machine learning

techniques. 2019 3rd International Conference on

Trends in Electronics and Informatics (ICOEI), 1360-1364. IEEE.

Khandare, V., & Pandey, M. 2022. Electric Car Price

Prediction using Machine Learning Techniques.

International Journal of Engineering Research in

Computer Science and Engineering (IJERCSE), 9(11).

Patil, R. S., Szolovits, P., & Schwartz, W. B. 1988.

Artificial intelligence in medical diagnosis. Annals of

internal medicine, 108(1), 80-87.

Roll, I., & Wylie, R. 2016. Evolution and revolution in

artificial intelligence in education. International journal

of artificial intelligence in education, 26, 582-599.
