Explore the Impact and Key Role of AI Technology in Autonomous Driving

Laikai Xu

a

Sino-British College, University of Shanghai for Science and Technology, Middle FuXin Road 1195, ShangHai, China

Keywords: Artificial Intelligence, Autonomous Driving, Automotive Sensors, Neural Networks.

Abstract: Artificial intelligence (AI) is growing at an exponential pace, and its iteration cycles keep shortening, so it is likely to reach nearly every industry before long. Driverless technology in particular is advancing rapidly with the support of AI: Google's driverless project, Tesla's Autopilot, and Baidu's Apollo Go (Luobo Kuaipao) in China are already changing how people travel, and a growing number of technology companies are investing in driverless research and development. This paper discusses the key role and impact of AI in driverless technology, introduces the sensors used in self-driving cars, describes the significance of sensor fusion strategies, and analyzes the deep learning models used in data processing.

1 INTRODUCTION

Many people are unfamiliar with what a self-driving car, or autonomous vehicle (AV), actually is: a vehicle that senses the external environment through its sensors, passes the data to onboard systems for analysis, and then predicts and plans its next manoeuvre. Driverless cars rely on technologies such as the Global Positioning System (GPS), lidar and radar to capture the surrounding environment; the data are then used to determine the direction and speed of the vehicle and to predict the behaviour of other road users, such as cyclists and pedestrians (Isaac, 2016). The idea of a vehicle that moves by itself long predates the automobile. It is often traced back to Leonardo da Vinci, who around the end of the 15th century designed a small, three-wheeled self-propelled cart with a pre-programmed steering system and a parking brake, driven by a set of springs. The design is frequently described as the earliest blueprint for self-driving cars.

a https://orcid.org/0009-0002-7899-081X

Early modern experiments with autonomous cars were led by Carnegie Mellon University (CMU) and Delphi University (DELCO), using simple sensors and algorithms. By the 1990s, Carnegie Mellon's Navigation Laboratory (Navlab) had developed early prototypes of autonomous vehicles based on computer vision and sensor fusion. Over the past 20 years, autonomous driving technology has continued to mature, and technology companies such as Google and Tesla have launched their own autonomous driving systems. Although these systems are not fully autonomous, their advanced driver assistance system (ADAS) functions demonstrate the potential of AI in driving.

Today, more and more companies have joined in

and achieved a series of technological breakthroughs:

advances in deep learning, computer vision, sensor

technology (such as lidar) and high-precision map

technology have made driverless cars more reliable in

various complex environments.

In recent years, a growing number of scholars have studied how AI can enhance driverless technology, and their work suggests that AI holds promise for improving both traffic safety and traffic efficiency. According to research, about 1.35 million people die in traffic accidents worldwide every year, and about 90% of these accidents are caused by human error. AI-supported driverless technology can reduce the likelihood of human error, because AI can analyze sensor data in real time to predict and avoid potential dangers and so lower the incidence of accidents.

Xu, L.
Explore the Impact and Key Role of AI Technology in Autonomous Driving.
DOI: 10.5220/0013234100004558
In Proceedings of the 1st International Conference on Modern Logistics and Supply Chain Management (MLSCM 2024), pages 93-98
ISBN: 978-989-758-738-2
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

In addition, AI also optimizes vehicle path

planning and traffic management, which can reduce

traffic congestion and improve overall transportation

efficiency. AI can also continuously optimize the

vehicle's self-diagnosis and maintenance prediction

functions through machine learning and big data

analysis, and improve the reliability of the system

(Bhardwaj, 2024).

It is worth noting, however, that although AI is rapidly advancing driverless technology, it still has shortcomings. For example, when a driverless car encounters complex road conditions unlike any data it has processed before, the system may make wrong decisions. Extreme weather conditions can lead to similar mistakes when the AI processes sensor data.

Smarter AI models and better sensors are therefore needed so that future systems can quickly analyze unfamiliar data and make correct decisions efficiently under adverse driving conditions. This paper summarizes the models and algorithms that AI uses in driverless technology, discusses the impact that AI is likely to have on society, and considers what measures governments should take to prevent the harm that AI may cause.

2 THE KEY ROLE OF AI IN DRIVERLESS TECHNOLOGY

AI plays several key roles in driverless driving. First, AI enables self-driving vehicles to perceive the surrounding environment through sensors such as cameras, radar, lidar and GPS, and to make real-time decisions. Second, AI improves the safety of autonomous driving through algorithms, especially deep learning methods, which have made significant progress in key components such as vehicle perception, object detection and planning. As an early example, the first self-driving vehicle to use neural networks, in 1988, was able to generate control commands from camera images and laser rangefinder data. An Intel report estimated that self-driving technology will reduce users' commuting times and save hundreds of thousands of lives over the next decade. Finally, and more importantly, because the decision-making process of autonomous driving systems is opaque to humans, explainable AI (XAI) is needed to make that process transparent, enhance user trust in the system, and meet regulatory requirements (Shahin et al., 2024).

Humans perceive the outside world mainly through their senses. Similarly, a car perceives the outside world mainly through sensors, which act as its "senses" and register changes in the external environment. Sensors are therefore central to the decision-making process of autonomous vehicles.

The data they collect are heterogeneous and multimodal, and are integrated further to form effective decision-making rules. In an autonomous driving system, sensors are the basis for perceiving the vehicle's surroundings, and they provide detailed information about nearby objects, roads and traffic conditions.

Sensors can detect obstacles, identify road signs, and measure vehicle speed and position, which greatly improves driving safety. They are divided into short-range, medium-range and long-range sensors according to the transmission range of the wireless technology they use. Specific sensor types include cameras, millimetre-wave radar (MMW-Radar), GPS, inertial measurement units (IMU), lidar, ultrasonic sensors and communication modules. Each sensor has its own characteristics. For example, cameras are widely used for environmental observation and produce high-resolution images, but are affected by lighting and weather conditions. Lidar estimates distance and generates point cloud data by emitting laser pulses and measuring the time they take to reflect back, but it is expensive. Each sensor therefore has its own advantages and disadvantages (Ignatious et al., 2022).
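The time-of-flight principle behind lidar ranging can be illustrated with a short sketch. It assumes an idealized single echo and ignores atmospheric effects and timing jitter; the function name and the example echo time are hypothetical and not taken from the cited work.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to the target from the round-trip time of one laser pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A hypothetical echo arriving 200 nanoseconds after emission.
    print(f"{lidar_range_m(200e-9):.2f} m")
```

An echo delay of 200 ns corresponds to a target roughly 30 m away, which shows why lidar needs timing electronics with nanosecond resolution.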

Truly driverless operation requires a powerful sensor system, so sensor fusion is used to let the strengths of one sensor compensate for the weaknesses of another. Sensor fusion is the combination of data from multiple sensors to obtain more accurate and reliable environmental information than a single sensor can provide. Scholars have studied many sensor fusion strategies; this paper discusses three important fusion levels. (1) Low-Level Fusion (LLF): the lowest level of sensor data, also known as raw data, is integrated with nothing discarded, which improves the accuracy of obstacle detection. (2) Mid-Level Fusion (MLF): features extracted from each sensor's data are fused, such as colour information from camera images or position features from radar and lidar. (3) High-Level Fusion (HLF): each sensor independently runs its own object detection or tracking algorithm, and the results are then combined. In addition to these three levels of sensor fusion, algorithms can also be fused, for example by combining convolutional neural networks (CNN) with recurrent neural networks (RNN) (Yeong et al., 2021).
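As a rough illustration of why combining sensors can beat any single sensor, the sketch below fuses two independent distance estimates with inverse-variance weighting. This is one simple, well-known way to combine per-sensor results in the spirit of high-level fusion, not the method of the cited review; the sensor labels, readings and noise variances are made-up assumptions, and independent Gaussian noise is assumed.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    sensor: str         # hypothetical sensor label
    distance_m: float   # estimated distance to the obstacle
    variance_m2: float  # assumed measurement noise variance


def fuse(estimates: list[RangeEstimate]) -> RangeEstimate:
    """Inverse-variance weighted average of independent estimates."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    distance = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    # The fused variance is never larger than the smallest input variance.
    return RangeEstimate("fused", distance, 1.0 / total)


if __name__ == "__main__":
    radar = RangeEstimate("radar", 25.4, 0.25)
    lidar = RangeEstimate("lidar", 25.0, 0.01)
    print(fuse([radar, lidar]))
```

The fused estimate sits close to the more precise sensor while still using the noisier one, and its variance is lower than either input.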

3 THE ROLE OF MACHINE LEARNING AND DEEP LEARNING IN AUTONOMOUS DRIVING TECHNOLOGY

Machine learning (ML) is a branch of artificial intelligence that enables systems to learn from problem-specific training data, so that analytical models are built automatically and the associated tasks are solved without explicit programming. In this way, ML lets computers discover hidden insights and complex patterns in data. Deep learning (DL) is a subset of machine learning based on artificial neural networks, and focuses on using deep networks to improve learning capability.

For many applications, DL models outperform shallow ML models and traditional data analysis methods. Using deep neural network architectures, DL can also process large amounts of high-dimensional data such as text, images, video, speech and audio. DL models typically contain multiple hidden layers and can automatically discover high-level feature representations of the data (Janiesch et al., 2021). For driverless technology, ML and DL play an important role in four main areas: environmental perception, decision making, predictive modeling, and real-time processing.

3.1 The Role of Convolutional Neural

Networks in Autonomous Driving

The architecture of a CNN consists of input layers, convolutional layers, pooling layers, and fully connected layers. The key role of a convolutional neural network in autonomous driving is to detect objects on the road while the car is moving. Once trained, a CNN can recognize different road scenarios, such as collisions, open roads and traffic, and send appropriate instructions to the car, such as braking, accelerating or decelerating. Among CNN-based methods, the You Only Look Once (YOLO) algorithm is particularly worth mentioning.

YOLO is a detection technique that provides accurate results with few background errors. It has excellent learning capabilities and can learn representations of objects and apply them in object detection (Mishrikotkar, 2022). YOLO is designed for real-time applications and achieves fast detection speeds while maintaining high accuracy. Its network structure includes several main substructures, such as convBnLeaky, bottleneck, bottleneckCSP, and SPP (Spatial Pyramid Pooling), which are reused throughout the network to extract image features.

Through its convolutional layers and specially designed modules (such as CSPNet), YOLO extracts image features efficiently, gradually reducing the spatial dimensions of the feature map while increasing the feature depth (Liu et al., 2021). Most deep learning architectures for driving target object detection, but more recent work uses deep networks for vehicle control directly, in what is called an end-to-end architecture. The input to such a network comes from the vehicle's sensors, and its weights are trained against the vehicle's control signals. As shown in Figure 1, a small remote control car can negotiate obstacles in a controlled real environment using a CNN. This network uses 7 convolutional layers and 4 fully connected layers to map the raw pixel input from the front RGB camera to the steering angle, with the recorded steering angle serving as the training signal (Cameron Wesley Hodges et al., 2019).

Figure 1: Five-layer convolutional neural network

(Cameron Wesley Hodges et al., 2019).
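To make the layer sequence concrete, the following is a minimal, dependency-free sketch of a forward pass through one convolutional layer, a ReLU, a pooling layer and a fully connected layer that outputs a single steering-like value. It is far smaller than the network described above (one convolution instead of seven, untrained hand-picked weights), and every name and number in it is an illustrative assumption.

```python
def conv2d_valid(image, kernel):
    """Valid 2-D cross-correlation of a single-channel image with one kernel."""
    k = len(kernel)
    rows, cols = len(image) - k + 1, len(image[0]) - k + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(k) for b in range(k))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def relu(feature_map):
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    rows, cols = len(feature_map) // 2, len(feature_map[0]) // 2
    return [
        [
            max(feature_map[2 * i + a][2 * j + b] for a in (0, 1) for b in (0, 1))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def fully_connected(feature_map, weights, bias):
    flat = [v for row in feature_map for v in row]
    if len(flat) != len(weights):
        raise ValueError("weight vector does not match flattened feature map")
    return sum(v * w for v, w in zip(flat, weights)) + bias


def predict_steering(image, kernel, weights, bias):
    """input -> convolution -> ReLU -> pooling -> fully connected -> one scalar."""
    pooled = max_pool2x2(relu(conv2d_valid(image, kernel)))
    return fully_connected(pooled, weights, bias)


if __name__ == "__main__":
    # Toy 6x6 grayscale frame: dark on the left half, bright on the right half.
    image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
    edge_kernel = [[-1.0, 0.0, 1.0]] * 3  # responds to left-to-right brightening
    weights = [0.1] * 4  # 6x6 -> 4x4 after convolution -> 2x2 after pooling
    print(predict_steering(image, edge_kernel, weights, bias=0.0))
```

The shape comments show the same trend the text describes for deeper networks: each stage shrinks the spatial size of the feature map before the fully connected layer turns it into a control value.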


3.2 The Role of Recurrent Neural

Networks in Autonomous Driving

Technology

An RNN differs from a general feed-forward neural network in that it introduces a recurrent layer, which is the core of the RNN and allows it to process sequence data. The recurrent layer has a memory function that captures the dynamic features of a time series. In driverless technology, the recurrent layer can learn the movement pattern of a vehicle across time steps. The network can also be given a mixture density network (MDN) output layer. Unlike a traditional single-valued output, an MDN outputs a probability density function that represents the uncertainty and multimodality of the prediction. The main role of RNNs in driverless driving is therefore trajectory prediction: because they can process sequence data, they can identify the behaviour patterns and intentions of drivers at intersections.

With the MDN output layer, an RNN provides a probability distribution over predicted trajectories instead of a single deterministic prediction, which helps to deal with real-world uncertainty. So that the driverless system can respond to the most likely trajectory, the researchers introduced a clustering algorithm that extracts a set of possible trajectories from the probability distribution and ranks them by probability (Zyner et al., 2019).
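The idea of a multimodal output can be sketched with a one-dimensional Gaussian mixture. The example below is a simplification: it ranks mixture components directly by their weights rather than applying the clustering step of the cited work, and the manoeuvre labels, raw outputs, means and spreads are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Mode:
    label: str     # hypothetical manoeuvre name
    weight: float  # mixing coefficient; all weights sum to 1
    mean: float    # predicted lateral offset in metres
    std: float     # spread of that prediction


def softmax(logits):
    """Turn raw network outputs into mixing coefficients."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_pdf(modes, x):
    """Density of a 1-D Gaussian mixture at x."""
    return sum(
        m.weight
        * math.exp(-0.5 * ((x - m.mean) / m.std) ** 2)
        / (m.std * math.sqrt(2 * math.pi))
        for m in modes
    )


def rank_modes(modes):
    """Most probable mode first."""
    return sorted(modes, key=lambda m: m.weight, reverse=True)


if __name__ == "__main__":
    weights = softmax([0.2, 1.5, -0.3])  # made-up raw outputs for three manoeuvres
    modes = [
        Mode("turn left", weights[0], -3.5, 0.6),
        Mode("go straight", weights[1], 0.0, 0.4),
        Mode("turn right", weights[2], 3.5, 0.6),
    ]
    for m in rank_modes(modes):
        print(f"{m.label}: {m.weight:.2f}")
    print(f"density at 0 m: {mixture_pdf(modes, 0.0):.3f}")
```

A single-valued regressor trained on the same data would tend to predict an average of the three manoeuvres, which corresponds to none of them; keeping separate modes avoids that failure.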

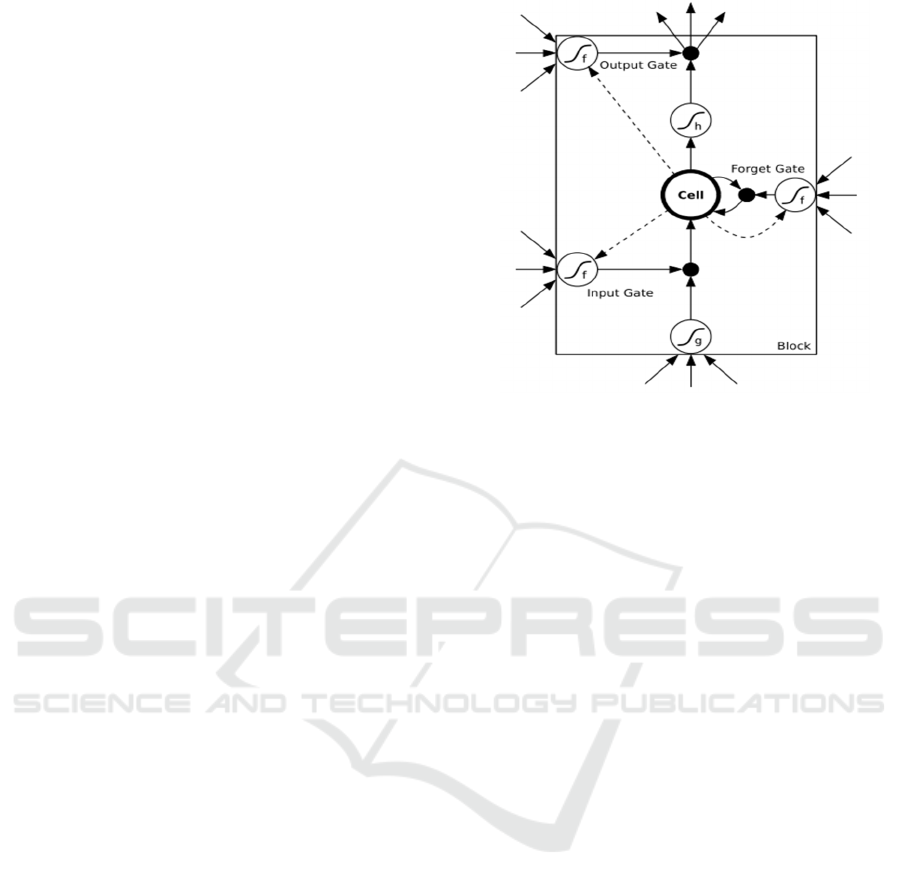

Studies have shown that an RNN framework can classify the activities of objects on the road, and that such an activity classification system can effectively identify specific dangerous situations. An RNN uses temporal context to map an input sequence to an output sequence, but problems arise when the influence of an input decays or grows exponentially as it cycles through the network's recurrent connections. Long short-term memory (LSTM) is an RNN structure that handles this problem well. As shown in Figure 2, LSTM addresses the vanishing gradient problem by replacing each node of the network with a memory cell. The memory cell remembers and accumulates the cell state, and the forget gate determines what proportion of the cell memory should be kept at the next step. The input gate determines whether the input should be admitted, and the output gate determines whether the output of the block should be passed on. Unlike in a general RNN, the back-propagated error in an LSTM does not vanish exponentially over time, so the network is easier to train (Khosroshahi et al., 2016).

Figure 2: An LSTM memory cell (Khosroshahi et al., 2016).
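The gate logic of a memory cell can be written out for the scalar case. The sketch below uses the standard LSTM equations with hand-picked, hypothetical weights (real cells are vector-valued and trained); it is meant only to show how the forget, input and output gates act on the cell state.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps each of "forget", "input", "output" and "candidate" to a
    (w_x, w_h, bias) triple; all values used here are hypothetical.
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # share of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new input to admit
    o = sigmoid(pre("output"))       # how much of the cell to expose
    g = math.tanh(pre("candidate"))  # candidate value to store
    c = f * c_prev + i * g           # additive update of the cell state
    h = o * math.tanh(c)
    return h, c


if __name__ == "__main__":
    params = {
        "forget": (0.0, 0.0, 4.0),   # large bias: forget gate stays near 1
        "input": (1.0, 0.0, 0.0),
        "output": (0.0, 0.0, 4.0),
        "candidate": (1.0, 0.0, 0.0),
    }
    h, c = 0.0, 0.0
    for t, x in enumerate([1.0, 0.0, 0.0, 0.0, 0.0]):
        h, c = lstm_step(x, h, c, params)
        print(t, round(c, 3))
```

With the forget gate held near 1, the value stored at the first step fades only slowly over the following steps, which is the mechanism that keeps back-propagated errors from vanishing.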

3.3 The Role of Deep Reinforcement

Learning Neural Network in

Autonomous Driving Technology

Driving on real roads is rarely smooth sailing. On complex road sections, traditional supervised learning struggles to cope with a complex and changing driving environment. Deep reinforcement learning addresses this problem by training an agent through interaction with the environment, so that it learns from its mistakes.

The deep reinforcement learning framework consists of spatial aggregation, an attention model, temporal aggregation, and planning. For temporal aggregation, the framework uses RNNs to integrate information over time: an RNN remembers previous state information and combines it with current observations to infer the true state of the environment. The attention mechanism selects the relevant information and so reduces computational complexity. The spatial aggregation network covers sensor fusion and spatial features: it processes data collected from multiple sensors (such as cameras and lidars) and extracts spatial features through CNNs. In addition, Bayesian filter algorithms, such as Kalman filters, are used to process sensor readings and infer the true state of the environment. Finally, reinforcement learning planning is performed through a deep Q-network (DQN) or a deep deterministic actor-critic (DDAC) algorithm. The framework was tested on the open-source 3D racing simulator TORCS (which provides complex road curvature and simple interaction scenarios), and the experimental results show that it can learn to drive autonomously in these scenarios (Sallab et al., 2017).
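The learning rule at the heart of a DQN can be shown in its simplest tabular form. The sketch below is plain Q-learning on a toy lane-keeping task, not the deep network or the TORCS setup of the cited work: the five lateral positions, the reward and all hyperparameters are assumptions chosen to keep the example small. A DQN replaces the table with a neural network but uses the same temporal-difference target.

```python
import random

N_POSITIONS = 5       # hypothetical lateral positions; index 2 is the lane centre
ACTIONS = (-1, 0, 1)  # steer left, keep, steer right
CENTRE = 2


def step(position, action):
    """Toy environment: reward 1 in the lane centre, otherwise 0."""
    nxt = min(N_POSITIONS - 1, max(0, position + action))
    return nxt, (1.0 if nxt == CENTRE else 0.0)


def train(episodes=500, steps=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_POSITIONS)]
    for _ in range(episodes):
        s = rng.randrange(N_POSITIONS)
        for _ in range(steps):
            # Epsilon-greedy exploration: mostly exploit, sometimes pick at random.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda k: q[s][k])
            s2, r = step(s, ACTIONS[a])
            # Temporal-difference update toward r + gamma * max_a' Q(s', a').
            q[s][a] += alpha * (r + gamma * max(q[s2]) - q[s][a])
            s = s2
    return q


def greedy_policy(q):
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda k: row[k])] for row in q]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy steers toward the centre from either side and holds position once there, learned purely from trial, error and reward rather than from labelled examples.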

4 CHALLENGES OF AI IN THE

FIELD OF AUTONOMOUS

DRIVING AND FUTURE

SOLUTIONS

Historically, emerging technologies have always met resistance in their own era. For example, the textile machines of the first industrial revolution replaced workers and cost many of them their jobs. Driverless technology faces a similar situation today.

Driverless cars are likely to replace human-driven taxis. With the support of AI, driverless technology is advancing by leaps and bounds, which is making people increasingly uneasy, and it will inevitably face many challenges in the future.

4.1 Challenges of AI in Autonomous

Driving

4.1.1 The Challenge Comes from AI Itself

AI systems must be able to make safe decisions in all situations. However, even though driverless technology is gradually maturing with the help of AI, it still cannot guarantee complete road safety, which highlights the limits of AI's ability to understand and process complex traffic environments. In harsh environments, moreover, it is difficult for sensors to capture road conditions in real time, so the AI cannot process the data in a timely manner. Improving the safety of AI systems also requires a large amount of training data.

Obtaining and processing these data can be very costly, and autonomous vehicles themselves are expensive to manufacture and maintain, so costs need to fall before the technology can be widely adopted. Many people also feel that the collection of user data violates their privacy rights. Finally, AI systems are highly exposed to cyber attacks, and there is currently no strong network security framework to keep AI-driven vehicles safe.

4.1.2 The Challenge Comes from Society

AI driverless cars face serious challenges in society, especially ethical ones. First, many people are unwilling to entrust their lives to a machine, and many drivers cannot overcome the psychological barrier that AI driverless cars present. In addition, driverless taxis are gradually squeezing the livelihoods of human drivers.

For example, Baidu's Apollo Go (Luobo Kuaipao) handles about 6,000 orders every day in Wuhan, which has raised concerns about the ethics of the technology: people fear that drivers will lose their jobs once it is fully deployed (Nair, 2024). Most importantly, governments lack a fair and transparent regulatory framework and legal basis for AI driverless cars.

Countries regulate driverless technology in very different ways. For example, Japan allows Level 4 autonomous vehicles provided that they comply with strict safety standards, while the United States adopts a state-by-state approach, and some states such as Arizona are very open to autonomous vehicle testing. Such a fragmented regulatory landscape is a problem for travellers who cross national borders (US, 2024).

4.2 Suggestions for Future AI

Driverless Technology

First, in the face of a complex regulatory environment, governments need to coordinate and implement unified regulatory standards, including who supervises and how. A clear legal system needs to be established so that, when an accident occurs, the legal responsibilities of the parties involved can be clarified quickly.

To address the moral and ethical concerns of the public, governments also need to formulate laws and regulations that ensure people's living standards are not harmed by AI. Second, the privacy of user data needs to be adequately protected so that users do not have to worry about their personal information being stolen while using driverless services (West, 2016).

Finally, researchers should commit to innovation: developing more capable sensors to improve AI's ability to process data in extreme weather, and improving the efficiency and performance of large AI models, especially deep neural networks, for example through research on lightweight architectures such as MobileNet and EfficientNet that can run efficiently on vehicle hardware (Oranim, 2024).
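One reason lightweight architectures fit on vehicle hardware is that MobileNet-style networks replace standard convolutions with depthwise separable ones. The sketch below compares the weight counts of the two layer types; the layer sizes are hypothetical, biases are ignored, and the comparison covers a single layer rather than a whole network.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard K x K convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a K x K depthwise convolution followed by a 1 x 1 pointwise one."""
    depthwise = kernel * kernel * c_in  # one K x K filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 128, 256        # hypothetical layer sizes
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(std, sep, round(std / sep, 1))
```

For this layer the separable form needs roughly one ninth of the weights, and the saving in multiply-accumulate operations follows the same ratio.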


5 CONCLUSIONS

AI has made great strides in the field of driverless cars. By processing the data from a driverless car's sensors, AI helps the vehicle make correct decisions quickly on the road, and sensor fusion strategies allow it to process those data better and faster. Most of this AI is built on deep learning methods within machine learning, such as convolutional neural networks, recurrent neural networks, and deep reinforcement learning frameworks, which handle environment perception, behaviour prediction, route planning and related tasks.

Although driverless technology has advanced rapidly with the support of AI, and safety is assured to a certain extent, society still has doubts about whether AI will harm people's living standards, and the technology faces serious moral and ethical challenges. In the future, governments and enterprises need to work together to address this difficult problem.

REFERENCES

Bhardwaj, V., 2024. AI-Enabled Autonomous Driving: Enhancing Safety and Efficiency through Predictive Analytics. International Journal of Scientific Research and Management (IJSRM), 12(02), 1076–1094.

Cameron, H., An, S., Rahmani, H., & Bennamoun, M., 2019. Deep Learning for Driverless Vehicles. Smart Innovation, Systems and Technologies, 83–99.

Ignatious, H. A., Sayed, H. E., & Khan, M., 2022. An Overview of Sensors in Autonomous Vehicles. Procedia Computer Science, 198, 736–741.

Isaac, L., 2016. Driving Towards Driverless: A Guide for Government Agencies.

Janiesch, C., Zschech, P., & Heinrich, K., 2021. Machine learning and deep learning. Electronic Markets, 31(3), 685–695.

Khosroshahi, A., Ohn-Bar, E., & Trivedi, M. M., 2016. Surround vehicles trajectory analysis with recurrent neural networks.

Liu, T., Du, S., Liang, C., Zhang, B., & Feng, R., 2021. A Novel Multi-Sensor Fusion Based Object Detection and Recognition Algorithm for Intelligent Assisted Driving. IEEE Access, 9, 81564–81574.

Mishrikotkar, A., 2022. Image Recognition by Using a Convolutional Neural Network to Identify Objects for Driverless Car. International Journal for Research in Applied Science and Engineering Technology, 10(2), 1210–1215.

Nair, V., 2024. Baidu Clocks 6,000 Driverless Rides Per Day in Wuhan, China. AIM.

Sallab, A., Abdou, M., Perot, E., & Yogamani, S., 2017. Deep Reinforcement Learning framework for Autonomous Driving. Electronic Imaging, 2017(19), 70–76.

Shahin, A., Salameh, M., Yao, H., & Goebel, R., 2024. Explainable Artificial Intelligence for Autonomous Driving: A Comprehensive Overview and Field Guide for Future Research Directions. IEEE Access, 1–1.

US, T., 2024. Top Autonomous Vehicle Challenges and How to Solve Them. TaskUs.

West, D., 2016. Securing the future of driverless cars. Brookings.

Yeong, D. J., Velasco-Hernandez, G., Barry, J., & Walsh, J., 2021. Sensor and Sensor Fusion Technology in Autonomous Vehicles: a Review. Sensors, 21(6), 2140.
