The Impact of Structured Prompt-Driven Generative AI on Learning Data Analysis in Engineering Students

Ashish Garg and Ramkumar Rajendran

a

IDP in Educational Technology, Indian Institute of Technology Bombay, Mumbai, India

Keywords: Large Language Model, Structured Prompt, Programming, Data Analysis.

Abstract: This paper investigates the use of Generative AI chatbots, especially large language models like ChatGPT, in enhancing data analysis skills through structured prompts in an educational setting. The study addresses the challenge of deploying AI tools for learners new to programming and data analysis, focusing on the role of structured prompt engineering as a facilitator. Engineering students were trained to use structured prompts in conjunction with Generative AI to improve their data analysis skills. A paired sample t-test comparing pre-test and post-test scores on programming and data analysis shows a significant difference, indicating learning progress. Additionally, task completion rates among these novice participants ranged from 70% on the first task to 45% on the last. These findings point to the potential of Generative AI to change learning experiences and outcomes: integrating structured prompts with Generative AI aids skill development and suggests a new direction for educational methodologies.

1 INTRODUCTION

This study highlights the significant impact of

Generative AI, especially large language models like

OpenAI's ChatGPT, on education. These AI systems,

known for their human-like text generation, are

revolutionizing education by providing interactive

and tailored learning experiences (Firaina, R., &

Sulisworo, D., 2023). In educational contexts,

Generative AI goes beyond a simple tool, becoming

key in creating dynamic learning spaces that address

diverse student needs (Ruiz-Rojas, L. I., et al., 2023).

Transitioning to the specific context of

programming and data analysis, the application of

Generative AI assumes a critical role. These complex,

data-intensive fields benefit from AI's ability to

analyze intricate data and identify patterns (Dhoni, P.,

2023). This transition enhances conceptual

understanding, allowing learners to explore the

theoretical foundations of these disciplines.

Generative AI's incorporation is set to transform

conventional educational models, providing a richer

learning experience (Sarkar, A., 2023).

Building on this foundation, this study investigates Generative AI's role in education, especially for beginners in programming and data analysis. Previous research has focused on AI code generators for experienced programmers, overlooking their potential for novices, particularly in introductory data analysis (Vaithilingam, P., Zhang, T., & Glassman, E. L., 2022). This research aims to fill that gap by examining how Generative AI can facilitate learning and enable independent data analysis. It is driven by a research question on Generative AI's practical use and its educational impact through structured prompt engineering.

a https://orcid.org/0000-0002-0411-1782

RQ: How does structured prompt engineering with Generative AI influence the mastery of programming and data analysis concepts among learners with no prior programming experience?

In response to the research question, this study involved 20 participants aged 24 to 29, all beginners in text-based programming and data analysis, from various engineering fields. They participated in structured training to understand basic data analysis concepts and use Generative AI tools. During a four-hour session, they engaged in prompt engineering with Generative AI to address data analysis tasks. The study showed significant learning improvements, with a large effect size of 0.89 and a p-value < 0.05 in the paired sample t-test on programming knowledge and data analysis concepts. These findings suggest the benefits of incorporating Generative AI and structured prompts in education, especially in domains like programming and data analysis.

Garg, A. and Rajendran, R.
The Impact of Structured Prompt-Driven Generative AI on Learning Data Analysis in Engineering Students.
DOI: 10.5220/0012693000003693
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 2, pages 270-277
ISBN: 978-989-758-697-2; ISSN: 2184-5026
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2 BACKGROUND AND LITERATURE REVIEW

The literature review examines the application of

Generative AI in educational settings, with a focus on

ChatGPT's contributions to various learning

environments. This section highlights the critical role

of structured prompt engineering and evaluates the

limited existing studies on Generative AI's use in

programming and data analysis. This section

identifies areas that require further investigation to

fully understand the educational benefits and

opportunities of Generative AI.

2.1 Generative AI in Education

Generative AI tools, such as ChatGPT, have become popular for their capacity to generate responses and content that mimic human interaction, using advanced deep learning algorithms and vast amounts of text data (Dai, Y. et al., 2023). To explore the applications and potential advantages of these AI tools in education, a systematic literature review was conducted. The applications of Generative AI are summarized as follows:

Personalized Tutoring: AI provides customized

tutoring and feedback, tailoring the learning

experience to each student's needs and progress

(Bahrini, A. et al., 2023).

Automated Essay Grading: AI systems are trained

to grade essays by identifying characteristics of

effective writing and offering feedback (De Silva, D.

et al., 2023).

Language Translation: AI translates educational

materials into multiple languages, ensuring accurate

and understandable translations (Kohnke, L.,

Moorhouse, B. L., & Zou, D., 2023).

Interactive Learning: AI creates dynamic learning

environments with a virtual tutor that responds to

student inquiries (Bahrini, A. et al., 2023).

Adaptive Learning: AI adjusts teaching strategies

based on student performance, customizing the

difficulty of problems and materials (Bahrini, A. et

al., 2023).

Content Creation: AI generates a variety of

content, including articles, stories, poems, essays,

summaries, and computer code (Rajabi, P.,

Taghipour, P., Cukierman, D., & Doleck, T., 2023).

Turning from these general applications to programming, recent studies have examined what generative AI can do with code. An experimental study showed that Codex, a programming tool powered by generative AI, outperformed learners in a CS1 class on a rainfall problem, ranking in the top quartile (Denny, P. et al., 2023). Another investigation used the flake8 tool to assess AI-generated code against the PEP8 coding style, revealing a minimal syntax error rate of 2.88% (Feng, Y. et al., 2023). A notable study involved GitHub's generative AI platform, which initially failed to solve 87 Python problems; however, applying prompt engineering techniques enabled it to resolve approximately 60.9% of them successfully (Finnie-Ansley, J. et al., 2022).

The above findings collectively highlight the efficacy of generative AI in code generation. They also point to prompt engineering as a critical factor in maximizing the potential of generative AI in educational contexts, which is the focus of the next section of the literature review.

2.2 Prompt Engineering

Maximizing Generative AI's benefits in education

relies on the proficient use of prompt engineering, a

key skill that significantly affects AI model

interactions (Kohnke, L., Moorhouse, B. L., & Zou,

D., 2023). Effective prompt engineering requires

understanding AI's operational principles, and

ensuring prompts are clear and precise to improve

tokenization and response accuracy. Including

detailed context in prompts also enhances the AI's

ability to form relevant connections, boosting

response quality.

The art of prompt engineering also involves

specifying the desired format of the AI's responses,

ensuring they align with user expectations in terms of

structure and style. Controlling verbosity within

prompts is another key aspect, allowing users to

manage the level of detail in the AI's responses, thus

tailoring the information density to suit specific

needs.

However, understanding these principles is just

the beginning. Practical application demands a

structured approach, embodied in the CLEAR

framework. This framework provides a systematic

strategy for crafting prompts that effectively harness

the capabilities of AI language models. It's a synthesis

of clarity, context, formatting, and verbosity control,

all working in concert to elevate the communication

process with AI, making it efficient and impactful in

educational settings (Lo, L. S., 2023).

The framework's elements are as follows:

Concise: Prompts should be succinct, clear, and

focused on the task's core elements to guide AI

towards relevant and precise responses.

Logical: Prompts need a logical flow of ideas,

helping AI understand context and relationships

between concepts, resulting in coherent outputs.

Explicit: Prompts must specify the expected output

format, content, or scope to avoid irrelevant

responses.

Adaptive: Prompts should be flexible, allowing

experimentation with different structures and settings

to balance creativity and specificity.

Reflective: Continuous evaluation and refinement of

prompts are crucial, using insights from previous

responses to improve future interactions (Lo, L. S.,

2023).

Additionally, some strategies for writing prompts from the OpenAI documentation are presented below:

Strategy: Write Clear Instructions

I. Include details in your query to get relevant

answers.

II. Ask the model to adopt a persona using the

system message.

III. Use delimiters to indicate distinct parts of the

input.

Strategy: Provide Reference Text

I. Instruct the model to answer using a reference

text.

II. Instruct the model to answer with citations

from a reference text.

Strategy: Split Complex Tasks into Simpler

Subtasks

I. Use intent classification to identify the most

relevant instructions.

II. Summarize long documents piecewise and

construct a full summary recursively (OpenAI,

2023).

The strategic application of prompt engineering

tactics significantly refines AI interactions, ensuring

responses are both precise and contextually relevant.
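The "write clear instructions" tactics listed above (details in the query, a persona in the system message, delimiters around distinct parts of the input) can be illustrated with a minimal sketch. The helper name `build_messages`, the role/content dictionary layout, and all example strings are assumptions made for illustration; no API is called and nothing here is taken from the study's materials.

```python
def build_messages(persona, instructions, reference_text, question):
    """Assemble a system message and a user message for a chat model."""
    # Tactic II: the persona goes into the system message.
    system_message = {"role": "system", "content": persona}
    # Tactic I: the instructions carry the details needed for a relevant answer.
    # Tactic III: triple quotes delimit the reference text from the rest.
    user_content = (
        f"{instructions}\n\n"
        "Answer using only the reference text between the triple quotes.\n"
        f'"""\n{reference_text}\n"""\n\n'
        f"Question: {question}"
    )
    return [system_message, {"role": "user", "content": user_content}]


# Hypothetical example values.
messages = build_messages(
    persona="You are a patient tutor for first-time Python learners.",
    instructions="Explain in at most 100 words and avoid jargon.",
    reference_text="Aggregation combines many rows into summary values.",
    question="What does aggregation do to a dataset?",
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

Keeping the persona, the instructions, and the delimited reference text as separate arguments makes it easy to revise one part at a time, which matches the Reflective element of the CLEAR framework.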

2.3 Gaps in Existing Literature

The existing research focuses on generative AI's

ability in code generation and problem-solving but

often misses its wider educational effects and

interactions with learners. Key areas needing further

exploration include:

Educational Impact in Data Analysis: The

educational benefits of using tools like ChatGPT in

teaching data analysis are unclear. Their influence on

student motivation, comprehension of data analysis

principles, and lasting skill acquisition needs

examination, especially for newcomers to data

analysis.

Prompt Engineering in Education: The role of

prompt engineering in educational settings is largely

unexplored. Recognized for enhancing AI

performance, it has the potential to help learners

articulate data analysis problems, think critically, and

engage creatively with tasks that need exploration.

The literature review highlights the need for this

study, focusing on prompt engineering's unexplored

potential in education, especially in data analysis. It

identifies a gap in understanding how Generative AI,

with structured prompts, affects analytical skills and

self-directed learning. This research aims to bridge

this gap, providing insights into integrating

Generative AI effectively in education and advancing

data analysis practices.

3 STUDY DESIGN

In this part, the research method is explained,

including the tasks created, the data used for these

tasks, and the tests conducted before and after to

assess the results. The discussion also covers the SUS

survey used to evaluate user satisfaction and the

training provided for effective prompt crafting.

3.1 Selection of Concept of Data Analysis for the Task

The study focuses on two essential data analysis

skills: data aggregation and merging. Aggregation

simplifies data, revealing trends and easing novices

into complex tasks, much like learning the alphabet

before forming sentences (McKinney, W., 2022).

Merging integrates diverse datasets, essential in the

data landscape, offering a unified view (McKinney,

W., 2022).
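A small example may make the two concepts concrete. The sketch below uses pandas with two toy tables; the column names and values are hypothetical and are not the study's dataset.

```python
import pandas as pd

# Hypothetical toy data; column names are illustrative only.
usage = pd.DataFrame({
    "student_id": [1, 1, 2, 3],
    "view_count": [4, 6, 5, 2],
})
schools = pd.DataFrame({
    "student_id": [1, 2, 3],
    "school_id": ["A", "A", "B"],
})

# Aggregation: collapse many rows into one total per student.
per_student = usage.groupby("student_id", as_index=False)["view_count"].sum()

# Merging: attach each student's school from the second table.
merged = per_student.merge(schools, on="student_id", how="left")

# Aggregating the merged table again gives one total per school.
per_school = merged.groupby("school_id", as_index=False)["view_count"].sum()
print(per_school)
```

Aggregation alone answers per-student questions; per-school questions need the merge first, because the school identifier lives in a different table in this toy setup.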

3.2 Dataset for the Task and Problem Statement for the Task

3.2.1 Dataset

The dataset includes data from September to January,

capturing attributes such as student video usage,

student ID, school ID, view count, and last access

date and time. Each month's dataset contains over

10,000 observations, providing a comprehensive

view of student engagement with video content. The

dataset was created specifically for the tasks and the

data analysis concepts discussed above, and it draws

inspiration from a school education program in which

students are provided tablets to enhance learning.

3.2.2 Task

Based on the given dataset, the tasks are designed so that they cannot be completed with non-programming software such as Excel or Tableau. The problem statements of the tasks are as follows:

T1: Calculate the total daily video usage for each

student across all months.

T2: Given the unique data capture cycle of student video usage (the 26th of one month to the 25th of the next), compute the monthly total video usage for each student. For example, compute each student's total video usage for October (1st October to 31st October).

T3: Calculate the monthly video usage for each

school over all the months.

For the completion of the tasks, Python

programming is selected because it is preferred in

data analytics due to its simplicity, extensive libraries

like pandas and NumPy, and compatibility with big

data and machine learning. Its strong community and

relevance in real-world applications, along with

market demand as highlighted by Zheng, Y. (2019),

make it superior to alternatives like R and Weka,

justifying its choice for this study.
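The calendar-month logic behind T2 can be sketched as follows. Because each capture cycle runs from the 26th to the 25th, a calendar month such as October is split across two cycle files, so the records have to be pooled and regrouped by the month of each access date. The records, the year, and the usage values below are hypothetical; plain Python is used to keep the logic visible, and the same grouping could be written with pandas.

```python
from collections import defaultdict
from datetime import date

# Hypothetical records pooled from two capture-cycle files
# (26 Sep to 25 Oct, and 26 Oct to 25 Nov): (student_id, access_date, usage).
records = [
    ("S1", date(2023, 9, 28), 10),   # first cycle, falls in September
    ("S1", date(2023, 10, 3), 20),   # first cycle, falls in October
    ("S1", date(2023, 10, 27), 5),   # second cycle, still October
    ("S1", date(2023, 11, 2), 7),    # second cycle, falls in November
    ("S2", date(2023, 10, 26), 8),   # second cycle, falls in October
]


def calendar_month_totals(rows):
    """Sum usage per (student, year, month), ignoring capture-cycle boundaries."""
    totals = defaultdict(int)
    for student_id, day, usage in rows:
        totals[(student_id, day.year, day.month)] += usage
    return dict(totals)


totals = calendar_month_totals(records)
print(totals[("S1", 2023, 10)])  # October total for S1 draws on both cycles
```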

3.3 Instrument Designed

The study selects specific tools to ensure the findings

are valid and reliable. It aims to closely examine the

experiences, needs, and performance of participants

to fully understand the research goals.

3.3.1 Pre and Post-Test

A set of 15 MCQs was designed, focusing predominantly on the concepts of aggregation and merging. These questions aimed to assess the participants' understanding of the concepts through Python programming. The questions span three levels of Bloom's taxonomy, with five questions at each level:

● Understanding (L1)

● Applying (L2)

● Analyzing (L3)

The questions emphasized practical application over rote learning and were relevant to the task at hand. They were drawn from the official pandas documentation and underwent multiple validations by industry experts to ensure their efficacy in gauging participant performance.

3.3.2 SUS Survey

The System Usability Scale (SUS) was adapted to

gather feedback on the Generative AI tool in data

analysis, providing a reliable evaluation of its

effectiveness and user experience (Brooke, J., 1995).

Combining SUS scores with task performance and

learning metrics allows for a detailed assessment of

the tool's impact on user proficiency.
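For reference, the standard SUS scoring rule (Brooke) can be sketched as below: ten items rated 1 to 5, odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The study adapted the SUS wording, and whether it used exactly this scoring is an assumption; the example ratings are hypothetical.

```python
def sus_score(ratings):
    """Return the 0-100 SUS score for ten ratings on a 1-5 scale."""
    if len(ratings) != 10:
        raise ValueError("SUS expects exactly 10 item ratings")
    total = 0
    for item_number, rating in enumerate(ratings, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


# Hypothetical single respondent.
example = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
print(example)
```

A study-level figure is then the mean of the per-participant scores.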

3.4 Design of Structured Prompt Training

A one-hour training session has been designed to

introduce participants to prompt engineering,

employing an example-based approach. Initially, the

CLEAR framework and strategies from OpenAI's

documentation are explained to lay the foundation.

Subsequently, two examples of structured prompts

are presented to illustrate the concepts in practice.

The first example is non-contextual, featuring a

question from the Union Public Service Commission

ethics exam, a well-known civil service recruitment

examination in India as shown in Figure 1. This

example is chosen for its general nature, ensuring that

even students with limited programming or data

analysis experience can grasp the concept of

structured prompts. The structured prompt for this question is crafted to build trust and provide an easy introduction to the topic. The prompt is written as follows. At the beginning of the prompt, the full question is clearly stated, followed by a detailed context explaining that this is a UPSC exam question, the nature of the exam, the selection process, and the roles of those selected. The requirement to limit the response to 250 words is specifically highlighted. The prompt then instructs the model to adopt the persona of an evaluator, whose profile is clearly outlined, to ensure the response meets the evaluator's expectations. Additionally, a strategy is provided to organize the answer logically, enhancing the overall response by utilizing concepts from the CLEAR framework and OpenAI strategies.

Figure 1: Example 1- Structured prompt for answering

UPSC exam question.

The second example is contextual and directly related

to the field of study. It involves showing students an

Excel file with a dataset different from the one used

in the tasks. For this dataset, a structured prompt is

written based on a specific problem statement, as

shown in Figure 2.

Figure 2: Example 2-Structured Prompt for writing Python

code for the comparison of scores for the given training

dataset.

In this case, the prompt is structured using the CLEAR framework and OpenAI strategies. It starts by clearly defining the problem statement. Next, it specifies the requirement for Python code: it states the file path of the dataset and then explicitly names the columns in this dataset. It then instructs the model to focus only on the columns relevant to the problem. Based on the problem statement, it first outlines the comparison metrics and then provides instructions for advanced analysis, including checking assumptions. It also clearly states what actions to take if the assumptions are not met. This is all organized in a logical sequence. At the end of the prompt, there is a request to provide the code and explain each part of the syntax step by step, which helps the user understand the process and learn in segments. This approach not only demonstrates the application of structured prompts in a relevant context but also prepares students for the types of tasks they will encounter. This careful, step-by-step approach ensures that all participants, regardless of their background, can effectively engage with and understand the principles of prompt engineering, setting a solid foundation for their subsequent tasks in data analysis.
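The ordering described for this second example can be sketched as a small prompt builder. This is an illustration of the sequence only, not the prompt shown in Figure 2: the function name, the file path, the column names, and the metric and assumption wording are all hypothetical.

```python
def build_analysis_prompt(problem, file_path, columns, relevant_columns,
                          metrics, assumption_checks, fallback):
    """Join the prompt parts in the order described in the text."""
    sections = [
        f"Problem statement: {problem}",
        "Write Python code for this problem.",
        f"Dataset file path: {file_path}",
        f"Columns in the dataset: {', '.join(columns)}",
        f"Use only these columns: {', '.join(relevant_columns)}",
        f"Comparison metrics: {', '.join(metrics)}",
        f"Then check these assumptions: {', '.join(assumption_checks)}",
        f"If an assumption is not met: {fallback}",
        "Provide the code and explain each part of the syntax step by step.",
    ]
    # Numbering the parts keeps the logical sequence explicit for the model.
    return "\n".join(f"{i}. {text}" for i, text in enumerate(sections, start=1))


# Hypothetical values for illustration.
prompt = build_analysis_prompt(
    problem="Compare pre-training and post-training scores.",
    file_path="data/training_scores.xlsx",
    columns=["participant_id", "department", "pre_score", "post_score"],
    relevant_columns=["pre_score", "post_score"],
    metrics=["mean", "median", "standard deviation"],
    assumption_checks=["normality of the score differences"],
    fallback="use a non-parametric alternative and say which one was used.",
)
print(prompt)
```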

4 USER STUDY

This study examined the impact of Generative AI and

structured prompt engineering on novices learning

data analysis, using a four-hour session with Python

and AI tools. Participants independently completed

tasks, with researcher guidance and continuous AI

access, to assess how structured prompts affect

learning in a condensed time frame.

4.1 Participants

This study involved 20 graduate-level participants, all

familiar with ChatGPT or similar AI tools but without

formal training in programming or data analytics.

This selection ensured a uniform baseline of

understanding across the 12 male and 8 female

participants, aged 24 to 29. Each participant had

access to ChatGPT 3.5 and shared English as their

formal education language, minimizing language

barriers. Their lack of prior prompt engineering

experience set a consistent starting point for all,

crucial for examining the impact of structured prompt

training on their data analysis skills using Generative

AI. This purposive sampling was important for

maintaining a controlled study environment and

focusing on the specific research objectives.

4.2 Study Procedure

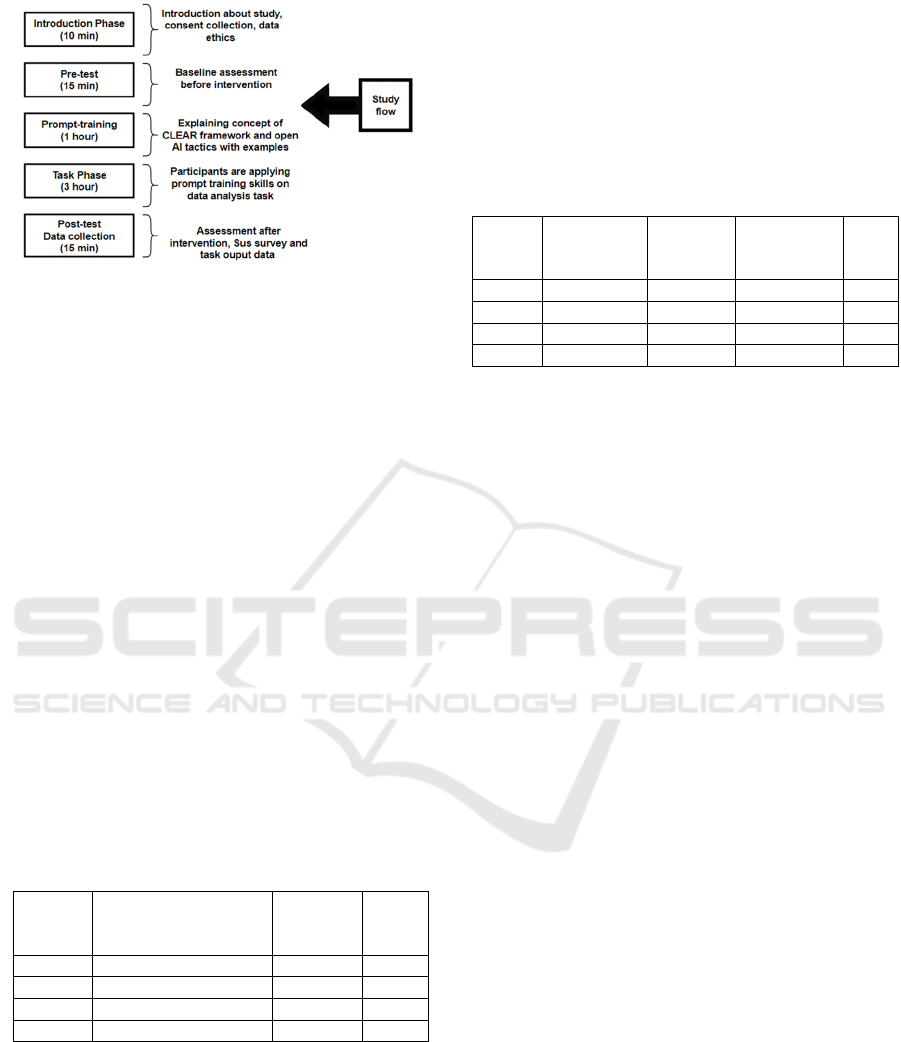

In this study, as shown in Figure 3, participants were

initially briefed on the impact of Generative AI in

data analysis and consented to ethical data collection

and privacy practices. A pre-test then assessed their

existing knowledge, establishing a baseline for

subsequent phases. During the training phase, they

engaged in a one-hour session on structured prompt

writing, essential for effective interaction with

Generative AI tools like OpenAI's ChatGPT, and

practiced crafting prompts through contextual and

non-contextual examples. In the task phase, they

applied prompting skills over three hours, tackling

various data analysis tasks and refining their

proficiency. Post-intervention, their skills were

reassessed to quantify the training's effectiveness.

Figure 3: Flow chart of conducted study.

Comprehensive data collection included a consent form, pre- and post-tests, task-wise output files, and a System Usability Scale survey, providing a multidimensional understanding of the participants' learning journeys and the influence of Generative AI tools on enhancing data analysis learning.

5 RESULTS

In this part, we look at the data gathered to understand

how structured prompt engineering and Generative

AI affect learning results.

Following the initial overview, the analysis began with Levene's test on pre-test scores for Bloom's taxonomy levels: understanding (L1), applying (L2), and analyzing (L3). The test checks variance homogeneity among participants, which is essential for valid statistical analysis. Its null hypothesis assumed no difference in the variance of scores across all three levels. Detailed test results are presented in Table 1, laying the groundwork for further analysis.

Table 1: Levene’s test on pre-test scores of participants.

| Sr. No | Level | Levene’s test value | P value |
|---|---|---|---|
| 1 | L1_Pre_Test | 0.13 | 0.72 |
| 2 | L2_Pre_Test | 2.66 | 0.12 |
| 3 | L3_Pre_Test | 0.13 | 0.72 |
| 4 | Total_score_Pre_Test | 0.15 | 0.70 |
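For readers unfamiliar with the statistic in Table 1, a minimal sketch of Levene's W follows. The paper does not state which groups were compared or whether deviations were taken from the mean or the median, so the mean-centred form and the two groups of scores below are assumptions made for illustration.

```python
from statistics import mean


def levene_w(*groups):
    """Mean-centred Levene statistic W for two or more groups of scores."""
    k = len(groups)
    n_total = sum(len(group) for group in groups)
    # Absolute deviation of every score from its own group mean.
    deviations = []
    for group in groups:
        centre = mean(group)
        deviations.append([abs(score - centre) for score in group])
    group_means = [mean(dev) for dev in deviations]
    grand_mean = sum(sum(dev) for dev in deviations) / n_total
    between = sum(len(dev) * (m - grand_mean) ** 2
                  for dev, m in zip(deviations, group_means))
    within = sum((value - m) ** 2
                 for dev, m in zip(deviations, group_means)
                 for value in dev)
    return (n_total - k) / (k - 1) * between / within


# Hypothetical scores for two groups.
w = levene_w([1, 2, 3], [2, 4, 6])
print(round(w, 3))
```

The p-value comes from comparing W with an F distribution with (k - 1, N - k) degrees of freedom, which is usually left to a statistics library.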

Since all p-values in Table 1 are greater than 0.05, the null hypothesis is not rejected, which supports homogeneity among participants. The analysis then compared pre- and post-intervention test performance across Bloom's taxonomy levels (understanding, applying, and analyzing) and on overall scores. For this comparison, a paired sample t-test was used, with the null hypothesis that there is no significant difference between test scores before and after the intervention.

From Table 2, for all three levels of Bloom's

taxonomy and the total score, the null hypothesis for

the t-test was rejected, indicating a statistically

significant difference between pre-test and post-test scores.

Table 2: Paired sample t-test statistics for pre-test and post-test scores of the participants.

| Sr. No | Level | Test statistic t (1,19) | P value | Effect size |
|---|---|---|---|---|
| 1 | L1 | 6.77 | 9.04E-07 | 0.84 |
| 2 | L2 | 6.097 | 3.66E-06 | 0.81 |
| 3 | L3 | 4.29 | 1.97E-04 | 0.70 |
| 4 | Total score | 8.66 | 2.51E-08 | 0.89 |
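The effect sizes in Table 2 are numerically consistent with the correlation-type effect size r = sqrt(t² / (t² + df)) with df = 19. The paper does not name the formula it used, so this is an inference from the reported numbers rather than a statement of the authors' method. The sketch below computes a paired t statistic from raw scores and recomputes r from the reported t values; the raw scores in the usage lines are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t, df) for a paired sample t-test on matched scores."""
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def effect_size_r(t, df):
    """Correlation-type effect size derived from a t statistic."""
    return math.sqrt(t ** 2 / (t ** 2 + df))


# t values as reported in Table 2; df = 19 for 20 participants.
reported_t = {"L1": 6.77, "L2": 6.097, "L3": 4.29, "Total score": 8.66}
recomputed = {level: round(effect_size_r(t, 19), 2)
              for level, t in reported_t.items()}
print(recomputed)

# Hypothetical raw scores, only to show how paired_t is called.
t_demo, df_demo = paired_t([1, 2, 3, 4], [2, 4, 5, 8])
print(round(t_demo, 3), df_demo)
```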

From the assessment of task output files, the task completion data reveals a clear trend in students' performance, with a higher success rate on the initial task (T1) than on the more complex subsequent tasks (T2 and T3). Specifically, 70% of students completed T1 successfully, while only 55% and 45% of students were able to complete tasks T2 and T3, respectively. This trend shows that while students could manage simple data analysis tasks, they found it difficult when the tasks needed more advanced skills, especially in merging data.

The System Usability Scale (SUS) survey yielded

a mean score of 72.38 and a standard deviation of

8.45, indicating a generally positive reception of the

Generative AI tool's usability in data analysis

learning. Participants perceived the tool as user-

friendly and effective, reflecting a satisfactory user

experience overall.

Concluding the data analysis, we observed significant advancements in learning outcomes through structured prompt engineering and Generative AI. The discussion section explores the deeper implications of these findings.

6 DISCUSSION AND CONCLUSION

In addressing the RQ, the study's focus was to understand

the influence of structured prompt engineering with

Generative AI on the mastery of programming and

data analysis concepts among learners with no prior

experience. The quantitative analysis, initiated with

the Levene Test, established a uniform baseline

across participants, ensuring the validity of

subsequent comparisons. The paired sample t-tests

revealed a statistically significant improvement in

participants' performance across all levels of Bloom's

taxonomy post-intervention, indicating an improved

understanding and application of programming

concepts crucial for data analysis.

The substantial effect sizes reported in the t-test

results underscore the profound impact of the

intervention on learners' ability to grasp and apply

programming principles within the context of data

analysis. Additionally, task completion rates after

structured prompt training with Generative AI

suggest the significant influence of the intervention

on participants' ability to understand and execute data

analysis tasks. This progress goes beyond mere

memorization, indicating a shift towards the

comprehension of the underlying principles.

Furthermore, the System Usability Scale (SUS)

survey results, indicating a positive reception of the

Generative AI tool's usability, complement the

study's findings. A user-friendly and effective tool is

crucial in an educational setting, as it can significantly

reduce the cognitive load on learners, allowing them

to concentrate on understanding and applying the

concepts rather than navigating the tool itself.

This study sheds light on the potential of

Generative AI and structured prompt engineering to

transform educational methods. The results of this

research suggest that Generative AI can play a crucial

role in helping learners understand complex subjects

like programming and data analysis. Moreover, the

usability of structured prompts has been instrumental

in providing students with clear, actionable guidance

through intricate learning tasks, enhancing their

engagement and helping them to master skills.

However, the study acknowledges its limitations,

including the absence of log data analysis and

qualitative data like interviews which could provide

deeper insights into the behavioral patterns of high

and low performers. The relatively small sample size

also restricts the generalizability of the findings.

7 FUTURE WORK

Future research on integrating Generative AI and

structured prompt engineering in education,

especially in programming and data analysis, is set to

deepen our understanding of its effects on learning.

Planned comparative studies can examine the

learning outcomes of groups with varying levels of

access to ChatGPT and prompt training, aiming to

understand the role of Generative AI in learner

engagement and educational processes. These studies

can expand participant diversity and employ methods

like structured interviews and task analysis to capture

detailed learner interactions and perceptions. A key

focus will be evaluating the quality of participants'

prompts to enhance critical thinking and refine

training methods. Expected to enrich learning

theories and Human-Computer Interaction

frameworks, this research will help explore how

Generative AI can innovate pedagogy and create

personalized, accessible educational experiences

worldwide.

REFERENCES

Bahrini, A. et al. (2023). ChatGPT: Applications,

opportunities, and threats. 2023 Systems and

Information Engineering Design Symposium (SIEDS),

Charlottesville, VA, USA, 274-279.

https://doi.org/10.1109/SIEDS58326.2023.10137850

Brooke, J. (1995, November 30). SUS: A quick and dirty

usability scale. Usability Evaluation in Industry, 189.

Dai, Y., et al. (2023). Reconceptualizing ChatGPT and

generative AI as a student-driven innovation in higher

education. Procedia CIRP, 119, 84-90.

https://doi.org/10.1016/j.procir.2023.05.002

De Silva, D., Mills, N., El-Ayoubi, M., Manic, M., &

Alahakoon, D. (2023). ChatGPT and generative AI

guidelines for addressing academic integrity and

augmenting pre-existing chatbots. Proceedings of the

IEEE International Conference on Industrial

Technology, 2023-April.

https://doi.org/10.1109/ICIT58465.2023.10143123

Denny, P., Kumar, V., & Giacaman, N. (2023). Conversing

with Copilot: Exploring prompt engineering for solving

CS1 problems using natural language. In Proceedings

of the 54th ACM Technical Symposium on Computer

Science Education (SIGCSE 2023) (pp. 1-7). ACM.

https://doi.org/10.1145/3545945.356982

Dhoni, P. (2023, August 29). Exploring the synergy

between generative AI, data, and analytics in the

modern age. TechRxiv.

https://doi.org/10.36227/techrxiv.24045792.v1

Feng, Y., Vanam, S., Cherukupally, M., Zheng, W., Qiu,

M., & Chen, H. (2023). Investigating code generation

performance of ChatGPT with crowdsourcing social

data. In 2023 IEEE 47th Annual Computers, Software,

and Applications Conference (COMPSAC) (pp. TBD).

IEEE.

Finnie-Ansley, J., Denny, P., Becker, B.A., Luxton-Reilly,

A., & Prather, J. (2022). The robots are coming:

Exploring the implications of OpenAI Codex on

introductory programming. In Proceedings of the 24th

Australasian Computing Education Conference (pp.

TBD). Virtual Event, February 14–18, 2022.

Firaina, R., & Sulisworo, D. (2023). Exploring the usage of

ChatGPT in higher education: Frequency and impact on

productivity. Buletin Edukasi Indonesia, 2(01), 39–46.

https://doi.org/10.56741/bei.v2i01.310

Kohnke, L., Moorhouse, B. L., & Zou, D. (2023). ChatGPT

for language teaching and learning. RELC Journal,

54(2), 537-550.

https://doi.org/10.1177/00336882231162868

Lo, L. S. (2023). The art and science of prompt engineering:

A new literacy in the information age. Internet

Reference Services Quarterly.

https://doi.org/10.1080/10875301.2023.222762

McKinney, W. (2022). Python for data analysis: Data

wrangling with pandas, NumPy, and Jupyter (3rd ed.).

OpenAI. (n.d.). Best practices for using GPT. Retrieved

October 20, 2023, from

https://platform.openai.com/docs/guides/gpt-best-

practices

Rajabi, P., Taghipour, P., Cukierman, D., & Doleck, T.

(2023). Exploring ChatGPT's impact on post-secondary

education: A qualitative study. ACM International

Conference Proceeding Series, art. no. 9.

https://doi.org/10.1145/3593342.3593360

Ruiz, L., Acosta-Vargas, P., De-ta-Llovet, J., & Gonzalez,

M. (2023). Empowering education with generative

artificial intelligence tools: Approach with an

instructional design matrix. Sustainability, 15(15),

11524. https://doi.org/10.3390/su151511524

Sarkar, A. (2023). Will code remain a relevant user

interface for end-user programming with generative AI

models? In Proceedings of the 2023 ACM SIGPLAN

International Symposium on New Ideas, New

Paradigms, and Reflections on Programming and

Software (Onward! 2023) (pp. 153–167). Association

for Computing Machinery.

https://doi.org/10.1145/3622758.3622882

Vaithilingam, P., Zhang, T., & Glassman, E. L. (2022).

Expectation vs. experience: Evaluating the usability of

code generation tools powered by large language

models. In CHI Conference on Human Factors in

Computing Systems Extended Abstracts (pp. 1–7).

Zheng, Y. (2019). A comparison of tools for teaching and

learning data analytics. Conference on Information

Technology Education, 26 Sep 2019, pp. 160
