Understanding How Different Visual Aids for Augmented Reality Influence Tool-Patient Alignment in Surgical Tasks: A Preliminary Study

Stefano Stradiotti (a), Nicolas Emiliani (b), Emanuela Marcelli (c) and Laura Cercenelli (d)

eDIMES Lab - Laboratory of Bioengineering, Department of Medical and Surgical Sciences, University of Bologna, via Massarenti, 9, Bologna, Italy

Keywords:

Augmented Reality, Surgery, Magic Leap 2, Visual Aids, Marker Tracking, 6DoF, Alignment, Accuracy, Image-Guided Surgery, Visualization and Rendering Techniques.

Abstract:

This study explores the impact of several visual aids on the accuracy of tool-patient alignment in augmented reality (AR)-assisted surgical tasks. AR has gained prominence across surgical specialties by integrating virtual models derived from patient anatomy into the surgical field, which opens avenues for innovative visual aids and feedback that can facilitate surgical operations. To assess the influence of different visual aids on surgeon performance, we conducted a tool-patient alignment test on a 3D-printed frame involving 12 surgical residents. Each participant inserted 12 toothpicks with a release tool into predefined target positions on the frame, simulating patient targets, under AR visualization through a Magic Leap 2 head-mounted display. Four holographic solutions were employed as visual aids, two of which offered graphical feedback upon correct alignment with the target. Linear and angular positioning errors were measured, alongside participant responses to a satisfaction questionnaire. The same tracking system was used throughout the tests to estimate target and tool poses in the real world, ensuring a stable evaluation. Preliminary results indicated statistically significant differences among the proposed visual aids, suggesting that their usability should be further explored in relation to the specific surgical task and the expected overall surgical accuracy.

1 INTRODUCTION

Augmented Reality (AR) technology, which emerged in the early 1990s, enables users to observe real-world and computer-generated images at the same time, supplementing the user's view with information and prompts to achieve an “augmentation” of the real world (Jiang et al., 2023; Fraga-Lamas et al., 2018; Carmigniani and Furht, 2011). In recent years, AR has been adopted in many surgical specialties because AR systems can integrate virtual models built from medical image data and the real surgical scene into a unified view. This augmented visualization offers surgeons an unparalleled means of accessing critical anatomical details and of visualizing guidance information directly on the patient’s body. Ensuring surgical accuracy with AR guidance depends, among other factors, on careful pre-operative planning, because highly precise virtual models (i.e., high-quality 3D reconstructions from the patient’s CT or MR scans) must be overlaid onto the real-world scene during surgery. Moreover, dependable operation of the real-world tracking systems is crucial for accurately overlaying virtual objects and for effective coordination between the surgeon’s vision and manual dexterity (Condino et al., 2023; Cercenelli et al., 2022; Fitzpatrick, 2010). The effectiveness of this coordination may also depend on the choice of visual aids (Cercenelli et al., 2023; Ruggiero et al., 2023; Schiavina et al., 2021; Battaglia et al., 2020; Cercenelli et al., 2020; Fida et al., 2018; Meola et al., 2017), and this is what we investigate in this study.

(a) https://orcid.org/0009-0008-5309-3876
(b) https://orcid.org/0009-0001-2146-6155
(c) https://orcid.org/0000-0002-5897-003X
(d) https://orcid.org/0000-0001-7818-1356

Several studies have evaluated the accuracy of “image-to-patient” registration with different AR technologies. For example, a study using AR in virtual nasal endoscopy found an accuracy of 1.3 cm (Barber et al., 2018), while others reported a target position error of 1.19±0.42 mm in a similar setting (Li et al., 2016). It has also been demonstrated that markers positioned directly on the patient’s body near the anatomical region of interest can further reduce the registration error (Scherl et al., 2021; van Doormaal et al., 2019). Moreover, with technological advances, some studies have shown that results obtained with AR systems are comparable to those of standard navigation systems in different surgical specialties (Mai et al., 2023; Thabit et al., 2022; Peh et al., 2020), even with faster execution times (Agten et al., 2018). It has also been demonstrated that the use of different overlays can affect “image-to-patient” registration checks (Condino et al., 2023). Despite this, only a few studies have focused on the use of different visual aids for AR-guided surgery, and our findings underscore the substantial implications of the visual aid employed during surgical tasks.

Stradiotti, S., Emiliani, N., Marcelli, E. and Cercenelli, L.
Understanding How Different Visual Aids for Augmented Reality Influence Tool-Patient Alignment in Surgical Tasks: A Preliminary Study.
DOI: 10.5220/0012611800003660
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 616-622
ISBN: 978-989-758-679-8; ISSN: 2184-4321
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Digital information can be visualized on the real world in many ways, yet most studies focus on a single visual aid when assessing the surgical precision achieved under AR guidance. They therefore do not consider how different visual aids might affect the final surgical outcome. As a result, we lack a complete picture of how different graphical solutions impact final results and whether refining them can enhance surgical performance (Verhellen et al., 2023; Scherl et al., 2021; Jud et al., 2020).

Therefore, we asked whether the type of visual aid used in AR guidance systems makes a difference in the achieved surgical accuracy when the same image-to-patient registration method is employed. To explore this, we conducted a phantom test simulating the alignment between a surgical tool and a target position on the patient (“tool-patient alignment”), using four different graphical solutions as visual aids. We then measured, for each aid, how accurately the simulated tool was positioned and how the aid was perceived under AR guidance.

For the study, we employed the Magic Leap 2 head-mounted display (HMD) both for rendering holograms (i.e., virtual three-dimensional objects overlaid on the real scene) that served as visual aids and for accurately tracking, in space, the 6 degrees of freedom (6DoF) pose of both the target position on the patient and the surgical tool. Of the overlays tested, two displayed only the target position, without providing feedback on correct alignment. In contrast, the other two overlays provided feedback on alignment, giving visual information to distinguish a correctly aligned tool from a misaligned one. This comparison helped us assess the importance of graphical feedback in improving accuracy during AR-assisted surgical procedures.

2 MATERIALS AND METHODS

2.1 Study Design and Participants

In our comparative study, aimed at determining whether and to what extent different visual aids influence the accuracy of AR-assisted surgical tasks, we recruited 12 surgical residents from IRCCS Azienda Ospedaliero-Universitaria of Bologna. Of these, 6 were residents in maxillofacial surgery and 6 in orthopedics, aged between 25 and 38 years. To be eligible for the test, participants were required to have observed at least 50 surgical procedures, even of the same type. Each participant was instructed to place 12 toothpicks at 12 different planned positions on a 3D-printed frame filled with modeling clay, using a release tool with dimensions similar to those of a syringe. Positioning was AR-guided using four different types of visual aids displayed through the Magic Leap 2 HMD. At the end of the test, participants were asked to complete a Likert-scale questionnaire measuring their appreciation of the different graphical solutions, with scores ranging from 1 (totally disagree) to 5 (totally agree), for the following questions:

1. “I think the overlay ‘X’ speeds up the alignment surgical operations.”
2. “I think the overlay ‘X’ is clear and intuitive to use.”
3. “I think the overlay ‘X’ is not occlusive with respect to the operating field.”
4. “I think the overlay ‘X’ is well suited for High Accuracy surgery operations.”

Finally, linear and angular positioning accuracy were measured.

The simulated task can be related to lumbar facet joint injections (Agten et al., 2018). However, since it focuses on aligning a surgical instrument with a specific target position on the patient’s anatomy, it may also be relevant to surgical procedures in other specialties, such as orthopedic milling for the insertion of patient-specific prostheses (Fotouhi et al., 2018) or screw placement in the thoracic and lumbar spine (Peh et al., 2020).

2.2 Study Process

For the execution of the test, an Android application was developed for the Magic Leap 2 device (OS release 1.4.1) using the Unity development platform (version 2022.3.9f1) and the Magic Leap SDK (Software Development Kit, version 1.4.0). The purpose of the application was to sequentially display properly positioned visual-support holograms indicating the target positions where the 12 toothpicks should be inserted on the 3D-printed frame filled with modeling clay.

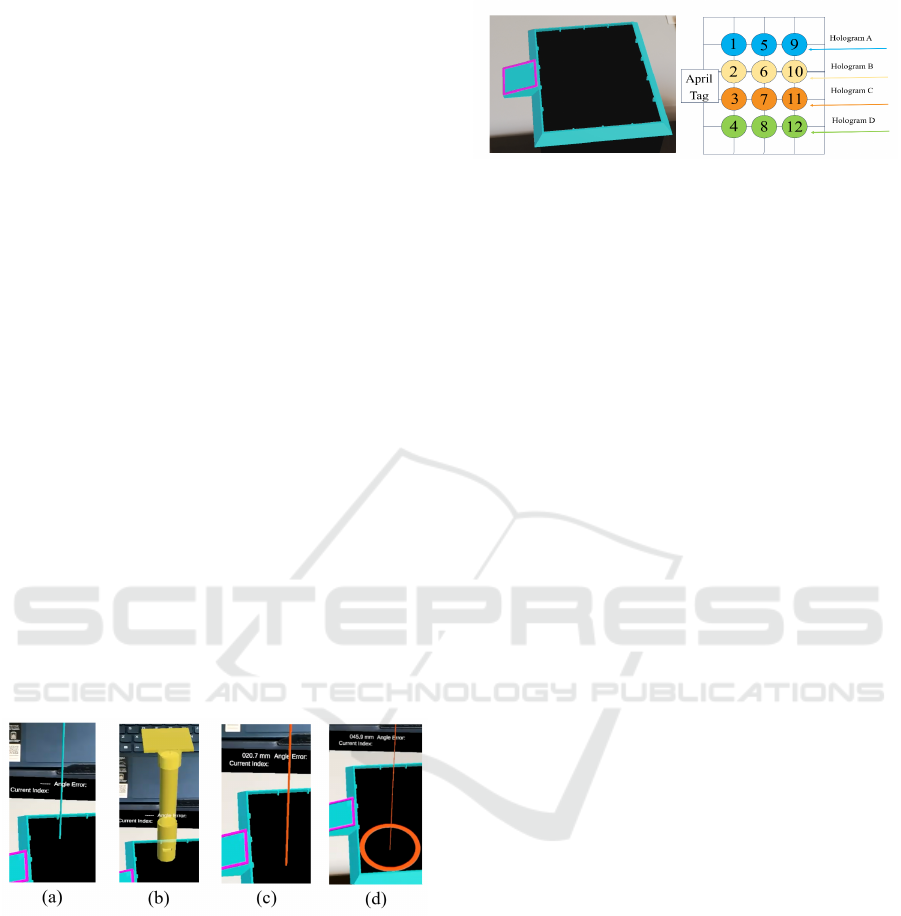

At the beginning of the test, each surgical resident was shown each of the adopted visual aids (shown in Figure 1), and the order in which they would be presented was explained (shown in Figure 2b). Specifically, the first solution (hereafter referred to as “A”) displayed a simple blue axis with a diameter of 5 mm, perfectly aligned with the target entry point and direction. The second solution (“B”) displayed a transparent phantom of the tool (with transparency adjustable according to the surgeon’s instructions) in the position where the real tool should be located to align the toothpick accurately with the target entry point and direction. The third solution (“C”) showed a virtual orange axis with a diameter of 5 mm, which turned green when the tool (tracked in space using an AprilTag marker placed above it) was aligned with the target position within a deviation of less than 3 mm and 3 degrees. This color change gives the user feedback on correct alignment. Finally, the fourth solution (“D”) used the same system as solution “C” but added a circle around the target entry point. The diameter of the circle expanded and contracted proportionally to the deviation of the tool tip from the target entry position. In this case too, the visual aid gives graphical feedback on correct alignment.
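The feedback logic of overlays “C” and “D” can be illustrated with a minimal Python sketch. The text gives only the 3 mm / 3° tolerance and states that the circle of “D” scales proportionally with the tool-tip deviation; everything else is an assumption for illustration: the linear deviation is taken as the Euclidean distance between the tracked tool tip and the planned entry point, the angular deviation as the angle between the tool and target axes, and the proportionality constant `gain` is hypothetical. This is not the authors’ Unity implementation.

```python
import math

# Tolerances stated in the text for overlays "C" and "D".
LINEAR_TOL_MM = 3.0
ANGULAR_TOL_DEG = 3.0


def _norm(v):
    return math.sqrt(sum(c * c for c in v))


def alignment_errors(tip, tool_axis, entry, target_axis):
    """Return (linear_mm, angular_deg) between the tracked tool and the planned target.

    Assumed definitions: linear error = distance from tool tip to target entry
    point; angular error = angle between tool axis and target axis.
    """
    linear = _norm([t - e for t, e in zip(tip, entry)])
    dot = sum(a * b for a, b in zip(tool_axis, target_axis))
    cos_angle = dot / (_norm(tool_axis) * _norm(target_axis))
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    angular = math.degrees(math.acos(cos_angle))
    return linear, angular


def axis_color(linear_mm, angular_deg):
    """Overlay "C": the orange axis turns green only when both errors are within tolerance."""
    if linear_mm < LINEAR_TOL_MM and angular_deg < ANGULAR_TOL_DEG:
        return "green"
    return "orange"


def circle_diameter_mm(linear_mm, gain=2.0):
    """Overlay "D": circle diameter proportional to tip deviation (gain is hypothetical)."""
    return gain * linear_mm


# Hypothetical reading: tip about 2.2 mm off the entry point, axes parallel.
lin, ang = alignment_errors((1.0, 2.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
print(axis_color(lin, ang), round(circle_diameter_mm(lin), 2))  # prints: green 4.47
```

With `gain=2.0` the circle radius equals the tip deviation, so it would collapse to a point at perfect alignment; a practical overlay would probably also need a minimum visible size.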

Figure 1: Visual aids used during the test with Magic Leap 2: Hologram “A” (a), Hologram “B” (b), Hologram “C” (c) and Hologram “D” (d).

To ensure accurate registration of the holograms in the virtual world relative to the real world, AprilTag markers (dictionary: 25h9; side length: 40 mm), securely attached to the target frame and to the toothpick release tool, were used to estimate their 6DoF poses (see Figure 3c). AprilTag markers offer a crucial advantage over QR codes: they can be detected even when smaller in size. This is a fundamental benefit in surgery, as it reduces occlusion of the operative field.
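The text does not detail how the two marker poses are combined. A common approach, shown here as a hedged Python sketch, is to express the tool marker pose in the target marker’s frame: if each tracked pose maps marker coordinates to a shared headset/world frame as x_world = R·x_marker + t, then R_rel = R_targetᵀ·R_tool and t_rel = R_targetᵀ·(t_tool - t_target). The helper names and this pose convention are assumptions for illustration, not the MLMarkerTracker API.

```python
import math


def transpose(R):
    return [[R[j][i] for j in range(3)] for i in range(3)]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(R, v):
    return tuple(sum(R[i][k] * v[k] for k in range(3)) for i in range(3))


def relative_pose(R_target, t_target, R_tool, t_tool):
    """Pose of the tool marker expressed in the target-marker frame.

    Both inputs use the (assumed) convention x_world = R @ x_marker + t.
    Because the inverse of a rotation matrix is its transpose, the result
    satisfies x_target = R_rel @ x_tool + t_rel.
    """
    Rt = transpose(R_target)
    R_rel = mat_mul(Rt, R_tool)
    t_rel = mat_vec(Rt, tuple(a - b for a, b in zip(t_tool, t_target)))
    return R_rel, t_rel


def rot_z(deg):
    """Rotation of `deg` degrees about the z axis (used only for the example)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Hypothetical poses in metres: target marker rotated 90 degrees about z,
# tool marker unrotated and 5 cm away along the world y axis.
R_rel, t_rel = relative_pose(rot_z(90), (0.10, 0.0, 0.0), rot_z(0), (0.10, 0.05, 0.0))
print([round(c, 3) for c in t_rel])  # prints [0.05, 0.0, 0.0]
```

Working in the target frame makes the alignment error independent of where the headset is, which is one reason to track both the frame and the tool rather than the tool alone.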

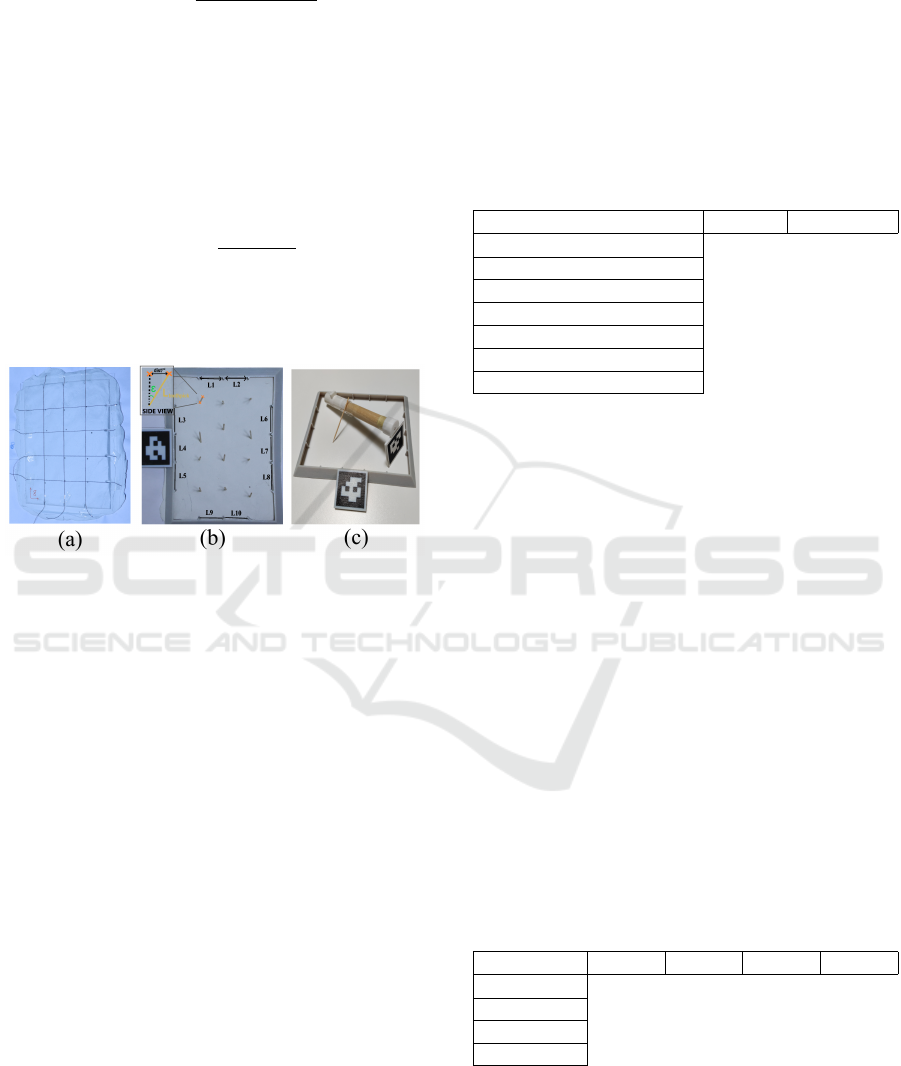


Figure 2: The target frame used during the test (a) and the order of execution of tasks under the 4 different visual aids, i.e., holograms A, B, C, D (b).

The software implementation of 6DoF pose estimation relied on the MLMarkerTracker library provided by Magic Leap for use with its AR headset. In particular, since the provided marker-tracking algorithm can be configured with several options, the marker-tracking back end was set to use the three world cameras of the Magic Leap 2 to ensure stereo vision of the markers. Compared with using only the front-facing RGB camera, this provides a much wider field of view and better accuracy. During each test, the time required to insert each toothpick was measured.

2.3 Outcome Measurements

First, tracking accuracy was visually checked by verifying the alignment between the virtual frame and the real one, which ensured the correct positioning of the holograms during testing. Execution times were then measured with a standard stopwatch. The start time was the moment the target position was displayed, while the end time was taken manually by an external operator when the toothpick had been inserted and fully released. Times were recorded in whole seconds (error < 1 s).

To measure the linear positioning error, a standard analog Vernier caliper (accuracy 0.05 mm) was used to measure the distance between the center of the hole left by the toothpick in the modeling clay and the ideal insertion position. The ideal insertion position was identifiable through grooves in the target frame (as shown in Figures 2a and 3a), which served as references for constructing the grid. This deviation was measured in millimeters (error < 1 mm).

IVAPP 2024 - 15th International Conference on Information Visualization Theory and Applications

Finally, to measure the angular positioning error, a photograph was taken with a Canon EOS 77D equipped with a standard 18-135 mm lens at the maximum possible zoom level, from approximately 1 meter away from the toothpick insertion surface. The angular error was computed with the following equation, derived from elementary geometrical principles:

$$\varepsilon = \arcsin\left(\frac{\mathrm{dist}_{px} \cdot \mathrm{px2mm}}{L_{toothpick}}\right) \qquad (1)$$

where dist_px is the norm of the difference between the photograph coordinates of the toothpick tip and of the entry point (see Figure 3b). The pixel-to-millimeter conversion factor (px2mm) is obtained by comparing the mean pixel length between each image’s reference grooves with their ideal distance, which is 30 mm. This can be written compactly as follows:

$$\mathrm{px2mm} = \frac{30\,\mathrm{mm} \cdot 10}{\sum_{i=1}^{10} L_{i}^{px}} \qquad (2)$$

The angle ε was calculated as the angle between the axis of the toothpick and the axis perpendicular to the plane of the insertion surface (error < 3°).
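Equations (1) and (2) can be checked with a short Python sketch. The groove lengths, photo coordinates and toothpick length below are hypothetical values chosen for illustration (the text does not report them), and the function names are our own. The sketch generalizes Eq. (2) from 10 groove measurements to any number, and rejects inputs where the projected offset exceeds the toothpick length, for which arcsin is undefined.

```python
import math

GROOVE_SPACING_MM = 30.0  # ideal distance between reference grooves, from the text


def px_to_mm(groove_lengths_px):
    """Eq. (2): 30 mm divided by the mean groove spacing measured in pixels."""
    return GROOVE_SPACING_MM * len(groove_lengths_px) / sum(groove_lengths_px)


def angular_error_deg(tip_px, entry_px, px2mm, toothpick_length_mm):
    """Eq. (1): arcsin of the projected tip offset (mm) over the toothpick length (mm)."""
    dist_px = math.dist(tip_px, entry_px)  # norm of the coordinate difference, in pixels
    ratio = dist_px * px2mm / toothpick_length_mm
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("projected offset longer than the toothpick; check inputs")
    return math.degrees(math.asin(ratio))


# Hypothetical inputs: ten groove spacings of about 300 px, a 50 px tip offset,
# and an assumed 60 mm toothpick length.
grooves = [298, 301, 300, 299, 302, 300, 300, 301, 299, 300]
factor = px_to_mm(grooves)
print(round(factor, 4), round(angular_error_deg((1000, 1000), (1030, 1040), factor, 60.0), 2))
# prints: 0.1 4.78
```

Because the offset enters through arcsin, a fixed pixel-picking error weighs more for short protruding lengths, which is consistent with the relatively large angular measurement error reported above.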

Figure 3: Dried clay resulting from the test, with grid lines used to assess positioning accuracy (a), mock target frame (b and c) and mock tool used for the experiments (c).

2.4 Statistical Analysis

Qualitative and quantitative data were recorded and analyzed in an Excel spreadsheet. Statistical validation was performed using the Friedman test (Friedman, 1940; Friedman, 1939; Friedman, 1937), a non-parametric test developed by Milton Friedman to detect differences among treatments (i.e., the different visual aids) across repeated measurements on the same participants. The hypothesis tested was whether there were significant differences in positioning accuracy and appreciation among the four proposed visual aids. In the calculation of the p-value (the probability of obtaining results at least as extreme as those observed, assuming that the null hypothesis of no difference is true), the first four (out of 12) inserted toothpicks were excluded, as they served as training for the surgical residents. Additionally, to ensure that each graphical solution was tested with approximately the same amount of practice, the aids were presented in the following sequence: A-B-C-D, A-B-C-D, A-B-C-D. Finally, the following measurements were validated separately: execution times, linear positioning errors, angular positioning errors, and individual questionnaire answers. A significance level of 0.05 was chosen.
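The Friedman test described above can be sketched in plain Python for readers who want to reproduce the computation outside a spreadsheet. This is a textbook version (average ranks within each participant, the usual tie correction, and the chi-square approximation with k - 1 degrees of freedom), not the authors’ Excel workflow. It assumes one value per participant and visual aid, since the text does not say how the two post-training repetitions per aid were combined; the data below are hypothetical. In practice, `scipy.stats.friedmanchisquare(*samples)` computes the same statistic with the chi-square approximation.

```python
import math


def average_ranks(values):
    """Rank the values of one participant from 1 to k, averaging ranks over ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over every value tied with the one at position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2.0 + 1.0  # mean of the 1-based positions i..j
        for m in range(i, j + 1):
            ranks[order[m]] = average
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) of a chi-square variable with integer df >= 1."""
    if df % 2 == 0:
        total, k = math.exp(-x / 2.0), 2
    else:
        total, k = math.erfc(math.sqrt(x / 2.0)), 1
    # recurrence Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
    while k < df:
        total += (x / 2.0) ** (k / 2.0) * math.exp(-x / 2.0) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return total


def friedman_test(blocks):
    """Friedman statistic and p-value.

    blocks: one row per participant, one column per treatment (visual aid).
    """
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    tie_term = 0.0
    for row in blocks:
        ranks = average_ranks(row)
        for j, r in enumerate(ranks):
            rank_sums[j] += r
        for r in set(ranks):  # tie groups within this participant
            t = ranks.count(r)
            tie_term += t ** 3 - t
    q = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    q /= 1.0 - tie_term / (n * k * (k * k - 1))  # tie correction
    return q, chi2_sf(q, k - 1)


# Hypothetical linear errors (mm): 3 participants x aids A, B, C, D.
data = [
    [5.0, 8.0, 5.5, 4.9],
    [4.2, 9.1, 6.0, 5.1],
    [6.3, 7.7, 5.2, 4.8],
]
q, p = friedman_test(data)
print(f"Q = {q:.2f}, p = {p:.4f}")
```

The chi-square approximation is only approximate for small samples such as 12 participants; exact or permutation-based p-values are an alternative when precision near the 0.05 threshold matters.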

3 RESULTS

3.1 Feasibility and Acceptability

The Friedman test was conducted for several variables to determine whether, and to what extent, each visual aid influenced final accuracy and appreciation. The results are shown in Table 1.

Table 1: Resultant p-values from the Friedman test.

| Variable | p-value | Percentage |
|---|---|---|
| Execution Times | 0.0006 | 0.06% |
| Linear Positioning Error | 0.0023 | 0.23% |
| Angular Positioning Error | 0.0615 | 6.15% |
| Question 1 | 0.0236 | 2.36% |
| Question 2 | 0.4619 | 46.19% |
| Question 3 | 0.0093 | 0.93% |
| Question 4 | 0.0019 | 0.19% |

A p-value below the predetermined significance level (0.05) provides evidence to reject the null hypothesis, indicating significant differences among the tested conditions. Here, execution times, linear positioning error, and the answers to Questions 1, 3 and 4 showed statistically significant differences among the proposed visual aids. However, the angular positioning error and the second question, regarding the clarity and intuitiveness of each hologram, did not show significant differences.

3.2 Procedural Times

The execution times show that the two solutions without graphical feedback (i.e., Holograms A and B) were faster than the other two (Holograms C and D). This is likely because the residents kept adjusting the tool position until the guiding reference axis turned green. The mean, minimum, maximum, and standard deviation values are reported in Table 2.

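Table 2: Execution time per visual aid (mean, minimum, maximum and standard deviation).

| Time (s) | Hol. A | Hol. B | Hol. C | Hol. D |
|---|---|---|---|---|
| Mean | 16.27 | 14.81 | 35.50 | 23.27 |
| Minimum | 4.00 | 5.00 | 6.00 | 6.00 |
| Maximum | 55.00 | 32.00 | 105.00 | 76.00 |
| St. Dev. | 12.84 | 8.56 | 28.62 | 17.31 |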

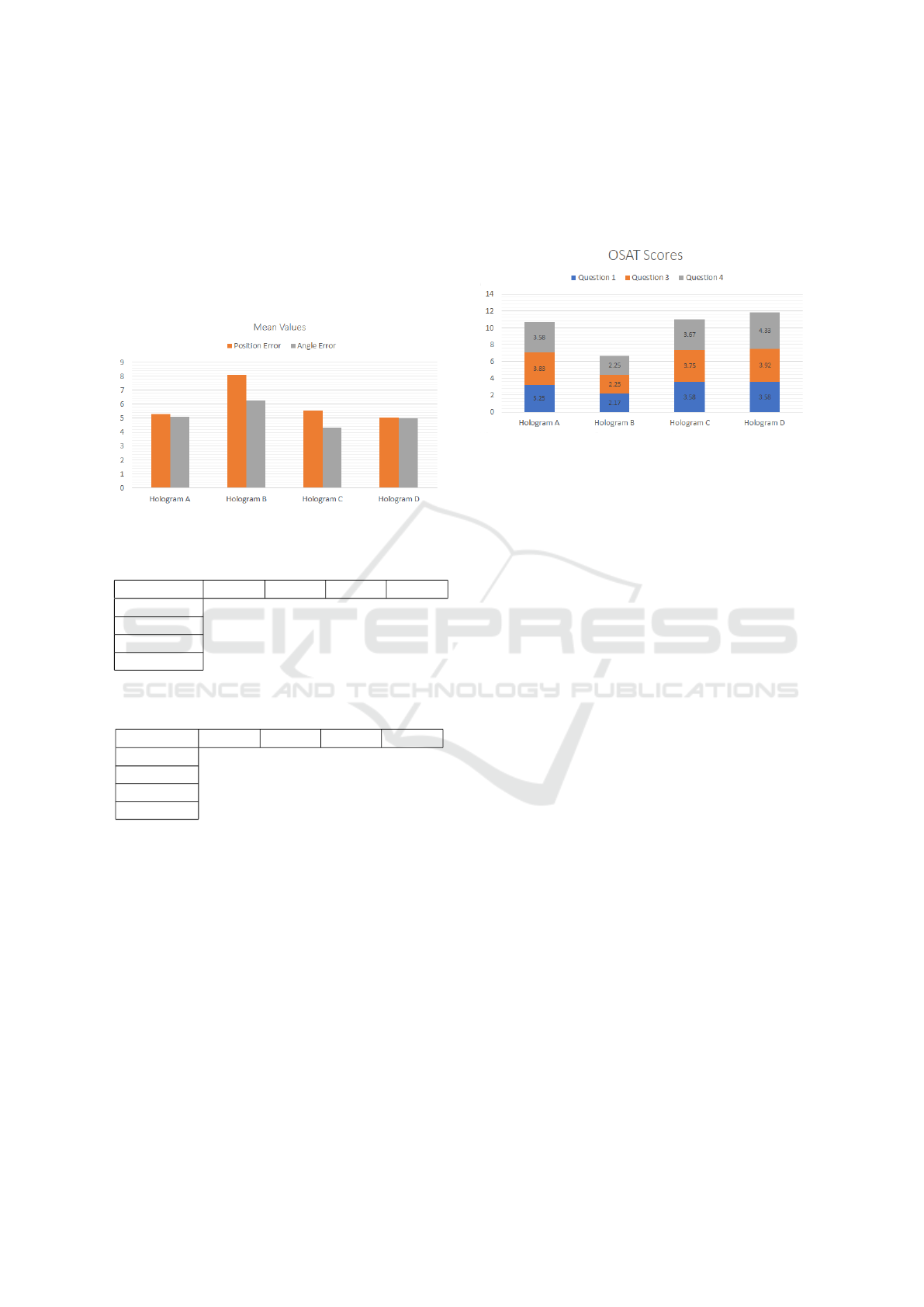

3.3 Task Accuracy

Regarding linear positioning accuracy, the second solution (“Hologram B”) clearly performed worse than all the others (as shown in Figure 4). This is consistent with its level of appreciation in the questionnaire. Solution “B”, which does not display the target axis, proved to be the least precise of the four and also the most occlusive, since it covers the entire working tool. The mean, minimum, maximum, and standard deviation values for linear and angular positioning accuracy are reported in Tables 3 and 4 and in Figure 4.

Figure 4: Mean values of linear and angular positioning errors, collected for the four visual aids.

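Table 3: Linear positioning error per visual aid (mean, minimum, maximum and standard deviation).

| Linear error (mm) | Hol. A | Hol. B | Hol. C | Hol. D |
|---|---|---|---|---|
| Mean | 5.30 | 8.10 | 5.54 | 5.02 |
| Minimum | 0.50 | 2.40 | 1.45 | 1.10 |
| Maximum | 18.60 | 25.00 | 15.55 | 8.45 |
| St. Dev. | 4.48 | 5.71 | 2.91 | 1.97 |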

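Table 4: Angular positioning error per visual aid (mean, minimum, maximum and standard deviation).

| Angular error (°) | Hol. A | Hol. B | Hol. C | Hol. D |
|---|---|---|---|---|
| Mean | 5.08 | 6.28 | 4.32 | 4.98 |
| Minimum | 0.58 | 1.32 | 0.31 | 2.16 |
| Maximum | 9.55 | 17.10 | 9.52 | 11.14 |
| St. Dev. | 2.25 | 3.67 | 2.49 | 2.77 |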

3.4 Overall Satisfaction

Overall satisfaction was measured through the four appreciation questions listed in Section 2.1, for which participants assigned a score to each proposed visual aid. The second question was excluded from the analysis because it did not show a statistically significant difference (p = 0.4619): all four solutions were found to be intuitively usable, with minimal divergence in the results. The remaining three questions, covering speed, occlusion, and perceived accuracy, were evaluated. Solution “D” emerged as the overall preferred choice, with a mean total score of 11.83 out of 15, followed closely by solution “C” with 11 out of 15, while solution “A” ranked third with 10.67 out of 15. Lastly, solution “B”, identified as the most occlusive, received a final score of 6.67 out of 15, which was deemed insufficient. Figure 5 shows these results graphically.
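The “out of 15” totals combine three 5-point items. A minimal Python sketch, assuming the total is each participant’s sum over Questions 1, 3 and 4 averaged across participants (the text does not spell out the aggregation; this equals the sum of the per-question means), with hypothetical answers:

```python
SCORED_QUESTIONS = ("Q1", "Q3", "Q4")  # Question 2 excluded, as in the text
MAX_PER_QUESTION = 5


def mean_total_score(responses, questions=SCORED_QUESTIONS):
    """Mean over participants of the summed Likert scores for the given questions."""
    totals = [sum(r[q] for q in questions) for r in responses]
    return sum(totals) / len(totals)


# Hypothetical answers of two participants for one overlay.
answers = [
    {"Q1": 4, "Q2": 5, "Q3": 4, "Q4": 4},
    {"Q1": 5, "Q2": 4, "Q3": 3, "Q4": 3},
]
score = mean_total_score(answers)
print(f"{score:.2f} out of {MAX_PER_QUESTION * len(SCORED_QUESTIONS)}")  # prints: 11.50 out of 15
```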

Figure 5: Mean appreciation values obtained from the OSAT (Overall Satisfaction) questionnaire.

4 DISCUSSION

The integration of AR technology in surgical settings has sparked significant interest because of its potential to integrate virtual models derived from medical imaging into the live surgical environment, providing a unified and enriched visual context. Our study examined a pivotal aspect of AR systems, the visual aid, and its influence on surgical precision. The investigation aimed to evaluate the impact of distinct graphical interfaces on surgical accuracy while employing the same registration technique.

The comparison among the four overlays revealed distinct performances. The statistical analysis showed significant differences among the proposed graphical solutions in execution times, linear positioning errors, and specific questionnaire responses, confirming that different graphical solutions lead to statistically different results. Although angular positioning error and the second questionnaire item did not show significant differences, our study may not have explored these two aspects exhaustively. Angular positioning was not truly differentiated, since participants were instructed to insert the toothpicks perpendicularly to the surface plane. Similarly, for the second questionnaire item, regarding the clarity and intuitiveness of the graphical support, residents had been briefed on how the four graphical solutions worked, which facilitated their understanding.

Notably, the solutions lacking explicit graphical feedback (A, B) yielded faster execution times and, in the case of A, a mean linear error comparable to that of the overlays with feedback (C, D). These results suggest that, when feedback is available, surgeons tend to keep adjusting the tool until the guidance cue is met, and that overlays without feedback may be preferable in surgeries where instrument tracking is not possible. On the other hand, the solutions providing visual cues for checking correct alignment showed enhanced precision but were associated with longer execution times.

The mean precision of the axis-based solutions (A, C and D) is similar, but the feedback-based ones achieve better standard deviations: roughly half for “C” and “D” compared with solutions “A” and “B”. Notably, the second solution (B), which lacks a visible target axis, resulted in lower precision. This is consistent with the lower appreciation reported for solution B in the questionnaire.
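The standard-deviation comparison can be made explicit from the values in Table 3. A small Python sketch using only those reported values (the variable names are ours):

```python
# Standard deviations of the linear positioning error (mm), from Table 3.
sd_mm = {"A": 4.48, "B": 5.71, "C": 2.91, "D": 1.97}

# Ratio of each feedback overlay's SD to each non-feedback overlay's SD.
ratios = {(f, w): sd_mm[f] / sd_mm[w] for f in ("C", "D") for w in ("A", "B")}
for (f, w), r in ratios.items():
    print(f"SD {f}/{w} = {r:.2f}")
```

The ratios fall between about 0.35 and 0.65, so “roughly half” is a fair summary of the overall trend rather than a constant factor.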

Moreover, this study shed light on the residents’ perception of the proposed solutions, as indicated by their questionnaire answers. This aspect, coupled with the quantitative measurements, underscores the multifaceted impact of visual aids, encompassing both objective task performance and subjective user perception. Solution “D” achieved the best overall satisfaction score. As with solution “C”, this suggests that simple additional information, such as a circle that enlarges and shrinks proportionally to the positioning error, can be very useful. Some residents also asked for arrows or similar indications showing the direction in which to move the tool to achieve correct alignment.

Finally, residents made another suggestion regarding the black bar showing quantitative linear and angular positioning errors in real time (partially visible in Figure 1). It was appreciated by all participants, but it was difficult to read because it was positioned far from the task objective. This suggests that, in future implementations, it could serve as an additional visual aid if shown nearer the working area or in conjunction with the aforementioned arrows.

Limitations

The limitations of this study primarily concern the small sample of surgical residents from specific specialties, which may limit the generalizability of the findings to other surgical domains. Additionally, the test involved a specific task (toothpick insertion), which may constrain the applicability of the results to broader surgical procedures. Last but not least, the angular measurement error is high relative to the measured values (error < 3°, against mean angular errors between 4.32° and 6.28°), which would make the angular results less meaningful even if they reached a p-value below 0.05. Future research involving a larger and more diverse cohort of surgeons across various specialties and surgical tasks could further elucidate the nuanced relationship between visual aids and surgical accuracy.

5 CONCLUSIONS

Our study highlights the pivotal role of visual aids in AR-guided surgical procedures, emphasizing the relationship between the proposed graphical solutions and task execution accuracy.

In our tests, adding graphical feedback on the alignment of the surgical instrument with the patient target reduced positioning variability (a lower standard deviation of the positioning error), which means more consistent positioning and fewer large deviations from the target.

The exploration of different graphical interfaces illuminates the need for tailored visual aids that strike an optimal balance between intuitive guidance and accurate task execution, thereby potentially enhancing surgical performance.

REFERENCES

Agten, C. A., Dennler, C., Rosskopf, A. B., Jaberg, L., Pfirrmann, C. W., and Farshad, M. (2018). Augmented reality–guided lumbar facet joint injections. Investigative Radiology, 53:495–498. This is exactly the procedure that we appear to be replicating with our STRARiS Overlay Test.

Barber, S. R., Jain, S., Son, Y., and Chang, E. H. (2018). Virtual functional endoscopic sinus surgery simulation with 3D-printed models for mixed-reality nasal endoscopy. Otolaryngology–Head and Neck Surgery, 159:933–937.

Battaglia, S., Ratti, S., Manzoli, L., Marchetti, C., Cercenelli, L., Marcelli, E., Tarsitano, A., and Ruggeri, A. (2020). Augmented reality-assisted periosteum pedicled flap harvesting for head and neck reconstruction: An anatomical and clinical viability study of a galeo-pericranial flap. Journal of Clinical Medicine, 9:2211.

Carmigniani, J. and Furht, B. (2011). Augmented reality: An overview.

Cercenelli, L., Babini, F., Badiali, G., Battaglia, S., Tarsitano, A., Marchetti, C., and Marcelli, E. (2022). Augmented reality to assist skin paddle harvesting in osteomyocutaneous fibular flap reconstructive surgery: A pilot evaluation on a 3D-printed leg phantom. Frontiers in Oncology, 11.

Cercenelli, L., Carbone, M., Condino, S., Cutolo, F., Marcelli, E., Tarsitano, A., Marchetti, C., Ferrari, V., and Badiali, G. (2020). The wearable VOSTARS system for augmented reality-guided surgery: Preclinical phantom evaluation for high-precision maxillofacial tasks. Journal of Clinical Medicine, 9:3562.

Cercenelli, L., Emiliani, N., Gulotta, C., Bevini, M., Badiali, G., and Marcelli, E. (2023). Augmented reality in orthognathic surgery: A multi-modality tracking approach to assess the temporomandibular joint motion.

Condino, S., Cutolo, F., Carbone, M., Cercenelli, L., Badiali, G., Montemurro, N., and Ferrari, V. (2023). Registration sanity check for AR-guided surgical interventions: Experience from head and face surgery. IEEE Journal of Translational Engineering in Health and Medicine, pages 1–1.

Fida, B., Cutolo, F., di Franco, G., Ferrari, M., and Ferrari, V. (2018). Augmented reality in open surgery. Updates in Surgery, 70:389–400.

Fitzpatrick, J. M. (2010). The role of registration in accurate surgical guidance. Proceedings of the Institution of Mechanical Engineers, Part H: Journal of Engineering in Medicine, 224:607–622.

Fotouhi, J., Alexander, C. P., Unberath, M., Taylor, G., Lee, S. C., Fuerst, B., Johnson, A., Osgood, G., Taylor, R. H., Khanuja, H., Armand, M., and Navab, N. (2018). Plan in 2-D, execute in 3-D: An augmented reality solution for cup placement in total hip arthroplasty. Journal of Medical Imaging (Bellingham, Wash.), 5:021205.

Fraga-Lamas, P., Fernandez-Carames, T. M., Blanco-Novoa, O., and Vilar-Montesinos, M. A. (2018). A review on industrial augmented reality systems for the Industry 4.0 shipyard. IEEE Access, 6:13358–13375.

Friedman, M. (1937). The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association, 32:675–701.

Friedman, M. (1939). A correction. Journal of the American Statistical Association, 34:109–109.

Friedman, M. (1940). A comparison of alternative tests of significance for the problem of m rankings. The Annals of Mathematical Statistics, 11:86–92.

Jiang, J., Zhang, J., Sun, J., Wu, D., and Xu, S. (2023). User’s image perception improved strategy and application of augmented reality systems in smart medical care: A review. The International Journal of Medical Robotics and Computer Assisted Surgery, 19.

Jud, L., Fotouhi, J., Andronic, O., Aichmair, A., Osgood, G., Navab, N., and Farshad, M. (2020). Applicability of augmented reality in orthopedic surgery – a systematic review. BMC Musculoskeletal Disorders, 21:103.

Li, L., Yang, J., Chu, Y., Wu, W., Xue, J., Liang, P., and Chen, L. (2016). A novel augmented reality navigation system for endoscopic sinus and skull base surgery: A feasibility study. PLOS ONE, 11:e0146996.

Mai, H.-N., Dam, V. V., and Lee, D.-H. (2023). Accuracy of augmented reality–assisted navigation in dental implant surgery: Systematic review and meta-analysis. Journal of Medical Internet Research, 25:e42040.

Meola, A., Cutolo, F., Carbone, M., Cagnazzo, F., Ferrari, M., and Ferrari, V. (2017). Augmented reality in neurosurgery: A systematic review. Neurosurgical Review, 40:537–548.

Peh, S., Chatterjea, A., Pfarr, J., Schäfer, J. P., Weuster, M., Klüter, T., Seekamp, A., and Lippross, S. (2020). Accuracy of augmented reality surgical navigation for minimally invasive pedicle screw insertion in the thoracic and lumbar spine with a new tracking device. The Spine Journal, 20:629–637.

Ruggiero, F., Cercenelli, L., Emiliani, N., Badiali, G., Bevini, M., Zucchelli, M., Marcelli, E., and Tarsitano, A. (2023). Preclinical application of augmented reality in pediatric craniofacial surgery: An accuracy study. Journal of Clinical Medicine, 12:2693.

Scherl, C., Stratemeier, J., Karle, C., Rotter, N., Hesser, J., Huber, L., Dias, A., Hoffmann, O., Riffel, P., Schoenberg, S. O., Schell, A., Lammert, A., Affolter, A., and Männle, D. (2021). Augmented reality with HoloLens in parotid surgery: How to assess and to improve accuracy. European Archives of Oto-Rhino-Laryngology, 278:2473–2483.

Schiavina, R., Bianchi, L., Chessa, F., Barbaresi, U., Cercenelli, L., Lodi, S., Gaudiano, C., Bortolani, B., Angiolini, A., Bianchi, F. M., Ercolino, A., Casablanca, C., Molinaroli, E., Porreca, A., Golfieri, R., Diciotti, S., Marcelli, E., and Brunocilla, E. (2021). Augmented reality to guide selective clamping and tumor dissection during robot-assisted partial nephrectomy: A preliminary experience. Clinical Genitourinary Cancer, 19:e149–e155.

Thabit, A., Benmahdjoub, M., van Veelen, M.-L. C., Niessen, W. J., Wolvius, E. B., and van Walsum, T. (2022). Augmented reality navigation for minimally invasive craniosynostosis surgery: A phantom study. International Journal of Computer Assisted Radiology and Surgery, 17:1453–1460.

van Doormaal, T. P. C., van Doormaal, J. A. M., and Mensink, T. (2019). Clinical accuracy of holographic navigation using point-based registration on augmented-reality glasses. Operative Neurosurgery, 17:588–593.

Verhellen, A., Elprama, S. A., Scheerlinck, T., Aerschot, F. V., Duerinck, J., Gestel, F. V., Frantz, T., Jansen, B., Vandemeulebroucke, J., and Jacobs, A. (2023). Exploring technology acceptance of head-mounted device-based augmented reality surgical navigation in orthopaedic surgery. The International Journal of Medical Robotics and Computer Assisted Surgery.
