Notes on Measures for Information Access in Neuroscience and AI Systems

Giulio Prevedello (1,2,∗,a), Luis Marcos-Vidal (3,∗,b), Emanuele Brugnoli (2,4,c) and Pietro Gravino (1,2,d)

1 Sony Computer Science Laboratories Paris, Paris, France
2 Centro Ricerche Enrico Fermi, Rome, Italy
3 Hospital del Mar Research Institute, Barcelona, Spain
4 Sony Computer Science Laboratories Rome - Joint Initiative CREF-SONY, Rome, Italy

Keywords: Awareness, Information Access, Structural Connectivity, Functional Connectivity, Operational Connectivity, Intrinsic Ignition, Conditional Mutual Information.

Abstract: With the increasing prevalence of artificial systems in society, it is imperative to ensure transparency in machine decision processes. To better elucidate their decisions, artificial systems must possess an awareness of the information they handle. This includes the understanding of information flow, integration and impact on the final outcome. A specific facet of awareness, termed access-consciousness, denotes the ability of information to be utilised in reasoning and the rational control of action (and speech). This study proposes a method for measuring access to information within a system by examining the communication dynamics among its components, specifically focusing on connectivity. To achieve this, we initially delineate the various types of connectivity in the brain and then propose their translation to artificial systems. Structural connections are highlighted as mechanisms enabling one component to access information from another. Additionally, we explore functional connectivity, which gauges the extent to which information from one component is utilised by another. Finally, operational connectivity is introduced to describe how information propagates from one component to the entire system. This framework aims to contribute to a clearer understanding of information access in both biological and artificial systems.

1 INTRODUCTION

One of the objectives of Artificial Intelligence (AI) research is to create systems that can adapt their actions to the human user. In parallel, much effort is spent on making the machine’s decision process more understandable and transparent, to instil confidence and facilitate interactions. This is reflected in the numerous works on human-centred AI, AI alignment and explainable AI in the scientific community (Koster et al., 2022). At the same time, public attention has turned to this field after the recent achievements of large language models (Min et al., 2023).

Explainability in humans is related to being “aware of something”. But what does awareness mean? It is true that both awareness and consciousness have been mongrel terms that encompass multiple phenomena (or at least multiple parts of the same phenomenon), but when referring to AI and explainability, we can narrow their definition. Following the famous distinction made by Ned Block between phenomenal-consciousness and access-consciousness (Block, 1995), in this work we will focus only on the latter. According to Block’s definition, information is access-conscious if it can be used for reasoning and is poised for rational control of action and of speech (Block, 1995). It is then related only to the content of the information and to who is able to use it (Chalmers, 1997, “easy problem”), in contrast to phenomenal consciousness, which is related to subjective experience (Chalmers, 1997, “hard problem”).

a https://orcid.org/0000-0002-9857-2351
b https://orcid.org/0000-0003-2135-1478
c https://orcid.org/0000-0002-5342-3184
d https://orcid.org/0000-0002-0937-8830
∗ Equally contributed

Access-consciousness, whether in humans or artificial systems, requires the acquisition of external information, internal processing, and the transmission of this processed information to the system’s actuators. These actuators then generate an output, such as an action or decision, which is external to the system. Therefore, it is essential to comprehend how information flows within the system’s components and how access to information occurs. This study specifically examines the information propagation in both biological and artificial systems, making comparisons between the brain and AI models.

Prevedello, G., Marcos-Vidal, L., Brugnoli, E. and Gravino, P.
Notes on Measures for Information Access in Neuroscience and AI Systems.
DOI: 10.5220/0012596000003636
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 1429-1435
ISBN: 978-989-758-680-4; ISSN: 2184-433X
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

For both domains we assume that any system is made of components that receive, process and send information to others. If information can travel from one component to another, a link is established between them. Thus, such a system can be represented as a graph, whose structural connectivity traces where information can flow between its nodes. No particular restrictions are imposed on the possible topology of connections. For example, a sender node could provide information to several others and a receiver node could get signals from many sources. In general, structures such as loops, trees and disconnected components are acceptable, and the system’s structural connectivity may change over time.
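The graph representation above can be sketched in a few lines. This is only an illustration under stated assumptions: the component names and the helper `reachable` are hypothetical, and a plain adjacency mapping stands in for whatever data structure a real system would use.

```python
from collections import deque

# Hypothetical components; each key lists the components it can send information to.
structure = {
    "sensor": ["filter", "logger"],   # one sender, several receivers
    "filter": ["decider"],
    "logger": ["decider"],            # "decider" receives from several sources
    "decider": ["filter"],            # loops are allowed
    "spare": [],                      # disconnected components are allowed
}

def reachable(graph, source):
    """Return the set of components that information from `source` can reach."""
    seen, queue = set(), deque([source])
    while queue:
        node = queue.popleft()
        for target in graph.get(node, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen
```

Here `reachable(structure, "sensor")` collects every component that could, in principle, access information originating at the sensor; it says nothing about whether that information is actually used.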

Even when a structural connection allows one component to send information to another, it is possible that no information is provided, or that the information is redundant with respect to the signals from other sources. Thus, we define the functional connectivity of the system as the ensemble of links where the flow of information is useful, in the sense that it is (functionally) used by the receiver and integrated in its output.

Of note, a flow of information presupposes a stream of data that are continuously passed between components. Yet, it is possible to restrict the notion of connectivity further, to the path of component activations triggered by a single datum as it is processed by the system. The ensemble of these paths, over several data, defines the operational connectivity.

In the following, we delve into these three concepts of connectivity (structural, functional and operational) and, based on those, we review possible measures of access to information, drawing parallels between neurological and AI systems.

2 STRUCTURAL CONNECTIVITY

In the study of the brain, structural connectivity refers to the anatomical connections between brain regions. They are composed mainly of myelinated axons, allowing for fast information transmission. Depending on the level of observation, we can study single-neuron connections (microscale), cortical column connections (mesoscale), or the fiber tracts connecting brain regions (macroscale) (Sporns et al., 2005; Sporns, 2011; Kennedy et al., 2016). We will focus on the macroscale studies as they align more closely with the artificial domain.

The set of structural connections forms the connectome, which is a mathematical model of the physical links between brain regions. The brain is thus considered a network in which each region becomes a node, and the edges are the fiber tracts that connect them. This network has a non-random architecture, with a scale-free topology (Chung et al., 2017), high modularity (Sporns and Betzel, 2016) and efficiency (Latora and Marchiori, 2001), small-worldness (Sporns and Zwi, 2004), and a rich-club organization (Van Den Heuvel and Sporns, 2011). These network properties are explained in economic terms because they offer a trade-off between metabolic cost and integration of information (Bullmore and Sporns, 2012; Bassett and Bullmore, 2017).

Structural connections only refer to the possibility of communication between brain regions but say nothing about how much they communicate. It can be said that structure enables function and determines the possible interactions occurring in a system. Thus, the presence of a structural connection enables access to the raw information provided by one processing unit.

From a computational perspective, the structural connectivity in a network system indicates which components send information to which others. This concept differs from the notion of causality as formally defined in mathematical statistics (Pearl, 2009), which targets cause-effect relations between components using counterfactual interventions. In general it is not possible to perform interventions, so causal relations are notably difficult to infer in a data-driven way and require specific techniques. One example of such techniques is the measure of causal flow, which can be constructed using information theory by modifying the mutual information statistic (Ay and Polani, 2008). Yet, a cause-effect relation might not occur between two components even if they exchange information, e.g. because such information is ignored. In AI systems, however, structural connectivity is usually defined and available by design. For example, structural links can be fixed and defined during implementation, or component interactions can be regulated only by a predefined policy. This is the biggest advantage over biological systems like the brain, where the mapping of the entire connectome is an open challenge (Sporns, 2013).

AWAI 2024 - Special Session on AI with Awareness Inside


3 FUNCTIONAL CONNECTIVITY

If structural connectivity refers to the physical connections between different nodes, functional connectivity reflects the statistical dependence between their outputs. In the brain, it reflects the level of synchronization across remote regions and is related to their co-activation during specific behavior (Biswal et al., 1995; Friston, 2011). Functional connectivity describes how much information is shared by two regions (integration) and, together with the directionality of the information flow, the level of factual access (in contrast to the possible access, which is enabled by structural connectivity).

Mirroring the models of structural connectivity, a connectome can be created using functional connectivity to study the functional network’s topology. The functional network also exhibits a complex architecture characterised mainly by a power-law distribution of connections, high efficiency, a high clustering coefficient and, thus, small-worldness (White et al., 1986; Watts and Strogatz, 1998; Salvador et al., 2005; Valencia et al., 2008). This architecture enables the segregation of structures, which become specialized for specific information processing, while ensuring their integration in the whole system. The brain can then be divided into functional networks, which are defined as groups of highly connected regions that are related to specific cognitive processes (Yeo et al., 2011; Glasser et al., 2016).

Interestingly, there are differences between the structural and functional networks, highlighting the difference between possible access and factual access. Multiple brain models have attempted to study the relation between the two, but the most successful ones are functional connectivity models that are constrained by anatomical connections, such as the Hopf brain model (Deco et al., 2021). The neuroimaging results reveal that a functional organization of statistical relationships can arise from the structural connections within the system. Importantly, this functional organization serves as a more effective indicator of how extensively each component accesses information from other components.

In AI systems, functional connections are traced by the signals, flowing from the sender to the receiving components, whose information is actually integrated by the receivers. The extent of “useful” information can be measured by comparing the distributions of the components’ inputs and outputs using information-theoretic statistics such as the Conditional Mutual Information, abbreviated as CMI (Wyner, 1978). This statistic makes it possible to discount the information that the receiver component (X) gets from the sender (Y) but that is redundant with the signal already coming from another source (Z) (Ay and Polani, 2008). Formally, CMI is defined as

$$I(X;Y \mid Z) = H(X,Z) + H(Y,Z) - H(Z) - H(X,Y,Z) \tag{1}$$

where $H(X)$ is the entropy (usually Shannon’s) of the random variable $X$ (Shannon, 1948).
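As a quick check, Equation (1) can be recovered by writing the CMI as a difference of conditional entropies and expanding each term with the chain rule $H(A \mid B) = H(A,B) - H(B)$:

```latex
\begin{aligned}
I(X;Y \mid Z) &= H(X \mid Z) - H(X \mid Y,Z) \\
              &= \bigl[H(X,Z) - H(Z)\bigr] - \bigl[H(X,Y,Z) - H(Y,Z)\bigr] \\
              &= H(X,Z) + H(Y,Z) - H(Z) - H(X,Y,Z).
\end{aligned}
```

The first line makes the interpretation explicit: the CMI is the reduction in uncertainty about $X$ obtained from $Y$ once $Z$ is already known. The expression is symmetric in $X$ and $Y$.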

As the entropy is well defined for any distribution, this measure is applicable without imposing particular restrictions on the variables X, Y, Z, and therefore on the system design. In general, CMI can be formulated for variables that are discrete (ordinal or categorical) or continuous, as long as their density can be suitably estimated. It is applicable to time series (see Transfer Entropy; Schreiber, 2000) and to multivariate variables, although the estimation of their density becomes harder as the dimensions increase, due to the curse of dimensionality (Runge et al., 2012).
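For discrete variables, the CMI of Equation (1) can be estimated directly from empirical frequencies. The following is a minimal sketch, assuming a plug-in estimator on toy data; the function names `entropy` and `cmi` and the example variables are illustrative and do not come from the paper.

```python
from collections import Counter
from math import log2

def entropy(samples):
    """Shannon entropy (bits) of the empirical distribution of `samples`."""
    n = len(samples)
    return -sum((c / n) * log2(c / n) for c in Counter(samples).values())

def cmi(x, y, z):
    """Plug-in estimate of I(X;Y|Z) = H(X,Z) + H(Y,Z) - H(Z) - H(X,Y,Z)."""
    return (entropy(list(zip(x, z))) + entropy(list(zip(y, z)))
            - entropy(list(z)) - entropy(list(zip(x, y, z))))

# Toy data: z and y are independent fair bits, covering all four combinations.
z = [0, 0, 1, 1]
y = [0, 1, 0, 1]
copy_of_z = list(z)                      # a receiver that only repeats z
xor_yz = [a ^ b for a, b in zip(y, z)]   # a receiver that needs y once z is known
```

With these toy variables, `cmi(copy_of_z, y, z)` is zero because the receiver integrates nothing from `y`, whereas `cmi(xor_yz, y, z)` is one bit because, given `z`, the receiver is fully determined by `y`.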

3.1 Simulations

To better understand what functional connectivity is, in this section we simulate an AI system where the structural connectivity is fixed and the strength of functional connectivity (the CMI) can be controlled. The simulation was developed to show the benefits of AI systems that are aware of moral values (Steels, 2024; Montes et al., 2022; Abbo and Belpaeme, 2023; Roselli et al., 2023), as part of a research project on information dynamics in social media (Gravino et al., 2022) and moral values (Brugnoli et al., 2024; Marcos-Vidal et al., 2024). In this work, we consider a simple recommender algorithm (Gravino et al., 2019; Marzo et al., 2023) that evaluates whether a set of items (e.g., posts from Twitter/X) fits given users.

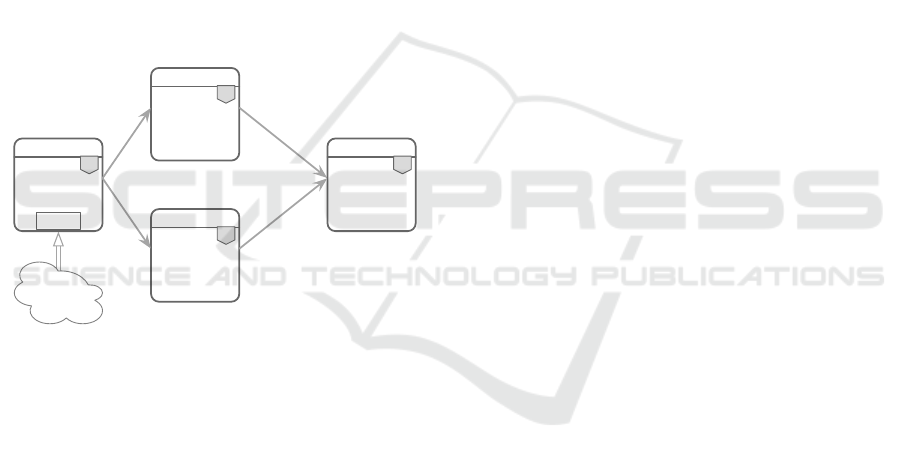

This system consists of four components: a text preprocessing component, a topic detection model (Lavrenko et al., 2002), a moral-topic similarity scorer, and a detector of value dyads from the Moral Foundations Theory (Graham et al., 2013): Authority/Subversion, Care/Harm, Fairness/Cheating, Loyalty/Betrayal, Purity/Degradation and No values. Upon receiving a tweet, the system preprocesses it and passes it to the topic detection module, which returns a vector $i_t \in \mathbb{R}^p$ such that $i_{tj}$ is the probability that the tweet is about topic $j$, with $\sum_j i_{tj} = 1$. The preprocessed text is also passed to the value detector, which returns a vector $i_m \in \mathbb{R}^q$ such that $i_{mj}$ is the probability that the tweet mainly expresses the moral dyad $j$, with $\sum_j i_{mj} = 1$. Then $i_t$ and $i_m$ are passed to the similarity scorer, which calculates a score $r \in [0,1]$ following the formula

$$\lambda\, S_C(D(i_m, h_m), u_m) + (1 - \lambda)\, S_C(D(i_t, h_t), u_t), \tag{2}$$


where:

• $S_C$ is the cosine similarity, which measures the affinity between the user, described by the moral and topic preference vectors $u_m$ and $u_t$, and the item;
• $D(v,h)$, with $v \in \mathbb{R}^k$ for any $k > 0$, is a sample from a Dirichlet distribution with parameters $\alpha_i = 1 + v_i k/(h + 10^{-10})$ for $i = 1, \ldots, k$, which introduces noise into the input components with a magnitude modulated by $h \ge 0$;
• the values $h_t \ge 0$ and $h_m \ge 0$ modulate the magnitude of the noise that artificially perturbs the input variables $i_t$ and $i_m$, respectively;
• $\lambda \in [0,1]$ interpolates between topic (small $\lambda$) and moral (large $\lambda$) similarities.
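The scoring rule of Equation (2) and the Dirichlet perturbation can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors’ implementation: the function names are hypothetical, and the Dirichlet sample is obtained by normalising independent Gamma draws, which is a standard construction.

```python
import math
import random

def cosine_similarity(a, b):
    """Cosine similarity S_C between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def dirichlet_noise(v, h, rng):
    """Sample D(v, h): Dirichlet with alpha_i = 1 + v_i * k / (h + 1e-10)."""
    k = len(v)
    alphas = [1.0 + v_i * k / (h + 1e-10) for v_i in v]
    # A Dirichlet sample is a vector of independent Gamma draws, normalised.
    gammas = [rng.gammavariate(alpha, 1.0) for alpha in alphas]
    total = sum(gammas)
    return [g / total for g in gammas]

def score(i_m, i_t, u_m, u_t, lam, h_m, h_t, rng):
    """Equation (2): convex combination of moral and topic similarities."""
    moral = cosine_similarity(dirichlet_noise(i_m, h_m, rng), u_m)
    topic = cosine_similarity(dirichlet_noise(i_t, h_t, rng), u_t)
    return lam * moral + (1.0 - lam) * topic
```

With `h = 0` the concentration parameters become very large, so the sample stays close to the input vector; as `h` grows, the parameters approach 1 and the sample approaches a uniform draw from the simplex that ignores the input.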

In summary, a tweet enters the system, which outputs a recommendation score based on the user-post similarities of the moral and topic vectors. The parameters $\lambda$, $u_m$, $u_t$, $h_m$, $h_t$ must be initialised upon deployment. The architecture is sketched in Figure 1.

[Diagram; recoverable labels: Percepts (Twitter post), Text preprocessing, Topic Detection Model, MFT Values Detection, Moral-topic Similarity, with layer tags C0, C1 and C2.]

Figure 1: The architecture for the analysis of the CMI.

In this architecture, the CMI lets us quantify how much information the similarity scorer integrates from the value detector, discounting the information already provided by the topic detector. Thus, the CMI is calculated from the outputs of these three components. For notation, from the $j$-th tweet ($1 \le j \le J$) the three components respectively produce the observations $i_t^j$, $i_m^j$ and $r^j$ of the random variables $Z$, $X$, $Y$ (the CMI is symmetric in $X$ and $Y$, so it is immaterial which of the two labels the sender). Since $J$ can be very large (171,067 tweets in the present analysis), we set a batch size of 1000 for the number of observations on which the CMI is calculated, for a total of $B = 171$ batches. So, for each batch $b$, to obtain the CMI it suffices to calculate the entropies in (1) from the variables’ densities, which are estimated via Kernel Density Estimation (KDE) with a Gaussian kernel and bandwidth from Scott’s method (Scott, 2015). Of note, if the distributions were discrete, one could replace the estimate of the density function with the empirical probability mass function.
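One way to turn KDE densities into the entropies of Equation (1) is sketched below. Only the Gaussian kernel and Scott’s bandwidth rule are taken from the text; the product kernel with one bandwidth per dimension, the resubstitution estimator (averaging the log-density over the sample itself) and all function names are assumptions made for illustration.

```python
import math
import random
import statistics

def scott_bandwidths(data):
    """Per-dimension bandwidths from Scott's rule: sigma_j * n ** (-1 / (d + 4))."""
    n, d = len(data), len(data[0])
    factor = n ** (-1.0 / (d + 4))
    return [statistics.stdev([row[j] for row in data]) * factor for j in range(d)]

def kde_entropy(data):
    """Resubstitution entropy estimate (nats): -mean(log p_hat(x_i))."""
    n, d = len(data), len(data[0])
    bw = scott_bandwidths(data)
    # Log of the Gaussian product-kernel normalising constant.
    log_norm = -sum(math.log(b) for b in bw) - 0.5 * d * math.log(2 * math.pi)
    total = 0.0
    for x in data:
        density = 0.0
        for y in data:
            sq = sum(((x[j] - y[j]) / bw[j]) ** 2 for j in range(d))
            density += math.exp(-0.5 * sq)
        total += math.log(density / n) + log_norm
    return -total / n

def kde_cmi(x, y, z):
    """I(X;Y|Z) via Equation (1); x, y, z are equal-length lists of tuples."""
    def join(*parts):
        # Concatenate the tuples observed for the same sample index.
        return [sum(rows, ()) for rows in zip(*parts)]
    return (kde_entropy(join(x, z)) + kde_entropy(join(y, z))
            - kde_entropy(z) - kde_entropy(join(x, y, z)))
```

The double loop costs a number of kernel evaluations quadratic in the batch size, which is one practical reason to compute the statistic on batches rather than on the full set of observations.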

To understand what the CMI is sensitive to, we set the parameters $h_t = 0$ and $u_t = (1,2,2,2,1,1,2,2,1,1,2,1,1,2,1,1,2,2,1,1,2,2,2,2,2,1)/40$, obtained by assigning weight 1 or 2 uniformly at random to every topic and then renormalising. The empirical distributions of the CMI are thus generated on $B$ batches from the architecture simulations with:

• $\lambda \in \{0, 0.1, 0.2, 0.5, 0.7, 1\}$, to weight the input from the moral component;
• $h_m \in \{0, 0.01, 1, 10, 10^{10}\}$, to perturb the input from the moral component;
• $u_m \in \{(1,1,1,1,1,1)/6, (1,2,2,1,1,2)/9, (1,0,0,0,0,0)\}$, to simulate a user with uniform, balanced and extreme moral preferences.
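The parameter grid above can be enumerated as follows; this is a small sketch in which the variable names and the dictionary layout are illustrative choices, while the values are those listed in the text.

```python
from itertools import product

lambdas = [0, 0.1, 0.2, 0.5, 0.7, 1]
noise_levels = [0, 0.01, 1, 10, 1e10]            # values of h_m
user_profiles = {                                 # values of u_m
    "uniform": [1 / 6] * 6,
    "balanced": [x / 9 for x in (1, 2, 2, 1, 1, 2)],
    "extreme": [1, 0, 0, 0, 0, 0],
}

# One simulated architecture per combination of the three parameters.
configurations = [
    {"lam": lam, "h_m": h_m, "profile": name, "u_m": u_m}
    for lam, h_m, (name, u_m) in product(lambdas, noise_levels, user_profiles.items())
]
```

The grid contains 6 × 5 × 3 = 90 configurations, each of which yields one empirical distribution of the CMI over the batches.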

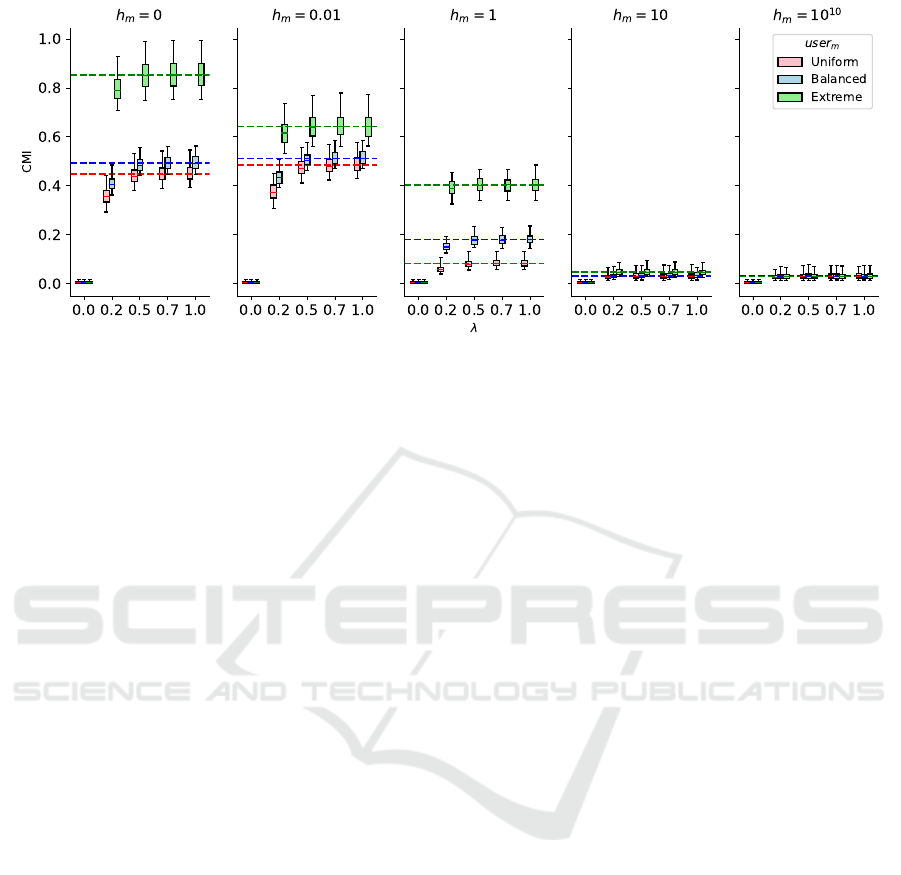

The analysis of Figure 2 shows that the CMI between the value detector and the similarity scorer, conditioned on the topic detector, decreases as $\lambda$ decreases and becomes null when $\lambda = 0$. This transition reflects the reduction of new information, from the moral component, integrated in the moral-topic similarity. The plot also shows that the information transfer gets corrupted as the output from the moral component becomes noisier with increasing $h_m$. These expected trends hold true irrespective of the user’s moral preferences $u_m$, with the case $\lambda = 1$ dominating the others for every fixed noise level. Interestingly, when the noise is low, the CMI distribution differs markedly between users. The CMI increases from uniform to balanced and then to extreme profiles, suggesting that the similarity scorer adapts its behaviour to the user’s profile. This evidence could be the result of the distribution of $Y$ becoming less variable and, therefore, less entropic as the user’s moral profile becomes more extreme.

In practice, with this measure, we can rank different systems to select the architecture specifications with stronger functional connectivity for selected components. Another application of the measure is to support design decisions in the presence of trade-offs between performance and functional connectivity. For example, the similarity scorer always provides the highest integration of the moral detection component when $\lambda = 1$, at the cost of totally ignoring the input from the topic detection model, which is quite unreasonable. However, comparing the CMI across different values of $\lambda$, it becomes apparent that $\lambda = 0.7$ or $\lambda = 0.5$ do not considerably degrade the amount of integrated information, indicating these as acceptable design choices.


Figure 2: Comparison of the integration measure from the simulations of different architectures. Each boxplot summarises the distribution of the CMI between the value detector and the similarity scorer, conditioned on the topic detector, from 171 batches of observations, with boxes covering the interquartile range and containing a full line for the median, and whiskers at the 5th and 95th percentiles. Boxes are grouped in triplets, colour-coded by the choice of user profile $u_m$ as in the legend, and horizontally located depending on the parameters $\lambda$ (within panels) and $h_m$ (between panels) chosen for their simulation. Dashed horizontal lines are placed at the level of the medians of each user’s boxplot with $\lambda = 1$ and coloured as the related box.

4 OPERATIONAL CONNECTIVITY

As a system processes an input, a series of components gets activated, generating a path in the network. The ensemble of these paths is the target of the operational connectivity analysis, an approach commonly employed to study the brain (Casali et al., 2013). The brain’s activity can be sampled using techniques such as functional magnetic resonance imaging or electroencephalography, and data from these trials are often used to infer the structural connectivity and the functional connectivity of the brain (Bullmore and Sporns, 2009).

By investigating the chain reactions of neuronal activity, also called neuronal avalanches, a study found that their size and lifetime follow a power-law distribution, whose scale-free property is a trait of scalable, self-organising systems (Beggs and Plenz, 2003). The same authors proposed that the power-law structure emerges from the avalanches behaving like a branching process (Harris et al., 1963). This is a probabilistic process that models reproduction; here, for example, the activity of a neuron triggers a stochastic number of neighbouring neurons. In particular, the branching coefficient that best fitted the observed dynamics showed that the process is at a critical state, meaning that the amount of information transmitted by an avalanche is kept high while preventing the catastrophic activation of the entire system (which occurs in the supercritical state).
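The branching-process picture can be illustrated with a small simulation. This is a sketch under simplifying assumptions that are not taken from Beggs and Plenz (2003): each active unit has a fixed number of neighbours (`fanout`), triggers each of them independently, and avalanches are truncated at `max_size`; the function names are hypothetical.

```python
import random

def avalanche_size(sigma, rng, fanout=2, max_size=10_000):
    """Size of one avalanche in a branching process with branching ratio `sigma`.

    Each active unit independently triggers each of its `fanout` neighbours
    with probability sigma / fanout, so it activates `sigma` units on average.
    """
    p = sigma / fanout
    active, size = 1, 1
    while active and size < max_size:
        triggered = sum(1 for _ in range(active * fanout) if rng.random() < p)
        active = triggered
        size += triggered
    return size

def mean_size(sigma, trials=2000, seed=0):
    """Average avalanche size over independent trials."""
    rng = random.Random(seed)
    return sum(avalanche_size(sigma, rng) for _ in range(trials)) / trials
```

For a branching ratio below 1 (subcritical) avalanches die out quickly, with an expected size of 1/(1 − sigma); at a ratio of 1 (critical) sizes become heavy-tailed; above 1 (supercritical) an avalanche can grow until it hits the truncation limit, the analogue of the whole system being activated.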

High scalability and stable information transmission are desirable properties for AI systems that evolve over time. The analysis of operational connectivity, as in Beggs and Plenz (2003), provides ways to assess where and how single datapoints percolate through the system. In particular, the coefficients from branching process theory and power-law distributions enable us to measure how scalable the data transmission process is. However, this analysis is only sensible for those AI systems whose components may turn on and off over time with no predictable or preprogrammed schedule. As a trivial counterexample, a convolutional feed-forward deep neural network activates all its layers of components irrespective of the input.

As different inputs can be processed similarly by the system, their sequences of activation might share common patterns. Paths that depart from these patterns can then be informative. For example, an input generating a long path that touches several regions of the system is indicative of an expensive process, which stands out if the observed path is particularly unusual compared with the previous ones. Also, a path that gets stuck in an infinite loop, bouncing between the same set of components, might flag an internal clash that calls for a mechanism to resolve the conflict.
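Both signals mentioned above, unusual paths and revisited components, can be read off recorded activation paths. The following sketch assumes that each path is stored as a list of component names; the helpers `first_cycle` and `rarity` are hypothetical, and a revisit only hints at a possible loop rather than proving that one is infinite.

```python
from collections import Counter

def first_cycle(path):
    """Return the segment between the first revisited component and its
    previous occurrence, or None if no component is visited twice."""
    last_seen = {}
    for idx, component in enumerate(path):
        if component in last_seen:
            return path[last_seen[component]:idx]
        last_seen[component] = idx
    return None

def rarity(path, history):
    """Fraction of previously observed paths that differ from `path`."""
    counts = Counter(tuple(p) for p in history)
    return 1.0 - counts[tuple(path)] / len(history)

# Hypothetical record of activation paths from earlier inputs.
history = [["A", "B"], ["A", "B"], ["A", "C", "B"], ["A", "B"]]
```

A path with a rarity close to 1 has seldom or never been seen before, which, combined with its length, could be used to flag inputs that are salient for the system.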

We contend that investigating the operational connectivity by measuring avalanches and patterns of activity can contribute to understanding both the system and the salience of its inputs.


5 CONCLUSIONS

We have analysed access to information, a hallmark of awareness, from both neurological and computational perspectives. For systems whose components form a network structure, three distinct types of connectivity were identified: structural, defining where information can flow; functional, defining where novel information flows; and operational, traced by the series of component activations triggered by a single input. Finally, we reviewed some measures of access to information defined from the information flow on the different types of connectivity. In particular, we focused on the quantification of functional connectivity via Conditional Mutual Information, and showed with simulations the utility of this metric for evaluating access to information in AI systems.

By focusing on information access, we did not examine other aspects of awareness. When information is internalised, for example, the content of information is also elaborated, integrating the processes of several components. Providing rigorous quantification for all these aspects might benefit the development of aware AI systems. Meanwhile, the synthesis of neurological and computational perspectives will contribute to a broader understanding of awareness in both biological and artificial systems.

ACKNOWLEDGEMENTS

This work has been supported by the Horizon Europe VALAWAI project (grant agreement number 101070930). We thank Prof. Luc Steels for the fruitful discussions that were essential to conceiving the manuscript.

REFERENCES

Abbo, G. A. and Belpaeme, T. (2023). Users’ perspectives on value awareness in social robots. In HRI2023, the 18th ACM/IEEE International Conference on Human-Robot Interaction, pages 1–5.

Ay, N. and Polani, D. (2008). Information flows in causal networks. Advances in complex systems, 11(01):17–41.

Bassett, D. S. and Bullmore, E. T. (2017). Small-world brain networks revisited. The Neuroscientist, 23(5):499–516.

Beggs, J. M. and Plenz, D. (2003). Neuronal avalanches in neocortical circuits. Journal of neuroscience, 23(35):11167–11177.

Biswal, B., Zerrin Yetkin, F., Haughton, V. M., and Hyde, J. S. (1995). Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magnetic resonance in medicine, 34(4):537–541.

Block, N. (1995). On a confusion about a function of consciousness. Behavioral and brain sciences, 18(2):227–247.

Brugnoli, E., Gravino, P., and Prevedello, G. (to appear in 2024). Moral values in social media for disinformation and hate speech analysis. In VALE workshop Proceedings, LNAI.

Bullmore, E. and Sporns, O. (2009). Complex brain networks: graph theoretical analysis of structural and functional systems. Nature reviews neuroscience, 10(3):186–198.

Bullmore, E. and Sporns, O. (2012). The economy of brain network organization. Nature reviews neuroscience, 13(5):336–349.

Casali, A. G., Gosseries, O., Rosanova, M., Boly, M., Sarasso, S., Casali, K. R., Casarotto, S., Bruno, M.-A., Laureys, S., Tononi, G., et al. (2013). A theoretically based index of consciousness independent of sensory processing and behavior. Science translational medicine, 5(198):198ra105–198ra105.

Chalmers, D. J. (1997). The conscious mind: In search of a fundamental theory. Oxford Paperbacks.

Chung, M. K., Hanson, J. L., Adluru, N., Alexander, A. L., Davidson, R. J., and Pollak, S. D. (2017). Integrative structural brain network analysis in diffusion tensor imaging. Brain Connectivity, 7(6):331–346.

Deco, G., Vidaurre, D., and Kringelbach, M. L. (2021). Revisiting the global workspace orchestrating the hierarchical organization of the human brain. Nature human behaviour, 5(4):497–511.

Friston, K. J. (2011). Functional and effective connectivity: a review. Brain connectivity, 1(1):13–36.

Glasser, M. F., Coalson, T. S., Robinson, E. C., Hacker, C. D., Harwell, J., Yacoub, E., Ugurbil, K., Andersson, J., Beckmann, C. F., Jenkinson, M., et al. (2016). A multi-modal parcellation of human cerebral cortex. Nature, 536(7615):171–178.

Graham, J., Haidt, J., Koleva, S., Motyl, M., Iyer, R., Wojcik, S. P., and Ditto, P. H. (2013). Moral foundations theory: The pragmatic validity of moral pluralism. In Advances in experimental social psychology, volume 47, pages 55–130. Elsevier.

Gravino, P., Monechi, B., and Loreto, V. (2019). Towards novelty-driven recommender systems. Comptes Rendus Physique, 20(4):371–379.

Gravino, P., Prevedello, G., Galletti, M., and Loreto, V. (2022). The supply and demand of news during COVID-19 and assessment of questionable sources production. Nature human behaviour, 6(8):1069–1078.

Harris, T. E. et al. (1963). The theory of branching processes, volume 6. Springer Berlin.

Kennedy, H., Van Essen, D. C., and Christen, Y. (2016). Micro-, meso- and macro-connectomics of the brain. Springer Nature.

Koster, R., Balaguer, J., Tacchetti, A., Weinstein, A., Zhu, T., Hauser, O., Williams, D., Campbell-Gillingham, L., Thacker, P., Botvinick, M., et al. (2022). Human-centred mechanism design with democratic AI. Nature Human Behaviour, 6(10):1398–1407.

Latora, V. and Marchiori, M. (2001). Efficient behavior of small-world networks. Physical review letters, 87(19):198701.

Lavrenko, V., Allan, J., DeGuzman, E., LaFlamme, D., Pollard, V., and Thomas, S. (2002). Relevance models for topic detection and tracking. In Proceedings of the human language technology conference (HLT), pages 104–110.

Marcos-Vidal, L., Marchesi, S., Wykowska, A., and Pretus, C. (to appear in 2024). Moral agents as relational systems: The contract-based model of moral cognition for AI. In VALE workshop Proceedings, LNAI.

Marzo, G. D., Gravino, P., and Loreto, V. (2023). Recommender systems may enhance the discovery of novelties.

Min, B., Ross, H., Sulem, E., Veyseh, A. P. B., Nguyen, T. H., Sainz, O., Agirre, E., Heintz, I., and Roth, D. (2023). Recent advances in natural language processing via large pre-trained language models: A survey. ACM Computing Surveys, 56(2):1–40.

Montes, N., Osman, N., and Sierra, C. (2022). A computational model of Ostrom’s institutional analysis and development framework. Artificial Intelligence, 311:103756.

Pearl, J. (2009). Causal inference in statistics: An overview. Statistics Surveys, 3:96–146.

Roselli, C., Marchesi, S., De Tommaso, D., and Wykowska, A. (2023). The role of prior exposure in the likelihood of adopting the intentional stance toward a humanoid robot. Paladyn, Journal of Behavioral Robotics, 14(1):20220103.

Runge, J., Heitzig, J., Petoukhov, V., and Kurths, J. (2012). Escaping the curse of dimensionality in estimating multivariate transfer entropy. Physical review letters, 108(25):258701.

Salvador, R., Suckling, J., Coleman, M. R., Pickard, J. D., Menon, D., and Bullmore, E. (2005). Neurophysiological architecture of functional magnetic resonance images of human brain. Cerebral cortex, 15(9):1332–1342.

Schreiber, T. (2000). Measuring information transfer. Physical review letters, 85(2):461.

Scott, D. W. (2015). Multivariate density estimation: theory, practice, and visualization. John Wiley & Sons.

Shannon, C. E. (1948). A mathematical theory of communication. The Bell system technical journal, 27(3):379–423.

Sporns, O. (2011). The human connectome: a complex network. Annals of the New York Academy of Sciences, 1224(1):109–125.

Sporns, O. (2013). The human connectome: origins and challenges. Neuroimage, 80:53–61.

Sporns, O. and Betzel, R. F. (2016). Modular brain networks. Annual review of psychology, 67:613–640.

Sporns, O., Tononi, G., and Kötter, R. (2005). The human connectome: a structural description of the human brain. PLoS computational biology, 1(4):e42.

Sporns, O. and Zwi, J. D. (2004). The small world of the cerebral cortex. Neuroinformatics, 2:145–162.

Steels, L. (to appear in 2024). Values, norms and AI. Introduction to the VALE workshop. In VALE workshop Proceedings, LNAI.

Valencia, M., Martinerie, J., Dupont, S., and Chavez, M. (2008). Dynamic small-world behavior in functional brain networks unveiled by an event-related networks approach. Physical Review E, 77(5):050905.

Van Den Heuvel, M. P. and Sporns, O. (2011). Rich-club organization of the human connectome. Journal of Neuroscience, 31(44):15775–15786.

Watts, D. J. and Strogatz, S. H. (1998). Collective dynamics of ‘small-world’ networks. Nature, 393(6684):440–442.

White, J. G., Southgate, E., Thomson, J. N., Brenner, S., et al. (1986). The structure of the nervous system of the nematode Caenorhabditis elegans. Philos Trans R Soc Lond B Biol Sci, 314(1165):1–340.

Wyner, A. D. (1978). A definition of conditional mutual information for arbitrary ensembles. Information and Control, 38(1):51–59.

Yeo, B. T., Krienen, F. M., Sepulcre, J., Sabuncu, M. R., Lashkari, D., Hollinshead, M., Roffman, J. L., Smoller, J. W., Zöllei, L., Polimeni, J. R., et al. (2011). The organization of the human cerebral cortex estimated by intrinsic functional connectivity. Journal of neurophysiology.
