Towards a Definition of Awareness for Embodied AI

Giulio Antonio Abbo [1, a], Serena Marchesi [2, b], Kinga Ciupinska [2, c], Agnieszka Wykowska [2, d] and Tony Belpaeme [1, e]

[1] IDLab-AIRO, Ghent University, imec, Belgium

[2] Social Cognition in Human-Robot Interaction (S4HRI), Italian Institute of Technology, Genova, Italy

Keywords: Awareness, Artificial Intelligence, Embodied AI.

Abstract:

This paper explores the concept of awareness in the context of embodied artificial intelligence (AI), aiming to

provide a practical definition and understanding of this multifaceted term. Acknowledging the diverse inter-

pretations of awareness in various disciplines, the paper focuses specifically on the application of awareness

in embodied AI systems. We introduce six foundational elements as essential building blocks for an aware

embodied AI. These elements include access to information, information integration, attention, coherence, ex-

plainability, and action. The interconnected and interdependent nature of these building blocks is emphasised,

forming a minimal base for constructing AI systems with heightened awareness. The paper aims to spark a

dialogue within the research community, inviting diverse perspectives to contribute to the evolving discipline

of awareness in embodied AI. The proposed insights provide a starting point for further empirical studies and

validations in real-world AI applications.

1 INTRODUCTION

The concept of awareness does not allow itself to

be pinned down easily. Indeed, the term is found across virtually every discipline, often with different meanings. Often it is used to describe voluntarily

directing one’s attention towards a certain aspect. In

other cases, it has a specific and circumscribed mean-

ing, seldom familiar to the uninitiated outside a par-

ticular field.

In philosophy, awareness pertains to conscious-

ness and self-awareness, with philosophers investi-

gating how mental states interconnect with physical

processes in the mind-body problem (Fodor, 1981).

Psychology finds it closely linked to consciousness

and delves into different levels of awareness, ranging

from the conscious to the subconscious and uncon-

scious. Neuroscience sheds light on the neural corre-

lates of awareness, studying brain activity associated

with conscious experiences. Researchers in neuroscience also explore altered states of consciousness, such as sleep, meditation, and drug-induced states, to unravel the neural mechanisms underlying awareness.

[a] https://orcid.org/0000-0001-6301-0028

[b] https://orcid.org/0000-0001-9931-156X

[c] https://orcid.org/0000-0002-9909-4400

[d] https://orcid.org/0000-0003-3323-7357

[e] https://orcid.org/0000-0001-5207-7745

In this turmoil of different definitions, a complete

reconciliation is unfeasible. Furthermore, each of

these definitions is dictated by the heterogeneity of

their applications. Thus, a unifying attempt would

be counterproductive and limiting, as it would lose

the necessary specificity and detail.

We will focus on awareness applied to the field

of artificial intelligence (AI). In particular, we will

discuss what awareness means when dealing with

embodied AI (Chrisley, 2003; Pfeifer and Bongard,

2006; Duan et al., 2022).

Using its body, an AI system can explore its surroundings through sensorimotor behaviour, implying

that embodied AI has a certain level of control – or

agency – over what it does in the environment. For

example, a social robot can use its camera feed to

interpret visual events near it and respond appropri-

ately, and in a multi-party conversation, the robot can

use a microphone array to distinguish between speak-

ers and provide insights into what was discussed.

The applications for embodied AI are countless and

we expect embodied AI to acquire even greater rel-

evance in our everyday lives, thanks to the advent of

Large Language Models (LLMs) and specifically Multimodal LLMs.

Abbo, G., Marchesi, S., Ciupinska, K., Wykowska, A. and Belpaeme, T.

Towards a Definition of Awareness for Embodied AI.

DOI: 10.5220/0012594800003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 1399-1404

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


Given the ever-changing dynamics of the social and physical world, having access to sensor data is not sufficient. Indeed, it is necessary to integrate the information, enabling coherent interactions among internal representations and, eventually, between the system and the outside world. As a consequence of their increased autonomy from human intervention, these algorithms will necessarily have to show an advanced level of something which might best be described as “awareness”: a quality which allows a system to exhibit optimal performance, to interact efficiently with its social and physical environment, and to respond contingently and quickly to dynamic situations.

Consider for instance a system operating in a so-

cial environment, where interaction with humans is

fundamental. This can be the check-in area of a busy

metropolitan hospital, with all the complexities asso-

ciated with it: from the noisy environment to the wide

range of cultures and ages. The system could take the

form of a robot in the future, but let us imagine for

now an interactive kiosk. Currently, where kiosks are

available, they show an interface that hustles the pa-

tients through the initial part of the check-in proce-

dure. This experience is often not pleasant, especially

the first time around, and would greatly benefit from

a more human-centric approach. The system could

be empowered with an AI to facilitate a smoother and

more user-friendly check-in process, for example by

being aware of the patient’s emotions. This goes be-

yond the simple detection of a smile, as it involves es-

tablishing a common ground and complex topics such

as the Theory of Mind (Frith and Frith, 2005). The re-

sult would be an enhanced and more humanised form

of interaction, which is fundamental in delicate sce-

narios, such as the one presented.

In this paper, we identify and discuss six build-

ing blocks of an aware embodied AI, showing how

each of them is necessary and providing an illustra-

tive application. Then, we suggest how the items pre-

sented are connected and interdependent, before mov-

ing to propose a definition of awareness in this field.

While our definition is a working definition, this pa-

per contributes to the discourse on awareness and con-

sciousness in AI by offering new thoughts and per-

spectives, thereby enriching the ongoing exploration

in this field.

2 BACKGROUND

2.1 Consciousness, Awareness, Self-Awareness

Dehaene et al. (2017) identify two levels of consciousness: Global Availability and Self-Monitoring. The first represents consciousness in its transitive meaning, as in being conscious of X: the information becomes globally available to the rest of the system for further processing. Fundamental at

this level is the mental representation of the object

of thought and the ability to report about it verbally

and non-verbally. The psychological definition of at-

tention (James, 1890) overlaps with the concept of

global availability if we exclude the previous stages

of involuntary attentional selection. The second level

identifies the reflexive meaning of consciousness, in

the sense of self-monitoring, introspection, or meta-

cognition. Confidence, reflection, meta-memory and

reality monitoring are all aspects related to this level

of consciousness or self-awareness. Importantly, the

two levels are orthogonal as one can exist without the

other.

In their position paper, Dehaene et al. state that a

machine endowed with these two capabilities would

behave as if conscious. However, a holistic imple-

mentation in which the system becomes aware of ev-

erything is currently technologically unfeasible. For a

concrete application of the definition, we find it nec-

essary to always specify the object of the awareness.

We will thus refer to awareness of something: e.g.,

awareness of the self (self-aware), awareness of the

context, awareness of our capabilities, and so on. This

limitation, in which a system is aware only of a few

aspects, carefully avoids any consciousness-mimicking

behaviour.

2.2 Embodied AI

“Embodied AI is about incorporating traditional in-

telligence concepts from vision, language, and rea-

soning into an artificial embodiment” (Duan et al.,

2022). Conventional AI leverages the vast amounts of data available on the internet, from text to multimedia elements to the most diverse datasets. On the other

hand, embodied AI integrates physical interaction and

sensorimotor capabilities into artificial agents. The

physical presence of embodied AI needs to be re-

flected in its training data. For this reason, egocen-

tric (first-person) perception plays a central role in

this field. First-person data consists of videos and im-

ages taken from the point of view of the agent, in this

case, the embodied system. With this new kind of

AWAI 2024 - Special Session on AI with Awareness Inside


data, it is possible to tackle new and exciting prob-

lems (Grauman et al., 2022): indexing past experi-

ences, analysing present interactions, and anticipating

future activity.

Chella et al. set out to achieve awareness, specifically self-awareness, through inner speech (Chella et al., 2020). Inner speech can take many forms: it can consist of just a few words or full sentences, and it can be a monologue or a dialogue, as when one asks questions and answers them using both “I” and “You”. This process is involved in self-regulation, language functions such as writing and reading, remembering the goals of action, task-switching performance, Theory of Mind, and self-awareness. Their system makes use of perception and action modules: it includes proprioception of emotions, beliefs, desires, intentions and body, as well as exteroception. Actuators include covert articulation and motor modules, and everything is enabled by a set of memory modules (Chella and Pipitone, 2020). We choose to focus on the indispensable ingredients of awareness, since we do not set out to achieve true consciousness. This means that several aspects, such as beliefs and desires, but also goals, are not considered in our work.

3 ELEMENTS FOR AWARENESS

What are the building blocks for an aware embodied

AI? Which processes, structures and properties are

required for an aware behaviour when dealing with

the external world? In this section, we introduce six

requisites: access to information, information integra-

tion, attention, coherence, explainability, and action.

We purposefully will not cover those aspects that are

secondary nice-to-haves but do not constitute a mini-

mal base for awareness.

3.1 Access to Information

For a system to be aware of X, it must have access

to X. While this statement may seem self-evident,

we want to stress that access, in this context, extends

beyond mere availability. It encompasses the system’s

ability to effectively retrieve and process information from outside the system and from other system components, such as a memory (Wood et al., 2012).

Access to crucial information might be challeng-

ing in scenarios where an embodied AI system oper-

ates with restricted sensor capabilities or obstructed

lines of sight. Consider a robot navigating a cluttered

and dimly lit space. If its visual sensors are obstructed

or limited, the system’s access to visual cues, such as

identifying obstacles or determining the layout of the

environment, is compromised, and so is its awareness

of the surroundings.

On the other hand, a properly designed embodied AI system can showcase effective access to information. For instance, in an autonomous vehicle equipped with advanced cameras, LiDAR, and

radar systems, the system gains access to a rich set of

data about its surroundings. At any time, the system

has access to the data, and if one of these sensors fails,

the system maintains awareness thanks to the redun-

dancy of its senses.
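The redundancy argument above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the sensor names, the convention that a failed sensor raises `RuntimeError`, and the helper names `read_available` and `has_access` are hypothetical and do not come from any particular robotics framework.

```python
from typing import Callable, Dict

# Hypothetical sensor reader: returns a distance in metres, or raises
# RuntimeError when the sensor is obstructed or has failed.
SensorReader = Callable[[], float]


def read_available(sensors: Dict[str, SensorReader]) -> Dict[str, float]:
    """Return the readings of every sensor that currently responds."""
    readings: Dict[str, float] = {}
    for name, read in sensors.items():
        try:
            readings[name] = read()
        except RuntimeError:
            # A failed sensor is skipped; the remaining ones still give access.
            continue
    return readings


def has_access(sensors: Dict[str, SensorReader]) -> bool:
    """Access to X holds as long as at least one source about X responds."""
    return len(read_available(sensors)) > 0


def broken_camera() -> float:
    raise RuntimeError("camera obstructed")


sensors = {"camera": broken_camera, "lidar": lambda: 12.4, "radar": lambda: 12.9}
readings = read_available(sensors)
```

With the camera failing, `readings` still contains the LiDAR and radar values, whereas a system relying on the camera alone would lose access altogether.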

3.2 Information Integration

For a system to exhibit awareness of X, the integration of all data about X is essential. Integration goes

beyond access as it involves putting together and syn-

thesising information into a unified and meaningful

representation.

Without access to the visual information, the robot

in the example previously discussed finds itself lost in

the environment. Having an alternative data source,

such as a sonar, would alleviate the problem. How-

ever, the new system is susceptible to a new issue:

the two sensors could provide contrasting data. If

the robot fails to merge and integrate the information

available into a coherent model of the environment,

then it will not find itself in a better position than in

the initial situation.
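One possible way to merge two contrasting readings into a single estimate is inverse-variance weighting, sketched below. This is a technique chosen here purely for illustration and is not prescribed by the paper; the `Estimate` type, the noise variances, and the example values are assumptions.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Estimate:
    value: float     # e.g. distance to an obstacle, in metres
    variance: float  # assumed noise variance of the source, must be > 0


def fuse(estimates: Iterable[Estimate]) -> Estimate:
    """Combine estimates, weighting each by the inverse of its variance."""
    items = list(estimates)
    if not items:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / e.variance for e in items]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, items)) / total
    # The fused variance is smaller than that of any single source.
    return Estimate(value=value, variance=1.0 / total)


# Hypothetical contrasting readings: the camera is unreliable in dim light.
camera = Estimate(value=2.0, variance=0.5)
sonar = Estimate(value=3.0, variance=0.1)
fused = fuse([camera, sonar])
```

The fused value lies between the two readings and closer to the more reliable sonar, which gives the robot one coherent estimate instead of two conflicting ones.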

Similarly, the aforementioned autonomous vehicle has access to a diverse range of data sources about its surroundings. However, to be aware of the situation, it needs to maintain a coherent model of it. Integrating these diverse data sources empowers the AI system to navigate safely, showcasing a high level of awareness of its surroundings.

3.3 Attention

Attention is a key component of awareness – or con-

sciousness, depending on the discipline that is being

considered. According to Taylor (Taylor, 2007), at-

tention is the consciousness of a stimulus. It allows

focusing on the most salient aspects while ignoring

other distractors. Real-time processing is fundamen-

tal for maintaining awareness, and attention is one of

the means to reduce the computational load of the sys-

tem.

Trivially, any system with a sound design displays

a certain form of architectural attention. Imagine a

self-driving car: the system in charge of maintaining

awareness of the surroundings will not receive data on

which radio station is playing, by design. However,

this is hardly a proper attention mechanism, as it boils


down to simply not having access to irrelevant data.

Instead, attention is about filtering out a part of

the data, and the focus of attention can be limited to

a handful of aspects at one time. Paying attention to

a car several hundred meters behind while driving at

high speed is not necessary, as the car’s resources are

better employed to detect obstacles in front of the ve-

hicle. Nonetheless, the data about the car is still ac-

cessible, and the attention should be shifted towards

it if, for instance, it turned on the police light bars

signalling to make way.
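The difference between architectural filtering and attention as a dynamic filter can be sketched as follows. The salience formula, its constants, and the `TrackedObject` fields are hypothetical choices made only to reproduce the driving example above; all objects stay accessible, and only the focus changes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrackedObject:
    name: str
    distance_m: float
    ahead: bool
    emergency_lights: bool = False


def salience(obj: TrackedObject) -> float:
    """Assumed scoring rule: near objects in front matter most."""
    score = 1.0 / max(obj.distance_m, 1.0)
    if obj.ahead:
        score *= 5.0
    if obj.emergency_lights:
        score += 10.0  # a police light bar overrides distance and direction
    return score


def attend(objects: List[TrackedObject], capacity: int = 2) -> List[TrackedObject]:
    """Return the few most salient objects; the rest remain accessible."""
    return sorted(objects, key=salience, reverse=True)[:capacity]


scene = [
    TrackedObject("pedestrian", 20.0, ahead=True),
    TrackedObject("truck", 60.0, ahead=True),
    TrackedObject("car_behind", 300.0, ahead=False),
]
focus_before = [o.name for o in attend(scene)]
scene[2].emergency_lights = True  # the car behind turns on its light bar
focus_after = [o.name for o in attend(scene)]
```

Before the light bar is switched on, the focus is on the pedestrian and the truck; afterwards, the car behind enters the focus even though nothing about the available data has changed.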

3.4 Coherence

For embodied AI to demonstrate awareness of X, it

must exhibit coherence in the decision-making asso-

ciated with it. This involves maintaining consistency,

both during the task at hand and over time.

To showcase awareness, the AI system must ex-

hibit coherence with its own decision history. Mem-

ory, or by extension an internal model, is vital for

this process. An embodied AI should maintain an ac-

cessible record of past decisions and outcomes, and

produce consistent responses across similar scenarios,

demonstrating the system’s ability to apply past expe-

riences to comparable situations. As a consequence,

the system could be made able to learn from previous

mistakes and predict the outcome of its actions.
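Coherence with a decision history could be approximated by a memory that reuses the decision taken in a sufficiently similar past situation. The sketch below is one assumed design, not the paper's: the situation encoding (obstacle distance and speed), the `tolerance` threshold, and the fallback policy are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Situation = Tuple[float, ...]  # hypothetical feature vector, e.g. (distance, speed)


@dataclass
class DecisionMemory:
    tolerance: float = 0.5
    records: List[Tuple[Situation, str]] = field(default_factory=list)

    def recall(self, situation: Situation) -> Optional[str]:
        """Return the decision of the closest past situation within tolerance."""
        best, best_dist = None, self.tolerance
        for past, decision in self.records:
            dist = max(abs(a - b) for a, b in zip(past, situation))
            if dist <= best_dist:
                best, best_dist = decision, dist
        return best

    def decide(self, situation: Situation, policy: Callable[[Situation], str]) -> str:
        """Prefer a remembered decision; otherwise ask the policy. Always record."""
        decision = self.recall(situation)
        if decision is None:
            decision = policy(situation)
        self.records.append((situation, decision))
        return decision


def policy(situation: Situation) -> str:
    # Assumed rule: brake when the obstacle is closer than 10 m.
    return "brake" if situation[0] < 10.0 else "keep_speed"


memory = DecisionMemory(tolerance=0.5)
first = memory.decide((9.8, 14.0), policy)
second = memory.decide((10.1, 14.0), policy)  # similar to the first situation
third = memory.decide((40.0, 14.0), policy)   # clearly different situation
```

The second situation is within tolerance of the first, so the system repeats its earlier decision rather than flipping at the policy threshold; the third situation is unrelated and is decided afresh.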

In the same way, the system should be stable in its

decisions during the execution of a task. It is expected

that an autonomous car will suddenly reduce its speed

when it detects an unforeseen obstacle. However, in

a normal situation, the car is expected to maintain a

constant speed showing awareness of the obstacles on

the way.

3.5 Explainability

In the context of embodied AI awareness, explainability is a safeguard for safety and a means to accountability. The system must not only be aware of X but also capable of elucidating its understanding and decisions regarding X. The explanation can take any form, such as English text or a diagram. It must, however, be factual, representing the real motivations for a certain behaviour. Indeed, post hoc reasoning about which events happened and why, which any Large Language Model certainly enables, adds neither to the safety nor to the accountability of the system.

It is easy to see how a factual explanation of why

a self-driving car chose a specific course of action

is essential for passengers, regulators, and other road

users. For instance, in situations where the car over-

Access

Data

Availability

Information

Integration

Memory

Coherence

Explainability

Attention

Action

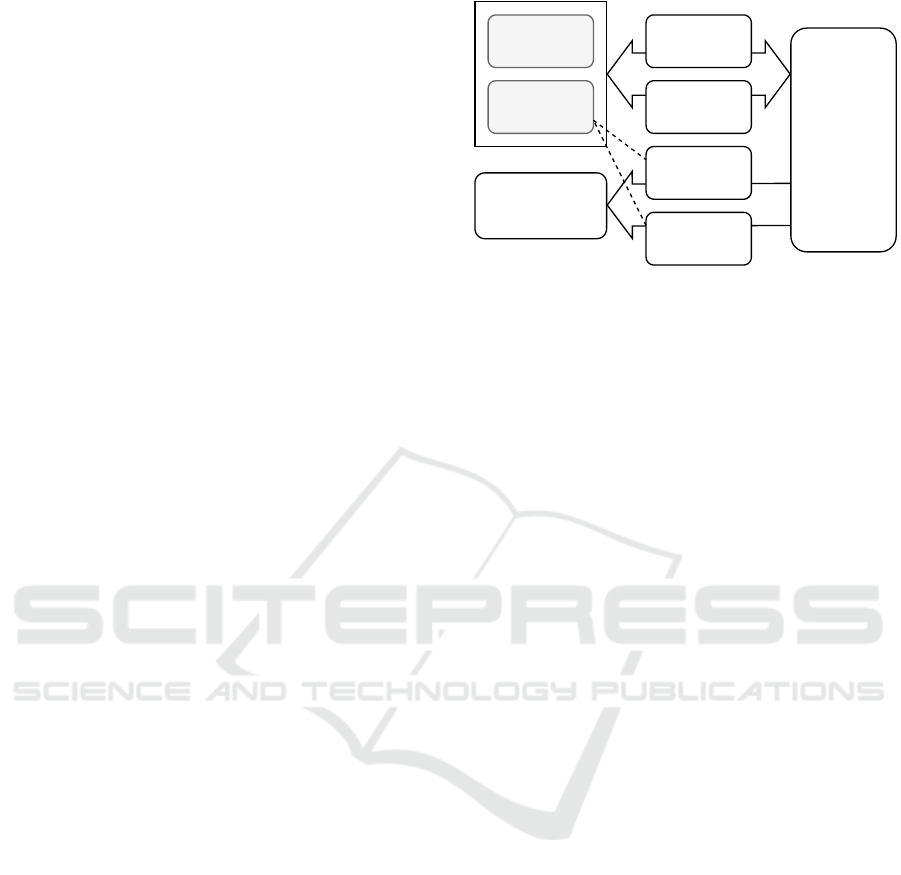

Figure 1: This diagram shows the relations between the

awareness requirements presented.

rides human input or faces ambiguous road condi-

tions, clear explanations ensure accountability and ad-

herence to legal and ethical standards. While this as-

pect might appear as secondary, only a truly aware

system can provide such an explanation.
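A factual explanation, as opposed to a post hoc one, can be obtained by recording the inputs and the rule at the moment the decision is taken. The following sketch assumes a deliberately simple rule-based controller; the class names, the braking threshold, and the wording of the explanation are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DecisionRecord:
    action: str
    inputs: Dict[str, float]  # the values actually used for the decision
    rule: str                 # the condition that actually fired


class ExplainableController:
    def __init__(self, brake_distance_m: float = 15.0) -> None:
        self.brake_distance_m = brake_distance_m
        self.log: List[DecisionRecord] = []

    def step(self, obstacle_distance_m: float) -> str:
        """Decide and, at the same moment, record why."""
        if obstacle_distance_m < self.brake_distance_m:
            action, rule = "brake", "obstacle_distance_m < brake_distance_m"
        else:
            action, rule = "keep_speed", "obstacle_distance_m >= brake_distance_m"
        inputs = {
            "obstacle_distance_m": obstacle_distance_m,
            "brake_distance_m": self.brake_distance_m,
        }
        self.log.append(DecisionRecord(action, inputs, rule))
        return action

    def explain_last(self) -> str:
        """Build the explanation from the record, not from later guesswork."""
        record = self.log[-1]
        facts = ", ".join(f"{k}={v}" for k, v in sorted(record.inputs.items()))
        return f"{record.action} because {record.rule} ({facts})"


controller = ExplainableController()
action = controller.step(9.0)
explanation = controller.explain_last()
```

Because the explanation is generated from the stored record, it reflects the real motivation for the behaviour and can be checked against the logged data.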

3.6 Action

An integral aspect of embodied AI is the interaction with the external environment. Being aware of a certain aspect X should be followed by a possible change of the internal behaviour or an intervention in the environment to bring about a desired change.

Consider an autonomous vehicle navigating a

busy urban area. The system being aware of its sur-

roundings is useless unless it can also modify its tra-

jectory and speed to avoid obstacles, ensuring the

safety of both passengers and others on the road. In-

tervening on the system’s behaviour is not the only

way to effect change: indeed, a system can also in-

tervene in the external environment. For example, a

smart building management system may adjust light-

ing and temperature based on occupancy patterns, to

enhance energy efficiency and user comfort.

Without the ability for a behaviour change, reac-

tive or proactive, the system’s utility diminishes. In-

deed, true awareness of a situation encompasses not

just perceiving and understanding it but also adapting

and responding effectively to its dynamics.
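The smart building example can be reduced to a small sketch in which awareness of occupancy leads to an intervention in the environment. The setpoint values and the names `Setpoints` and `choose_setpoints` are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Setpoints:
    lights_on: bool
    target_temp_c: float


def choose_setpoints(
    occupants: int,
    comfort_temp_c: float = 21.0,   # assumed comfort temperature
    setback_temp_c: float = 17.0,   # assumed energy-saving temperature
) -> Setpoints:
    """Map the perceived occupancy to an intervention in the environment."""
    if occupants > 0:
        return Setpoints(lights_on=True, target_temp_c=comfort_temp_c)
    return Setpoints(lights_on=False, target_temp_c=setback_temp_c)


occupied = choose_setpoints(occupants=3)
empty = choose_setpoints(occupants=0)
```

A system that perceived the occupancy but always returned the same setpoints would lack the action requirement discussed above.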

4 AWARENESS FOR EMBODIED AI

The requirements presented depend strongly on each

other as shown in Figure 1. Access to internal and

external information forms the foundational layer, al-

lowing the system to perceive and collect data about

its environment and have a memory. This information


is then subjected to information integration, where the

system combines and synthesises data into a cohesive

representation. Without access, the information inte-

gration process would have to rely passively on the

data streaming from the environment. Attention acts

as a dynamic filter, directing the system to focus on

relevant aspects and optimising real-time processing.

Action completes the loop, as the system, thanks

to the information integration, can dynamically inter-

act with and influence its surroundings. Ensuring that

the system’s decisions and actions align with its un-

derstanding, and maintaining coherence over time is

fundamental for successful and reliable interactions.

Explainability serves as a critical component, de-

manding that the system accurately justifies its deci-

sions, fostering transparency and accountability. Both

these last functionalities require access, specifically to

the memory of previous experiences.

Considering everything presented so far, we pro-

pose to call a system aware of X if:

• it has access to information about X, in the form of

data availability, memory recall and forward mod-

elling;

• it displays an attention mechanism towards X, fil-

tering out distractors;

• it can successfully integrate available information

into a model of X;

• it can act in response to X, changing its behaviour

or intervening on the environment;

• it displays coherence in its decisions about X, with

respect to its current and previous actions;

• it is explainable in its decisions about X, using

verifiable data to justify them.
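The six conditions above can be read as a checklist for a given object of awareness X. The sketch below encodes that reading; how each condition would be assessed in practice is left open, and the `AwarenessProfile` type and the kiosk example values are hypothetical.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class AwarenessProfile:
    """Assessment of one system with respect to one object of awareness X."""
    access: bool
    attention: bool
    integration: bool
    action: bool
    coherence: bool
    explainability: bool

    def missing(self) -> List[str]:
        """Names of the requirements that are not satisfied."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_aware(self) -> bool:
        """All six requirements must hold; none is treated as optional."""
        return not self.missing()


# Hypothetical assessment of a check-in kiosk with respect to patient emotions.
kiosk = AwarenessProfile(
    access=True,
    attention=True,
    integration=True,
    action=True,
    coherence=True,
    explainability=False,
)
```

In this example the kiosk satisfies five requirements but would not be called aware of the patient's emotions, since `kiosk.missing()` still lists explainability.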

While the first requirements are evidently necessary for a working system, one could argue that action and explainability do not play a fundamental role. However,

we argue that all the aspects presented are equally im-

portant for aware embodied AI.

In particular, action is what distinguishes the

aware system from a passive observer. Consider for

instance a human without any motor capability: even

without a possibility to change the state of the world

that surrounds him, this subject is clearly still aware,

as long as he can change his ideas and thoughts in

response to external stimuli. However, if we know for

certain that this is not the case, we would say that the

subject is no longer aware.

On the other hand, a system that can interact with

the external world – thus possessing the action re-

quirement – but cannot explain the motivation behind

its actions, cannot be defined as truly aware as it lacks

the crucial element of transparency. Explainability

serves as the bridge between the system’s internal pro-

cesses and its external behaviour. Without the ability

to articulate the reasons behind its actions, the system

remains inscrutable, hindering our understanding and

trust in its cognitive processes.

It should be noted that in this work we borrowed the term awareness from studies revolving around humans and applied it to the world of machines. This was permitted by the similarities between the behaviour of an aware AI and the results of similar mechanisms taking place in humans, and it has been done before (Drury et al., 2003; Holland, 2004; Schipper, 2014, to cite just a few).

We want to underline that the scope of this definition

is embodied AI, and it is not our intention to define

awareness for humans and living creatures. We inten-

tionally chose awareness to underline that what we

want to achieve is a subset of consciousness, which

remains a trait of mankind alone.

5 CONCLUSION

This paper initiates a conversation on practical strate-

gies for enhancing awareness in embodied AI sys-

tems. We introduce six key elements as its founda-

tions: access to information, information integration,

attention, coherence, explainability, and action. Em-

phasising their interconnected and interdependent na-

ture, we argue that these elements form a minimal

base for constructing systems with heightened aware-

ness.

While the proposed definition takes a practical approach to the topic, it is important to note that there is currently limited empirical evidence supporting it.

The contribution underscores the need for future stud-

ies to validate and refine these insights, ensuring their

effective implementation in real-world AI applica-

tions.

This contribution aims to spark a dialogue within

the research community, fostering a dynamic ex-

change of ideas and perspectives. Our work aims not

just to set a stage but to open a dialogue, inviting di-

verse voices to contribute to the evolving discipline of

awareness in embodied AI.

ACKNOWLEDGEMENTS

Funded by the Horizon Europe VALAWAI project

(grant agreement number 101070930).


REFERENCES

Chella, A. and Pipitone, A. (2020). A cognitive architec-

ture for inner speech. Cognitive Systems Research,

59:287–292.

Chella, A., Pipitone, A., Morin, A., and Racy, F. (2020). De-

veloping Self-Awareness in Robots via Inner Speech.

Frontiers in Robotics and AI, 7.

Chrisley, R. (2003). Embodied artificial intelligence. Artificial Intelligence, 149(1):131–150.

Dehaene, S., Lau, H., and Kouider, S. (2017). What is

consciousness, and could machines have it? Science,

358(6362):486–492.

Drury, J., Scholtz, J., and Yanco, H. (2003). Aware-

ness in human-robot interactions. In SMC’03 Con-

ference Proceedings. 2003 IEEE International Con-

ference on Systems, Man and Cybernetics. Confer-

ence Theme - System Security and Assurance (Cat.

No.03CH37483), volume 1, pages 912–918.

Duan, J., Yu, S., Tan, H. L., Zhu, H., and Tan, C. (2022).

A Survey of Embodied AI: From Simulators to Re-

search Tasks. IEEE Transactions on Emerging Topics

in Computational Intelligence, 6(2):230–244.

Fodor, J. A. (1981). The mind-body problem. Scientific American, 244(1):114–123.

Frith, C. and Frith, U. (2005). Theory of mind. Current Biology, 15(17):R644–R645.

Grauman, K., Westbury, A., Byrne, E., Chavis, Z., Furnari,

A., Girdhar, R., Hamburger, J., Jiang, H., Liu, M., Liu,

X., Martin, M., Nagarajan, T., Radosavovic, I., Ra-

makrishnan, S. K., Ryan, F., Sharma, J., Wray, M.,

Xu, M., Xu, E. Z., Zhao, C., Bansal, S., Batra, D.,

Cartillier, V., Crane, S., Do, T., Doulaty, M., Erapalli,

A., Feichtenhofer, C., Fragomeni, A., Fu, Q., Gebreselasie, A., González, C., Hillis, J., Huang, X., Huang, Y., Jia, W., Khoo, W., Kolář, J., Kottur, S., Kumar, A., Landini, F., Li, C., Li, Y., Li, Z., Mangalam, K., Modhugu, R., Munro, J., Murrell, T., Nishiyasu, T., Price, W., Puentes, P. R., Ramazanova, M., Sari, L., Somasundaram, K., Southerland, A., Sugano, Y., Tao, R., Vo, M., Wang, Y., Wu, X., Yagi, T., Zhao, Z., Zhu, Y., Arbeláez, P., Crandall, D., Damen, D., Farinella,

G. M., Fuegen, C., Ghanem, B., Ithapu, V. K., Jawa-

har, C. V., Joo, H., Kitani, K., Li, H., Newcombe, R.,

Oliva, A., Park, H. S., Rehg, J. M., Sato, Y., Shi, J.,

Shou, M. Z., Torralba, A., Torresani, L., Yan, M., and

Malik, J. (2022). Ego4D: Around the World in 3,000

Hours of Egocentric Video. In 2022 IEEE/CVF Con-

ference on Computer Vision and Pattern Recognition

(CVPR), pages 18973–18990.

Holland, O. (2004). The Future of Embodied Artificial

Intelligence: Machine Consciousness? In Iida, F.,

Pfeifer, R., Steels, L., and Kuniyoshi, Y., editors, Em-

bodied Artificial Intelligence: International Seminar,

Dagstuhl Castle, Germany, July 7-11, 2003. Revised

Papers, Lecture Notes in Computer Science, pages

37–53. Springer, Berlin, Heidelberg.

James, W. (1890). The Principles of Psychology, vol. 1. Henry Holt and Co., New York.

Pfeifer, R. and Bongard, J. (2006). How the body shapes the way we think: a new view of intelligence. MIT Press.

Schipper, B. C. (2014). Awareness. Available at SSRN

2401352.

Taylor, J. G. (2007). Through machine attention to machine

consciousness. In Chella, A. and Manzotti, R., ed-

itors, Artificial Consciousness, pages 24–47. Imprint

Academic.

Wood, R., Baxter, P., and Belpaeme, T. (2012). A review of

long-term memory in natural and synthetic systems.

Adaptive Behavior, 20(2):81–103.
