Human Activity Recognition for Identifying Bullying and Cyberbullying: A Comparative Analysis Between Users Under and over 18 Years Old

Vincenzo Gattulli and Lucia Sarcinella

Department of Computer Science, University of Bari Aldo Moro, 70125 Bari, Italy

Keywords: Human Activity Recognition, Cyberbullying, Bully, Smartphone, Sensors, Machine Learning.

Abstract: The smartphone is an excellent source of data, because sensor values can be extracted from it. This work exploits Human Activity Recognition (HAR) models and techniques to identify the human activity performed while filling out a questionnaire that aims to classify users as Bullies, Cyberbullies, Victims of Bullying, and Victims of Cyberbullying. The paper aims to identify activities, other than simply sitting, that are associated with each questionnaire class. It starts with a state-of-the-art analysis of HAR and arrives at the design of a model that can recognize everyday actions and distinguish them from actions resulting from alleged bullying activities (Questionnaire Personality Index). Five activities were considered for recognition: Walking, Jumping, Sitting, Running, and Falling. The best HAR activity identification model was applied to the dataset obtained from the "Smartphone Questionnaire Application" experiment to perform the analysis. The best-performing HAR model is a Convolutional Neural Network (CNN).

1 INTRODUCTION

Identifying and recognizing human activities is called Human Activity Recognition (HAR). The activities of interest vary, for example walking, sitting, and falling (Carrera et al., 2022a; Vincenzo Dentamaro et al., 2020). Recognizing each of them requires Artificial Intelligence (AI) algorithms to analyze and classify raw data collected from devices such as smartphones or smartwatches. According to (Minh Dang et al., 2020a), these devices contain sensors that capture data while activities are performed, and these data can be used to classify and recognize the activities.

Because it addresses a social and personal problem, this work falls within the field of health and well-being (Angelillo, Balducci, et al., 2019; Angelillo, Impedovo, et al., 2019; Cheriet et al., 2023; Gattulli, Impedovo, Pirlo, & Semeraro, 2023; D. Impedovo et al., 2012; Donato Impedovo et al., 2021).

Through their sensors, these devices can monitor users' mental and physical states. An IMU (Inertial Measurement Unit) is an electronic device that combines accelerometers, gyroscopes, and, occasionally, magnetometers. An IMU is described as n-axis, where n is the sum of the axes along which each of its sensors measures. For example, integrating a three-axis accelerometer and a three-axis gyroscope yields a six-axis IMU; adding a three-axis magnetometer yields a nine-axis IMU (Thomas et al., 2022; Zhang et al., 2022).

One of the fundamental sensors is the triaxial

accelerometer, but other sensors, such as the triaxial

gyroscope and magnetometer, are also used.

Specifically:

1. The Accelerometer detects linear motion and

gravitational force by measuring acceleration

along the three axes: X, Y, and Z;

2. The Gyroscope measures the rate of rotation of a

body about the X, Y, and Z axes;

3. The Magnetometer detects and measures geomagnetic fields, which are not always useful for HAR purposes; therefore, it is not always included among the sensors used (Straczkiewicz et al., 2023).

Research on HAR is well advanced, but few studies have addressed the recognition of bullying actions as distinct from other activities. Most studies have focused on recognizing acts of physical violence, such as punching or pushing (Twyman et al., 2010). For this reason, datasets for recognizing bullying activities are less common than those for recognizing more general human activities.

Gattulli, V. and Sarcinella, L.

Human Activity Recognition for Identifying Bullying and Cyberbullying: A Comparative Analysis Between Users Under and over 18 Years Old.

DOI: 10.5220/0012578800003654

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 13th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2024), pages 969-977

ISBN: 978-989-758-684-2; ISSN: 2184-4313

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

A fall can indicate that a bullying action has been experienced (Twyman et al., 2010); a real-world example is a boy falling after being pushed or hit.

This paper focuses on recognizing activities associated with bullying, such as falling, which has received little attention in other studies, together with other activities commonly considered in the field. It aims to identify the activities performed while completing a questionnaire by two experimental groups in Italy: high school students under 18 years old, aged about 16, and college students over 18 years old, aged about 19.

The study has two objectives:

1. To study the most widely used state-of-the-art techniques in Human Activity Recognition and then apply them to Bullying Detection systems, which identify and recognize cases of bullying.

2. To compare the results obtained on this experimental group of under-18-year-olds with those of the over-18-year-old group (Gattulli, Impedovo, Pirlo, & Sarcinella, 2023) and analyze any differences.

To achieve these goals, the literature was studied to understand the typical architecture of a Human Activity Recognition system, the commonly used sensors, and the most relevant activities to be recognized. Next, dataset creation techniques were studied, and a public dataset was considered. On this basis, a dataset was created in which nineteen participants performed five different activities (Walking, Running, Jumping, Sitting, and Falling) while the triaxial accelerometer recorded their movements. This dataset, named "Uniba Dataset," was then compared with one of the best-known public datasets in the literature, UniMiB SHAR. The aim is to distinguish activities resulting from bullying from Activities of Daily Living (ADL). Finally, smartphone-based methods were studied to relate the activities recognized in the datasets to the final label given by the questionnaire (Bully, Cyberbully, Victim of Bullying, and Victim of Cyberbullying).

The paper is structured as follows: Section 2 contains the state-of-the-art analysis of Human Activity Recognition, focusing on the smartphone-based category. Sections 3 and 4 provide a detailed analysis of the datasets, features, and classifiers used. Section 5 discusses the solution implemented in the experiment. Section 6 analyzes the results obtained and discusses the strengths and weaknesses of the proposed model. Section 7 provides the conclusions.

2 STATE OF THE ART

This section reviews several studies on Human Activity Recognition. It also considers a new type of activity detection approach, which combines the potentially abnormal activities performed while filling out a questionnaire to identify an individual's attitudes associated with bullying or cyberbullying.

Many studies omit the feature extraction and selection phase; some examples are given below. The investigation (Minarno et al., 2020) uses a triaxial gyroscope and accelerometer to recognize the daily actions of thirty volunteers. The researchers examined daily life actions, such as lying down, standing, sitting, walking, and going up or down stairs, using classifiers including Decision Tree, Random Forest, and K-Nearest Neighbor. Some classifiers achieve excellent performance, with up to 98 percent accuracy, without a feature selection and extraction step. Testing is done on three public datasets: UMAFall (Casilari et al., 2017), UniMiB SHAR (Micucci et al., 2017), and SisFall (Sucerquia et al., 2017). The latter is a publicly available dataset that is not always considered because it uses only Inertial Measurement Units to collect data.

An interesting example is presented in (Dehkordi et al., 2020), where a two-second sliding window with 75% overlap is applied. According to the study, there are two types of actions: static actions, in which the global coordinates of the body do not change, and dynamic actions, in which the whole body is in motion. Ten users were considered; each was given a smartphone to hold in their dominant hand and instructions on what to do. The smartphone's triaxial accelerometer (either a Samsung Galaxy or an LG Nexus) collected data at 50 Hz. Several classifiers were implemented in the classification phase, including Support Vector Machine, Decision Tree, and Naïve Bayes. The latter two performed best, achieving 98% average accuracy in recognizing static and dynamic tasks.
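The windowing used in (Dehkordi et al., 2020) can be illustrated with the minimal sketch below; it is not the authors' code, and the function name and the (x, y, z) tuple layout are assumptions. At 50 Hz a two-second window holds 100 samples, and a 75% overlap means each new window starts 25 samples after the previous one.

```python
def sliding_windows(samples, fs_hz=50, window_s=2.0, overlap=0.75):
    """Split a sequence of (x, y, z) samples into fixed-length overlapping windows.

    Trailing samples that cannot fill a complete window are dropped.
    """
    size = int(round(window_s * fs_hz))               # 2 s at 50 Hz -> 100 samples
    step = max(1, int(round(size * (1 - overlap))))   # 75% overlap -> hop of 25 samples
    return [samples[start:start + size]
            for start in range(0, len(samples) - size + 1, step)]


# 6 s of synthetic accelerometer data at 50 Hz (300 samples)
signal = [(float(i), 0.0, 9.81) for i in range(300)]
windows = sliding_windows(signal)
print(len(windows), len(windows[0]), windows[1][0][0])  # 9 100 25.0
```

Dropping the incomplete tail keeps every window the same length, which is what fixed-input classifiers expect.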

2.1 Recognition of Acts of Bullying or Violence

Vision-based approaches can identify bullying or violent actions in human activity detection. According to (Minh Dang et al., 2020b), sensor-generated data for this task are more difficult to obtain. Vision data fall into two categories, RGB and RGB-D; the latter offers higher accuracy than RGB data but is used less because it is more complex and expensive. The study also emphasizes the importance of the pre-processing and feature extraction stages. Classifiers are trained on datasets of videos or frames to determine whether the scene contains violent situations. After the authors' thorough comparison of several classifiers, a CNN proved the most effective, with accuracy ranging from 93.32% to 97.62%.

NeroPRAI 2024 - Workshop on Medical Condition Assessment Using Pattern Recognition: Progress in Neurodegenerative Disease and Beyond

Researchers have combined sensor-based activity recognition with emotion recognition from voice tone (Zihan et al., 2019). In (Ye et al., 2018), the sensors of smartphones and smartwatches were used for sensor-based activity recognition. To reduce the size of the feature matrix, features were extracted a priori and then reduced with the Principal Component Analysis (PCA) algorithm. Mel Frequency Cepstral Coefficients (MFCC), a representation of the short-term power spectrum of a sound, were used as features for voice-based emotion recognition. The classifier used is K-Nearest Neighbors. Cross-validation showed 77.8 percent accuracy for the sensor-based system and 81.4 percent for the audio-based system.

Studies based on public datasets were also considered. The first example is the study (Amara et al., 2021), which used the UMAFall and SisFall datasets, created with 38 volunteer participants. The data were divided as follows: 50% for training, 30% for validation, and 20% for testing. In pre-processing, each activity is labeled as either a daily activity or a fall. As a result, the classification is binary, and the classes are unbalanced. In the fall detection domain, UMAFall is used only in this setting, that is, binary classification with unbalanced classes.
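The 50/30/20 split described for (Amara et al., 2021) can be sketched as follows. Whether the original split was stratified is not stated; stratifying by label is an assumption made here because the fall and daily-activity classes are unbalanced, and a purely random split could leave very few falls in the smaller subsets. The function name is hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, labels, percents=(50, 30, 20), seed=0):
    """Split (sample, label) pairs into train/validation/test sets class by class,
    so that the minority class (e.g. falls) appears in every subset."""
    assert sum(percents) == 100
    by_label = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_label[label].append(sample)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for label, items in by_label.items():
        rng.shuffle(items)
        n_train = len(items) * percents[0] // 100   # integer arithmetic avoids rounding surprises
        n_val = len(items) * percents[1] // 100
        train += [(s, label) for s in items[:n_train]]
        val += [(s, label) for s in items[n_train:n_train + n_val]]
        test += [(s, label) for s in items[n_train + n_val:]]
    return train, val, test


labels = ["ADL"] * 80 + ["Fall"] * 20   # unbalanced binary problem
train, val, test = stratified_split(range(100), labels)
print(len(train), len(val), len(test))  # 50 30 20
```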

Some studies, including work by the authors of this paper, have used smartphone-based sensor methods to address cyberbullying. Indeed, the smartphone is an excellent source of information: machine learning techniques applied to smartphone sensor values can perform anomaly detection on human behavior.

The researchers (Gattulli, Impedovo, Pirlo, & Sarcinella, 2023) use Human Activity Recognition (HAR) models and techniques to identify the human activity performed while filling out, through a smartphone application, a questionnaire that aims to classify users as Bullies, Cyberbullies, Bullying Victims, and Cyberbullying Victims. The work discusses an innovative smartphone method that integrates the results of the bullying and cyberbullying questionnaire (Bully, Cyberbully, Bullying Victim, and Cyberbullying Victim) with the human activity performed while the individual fills out the questionnaire. The work begins with a state-of-the-art analysis of HAR and arrives at the design of a model capable of recognizing actions of daily life and distinguishing them from those that might result from alleged bullying. Five activities were considered for recognition: Walking, Jumping, Sitting, Running, and Falling (Castro, Dentamaro, et al., 2023). The best HAR activity identification model is applied to the dataset derived from the "Smartphone Questionnaire Application" experiment to perform the analysis described above. That work (Gattulli, Impedovo, Pirlo, & Sarcinella, 2023) is the precursor of the present one.

Another work analyzed is (Gattulli, Impedovo, & Sarcinella, 2023), which studies an anomaly detection method; anomaly detection is an essential process for identifying situations that differ from the ordinary. That study analyzes anomalies in human behavior observed while filling out a questionnaire on bullying and cyberbullying. It applies anomaly detection techniques to smartphone sensor data (accelerometer, magnetometer, and gyroscope) to identify anomalous behaviors during questionnaire completion in an Android application. To understand any polarizing content suggested during the use of the application and to identify users who exhibit abnormal behaviors, which could be characteristic of certain classes of users, psychology and computer science work together to detect latent patterns within the dataset under consideration (Gattulli, Impedovo, & Sarcinella, 2023).

3 DATASET

The datasets used during this experiment are as

follows:

DatasetUniba. This dataset was created in a controlled environment with nineteen participants, thirteen males and six females (Gattulli, Impedovo, Pirlo, & Sarcinella, 2023). Each participant performed eight different actions, divided into two categories:

ADL:

• Walking;

• Running;

• Hopping;

• Sitting.

Falling:

• Falling (forward, backward, right, and left).


Only accelerometer-generated data were collected, using a smartphone placed in each participant's right pocket with the screen facing the body, at a sampling rate of 200 Hz through the Android application. The collected raw data were then sent to a server in TXT format to be reprocessed and converted to CSV format. Each task was performed two or three times, and each trial was 15 seconds long. The falls were performed on a mattress placed on the floor.

The CSV file is divided into six columns: the first contains the user ID, the second the activity performed, the third the timestamp in milliseconds from the start of the action, and the last three the X, Y, and Z values of the triaxial accelerometer.
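A minimal sketch of reading a file with this column layout (user ID, activity, timestamp in milliseconds from the start of the action, and the X, Y, Z accelerometer values) is shown below. The column names, the absence of a header row, and the rule that a new trial starts when the timestamp resets are assumptions for illustration, not details taken from the dataset.

```python
import csv
import io

# Hypothetical column names following the layout described above (no header row assumed).
COLUMNS = ("user_id", "activity", "timestamp_ms", "x", "y", "z")


def load_trials(text):
    """Group rows into trials. A new trial starts when the user or activity changes,
    or when the timestamp resets, since it counts from the start of each action."""
    trials = []
    prev_key, prev_t = None, None
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        rec = dict(zip(COLUMNS, row))
        key = (rec["user_id"], rec["activity"])
        t = float(rec["timestamp_ms"])
        if key != prev_key or t < prev_t:
            trials.append({"user_id": key[0], "activity": key[1], "samples": []})
        trials[-1]["samples"].append((t, float(rec["x"]), float(rec["y"]), float(rec["z"])))
        prev_key, prev_t = key, t
    return trials


data = """1,Walking,0,0.1,9.7,0.3
1,Walking,5,0.2,9.8,0.1
1,Walking,0,0.0,9.6,0.2
2,Sitting,0,0.0,9.81,0.0
"""
trials = load_trials(data)
for trial in trials:
    print(trial["user_id"], trial["activity"], len(trial["samples"]))
# 1 Walking 2
# 1 Walking 1
# 2 Sitting 1
```

Splitting on timestamp resets keeps the two or three repetitions of each task apart, which matters when windows must not straddle two trials.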

DatasetUniba Resampled. A resampled version of the

DatasetUniba.
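The paper does not state the target sampling rate of DatasetUniba Resampled. Purely as an illustration, the sketch below downsamples a 200 Hz recording (the rate used for DatasetUniba) to an assumed 50 Hz by linear interpolation; both the target rate and the interpolation method are assumptions, and the function name is hypothetical.

```python
def resample_linear(samples, src_hz=200.0, dst_hz=50.0):
    """Resample evenly spaced (x, y, z) samples from src_hz to dst_hz by linear interpolation.
    The 50 Hz default target is an assumption for illustration."""
    if len(samples) < 2:
        return list(samples)
    duration_s = (len(samples) - 1) / src_hz      # time spanned by the input
    n_out = int(duration_s * dst_hz) + 1
    out = []
    for k in range(n_out):
        pos = k * src_hz / dst_hz                 # fractional index into the input
        i = min(int(pos), len(samples) - 2)
        frac = pos - i
        a, b = samples[i], samples[i + 1]
        out.append(tuple(av + frac * (bv - av) for av, bv in zip(a, b)))
    return out


one_second = [(float(i), 0.0, 9.81) for i in range(201)]  # 1 s at 200 Hz
resampled = resample_linear(one_second)
print(len(resampled), resampled[1], resampled[-1])  # 51 (4.0, 0.0, 9.81) (200.0, 0.0, 9.81)
```

When downsampling real signals by a large factor, a low-pass filter is usually applied first to avoid aliasing; it is omitted here to keep the sketch short.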

UniMiB SHAR. A dataset consisting only of values obtained from the accelerometer. The recognized activities are again divided into two categories:

Falling:

• Falling forward;

• Falling backward;

• Falling to the right;

• Falling to the left;

• Falling by hitting an obstacle;

• Falling with protective strategies;

• Falling backward without protective strategies;

• Syncope.

ADL:

• Walking;

• Running;

• Climbing stairs;

• Descending stairs;

• Jumping;

• Lying down from a standing position;

• Sitting.

For each activity, there are 2 to 6 trials per user. For actions with two trials, the smartphone was in the right pocket in the first trial and in the left pocket in the second. For actions with six trials, the smartphone was in the right pocket for the first three trials and in the left pocket for the others. Data are provided in windows of 51 or 151 samples around an original signal peak higher than 1.5g, where g is the acceleration of gravity.
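The peak-centred windowing described for UniMiB SHAR can be sketched as below. This is an illustrative reconstruction, not the dataset authors' code: the local-maximum rule, centring the window on the peak, and raw values expressed in m/s^2 are all assumptions, and the function name is hypothetical.

```python
import math

G = 9.80665  # standard gravity, m/s^2


def peak_windows(samples, size=51, threshold_g=1.5):
    """Return windows of `size` samples centred on local peaks of the acceleration
    magnitude that exceed threshold_g. `samples` are (x, y, z) tuples, assumed in m/s^2."""
    half = size // 2
    mags = [math.sqrt(x * x + y * y + z * z) / G for x, y, z in samples]  # magnitude in g
    windows = []
    for i in range(half, len(samples) - half):
        is_peak = mags[i] > threshold_g and mags[i] > mags[i - 1] and mags[i] >= mags[i + 1]
        if is_peak:
            windows.append(samples[i - half:i + half + 1])
    return windows


rest = [(0.0, 0.0, G)] * 100   # device at rest: magnitude of about 1 g
rest[50] = (0.0, 0.0, 2 * G)   # a single 2 g spike, e.g. an impact
found = peak_windows(rest)
print(len(found), len(found[0]), found[0][25] == (0.0, 0.0, 2 * G))  # 1 51 True
```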

The best results reported in the experiments carried out on this dataset were obtained with K-NN on the ADL-only category.

In all datasets, every activity except sitting is considered anomalous.
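Given one predicted label per window, this anomaly rule and activity percentages of the kind shown in Figures 3 and 4 could be computed as in the minimal sketch below. The function name is hypothetical, and weighting every window equally is an assumption.

```python
from collections import Counter

NORMAL_ACTIVITY = "Sitting"  # every other recognized activity counts as anomalous


def activity_profile(predicted):
    """Return the percentage of each predicted activity and the anomalous share."""
    if not predicted:
        return {}, 0.0
    counts = Counter(predicted)
    total = len(predicted)
    percentages = {activity: 100.0 * n / total for activity, n in counts.items()}
    anomalous = 100.0 * (total - counts[NORMAL_ACTIVITY]) / total
    return percentages, anomalous


labels = ["Sitting"] * 8 + ["Jumping", "Falling"]
percentages, anomalous = activity_profile(labels)
print(percentages["Sitting"], anomalous)  # 80.0 20.0
```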

4 METHODS

The machine learning models used are:

• CNN, a convolutional feed-forward neural network, consisting in this case of three convolutional layers with ReLU activation, each followed by a pooling layer that simplifies the output of the previous layer, with the practical goal of reducing the number of parameters the network must learn. These are followed by a Flatten layer, which linearizes the output, and a Softmax layer for classification.

• LSTM, a type of Recurrent Neural Network (RNN), differs from a CNN in its feedback connections, which allow it to learn from long sequences and retain memory of earlier inputs. This network consists of two LSTM layers alternating with Dropout layers, followed by dense layers with ReLU and Softmax activations for the prediction.

• Bi-LSTM is a type of LSTM network that processes each sequence in both directions, forward and backward, so that its predictions draw on both past and future context, whereas the LSTM network learns in one direction only.

Each of these models was used in different combinations with the various datasets. The CNN achieved notably better performance than LSTM and Bi-LSTM.
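To make the CNN description concrete, the sketch below traces tensor shapes through three convolution + ReLU layers, each followed by pooling, then Flatten and Softmax. The paper does not report filter counts, kernel sizes, or padding, so the values used here (1-D convolutions, 32/64/128 filters, kernel 3, no padding, pool size 2) are assumptions; the input is a window of accelerometer samples with three channels (X, Y, Z), and the output has one unit per activity class.

```python
def cnn_shapes(timesteps, channels=3, filters=(32, 64, 128), kernel=3, pool=2, n_classes=5):
    """Trace output shapes through Conv1D(ReLU)+MaxPool blocks, Flatten and Softmax.

    Hypothetical hyperparameters; assumes 'valid' convolutions (no padding)
    and non-overlapping pooling.
    """
    shapes = [("input", (timesteps, channels))]
    length = timesteps
    for i, n_filters in enumerate(filters, start=1):
        length = length - kernel + 1             # valid convolution shortens the sequence
        shapes.append((f"conv{i}+relu", (length, n_filters)))
        length //= pool                          # pooling downsamples the time axis
        shapes.append((f"pool{i}", (length, n_filters)))
    flat = length * filters[-1]
    shapes.append(("flatten", (flat,)))
    shapes.append(("softmax", (n_classes,)))
    softmax_params = flat * n_classes + n_classes  # weights + biases of the output layer
    return shapes, softmax_params


shapes, params = cnn_shapes(timesteps=151)  # e.g. a 151-sample window
for name, shape in shapes:
    print(name, shape)
print("softmax parameters:", params)  # 10885
```

The trace shows why pooling matters: without it, the flattened vector feeding the Softmax layer would be several times larger.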

5 EXPERIMENTS

The experiment is organized into several stages:

• Data pre-processing;

• Extraction of data generated by the sensors;

• Classifier training;

• Activity recognition;

• Comparison of the results of users under 18 years old with those of users over 18 years old.

The pre-processing phase prepared the data for further processing and simplified the classification of activities. Specifically:

• Activities that were not relevant (in the case of UniMiB SHAR) were removed;


• Activities were renamed and grouped into a more manageable and meaningful set. For example, the various types of falls (right, left, forward, and backward) were aggregated into a single activity representing all types of fall;

• The data were reshaped into a three-dimensional form suitable as input to a CNN.
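The pre-processing steps listed above can be sketched as follows for windows that have already been segmented. The exact renaming table is not given in the paper, so the mapping here is hypothetical: "Hopping" is treated as "Jumping", every label beginning with "Falling" is merged into a single Falling class, and other activities (such as stair climbing, or Syncope) are dropped. A deep learning framework would typically receive the resulting nested list converted to an array of shape (windows, timesteps, 3).

```python
# Hypothetical label handling: the paper does not list its exact mapping.
RENAME = {"Hopping": "Jumping"}                      # align DatasetUniba naming
KEEP = {"Walking", "Running", "Jumping", "Sitting"}  # ADL classes retained


def map_label(label):
    """Map a raw activity name to one of the five target classes, or None to drop it."""
    label = RENAME.get(label, label)
    if label.startswith("Falling"):
        return "Falling"  # falls in every direction merged into one class
    return label if label in KEEP else None


def to_cnn_input(windows, labels):
    """Drop irrelevant activities and stack windows as (n_windows, timesteps, 3)."""
    X, y = [], []
    for window, label in zip(windows, labels):
        target = map_label(label)
        if target is None:
            continue  # e.g. stair climbing in UniMiB SHAR
        X.append([list(sample) for sample in window])
        y.append(target)
    if any(len(w) != len(X[0]) for w in X):
        raise ValueError("all windows must have the same number of timesteps")
    return X, y


windows = [[(0.0, 0.0, 9.8)] * 4, [(0.1, 0.2, 9.7)] * 4, [(1.0, 2.0, 3.0)] * 4]
labels = ["Falling to the left", "Climbing stairs", "Hopping"]
X, y = to_cnn_input(windows, labels)
print(y, len(X), len(X[0]), len(X[0][0]))  # ['Falling', 'Jumping'] 2 4 3
```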

The second phase of the experiment was data extraction: only the accelerometer values were extracted from the sensor text files recorded on the devices used by the participants while filling out the questionnaire, and the values generated by the magnetometer and gyroscope were discarded. The rows of interest (X, Y, and Z coordinates) were placed in a Python Pandas data structure called a DataFrame, and each (X, Y, Z) triple also specified the questionnaire screen on which that movement was recorded. This procedure produced a series of CSV files used in the following steps to make predictions. The entire process was repeated for all users participating in all test phases (Test1, Test2, Test3), except a few who were excluded because they had not completed the entire questionnaire.
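The extraction step can be sketched as follows. The layout of the sensor text files is not described in the paper, so the semicolon-separated format, the sensor name ACCELEROMETER, and the screen name VideoScreen are hypothetical. The sketch uses only the standard library; the resulting list of dictionaries could equally be passed to pandas.DataFrame, as in the original pipeline.

```python
import csv
import io

# Hypothetical log layout: timestamp;sensor;x;y;z;screen
FIELDS = ("timestamp", "sensor", "x", "y", "z", "screen")


def extract_accelerometer(log_text, sensor_name="ACCELEROMETER"):
    """Keep only accelerometer rows, each tagged with its questionnaire screen."""
    rows = []
    for raw in csv.reader(io.StringIO(log_text), delimiter=";"):
        if len(raw) != len(FIELDS):
            continue  # skip blank or malformed lines
        rec = dict(zip(FIELDS, raw))
        if rec["sensor"] != sensor_name:
            continue  # discard magnetometer and gyroscope values
        rows.append({"x": float(rec["x"]), "y": float(rec["y"]),
                     "z": float(rec["z"]), "screen": rec["screen"]})
    return rows


def write_rows(rows, out):
    """Write the extracted rows as CSV for the prediction step."""
    writer = csv.DictWriter(out, fieldnames=["x", "y", "z", "screen"])
    writer.writeheader()
    writer.writerows(rows)


log = """0;ACCELEROMETER;0.1;9.8;0.2;QuizActivityButtons
1;GYROSCOPE;0.0;0.0;0.0;QuizActivityButtons
2;MAGNETOMETER;30.0;5.0;1.0;VideoScreen
3;ACCELEROMETER;1.5;9.0;0.4;VideoScreen
"""
rows = extract_accelerometer(log)
buffer = io.StringIO()
write_rows(rows, buffer)
print(buffer.getvalue())
```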

In phase three, the chosen HAR classifiers are trained on three HAR datasets: UniMiB SHAR (Micucci et al., 2017), DatasetUniba, and DatasetUniba Resampled, a resampled version of DatasetUniba. In the fourth phase, the HAR model predicts, for each user, the activities performed, using the data prepared in the previous phases. The fifth phase compares the results obtained on college students over 18 with those obtained on high school students under 18, to analyze the differences between the two groups of participants.
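To relate predictions to the questionnaire, each predicted window must be linked to the screen on which it was recorded. The sketch below shows one way to do this; assigning each window to its majority screen and the function names are assumptions, and "VideoScreen" is a hypothetical screen name.

```python
from collections import Counter, defaultdict


def window_screen(sample_screens):
    """Assign a window to the questionnaire screen that covers most of its samples."""
    return Counter(sample_screens).most_common(1)[0][0]


def abnormal_by_screen(predictions, normal="Sitting"):
    """predictions: iterable of (screen, predicted_activity), one pair per window.
    Returns, for each screen, how often each non-sitting activity was predicted."""
    per_screen = defaultdict(Counter)
    for screen, activity in predictions:
        if activity != normal:
            per_screen[screen][activity] += 1
    return {screen: dict(counts) for screen, counts in per_screen.items()}


preds = [
    (window_screen(["VideoScreen"] * 3 + ["QuizActivityButtons"]), "Sitting"),
    ("VideoScreen", "Jumping"),
    ("QuizActivityButtons", "Falling"),
    ("QuizActivityButtons", "Falling"),
    ("QuizActivityButtons", "Running"),
]
print(abnormal_by_screen(preds))
# {'VideoScreen': {'Jumping': 1}, 'QuizActivityButtons': {'Falling': 2, 'Running': 1}}
```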

6 RESULTS

Our research methodology focused on evaluating a variety of combinations of Deep Learning models and datasets to determine the most effective configuration (Cannarile et al., 2022; Donato Impedovo et al., 2019, 2023). The performance of each combination was evaluated to complete the offline training phase. Table 1 shows the results: accuracy and F1-score, each averaged over users.

These values are averages over the users of each dataset, obtained with the Leave One Out technique: each user is, in turn, excluded from training and used only for testing. Because the model never sees the test user's data during training, this evaluates the system's performance under more realistic conditions and better reflects how it generalizes to new users.
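The Leave One Out scheme, applied at the level of users, can be sketched as follows; this is a generic illustration, not the authors' code, and the function name is hypothetical. Each fold trains on every other user's windows and tests on the held-out user's windows.

```python
def leave_one_user_out(user_ids):
    """Yield (held_out_user, train_indices, test_indices), one fold per user."""
    for user in sorted(set(user_ids)):
        train = [i for i, u in enumerate(user_ids) if u != user]
        test = [i for i, u in enumerate(user_ids) if u == user]
        yield user, train, test


users = ["u1", "u1", "u2", "u3", "u3"]  # user of each window
for user, train, test in leave_one_user_out(users):
    print(user, train, test)
# u1 [2, 3, 4] [0, 1]
# u2 [0, 1, 3, 4] [2]
# u3 [0, 1, 2] [3, 4]
```

Splitting by user rather than by window prevents near-duplicate windows from the same person appearing in both training and test sets.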

Table 1: Average accuracy and F1-score results.

| Model | Dataset | Average Accuracy | Average F1-Score |
|---|---|---|---|
| CNN | DatasetUniba | 0.915 | 0.901 |
| CNN | UniMiB SHAR | 0.998 | 0.996 |
| CNN | DatasetUniba Resampled | 0.659 | 0.459 |
| LSTM | DatasetUniba | 0.865 | 0.819 |
| Bi-LSTM | DatasetUniba | 0.891 | 0.855 |
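The averages in Table 1 are computed per user and then averaged over users. The sketch below shows that computation with standard-library code; the paper does not say which F1 variant it averages, so the use of macro-F1 (the unweighted mean of per-class F1 scores) is an assumption, and the function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of windows whose predicted activity matches the true one."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed F1 variant)."""
    pairs = list(zip(y_true, y_pred))
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def average_over_users(per_user):
    """per_user maps each held-out user to (y_true, y_pred) from their test fold."""
    accs = [accuracy(t, p) for t, p in per_user.values()]
    f1s = [macro_f1(t, p) for t, p in per_user.values()]
    return sum(accs) / len(accs), sum(f1s) / len(f1s)


per_user = {
    "u1": (["Sitting", "Sitting", "Falling", "Falling"],
           ["Sitting", "Sitting", "Falling", "Sitting"]),
    "u2": (["Walking", "Walking"], ["Walking", "Walking"]),
}
acc, f1 = average_over_users(per_user)
print(round(acc, 3), round(f1, 3))  # 0.875 0.867
```

Averaging per user gives each participant equal weight regardless of how many windows they contributed.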

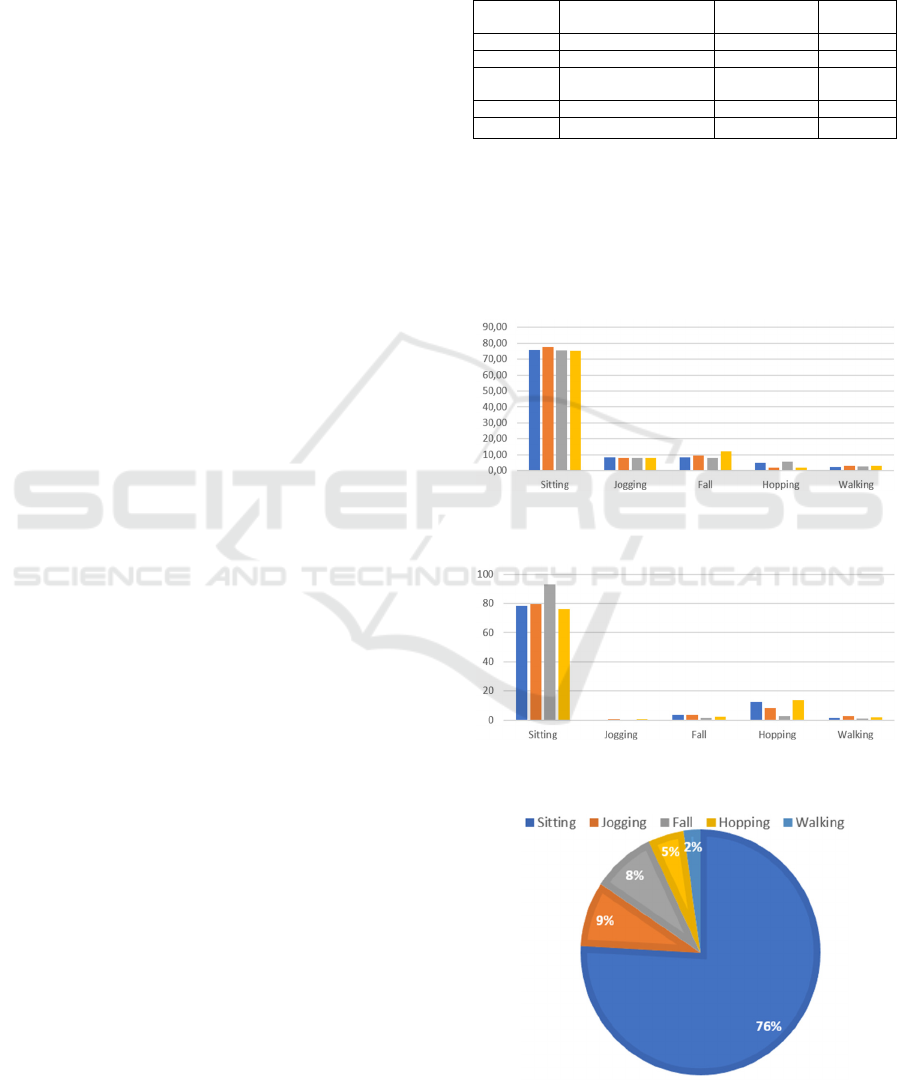

Below is a comparison of the results obtained by high school students under 18 and college students over 18 (Figures 1-4).

Legend (Figures 1-2): Bullying (blue), Victim of Bullying (orange), Cyberbully (gray), Victim of Cyberbullying (yellow).

Figure 1: Recognized activities for high school students

under 18 years.

Figure 2: Recognized activities of college students over 18

years by category.

Figure 3: Activity percentages of high school students

under 18 years.


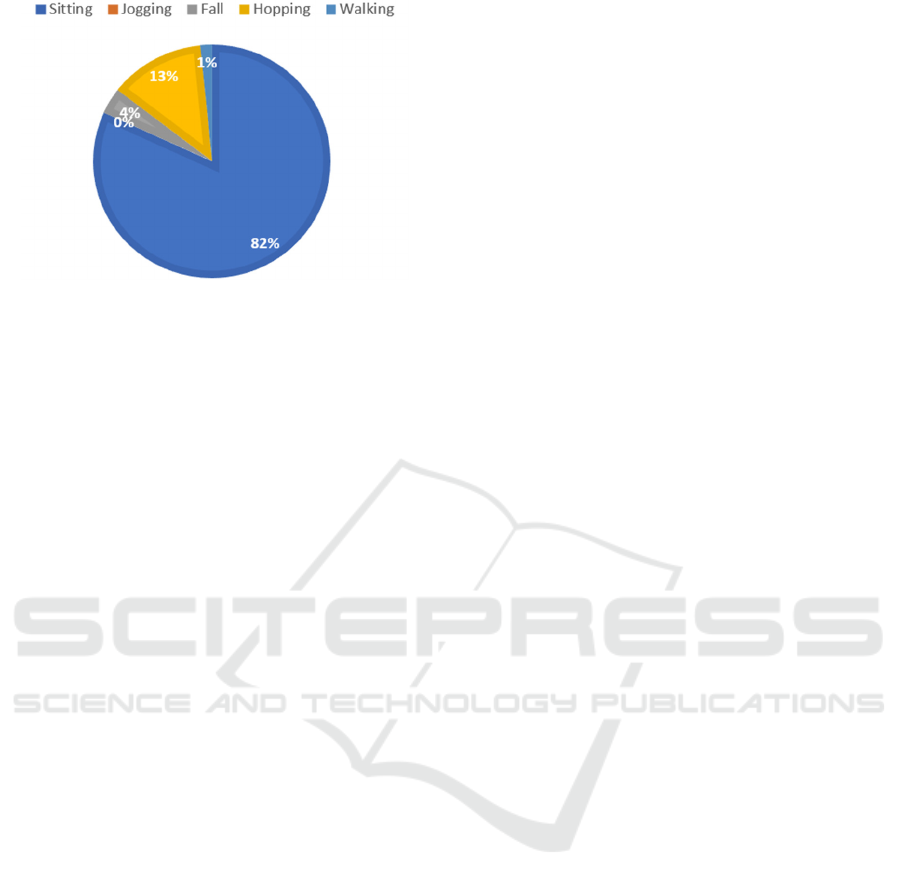

Figure 4: Activity percentages of college students over 18 years.

From the results obtained, some psychological and scientific observations can be made:

• Cyberbullies under 18 years old showed abnormalities in the initial part of the questionnaire, with jumping, falling, and running activities while watching videos and answering questions about the feelings they experienced. Cyberbullies over 18 years, on the other hand, were "quieter": they spent a higher percentage of their time sitting than the other categories;

• Among high school bullies under 18 years, running and falling were the prevalent abnormal activities, whereas among those over 18 years the prevalent abnormal activity was jumping. These abnormal activities were recorded mainly during the QuizActivityButtons/DomestionsVideos questionnaire phases;

• Victims of bullying under 18 years showed more pronounced abnormal phases during QuizActivityButtons, with falling and running prevailing. For those over 18 years, the abnormal phase was mainly characterized by jumping;

• Victims of cyberbullying under 18 years, like the bullies, recorded falling and running as their main abnormal activities, particularly during the QuizActivityButton/DomestionsVideo phase. Victims of cyberbullying over 18 years, on the other hand, were, together with the cyberbullies, the only users in our experiment who showed abnormalities in the early part of the questionnaire.

In general, frequent abnormal running and falling

activities were observed in the sample of high school

students under 18 years, while hopping activities

were less frequent and walking activities were almost

nonexistent. Among college students over 18 years,

on the other hand, the predominant abnormal activity

recorded was hopping, while the other activities,

except sitting, were infrequent.

In addition, the questionnaire could be reduced to only the questions relevant to the category to be identified. In both experiments, for bullies and victims of bullying, the QuizActivityButton activities would suffice, so the initial part, in which videos and related questions are presented, could be removed.

For bullies under 18 years, the questions most discriminating for the running activity concerned:

• Bullying: the frequency with which bullying was carried out (stealing items, discriminating against someone because of disability/skin color/sexual orientation, pushing);

• Cyberbullying: the environment in which it occurs, how many incidents have been suffered, and how many text messages containing insults have been received.

For the fall activity, the questions concerned:

• Habits: rules imposed by parents and parental control of internet use, and whether cyberbullying has been discussed in the classroom.

• Bullying: whether you intervene when you see bullying in action, and how often you have been bullied, by type (physical, verbal, behavioral, and threats).

Victims of bullying under 18 years recorded abnormal fall activities when answering questions concerning:

• Bullying: whether one has been subjected to threats, exclusion, physical violence, false rumors about oneself, discrimination because of one's skin/culture, theft, or damage to one's belongings.

• Cyberbullying: the frequency with which you have been bullied through messages, media, websites, and e-mails; the frequency with which you have been ignored online; whether someone has impersonated you, spread false news about you, or impersonated you on social media or with your address book contacts.

For cyberbullies under 18 years, questions on the following topics were found to be discriminating for the fall activity:


• Bullying: whether one has been subjected to

threats, exclusion, physical violence, false

rumors about oneself, discrimination because of

one's skin/culture, theft, or damage to one's

belongings.

For the running activity, the questions covered:

• Cyberbullying: the frequency of acts of cyberbullying such as threatening and abusive texts/e-mails, sending violent, embarrassing, or intimate media, threatening online, making silent or intimidating phone calls, and spreading false rumors about other people.

For the jumping activity, the questions covered:

• Emotions: the emotions felt when watching some videos, and general questions.

For cyberbullies over 18 years, the relevant questions concerned:

• Cyberbullying: specifically, the frequency with which acts of bullying were carried out through messages, media, phone calls, and e-mails.

Victims of cyberbullying under 18 years recorded abnormal running activities for the following questions:

• Cyberbullying: the frequency of acts of cyberbullying such as threatening and abusive texts/e-mails, sending violent, embarrassing, or intimate media, threatening online, making silent or intimidating phone calls, and spreading false rumors about other people.

In addition, abnormal fall activities were recorded for questions concerning:

• Bullying: whether threats, exclusion, physical violence, false rumors about oneself, discrimination because of one's skin/culture, or theft of or damage to one's belongings were experienced.

• Cyberbullying: how often you have been bullied via messages, media, websites, and e-mail; how often you have been ignored online; whether someone has impersonated you, spread false rumors about you, or impersonated you on social media or with your address book contacts.

Victims of cyberbullying over 18 years, on the other hand, recorded abnormal activities during the initial part of the questionnaire, concerning the emotions felt when watching some videos and the general questions.

7 CONCLUSIONS

In this work, Human Activity Recognition (HAR) models are used to examine the behavior exhibited by high school students under 18 years old and college students over 18 years old while completing a questionnaire. The distinguishing feature of this research is the use of the accelerometers built into smartphones, which allowed us to record users' behaviors in detail.

This technique allowed us to analyze individuals' actions, distinguishing between different movements. All behaviors beyond simply sitting are considered "abnormal"; the focus is on more dynamic or unusual activities that could provide significant information about students' emotional state or active participation while filling out the questionnaire.

By using HAR models in this context, we could better understand the behavioral patterns formed during the interaction with the questionnaire and find useful cues for evaluating the responses.

In this research, we used accelerometer technology in an innovative way, which improved our analysis capabilities and offered new perspectives for interpreting and monitoring student behavior in scientific research contexts.

We can conclude that these experiments achieved their goals, namely:

• Appropriate Deep Learning (DL) models were used to perform HAR on data generated by smartphone sensors, after comparing different models and datasets to achieve optimal accuracy. The best performance was obtained with a Convolutional Neural Network (CNN) on the DatasetUniba and UniMiB SHAR datasets;

• The results obtained in this experiment on an experimental group of high school students under 18 years were compared with those obtained from college students over 18 years.

Participants under 18 did much more running and falling, but less jumping, than their over-18-year-old counterparts, for whom jumping was one of the most frequent unusual activities.

Both groups spent most of their time sitting: 76 percent for participants under 18 years and 82 percent for those over 18 years. The two groups spent the same amount of time on the other activities, except jumping, an unusual behavior commonly seen in the under-18-year-old participants but never in the over-18-year-old participants.

This shows that the under-18-year-olds are considerably more active than their over-18-year-old peers. This difference in behavior could be because younger people are more sensitive to, and concerned about, bullying, possibly owing to their greater involvement in the complex social dynamics that foster it.

Future work could implement more advanced cybersecurity measures, such as data encryption. Modern techniques can ensure greater protection of sensitive information, helping preserve privacy and prevent harmful phenomena such as online bullying. In this context, cybersecurity becomes essential to ensure a safer digital environment, particularly considering how actively young people are involved in online activities (Carrera et al., 2022b; Castro, Impedovo, et al., 2023; V. Dentamaro et al., 2021; Vincenzo Dentamaro et al., 2018; Galantucci et al., 2021).

REFERENCES

Amara, M. I., Akkouche, A., Boutellaa, E., & Tayakout, H.

(2021). A Smartphone Application for Fall Detection

Using Accelerometer and ConvLSTM Network. 2020

2nd International Workshop on Human-Centric Smart

Environments for Health and Well-Being (IHSH), 92–

96. doi: 10.1109/IHSH51661.2021.9378743

Angelillo, M. T., Balducci, F., Impedovo, D., Pirlo, G., &

Vessio, G. (2019). Attentional Pattern Classification for

Automatic Dementia Detection. IEEE Access, 7,

57706–57716. doi: 10.1109/ACCESS.2019.2913685

Angelillo, M. T., Impedovo, D., Pirlo, G., & Vessio, G. (2019). Performance-Driven Handwriting Task Selection for Parkinson’s Disease Classification. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 11946 LNAI, 281–293. doi: 10.1007/978-3-030-35166-3_20

Cannarile, A., Dentamaro, V., Galantucci, S., Iannacone, A., Impedovo, D., & Pirlo, G. (2022). Comparing Deep Learning and Shallow Learning Techniques for API Calls Malware Prediction: A Study. Applied Sciences, 12(3), 1645. doi: 10.3390/APP12031645

Carrera, F., Dentamaro, V., Galantucci, S., Iannacone, A., Impedovo, D., & Pirlo, G. (2022a). Combining Unsupervised Approaches for Near Real-Time Network Traffic Anomaly Detection. Applied Sciences, 12(3), 1759. doi: 10.3390/APP12031759

Carrera, F., Dentamaro, V., Galantucci, S., Iannacone, A., Impedovo, D., & Pirlo, G. (2022b). Combining Unsupervised Approaches for Near Real-Time Network Traffic Anomaly Detection. Applied Sciences, 12(3), 1759. doi: 10.3390/APP12031759

Casilari, E., Santoyo-Ramón, J. A., & Cano-García, J. M. (2017). UMAFall: A Multisensor Dataset for the Research on Automatic Fall Detection. Procedia Computer Science, 110, 32–39. doi: 10.1016/j.procs.2017.06.110

Castro, F., Dentamaro, V., Gattulli, V., & Impedovo, D. (2023). Fall Detection with LSTM and Attention Mechanism. WAMWB 2023 Advances of Mobile and Wearable Biometrics 2023.

Castro, F., Impedovo, D., & Pirlo, G. (2023). A Medical Image Encryption Scheme for Secure Fingerprint-Based Authenticated Transmission. Applied Sciences, 13(10), 6099. doi: 10.3390/APP13106099

Cheriet, M., Dentamaro, V., Hamdan, M., Impedovo, D., & Pirlo, G. (2023). Multi-speed transformer network for neurodegenerative disease assessment and activity recognition. Computer Methods and Programs in Biomedicine, 230. doi: 10.1016/J.CMPB.2023.107344

Dehkordi, M. B., Zaraki, A., & Setchi, R. (2020). Feature extraction and feature selection in smartphone-based activity recognition. Procedia Computer Science, 176, 2655–2664. doi: 10.1016/j.procs.2020.09.301

Dentamaro, V., Convertini, V. N., Galantucci, S., Giglio, P., Palmisano, T., & Pirlo, G. (2021). Ensemble consensus: An unsupervised algorithm for anomaly detection in network security data. CEUR Workshop Proceedings, 2940, 309–318. Retrieved from https://ricerca.uniba.it/handle/11586/377597

Dentamaro, Vincenzo, Impedovo, D., & Pirlo, G. (2018). LICIC: Less Important Components for Imbalanced Multiclass Classification. Information, 9(12), 317. doi: 10.3390/INFO9120317

Dentamaro, Vincenzo, Impedovo, D., & Pirlo, G. (2020). Fall detection by human pose estimation and kinematic theory. Proceedings - International Conference on Pattern Recognition, 2328–2335. doi: 10.1109/ICPR48806.2021.9413331

Galantucci, S., Impedovo, D., & Pirlo, G. (2021). One time user key: A user-based secret sharing XOR-ed model for multiple user cryptography in distributed systems. IEEE Access, 9, 148521–148534. doi: 10.1109/ACCESS.2021.3124637

Gattulli, V., Impedovo, D., Pirlo, G., & Sarcinella, L. (2023). Human Activity Recognition for the Identification of Bullying and Cyberbullying Using Smartphone Sensors. Electronics, 12(2), 261. doi: 10.3390/ELECTRONICS12020261

Gattulli, V., Impedovo, D., Pirlo, G., & Semeraro, G. (2023). Handwriting Task-Selection based on the Analysis of Patterns in Classification Results on Alzheimer Dataset. IEEE SDS’23: Data Science Techniques for Datasets on Mental and Neurodegenerative Disorders.

NeroPRAI 2024 - Workshop on Medical Condition Assessment Using Pattern Recognition: Progress in Neurodegenerative Disease and Beyond


Gattulli, V., Impedovo, D., & Sarcinella, L. (2023). Anomaly Detection using smartphone Sensors for a Bullying Detection. WAITT 2023 - 1st Workshop on Artificial Intelligence for Technology Transfer.

Impedovo, D., Pirlo, G., Sarcinella, L., Stasolla, E., & Trullo, C. A. (2012). Analysis of stability in static signatures using cosine similarity. Proceedings - International Workshop on Frontiers in Handwriting Recognition, IWFHR, 231–235. doi: 10.1109/ICFHR.2012.180

Impedovo, Donato, Dentamaro, V., Abbattista, G., Gattulli, V., & Pirlo, G. (2021). A comparative study of shallow learning and deep transfer learning techniques for accurate fingerprints vitality detection. Pattern Recognition Letters, 151, 11–18. doi: 10.1016/J.PATREC.2021.07.025

Impedovo, Donato, Dentamaro, V., Pirlo, G., & Sarcinella, L. (2019). TrafficWave: Generative deep learning architecture for vehicular traffic flow prediction. Applied Sciences (Switzerland), 9(24). doi: 10.3390/APP9245504

Impedovo, Donato, Pirlo, G., & Semeraro, G. (2023). Next Activity Prediction: An Application of Shallow Learning Techniques Against Deep Learning Over the BPI Challenge 2020. IEEE Access, 11, 117947–117953. doi: 10.1109/ACCESS.2023.3325738

Micucci, D., Mobilio, M., & Napoletano, P. (2017). UniMiB SHAR: A dataset for human activity recognition using acceleration data from smartphones. Applied Sciences (Switzerland), 7(10). doi: 10.3390/app7101101

Minarno, A. E., Kusuma, W. A., Wibowo, H., Akbi, D. R., & Jawas, N. (2020). Single Triaxial Accelerometer-Gyroscope Classification for Human Activity Recognition. 2020 8th International Conference on Information and Communication Technology, ICoICT 2020. doi: 10.1109/ICOICT49345.2020.9166329

Minh Dang, L., Min, K., Wang, H., Jalil Piran, M., Hee Lee, C., & Moon, H. (2020a). Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognition, 108, 107561. doi: 10.1016/J.PATCOG.2020.107561

Minh Dang, L., Min, K., Wang, H., Jalil Piran, M., Hee Lee, C., & Moon, H. (2020b). Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognition, 108. doi: 10.1016/j.patcog.2020.107561

Straczkiewicz, M., Huang, E. J., & Onnela, J. P. (2023). A “one-size-fits-most” walking recognition method for smartphones, smartwatches, and wearable accelerometers. npj Digital Medicine, 6(1), 1–16. doi: 10.1038/s41746-022-00745-z

Sucerquia, A., López, J. D., & Vargas-Bonilla, J. F. (2017). SisFall: A fall and movement dataset. Sensors (Switzerland), 17(1). doi: 10.3390/s17010198

Thomas, B., Lu, M. L., Jha, R., & Bertrand, J. (2022). Machine Learning for Detection and Risk Assessment of Lifting Action. IEEE Transactions on Human-Machine Systems, 52(6), 1196–1204. doi: 10.1109/THMS.2022.3212666

Twyman, K., Saylor, C., Taylor, L. A., & Comeaux, C. (2010). Comparing children and adolescents engaged in cyberbullying to matched peers. Cyberpsychology, Behavior and Social Networking, 13(2), 195–199. doi: 10.1089/CYBER.2009.0137

Ye, L., Wang, P., Wang, L., Ferdinando, H., Seppänen, T., & Alasaarela, E. (2018). A Combined Motion-Audio School Bullying Detection Algorithm. International Journal of Pattern Recognition and Artificial Intelligence, 32(12). doi: 10.1142/S0218001418500465

Zhang, Y., Wang, L., Chen, H., Tian, A., Zhou, S., & Guo, Y. (2022). IF-ConvTransformer: A Framework for Human Activity Recognition Using IMU Fusion and ConvTransformer. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 6(2), 88. doi: 10.1145/3534584

Zihan, Z., & Zhanfeng, Z. (2019). Campus bullying detection based on motion recognition and speech emotion recognition. Journal of Physics: Conference Series, 1314(1). doi: 10.1088/1742-6596/1314/1/012150

APPENDIX

The research of Dr. Vincenzo Gattulli was funded by PON Ricerca e Innovazione 2014-2020 FSE REACT-EU, Azione IV.4 "Dottorati e contratti di ricerca su tematiche dell'innovazione" (Doctorates and research contracts on innovation topics), CUP H99J21010060001.

This work is supported by the Italian Ministry of Education, University, and Research within the PRIN2017 BullyBuster project, "A framework for bullying and cyberbullying action detection by computer vision and artificial intelligence methods and algorithms".
