A Type of EEG-ITNet for Motor Imagery EEG Signal Classification

Maryam Khoshkhooy Titkanlou¹ᵃ, Ehsan Monjezi²ᵇ and Roman Mouček³ᶜ

¹ Department of Computer Science and Engineering, University of West Bohemia, 306 14 Plzen, Czech Republic

² Department of Electrical Engineering, Shahid Chamran University, Golestan Blvd. Ahvaz, Iran

³ Department of Computer Science and Engineering, University of West Bohemia, 306 14 Plzen, Czech Republic

Keywords: Electroencephalography, Brain-Computer Interface, ERD/ERS, Deep Neural Network, Motor Imagery,

Inception Module.

Abstract: The brain-computer interface (BCI) is an emerging technology that has the potential to revolutionize the

world, with numerous applications ranging from healthcare to human augmentation. Electroencephalogram

(EEG) motor imagery (MI) is among the most common BCI paradigms used extensively in healthcare

applications such as rehabilitation. Recently, neural networks, particularly deep architectures, have received substantial attention for analyzing EEG signals in BCI applications. EEG-ITNet is a classification algorithm proposed to improve the classification accuracy of motor imagery EEG signals in a noninvasive brain-computer interface. The resulting EEG-ITNet classification accuracy and precision were 75.45% and 76.43%, respectively, using a motor imagery dataset of 29 healthy subjects, including males aged 21-26 and females aged 18-23. Three different methods have also been implemented to augment this dataset.

ᵃ https://orcid.org/0000-0002-4139-6836

ᵇ https://orcid.org/0009-0008-4395-787X

ᶜ https://orcid.org/0000-0002-4665-8946

1 INTRODUCTION

A brain-computer interface (BCI) system is a control pathway that links the neural activity of the human brain to the outside world through brain signal recording and decoding techniques. BCI applications have developed in two main directions. The first studies brain activity to build a feedforward pathway that controls external devices without a rehabilitation goal. The second uses closed-loop BCI systems during neurorehabilitation, where the feedback loop plays an essential role in training neural plasticity or regulating brain activity (Lebedev & Nicolelis, 2017). The methods for recording brain activity are categorized into invasive and noninvasive groups.

While some noninvasive technologies offer

superior spatial resolution, such as fMRI, EEG has

proved to be the most popular method for its ability

to directly measure neural activity, cost-

effectiveness, and portability for clinical applications

(Wolpaw et al., 2002). EEG signals have been used to

control assistive and rehabilitation devices (Meng et

al., 2016).

Motor imagery is the mental rehearsal of a movement without actual physical movement. The contralateral

sensorimotor cortical EEG signals in the alpha band

(8–12 Hz) and beta band (13–30 Hz) (Mu Li & Bao-

Liang Lu, 2009) exhibit a decrease in amplitude

during unimanual preparation and execution of a

movement. This phenomenon is known as event-

related desynchronization (ERD), which represents a

decrease in the amplitude of the activated cortical

EEG signals. Simultaneously, there is an increase in

the amplitude of the ipsilateral sensorimotor cortical

EEG signals in the alpha and beta frequency bands,

which is called event-related synchronization (ERS)

and represents an increase in the amplitude of the

corresponding cortical signals in the resting state (Liu

et al., 2019). The ERD/ERS observed in the μ and β

frequency bands of the brain motor-sensory cortices

indicates the activation or deactivation state of the

central region of the brain.
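The ERD/ERS description above can be made concrete with a small sketch. It assumes one common convention for quantifying ERD/ERS, the relative band-power change (A - R) / R × 100 between a reference interval R and an activity interval A, where negative values indicate ERD and positive values indicate ERS. The function names and the sample amplitudes are hypothetical and are not taken from the dataset used in this paper.

```python
def erd_ers_percent(reference_power, activity_power):
    """Relative band-power change in percent: (A - R) / R * 100.

    Negative values indicate ERD (power decrease), positive values ERS.
    """
    if reference_power <= 0:
        raise ValueError("reference_power must be positive")
    return (activity_power - reference_power) / reference_power * 100.0


def mean_power(samples):
    """Mean squared amplitude of an already band-pass-filtered segment."""
    return sum(x * x for x in samples) / len(samples)


# Hypothetical band-filtered amplitudes (arbitrary units), not real data.
rest = [2.0, -2.0, 2.0, -2.0]      # reference interval
imagery = [1.0, -1.0, 1.0, -1.0]   # activity interval

change = erd_ers_percent(mean_power(rest), mean_power(imagery))
print(change)  # -75.0, i.e. a 75% power decrease (ERD)
```

In practice the segments would first be band-pass filtered to the alpha or beta band and averaged over trials; those steps are omitted here.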

Deep neural networks, which can extract complex

Khoshkhooy Titkanlou, M., Monjezi, E. and Mouček, R.

A Type of EEG-ITNet for Motor Imagery EEG Signal Classification.

DOI: 10.5220/0012569400003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 2, pages 257-262

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


features from raw data automatically, have received

significant attention in motor imagery signal

classification (LeCun et al., 2015; Altaheri et al., 2023). Researchers have proposed convolutional neural network (CNN) models with various architectures to classify motor imagery signals. For example, Schirrmeister et al. (Schirrmeister et al., 2017) studied

deep and shallow convolutional neural networks

called DeepConvNet and ShallowConvNet, among

the first models used to decode motor imagery tasks

from raw EEG signals. Later, Lawhern et al.

(Lawhern et al., 2018) introduced EEGNet, a more

compact and efficient CNN architecture with fewer

parameters than ShallowConvNet and DeepConvNet. Dai

et al. (Dai et al., 2020) proposed a hybrid-scale CNN

architecture with a data augmentation method to

improve the accuracy of EEG motor imagery

classification. Borra et al. (Borra et al., 2020)

proposed a lightweight and interpretable shallow

CNN (Sinc-ShallowNet) architecture for EEG motor

decoding. Santamaría-Vázquez et al. (Santamaria-

Vazquez et al., 2020) studied a novel CNN, called

EEG-Inception, that improves the accuracy and

calibration time of assistive ERP-based BCIs, but the

network lacked interpretability. Mirzabagherian et al. (Mirzabagherian et al., 2023) developed the Temporal-Spatial Convolutional Residual Network (TSCR-Net) and the Temporal-Spatial Convolutional Iterative Residual Network (TSCIR-Net), which are based on convolutional layers with temporal-spatial, separable, and depthwise structures. These models decoded distinctive characteristics of different movement efforts and obtained higher classification accuracy than previous deep neural networks. Amin et al.

(Amin et al., 2022) introduced an attention inception

approach that combines CNN and LSTM networks

for motor imagery task classification, which extracts

spatial features by CNN and temporal features by

LSTM and then merges all features into a fully

connected layer. However, recurrent neural networks (RNNs, e.g., LSTM) are less common in this field because of exploding/vanishing gradients and limited memory. In response to the slow training of RNNs, Ingolfsson et al. (Ingolfsson et al., 2020) and Musallam et al. (Altuwaijri & Muhammad, 2022) included a temporal convolutional network (TCN) in their architectures and reported better results for the classification of motor imagery signals. TCNs have shown promising outcomes for temporal analysis of EEG time series with faster

computation. In this paper, we introduce EEG-ITNet

(Salami et al., 2022), which can extract rich spectral,

spatial, and temporal information from multi-channel

EEG signals with less complexity by using inception

modules and causal convolutions with dilation.

The subsequent sections of this paper are arranged as follows. Section 2 describes the material and methods used in this research. Section 3 presents our results. Section 4 concludes and provides some suggestions for future work.

2 MATERIAL AND METHODS

2.1 Data Acquisition

The entire EEG measurement scenario consists of four cycles, with a resting and a stimulation phase in each cycle. Every cycle begins with the subject

resting for one minute, during which they are required

to sit motionless and at complete rest. If their eyes are

open, this includes minimizing their blinking.

Following the resting phase, the participant moves

their wrists with either their left or right hand for two

minutes during the stimulation phase. Following a

five-second break, the subject completes the assigned

task during the stimulation phase. A green LED

positioned in front of the subject alerts them to the

phase shift. The subject completes the task and enters

the stimulation phase when the LED is on, and the

subject is in the resting phase when the LED is off.

The phases are then alternated this way, and each of

them is repeated three times. This means that each

cycle lasts exactly 9 minutes. The cycles differ from

each other by the task performed by the subject in the

stimulation phase, which is optionally combined with

alternating open or closed eyes.
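The timing of one cycle can be checked with a short sketch. It assumes that the stated cycle length of exactly 9 minutes counts only the one-minute resting and two-minute stimulation phases, each repeated three times, and that the five-second break is not added on top. The constant and function names are illustrative.

```python
REST_S = 60     # one-minute resting phase
STIM_S = 120    # two-minute stimulation phase
REPEATS = 3     # each phase is repeated three times per cycle
CYCLES = 4      # four cycles in the whole scenario


def cycle_schedule(rest_s=REST_S, stim_s=STIM_S, repeats=REPEATS):
    """Return (label, start_s, end_s) tuples for one cycle."""
    schedule, t = [], 0
    for _ in range(repeats):
        for label, duration in (("rest", rest_s), ("stimulation", stim_s)):
            schedule.append((label, t, t + duration))
            t += duration
    return schedule


schedule = cycle_schedule()
cycle_minutes = schedule[-1][2] / 60
print(cycle_minutes)            # 9.0 minutes per cycle
print(cycle_minutes * CYCLES)   # 36.0 minutes, excluding pauses between cycles
```

The phase boundaries in `schedule` correspond to the moments at which the green LED would switch on or off.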

The dataset was gathered at the University of

West Bohemia in the Czech Republic. Twenty-nine healthy people were measured (men aged 21-26 and women aged 18-23) (Kodera et al., 2023). Each subject

received instructions on completing the measurement

before it began, and the procedure for each cycle was

specified before it began. In the meantime, the nurse

placed an EEG cap with Ag/AgCl electrodes on the

subject's head using a 10–20 system. Afterward, she

attached two electrodes to the subject's hand and one

ground electrode below the elbow because the

distance to the bone is smallest there. Lastly, a

reference electrode of the EEG cap was attached to

the earlobe. Fz, Cz, Pz, F3, F4, P3, P4, C3 and C4

were used for the measurement. Following

preparation, the subject was put in a dark, soundproof chamber to prevent background noise from the

surroundings during measurement.

The EEG data were captured using the BrainAmp

DC amplifier in conjunction with BrainVision

recorder software. For EMG recording, the

microcontroller STM32F429I-DISCO board and

HEALTHINF 2024 - 17th International Conference on Health Informatics


the EKG/EMG shield from Olimex company were

employed to generate synchronization pulses and

implement the stimulation scenario, as illustrated in

Figure 1.

Figure 1: Microcontroller board STM32F429I-DISCO

and EKG/EMG shield from company Olimex.

2.2 Our Proposed Method

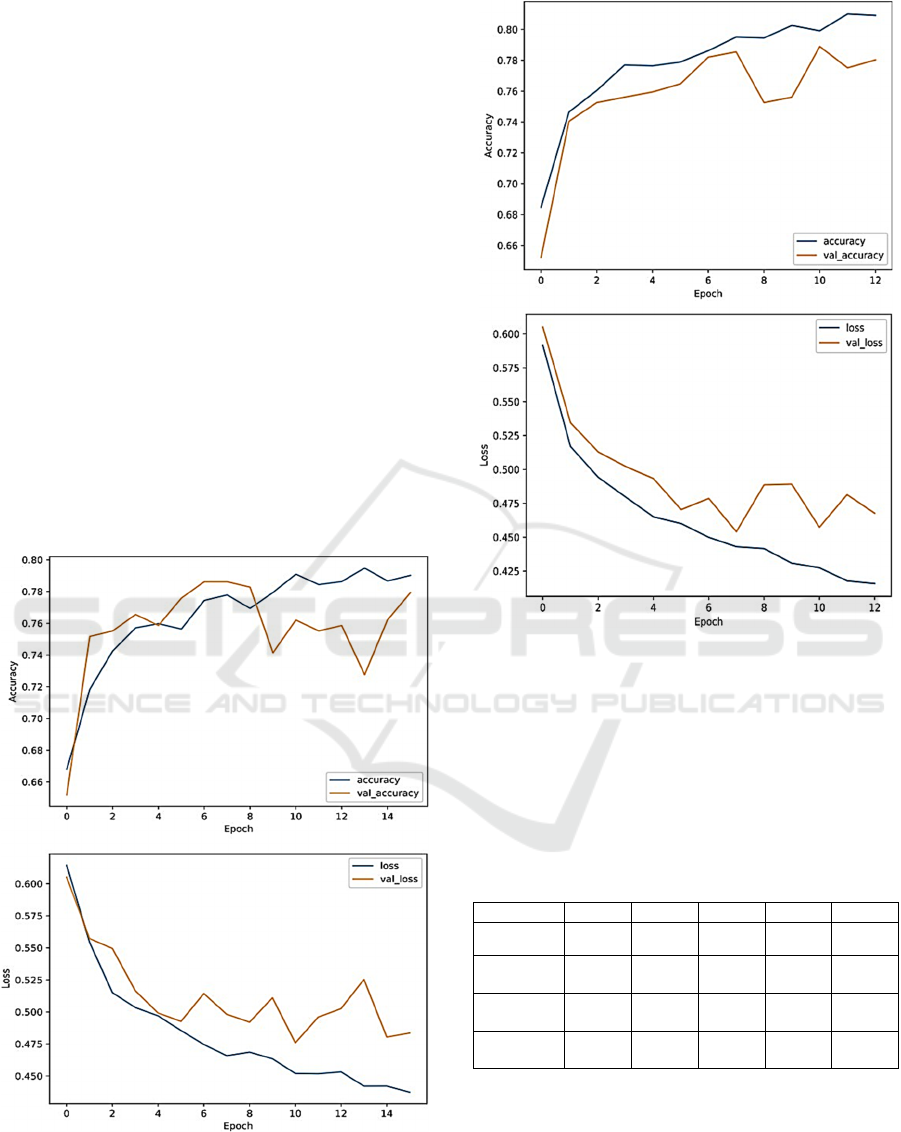

The four primary blocks that comprise the general

architecture of EEG-ITNet are the inception block,

temporal convolution (TC) block, dimension

reduction (DR) block, and classification block, as

shown in Figure 2.

Figure 2: Details of different blocks in EEG-ITNet

architecture.

• Inception Block

Four parallel sets of layers are used to begin the

learning process, each comprising a 2D

convolutional layer along the temporal axis serving

as frequency filtering, followed by a 2D depthwise

convolutional layer functioning as spatial filtering.

Adding inception modules with different

convolutional kernel sizes eliminates the need for a

fixed-length kernel (Santamaria-Vazquez et al.,

2020). It allows the network to learn filters that

represent various frequency sub-bands. In order to

prevent overfitting and enable the network to learn

more complex nonlinear spatial information, this

block ends with a nonlinear activation function and

dropout.

• Temporal Convolution (TC) Block

The discriminative temporal features are extracted

using the TCN architecture, which considers the

time series history, following the extraction of

sources in various informative frequency sub-bands.

The TC block comprises multiple residual blocks,

each composed of depthwise causal convolutional

layers with leading zero padding, followed by

activation function and dropout. Using depthwise causal convolution followed by batch normalization, instead of weight normalization, makes this model more robust and better performing than the

conventional TCN. This block is also preceded by an

average pooling layer, which reduces the data

dimensions and prevents overfitting.

• Dimension Reduction (DR) Block

The output of the TC block fundamentally contains

temporal information retrieved from sources with

various frequency spectrums. To control the number

of final features used for the classification task, we

combined these temporal features using a 1 × 1

convolutional layer. This block also includes an

average pooling layer at the end, an activation

function, and a dropout layer to reduce the tensor

dimension further.

• Classification Block

The last component of the EEG-ITNet has a fully

connected layer with a "softmax" activation function

that comes after a flatten layer. Even though we

call it the classification layer, it is easily adjustable

based on the problem set and desired output.
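The causal convolution with dilation and leading zero padding used in the TC block can be illustrated in plain Python. This is a minimal sketch of the operation itself, not the Keras implementation used in this work; the function names, kernel values, and dilation rates are hypothetical, and batch normalization, activation, dropout, and residual connections are omitted.

```python
def causal_dilated_conv1d(signal, kernel, dilation=1):
    """Causal 1D convolution with leading zero padding.

    Output sample t depends only on signal[t], signal[t - dilation], ...,
    so the output has the input's length and never looks ahead in time.
    """
    pad = (len(kernel) - 1) * dilation
    padded = [0.0] * pad + list(signal)
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            # kernel[0] weights the current sample,
            # kernel[k] the sample k * dilation steps in the past
            acc += w * padded[pad + t - k * dilation]
        out.append(acc)
    return out


def depthwise_causal_conv(channels, kernels, dilation=1):
    """Depthwise variant: each channel is filtered by its own kernel."""
    return [causal_dilated_conv1d(c, k, dilation)
            for c, k in zip(channels, kernels)]


def receptive_field(kernel_size, dilations):
    """Samples seen by a stack with one convolution per listed dilation."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


print(causal_dilated_conv1d([1, 2, 3, 4, 5], [1, 1], dilation=2))
# [1.0, 2.0, 4.0, 6.0, 8.0]
print(receptive_field(4, [1, 2, 4, 8]))  # 46
```

Doubling the dilation from layer to layer lets the receptive field grow quickly with depth, which is how a TCN can cover a long time-series history with few parameters.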

We first used 10-fold cross-validation with 100

epochs. Before classification, 20% of the samples

were separated for testing purposes, and the


remaining 80% was utilized for training. The

learning rate value was 0.001. The model was

implemented in Keras.
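One possible reading of this evaluation protocol is a 20% hold-out test set followed by 10-fold cross-validation on the remaining 80%. The sketch below illustrates that reading on sample indices only; the helper names, the random seed, and the sample count of 1000 are assumptions for illustration, and the model training itself is left out.

```python
import random

EPOCHS = 100           # epochs per fold, as stated in the text
LEARNING_RATE = 0.001  # learning rate, as stated in the text


def holdout_split(n_samples, test_fraction=0.2, seed=0):
    """Shuffle sample indices and split them into (train, test) lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = int(round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]


def k_fold(indices, k=10):
    """Yield (train_idx, val_idx) pairs; fold sizes differ by at most one."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


train_idx, test_idx = holdout_split(1000)   # 1000 is a hypothetical count
folds = list(k_fold(train_idx, k=10))
print(len(train_idx), len(test_idx), len(folds))  # 800 200 10
```

Each `(train, val)` pair would be used to fit and validate one model instance, and the held-out `test_idx` would be touched only for the final evaluation.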

It is worth mentioning that, because our dataset is not large, we implemented three data augmentation approaches (noise injection (NI), conditional variational autoencoder (cVAE), and conditional GAN with Wasserstein loss function and gradient penalty (cWGAN-GP)) to expand the training set with newly created artificial samples.
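Of the three approaches, noise injection is the simplest to sketch. The example below assumes additive zero-mean Gaussian noise whose standard deviation is a fixed fraction of each channel's standard deviation; the noise model, the `relative_std` value, and the function names are assumptions, since the exact settings are not given in the text.

```python
import random
import statistics


def inject_noise(trial, relative_std=0.05, rng=None):
    """Return a noisy copy of one trial (a list of channels of samples).

    Gaussian noise is scaled per channel to relative_std times that
    channel's standard deviation. relative_std is a hypothetical setting.
    """
    rng = rng or random.Random()
    noisy = []
    for channel in trial:
        sigma = relative_std * statistics.pstdev(channel)
        noisy.append([x + rng.gauss(0.0, sigma) for x in channel])
    return noisy


def augment(trials, labels, copies=1, relative_std=0.05, seed=0):
    """Append `copies` noisy versions of every trial; labels are reused."""
    rng = random.Random(seed)
    out_trials, out_labels = list(trials), list(labels)
    for _ in range(copies):
        for trial, label in zip(trials, labels):
            out_trials.append(inject_noise(trial, relative_std, rng))
            out_labels.append(label)
    return out_trials, out_labels


# Hypothetical two-channel trial, not real EEG data.
trials = [[[0.0, 1.0, 2.0, 3.0], [1.0, 1.0, 1.0, 1.0]]]
labels = [1]
aug_trials, aug_labels = augment(trials, labels, copies=2)
print(len(aug_trials), aug_labels)  # 3 [1, 1, 1]
```

Only the training portion of the data should be augmented this way, so that the test set contains measured samples only.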

3 RESULTS

Table 1 summarises the classification accuracy,

precision, recall, F1 score, and AUC for the EEG-

ITNet model and the combination of this model with

the augmentation methods implemented in this

research. The accuracy of EEG-ITNet and NI EEG-

ITNet is 75.45 % and 75.86 %, respectively. The

accuracy and loss graphs of these two models are

shown in Figure 3 and Figure 4.

Figure 3: Accuracy and loss curve of EEG-ITNet.

Figure 4: Accuracy and loss curve of NI EEG-ITNet.

Based on the results, only the noise injection augmentation method improves the accuracy of motor imagery classification, from 75.45% to 75.86% (0.41 percentage points). This gain is smaller than the reported variation of the accuracy (±1.43 and ±1.21), so data augmentation does not meaningfully affect the result for this dataset. Unfortunately, there is no English paper related to this dataset with which to compare our results.

Table 1: Results of four models used in this study.

| Method | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | AUC |
|---|---|---|---|---|---|
| EEG-ITNet | 75.45 ± 1.43 | 76.43 ± 0.96 | 75.50 ± 1.40 | 75.23 ± 1.58 | 0.755 ± 0.01 |
| NI EEG-ITNet | 75.86 ± 1.21 | 76.31 ± 1.06 | 75.89 ± 1.21 | 75.77 ± 1.27 | 0.759 ± 0.01 |
| cVAE EEG-ITNet | 74.25 ± 1.28 | 74.54 ± 1.29 | 74.28 ± 1.28 | 74.18 ± 1.29 | 0.743 ± 0.01 |
| cWGAN-GP EEG-ITNet | 73.18 ± 2.04 | 74.42 ± 1.17 | 73.25 ± 2.01 | 72.84 ± 2.43 | 0.732 ± 0.02 |
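For reference, the metrics reported in Table 1 can be computed from predicted and true labels as sketched below. The sketch assumes binary labels and assumes that the ± values summarize the spread over folds as a standard deviation, which the text does not state explicitly; the helper names and the example labels are hypothetical.

```python
import statistics


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / len(pairs),
            "precision": precision, "recall": recall, "f1": f1}


def mean_std(values):
    """Mean and sample standard deviation, e.g. over cross-validation folds."""
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical labels for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```

Applying `mean_std` to the per-fold values of one metric would give an entry in the "value ± spread" form used in the table.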

4 CONCLUSIONS

The suggested method has proven to be suitable for

classifying hand movements in EEG. Our proposed

architecture includes four blocks: inception block,


temporal convolution (TC) block, dimension

reduction (DR) block, and classification block. The

dataset used in this paper consists of recordings from 29 healthy people who move their hands with open or closed eyes. However, one limitation of this dataset is that it is not large enough for EEG-ITNet to prove its advantages, so data augmentation could be an appropriate technique to solve this problem. After adjusting the hyperparameters, our model's accuracy and precision were 75.45% and 76.43%, respectively.

Furthermore, the best result with data augmentation

was related to the noise injection method, NI EEG-

ITNet, and its accuracy and precision were 75.86%

and 76.31%, respectively.

Since few models have been implemented on this

dataset, other researchers can try other deep networks

or combine our proposed method with other

algorithms to improve accuracy. The dataset is available in (Kodera et al., 2023).

ACKNOWLEDGEMENTS

This work was supported by the university-specific research project SGS-2022-016 Advanced Methods of Data Processing and Analysis.

REFERENCES

Lebedev, M. A., & Nicolelis, M. A. L. (2017). Brain-Machine Interfaces: From Basic Science to Neuroprostheses and Neurorehabilitation. Physiological Reviews, 97(2), 767–837. https://doi.org/10.1152/physrev.00027.2016.

Wolpaw, J., Birbaumer, N., McFarland, D., Pfurtscheller, G., & Vaughan, T. (2002). Brain-Computer Interfaces for Communication and Control. Clinical Neurophysiology, 113, 767–791.

Meng, J., Zhang, S., Bekyo, A., Olsoe, J., Baxter, B., & He, B. (2016). Noninvasive Electroencephalogram Based Control of a Robotic Arm for Reach and Grasp Tasks. Scientific Reports, 6(1), 38565. https://doi.org/10.1038/srep38565.

Mu Li & Bao-Liang Lu. (2009). Emotion classification based on gamma-band EEG. 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 1223–1226. https://doi.org/10.1109/IEMBS.2009.5334139.

Liu, Y.-H., Lin, L.-F., Chou, C.-W., Chang, Y., Hsiao, Y.-T., & Hsu, W.-C. (2019). Analysis of Electroencephalography Event-Related Desynchronisation and Synchronisation Induced by Lower-Limb Stepping Motor Imagery. Journal of Medical and Biological Engineering, 39(1), 54–69. https://doi.org/10.1007/s40846-018-0379-9.

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444. https://doi.org/10.1038/nature14539.

Altaheri, H., Muhammad, G., Alsulaiman, M., Amin, S. U., Altuwaijri, G. A., Abdul, W., Bencherif, M. A., & Faisal, M. (2023). Deep learning techniques for classification of electroencephalogram (EEG) motor imagery (MI) signals: A review. Neural Computing and Applications, 35(20), 14681–14722. https://doi.org/10.1007/s00521-021-06352-5.

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., Hutter, F., Burgard, W., & Ball, T. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Human Brain Mapping, 38(11), 5391–5420. https://doi.org/10.1002/hbm.23730.

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., & Lance, B. J. (2018). EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. Journal of Neural Engineering, 15(5), 056013.

Dai, G., Zhou, J., Huang, J., & Wang, N. (2020). HS-CNN: A CNN with hybrid convolution scale for EEG motor imagery classification. Journal of Neural Engineering, 17(1), 016025. https://doi.org/10.1088/1741-2552/ab405f.

Borra, D., Fantozzi, S., & Magosso, E. (2020). Interpretable and lightweight convolutional neural network for EEG decoding: Application to movement execution and imagination. Neural Networks, 129, 55–74. https://doi.org/10.1016/j.neunet.2020.05.032.

Santamaria-Vazquez, E., Martinez-Cagigal, V., Vaquerizo-Villar, F., & Hornero, R. (2020). EEG-Inception: A Novel Deep Convolutional Neural Network for Assistive ERP-Based Brain-Computer Interfaces. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 28(12), 2773–2782. https://doi.org/10.1109/TNSRE.2020.3048106.

Mirzabagherian, H., Menhaj, M. B., Suratgar, A. A., Talebi, N., Abbasi Sardari, M. R., & Sajedin, A. (2023). Temporal-spatial convolutional residual network for decoding attempted movement related EEG signals of subjects with spinal cord injury. Computers in Biology and Medicine, 164, 107159. https://doi.org/10.1016/j.compbiomed.2023.107159.

Amin, S. U., Altaheri, H., Muhammad, G., Abdul, W., & Alsulaiman, M. (2022). Attention-Inception and Long-Short-Term Memory-Based Electroencephalography Classification for Motor Imagery Tasks in Rehabilitation. IEEE Transactions on Industrial Informatics, 18(8), 5412–5421. https://doi.org/10.1109/TII.2021.3132340.

Ingolfsson, T. M., Hersche, M., Wang, X., Kobayashi, N., Cavigelli, L., & Benini, L. (2020). EEG-TCNet: An Accurate Temporal Convolutional Network for Embedded Motor-Imagery Brain-Machine Interfaces. https://doi.org/10.48550/ARXIV.2006.00622.

Altuwaijri, G. A., & Muhammad, G. (2022). Electroencephalogram-Based Motor Imagery Signals Classification Using a Multi-Branch Convolutional Neural Network Model with Attention Blocks. Bioengineering, 9(7), 323. https://doi.org/10.3390/bioengineering9070323.

Salami, A., Andreu-Perez, J., & Gillmeister, H. (2022). EEG-ITNet: An Explainable Inception Temporal Convolutional Network for Motor Imagery Classification. IEEE Access, 10, 36672–36685. https://doi.org/10.1109/ACCESS.2022.3161489.

Kodera, J., Mouček, R., Moutner, P., Mochura, P., & Saleh, J. Y. (2023). EEG motor imagery [dataset]. Zenodo. https://doi.org/10.5281/ZENODO.7893847.
