A Generative Model for Guided Thermal Image Super-Resolution

Patricia L. Suárez¹ᵃ and Angel D. Sappa¹,²ᵇ

¹ Escuela Superior Politécnica del Litoral, ESPOL, Facultad de Ingeniería en Electricidad y Computación, CIDIS, Campus Gustavo Galindo Km. 30.5 Vía Perimetral, P.O. Box 09-01-5863, Guayaquil, Ecuador

² Computer Vision Center, Edifici O, Campus UAB, 08193 Bellaterra, Barcelona, Spain

Keywords: Thermal Super-Resolution, HSV Color Space, Luminance-Driven Bicubic Image.

Abstract: This paper presents a novel approach for thermal super-resolution based on a fusion prior that combines the low-resolution thermal image with the V (brightness) channel of the corresponding visible spectrum image. To build this prior, the original RGB image is converted to HSV and its brightness channel is extracted; the low-resolution thermal image is upsampled to the ×8 target scale by bicubic interpolation; and the two are blended into a bicubic-brightness image. This luminance-bicubic fusion is used as the input image to help the training process. With this fused image, a cycle-consistent generative adversarial network produces the high-resolution thermal images. Experimental evaluations show that the proposed approach improves spatial resolution and pixel intensity levels compared to other state-of-the-art techniques, making it a promising method for obtaining high-resolution thermal images.

1 INTRODUCTION

Super-resolution is the process of upgrading the resolution and quality of an image to obtain a higher-resolution version, reconstructing missing high-frequency information and improving image clarity. It is a vital technique in image processing and computer vision, as it addresses the challenge of obtaining sharper, more detailed images from low-resolution sources. This involves the use of advanced algorithms and mathematical methods to infer high-resolution detail from the available low-resolution data (e.g., (Mehri et al., 2021b), (Mehri et al., 2021a)). Lately, a variant of super-resolution has emerged that takes advantage of the guidance of an additional high-resolution image, known as a "guide image." This guide image has similar content to the low-resolution image but is acquired at a higher resolution. This additional information helps improve the quality and accuracy of the super-resolved output by providing more detailed and accurate information about the scene. The guided super-resolution strategy has been used to improve depth-map super-resolution, where a high-resolution RGB image is used as the guiding image (e.g., (Liu et al., 2017), (Guo et al., 2018)).

a https://orcid.org/0000-0002-3684-0656

b https://orcid.org/0000-0003-2468-0031

Thermal imaging technology has become relevant in various fields, including surveillance, medical imaging, and industrial applications, due to its ability to capture temperature variations and reveal hidden patterns in the infrared spectrum. However, the intrinsic limitations of thermal sensors often result in low-resolution images, which can make accurate analysis and decision-making difficult. Therefore, thermal super-resolution has emerged as a very popular technique to improve the quality, visibility, and accuracy of thermal images (e.g., (Rivadeneira et al., 2023), (Mandanici et al., 2019), (Prajapati et al., 2021), (Zhang et al., 2021)). By increasing resolution and reducing noise, thermal image super-resolution improves object clarity and detail in thermal images, benefiting various applications. Objects appear sharper and more defined, which aids tasks such as surveillance and object identification. Additionally, thermal image super-resolution provides more detailed information, increasing the accuracy of thermal image analysis. The guidance strategy mentioned above has also been used in the thermal image super-resolution problem, where a high-resolution visible spectrum image is considered as guidance for the super-resolution process (e.g., (Almasri and Debeir, 2019), (Almasri and Debeir, 2018), (Gupta and Mitra, 2021), (Gupta and Mitra, 2020)).

Suárez, P. and Sappa, A.
A Generative Model for Guided Thermal Image Super-Resolution.
DOI: 10.5220/0012469400003660
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 3: VISAPP, pages 765-771
ISBN: 978-989-758-679-8; ISSN: 2184-4321
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

In the present work, the problem of guided super-resolution is addressed using an adversarial generative model, which produces improved synthetic (super-resolved) images from a fused input image (the HSV color space brightness channel combined with the low-resolution thermal image).

The performance and quality of the synthesized super-resolved thermal images are evaluated through extensive experiments and comparisons with state-of-the-art methods. The manuscript is structured as follows. Section 2 describes related state-of-the-art approaches. Section 3 introduces the proposed approach. Next, Section 4 shows experimental results and a comparison with state-of-the-art approaches. Both quantitative and qualitative results are provided, showing the improvements achieved with the proposed approach. Finally, conclusions and future work are given in Section 5.

2 RELATED WORK

Single image super-resolution (SISR) is a challenging task in image processing that aims to reconstruct high-resolution images from low-resolution images. In recent years, there has been growing interest in using prior information to guide the reconstruction process and improve the quality of the output images. This section reviews some of the recent advances in SISR using prior information. The approach presented in (Chudasama et al., 2020) proposes a CNN named TherISuRNet, which employs a progressive enhancement method that incorporates asymmetric residual learning, ensuring computational efficiency for a variety of enhancement factors, including ×2, ×3, and ×4. This architecture is specifically designed to include separate modules for extracting low- and high-frequency features, complemented by upsampling blocks. A generative approach is proposed in (Deepak et al., 2021), where an architecture for single-image super-resolution based on a Generative Adversarial Network (GAN) is presented. This model has been specifically designed to improve the images of thermal cameras, and it achieves ease of implementation and computational efficiency. To speed up training and reduce the number of trainable parameters, the number of residual blocks is reduced to just 5. Additionally, the batch normalization layers are removed from the generator and discriminator networks to eliminate redundancy in the model architecture. Reflective padding is incorporated before each convolutional layer to ensure that feature map sizes are preserved at the edges.

In (Thuan et al., 2022), a method is proposed to increase the resolution of thermal images using edge features of the corresponding high-resolution visible images. This method is based on a Generative Adversarial Network (GAN) that uses residual dense blocks to perform super-resolution. The dataset used in this method contains raw image data of indoor scenes captured by low-resolution thermal cameras. Another paper, presented by (Zhang et al., 2022), introduces a novel network called Heat-Transfer-Inspired Network (HTI-Net) for SR image reconstruction, drawing inspiration from heat transfer principles. Their approach redesigns the ResNet network using a second-order mixed-difference equation derived from finite difference theory, allowing for enhanced feature reuse by integrating multiple information sources. Furthermore, a pixel value flow equation (PVFE) in the image domain is developed, based on the thermal conduction differential equation (TCDE) in the thermal field, to tap into deep potential feature information. In (Wang et al., 2022), the authors introduce a deep-learning-based approach for fusing infrared and visible images, focusing on multimodal super-resolution reconstruction. Their method uses an encoder-decoder architecture to achieve this. Their approach was found to yield various imaging modalities and demonstrated superior performance in both visual effects and objective assessments. Additionally, the authors conduct a systematic review of the applications, methodologies, datasets, and evaluation metrics relevant to infrared (IR) image super-resolution. They categorize IR image super-resolution methods into two groups: traditional methods and deep learning-based methods. Traditional methods are further divided into three subcategories: frequency domain-based, dictionary-based, and other miscellaneous methods.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications


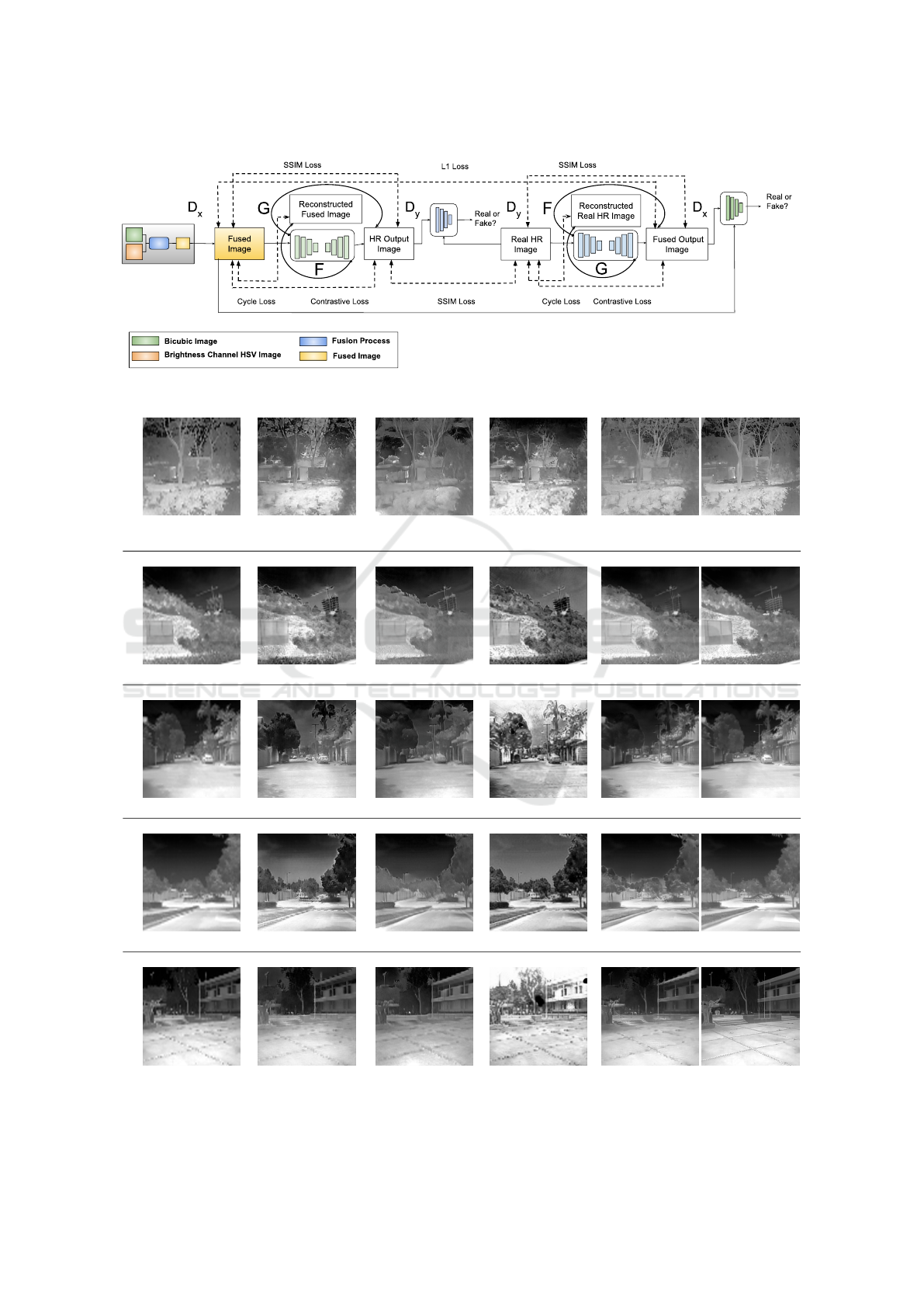

Figure 1: CycleGAN proposed architecture.

Figure 2: Results from the state-of-the-art and the proposed approaches. Columns, left to right: Bicubic, CycleGAN (Zhu et al., 2017), CUT (Park et al., 2020), FastCUT (Park et al., 2020), Ours, GT.


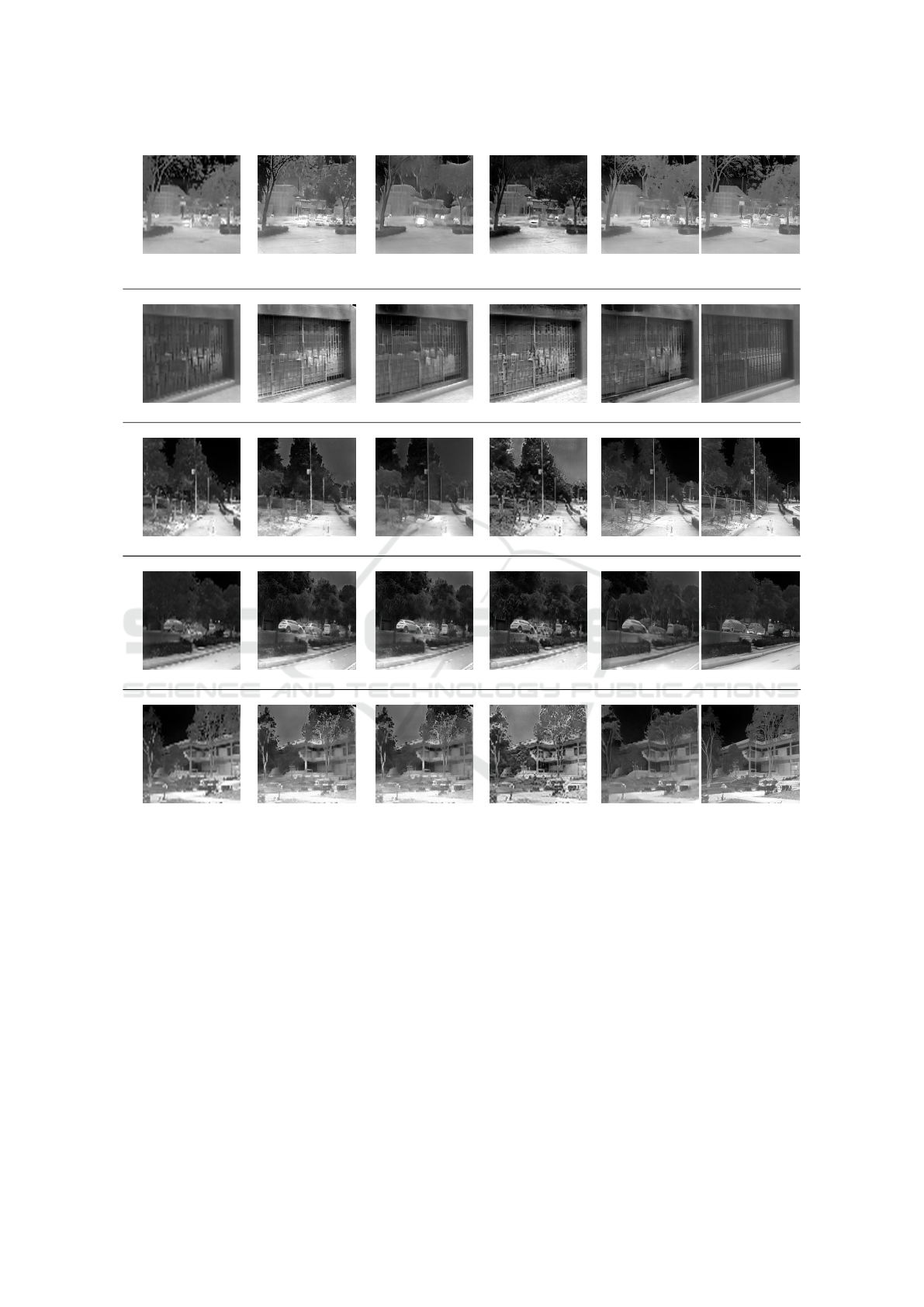

Figure 3: Results from the state-of-the-art and the proposed approaches using the Thermal Stereo testing dataset. Columns, left to right: Bicubic, CycleGAN (Zhu et al., 2017), CUT (Park et al., 2020), FastCUT (Park et al., 2020), Ours, GT.

3 PROPOSED STRATEGY FOR THERMAL-LIKE SUPER-RESOLUTION

This section presents the strategy applied to achieve ×8 super-resolution of thermal images. It relies on a CycleGAN-based architecture (Zhu et al., 2017), trained with unpaired images, that is designed to transform low-resolution thermal images into detailed, high-resolution counterparts.

The preprocessing phase is a crucial step in this process. It fuses the bicubic image, obtained by bicubic interpolation of the initial low-resolution thermal image, with the brightness channel of the HSV (Hue, Saturation, Value) color space. To this end, the RGB image is first converted to the HSV color space and its brightness channel is extracted; blending this channel with the bicubic image yields the luminance-bicubic fused image used as input to the architecture. Integrating these two data sources gives the model a more comprehensive representation of the image content: the bicubic image carries the thermal information, while the brightness channel, which contains information about the overall intensity of the image, helps the model preserve structural details and textures during the super-resolution process.
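The preprocessing described above can be sketched in a few lines of NumPy. The text does not specify how the bicubic image and the brightness channel are blended, so the weighted average and its weight `alpha` are assumptions, as are all function names. The bicubic step uses the Keys cubic kernel with a = -0.5, a common choice that the paper does not confirm.

```python
import numpy as np

def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 is an assumed, common choice)."""
    t = np.abs(t)
    near = (a + 2) * t**3 - (a + 3) * t**2 + 1
    far = a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return np.where(t <= 1, near, np.where(t < 2, far, 0.0))

def interp_matrix(n_in, scale):
    """Return an (n_in * scale, n_in) matrix that upsamples a 1-D signal by cubic interpolation."""
    n_out = n_in * scale
    x = (np.arange(n_out) + 0.5) / scale - 0.5   # output pixel centers in input coordinates
    x0 = np.floor(x).astype(int)
    weights = np.zeros((n_out, n_in))
    rows = np.arange(n_out)
    for k in range(-1, 3):                        # the four nearest input samples
        cols = np.clip(x0 + k, 0, n_in - 1)       # replicate border pixels
        np.add.at(weights, (rows, cols), cubic_kernel(x - (x0 + k)))
    return weights

def bicubic_upsample(img, scale):
    """Separable bicubic upsampling of a 2-D array."""
    return interp_matrix(img.shape[0], scale) @ img @ interp_matrix(img.shape[1], scale).T

def hsv_value(rgb):
    """V (brightness) channel of HSV: the per-pixel maximum of R, G and B."""
    return rgb.max(axis=-1)

def fuse(thermal_lr, rgb_hr, scale=8, alpha=0.5):
    """Blend the bicubic thermal image with the brightness channel (assumed weighted average)."""
    thermal_up = bicubic_upsample(thermal_lr, scale)
    brightness = hsv_value(rgb_hr)
    if thermal_up.shape != brightness.shape:
        raise ValueError("upsampled thermal image and RGB guide must have the same size")
    return alpha * thermal_up + (1 - alpha) * brightness
```

For example, with a 60×80 low-resolution thermal array and a 480×640×3 RGB guide, both scaled to [0, 1], `fuse(thermal_lr, rgb_hr)` returns a 480×640 single-channel array that would serve as the generator input.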

Additionally, a residual layer is implemented as a skip connection at the end of the network (Zhang et al., 2018). This residual layer plays a pivotal role in fine-tuning the super-resolved output, ensuring that the essential features and characteristics of the original image are faithfully preserved. The architecture of the proposed approach is presented in Fig. 1.
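As a rough illustration of such a skip connection, the sketch below adds the network input back to the output of the generator body, so the body only has to learn a correction. The function names and the toy body are hypothetical; the actual layer configuration is the one shown in Fig. 1.

```python
import numpy as np

def with_global_skip(body, x):
    """Add the input back to the output of `body` (a global residual connection)."""
    return x + body(x)

def toy_body(x):
    """Hypothetical stand-in for the generator body: predicts a small correction."""
    return 0.1 * (x.mean() - x)

x = np.array([[0.2, 0.4], [0.6, 0.8]])
y = with_global_skip(toy_body, x)
```

If the body outputs zeros, the input passes through unchanged, which is why this design tends to preserve the content of the input image.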

For the training process, inspired by (Suárez and Sappa, 2023), multiple loss functions are employed, each serving a specific purpose in guiding the model toward the desired outcome. The first is the L1 loss (Mean Absolute Error), which measures the absolute pixel-wise difference between the super-resolved and high-resolution images. Minimizing it encourages accurate pixel-level reconstruction. This L1 loss is defined as:

$$\mathcal{L}_{L1\,regularized}(G) = \frac{1}{N} \sum_{i=1}^{N} |G(x_i) - y_i| + \beta \cdot R(G), \qquad (1)$$

where $G(x_i)$ and $y_i$ are the pixel values at the same position $i$ in the super-resolved and high-resolution images, respectively. $N$ is the total number of pixels in the images, and $\sum_{i=1}^{N}$ represents the sum over all the pixels. $|G(x_i) - y_i|$ is the absolute difference between the corresponding pixel values in the two images. $\beta$ is the regularization hyperparameter that controls the importance of regularization in the loss function. $R(G)$ represents the regularization term, which may take the form of an L1 parameter norm applied to the model.
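A minimal NumPy sketch of Eq. (1) follows, assuming that R(G) is the L1 norm of the model parameters (one of the forms the text allows). The function name, the `params` list and the default value of `beta` are illustrative, not taken from the paper.

```python
import numpy as np

def l1_regularized_loss(sr, hr, params, beta=1e-4):
    """Mean absolute error between sr and hr plus beta times the L1 norm of the parameters."""
    data_term = np.mean(np.abs(sr - hr))                 # (1/N) * sum_i |G(x_i) - y_i|
    reg_term = sum(np.sum(np.abs(p)) for p in params)    # R(G), assumed L1 parameter norm
    return data_term + beta * reg_term
```

Here `sr` stands for the generator output G(x), `hr` for the high-resolution reference y, and `params` for a list of weight arrays.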

The contrastive loss has also been included. This loss function introduces a contrastive comparison between the discriminator's assessment of real and generated images. It is designed to encourage similar data points to be closer in the learned feature space while pushing dissimilar data points farther apart. This loss is defined as:

$$\mathcal{L}_{contrastive}(\hat{Y}, Y) = \sum_{l=1}^{L} \sum_{s=1}^{S_l} \ell_{contr}(\hat{v}_l^s, v_l^s, \bar{v}_l^s), \qquad (2)$$

where $v_l \in \mathbb{R}^{S_l \times D_l}$ represents a tensor whose shape depends on the model architecture. The variable $S_l$ denotes the number of spatial locations of the tensor. Consequently, the notation $v_l^s \in \mathbb{R}^{D_l}$ refers to the $D_l$-dimensional feature vector at the $s$-th spatial location. Additionally, $\bar{v}_l^s \in \mathbb{R}^{(S_l - 1) \times D_l}$ represents the collection of feature vectors at all other spatial locations except the $s$-th one.
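The text does not spell out the per-location term ℓ_contr. The sketch below assumes the cross-entropy (InfoNCE) form used in CUT (Park et al., 2020), where the feature at location s of the generated image should match the feature at the same location of the reference and differ from those at all other locations. The temperature `tau`, the L2 normalization and the function names are assumptions.

```python
import numpy as np

def layer_contrastive(v_hat, v, tau=0.07):
    """Sum over locations s of l_contr(v_hat[s], v[s], v[other locations]) for one layer."""
    # L2-normalize so that dot products are cosine similarities (assumption)
    v_hat = v_hat / np.linalg.norm(v_hat, axis=1, keepdims=True)
    v = v / np.linalg.norm(v, axis=1, keepdims=True)
    logits = v_hat @ v.T / tau                      # (S, S): query s against every key
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.trace(log_prob)                      # positives lie on the diagonal

def contrastive_loss(feats_hat, feats, tau=0.07):
    """Eq. (2): sum of the per-layer terms over the L selected layers."""
    return sum(layer_contrastive(a, b, tau) for a, b in zip(feats_hat, feats))
```

Each element of `feats_hat` and `feats` is an array of shape (S_l, D_l), one per selected layer. The loss is small when corresponding locations have similar features and grows when they are mismatched.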

Additionally, the SSIM (Structural Similarity Index) loss is used to evaluate the structural similarity between the super-resolved and high-resolution images. It focuses on preserving the structural details and textures in the output. The SSIM loss is defined as:

$$\mathcal{L}_{SSIM} = 1 - \mathrm{SSIM}(s, r), \qquad (3)$$

where $s$ and $r$ are the high-resolution image obtained from the model and the original high-resolution image, respectively, and $\mathrm{SSIM}(\cdot, \cdot)$ is the SSIM function, which measures the similarity between the two images. Finally, the cycle consistency loss helps to ensure the cyclic consistency of the model's transformations. It measures the difference between the original high-resolution image and the result of mapping it to the other domain and back, that is, of applying the two generators in succession. This cycle consistency loss is defined as:

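$$\mathcal{L}_{cycle}(F, G) = \mathbb{E}_{s \sim p_{data}(s)}\left[\|s - G(F(s))\|_1\right] + \mathbb{E}_{r \sim p_{data}(r)}\left[\|r - F(G(r))\|_1\right], \qquad (4)$$

where $s$ and $r$ are, as in Eq. (3), the high-resolution image obtained from the model and the original high-resolution image, respectively, and $F$ and $G$ are the two generators of the CycleGAN architecture, which map between the two image domains in opposite directions.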
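Eqs. (3) and (4) can be illustrated with the NumPy sketch below. Two simplifications are assumed: SSIM is computed from global image statistics rather than the usual sliding local window, with the customary constants 0.01 and 0.03, and the expectations in Eq. (4) are replaced by averages over small batches. Function names and the callables `F` and `G` are illustrative.

```python
import numpy as np

def global_ssim(s, r, data_range=1.0):
    """SSIM from global statistics (simplification: no sliding window)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_s, mu_r = s.mean(), r.mean()
    var_s, var_r = s.var(), r.var()
    cov = ((s - mu_s) * (r - mu_r)).mean()
    num = (2 * mu_s * mu_r + c1) * (2 * cov + c2)
    den = (mu_s**2 + mu_r**2 + c1) * (var_s + var_r + c2)
    return num / den

def ssim_loss(s, r, data_range=1.0):
    """Eq. (3): 1 - SSIM(s, r)."""
    return 1.0 - global_ssim(s, r, data_range)

def cycle_loss(F, G, s_batch, r_batch):
    """Eq. (4): batch averages of ||s - G(F(s))||_1 and ||r - F(G(r))||_1."""
    term_s = np.mean([np.abs(s - G(F(s))).sum() for s in s_batch])
    term_r = np.mean([np.abs(r - F(G(r))).sum() for r in r_batch])
    return term_s + term_r
```

When `F` and `G` are exact inverses of each other the cycle loss is zero, and `ssim_loss` is zero when the two images are identical.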

These loss functions collectively guide the model during training, steering it toward high-quality, super-resolved thermal images that faithfully capture the details and characteristics of the original content. The $\lambda$ values adjust the balance between these objectives throughout the training process. The resulting loss is:

$$\mathcal{L}_{final} = \lambda_1 \mathcal{L}_{cont}(G, H, X) + \lambda_2 \mathcal{L}_{cont}(G, H, Y) + \lambda_3 \mathcal{L}_{SSIM}(x, y) + \lambda_4 \mathcal{L}_{cycle}(G, F) + \lambda_5 \mathcal{L}_{L1\,regularized}(F, G), \qquad (5)$$

where $\mathcal{L}_{cont}$ is the contrastive loss of Eq. (2) and the weights $\lambda_i$ are empirically defined.
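The weighted combination in Eq. (5) can be sketched as follows. The dictionary keys and the numeric weights in the usage example are placeholders chosen for illustration; the paper only states that the λ values are set empirically.

```python
def final_loss(terms, weights):
    """Weighted sum of the individual loss terms, as in Eq. (5)."""
    if set(terms) != set(weights):
        raise ValueError("every loss term needs exactly one weight")
    return sum(weights[name] * terms[name] for name in terms)

# Placeholder values, not taken from the paper
terms = {"cont_x": 1.0, "cont_y": 2.0, "ssim": 0.5, "cycle": 4.0, "l1": 0.25}
weights = {"cont_x": 1.0, "cont_y": 1.0, "ssim": 2.0, "cycle": 10.0, "l1": 4.0}
total = final_loss(terms, weights)
```

Keeping the weights in one dictionary makes it straightforward to rebalance the objectives between training runs.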

4 EXPERIMENTAL RESULTS

4.1 Datasets

In this research, our Thermal Stereo dataset has been used. It contains pairs of high-quality visible and thermal images captured under daylight conditions. The dataset consists of 200 image pairs taken with Basler and TAU2 cameras, which have different resolutions. The Elastix algorithm (Klein et al., 2009) has been employed to register these pairs of thermal and visible images, all of which have a resolution of 640×480 pixels.

For the experiments, the dataset has been split into three subsets: training, validation, and test image pairs, comprising 160, 30, and 10 image pairs, respectively. It is important to note that the high-resolution visible spectrum images are used as references to enhance the low-resolution (LR) thermal images and generate high-resolution thermal images. No additional noise has been introduced to the downscaled images during this process.

4.2 Results

This section presents the experimental results of the proposed super-resolution approach, which is based on RGB images and their corresponding low-resolution thermal images. To compare the results, other state-of-the-art generative models with similar structural characteristics have been selected. All models have been trained with the same dataset, to guarantee an evaluation that follows the same parameters and execution environment. To evaluate the performance of these methods, the widely used metrics SSIM (Structural Similarity Index) and PSNR (Peak Signal-to-Noise Ratio) have been used.
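For reference, PSNR follows the standard definition 10·log10(MAX² / MSE), in decibels. The sketch below assumes images scaled to [0, 1], so `data_range` defaults to 1.0; the function name is illustrative and the evaluation code used for the experiments is not described in the text.

```python
import numpy as np

def psnr(sr, hr, data_range=1.0):
    """Peak Signal-to-Noise Ratio in dB between a super-resolved and a reference image."""
    mse = np.mean((sr - hr) ** 2)
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(data_range**2 / mse)
```

Higher values indicate a reconstruction closer to the reference, and the value is averaged over the test images when reporting results.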

The quantitative results of this comparison are summarized in Table 1, which shows the values obtained with the proposed super-resolution method in comparison with other similar generative approaches. In all cases, improvements can be observed in both metrics. Qualitatively, the images super-resolved with our strategy have greater contour detail than the images produced by the other generative models. Figures 2 and 3 show the results obtained with each super-resolution model on a sample of the test set.

The experimental results demonstrate that our approach generates high-quality, super-resolved thermal images that faithfully capture the details and pixel intensity levels of the ground-truth high-resolution thermal images. The approach is compared with various state-of-the-art methods, and it has demonstrated good performance in both quantitative and qualitative evaluations.

Table 1: Average results on super-resolution using our testing set. Best results in bold.

| Approaches | PSNR (NYU Dataset) | SSIM (NYU Dataset) |
|---|---|---|
| Bicubic (Han et al., 2021) | 27.244 | 0.792 |
| CycleGAN (Zhu et al., 2017) | 27.311 | 0.794 |
| CUT (Park et al., 2020) | 27.417 | 0.793 |
| FastCUT (Park et al., 2020) | 27.501 | 0.793 |
| Proposed Approach | **27.893** | **0.802** |

5 CONCLUSIONS

In conclusion, the proposed thermal super-resolution strategy has proven to be robust in terms of the quality and tonality of the pixels, and it has also shown better results in quantitative metrics. In future work, we will explore the integration of additional loss functions, such as perceptual or adversarial losses, which could further refine the ability of our model to generate high-quality super-resolved thermal images. In addition, the exploration of novel architectures, including attention mechanisms or recurrent models, could open new ways to improve thermal super-resolution techniques.

ACKNOWLEDGEMENTS

This material is based upon work supported by the Air Force Office of Scientific Research under award number FA9550-22-1-0261, and partially supported by the Grant PID2021-128945NB-I00 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”; the “CERCA75046 Programme / Generalitat de Catalunya”; and the ESPOL project CIDIS-12-2022.

REFERENCES

Almasri, F. and Debeir, O. (2018). RGB guided thermal super-resolution enhancement. In 2018 4th International Conference on Cloud Computing Technologies and Applications (Cloudtech), pages 1–5. IEEE.

Almasri, F. and Debeir, O. (2019). Multimodal sensor fusion in single thermal image super-resolution. In Computer Vision–ACCV 2018 Workshops: 14th Asian Conference on Computer Vision, Perth, Australia, December 2–6, 2018, Revised Selected Papers 14, pages 418–433. Springer.

Chudasama, V., Patel, H., Prajapati, K., Upla, K. P., Ramachandra, R., Raja, K., and Busch, C. (2020). TherISuRNet - a computationally efficient thermal image super-resolution network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops.

Deepak, S., Sahoo, S., and Patra, D. (2021). Super-resolution of thermal images using GAN network. In 2021 Advanced Communication Technologies and Signal Processing (ACTS), pages 1–5.

Guo, C., Li, C., Guo, J., Cong, R., Fu, H., and Han, P. (2018). Hierarchical features driven residual learning for depth map super-resolution. IEEE Transactions on Image Processing, 28(5):2545–2557.

Gupta, H. and Mitra, K. (2020). Pyramidal edge-maps and attention based guided thermal super-resolution. In Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part III 16, pages 698–715. Springer.

Gupta, H. and Mitra, K. (2021). Toward unaligned guided thermal super-resolution. IEEE Transactions on Image Processing, 31:433–445.

Han, J., Shoeiby, M., Petersson, L., and Armin, M. A. (2021). Dual contrastive learning for unsupervised image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

Klein, S., Staring, M., Murphy, K., Viergever, M. A., and Pluim, J. P. W. (2009). elastix: A toolbox for intensity-based medical image registration. IEEE Transactions on Medical Imaging, 29(1):196–205.

Liu, W., Chen, X., Yang, J., and Wu, Q. (2017). Robust color guided depth map restoration. IEEE Transactions on Image Processing, 26:315–327.

Mandanici, E., Tavasci, L., Corsini, F., and Gandolfi, S. (2019). A multi-image super-resolution algorithm applied to thermal imagery. Applied Geomatics, 11:215–228.

Mehri, A., Ardakani, P. B., and Sappa, A. D. (2021a). LiNet: A lightweight network for image super resolution. In 2020 25th International Conference on Pattern Recognition (ICPR), pages 7196–7202. IEEE.

Mehri, A., Ardakani, P. B., and Sappa, A. D. (2021b). MPRNet: Multi-path residual network for lightweight image super resolution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 2704–2713.

Park, T., Efros, A. A., Zhang, R., and Zhu, J.-Y. (2020). Contrastive learning for unpaired image-to-image translation. In European Conference on Computer Vision.

Prajapati, K., Chudasama, V., Patel, H., Sarvaiya, A., Upla, K. P., Raja, K., Ramachandra, R., and Busch, C. (2021). Channel split convolutional neural network (ChasNet) for thermal image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4368–4377.

Rivadeneira, R. E., Sappa, A. D., Vintimilla, B. X., Bin, D., Ruodi, L., Shengye, L., Zhong, Z., Liu, X., Jiang, J., and Wang, C. (2023). Thermal image super-resolution challenge results. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops.

Suárez, P. L. and Sappa, A. D. (2023). Toward a thermal image-like representation. In Proceedings of the International Joint Conference on Computer Vision.

Thuan, N. D., Dong, T. P., Manh, B. Q., Thai, H. A., Trung, T. Q., and Hong, H. S. (2022). Edge-focus thermal image super-resolution using generative adversarial network. In 2022 International Conference on Multimedia Analysis and Pattern Recognition (MAPR), pages 1–6.

Wang, B., Zou, Y., Zhang, L., Li, Y., Chen, Q., and Zuo, C. (2022). Multimodal super-resolution reconstruction of infrared and visible images via deep learning. Optics and Lasers in Engineering, 156:107078.

Zhang, M., Wu, Q., Guo, J., Li, Y., and Gao, X. (2022). Heat transfer-inspired network for image super-resolution reconstruction. IEEE Transactions on Neural Networks and Learning Systems.

Zhang, W., Sui, X., Gu, G., Chen, Q., and Cao, H. (2021). Infrared thermal imaging super-resolution via multi-scale spatio-temporal feature fusion network. IEEE Sensors Journal, 21(17):19176–19185.

Zhang, Y., Li, K., Li, K., Wang, L., Zhong, B., and Fu, Y. (2018). Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), pages 286–301.

Zhu, J.-Y., Park, T., Isola, P., and Efros, A. A. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, pages 2223–2232.
