SAM-Based Detection of Structural Anomalies in 3D Models for Preserving Cultural Heritage

David Jurado-Rodríguez¹ᵃ, Alfonso López¹ᵇ, J. Roberto Jiménez¹ᶜ, Antonio Garrido²ᵈ, Francisco R. Feito¹ᵉ and Juan M. Jurado¹ᶠ

¹ Department of Computer Science, University of Jaén, Spain

² Department of Cartographic, Geodetic and Photogrammetric Engineering, University of Jaén, Spain

Keywords:

3D Modeling, Artificial Intelligence, Cultural Heritage Protection, Drones.

Abstract:

Detecting structural defects and anomalies in cultural heritage is essential to ensure the integrity and safety of buildings, plan preservation strategies, and promote their sustainability and durability over time. To improve the effectiveness and efficiency of structural health monitoring of cultural heritage, this work develops an automated method for detecting unwanted materials and geometric anomalies on the 3D surfaces of ancient buildings. The proposed solution combines an AI-based technique for rapid image labeling with a fully automatic detection of target classes in 3D point clouds. A key advantage of our method is that the spatial and geometric features of the 3D model allow target materials to be recognized across the whole point cloud, starting from seeds obtained by partial detection in a few images. The results demonstrate the feasibility and utility of detecting self-healing materials, unwanted vegetation, lichens, and encrusted elements in a real-world scenario.

1 INTRODUCTION

The importance of preserving architectural heritage goes beyond a simple historical and cultural obligation; it represents a fundamental responsibility towards future generations. Identifying and addressing structural flaws and anomalies in historic buildings early on is a fundamental pillar in ensuring the integrity and longevity of these monuments over time. Thus, developing methodologies to efficiently assess the conservation state of heritage and prevent future incidents is a promising field of research.

These methodologies can greatly benefit from exploiting feature patterns extracted from 3D models and multi-sensory datasets. In this context, Visual Computing plays a crucial role in 3D building inspection through computer vision methods that interpret, represent, classify, summarize, and analyze content related to cultural heritage. These algorithms can automatically detect anomalies or defects in the building structure, such as cracks, deformations, or other structural issues, by analyzing visual data obtained from images or scans. This supports the automated analysis and understanding of the building's composition and conservation state.

ᵃ https://orcid.org/0000-0003-2408-4926

ᵇ https://orcid.org/0000-0003-1423-9496

ᶜ https://orcid.org/0000-0002-1233-2294

ᵈ https://orcid.org/0000-0002-6479-2698

ᵉ https://orcid.org/0000-0001-8230-6529

ᶠ https://orcid.org/0000-0002-8009-9033

AI-powered computer vision algorithms can automatically analyze images and videos of buildings to identify structural defects, damage, or irregularities. Drones equipped with cameras can conduct aerial inspections of buildings, capturing high-resolution images and videos that AI can then analyze for structural issues. However, AI techniques usually require training classification models, and this training typically relies on labeled datasets.

This work proposes an AI-driven method for generating annotated 3D models from a partial segmentation of a few Unmanned Aerial Vehicle (UAV) images. The proposed case studies aim to identify structural flaws on the 3D surface of old buildings. In this study, the target anomalies are self-healing materials, unwanted vegetation, lichens, and encrusted elements. All of them are detrimental to the conservation of architectural heritage, and their detection supports more effective preservation strategies and a more accurate inspection of the spatial arrangement of structural elements. The proposed pipeline is divided into two main steps: (1) semi-automatic labeling of structural faults of the target building in UAV imagery using AI tools, and (2) mapping of the 3D point cloud onto the labeled images, followed by classification using geometric and radiometric features. The main contribution of the proposed method is a methodology for recognizing target materials in 3D point clouds of real-world scenarios and identifying harmful elements in historic buildings, which is of vital importance for architectural conservation tasks and strategies.

Jurado-Rodríguez, D., López, A., Jiménez, J., Garrido, A., Feito, F. and Jurado, J.

SAM-Based Detection of Structural Anomalies in 3D Models for Preserving Cultural Heritage.

DOI: 10.5220/0012464000003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 3: VISAPP, pages 741-748

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

This document is structured as follows. Section 2 reviews the state of the art, covering relevant technologies and methodologies for the digitization and preservation of cultural heritage. Section 3 describes the datasets targeted by the proposed method. Section 4 outlines the proposed method, and Section 5 presents the experiments conducted to validate it and the results obtained. Finally, Section 6 summarizes the main contributions of this work, including insights into future work that could further enhance the proposed methodology.

2 PREVIOUS WORK

Built heritage undergoes changes over time, including erosion, degradation, deformations caused by natural phenomena, human interventions, and inappropriate restorations (Li et al., 2023). More formally, the ISO 19208:2016 standard (ISO, 2016), recently withdrawn with a replacement pending, classifies the agents behind these defects into five major groups: mechanical, electromagnetic, thermal, chemical, and biological. These degradation factors underline the need to preserve built heritage and, therefore, the relevance of this work.

Li et al. (2023) provide an in-depth study of the preservation of built heritage using multiple technologies. In this context, the preservation and conservation of cultural heritage is not understood only as the extraction of faults and defects; the digitization and dissemination of cultural heritage have also been extensively reviewed (Mendoza et al., 2023), although they are not the main goal of this work. In this regard, photogrammetry, Light Detection and Ranging (LiDAR), and CAD modelling are frequent acquisition techniques. Their outputs are sometimes integrated into Building Information Modelling (BIM), which allows a record of repairs and changes in cultural heritage to be maintained (Moyano et al., 2020; Rocha et al., 2020). Still, digitization is an indirect result of our work, since it involves reconstructing 3D point clouds.

Regarding the detection of building anomalies, current trends involve applying Convolutional Neural Networks (CNNs) to imagery captured by UAVs and close-range sensing. Cumbajin et al. (2023) give further insight into this field, categorizing CNN approaches according to the target surface, the kind of problem (classification, semantic segmentation, instance segmentation, etc.), the network, and the training methodology. Accordingly, defect detection on metal surfaces is trained differently from building-oriented methods, since each requires specialized datasets. This even applies to individual defects: Perez et al. (2019) experimented with a shallow CNN composed of convolutional and dense layers to identify moisture, trained on a small collaborative dataset of copyright-free Internet images.

Transfer learning is far more common in building inspection than custom CNNs. Among the most frequent architectures, pre-trained VGG, YOLO, U-Net, AlexNet, GoogLeNet, Inception, and Xception networks stand out. Kumar et al. (2021) output bounding boxes of cracks in close-range building images, enabling real-time monitoring with UAVs coupled with a Jetson TX2. Alternatively, images can be semantically segmented to highlight cracks (Mouzinho and Fukai, 2021). Object detection CNNs are also frequent in the literature, including region-based models such as R-CNN (Xu et al., 2021), Fast R-CNN, and Faster R-CNN (Maningo et al., 2020), as well as YOLO (Kumar et al., 2021); their objective is to detect regions containing cracks.

From the revised literature, it is clear that there are

some gaps in current building monitoring. Firstly, it is

mainly carried out using close-sensed imagery, rather

than enabling the monitoring of large areas. Thus,

surveys are far slower as they need to capture small

regions of buildings. Second, CNNs are specialized

in specific materials and defects. This drawback is

not only caused by learning limitations but also by

the lack of available datasets. This is even more visi-

ble for defects such as moisture, where RGB imagery

is used instead of more suitable data sources (e.g.,

thermography). Unlike our work, some of the revised

studies are intended for real-time tracking by com-

municating information with Internet of Things (IoT)

communication (Kumar et al., 2021). The main draw-

back of the latter is that it requires planning the lo-

cation of a few devices, for instance, addressing the

optimal sensor placement (OSP). On the other hand,

our case study provides a long-term monitoring tool

for large buildings, that, however, is not a continuous

tracking. Therefore, it is intended for cultural her-

itage whose immediate changes are of no relevance

in the short term. Although this study is conducted

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

742

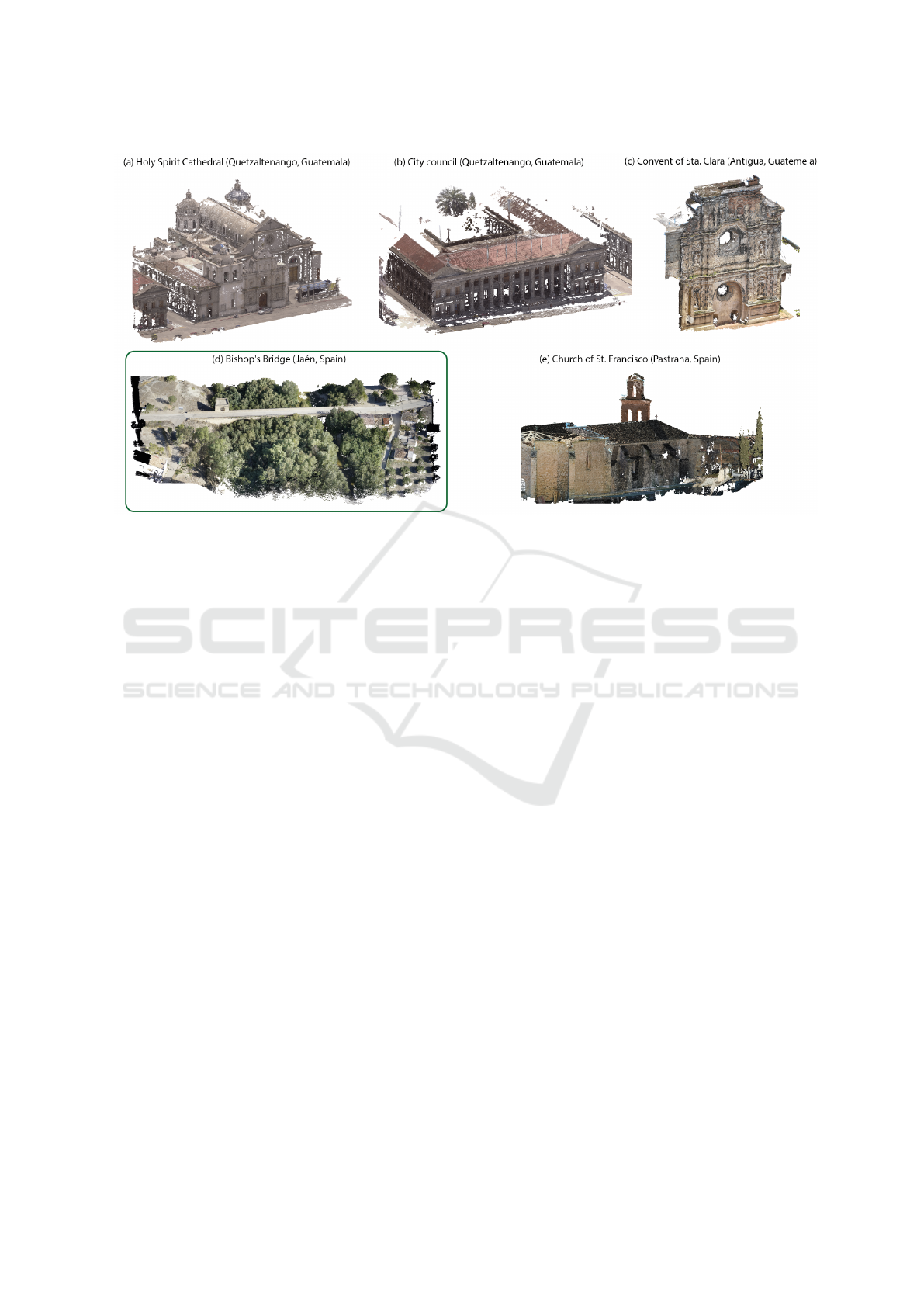

Figure 1: Selected models for the digitization of historical heritage and conservation assessment. These datasets comprise

point clouds for 3D representation and high-resolution images (50 MP).

with RGB imagery, point clouds can be fed with fur-

ther data sources (L

´

opez et al., 2023) that can improve

anomaly detection.

3 THE GENERATION OF DATASETS

The increasing use of photogrammetric techniques and LiDAR systems has facilitated the generation of a wide variety of highly detailed 3D models of many real-world scenarios. Moreover, the digitization of cultural heritage has been promoted by the proliferation of UAV sensors capable of capturing multi-view images of the whole building structure. Consequently, dense point clouds can be easily generated, bringing new opportunities to combine geometric and spatial features for purposes such as object detection, semantic classification, and scene understanding.

In the field of preservation and restoration of historic buildings, 3D models allow a more accurate assessment of the current conservation status. In recent years, we have collected a large set of 3D models of significant cultural sites and civil infrastructures. These datasets were generated using airborne LiDAR or photogrammetry; in both cases, overlapping RGB images were captured to obtain textured point clouds. Depending on the acquisition system, the image resolution ranges from 20 to 50 megapixels (MP), whereas the point cloud density varies from 100 to 500 points per square meter. Figure 1 shows some of the resulting 3D reconstructions of different sites in Spain and Guatemala.

The case study of this work is the Bishop’s Bridge, in which we aim to automatically identify materials or anomalies that may pose a risk to the preservation of the structure. The Bishop’s Bridge is a noteworthy example of the Andalusian Renaissance, built in the early 16th century (more precisely, between 1505 and 1518) to facilitate passage over the Guadalquivir River. The bridge, designed on a slope to accommodate the different heights of its two banks, comprises four ashlar arches. These arches are reinforced by cutwaters, i.e., curved pillars placed to mitigate the force of the water and distribute it evenly across each arch. Additionally, a chapel is attached to one of the abutments of the bridge.

4 METHODOLOGY

This section presents the workflow to detect struc-

tural anomalies in 3D architectural building models.

Our method consists of two stages: the first employs

a few UAV images for the semi-automated identifi-

cation of anomalies in the infrastructure surface and

generates segmented imagery. The second stage is

SAM-Based Detection of Structural Anomalies in 3D Models for Preserving Cultural Heritage

743

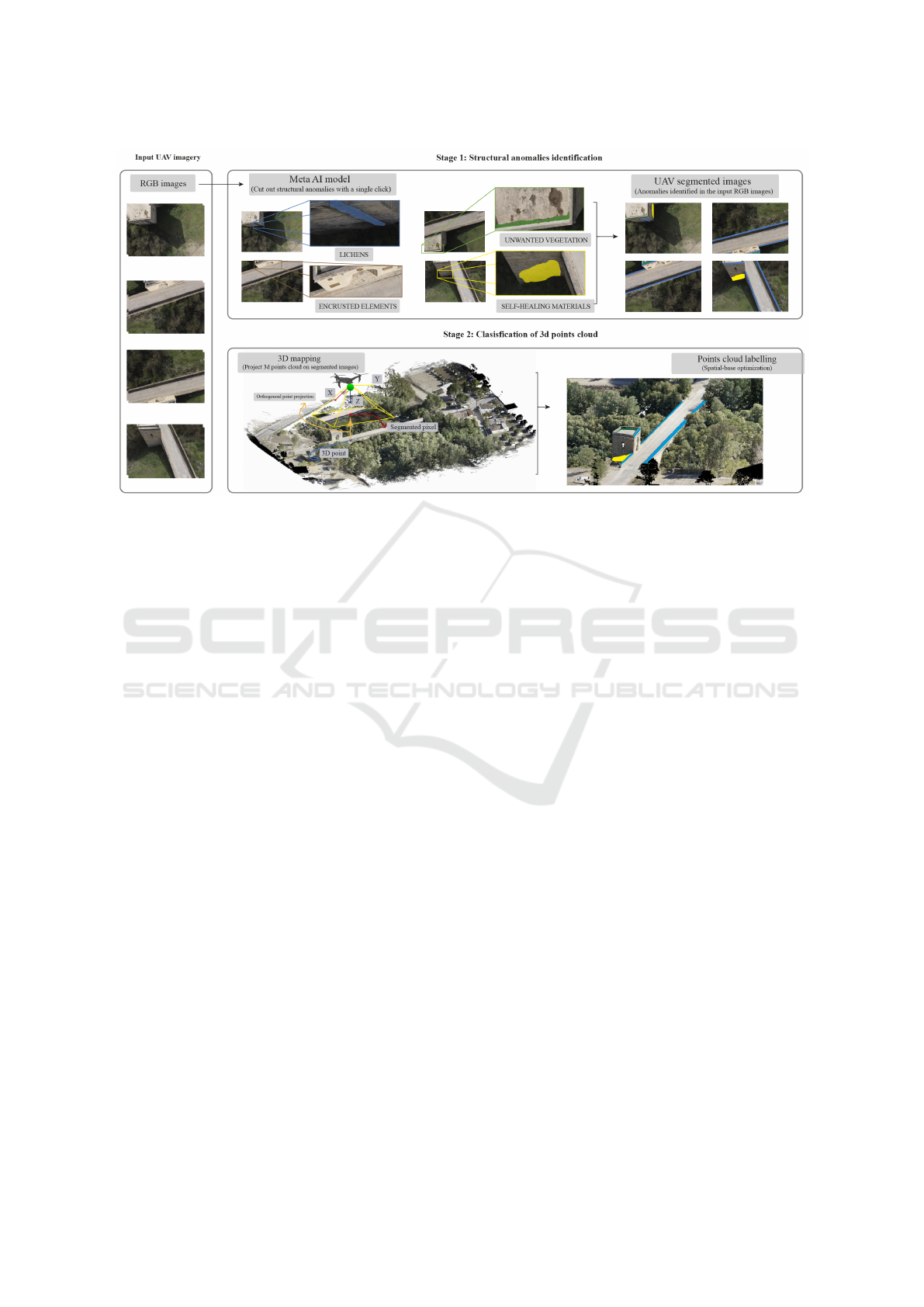

Figure 2: An overview of the proposed workflow that summarizes the main steps of the proposed solution combines an AI-

based technique for fast-forward image labeling and a fully automatic detection of target classes in 3D point clouds.

the mapping of the 3D point cloud onto previously

segmented images. This provides partial labeling of

the point cloud. Finally, an algorithm for 3D model

classification based on geometric and color features is

implemented by taking the already segmented classes

as a starting point, generating a fully segmented 3D

point cloud.

Figure 2 provides a graphical overview of the proposed methodology. The obtained results demonstrate the reliability of our proposal in identifying anomalies such as self-healing materials, unwanted vegetation, lichens, and encrusted elements in architectural structures within real-world environments.

4.1 Structural Anomalies Identification in UAV Images

The precise classification of diverse objects in three-dimensional environments remains a considerable challenge for current AI models. To the best of our knowledge, no comprehensive AI model yet tackles the intricate task of semantic segmentation of 3D models effectively. Despite this limitation, current AI models show significant promise for the semantic segmentation of high-resolution images. In this context, the Segment Anything Model (SAM) (Kirillov et al., 2023) stands out as a valuable resource that uses advanced machine learning techniques to identify and delineate objects in an image. Our methodology uses SAM as a semi-automatic tool to delineate specific anomalies, such as self-healing materials, unwanted vegetation, lichens, and encrusted elements, on the architectural structures of interest in images captured by UAVs.
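As an illustration of how SAM can be driven interactively, the sketch below assumes the `SamPredictor` interface of Meta's `segment_anything` Python package (`set_image`, then `predict` with point prompts). The helper name `segment_from_clicks` and the choice of keeping the highest-scoring candidate mask are our assumptions, not details reported for this method. The predictor is passed in as an argument, so the function can be exercised with a stub; in practice it would be built as `SamPredictor(sam_model_registry["vit_h"](checkpoint=...))`.

```python
import numpy as np

def segment_from_clicks(predictor, image, clicks):
    """Return one boolean mask for an anomaly prompted by user clicks.

    predictor: object exposing the segment_anything SamPredictor methods
               set_image(image) and predict(point_coords=..., point_labels=...,
               multimask_output=...).
    image:     H x W x 3 uint8 RGB UAV image.
    clicks:    list of (x, y, is_foreground) tuples in pixel coordinates.
    """
    predictor.set_image(image)  # computes the image embedding once
    coords = np.array([[x, y] for x, y, _ in clicks], dtype=np.float32)
    labels = np.array([1 if fg else 0 for _, _, fg in clicks], dtype=np.int32)
    masks, scores, _ = predictor.predict(
        point_coords=coords,
        point_labels=labels,
        multimask_output=True,  # SAM proposes several candidate masks
    )
    # Keep the candidate with the highest predicted IoU; an expert then
    # reviews and corrects it before it becomes a label.
    return masks[int(np.argmax(scores))]
```

Background clicks (`is_foreground = False`) are a convenient way to exclude surrounding masonry from, e.g., a lichen patch before the expert review described next.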

SAM is a promptable segmentation model: an image encoder based on a Vision Transformer computes an embedding of the image, and a lightweight mask decoder combines this embedding with user prompts (points, boxes, or coarse masks) to predict object masks. This design enables SAM to distinguish the various elements within an image. However, its initial segmentation may not always reach the precision required for cultural heritage preservation. This is where experts in architectural conservation and image analysis become essential: after SAM's initial segmentation, these specialists review and refine the results, applying their knowledge of the architecture and the historical significance of the elements under scrutiny, so that the final segmentations are precise and contextually relevant.

This intervention allows segmented regions to be corrected and refined, ensuring an accurate depiction of the anomalies. By combining machine learning with human expertise, this hybrid approach substantially improves the overall quality of the segmentation and, consequently, the effectiveness of anomaly detection. The segmentation stage outputs a set of labeled images, which are the starting point for the next phase of the method.
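The labeled images produced by this stage can be thought of as per-pixel class maps. The sketch below shows one way to merge the expert-reviewed binary masks into such a map; the class IDs, the value `-1` for unlabeled pixels, and the rule that later masks overwrite earlier ones where they overlap are illustrative assumptions, not details given in the paper.

```python
import numpy as np

# Hypothetical class IDs for the four anomaly types studied here.
CLASSES = {
    "self_healing_material": 0,
    "unwanted_vegetation": 1,
    "lichen": 2,
    "encrusted_element": 3,
}
UNLABELED = -1

def masks_to_label_image(shape, reviewed_masks):
    """Merge (class_name, boolean mask) pairs into an H x W int label image.

    Pixels not covered by any mask stay UNLABELED; if masks overlap,
    the mask listed last wins (a simple, assumed tie-break rule).
    """
    label_image = np.full(shape, UNLABELED, dtype=np.int16)
    for name, mask in reviewed_masks:
        if mask.shape != tuple(shape):
            raise ValueError(f"mask for {name!r} has shape {mask.shape}, expected {shape}")
        label_image[mask] = CLASSES[name]
    return label_image
```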


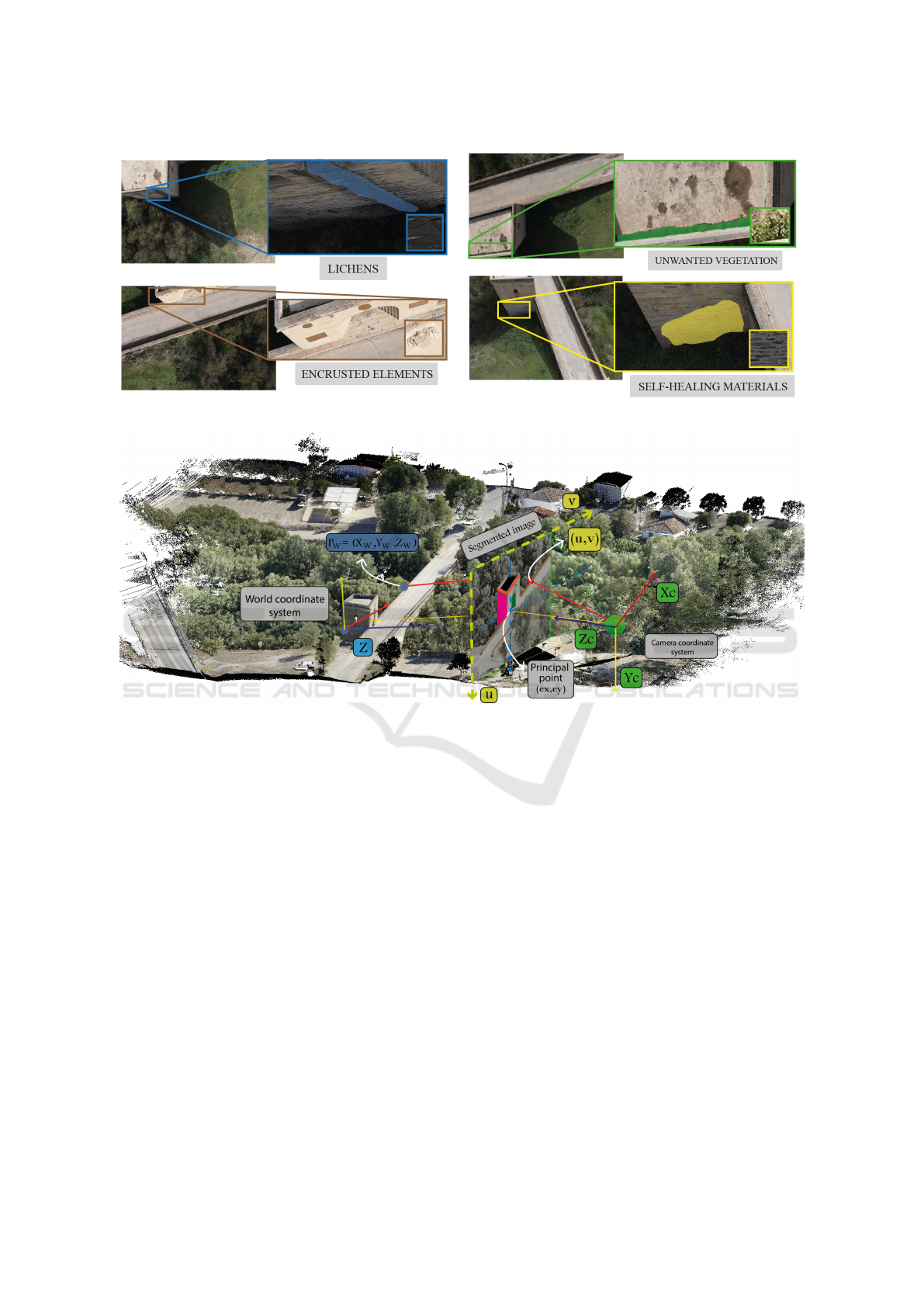

Figure 3: UAV images highlighting the anomalies taken into account in this investigation.

Figure 4: Graphical overview of the pinhole camera model, which relates the coordinates (X, Y, Z) of a point in world space to its projection (u, v) onto the image plane of an ideal pinhole camera.

4.2 Classification of 3D Point Clouds

The classification of 3D point clouds is a fundamental stage of our methodology for identifying structural anomalies in 3D models. In this section, we present an algorithm that automatically labels the 3D point cloud using the segmented UAV images generated in the previous stage. The algorithm has two phases: 3D mapping and point cloud labeling. The first phase projects the 3D point cloud onto the segmented images and labels those points whose projection falls on a segmented class. The second phase expands these initially labeled regions of the point cloud using the following information: (1) the angle between the point's normal and the class normal, (2) the point color, and (3) the angle between the expansion vector and the vector perpendicular to the normal.
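The expansion criteria rely on per-point normals, but the paper does not state how they are estimated. A common choice, assumed here, is principal component analysis (PCA) of each point's k nearest neighbors: the normal is the eigenvector of the local covariance matrix with the smallest eigenvalue. The function name `estimate_normals` and the default `k` are hypothetical, and the brute-force neighbor search is only meant for small examples; a k-d tree would be used for millions of points.

```python
import numpy as np

def estimate_normals(points, k=8):
    """Estimate unit normals for an N x 3 array of points via local PCA.

    For each point, its k nearest neighbours (including the point itself)
    are centred, and the eigenvector of their 3 x 3 covariance matrix with
    the smallest eigenvalue is taken as the surface normal. The sign of
    each normal is arbitrary (PCA cannot orient it).
    """
    points = np.asarray(points, dtype=float)
    k = min(k, len(points))
    # Brute-force pairwise distances: O(N^2) memory, fine for small clouds.
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    neighbours = np.argsort(dists, axis=1)[:, :k]
    normals = np.empty_like(points)
    for i, idx in enumerate(neighbours):
        local = points[idx] - points[idx].mean(axis=0)
        cov = local.T @ local / k
        eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]
    return normals
```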

4.2.1 The 3D Mapping

The 3D mapping process estimates the projection of each 3D point onto the image plane using the pinhole camera model (Figure 4). Consider a point $P_w$ with coordinates $(X_w, Y_w, Z_w)$ in the world coordinate system, and the camera rotation matrix $R$ and translation vector $t$, which represent the camera's orientation and position in the world. $R$ describes how the camera is oriented, while $t$ encodes its position relative to the world origin (the camera center is $-R^\top t$). Both are obtained from the extrinsic calibration and transform $P_w$ into the camera coordinate system $(X_c, Y_c, Z_c)$ as follows:

$$
\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix} = [R \mid t] \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}
\tag{1}
$$


The lens distortion is modelled with radial ($k_1, \dots, k_6$), tangential ($p_1, p_2$), and thin-prism ($s_1, \dots, s_4$) distortion coefficients. Given the camera matrix $K$, composed of the focal lengths $(f_x, f_y)$ and the optical center $(c_x, c_y)$, the pixel coordinates $(u, v)$ of the projection of $P_w$ onto the segmented image plane are computed as:

$$
\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} f_x x'' + c_x \\ f_y y'' + c_y \end{bmatrix}
\tag{2}
$$

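where

$$
\begin{bmatrix} x'' \\ y'' \end{bmatrix} =
\begin{bmatrix}
x' \dfrac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + 2 p_1 x' y' + p_2 \left(r^2 + 2 x'^2\right) + s_1 r^2 + s_2 r^4 \\
y' \dfrac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6} + p_1 \left(r^2 + 2 y'^2\right) + 2 p_2 x' y' + s_3 r^2 + s_4 r^4
\end{bmatrix}
\tag{3}
$$

with

$$
r^2 = x'^2 + y'^2
\tag{4}
$$

and

$$
\begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} X_c / Z_c \\ Y_c / Z_c \end{bmatrix} \quad \text{if } Z_c \neq 0
\tag{5}
$$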
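Equations (1) to (5) correspond to the pinhole model with rational radial, tangential, and thin-prism distortion used by OpenCV. A minimal NumPy sketch of the projection follows; the function name `project_points` and its argument layout are ours, and points with $Z_c \le 0$ (behind the camera) are flagged as invalid, which is slightly stricter than the $Z_c \neq 0$ condition of Eq. (5).

```python
import numpy as np

def project_points(P_w, R, t, fx, fy, cx, cy, k=(0,) * 6, p=(0, 0), s=(0,) * 4):
    """Project N x 3 world points to pixel coordinates (Eqs. 1-5).

    Returns (uv, z_c, valid): N x 2 pixel coordinates, camera-space depth Z_c,
    and a mask of points in front of the camera (Z_c > 0).
    k = (k1..k6) radial, p = (p1, p2) tangential, s = (s1..s4) thin-prism.
    """
    P_w = np.asarray(P_w, dtype=float)
    # Eq. (1): world -> camera coordinates, X_c = R X_w + t.
    P_c = P_w @ np.asarray(R, dtype=float).T + np.asarray(t, dtype=float)
    z_c = P_c[:, 2]
    valid = z_c > 0
    safe_z = np.where(valid, z_c, 1.0)  # avoid dividing by zero for invalid points
    # Eq. (5): normalised image coordinates x', y'.
    x1 = P_c[:, 0] / safe_z
    y1 = P_c[:, 1] / safe_z
    # Eq. (4)
    r2 = x1**2 + y1**2
    r4, r6 = r2**2, r2**3
    k1, k2, k3, k4, k5, k6 = k
    p1, p2 = p
    s1, s2, s3, s4 = s
    radial = (1 + k1 * r2 + k2 * r4 + k3 * r6) / (1 + k4 * r2 + k5 * r4 + k6 * r6)
    # Eq. (3): distorted coordinates x'', y''.
    x2 = x1 * radial + 2 * p1 * x1 * y1 + p2 * (r2 + 2 * x1**2) + s1 * r2 + s2 * r4
    y2 = y1 * radial + p1 * (r2 + 2 * y1**2) + 2 * p2 * x1 * y1 + s3 * r2 + s4 * r4
    # Eq. (2): pixel coordinates.
    uv = np.stack([fx * x2 + cx, fy * y2 + cy], axis=1)
    return uv, z_c, valid
```

Where OpenCV is available, `cv2.projectPoints` with a 12-element `distCoeffs` vector ordered (k1, k2, p1, p2, k3, k4, k5, k6, s1, s2, s3, s4) should implement the same model; note that its coefficient order differs from the grouping used in the equations.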

Finally, after the 3D mapping stage, a filtering process discards occluded points. Occlusion is a common issue in three-dimensional environments and significantly affects the accuracy of the segmentation. To tackle it, a z-buffer is generated for each image during the projection stage. For each pixel, this buffer stores the depth, measured from the camera position, of the closest projected 3D point; points whose depth is greater than the stored value are identified as occluded and omitted.
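A minimal sketch of the z-buffer test, assuming the pixel coordinates and depths produced by a projection such as Eqs. (1) to (5): each pixel keeps the smallest depth that lands on it, and a point is treated as visible only if its depth is within a relative tolerance of that minimum. The function name `visible_mask`, the tolerance `rel_tol`, and rounding projections to the nearest pixel are assumptions; the paper does not report how depth noise or ties are handled.

```python
import numpy as np

def visible_mask(uv, depth, width, height, rel_tol=0.01):
    """Return a boolean mask of the points not occluded in one image.

    uv:    N x 2 projected pixel coordinates (u, v).
    depth: N camera-space depths Z_c (must be > 0 to be considered).
    A point is visible if it falls inside the image and its depth is at most
    (1 + rel_tol) times the minimum depth stored in its pixel's z-buffer.
    """
    uv = np.asarray(uv, dtype=float)
    depth = np.asarray(depth, dtype=float)
    cols = np.rint(uv[:, 0]).astype(int)
    rows = np.rint(uv[:, 1]).astype(int)
    inside = (depth > 0) & (cols >= 0) & (cols < width) & (rows >= 0) & (rows < height)

    zbuf = np.full((height, width), np.inf)
    # Unbuffered minimum: keeps the closest depth per pixel even when
    # several points project onto the same pixel.
    np.minimum.at(zbuf, (rows[inside], cols[inside]), depth[inside])

    visible = np.zeros(len(depth), dtype=bool)
    idx = np.flatnonzero(inside)
    visible[idx] = depth[idx] <= zbuf[rows[idx], cols[idx]] * (1 + rel_tol)
    return visible
```

In practice, points are often splatted over a small pixel footprint so that background points cannot leak through gaps between sparse foreground points; that refinement is omitted here for brevity.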

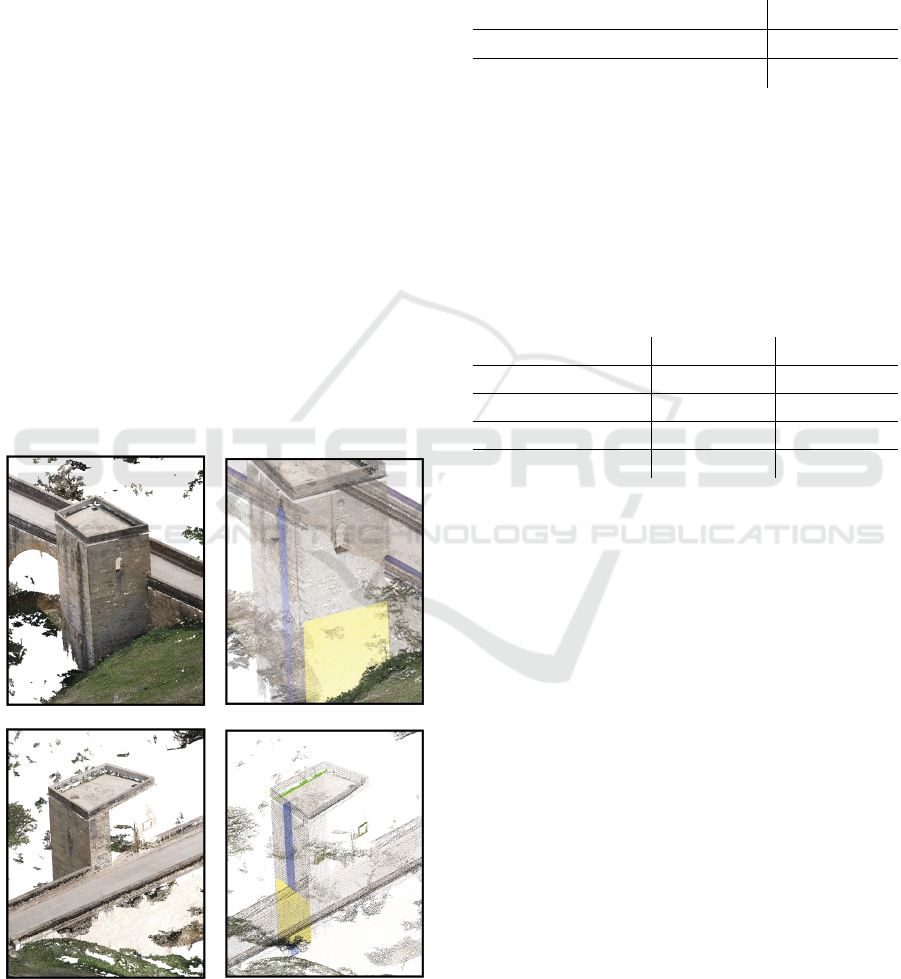

Figure 5: Visual results of the occlusion removal; points identified as occluded are marked in red.

In Figure 5, the discarded occluded points are highlighted in red. This visualization illustrates the impact of removing occluded points, which improves the quality and accuracy of the final result by providing a faithful representation of the segmented class.

4.2.2 Point Cloud Labelling

After the mapping and the removal of occluded points, a partially segmented point cloud is obtained. Only the points visible in the images are labeled at this stage; therefore, the next step is to derive a more complete segmentation of the cloud from this partially labeled 3D dataset.
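The initial labeling can be sketched as follows, under stated assumptions: every visible point reads the class of the pixel it projects to in each label image (with `-1` meaning unlabeled), and when several images disagree the most frequent class wins, ties going to the lowest class ID. The voting rule and the helper names `labels_from_image` and `fuse_labels` are hypothetical; the paper does not describe how conflicting labels from different images are resolved.

```python
import numpy as np

UNLABELED = -1

def labels_from_image(label_image, uv, visible):
    """Read the class under each visible point's projection in one image."""
    uv = np.asarray(uv, dtype=float)
    h, w = label_image.shape
    labels = np.full(len(uv), UNLABELED, dtype=int)
    # Clip only guards the indexing; invisible points are ignored anyway.
    cols = np.clip(np.rint(uv[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.rint(uv[:, 1]).astype(int), 0, h - 1)
    labels[visible] = label_image[rows[visible], cols[visible]]
    return labels

def fuse_labels(per_image_labels, n_classes):
    """Majority vote over images, ignoring UNLABELED observations."""
    votes = np.stack(per_image_labels)              # (n_images, N)
    counts = np.zeros((n_classes, votes.shape[1]), dtype=int)
    for c in range(n_classes):
        counts[c] = (votes == c).sum(axis=0)
    fused = counts.argmax(axis=0)
    fused[counts.max(axis=0) == 0] = UNLABELED      # never observed with a class
    return fused
```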

Figure 6: The proposed method for expanding the initially labeled classes to obtain a fully labeled 3D point cloud.

To address this, we implemented an automatic method based on 3D geometric features. For each labeled class, we compute: (1) a radius $r$ that defines the search area in which neighboring unlabeled points are considered, (2) the expansion vector, and (3) the color gradient. After some tests, $r$ was set to five times the ground sampling distance (GSD). For each unlabeled point, three features are evaluated to decide whether it joins a segmented class: (1) its normal vector, (2) the vector perpendicular to the normal in the class plane, and (3) its color (R, G, B). If the point and class vectors are closely aligned (i.e., the angles between them are below their respective thresholds) and the color difference does not exceed a given threshold, the point is added to that class.
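One possible reading of this expansion rule is sketched below as an iterative region growing. Several details are our assumptions: the class normal and class color are the means over the seed points, normals are compared regardless of sign, the expansion vector is the offset from the nearest point of the current growth front, alignment with the class plane is measured as the deviation of that vector from 90° to the class normal, and the mean class color stands in for the color gradient. The function names and all threshold values are placeholders, not the values used in the paper.

```python
import numpy as np

def angle_deg(a, b):
    """Angle in degrees between vectors a (..., 3) and b (..., 3)."""
    a = a / (np.linalg.norm(a, axis=-1, keepdims=True) + 1e-12)
    b = b / (np.linalg.norm(b, axis=-1, keepdims=True) + 1e-12)
    cos = np.clip(np.sum(a * b, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))

def grow_class(points, normals, colors, labels, cls, radius,
               max_normal_deg=15.0, max_plane_deg=15.0, max_color=30.0):
    """Expand class `cls` from its seed points; -1 marks unlabeled points.

    All thresholds are illustrative placeholders. Returns a new label array.
    """
    labels = labels.copy()
    seeds = labels == cls
    if not seeds.any():
        return labels
    class_normal = normals[seeds].mean(axis=0)
    class_normal = class_normal / np.linalg.norm(class_normal)
    class_color = colors[seeds].mean(axis=0)

    frontier = np.flatnonzero(seeds)
    while frontier.size:
        cand = np.flatnonzero(labels == -1)
        if cand.size == 0:
            break
        # Offsets from every frontier point to every candidate: (F, C, 3).
        offsets = points[cand][None, :, :] - points[frontier][:, None, :]
        dist = np.linalg.norm(offsets, axis=-1)
        nearest = dist.argmin(axis=0)              # closest frontier point
        cols = np.arange(cand.size)
        in_radius = dist[nearest, cols] <= radius
        expansion = offsets[nearest, cols]         # expansion vector
        # (1) point normal vs. class normal, ignoring normal orientation.
        normal_dev = angle_deg(normals[cand], class_normal)
        normal_dev = np.minimum(normal_dev, 180.0 - normal_dev)
        # (2) expansion vector should stay in the class plane,
        #     i.e. be nearly perpendicular to the class normal.
        plane_dev = np.abs(90.0 - angle_deg(expansion, class_normal))
        # (3) RGB distance to the mean class color.
        color_dev = np.linalg.norm(colors[cand] - class_color, axis=1)
        accept = (in_radius & (normal_dev <= max_normal_deg)
                  & (plane_dev <= max_plane_deg) & (color_dev <= max_color))
        frontier = cand[accept]
        labels[frontier] = cls
    return labels
```

With the GSD of 0.7 cm reported in Section 5, the radius rule gives r = 5 × 0.7 cm = 3.5 cm (expressed in the units of the point coordinates). Calling `grow_class` once per class means that classes grown first can claim contested points; the paper does not specify an ordering.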

Figure 6 presents an example of how this method segments unlabeled points belonging to the 'lichen' class. Geometric and spatial features contributed significantly to the quality of the results. In this way, 3D points that are not labeled in the images can join the surrounding classes with which they share similar features.

5 RESULTS AND EVALUATION

In this section, we describe the experiments carried out to validate the method in real-world scenarios. The accuracy, performance, and robustness of our method were tested on several scenarios involving different architectural buildings. The resulting 3D models have a GSD of 0.7 cm, an average point cloud density of 5,000 points per cubic meter, and a total of 8 million points. The results were obtained on a CPU (Intel® Core™ i7-10510U, 2.30 GHz) with 8 GB of RAM, running Ubuntu 20.04.1.

Figure 7 shows the result of our method after classifying and identifying the different anomalies in the 3D point cloud. The method generates four point clouds, one for each detected anomaly class.

Figure 7: Results achieved after 3D point cloud labeling.

Regarding performance, Table 1 shows the computational cost of the complete point cloud classification. As the table shows, mapping the cloud onto the images takes only 8.02 seconds on average, whereas the automatic point cloud labeling requires 25.4 seconds.

Table 1: Response time of the automated 3D point cloud classification.

| Classification of 3D point clouds | Average time (s) |
|---|---|
| A. The 3D mapping | 8.02 |
| B. Point cloud labelling | 25.4 |

To validate the results, Table 2 reports the number of points labeled after mapping the cloud onto the segmented image, and the number labeled after applying our expansion method, which yields a completely labeled 3D point cloud.

Table 2: Comparison between 3D points labeled with a single image as reference and those labeled after applying the expansion method.

| Anomaly | Labeled points (single image) | Labeled points (our method) |
|---|---|---|
| Lichens | 64,256 | 430,750 |
| Encrusted elements | 2,658 | 5,478 |
| Unwanted vegetation | 11,256 | 26,567 |
| Healing material | 26,985 | 75,458 |
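To put Table 2 in perspective, the ratio between its two columns gives the expansion factor achieved for each class. The short computation below uses only the counts reported in the table.

```python
# Labeled points per class, copied from Table 2: (single image, our method).
counts = {
    "Lichens": (64_256, 430_750),
    "Encrusted elements": (2_658, 5_478),
    "Unwanted vegetation": (11_256, 26_567),
    "Healing material": (26_985, 75_458),
}

factors = {name: after / before for name, (before, after) in counts.items()}
total_before = sum(before for before, _ in counts.values())
total_after = sum(after for _, after in counts.values())

for name, f in factors.items():
    print(f"{name}: x{f:.2f}")
print(f"All classes: {total_before} -> {total_after} (x{total_after / total_before:.2f})")
```

Lichens grow roughly 6.70-fold, while the other classes grow between about 2.06-fold (encrusted elements) and 2.80-fold (healing material); overall, the number of labeled points rises from 105,155 to 538,253, about 5.12 times.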

6 CONCLUSIONS AND FUTURE WORK

In summary, this study addresses the detection of structural defects and anomalies in cultural heritage. The main contribution of our method is an algorithm that exploits spatial and geometric features to recognize target materials in the entire 3D point cloud. The results demonstrate the feasibility and usefulness of this approach in real scenarios, identifying self-healing materials, unwanted vegetation, lichens, and encrusted elements on the 3D surfaces of historic buildings. Detecting these detrimental elements contributes to more effective conservation strategies.

The proposed method is divided into two main steps: (1) semi-automatic labeling of structural faults in UAV images using AI tools, and (2) mapping of the 3D point cloud onto the labeled images, followed by its classification using geometric and radiometric features.

In conclusion, this study presents a novel AI-based approach with promising results for the automated detection of structural anomalies in cultural heritage in real-world 3D scenarios. The integration of AI-based techniques and 3D point cloud analysis is a valuable contribution to the conservation and preservation of architectural heritage, highlighting its feasibility and potential impact on structural health monitoring in the field of cultural heritage.

ACKNOWLEDGEMENTS

This project has been funded under the contract signed between the UNIVERSITY OF JAÉN and the companies ALBAIDA INFRAESTRUCTURAS S.A. and TALLERES Y GRUAS GONZALEZ, S.L., which regulates the work on "Multisensory Image Processing and Analysis for Material Segmentation and Characterization" (EXP. 2021 120), and by the research projects with references PID2022-137938OA-I00, PID2021-126339OB-I00 and TED2021-132120B-I00. These projects are co-financed by the Junta de Andalucía, the Ministerio de Ciencia e Innovación (Spain), and the European Union's ERDF funds.

REFERENCES

Cumbajin, E., Rodrigues, N., Costa, P., Miragaia, R., Frazão, L., Costa, N., Fernández-Caballero, A., Carneiro, J., Buruberri, L. H., and Pereira, A. (2023). A systematic review on deep learning with CNNs applied to surface defect detection. Journal of Imaging, 9(10).

ISO (2016). ISO 19208:2016 - Framework for specifying performance in buildings.

Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., Xiao, T., Whitehead, S., Berg, A. C., Lo, W.-Y., Dollár, P., and Girshick, R. (2023). Segment anything. arXiv:2304.02643.

Kumar, P., Batchu, S., Swamy S., N., and Kota, S. R. (2021). Real-time concrete damage detection using deep learning for high rise structures. IEEE Access, 9:112312–112331.

Li, Y., Zhao, L., Chen, Y., Zhang, N., Fan, H., and Zhang, Z. (2023). 3D LiDAR and multi-technology collaboration for preservation of built heritage in China: A review. International Journal of Applied Earth Observation and Geoinformation, 116:103156.

López, A., Ogayar, C. J., Jurado, J. M., and Feito, F. R. (2023). Efficient generation of occlusion-aware multispectral and thermographic point clouds. Computers and Electronics in Agriculture, 207:107712.

Maningo, J. M. Z., Bandala, A. A., Bedruz, R. A. R., Dadios, E. P., Lacuna, R. J. N., Manalo, A. B. O., Perez, P. L. E., and Sia, N. P. C. (2020). Crack detection with 2D wall mapping for building safety inspection. In 2020 IEEE Region 10 Conference (TENCON), pages 702–707.

Mendoza, M. A. D., De La Hoz Franco, E., and Gómez, J. E. G. (2023). Technologies for the preservation of cultural heritage: A systematic review of the literature. Sustainability, 15(2).

Mouzinho, F. A. L. N. and Fukai, H. (2021). Hierarchical semantic segmentation based approach for road surface damages and markings detection on paved road. In 2021 8th International Conference on Advanced Informatics: Concepts, Theory and Applications (ICAICTA), pages 1–5.

Moyano, J., Odriozola, C. P., Nieto-Julián, J. E., Vargas, J. M., Barrera, J. A., and León, J. (2020). Bringing BIM to archaeological heritage: Interdisciplinary method/strategy and accuracy applied to a megalithic monument of the Copper Age. Journal of Cultural Heritage, 45:303–314.

Perez, H., Tah, J. H. M., and Mosavi, A. (2019). Deep learning for detecting building defects using convolutional neural networks. Sensors, 19(16).

Rocha, Mateus, Fernández, and Ferreira (2020). A Scan-to-BIM Methodology Applied to Heritage Buildings. Heritage, 3(1):47–67.

Xu, Y., Li, D., Xie, Q., Wu, Q., and Wang, J. (2021). Automatic defect detection and segmentation of tunnel surface using modified Mask R-CNN. Measurement, 178:109316.
