On Learning Bipolar Gradual Argumentation Semantics

with Neural Networks

Caren Al Anaissy

1 a

, Sandeep Suntwal

2 b

, Mihai Surdeanu

3 c

and Srdjan Vesic

4 d

1

CRIL Universit

´

e d’Artois & CNRS, Lens, France

2

University of Colorado, Colorado Springs, U.S.A.

3

University of Arizona, Tucson, U.S.A.

4

CRIL CNRS Univ. Artois, Lens, France

Keywords:

Argumentation Semantics, Bipolar Gradual Argumentation Graphs, Neural Networks.

Abstract:

Computational argumentation has evolved as a key area in artificial intelligence, used to analyze aspects of

thinking, making decisions, and conversing. As a result, it is currently employed in a variety of real-world

contexts, from legal reasoning to intelligence analysis. An argumentation framework is modelled as a graph

where the nodes represent arguments and the edges of the graph represent relations (i.e., supports, attacks)

between nodes. In this work, we investigate the ability of neural network methods to learn a gradual bipolar

argumentation semantics, which allows for both supports and attacks. We begin by calculating the acceptabil-

ity degrees for graph nodes. These scores are generated using Quantitative Argumentation Debate (QuAD)

argumentation semantics. We apply this approach to two benchmark datasets: Twelve Angry Men and Debate-

pedia. Using this data, we train and evaluate the performance of three benchmark architectures: Multilayer

Perceptron (MLP), Graph Convolution Network (GCN), and Graph Attention Network (GAT) to learn the

acceptability degree scores produced by the QuAD semantics. Our results show that these neural network

methods can learn bipolar gradual argumentation semantics. The models trained on GCN architecture per-

form better than the other two architectures underscoring the importance of modelling argumentation graphs

explicitly. Our software is publicly available at: https://github.com/clulab/icaart24-argumentation.

1 INTRODUCTION

Computational argumentation theory (CAT) (Dung,

1995; Besnard and Hunter, 2008; Rahwan and Simari,

2009; Baroni et al., 2018; Amgoud and Prade,

2009; Amgoud and Serrurier, 2008; Atkinson et al.,

2017) has emerged as a fundamental area of artifi-

cial intelligence (AI) and is used in many tasks such

as reasoning with inconsistent information, decision

making (Amgoud and Prade, 2009), and classifica-

tion (Amgoud and Serrurier, 2008) across domains

such as legal and medical (Atkinson et al., 2017).

CAT focuses on formalising and automating the

process of argumentation. It accomplishes this

by constructing argumentation graphs in which ar-

a

https://orcid.org/0000-0002-8750-1849

b

https://orcid.org/0000-0002-7746-7114

c

https://orcid.org/0000-0001-6956-8030

d

https://orcid.org/0000-0002-4382-0928

The first and the second authors contributed equally.

guments and counter-arguments are interconnected

through either attack or support edges. Within CAT,

semantics serves as a formal method for evaluating

the strength of each argument in the graph considering

its interactions with other arguments. Several bipo-

lar gradual semantics have been proposed recently:

QuAD (Baroni et al., 2015), DF-QuAD (Rago et al.,

2016), Exponent-based (Amgoud and Ben-Naim,

2018), and Quadratic Energy Model (QEM) (Potyka,

2018). This class of semantics have two key proper-

ties: (a) they model both attacks and supports edges,

and (b) they compute acceptability degrees for the

nodes in the graph, which quantify the strength of in-

dividual arguments in the argument graph. That is,

arguments are not only met with opposition (attack),

but also with reinforcement (support). For example,

QuAD semantics (Baroni et al., 2015), a state-of-the-

art semantics, enables the joint analysis and treatment

of both attack and support relations between argu-

ments. That is, it considers the initial weights of the

arguments as well as the attack and support relations

Al Anaissy, C., Suntwal, S., Surdeanu, M. and Vesic, S.

On Learning Bipolar Gradual Argumentation Semantics with Neural Networks.

DOI: 10.5220/0012448300003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 2, pages 493-499

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

493

between them in order to calculate the final accept-

ability degree for each argument.

To capture the complex landscape of real-world

discourse, in this work we focus on bipolar gradual

argumentation semantics. While bipolar gradual ar-

gumentation semantics provide the final acceptabil-

ity degree for each node in the graph, there is still

no solution to the fact that they are not guaranteed

to converge in case of cycles. This issue can be re-

solved using neural networks. Neural networks are

known for converging (Smith and Topin, 2019) how-

ever, their ability to learn argumentation semantics is

still unknown. In this work, we focus on the latter

issue.

In the space of deep learning, recent developments

in machine learning and especially in large language

models (LLMs) such as GPT-4 (OpenAI, 2023) have

generated claims of (sparks of) Artificial General In-

telligence (AGI) (Bubeck et al., 2023; Zhang et al.,

2023). These claims seem to be supported by the

observation that LLMs can solve new tasks that they

have not been exposed to during their training such as

mathematics, coding, vision, medicine, law, psychol-

ogy, without needing any domain-specific prompting

(Bubeck et al., 2023).

Our paper is motivated by an important observa-

tion that connects the two threads above: a crucial

prerequisite for claims of machine reasoning or AGI

is that the underlying neural networks understand ar-

gumentation theory semantics. Given that argumenta-

tion is an integral part of thinking, making decisions,

and conversing, how else would a machine truly rea-

son? To verify if neural networks can model CAT

semantics, in this work we train and evaluate multi-

ple neural architectures on their capacity to capture

QuAD semantics. In particular, our paper makes three

contributions:

1. We construct a dataset of argument graphs that

captures QuAD semantics. In particular, we

used the argument graphs from two datasets:

Twelve Angry Men and Debatepedia provided by

the NoDE benchmark (Cabrio and Villata, 2013;

Cabrio et al., 2013). We generated multiple ver-

sions of these graphs, where the arguments’ ini-

tial weights are drawn from four different distribu-

tions (Beta, Normal, Poisson, Uniform), and the

corresponding acceptability degrees are computed

using QuAD semantics.

2. We implement three distinct neural architectures

It is important to note that these claims are not widely

accepted due to suspicions of “contamination,” i.e., the data

used to train these LLMs often contains the tasks used dur-

ing testing (Sainz et al., 2023), which invalidates the bold

claims of AGI.

that learn to predict QuAD semantics acceptabil-

ity degrees given a graph with initial weights.

Our architectures are based on: Multilayer Per-

ceptrons (MLP), Graph Convolution Networks

(GCN) (Kipf and Welling, 2016), and Graph At-

tention Networks (GAT) (Veli

ˇ

ckovi

´

c et al., 2017).

To capture the argument graph structure, all these

architectures have access to node features that in-

clude: (a) node in-degree, (b) total degree, and

(c) the initial node weight. In addition, the two

graph-based architectures use as features: the ini-

tial node weights, edge information, and edge

weights (+1 for supports and -1 for attacks edges).

3. We evaluate the capacity of these architectures

to predict correct acceptability degrees. We con-

clude that their predictions indeed come close

to the true QuAD semantics acceptability degree

(with a mean squared error as low as 0.05). How-

ever, this conclusion has an important caveat: this

performance is only achieved when the argument

graph is explicitly modelled using a graph-based

neural architecture.

2 RELATED WORK

Kuhlmann and Thimm (Kuhlmann and Thimm, 2019)

and Craandijk and Bex (Craandijk and Bex, 2020)

trained neural networks to learn extension-based ar-

gumentation semantics. Kuhlmann and Thimm em-

ployed a conventional single forward pass classifier

to approximate credulous acceptance under the pre-

ferred semantics. Craandijk and Bex proposed an

argumentation Graph Neural Network (GNN) that

learns to predict both credulous and sceptical accep-

tance of arguments under four well-known extension-

based argumentation semantics. Our effort operates

under the same goal, i.e., training a neural network to

learn an argumentation semantics, but it extends these

works considerably. First, these two papers focused

on extension-based semantics, whereas ours focuses

on gradual semantics. This, in principle, is harder

to replicate using neural networks due to the contin-

uous values of acceptability degrees. Second, both

these papers take into account only attacks edges; our

method addresses both attacks and supports edges.

Lastly, we explore three different neural architectures

to better understand the best representation for argu-

mentation semantics.

Within CAT, bipolar gradual semantics, which

model both attacks and supports edges and com-

pute argument acceptability degrees, are well stud-

ied. The QuAD semantics was proposed by Baroni

et al. (Baroni et al., 2015) to evaluate the strength of

ICAART 2024 - 16th International Conference on Agents and Artificial Intelligence

494

answers in decision-support systems. However, this

semantics can sometimes behaviour discontinuously.

The DF-QuAD semantics was proposed by Rago et

al. (Rago et al., 2016) to fix this discontinuity prob-

lem. Amgoud and Ben-Naim (Amgoud and Ben-

Naim, 2018) introduce a set of thirteen principles for

bipolar weighted argumentation semantics. In their

work, the authors explain that both the QuAD and

the DF-QuAD semantics do not satisfy some of these

principles. This is because, as Potyka explains (Po-

tyka, 2018; Potyka, 2019), the QuAD and the DF-

QuAD semantics both have a saturation problem.

That means that once an argument has an attacker

(supporter) with a degree of 1, it becomes meaning-

less to take all the other attackers (supporters) into

account in the aggregation function. To fix this sat-

uration problem, Amgoud and Ben-Naim (Amgoud

and Ben-Naim, 2018) propose the Exponent-based

semantics that satisfies the thirteen principles pro-

posed. However, Potyka (Potyka, 2018) explains

that the Exponent-based semantics violates the dual-

ity principle, meaning that this semantics treats the at-

tack relation and the support relation in an asymmetri-

cal manner. Potyka (Potyka, 2019) also explains that

the Exponent-based semantics do not satisfy “open-

mindedness,” i.e., its ability to change the initial

weights is very limited. The Quadratic Energy Model

(QEM) proposed by Potyka (Potyka, 2018) satisfies

twelve properties among the thirteen properties pro-

posed by Amgoud and Ben-Naim (Amgoud and Ben-

Naim, 2018), the duality and the open-mindedness

properties. The MLP-Based semantics (Potyka, 2021)

consists of viewing a multilayer perceptron (MLP),

which is a feed-forward neural network, as a bipo-

lar gradual argumentation framework. This seman-

tics satisfies the same properties as the QEM, ex-

cept that the MLP-Based semantics satisfies the open-

mindedness property excluding the cases where the

arguments’ intial weight is 0 or 1. However, in this

work we focus on the QuAD semantics because it is

widely known and easily explainable. We plan to in-

vestigate other semantics in future work.

Unfortunately, QuAD and most of the followup

semantics are only defined for acyclic graphs. To de-

tail, the DF-QuAD semantics (Rago et al., 2016) and

the Exponent-based semantics (Amgoud and Ben-

Naim, 2018) are also defined for acyclic graphs.

For the semantics proposed by Mossakowski and

Neuhaus (Mossakowski and Neuhaus, 2016), the con-

vergence is not guaranteed for all cyclic graphs. The

convergence of the Quadratic Energy Model proposed

by Potyka (Potyka, 2018) for cyclic graphs is not

proven. Mossakowski and Neuhaus (Mossakowski

and Neuhaus, 2018) show that twenty-five different

semantics can be obtained by combining five aggrega-

tion functions with five influence functions. However,

only three of these semantics converge for all graphs.

Potyka (Potyka, 2019) shows that these three se-

mantics do not satisfy open-mindednes, which makes

them unsuitable for any practical application. Po-

tyka shows also that continuizing discrete models can

solve divergence problems. However, there is cur-

rently no proof of convergence for continuous models

in cyclic graphs. The MLP-Based semantics (Potyka,

2021) is fully-defined for all acyclic graphs and for

cyclic graphs with convergence conditions.

Since we use QuAD, we also focus only on acyclic

graphs in this work. We acknowledge this limitation,

and discuss future work on cyclic graphs in Section 5.

3 APPROACH

3.1 Data

We performed experiments using datasets created us-

ing argument graphs from two benchmarks: Twelve

Angry Men and Debatepedia provided by the NoDE

benchmark (Cabrio and Villata, 2013; Cabrio et al.,

2013). Note that all the graphs in those datasets are

acyclic. The two datasets used in our study are de-

scribed next.

Twelve Angry Men Dataset. The script of “Twelve

Angry Men” is the first natural language argument

benchmark in our experiments. This dataset contains

three acts (Act 1, Act 2 and Act 3). Every argument

in each act links to at least another dialogue argument

it supports or attacks within the act. The benchmark

contains three graphs (one for each act), where each

argument represents a node and is connected to other

nodes that it supports or attacks. The three graphs

contain 80 edges and 83 nodes in total. We split the

dataset as follows: the graphs from Act 1 and Act 2

(72 nodes) were the training and validation partitions,

while the graph in Act 3 (11 nodes) was the test par-

tition.

Debatepedia and ProCon Dataset. This dataset

consists of two encyclopedia of pro and con argu-

ments. The dataset was manually constructed by

selecting a set of topics of Debatepedia/ProCon de-

bates. Here, each debate represents one topic. Within

each topic, an argument is constructed by extract-

ing user opinion. In this dataset, as the attack and

support edges are represented as binary relations,

On Learning Bipolar Gradual Argumentation Semantics with Neural Networks

495

the arguments are connected with the starting argu-

ment or another argument within the same topic to

which the newest argument refers. A chronologi-

cal order is maintained to ensure a dialogue struc-

ture. This dataset contains 20 debates across differ-

ent topics.The train and validation partition contain

14 graphs; the test partition contains six graphs.

3.2 Method

The QuAD semantics requires each argument to have

an initial weight that captures its intrinsic value. How-

ever, the datasets do not provide such information. To

address this limitation, we generated initial weights

for each node in our dataset using four probabil-

ity distributions: Beta, Normal, Poisson, and Uni-

form. Next, we computed the acceptability degree

score for each node using the QuAD semantics (Ba-

roni et al., 2015). Finally, we used the acceptability

degree scores as the gold degrees to train, validate,

and test three neural network architectures: a multi-

layer perceptron (MLP), a graph convolution network

(GCN) (Kipf and Welling, 2016), and a graph atten-

tion network (GAT) (Veli

ˇ

ckovi

´

c et al., 2017). These

steps were repeated for 100 different initial weights

for each graph and initial weight distribution.

3.2.1 Generating Argumentation Theory-Based

Baselines

The notation Sup(a) (resp. Att(a)) stands for the set

of supporters (resp. attackers) of argument a, and the

notation w(a) stands for the initial weight of a. deg(a)

stands for the acceptability degree of a. We provide a

short explanation of how the QuAD semantics works.

Quantitative Argumentation Debate. The QuAD

semantics determines the strength, i.e., the acceptabil-

ity degree of each argument, by considering its initial

weight and the aggregated strengths of its attackers

and supporters. The functions f

a

(a) and f

s

(a) re-

cursively aggregate the strengths of an argument a’s

attackers and supporters respectively with a’s initial

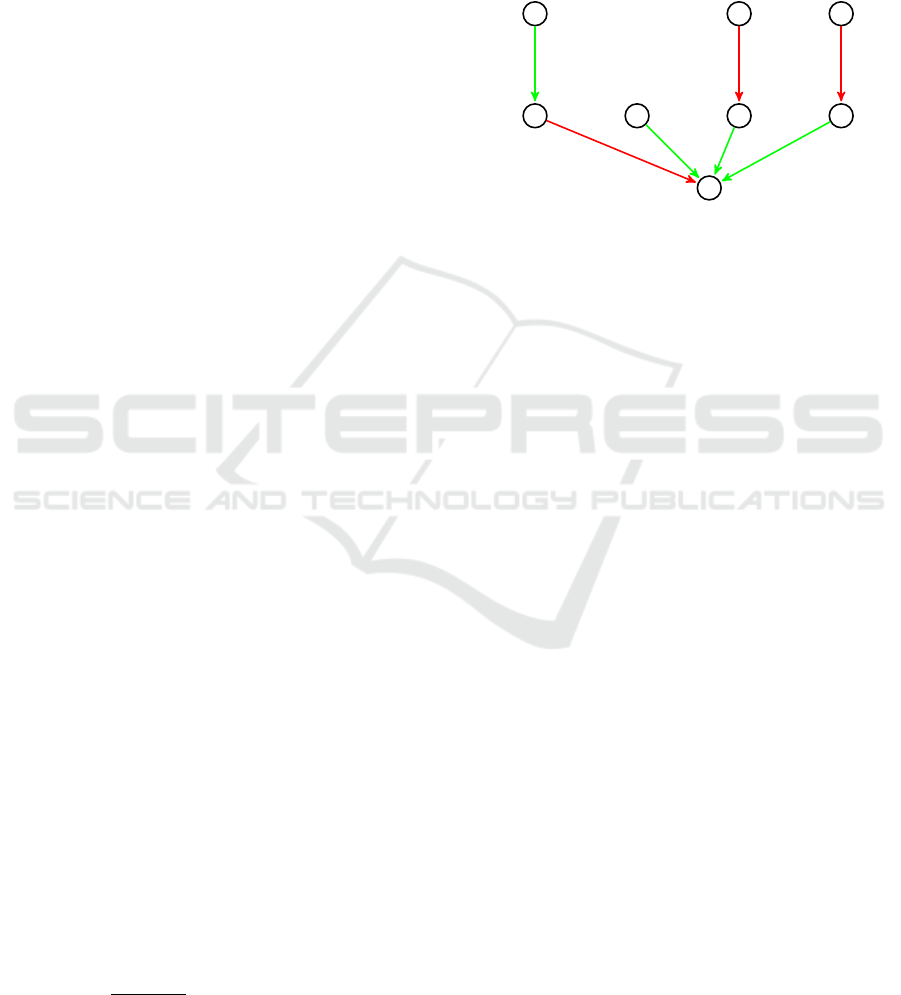

weight. Figure 1 illustrates the QuAD semantics ap-

plied on a bipolar argumentation graph.

Then, the acceptability degree of a is defined as:

deg(a) =

f

a

(a) if Sup(a) =

/

0, Att(a) ̸=

/

0

f

s

(a) if Sup(a) ̸=

/

0, Att(a) =

/

0

w(a) if Sup(a) =

/

0, Att(a) =

/

0

f

a

(a)+ f

s

(a)

2

otherwise

(1)

where

f

a

(a) = w(a) ·

∏

b∈Att(a)

(1 − deg(b))

and

f

s

(a) = 1 − (1 − w(a)) ·

∏

b∈Sup(a)

(1 − deg(b))

4

0.1

0.1

3

0.5

0.55

2

0.25

0.25

5

0.53

0.053

7

0.75

0.6075

6

0.9

0.9

8

0.19

0.19

1

0.3

0.4699

Figure 1: Example of a bipolar argumentation graph ex-

tracted from the Debatepedia dataset (Cabrio and Villata,

2014), for the debate called Sobrietytest. The red arrows

represent attacks while the green arrows represent supports.

The first row of numbers next to the arguments represent

their initial weights which are assigned randomly (drawn

from a given distribution), while the second row of num-

bers represent their acceptability degrees calculated using

the QuAD semantics.

3.2.2 Learning Argumentation Semantics

To investigate the capacity of neural networks to learn

QuAD semantics, we explore three different neural

network architectures. Note that the neural network

architectures typically employed for learning struc-

tured data are not suited for resolving non-euclidean

input data such as graphs. Due to the variable size

and shape of the input graphs, it is difficult to pro-

cess them using conventional data structures such as

adjacency matrices (Veli

ˇ

ckovi

´

c et al., 2017). In addi-

tion, adjacency matrices are dependent on the order in

which the nodes appear, so they are not node invari-

ant. To avoid the limitations of existing algorithms,

we transform the arguments and debates into neu-

ral network graph structures, and learn the semantics

using Graph Neural Networks (GNNs) (Gori et al.,

2005; Scarselli et al., 2008). GNNs aim to utilize con-

ventional deep learning principles for non-euclidean

data. GNNs achieve this by allowing for message

passing between nodes (which represent arguments)

and edges (which represent relations such as support

or attack, and have a weight) through convolution op-

erations. The equation for this operation is as follows:

n

(k)

i

= γ

(k)

n

(k−1)

i

, f

j∈N (i)

φ

(k)

n

(k−1)

i

, n

(k−1)

j

, e

j,i

(2)

ICAART 2024 - 16th International Conference on Agents and Artificial Intelligence

496

Table 1: Results for Twelve Angry Men and Debatepedia datasets using QuAD. The top row indicates the neural network

architecture. The first column represents the distribution from which the initial random weights were assigned. These results

are means across 100 different initial weights for each node. MSE represents the final mean squared error. Since MSE is an

error based statistic, a lower value is better. RMSE represents the relative MSE. The MSE results highlighted in bold indicate

the best MSE results among the three neural networks.The Kendall Tau correlation metric measures the correlation between

the node ranks produced by a given neural network and the ranks produced by QuAD semantics. A statistically significant

Kendall’s τ represents that rankings produced by NNs and QuAD semantics were similar. *, **, *** indicate statistical

significance (≤.05, ≤.005, ≤.0005).

Model MLP GCN GNN-GAT

MSE RMSE Kendall Tau MSE RMSE Kendall Tau MSE RMSE Kendall Tau

Twelve Angry Men Dataset

Beta 0.18 3.33 -0.23 0.09 1.67 0.48 0.14 2.55 0.23

Normal 0.15 2.84 -0.18 0.09 1.64 0.41 0.11 2.08 0.26

Poisson 0.2 inf -0.44 0.14 inf 0.39 0.18 inf 0.25

Uniform 0.14 2.48 -0.19 0.07 1.23 0.47 0.1 1.78 0.22

Debatepedia Dataset

Beta 0.36 0.67 0.36 0.07 0.12 0.72*** 0.1 0.19 0.69

Normal 0.34 0.64 0.39 0.06 0.11 0.73*** 0.08 0.16 0.65

Poisson 0.29 1.06 0.2 0.08 0.27 0.62*** 0.14 0.5 0.76

Uniform 0.32 0.56 0.33 0.05 0.08 0.76*** 0.07 0.12 0.64

where n

(k−1)

i

∈ R

P

represents node i

′

s node features

in layer k − 1, e

j,i

∈ R

Q

represents edge features from

node j to node i, informing us about the importance

of each neighbor. f represents a permutation invariant

function (e.g., sum, avg) to ensure that the methods

make no assumptions about the spatial relationships

between node features during convolutions, φ denotes

a neural network that constructs the message to node i

for each edge j, i, and γ denotes a neural network that

takes the output of this aggregation to update the ac-

ceptability degree scores for node i. The final output

produces the updated acceptability degree scores for

each node in the graph.

We conducted experiments using three architec-

tures: MLP, GCN, and GAT. MLP is a feed-forward

neural network architecture suitable for some struc-

ture data but not graphs. This architecture serves as

our first baseline. GCNs (Kipf and Welling, 2016)

extend the concept of convolution, which is widely

used in convolutional neural networks (CNNs) (Le-

Cun et al., 1998) for image or text processing, to

the graph domain. In CNNs, a convolutional layer

applies filters over local patches of an input image

(or local textual context) to extract features. Simi-

larly, in GCNs, a convolutional layer processes nodes

and their neighboring nodes to aggregate informa-

tion. GATs, our third architecture, use more com-

plex attention-based architectures to explore the en-

tire neighborhood of a node in an order invariant

way (Veli

ˇ

ckovi

´

c et al., 2017). To capture the graph

structure for the MLP architecture, we created node

features that contain: (a) node in-degree, (b) total

degree, and (c) the initial node weight. These fea-

tures help overcome some limitations such as uniform

structure for each input. For the two graph-based

architectures, we used as features: the initial node

weights, edge information, and edge weights (+1 for

supports and -1 for attacks edges). All neural methods

were trained using the mean-squared error (MSE) loss

on the corresponding training partitions. We tuned the

early stopping hyperparameter on the validation par-

tition.

4 RESULTS AND DISCUSSION

Table 1 presents the results from our study, in which

we evaluate the three proposed neural architectures

on the graphs introduced in previous section. This

table lists results across the four distributions for ini-

tial argument weights. To evaluate the performance

of the neural architectures we used mean squared er-

ror (MSE) between the predicted and the gold accept-

ability degrees. We also used the Kendall rank cor-

relation coefficient metric (also referred as Kendall’s

τ) (Kendall, 1938), to measure the correlation be-

tween the node ranks produced by a given neural net-

work and the ranks produced by QuAD semantics.

Kendall’s tau computes the difference between the

number of matching and non-matching observation

pairs and divides it by the total number of observa-

tion pairs. A value of −1 indicates complete negative

association, 1 indicates complete positive association,

and 0 indicates no association between the variables.

The underlying hypothesis tests if the two lists are

identical. A p-value ≤.05 and τ value > 0 signifies

a statistically significant similarity between the two

rankings. Here, a statistically significant Kendall’s τ

score indicates that the acceptability score rankings

produced by QuAD semantics have a high correlation

with the acceptability degree scores produced by the

neural networks.

On Learning Bipolar Gradual Argumentation Semantics with Neural Networks

497

Formally, Kendall’s τ is calculated as follows:

τ =

2

k(k − 1)

∑

i< j

sgn(x

i

− x

j

)sgn(y

i

− y

j

)

where k is the number of observations, x

i

and y

i

are

the rankings of the i

th

observation for the two vari-

ables being compared, and sgn is the sign function

that returns 1 if the argument is positive, -1 if nega-

tive, and 0 if zero. We draw the following observa-

tions from this experiment:

• First, our experiments indicate that neural net-

works can indeed learn to predict the acceptabil-

ity degrees computed by QuAD semantics. To our

knowledge, this is the first work to show that neu-

ral networks can learn to replicate bipolar gradual

CAT semantics. This is an exciting result consid-

ering the complexity of the task.

• Second, the best results by far are obtained by

neural architectures that model graphs explicitly

(GCN and GNN-GAT), which highlights the im-

portance of modelling argument interactions. For

example, the MSEs measured for GCN range be-

tween 0.05 and 0.09 for the two datasets and

the four probability distributions used for initial

weights. In contrast, the MSEs measured for the

MLP, which uses a limited number of features to

summarize the graph structure, range from 0.14

to 0.36 depending on the dataset and probability

distribution. The latter results are not only con-

siderably worse, but also show extreme variation

between datasets.

• Third, between the two graph-based architectures,

GCN performs consistently better than GNN-

GAT. For example, for the Debatepedia Dataset,

the MSE measured for GCN ranges from 0.05 to

0.08, whereas the MSE for GNN-GAT is approx-

imately twice that ranging from 0.08 to 0.14. We

consider this another positive result, which indi-

cates that, as long as the neural architectures cap-

ture the argument graph structures, simplicity is

better. This is an important observation for the

deployment of these neural architectures in real-

world software applications.

• Lastly, if we consider the Kendall Tau scores cal-

culated between the ranking produced by GCN

and the ranking produced by QuAD semantics, we

can observe a moderate positive correlation be-

tween the two rankings for the Twelve Angry Men

dataset. We can also observe a strong positive cor-

relation between the two rankings for the Debate-

pedia dataset, which is statistically significant at a

very low p-value threshold. These results show

us that GCN is not only capturing the general

trends in the data (as indicated by low MSE) but

is also performing well in maintaining the ranking

of the values, therefore producing consistent rank-

ing. These results are particularly valuable since

they give us insights about the capability of GCN

to effectively predict the ranking among the nodes

in a bipolar gradual argumentation graph.

5 CONCLUSION AND FUTURE

WORK

Argumentation continues to grow as a key area in

AI for several tasks that require decision-making and

communication. As such, we argue that understand-

ing argumentation should be a requirement for neural

networks that implement machine reasoning. In this

study, we investigated the ability of neural networks

to learn gradual bipolar argumentation semantics. We

conducted several experiments to train and evaluate

neural networks’ ability to learn the QuAD argumen-

tation semantics. Our findings indicate that argu-

mentation semantics can be learned by neural net-

works successfully. One important observation is that

the best results are consistently produced by GCNs,

a graph-based neural architecture, which underlines

that the argument graph structure must be explicitly

modelled. All in all, this paper is the first to show

that neural architectures can learn gradual bipolar ar-

gumentation semantics.

One limitation of this work is that all the data used

for training and testing the proposed neural architec-

tures consisted only of acyclic graphs, where QuAD

semantics is guaranteed to converge. In the future, we

will expand our work to include cyclic graphs where

the QuAD (or other) semantics converge. To the best

of our knowledge, there does not exist a dataset or

benchmark that contains a significant number of real-

world bipolar weighted cyclic graphs. We are cur-

rently working on collecting cyclic graphs from real

online debates and verify in which situations QuAD

semantics converges. Other future work will include

training neural networks on other bipolar weighted se-

mantics, based on the behaviour desired in a specified

context.

ACKNOWLEDGEMENTS

This work was supported by the International Emerg-

ing Action (IEA) project RHAPSSODY and joint

PhD Program SURFING, both funded by the French

National Center for Scientific Research (CNRS) and

ICAART 2024 - 16th International Conference on Agents and Artificial Intelligence

498

the University of Arizona. Caren Al Anaissy and

Srdjan Vesic were also supported by the project AG-

GREEY ANR22-CE23-0005 from the French Na-

tional Research Agency (ANR).

REFERENCES

Amgoud, L. and Ben-Naim, J. (2018). Evaluation of argu-

ments in weighted bipolar graphs. International Jour-

nal of Approximate Reasoning, 99:39–55.

Amgoud, L. and Prade, H. (2009). Using arguments for

making and explaining decisions. Artificial Intelli-

gence, 173:413–436.

Amgoud, L. and Serrurier, M. (2008). Agents that argue

and explain classifications. Autonomous Agents and

Multi-Agent Systems, 16(2):187–209.

Atkinson, K., Baroni, P., Giacomin, M., Hunter, A.,

Prakken, H., Reed, C., Simari, G. R., Thimm, M., and

Villata, S. (2017). Towards artificial argumentation.

AI Magazine, 38(3):25–36.

Baroni, P., Gabbay, D., Giacomin, M., and van der Torre, L.,

editors (2018). Handbook of Formal Argumentation.

College Publications.

Baroni, P., Romano, M., Toni, F., Aurisicchio, M., and

Bertanza, G. (2015). Automatic evaluation of design

alternatives with quantitative argumentation. Argu-

ment & Computation, 6(1):24–49.

Besnard, P. and Hunter, A. (2008). Elements of Argumenta-

tion. MIT Press.

Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J.,

Horvitz, E., Kamar, E., Lee, P., Lee, Y. T., Li, Y.,

Lundberg, S., et al. (2023). Sparks of artificial gen-

eral intelligence: Early experiments with gpt-4. arXiv

preprint arXiv:2303.12712.

Cabrio, E. and Villata, S. (2013). A natural language bipo-

lar argumentation approach to support users in on-

line debate interactions. Argument & Computation,

4(3):209–230.

Cabrio, E. and Villata, S. (2014). Node: A benchmark of

natural language arguments. In Computational Mod-

els of Argument, pages 449–450. IOS Press.

Cabrio, E., Villata, S., and Gandon, F. (2013). A sup-

port framework for argumentative discussions man-

agement in the web. In The Semantic Web: Seman-

tics and Big Data: 10th International Conference,

ESWC 2013, Montpellier, France, May 26-30, 2013.

Proceedings 10, pages 412–426. Springer.

Craandijk, D. and Bex, F. (2020). Deep learning for abstract

argumentation semantics. In Bessiere, C., editor, Pro-

ceedings of the Twenty-Ninth International Joint Con-

ference on Artificial Intelligence, IJCAI 2020, pages

1667–1673. ijcai.org.

Dung, P. M. (1995). On the Acceptability of Arguments and

its Fundamental Role in Non-Monotonic Reasoning,

Logic Programming and n-Person Games. Artificial

Intelligence, 77:321–357.

Gori, M., Monfardini, G., and Scarselli, F. (2005). A new

model for learning in graph domains. In Proceedings.

2005 IEEE international joint conference on neural

networks, volume 2, pages 729–734.

Kendall, M. G. (1938). A new measure of rank correlation.

Biometrika, 30(1/2):81–93.

Kipf, T. N. and Welling, M. (2016). Semi-supervised clas-

sification with graph convolutional networks. arXiv

preprint arXiv:1609.02907.

Kuhlmann, I. and Thimm, M. (2019). Using graph convo-

lutional networks for approximate reasoning with ab-

stract argumentation frameworks: A feasibility study.

In Scalable Uncertainty Management: 13th Interna-

tional Conference, SUM 2019, Compi

`

egne, France,

December 16–18, 2019, Proceedings, pages 24–37.

Springer.

LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. (1998).

Gradient-based learning applied to document recogni-

tion. Proceedings of the IEEE, 86(11):2278–2324.

Mossakowski, T. and Neuhaus, F. (2016). Bipolar

weighted argumentation graphs. arXiv preprint

arXiv:1611.08572.

Mossakowski, T. and Neuhaus, F. (2018). Modular seman-

tics and characteristics for bipolar weighted argumen-

tation graphs. arXiv preprint arXiv:1807.06685.

OpenAI (2023). Gpt-4 technical report.

Potyka, N. (2018). Continuous dynamical systems for

weighted bipolar argumentation. In KR, pages 148–

157.

Potyka, N. (2019). Extending modular semantics for bipolar

weighted argumentation. In KI 2019: Advances in Ar-

tificial Intelligence: 42nd German Conference on AI,

Kassel, Germany, September 23–26, 2019, Proceed-

ings 42, pages 273–276. Springer.

Potyka, N. (2021). Interpreting neural networks as quanti-

tative argumentation frameworks. In Proceedings of

the AAAI Conference on Artificial Intelligence, vol-

ume 35, pages 6463–6470.

Rago, A., Toni, F., Aurisicchio, M., Baroni, P., et al. (2016).

Discontinuity-free decision support with quantitative

argumentation debates. KR, 16:63–73.

Rahwan, I. and Simari, G. R., editors (2009). Argumenta-

tion in Artificial Intelligence. Springer.

Sainz, O., Campos, J. A., Garc

´

ıa-Ferrero, I., Etxaniz, J.,

and Agirre, E. (2023). Did chatgpt cheat on your test?

Last accessed: 18th July, 2023.

Scarselli, F., Gori, M., Tsoi, A. C., Hagenbuchner, M.,

and Monfardini, G. (2008). The graph neural net-

work model. IEEE transactions on neural networks,

20(1):61–80.

Smith, L. N. and Topin, N. (2019). Super-convergence:

Very fast training of neural networks using large learn-

ing rates. In Artificial intelligence and machine learn-

ing for multi-domain operations applications, volume

11006, pages 369–386. SPIE.

Veli

ˇ

ckovi

´

c, P., Cucurull, G., Casanova, A., Romero, A., Lio,

P., and Bengio, Y. (2017). Graph attention networks.

arXiv preprint arXiv:1710.10903.

Zhang, C., Zhang, C., Li, C., Qiao, Y., Zheng, S., Dam,

S. K., Zhang, M., Kim, J. U., Kim, S. T., Choi, J.,

et al. (2023). One small step for generative ai, one

giant leap for agi: A complete survey on chatgpt in

aigc era. arXiv preprint arXiv:2304.06488.

On Learning Bipolar Gradual Argumentation Semantics with Neural Networks

499