Path of Solutions for Fused Lasso Problems

Torpong Nitayanont^a, Cheng Lu^b and Dorit S. Hochbaum^c

Department of Industrial Engineering and Operations Research, University of California, Berkeley, Berkeley, CA, U.S.A.

Keywords:

Fused Lasso, Path of Solutions, Minimum Cut, Hyperparameter Selection, Signal Processing.

Abstract:

In a fused lasso problem on sequential data, the objective consists of two competing terms: the fidelity term and the regularization term. The two terms are often balanced with a tradeoff parameter, the value of which affects the solution, yet the extent of the effect is not known a priori. To address this, there is interest in generating the path of solutions, which maps values of this parameter to a solution. Even though the parameter can take infinitely many values, we show that for the fused lasso problem with convex piecewise linear fidelity functions, the number of different solutions is bounded by $n^2 q$, where $n$ is the number of variables and $q$ is the number of breakpoints in the fidelity functions. Our path of solutions algorithm, PoS, is based on an efficient minimum cut technique. We compare our PoS algorithm with a state-of-the-art solver, Gurobi, on synthetic data. The results show that PoS generates all solutions whereas Gurobi identifies fewer than 22% of the solutions in comparable running time. Even when its time limit is increased to hundreds of times that of PoS, Gurobi still cannot generate all the solutions.

1 INTRODUCTION

We consider here the class of convex piecewise linear fused lasso problems:

$$\text{(PFL)} \quad \min_{x_1,\ldots,x_n} \; \sum_{i=1}^{n} f_i^{pl}(x_i) + \lambda \sum_{i=1}^{n-1} |x_i - x_{i+1}| \tag{1}$$

where each fidelity function $f_i^{pl}(x_i)$ is a convex piecewise linear function and $\lambda$ is a parameter that controls the tradeoff between the fidelity term and the regularization term, which penalizes the differences between consecutive variables.

Applications of problems in the class of PFL include the study of DNA copy number gains and losses on chromosomes using Comparative Genomic Hybridization (CGH) (Eilers and De Menezes, 2005). In CGH, each piecewise linear function takes the form $\tau (x_i - a_i)^+ + (1 - \tau)(a_i - x_i)^+$ for a given $\tau \in [0,1]$ and data point $a_i$, and the problem is called quantile regression. In signal processing (Storath et al., 2016), each piecewise linear function is $f_i^{pl}(x_i) = w_i |x_i - a_i|$ for a given weight $w_i$ and data point $a_i$. Each piecewise linear function in these examples contains exactly one breakpoint.
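As a small illustration of objective (1) with the two fidelity forms just mentioned, the following Python sketch evaluates the PFL objective for a candidate solution. The function names (`quantile_fidelity`, `weighted_abs_fidelity`, `pfl_objective`) are hypothetical helpers introduced here for illustration only; they are not part of the paper.

```python
from typing import Callable, Sequence


def quantile_fidelity(a: float, tau: float) -> Callable[[float], float]:
    """CGH / quantile-regression form: tau*(x - a)^+ + (1 - tau)*(a - x)^+."""
    return lambda x: tau * max(x - a, 0.0) + (1.0 - tau) * max(a - x, 0.0)


def weighted_abs_fidelity(a: float, w: float) -> Callable[[float], float]:
    """Signal-processing form: w * |x - a|."""
    return lambda x: w * abs(x - a)


def pfl_objective(x: Sequence[float],
                  fidelity: Sequence[Callable[[float], float]],
                  lam: float) -> float:
    """Value of objective (1): fidelity term plus lam times the fused penalty."""
    fit = sum(f(xi) for f, xi in zip(fidelity, x))
    fused = sum(abs(x[i] - x[i + 1]) for i in range(len(x) - 1))
    return fit + lam * fused
```

For example, with data points (1, 5, 2), unit weights and $\lambda = 0.5$, the candidate that copies the data has zero fidelity cost but pays for every jump, while a constant candidate pays only fidelity cost.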

^a https://orcid.org/0009-0002-6976-1951

^b https://orcid.org/0000-0001-5137-7199

^c https://orcid.org/0000-0002-2498-0512

Clearly, the optimal solution of PFL (1) varies with the tradeoff parameter $\lambda$. In the works mentioned above, the value of $\lambda$ is either handpicked (Storath et al., 2016) or selected via cross validation (Eilers and De Menezes, 2005). However, $\lambda$ values outside the search range that lead to desirable results could go unnoticed, and the resolution of the cross validation grid search could always be made finer. Our goal is to find the path of solutions to PFL for all $\lambda \in [0,\infty)$. That is, we want to identify a set of ranges or intervals of $\lambda$ (we call them $\lambda$-ranges) that span $[0,\infty)$, i.e. $\Lambda = \{[0,\lambda_1), [\lambda_1,\lambda_2), \ldots, [\lambda_m,\infty)\}$, such that the solutions to PFL for any two values of $\lambda$ from the same range are identical.

In addition to identifying the set of $\lambda$-ranges, we also find the optimal solution for each $\lambda$-range, $\{x^*([0,\lambda_1)), x^*([\lambda_1,\lambda_2)), \ldots, x^*([\lambda_m,\infty))\}$. Note that we use the notation $x^*(\lambda)$ to refer to the solution of PFL for a specific value of $\lambda$. The notation $x^*([\lambda_1,\lambda_2))$, for example, refers to the optimal solution to PFL for any value of $\lambda$ in the range $[\lambda_1,\lambda_2)$. It also implies that the solutions for all values of $\lambda$ in this range are identical.

Nitayanont, T., Lu, C. and Hochbaum, D.

Path of Solutions for Fused Lasso Problems.

DOI: 10.5220/0012433200003654

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 13th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2024), pages 107-118

ISBN: 978-989-758-684-2; ISSN: 2184-4313

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The relevant literature on finding the path of solutions includes the work on the fused-lasso signal approximator (FLSA) by Hoefling (2010). The problem is defined as follows:

$$\min_{x_1,\ldots,x_n} \; \frac{1}{2} \sum_{i=1}^{n} (x_i - a_i)^2 + \lambda_1 \sum_{i=1}^{n} |x_i| + \lambda_2 \sum_{i=1}^{n-1} |x_i - x_{i+1}|$$

Hoefling (2010) solves for the optimal solution $x^*(\lambda_1,\lambda_2)$ for all $\lambda_1$ and $\lambda_2$ by first finding the solution for $\lambda_1 = 0$ in terms of $\lambda_2$, $x^*(0,\lambda_2)$, in $O(n \log n)$. Then, $x^*(\lambda_1,\lambda_2)$ is computed as a function of $x^*(0,\lambda_2)$. Tibshirani (2011) presents path of solutions algorithms for a generalized lasso problem, which is formulated as

$$\min_{x \in \mathbb{R}^n} \; \frac{1}{2} \|b - Ax\|_2^2 + \lambda \|Dx\|_1$$

for different configurations of $A$ and $D$, such as when $D$ is an arbitrary matrix and $A = I$. Note that the regularization term of this problem can be made equivalent to our regularization term, $\sum_{i=1}^{n-1} |x_i - x_{i+1}|$, when $D$ is set to an appropriate matrix.

Another work on the path of solutions is that of Wang et al. (2006), which solves the regularized least absolute deviation regression problem (RLAD):

$$\min_{x \in \mathbb{R}^p} \; \|y - Ax\|_1 + \lambda \|x\|_1$$

given $A \in \mathbb{R}^{n \times p}$ and $y \in \mathbb{R}^n$, for every possible value of $\lambda$ in $O(\log(np) \min(p,n)^2)$. They applied their method to the image reconstruction problem.

The generalized isotonic median regression (GIMR) problem, which has the following formulation:

$$\text{(GIMR)} \quad \min_{x_1,\ldots,x_n} \; \sum_{i=1}^{n} f_i^{pl}(x_i) + \sum_{i=1}^{n-1} d_{i,i+1} (x_i - x_{i+1})^+ + \sum_{i=1}^{n-1} d_{i+1,i} (x_{i+1} - x_i)^+ \tag{2}$$

for a given set of $\{d_{i,i+1}, d_{i+1,i}\}_{i=1}^{n-1}$, is solved by an algorithm, called the HL-algorithm hereafter, in the work of Hochbaum and Lu (2017). The HL-algorithm solves GIMR by formulating the problem as a sequence of minimum cut problems, which can be solved efficiently in $O(q \log n)$, where $q$ is the total number of breakpoints in the fidelity functions. This is the best complexity for solving GIMR to date. PFL is a special case of GIMR and can therefore be solved, for a fixed $\lambda$, using the HL-algorithm. The path of solutions algorithm proposed here, which solves PFL for all $\lambda \ge 0$, is an extension of the HL-algorithm. We introduce here the notation and concepts that will be used throughout the paper.

Notation and Preliminaries. GIMR (2) can be viewed as defined on a bi-directional path graph $G = (V,A)$ with node set $V = \{1,2,\ldots,n\}$ and arc set $A = \{(i,i+1),(i+1,i)\}_{i=1,\ldots,n-1}$. Each node $i \in V$ corresponds to the variable $x_i$. Let the node interval $[i,j]$ in the graph $G$ for $i \le j$ be the subset of consecutive nodes in $V$, $\{i, i+1, \ldots, j-1, j\}$.

We define an associated graph $G_{st}$ with the set of vertices $V_{st} = V \cup \{s,t\}$ and the set of arcs $A_{st} = A \cup A_s \cup A_t$. The two appended nodes $s$ and $t$ are called the source and the sink node, respectively. $A_s = \{(s,i) : i \in V\}$ and $A_t = \{(i,t) : i \in V\}$ are the sets of source adjacent arcs and sink adjacent arcs. Each arc $(i,j) \in A_{st}$ has an associated nonnegative capacity $c_{i,j}$.

An $s,t$-cut is a partition of $V_{st}$, $(\{s\} \cup S, T \cup \{t\})$, where $T = \bar{S} = V \setminus S$. For simplicity, we refer to an $s,t$-cut partition as $(S,T)$. We refer to $S$ as the source set of the cut, excluding $s$, and $T$ as the sink set, excluding $t$. For each node $i \in V$, we define its status in graph $G_{st}$ as $status(i) = s$ if $i \in S$ (referred to as an $s$-node), and $status(i) = t$ otherwise, i.e. if $i \in T$ (referred to as a $t$-node).

The capacity of a cut $(S,T)$ is defined as $C(\{s\} \cup S, T \cup \{t\})$, where $C(V_1,V_2) = \sum_{i \in V_1, j \in V_2} c_{i,j}$. A minimum $s,t$-cut in $G_{st}$ is an $s,t$-cut $(S,T)$ that minimizes $C(\{s\} \cup S, T \cup \{t\})$. Hereafter, any reference to a minimum cut is to the unique minimum $s,t$-cut with the maximal source set, that is, the source set that is not contained in any other source set of a minimum cut in case there are multiple minimum cuts.

A convex piecewise linear function $f_i^{pl}(x_i)$ is specified by its ascending list of $q_i$ breakpoints, $a_{i,1} < a_{i,2} < \ldots < a_{i,q_i}$, and the slopes of the $q_i + 1$ linear pieces between every two adjacent breakpoints, denoted by $w_{i,0} < w_{i,1} < \ldots < w_{i,q_i}$. To define a breakpoint more formally, each convex piecewise linear function $f_i^{pl}(x_i)$ can be viewed as the maximum of multiple affine functions, which are sorted according to their slopes. We call the $x$-coordinate of the intersection of two consecutive affine functions a breakpoint. Let the sorted list of the union of the $q$ breakpoints of all the $n$ convex piecewise linear functions be $a_{i_1,j_1} < a_{i_2,j_2} < \ldots < a_{i_q,j_q}$ (w.l.o.g. we may assume that the $n$ sets of breakpoints are disjoint (Hochbaum and Lu, 2017)), where $a_{i_k,j_k}$, the $k$-th breakpoint in the sorted list, is the breakpoint between the $(j_k - 1)$-th and the $j_k$-th linear pieces of $f_{i_k}^{pl}(x_{i_k})$.
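The merged breakpoint list can be pictured with a short Python sketch. The helper name `sorted_breakpoints` and the list-of-lists input layout are assumptions made for this illustration; each output triple carries the breakpoint value together with its function index $i_k$ and its position $j_k$ within that function.

```python
from typing import List, Sequence, Tuple


def sorted_breakpoints(
    breakpoints: Sequence[Sequence[float]],
) -> List[Tuple[float, int, int]]:
    """Merge the per-function breakpoint lists into one ascending list.

    breakpoints[i - 1] holds a_{i,1} < ... < a_{i,q_i} for function i.
    Each returned triple is (a_{i_k, j_k}, i_k, j_k) with 1-based i_k and j_k.
    The sets of breakpoints are assumed disjoint, as in the text.
    """
    merged = [(a, i, j)
              for i, bps in enumerate(breakpoints, start=1)
              for j, a in enumerate(bps, start=1)]
    return sorted(merged)
```

With three functions whose breakpoints are (1, 4), (2.5) and (0.5, 3), the first entry of the merged list belongs to the third function and the last entry to the first function.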

Overview of Paper. Section 2 describes the algorithm that solves PFL (1) for a fixed value of $\lambda$, the HL-algorithm. In Section 3, we describe the path of solutions algorithm, or PoS, for the $\ell_1$-fidelity fused lasso problem. In Section 4, we generalize it to PFL with convex piecewise linear fidelity functions. We provide the bound on the number of different solutions of PFL across all nonnegative $\lambda$ values, as well as the time complexity of the algorithm, in Section 5. Lastly, we conclude with experimental results in Section 6.


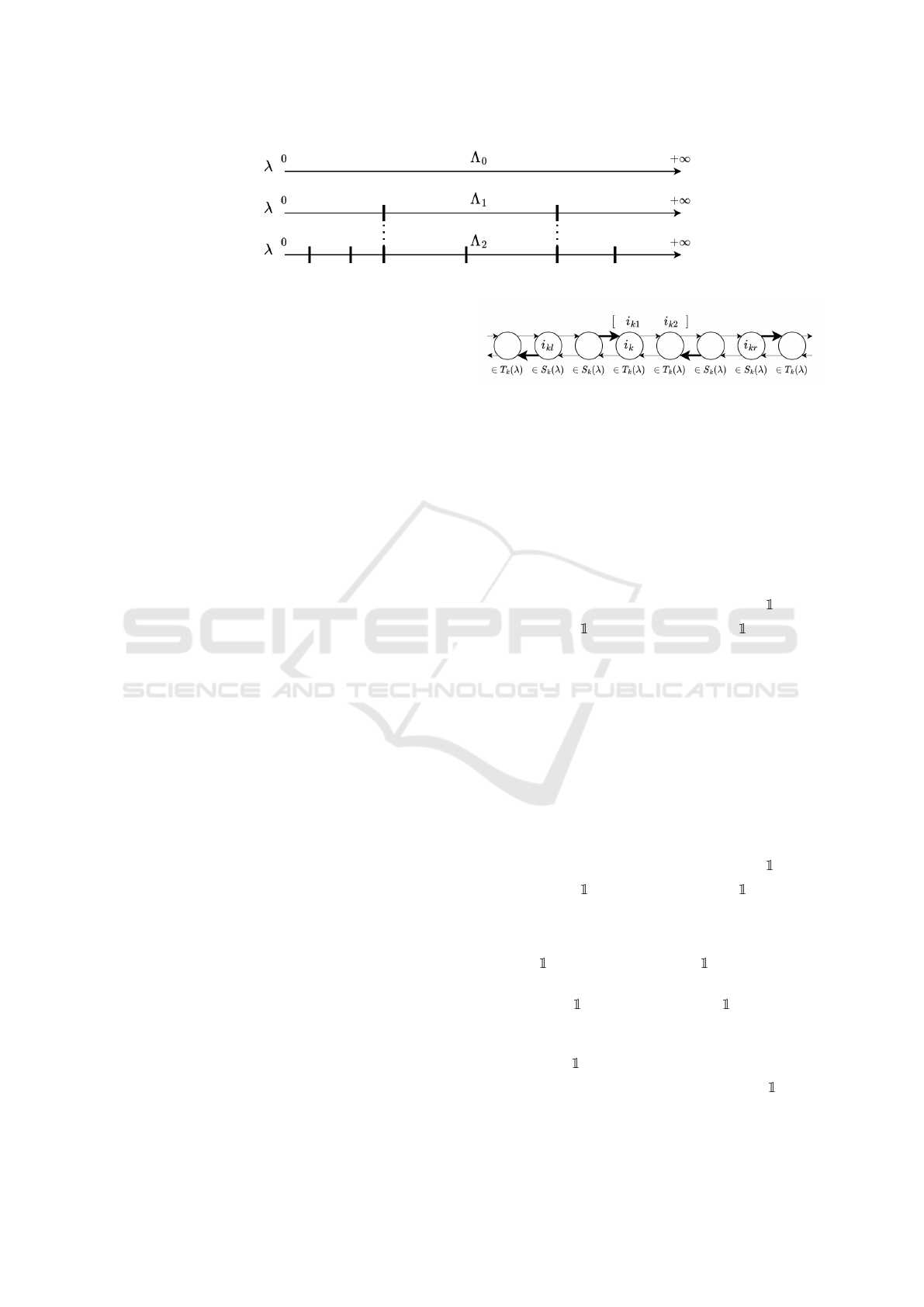

Figure 1: $G_{st}(\alpha)$: For node $i$, if its right sub-gradient at $\alpha$, $(f_i^{pl})'(\alpha)$, is negative, $s$ is connected to $i$ with an arc of capacity $c_{s,i} = -(f_i^{pl})'(\alpha)$. Otherwise, $i$ is connected to $t$ with an arc of capacity $c_{i,t} = (f_i^{pl})'(\alpha)$.

2 ALGORITHM THAT SOLVES FUSED LASSO FOR A FIXED VALUE OF λ

The PFL problem (1) that we consider in this work can be solved for a fixed $\lambda$ using the HL-algorithm, introduced by Hochbaum and Lu (2017). The HL-algorithm solves problems in the class of generalized isotonic median regression, or GIMR (2). PFL is the special case of GIMR (2) in which $d_{i,i+1} = d_{i+1,i} = \lambda$ for all $i \in \{1,2,\ldots,n-1\}$. In this section, we describe how the HL-algorithm solves GIMR, which also covers the case of PFL.

We construct a parametric graph $G_{st}(\alpha) = (V_{st},A_{st})$, shown in Figure 1, that is associated with the bi-directional path graph $G = (V,A)$, for any scalar value $\alpha$. The capacities of arcs $(i,i+1),(i+1,i) \in A$ are $c_{i,i+1} = d_{i,i+1}$ and $c_{i+1,i} = d_{i+1,i}$ respectively. Arc $(s,i) \in A_s$ has capacity $c_{s,i} = \max\{0, -(f_i^{pl})'(\alpha)\}$ and arc $(i,t) \in A_t$ has capacity $c_{i,t} = \max\{0, (f_i^{pl})'(\alpha)\}$, where $(f_i^{pl})'(\alpha)$ is the right sub-gradient of function $f_i^{pl}(\cdot)$ at argument $\alpha$. (One can select instead the left sub-gradient.) For any given value of $\alpha$, we have either $c_{s,i} = 0$ or $c_{i,t} = 0$, that is, $i$ is never connected to both $s$ and $t$ for the same $\alpha$.
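The capacity rule for the source and sink adjacent arcs can be sketched in a few lines of Python. The representation below (a sorted list of breakpoints plus a list of slopes with one more entry than there are breakpoints) and the function names are assumptions chosen for this illustration.

```python
from bisect import bisect_right
from typing import Sequence, Tuple


def right_subgradient(breakpoints: Sequence[float],
                      slopes: Sequence[float],
                      alpha: float) -> float:
    """Right sub-gradient at alpha of a convex piecewise linear function.

    slopes[j] is the slope of the piece to the right of the j-th breakpoint
    (slopes[0] applies left of the first breakpoint), so that
    len(slopes) == len(breakpoints) + 1.
    """
    # bisect_right counts the breakpoints <= alpha, which selects the piece
    # lying immediately to the right of alpha.
    return slopes[bisect_right(breakpoints, alpha)]


def terminal_capacities(breakpoints: Sequence[float],
                        slopes: Sequence[float],
                        alpha: float) -> Tuple[float, float]:
    """Return (c_{s,i}, c_{i,t}) in G_st(alpha); at most one is nonzero."""
    g = right_subgradient(breakpoints, slopes, alpha)
    return max(0, -g), max(0, g)
```

For $f(x) = 3|x - 2|$, encoded as breakpoints `[2.0]` and slopes `[-3, 3]`, the node is attached to $s$ for $\alpha < 2$ and to $t$ for $\alpha \ge 2$.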

The link between the minimum cut for any given value of $\alpha$ and the optimal solution to GIMR (2) is characterized in the following threshold theorem:

Theorem 2.1 (Threshold theorem (Hochbaum, 2001)). For any given $\alpha$, let $S^*$ be the maximal source set of the minimum cut in graph $G_{st}(\alpha)$. Then, there is an optimal solution $x^*$ to GIMR (2) satisfying $x_i^* \ge \alpha$ if $i \in S^*$ and $x_i^* < \alpha$ if $i \in T^*$.

An important property of $G_{st}(\alpha)$ is that the capacities of source adjacent arcs and sink adjacent arcs are nonincreasing and nondecreasing functions of $\alpha$, respectively. The capacities of all the other arcs are constants. This implies the following nested cut property:

Lemma 2.2 (Nested cut property (Gallo et al., 1989; Hochbaum, 2001, 2008)). For any two parameter values $\alpha_1 \le \alpha_2$, let $S_{\alpha_1}$ and $S_{\alpha_2}$ be the respective maximal source sets of the minimum cuts of $G_{st}(\alpha_1)$ and $G_{st}(\alpha_2)$. Then $S_{\alpha_1} \supseteq S_{\alpha_2}$.

We remark that the above threshold theorem and nested cut property hold not only for GIMR (2) defined on a bi-path graph, but also for a generalization of GIMR that is defined on arbitrary (directed) graphs, where the regularization term may penalize the difference between arbitrary pairs of variables rather than just consecutive variables.

Based on the threshold theorem, it is sufficient to solve the minimum cuts in the parametric graph $G_{st}(\alpha)$ for all values of $\alpha$ in order to solve GIMR (2). In piecewise linear functions, the right sub-gradients for $\alpha$ values between any two adjacent breakpoints are constant. Thus, the source and sink adjacent arc capacities remain constant for $\alpha$ between any two adjacent breakpoint values in the sorted list of breakpoints over all the $n$ convex piecewise linear functions. This result leads to the following lemma given by Hochbaum and Lu (2017).

Lemma 2.3. The minimum cuts in $G_{st}(\alpha)$ remain unchanged for $\alpha$ assuming any value between any two adjacent breakpoints in the sorted list of breakpoints of all the $n$ convex piecewise linear functions, $\{f_i^{pl}(x_i)\}_{i=1,\ldots,n}$.

Thus, the values of $\alpha$ to be considered can be restricted to the set of breakpoints of the $n$ convex piecewise linear functions, $\{f_i^{pl}(x_i)\}_{i=1,\ldots,n}$. The HL-algorithm solves GIMR (2) by efficiently computing the minimum cuts of $G_{st}(\alpha)$ for successive values of $\alpha$ in the ascending list of breakpoints, $a_{i_1,j_1} < a_{i_2,j_2} < \ldots < a_{i_q,j_q}$.

Let $G_k$, for $k \ge 1$, denote the parametric graph $G_{st}(\alpha)$ for $\alpha$ equal to $a_{i_k,j_k}$, i.e., $G_k = G_{st}(a_{i_k,j_k})$. For $k = 0$, we let $G_0 = G_{st}(a_{i_1,j_1} - \epsilon)$ for a small value of $\epsilon > 0$. Let $(S_k,T_k)$ be the minimum cut in $G_k$, for $k \ge 0$. Recall that $S_k$ is the maximal source set. The nested cut property (Lemma 2.2) implies that $S_k \supseteq S_{k+1}$ for $k \ge 0$. Based on the threshold theorem and the nested cut property, we know that for each node $j \in \{1,\ldots,n\}$, $x_j^* = a_{i_k,j_k}$ for the index $k$ such that $j \in S_{k-1}$ and $j \in T_k$.
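To make the rule "$x_j^*$ is the breakpoint at which node $j$ first moves to the sink set" concrete, the following Python sketch applies it to the $\ell_1$ fidelity $w_i |x_i - a_i|$ for one fixed $\lambda$. It is not the HL-algorithm: each minimum cut is found by enumerating all $2^n$ source sets, which is only viable for tiny $n$, and the maximal source set is obtained as the union of all minimum source sets. All function names are hypothetical, breakpoints are assumed distinct, and integer inputs are assumed so that ties are detected exactly.

```python
from itertools import product


def max_source_min_cut(w, a, lam, alpha):
    """Maximal source set of a minimum s,t-cut of G_st(alpha), by enumeration."""
    n = len(w)
    # Right sub-gradient of w_i * |x - a_i| at alpha.
    g = [w[i] if alpha >= a[i] else -w[i] for i in range(n)]
    best_cap, union = None, set()
    for mask in range(1 << n):
        S = {i for i in range(n) if (mask >> i) & 1}
        # A node in S cuts its arc to t; a node in T cuts its arc from s.
        cap = sum(max(0, g[i]) if i in S else max(0, -g[i]) for i in range(n))
        # Each pair of consecutive nodes with different status costs lam.
        cap += lam * sum((i in S) != ((i + 1) in S) for i in range(n - 1))
        if best_cap is None or cap < best_cap:
            best_cap, union = cap, set(S)
        elif cap == best_cap:
            union |= S  # the union of minimum source sets is the maximal one
    return union


def solve_l1_pfl(w, a, lam):
    """x_j = a_{i_k} for the index k with j in S_{k-1} and j in T_k."""
    n = len(w)
    order = sorted(range(n), key=lambda i: a[i])  # i_1, ..., i_n
    x = [None] * n
    prev_S = set(range(n))                        # S_0 = V
    for ik in order:
        S = max_source_min_cut(w, a, lam, a[ik])  # S_k
        for j in prev_S - S:
            x[j] = a[ik]
        prev_S = S
    return x


def objective(x, w, a, lam):
    fit = sum(wi * abs(xi - ai) for wi, xi, ai in zip(w, x, a))
    return fit + lam * sum(abs(x[i] - x[i + 1]) for i in range(len(x) - 1))


def brute_force_optimum(w, a, lam):
    """Minimum objective over all assignments of breakpoint values."""
    return min(objective(c, w, a, lam) for c in product(a, repeat=len(a)))
```

On small instances the objective value of `solve_l1_pfl` can be compared against `brute_force_optimum`, which searches all assignments of breakpoint values to the variables.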

The HL-algorithm generates the respective minimum cuts of $G_k$ in increasing order of $k$. It is shown by Hochbaum and Lu (2017) that $(S_k,T_k)$ can be computed from $(S_{k-1},T_{k-1})$ in time $O(\log n)$. Hence the total complexity of the algorithm is $O(q \log n)$. The efficiency of updating $(S_k,T_k)$ from $(S_{k-1},T_{k-1})$ is based on the following key results.

The update of the graph from $G_{k-1}$ to $G_k$ is simple as it only involves a change in the capacities of the source and sink adjacent arcs of $i_k$, $(s,i_k)$ and $(i_k,t)$. Recall that from $G_{k-1}$ to $G_k$, the right sub-gradient $(f_{i_k}^{pl})'$ changes from $w_{i_k,j_k-1}$, the slope of the $(j_k-1)$-th linear piece of $f_{i_k}^{pl}$, to $w_{i_k,j_k}$, the slope of the $j_k$-th linear piece of $f_{i_k}^{pl}$. Thus, the changes of $c_{s,i_k}$ and $c_{i_k,t}$ from $G_{k-1}$ to $G_k$ depend on the signs of $w_{i_k,j_k-1}$ and $w_{i_k,j_k}$, which have three possible cases.

Case 1. $w_{i_k,j_k-1} \le 0$, $w_{i_k,j_k} \le 0$: $c_{s,i_k}$ changes from $-w_{i_k,j_k-1}$ to $-w_{i_k,j_k}$ while $c_{i_k,t}$ remains zero.

Case 2. $w_{i_k,j_k-1} \le 0$, $w_{i_k,j_k} \ge 0$: $c_{s,i_k}$ changes from $-w_{i_k,j_k-1}$ to $0$ and $c_{i_k,t}$ changes from $0$ to $w_{i_k,j_k}$.

Case 3. $w_{i_k,j_k-1} \ge 0$, $w_{i_k,j_k} \ge 0$: $c_{i_k,t}$ changes from $w_{i_k,j_k-1}$ to $w_{i_k,j_k}$ while $c_{s,i_k}$ remains zero.

Note that the update from $G_{k-1}$ to $G_k$ does not involve the values of $d_{i,i+1}$ and $d_{i+1,i}$ in GIMR (2), both of which take the value $\lambda$ in PFL (1).

Based on the nested cut property, for any node $i$, if $i \in T_{k-1}$, then $i$ remains in $T_k$ and in the sink set of all subsequent cuts. Hence, an update of the minimum cut in $G_k$ from the minimum cut in $G_{k-1}$ can only involve shifting some nodes from source set $S_{k-1}$ to sink set $T_k$. Formally, the relation between $(S_{k-1},T_{k-1})$ and $(S_k,T_k)$ is characterized in Lemmas 2.4 and 2.5, given by Hochbaum and Lu (2017):

Lemma 2.4. If $i_k \in T_{k-1}$, then $(S_k,T_k) = (S_{k-1},T_{k-1})$.

We define an $s$-interval in graph $G$ to be an interval of consecutive $s$-nodes, that is, a set of consecutive nodes that are in the source set $S$ of the minimum cut of $G$. An $s$-interval containing $i$ refers to an interval of $s$-nodes in $G$ that contains node $i$. If $i$ is a $t$-node, then such an $s$-interval is an empty interval. The maximal $s$-interval containing $i$ refers to the $s$-interval containing $i$ that is not a subset of any other such $s$-interval.

Lemma 2.5. If $i_k \in S_{k-1}$, then all the nodes that change their status from $s$ in $G_{k-1}$ to $t$ in $G_k$ must form a (possibly empty) $s$-interval of $i_k$ in $G_{k-1}$.

Both lemmas imply that the derivation of the minimum cut in $G_k$ from the minimum cut in $G_{k-1}$ can be done by finding an optimal $s$-interval of nodes containing $i_k$ that change their status from $s$ to $t$. If this interval of nodes, referred to as the node status change interval, is $[i_{kl},i_{kr}]$, then it follows that $(S_k,T_k) = (S_{k-1} \setminus [i_{kl},i_{kr}], T_{k-1} \cup [i_{kl},i_{kr}])$. Combining this with the threshold theorem (Theorem 2.1), all $x_i$ for $i \in [i_{kl},i_{kr}]$ have their optimal values in GIMR (2) equal to $a_{i_k,j_k}$.

To find the node status change interval from $G_{k-1}$ to $G_k$ that gives the minimum cut in $G_k$, we rely on the following lemma from Hochbaum and Lu (2017).

Lemma 2.6. Given the maximal $s$-interval containing $i_k$ in $G_{k-1}$, $[i_{kl},i_{kr}]$, the node status change interval $[i_{k1},i_{k2}]$ is the optimal solution to the following problem defined on $G_k$:

$$\begin{aligned} \min_{[i_{k1},i_{k2}]} \quad & C(\{s\},[i_{k1},i_{k2}]) + C([i_{kl},i_{kr}] \setminus [i_{k1},i_{k2}], \{t\}) \\ & + C([i_{kl},i_{kr}] \setminus [i_{k1},i_{k2}], [i_{k1},i_{k2}] \cup \{i_{kl}-1, i_{kr}+1\}) \\ \text{s.t.} \quad & i_k \in [i_{k1},i_{k2}] \subseteq [i_{kl},i_{kr}] \ \text{ or } \ [i_{k1},i_{k2}] = \emptyset \end{aligned} \tag{3}$$
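Problem (3) can be read off directly as code. The Python sketch below evaluates the three capacity terms for every feasible interval and keeps the cheapest one, specialised to PFL where every arc between consecutive nodes has capacity $\lambda$. It is an exhaustive illustration, not the efficient procedure of Hochbaum and Lu (2017). The function names, the dictionary layout for capacities, and the tie-breaking rule (prefer the empty set, then shorter intervals, so that the source set stays as large as possible) are assumptions made here.

```python
def cost_of_interval(interval, kl, kr, c_s, c_t, lam, n):
    """Objective of problem (3) for one candidate interval (None = empty).

    Nodes are 1..n; c_s[i] and c_t[i] are the capacities of (s,i) and (i,t)
    in G_k; every arc between consecutive nodes has capacity lam, as in PFL.
    """
    moved = set(range(interval[0], interval[1] + 1)) if interval else set()
    stay = [i for i in range(kl, kr + 1) if i not in moved]
    target = moved | {kl - 1, kr + 1}
    cost = sum(c_s[i] for i in moved) + sum(c_t[i] for i in stay)
    for i in stay:
        for j in (i - 1, i + 1):
            if 1 <= j <= n and j in target:  # arc (i, j) crosses the cut
                cost += lam
    return cost


def status_change_interval(ik, kl, kr, c_s, c_t, lam, n):
    """Exhaustively solve (3); returns (interval or None, cost)."""
    best, best_cost = None, cost_of_interval(None, kl, kr, c_s, c_t, lam, n)
    candidates = [(k1, k2)
                  for k1 in range(kl, ik + 1)
                  for k2 in range(ik, kr + 1)]
    candidates.sort(key=lambda iv: iv[1] - iv[0])  # shorter intervals first
    for iv in candidates:
        cost = cost_of_interval(iv, kl, kr, c_s, c_t, lam, n)
        if cost < best_cost:  # strict: ties keep the smaller sink set
            best, best_cost = iv, cost
    return best, best_cost
```

As a usage example, take five nodes where node 3 is the only node attached to $t$ (capacity 9) and the others are attached to $s$ with capacities 3, 1, 2 and 4. A small $\lambda$ moves only node 3, an intermediate $\lambda$ moves the interval $[1,3]$, and a large $\lambda$ moves nothing.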

Solving problem (3) in Lemma 2.6 can be easier than directly solving the minimum cut problem on $G_k$. Our path of solutions algorithm relies on all of the stated theorems and lemmas, but particularly on Lemmas 2.5 and 2.6.

3 PATH OF SOLUTIONS ALGORITHM FOR $\ell_1$ FIDELITY FUNCTIONS

The proposed path of solutions algorithm, or PoS, finds the optimal solution $x^*$ for all nonnegative $\lambda$. We first demonstrate in this section how PoS solves a specific PFL problem, the $\ell_1$-fidelity fused lasso:

$$(\ell_1\text{-PFL}) \quad \min_{x_1,\ldots,x_n} \; \sum_{i=1}^{n} w_i |x_i - a_i| + \lambda \sum_{i=1}^{n-1} |x_i - x_{i+1}| \tag{4}$$

for all nonnegative $\lambda$, given a set of breakpoints $\{a_i\}_{i=1}^{n}$ and a set of positive penalty weights $\{w_i\}_{i=1}^{n}$.

As we consider $\ell_1$-PFL, which is a special case of both GIMR and PFL, we replace some of the notation introduced in Section 2 with simpler terms.

For $\ell_1$-PFL, $f_i^{pl}(x_i) = w_i |x_i - a_i|$ consists of two linear pieces and a single breakpoint $a_i$, with $w_{i,0} = -w_i$, $w_{i,1} = w_i$ and $a_{i,1} = a_i$. Moreover, both $d_{i,i+1}$ and $d_{i+1,i}$ are equal to $\lambda$. Similar to the HL-algorithm, we let the indices $i_1, i_2, \ldots, i_n$ be the sorted indices of the $n$ breakpoints such that $a_{i_1} < a_{i_2} < \ldots < a_{i_n}$. In the graph $G_k$, or $G_{st}(a_{i_k})$, the weights of the source adjacent arc $(s,i) \in A_s$ and the sink adjacent arc $(i,t) \in A_t$ follow from the right sub-gradient of $w_i |x_i - a_i|$ at $a_{i_k}$, which is $-w_i$ if $a_{i_k} < a_i$ and $w_i$ otherwise:

$$c_{s,i} = \begin{cases} w_i & \text{if } a_{i_k} < a_i \\ 0 & \text{otherwise} \end{cases}, \qquad c_{i,t} = \begin{cases} 0 & \text{if } a_{i_k} < a_i \\ w_i & \text{otherwise} \end{cases}$$

The arcs $(i,i+1)$ and $(i+1,i)$ have weights equal to $\lambda$, for $i \in \{1,2,\ldots,n-1\}$. The HL-algorithm computes the optimal solution of problem (4) by solving for the minimum cuts of graphs $G_0, G_1, \ldots, G_n$.

At iteration $k$ of the HL-algorithm, we update the graph from $G_{k-1}$ to $G_k$ and compute the minimum cut solution $(S_k,T_k)$, which allows us to discover the optimal values of variables whose nodes are in $T_k$ but not in $T_{k-1}$, as explained in the previous section. In PoS, where we consider all $\lambda \in [0,\infty)$, the minimum cut solutions of $G_k$ for different values of $\lambda$ may be different. To reflect the dependence on $\lambda$, we use the notation $G_k(\lambda)$ and $(S_k(\lambda),T_k(\lambda))$ for the graph and its minimum cut solution at step $k$ of the algorithm. We may revert to the notation $G_k$ and $(S_k,T_k)$ when we discuss the graph for any $\lambda$ in general.

The overall idea of PoS is as follows: at the first iteration of the algorithm, we start with the graph $G_0$ and the range of all possible values of $\lambda$, $[0,\infty)$, denoted by $\Lambda_0$, on which all values of $\lambda$ result in the same minimum cut $(S_0(\lambda),T_0(\lambda))$ where $S_0(\lambda) = \{1,\ldots,n\}$ and $T_0(\lambda) = \emptyset$. We then update the graph to $G_1$ and partition $\Lambda_0$ into different ranges of $\lambda$ such that all $\lambda$ in the same range have the same minimum cut solution $(S_1(\lambda),T_1(\lambda))$. The resulting set of ranges of $\lambda$ for $G_1$ is denoted $\Lambda_1$. For conciseness, we call each range of values of $\lambda$ a $\lambda$-range.

At iteration $k$, we start with a set of ranges of $\lambda$-values, denoted by $\Lambda_{k-1}$, spanning $[0,\infty)$, such that each $\lambda$-range $\Lambda \in \Lambda_{k-1}$ corresponds to a particular minimum cut solution $(S_{k-1}(\Lambda),T_{k-1}(\Lambda))$ of $G_{k-1}(\Lambda)$. We update $G_{k-1}$ to $G_k$, and partition each $\lambda$-range in $\Lambda_{k-1}$ into smaller $\lambda$-ranges according to the solutions of $(S_k,T_k)$. These $\lambda$-ranges, which are offspring of ranges in $\Lambda_{k-1}$, together form the set $\Lambda_k$. The set $\Lambda_k$ is then passed on to the next iteration.

We explain the first iteration of PoS in Subsection 3.1. Subsection 3.2 describes iteration $k$. In Subsection 3.2.1, we show the computation of the minimum cuts of $G_k$ given the minimum cut solutions of $G_{k-1}$. Subsection 3.2.2 completes iteration $k$ by using the minimum cut solutions to partition the $\lambda$-ranges from the previous iteration. We conclude with the outputs upon the completion of PoS in Subsection 3.3.

3.1 First Iteration of the Path of Solutions Algorithm

At the beginning of the first iteration, it is clear that the minimum cut $(S_0(\lambda),T_0(\lambda))$ of $G_0(\lambda)$, shown in Figure 2, is always $(\{1,2,\ldots,n\},\emptyset)$ for any $\lambda \in [0,\infty)$ since all nodes are connected to $s$ and none is connected to $t$. We let $\Lambda_0$ denote the set of $\lambda$-ranges such that for $\lambda_1$ and $\lambda_2$ that are in the same $\lambda$-range, we have $(S_0(\lambda_1),T_0(\lambda_1)) = (S_0(\lambda_2),T_0(\lambda_2))$. Since there is only one solution of $(S_0(\lambda),T_0(\lambda))$ across all $\lambda \in [0,\infty)$, we have $\Lambda_0 = \{[0,\infty)\}$.

The first step of the algorithm is to determine the minimum cuts $(S_1(\lambda),T_1(\lambda))$ of $G_1(\lambda)$, shown in Figure 3. We consider the cut capacity in terms of $\lambda$ for each possible case of $T_1(\lambda)$ according to Lemma 2.5, which states that $T_1(\lambda) \setminus T_0(\lambda)$ must form an $s$-interval containing $i_1$ in $G_0(\lambda)$. Since all nodes in $G_0(\lambda)$ are $s$-nodes for all $\lambda$, the possible $T_1(\lambda)$ are the empty set and node intervals of the form $[i_{1l},i_{1r}]$ where $i_1 \in [i_{1l},i_{1r}] \subseteq [1,n]$. We group them into cases such that the coefficient of $\lambda$ in the cut capacity of each case is different from other cases.

Case 1. $T_1(\lambda) = \emptyset$. None of the nodes changes its status from $s$ to $t$. $C(S_1(\lambda),T_1(\lambda)) = w_{i_1}$.

Case 2. $T_1(\lambda) = [1,n]$. $C(S_1(\lambda),T_1(\lambda)) = \left(\sum_{i=1}^{n} w_i\right) - w_{i_1}$.

Case 3. $T_1(\lambda)$ consists of some, but not all, nodes. There are three subcases.

Case 3.1. $T_1(\lambda) = [1,i_{1r}]$ where $i_1 \le i_{1r} < n$. $C(S_1(\lambda),T_1(\lambda)) = \lambda + \min_{i_1 \le i_{1r} < n} \left(\sum_{i=1}^{i_{1r}} w_i\right) - w_{i_1}$. The optimal $T_1(\lambda)$ under Case 3.1 is $[1,i_1]$, when $i_{1r} = i_1$, with $C(S_1(\lambda),T_1(\lambda)) = \lambda + \sum_{i=1}^{i_1-1} w_i$.

Case 3.2. $T_1(\lambda) = [i_{1l},n]$ where $1 < i_{1l} \le i_1$. $C(S_1(\lambda),T_1(\lambda)) = \lambda + \min_{1 < i_{1l} \le i_1} \left(\sum_{i=i_{1l}}^{n} w_i\right) - w_{i_1}$. The optimal $T_1(\lambda)$ under Case 3.2 is $[i_1,n]$, when $i_{1l} = i_1$, with $C(S_1(\lambda),T_1(\lambda)) = \lambda + \sum_{i=i_1+1}^{n} w_i$.

Case 3.3. $T_1(\lambda) = [i_{1l},i_{1r}]$ where $1 < i_{1l} \le i_1$ and $i_1 \le i_{1r} < n$. $C(S_1(\lambda),T_1(\lambda)) = 2\lambda + \min_{1 < i_{1l} \le i_1,\; i_1 \le i_{1r} < n} \left(\sum_{i=i_{1l}}^{i_{1r}} w_i\right) - w_{i_1}$. The optimal $T_1(\lambda)$ under Case 3.3 is $\{i_1\}$, or $[i_1,i_1]$, when $i_{1l} = i_1$ and $i_{1r} = i_1$, with $C(S_1(\lambda),T_1(\lambda)) = 2\lambda$.

The computations of the optimal cuts in Cases 3.1, 3.2 and 3.3 rely on the fact that the weights $\{w_i\}_{i=1}^{n}$ are positive.

Notice that the comparison between solutions with the same coefficient of $\lambda$ in the cut capacity is independent of $\lambda$. For instance, Case 3.1, with the cut capacity $\lambda + \sum_{i=1}^{i_1-1} w_i$, and Case 3.2, with the cut capacity $\lambda + \sum_{i=i_1+1}^{n} w_i$, have the same coefficient of $\lambda$, which is 1, in their cut capacities. Determining which one is better is independent of $\lambda$. We only need to compare the constant terms of the two cases.

Let $T^{\rho}$ denote the cut with the smallest constant term among the cuts whose coefficients of $\lambda$ are equal to $\rho$, and $c^{\rho}$ denote the corresponding minimum constant term. The cut capacity due to the sink set $T^{\rho}$ is then equal to $\rho\lambda + c^{\rho}$. If multiple cut solutions have the smallest constant, we select the cut solution that results in the largest possible source set, due to the maximal source set requirement.

For $\rho = 0$, we compare Case 1 and Case 2: $c^0 = \min\left(w_{i_1}, \left(\sum_{i=1}^{n} w_i\right) - w_{i_1}\right)$. If $w_{i_1} \le \left(\sum_{i=1}^{n} w_i\right) - w_{i_1}$ then $T^0 = \emptyset$ (Case 1), otherwise $T^0 = [1,n]$ (Case 2). Notice that when $w_{i_1} = \left(\sum_{i=1}^{n} w_i\right) - w_{i_1}$, we select $T^0 = \emptyset$ (Case 1) rather than $[1,n]$ (Case 2) since $T^0 = \emptyset$ always results in a larger source set.

For $\rho = 1$, we compare Case 3.1 and Case 3.2: $c^1 = \min\left(\sum_{i=1}^{i_1-1} w_i, \sum_{i=i_1+1}^{n} w_i\right)$. If $\sum_{i=1}^{i_1-1} w_i < \sum_{i=i_1+1}^{n} w_i$ then $T^1 = [1,i_1]$ (Case 3.1). If $\sum_{i=1}^{i_1-1} w_i > \sum_{i=i_1+1}^{n} w_i$ then $T^1 = [i_1,n]$ (Case 3.2). However, if $\sum_{i=1}^{i_1-1} w_i = \sum_{i=i_1+1}^{n} w_i$, we select the one, between Case 3.1 and 3.2, that results in a larger source set.


Figure 2: $G_0(\lambda)$ for $\ell_1$-PFL (1).

Figure 3: $G_1(\lambda)$ for $\ell_1$-PFL (1).

For $\rho = 2$, there is only one case, Case 3.3, whose coefficient of $\lambda$ in the cut capacity is 2. The cut capacity of Case 3.3, $T_1(\lambda) = \{i_1\}$, is $2\lambda$. Hence, $c^2 = 0$ and $T^2 = \{i_1\}$.

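With the notation $T^{\rho}$ and $c^{\rho}$, we compare the capacities of the cases of $T_1(\lambda)$ listed above and give the optimal solution of $T_1(\lambda)$ for different $\lambda$ intervals as follows:

If $T^0 = \emptyset$ then

$$T_1(\lambda) = \begin{cases} T^0, & \text{if } \lambda \ge \frac{c^0}{2} \text{ and } \lambda \ge c^0 - c^1 \\ T^1, & \text{if } \lambda < c^0 - c^1 \text{ and } \lambda > c^1 \\ T^2, & \text{if } \lambda \le c^1 \text{ and } \lambda < \frac{c^0}{2} \end{cases} \tag{5}$$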

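However, if $T^0 = [1,n]$ then

$$T_1(\lambda) = \begin{cases} T^0, & \text{if } \lambda > \frac{c^0}{2} \text{ and } \lambda > c^0 - c^1 \\ T^1, & \text{if } \lambda \le c^0 - c^1 \text{ and } \lambda > c^1 \\ T^2, & \text{if } \lambda \le c^1 \text{ and } \lambda \le \frac{c^0}{2} \end{cases} \tag{6}$$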

The derivation of both (5) and (6) comes from the comparison of three cut capacities: $c^0$, $\lambda + c^1$ and $2\lambda$, of which we select the smallest one for different ranges of $\lambda$. The difference between (5) and (6) is a result of the fact that we always select the solution with the maximal source set. Hence, for $\lambda$ such that $T^0$ and $T^1$ are equally optimal, we select the one with the larger source set. When $T^0 = \emptyset$, $T^0$ is always preferred. When $T^0 = [1,n]$, $T^1$ is always preferred. The comparison in such situations between $T^0$ and $T^2$, and between $T^1$ and $T^2$, can be done similarly.

Note that when $2c^1 > c^0$, the second case in (5) does not exist since $c^1 > c^0 - c^1$. Hence, when $T^0 = \emptyset$ and $2c^1 > c^0$, the solution of $T_1(\lambda)$ is

$$T_1(\lambda) = \begin{cases} T^0, & \text{if } \lambda \ge \frac{c^0}{2} \\ T^2, & \text{otherwise} \end{cases} \tag{5.1}$$

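Otherwise, when $T^0 = \emptyset$ and $2c^1 \le c^0$,

$$T_1(\lambda) = \begin{cases} T^0, & \text{if } \lambda \ge c^0 - c^1 \\ T^1, & \text{if } c^1 < \lambda < c^0 - c^1 \\ T^2, & \text{if } \lambda \le c^1 \end{cases} \tag{5.2}$$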

For the case where $T^0 = [1,n]$, we can write the solutions of $T_1(\lambda)$ for $2c^1 > c^0$ and $2c^1 \le c^0$ in a similar way to (5.1) and (5.2), which we omit here due to the space limit.

Suppose the given weights $\{w_i\}_{i=1}^{n}$ and breakpoints $\{a_i\}_{i=1}^{n}$ result in the case where $T^0 = \emptyset$ and $2c^1 \le c^0$, in which the solution of $T_1(\lambda)$ is (5.2). We divide the $\lambda$-range $[0,\infty)$ into three $\lambda$-ranges: $[0,c^1]$, $(c^1,c^0-c^1)$ and $[c^0-c^1,\infty)$. We denote this set of $\lambda$-ranges by $\Lambda_1$, i.e. $\Lambda_1 = \{[0,c^1],(c^1,c^0-c^1),[c^0-c^1,\infty)\}$. Each $\lambda$-range in $\Lambda_1$ has a different solution of $T_1(\lambda)$, according to (5.2).
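The first iteration can be summarised as a small Python sketch that computes the constants for $\rho = 0, 1, 2$ and returns the resulting $\lambda$-ranges with their sink sets. It assumes an interior node, $1 < i_1 < n$, so that Cases 3.1 to 3.3 all exist; boundary positions of $i_1$ are not handled. The function name and the output encoding are hypothetical, and the returned triples record only the endpoints of each range (which endpoint is closed is decided by (5) and (6)).

```python
import math


def first_iteration_ranges(w, i1):
    """Lambda-ranges after the first PoS iteration, following (5) and (6).

    w[i - 1] is the positive weight w_i; i1 is the 1-based node whose
    breakpoint is smallest. Returns (low, high, sink_set) triples, where
    sink_set is None for the empty set or a 1-based interval (first, last).
    """
    n = len(w)
    if not 1 < i1 < n:
        raise ValueError("this sketch assumes 1 < i1 < n")
    w_i1 = w[i1 - 1]
    # rho = 0: Case 1 (empty sink set) against Case 2 (all nodes).
    rest = sum(w) - w_i1
    c0, t0 = (w_i1, None) if w_i1 <= rest else (rest, (1, n))
    # rho = 1: Case 3.1 ([1, i1]) against Case 3.2 ([i1, n]); a tie goes to
    # the shorter sink interval, i.e. the larger source set.
    left, right = sum(w[:i1 - 1]), sum(w[i1:])
    if (left, i1) <= (right, n - i1 + 1):
        c1, t1 = left, (1, i1)
    else:
        c1, t1 = right, (i1, n)
    # rho = 2: Case 3.3, sink set {i1}, constant term 0.
    t2 = (i1, i1)
    if 2 * c1 > c0:
        ranges = [(0, c0 / 2, t2), (c0 / 2, math.inf, t0)]
    else:
        ranges = [(0, c1, t2), (c1, c0 - c1, t1), (c0 - c1, math.inf, t0)]
    return [r for r in ranges if r[0] < r[1]]  # drop an empty middle range
```

For weights (3, 1, 9, 2, 4) with $i_1 = 3$, this gives $c^0 = 9$ and $c^1 = 4$, so the three ranges have endpoints 0, 4, 5 and $\infty$, matching the pattern of (5.2).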

When appropriate, we may use the notation $T_1(\Lambda)$ in place of $T_1(\lambda)$ for $\lambda \in \Lambda \in \Lambda_1$ to emphasize the fact that $T_1(\lambda)$ for all $\lambda \in \Lambda$ are identical.

The first iteration of PoS ends here as we obtain the set $\Lambda_1$ and the minimum cut solutions of $G_1$ for the $\lambda$-ranges in $\Lambda_1$. In the next iteration of PoS, we solve for the minimum cuts of the graph $G_2$ for each $\lambda$-range in $\Lambda_1$, which is then partitioned further according to its minimum cut solutions. The subsequent iterations of PoS are elaborated in the next subsection.

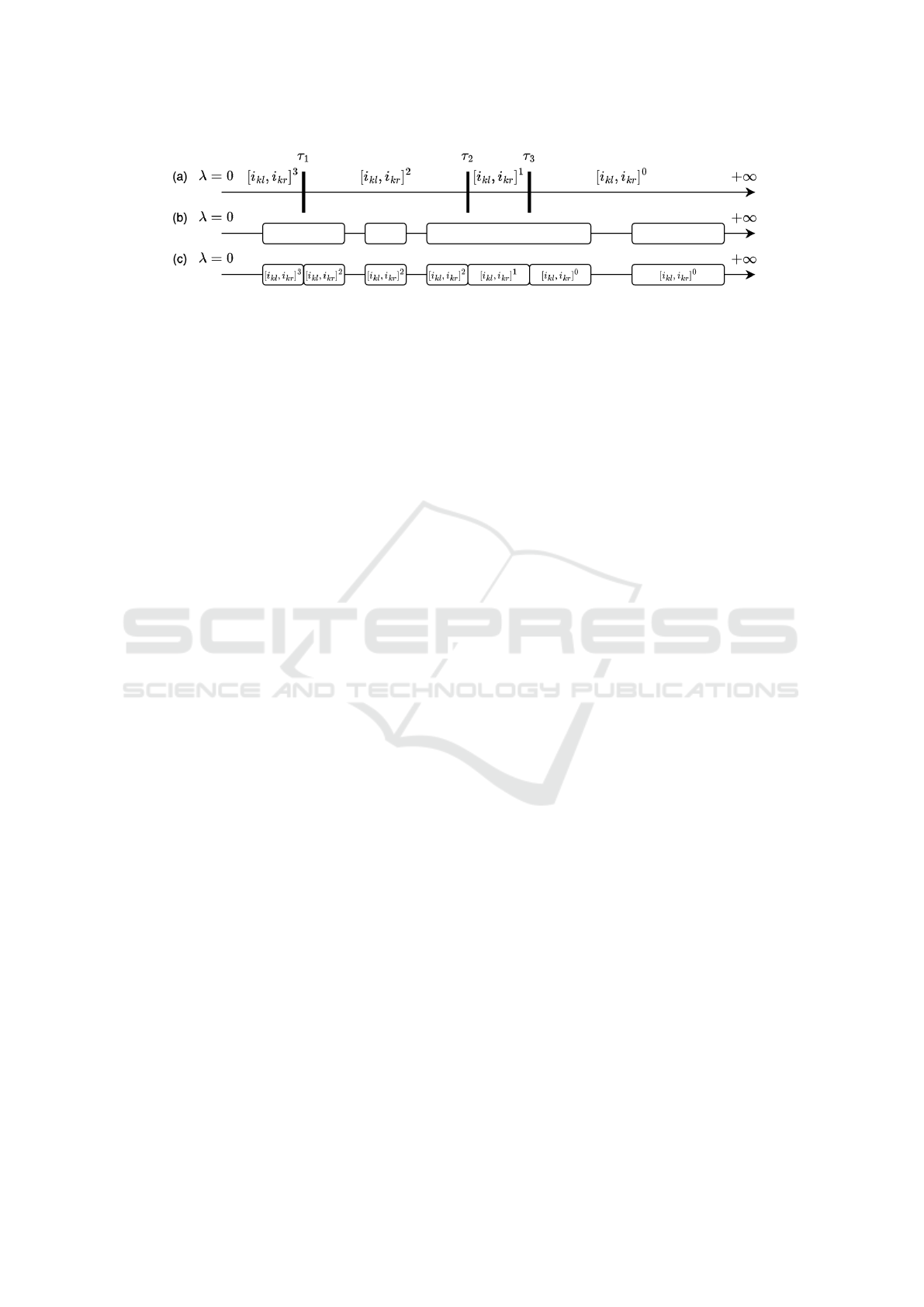

Figure 4 illustrates $\Lambda_0$, $\Lambda_1$ and $\Lambda_2$, which are the sets of $\lambda$-ranges that lead to different minimum cut solutions. Each $\lambda$-range in $\Lambda_2$ corresponds to a particular sequence of minimum cut solutions $\{T_0(\lambda),T_1(\lambda),T_2(\lambda)\}$.

The process continues until we complete iteration $n$ of the algorithm and obtain the set of $\lambda$-ranges $\Lambda_n$. Each $\lambda$-range in $\Lambda_n$ corresponds to a particular sequence of minimum cut solutions $\{T_0(\lambda),T_1(\lambda),\ldots,T_n(\lambda)\}$, which corresponds to a particular solution $x^*(\lambda)$.


Figure 4: $\lambda$-ranges according to the minimum cut solutions of $G_0(\lambda)$, $G_1(\lambda)$ and $G_2(\lambda)$.

3.2 Iteration k of the Path of Solutions Algorithm

Iteration $k$ of the algorithm involves similar steps and has the same goal as the first iteration, that is, to partition each $\lambda$-range from the previous iteration according to its minimum cut solutions.

3.2.1 Finding the Minimum Cuts of $G_k(\lambda)$

By the end of iteration $k-1$, we have found the set of $\lambda$-ranges, $\Lambda_{k-1}$, and $(S_{k-1}(\Lambda),T_{k-1}(\Lambda))$ for each $\Lambda \in \Lambda_{k-1}$. Our goal in iteration $k$ is to find the minimum cut solution $(S_k(\lambda),T_k(\lambda))$ of the graph $G_k(\lambda)$ for $\lambda$ in each $\Lambda \in \Lambda_{k-1}$. This minimum cut solution might contain several cases depending on the value of $\lambda$, like the result in (5). These different cases then divide the $\lambda$-range $\Lambda$ into smaller $\lambda$-ranges.

If $i_k \in T_{k-1}(\lambda)$, then according to Lemma 2.4, the minimum cut solution $(S_k(\lambda),T_k(\lambda))$ is equal to $(S_{k-1}(\lambda),T_{k-1}(\lambda))$. A more interesting and less trivial case is when $i_k \in S_{k-1}(\lambda)$.

We discussed toward the end of Section 2 that, based on Lemma 2.5, finding the minimum cut $(S_k(\lambda), T_k(\lambda))$ of $G_k(\lambda)$ is equivalent to finding the node status change interval in $S_{k-1}(\lambda)$ that moves to $T_k(\lambda)$. According to Lemma 2.6, given the maximal s-interval containing $i_k$ in $G_{k-1}(\lambda)$, $[i_{kl}, i_{kr}]$, we can find the node status change interval containing $i_k$, $[i_{k1}, i_{k2}]$, by solving problem (3) defined on $G_k(\lambda)$, restated here:

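$$
\begin{aligned}
\min_{[i_{k1}, i_{k2}]} \quad & C(\{s\}, [i_{k1}, i_{k2}]) + C([i_{kl}, i_{kr}] \setminus [i_{k1}, i_{k2}], \{t\}) \\
& + C([i_{kl}, i_{kr}] \setminus [i_{k1}, i_{k2}],\ [i_{k1}, i_{k2}] \cup \{i_{kl} - 1, i_{kr} + 1\}) \\
\text{s.t.} \quad & i_k \in [i_{k1}, i_{k2}] \subseteq [i_{kl}, i_{kr}] \ \text{ or } \ [i_{k1}, i_{k2}] = \emptyset
\end{aligned}
\tag{3}
$$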

For each $\Lambda \in \Lambda_{k-1}$, we know its maximal s-interval containing $i_k$, $[i_{kl}, i_{kr}]$, from $(S_{k-1}(\Lambda), T_{k-1}(\Lambda))$. To solve for the node status change interval $[i_{k1}, i_{k2}]$, we write out the cost (3) for the different feasible solutions $[i_{k1}, i_{k2}] \subseteq [i_{kl}, i_{kr}]$, for general $\lambda \in [0, \infty)$. Similar to the computation of $T_1(\lambda)$ in Subsection 3.1, the cost functions for different possible cases of $[i_{k1}, i_{k2}]$ may have different coefficients of λ. These coefficients of λ in (3) are contingent upon the positions of $i_k$ and $[i_{k1}, i_{k2}]$, as well as $[i_{kl}, i_{kr}]$.

Figure 5: Example when $1 < i_{kl} < i_{k1} \le i_k \le i_{k2} < i_{kr} < n$. Here, we only show arcs of weight λ, which connect consecutive nodes.

Consider, for example, the most typical case of the maximal s-interval containing $i_k$, $[i_{kl}, i_{kr}]$, such that $i_{kl} \in (1, i_k)$ and $i_{kr} \in (i_k, n)$. The different cases of $[i_{k1}, i_{k2}]$ that are feasible solutions to problem (3) are:

Case 1. $i_{k1} \in (i_{kl}, i_k]$ and $i_{k2} \in [i_k, i_{kr})$, illustrated in Figure 5. The cost function (3) for this case of $[i_{k1}, i_{k2}]$ can be written as

$$
4\lambda + \sum_{i=i_{k1}}^{i_{k2}} w_i \cdot (a_i > a_{i_k}) + \sum_{i=i_{kl}}^{i_{k1}-1} w_i \cdot (a_i \le a_{i_k}) + \sum_{i=i_{k2}+1}^{i_{kr}} w_i \cdot (a_i \le a_{i_k}).
$$

The first term is the cost due to arcs of weight λ. There are 4 such arcs; they connect nodes in $[i_{kl}, i_{kr}] \setminus [i_{k1}, i_{k2}]$, which remain in the source set $S_k(\lambda)$, to nodes in the sink set $T_k(\lambda)$. The coefficient of λ is therefore equal to 4. These 4 arcs are displayed in bold in Figure 5.

The second term is the sum of the weights $w$ for nodes in $[i_{k1}, i_{k2}]$ that are still connected to the node $s$. The sum of the third and fourth terms is the sum of the weights $w$ for nodes in $[i_{kl}, i_{k1} - 1] \cup [i_{k2} + 1, i_{kr}]$ that are connected to the node $t$.

The optimal node status change interval for Case 1 is

$$
\operatorname*{argmin}_{[i_{k1}, i_{k2}]:\ i_{k1} \in (i_{kl}, i_k],\ i_{k2} \in [i_k, i_{kr})} \ \sum_{i=i_{k1}}^{i_{k2}} w_i \cdot (a_i > a_{i_k}) + \sum_{i=i_{kl}}^{i_{k1}-1} w_i \cdot (a_i \le a_{i_k}) + \sum_{i=i_{k2}+1}^{i_{kr}} w_i \cdot (a_i \le a_{i_k}).
$$

Case 2. There are two subcases.

Case 2.1. $i_{k1} = i_{kl}$, $i_{k2} \in [i_k, i_{kr})$. The cost is $2\lambda + \sum_{i=i_{kl}}^{i_{k2}} w_i \cdot (a_i > a_{i_k}) + \sum_{i=i_{k2}+1}^{i_{kr}} w_i \cdot (a_i \le a_{i_k})$.

Case 2.2. $i_{k1} \in (i_{kl}, i_k]$, $i_{k2} = i_{kr}$. The cost is equal to $2\lambda + \sum_{i=i_{k1}}^{i_{kr}} w_i \cdot (a_i > a_{i_k}) + \sum_{i=i_{kl}}^{i_{k1}-1} w_i \cdot (a_i \le a_{i_k})$.

Case 3. $[i_{k1}, i_{k2}]$ is an empty interval, that is, $[i_{kl}, i_{kr}] \subseteq S_k(\lambda)$. The cost function can be written as $2\lambda + \sum_{i=i_{kl}}^{i_{kr}} w_i \cdot (a_i \le a_{i_k})$.

Case 4. $i_{k1} = i_{kl}$, $i_{k2} = i_{kr}$. The cost is $\sum_{i=i_{kl}}^{i_{kr}} w_i \cdot (a_i > a_{i_k})$.
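The case analysis above can be checked numerically with a small brute-force sketch. It is only an illustration under stated assumptions: the function names `interval_cost` and `best_per_rho` are hypothetical, nodes are 1-indexed with a dummy entry at index 0, the nodes $i_{kl} - 1$ and $i_{kr} + 1$ (when they exist) are taken to be in the sink set, and the enumeration is quadratic rather than the $O(\log n)$ method of Hochbaum and Lu (2017).

```python
def interval_cost(a, w, ik, kl, kr, k1, k2, n):
    """Return (rho, const) such that cost (3) equals rho * lam + const.

    Nodes are 1-indexed; pass k1 > k2 to encode the empty interval.
    """
    moved = set(range(k1, k2 + 1))  # nodes that move to the sink set

    def in_sink(i):
        # Nodes outside [kl, kr] that exist are assumed to be in the sink set.
        return i in moved or i < kl or i > kr

    lo, hi = max(kl - 1, 1), min(kr + 1, n)
    # Each adjacent pair split by the cut contributes one arc of weight lam.
    rho = sum(in_sink(i) != in_sink(i + 1) for i in range(lo, hi))
    const = 0.0
    for i in range(kl, kr + 1):
        if i in moved:
            const += w[i] * (a[i] > a[ik])   # source-adjacent arc is cut
        else:
            const += w[i] * (a[i] <= a[ik])  # sink-adjacent arc is cut
    return rho, const


def best_per_rho(a, w, ik, kl, kr, n):
    """For each coefficient rho, keep the cheapest feasible interval."""
    candidates = [(1, 0)]  # the empty interval
    candidates += [(k1, k2) for k1 in range(kl, ik + 1)
                   for k2 in range(ik, kr + 1)]
    best = {}
    for k1, k2 in candidates:
        rho, const = interval_cost(a, w, ik, kl, kr, k1, k2, n)
        if rho not in best or const < best[rho][0]:
            best[rho] = (const, (k1, k2))
    return best
```

For a maximal s-interval strictly inside $(1, n)$, the keys of the returned dictionary are 0, 2 and 4, matching Cases 4, 2 and 3, and 1 respectively.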


Let $[i_{k1}, i_{k2}]_\rho$ denote the node status change interval with the smallest cost among all s-intervals in $[i_{kl}, i_{kr}]$ whose coefficient of λ in the cost is $\rho$, for $\rho = 0, 2, 4$. This notation is similar to that of Subsection 3.1. For $\rho = 0$, there is only one case, Case 4. For $\rho = 2$, we compare Cases 2 and 3. For $\rho = 4$, there is only one case, Case 1. We do not provide explicit forms of $[i_{k1}, i_{k2}]_\rho$ here due to the space limit.

With the same method as presented in Subsection 3.1, we can write out the optimal solution for $[i_{kl}, i_{kr}]$ in the following form:

$$
[i_{k1}, i_{k2}](\lambda) =
\begin{cases}
[i_{k1}, i_{k2}]_0, & \text{if } \lambda > \tau_2 \\
[i_{k1}, i_{k2}]_2, & \text{if } \tau_1 \le \lambda \le \tau_2 \\
[i_{k1}, i_{k2}]_4, & \text{if } \lambda \le \tau_1
\end{cases}
\tag{7}
$$

(see the next paragraph for the solution when $\lambda = \tau_1$), where $\tau_1$ and $\tau_2$ are the threshold values that split $\lambda \in [0, \infty)$ into ranges. $\tau_1$ and $\tau_2$ can be obtained by comparing the objective functions (3) for $[i_{k1}, i_{k2}]_0$, $[i_{k1}, i_{k2}]_2$ and $[i_{k1}, i_{k2}]_4$.

Note that when $\lambda = \tau_1$, we select between $[i_{k1}, i_{k2}]_2$ and $[i_{k1}, i_{k2}]_4$ the solution that results in a larger source set; which of the two this is depends on the problem instance. When $\lambda = \tau_2$, we always prefer $[i_{k1}, i_{k2}]_2$ over $[i_{k1}, i_{k2}]_0 = [i_{kl}, i_{kr}]$.
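Since each candidate contributes a cost that is linear in λ (coefficient $\rho$ plus a constant), the thresholds in (7) are the breakpoints of the lower envelope of at most a handful of lines. The following sketch illustrates that comparison; the function name `lower_envelope` and the representation of each candidate as a `(slope, intercept)` pair are assumptions made for this example, and tie-breaking exactly at a threshold (the larger-source-set rule described above) is not modeled.

```python
def lower_envelope(lines):
    """Lower envelope over lam >= 0 of lines cost = slope * lam + intercept.

    Returns a list of (slope, intercept, start), ordered by increasing
    start, where each line is minimal from `start` until the next entry.
    """
    # Keep only the cheapest line for each slope.
    best = {}
    for slope, intercept in lines:
        if slope not in best or intercept < best[slope]:
            best[slope] = intercept

    hull = []
    # Larger slopes can only win for small lam, so scan slopes downward.
    for slope, intercept in sorted(best.items(), reverse=True):
        start = 0.0
        while hull:
            s0, c0, st0 = hull[-1]
            # The new (flatter) line is cheaper than the previous one beyond x.
            x = (intercept - c0) / (s0 - slope)
            if x <= st0:
                hull.pop()  # previous line is never strictly minimal
            else:
                start = x
                break
        hull.append((slope, intercept, start))
    return hull
```

With constants 10, 4 and 1 for $\rho = 0, 2, 4$, the envelope switches from $\rho = 4$ to $\rho = 2$ at $\tau_1 = 1.5$ and from $\rho = 2$ to $\rho = 0$ at $\tau_2 = 3$. When the $\rho = 2$ line never attains the minimum, only one threshold remains.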

We see for this example of $[i_{kl}, i_{kr}]$, where $i_{kl} \in (1, i_k)$ and $i_{kr} \in (i_k, n)$, that there can be up to 3 different solutions, as shown in (7), due to the 3 different coefficients of λ in the cost function across all possible cases of $[i_{k1}, i_{k2}]$. These 3 different solutions correspond to 3 λ-ranges, $[0, \tau_1]$, $[\tau_1, \tau_2]$ and $(\tau_2, \infty)$.

For other types of the maximal s-interval containing $i_k$, $[i_{kl}, i_{kr}]$, we list all possible coefficients of λ and the number of solutions of the node status change interval, $[i_{k1}, i_{k2}]$, in Table 1.

We have shown here the procedure to find the node status change interval $[i_{k1}, i_{k2}]$ from $G_{k-1}(\lambda)$ to $G_k(\lambda)$, for any $\lambda \ge 0$, given a maximal s-interval containing $i_k$, $[i_{kl}, i_{kr}]$, which can be obtained from the minimum cut $(S_{k-1}(\lambda), T_{k-1}(\lambda))$. Next, we show how we apply this procedure to partition each λ-range in $\Lambda_{k-1}$ and get the set $\Lambda_k$ to complete iteration $k$ of PoS.

3.2.2 Partitioning of λ-Ranges

The implication of the procedure in Subsection 3.2.1 is that λ-ranges in $\Lambda_{k-1}$ that have the same maximal s-interval containing $i_k$ in $G_{k-1}$ also have the same node status change interval solution. Therefore, the final step of iteration $k$ of PoS is to group the λ-ranges in $\Lambda_{k-1}$ based on their maximal s-intervals containing $i_k$. For each group, with a particular maximal s-interval containing $i_k$, we compute the node status change interval, as in Subsection 3.2.1, and apply the corresponding solution to the λ-ranges in that group.

Table 1: Different types of the maximal s-interval $[i_{kl}, i_{kr}]$ containing $i_k$ in the minimum cut of $G_{k-1}$, considered in the $k$-th iteration of PoS. For each type of $[i_{kl}, i_{kr}]$, we list all possible coefficients of λ in the objective function (3).

| Types of $[i_{kl}, i_{kr}]$: $i_k \ne 1, n$ and ... | Coef. of λ | # cases |
| --- | --- | --- |
| $i_{kl} \in (1, i_k)$, $i_{kr} \in (i_k, n)$ | 0, 2, 4 | 3 |
| $i_{kl} \in (1, i_k)$, $i_{kr} = n$ | 0, 1, 2, 3 | 4 |
| $i_{kl} = 1$, $i_{kr} \in (i_k, n)$ | 0, 1, 2, 3 | 4 |
| $i_{kl} = 1$, $i_{kr} = n$ | 0, 1, 2 | 3 |
| $i_{kl} = i_k$, $i_{kr} = n$ | 0, 2 | 2 |
| $i_{kl} = 1$, $i_{kr} = i_k$ | 0, 2 | 2 |
| $i_{kl} = i_k$, $i_{kr} \in (i_k, n)$ | 0, 1 | 2 |
| $i_{kl} \in (1, i_k)$, $i_{kr} = i_k$ | 0, 1 | 2 |
| $i_{kl} = i_k$, $i_{kr} = i_k$ | 0, 2 | 2 |

| Types of $[i_{kl}, i_{kr}]$: $i_k \in \{1, n\}$ and ... | Coef. of λ | # cases |
| --- | --- | --- |
| $i_{kl}, i_{kr} \in \{1, n\}$ | 0, 1 | 2 |
| $i_{kl} = i_k = 1$, $i_{kr} \in (1, n)$ | 0, 1, 2 | 3 |
| $i_{kl} \in (1, n)$, $i_{kr} = i_k = n$ | 0, 1, 2 | 3 |

For example, suppose the λ-ranges depicted by rounded rectangular boxes in Figure 6(b) are the λ-ranges in $\Lambda_{k-1}$ that have the same maximal s-interval containing $i_k$, and their node status change interval solution, in a form similar to (7), is shown in Figure 6(a). $\tau_1$, $\tau_2$ and $\tau_3$ are threshold values that divide $[0, \infty)$ into 4 segments, each of which corresponds to a particular node status change interval solution. Here, we show the case with 4 solutions, separated by 3 thresholds, which is the maximum number possible (see Table 1). This is a different case from (7), where we have 3 solutions.

Then, we intersect these λ-ranges, which have the same maximal s-interval containing $i_k$ (Figure 6(b)), with the ranges of λ from the node status change interval solution (Figure 6(a)). The result is shown in Figure 6(c), where some λ-ranges are split into multiple λ-ranges by the threshold values of λ that fall within them. Each of the resulting λ-ranges takes the node status change interval solution from the segment of λ-values (Figure 6(a)) that it belongs to, as written in the rectangular boxes in Figure 6(c). For each of these λ-ranges, say a λ-range $\Lambda$ with the node status change interval solution $[i_{k1}, i_{k2}](\Lambda)$, its minimum cut in $G_k$ is $(S_k(\Lambda), T_k(\Lambda))$, where $T_k(\Lambda)$ is $T_{k-1}(\Lambda) \cup [i_{k1}, i_{k2}](\Lambda)$.

After we perform this step on all groups of λ-ranges that share the same maximal s-interval containing $i_k$ in $G_{k-1}$, we obtain a set of λ-ranges spanning $[0, \infty)$, which together form the set $\Lambda_k$.
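The intersection step can be sketched as follows. This is a minimal illustration, not the paper's data structure: the function name `split_ranges`, the representation of a λ-range as a `(lo, hi)` pair, and the integer segment index are assumptions, and open versus closed endpoints are ignored.

```python
import bisect
import math


def split_ranges(ranges, thresholds):
    """Split each (lo, hi) range at the thresholds that fall strictly inside it.

    `thresholds` must be sorted. Each output piece is (lo, hi, seg), where
    seg is the index of the threshold segment (0 for [0, tau_1), and so on)
    whose node status change interval solution the piece inherits.
    """
    pieces = []
    for lo, hi in ranges:
        cuts = [t for t in thresholds if lo < t < hi]
        points = [lo] + cuts + [hi]
        for a, b in zip(points, points[1:]):
            seg = bisect.bisect_right(thresholds, a)
            pieces.append((a, b, seg))
    return pieces


group = [(0.0, 0.5), (0.5, 1.5), (2.5, 4.0), (4.0, math.inf)]
pieces = split_ranges(group, [1.0, 2.0, 3.0])
```

In this example, two of the three thresholds fall inside a range of the group, so the four ranges become six; a threshold that falls in a gap between the group's ranges creates no new range.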


Figure 6: At iteration $k$, we group together the λ-ranges that have the same maximal s-interval containing $i_k$ in the minimum cut of $G_{k-1}$. Let the λ-ranges in (b) be an example of such a group for a specific maximal s-interval containing $i_k$. The node status change interval solution can be computed accordingly for this s-interval. An example of such a solution is illustrated in (a). This solution (a) is then applied to the λ-ranges in (b), resulting in the set of λ-ranges in (c), with their node status change interval solutions written in their respective rectangular boxes.

3.3 Outputs of the PoS Algorithm

The Path of Solutions algorithm divides the range of

nonnegative λ values, [0,∞), into a set of smaller

ranges, which are then subsequently divided into

smaller ranges in each iteration.

At the end of PoS, we obtain the set of λ-ranges, $\Lambda_n$. We show in Section 5 that the number of λ-ranges in $\Lambda_n$ is at most $n^3$ for $n \ge 4$. For an interval $\Lambda$ in $\Lambda_n$, we have an associated sequence of graph cuts $\{(S_k(\Lambda), T_k(\Lambda))\}_{k=0}^{n}$. We then obtain the solution $x^*(\lambda)$ for $\lambda \in \Lambda$ based on the HL-algorithm by setting $x_i^*(\lambda)$ to $a_{i_k}$, the $k$-th smallest breakpoint, for the index $k$ such that $i \in S_{k-1}(\Lambda)$ and $i \in T_k(\Lambda)$.
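The recovery rule just described amounts to recording, for each node, the iteration at which it first enters the sink set. A minimal sketch, assuming the sink sets are given as nested Python sets and the breakpoints are already sorted (the function name `recover_solution` is hypothetical):

```python
def recover_solution(sink_sets, breakpoints):
    """Map each node i to the breakpoint of the iteration where it joins T_k.

    sink_sets[k] is T_k for k = 0, 1, ..., with T_0 empty and
    T_{k-1} a subset of T_k; breakpoints[k - 1] is the k-th smallest
    breakpoint. Nodes that never enter a sink set are left out.
    """
    x = {}
    for k in range(1, len(sink_sets)):
        # Nodes in S_{k-1} that move to T_k take the k-th smallest breakpoint.
        for i in sink_sets[k] - sink_sets[k - 1]:
            x[i] = breakpoints[k - 1]
    return x


x_star = recover_solution([set(), {2}, {1, 2}, {1, 2, 3}], [0.1, 0.4, 0.9])
```

Here node 2 joins the sink set first and takes the smallest breakpoint, followed by nodes 1 and 3.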

PoS solves $\ell_1$-PFL in $O(n^2 q \log n)$. We show this in Section 5 as well.

4 PATH OF SOLUTIONS ALGORITHM FOR CONVEX PIECEWISE LINEAR FIDELITY FUNCTIONS

Here, we address the path of solutions for a general fused lasso problem with convex piecewise linear fidelity functions, or PFL (1):

$$
\text{(PFL)} \quad \min_{x_1, \ldots, x_n} \ \sum_{i=1}^{n} f_i^{pl}(x_i) + \lambda \sum_{i=1}^{n-1} |x_i - x_{i+1}| \tag{1}
$$

The extension from $\ell_1$-PFL to PFL can be done in a similar way as the extension of the HL-algorithm on GIMR from the case of $\ell_1$-fidelity functions to convex piecewise linear fidelity functions, given by Hochbaum and Lu (2017). We give a brief description here:

Let the number of breakpoints of $f_i^{pl}(x_i)$ be $q_i$, and denote them by $a_{i,1}, a_{i,2}, \ldots, a_{i,q_i}$. The total number of breakpoints is $\sum_{i=1}^{n} q_i$, denoted by $q$. We sort the $q$ breakpoints in ascending order with the indices $(i_1, j_1), (i_2, j_2), \ldots, (i_q, j_q)$ such that $a_{i_1, j_1} < a_{i_2, j_2} < \ldots < a_{i_q, j_q}$.

The PoS algorithm applied to PFL involves the computation of the minimum cuts of the graphs $G_0, \ldots, G_q$, for different λ-ranges, which is done in a similar manner as when PoS is applied to $\ell_1$-PFL in Section 3. The difference here comes from the update of $G_{k-1}$ to $G_k$, which is described in Section 2. The two graphs differ in the weights of the source-adjacent and sink-adjacent arcs of node $i_k$. In $G_k$, $w_{s,i_k} = \max(-(f_i^{pl})'(a_{i_k}), 0)$ and $w_{i_k,t} = \max((f_i^{pl})'(a_{i_k}), 0)$. The maximal s-interval of interest when finding the node status change interval in iteration $k$ is the maximal s-interval containing $i_k$, as when we solve $\ell_1$-PFL.
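The arc-weight update can be illustrated with a short sketch. It assumes that a convex piecewise linear function is stored as a sorted list of breakpoints plus the slope of each linear piece, and that the derivative at a breakpoint is taken to be the right-hand slope; the text does not specify this convention, and the helper names `right_slope` and `arc_weights` are hypothetical.

```python
import bisect


def right_slope(breakpoints, slopes, x):
    """Right-hand slope at x of a piecewise linear function.

    slopes[j] is the slope on the j-th piece, so len(slopes) must equal
    len(breakpoints) + 1. Using the right-hand slope at a breakpoint is an
    assumption made for this sketch.
    """
    return slopes[bisect.bisect_right(breakpoints, x)]


def arc_weights(slope):
    """Return (w_source, w_sink) = (max(-f', 0), max(f', 0))."""
    return max(-slope, 0.0), max(slope, 0.0)


# Example: f(x) = 2 * |x - 1| has one breakpoint at 1 and slopes -2 and 2.
w_before = arc_weights(right_slope([1.0], [-2.0, 2.0], 0.5))
w_at = arc_weights(right_slope([1.0], [-2.0, 2.0], 1.0))
```

At most one of the two weights is nonzero: a negative slope puts the weight on the source-adjacent arc, and a positive slope puts it on the sink-adjacent arc.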

5 BOUND OF THE NUMBER OF SOLUTIONS AND TIME COMPLEXITY

We first provide the following lemma and theorem, which lead to the conclusion that the number of different solutions to PFL across all nonnegative λ is equal to the number of λ-ranges in $\Lambda_n$.

Lemma 5.1. The set of λ-ranges obtained from iteration $k$ of PoS, $\Lambda_k$, has the following property: two different nonnegative values of λ, $\lambda_a$ and $\lambda_b$, belong to the same λ-range in $\Lambda_k$ if and only if $T_j(\lambda_a) = T_j(\lambda_b)$ for all $j = 0, 1, \ldots, k$.

Proof. Suppose $T_j(\lambda_a) \ne T_j(\lambda_b)$ for some $j \in \{0, 1, \ldots, k\}$. Let $h$ be the smallest index such that $T_h(\lambda_a) \ne T_h(\lambda_b)$. We know that $h \ge 1$ since $T_0(\lambda_a) = T_0(\lambda_b) = \{\}$. It follows that $T_j(\lambda_a) = T_j(\lambda_b)$ for all $j \le h - 1$. Hence, $\lambda_a$ and $\lambda_b$ are in the same λ-range in $\Lambda_{h-1}$. Since $T_h(\lambda_a) \ne T_h(\lambda_b)$ and $T_{h-1}(\lambda_a) = T_{h-1}(\lambda_b)$, the node status change interval going from $G_{h-1}$ to $G_h$ for $\lambda_a$, which is $T_h(\lambda_a) \setminus T_{h-1}(\lambda_a)$, and that for $\lambda_b$, which is $T_h(\lambda_b) \setminus T_{h-1}(\lambda_b)$, must be different. After the partition of their common λ-range in $\Lambda_{h-1}$, they must be in separate λ-ranges in $\Lambda_h$. Since any two λ-ranges never merge, they must also be in different λ-ranges in $\Lambda_k$. Hence, by contraposition, we have proven that if $\lambda_a$ and $\lambda_b$ are in the same λ-range in $\Lambda_k$, then $T_j(\lambda_a) = T_j(\lambda_b)$ for all $j = 0, 1, \ldots, k$.

For the opposite direction of the proof, suppose $\lambda_a$ and $\lambda_b$ are not in the same λ-range in $\Lambda_k$. Let $h$ be the largest index such that $\lambda_a$ and $\lambda_b$ are in the same λ-range in $\Lambda_h$ (such an $h$ exists because $\lambda_a$ and $\lambda_b$ are in the same range $[0, \infty) \in \Lambda_0$). From the proof of the first direction, it follows that $T_h(\lambda_a) = T_h(\lambda_b)$. Since $\lambda_a$ and $\lambda_b$ are not in the same λ-range in $\Lambda_{h+1}$, their node status change intervals, denoted by $[i_{k1}, i_{k2}](\lambda_a)$ and $[i_{k1}, i_{k2}](\lambda_b)$, are different. Hence, $T_{h+1}(\lambda_a)$, which is equal to $T_h(\lambda_a) \cup [i_{k1}, i_{k2}](\lambda_a)$, and $T_{h+1}(\lambda_b)$, which is equal to $T_h(\lambda_b) \cup [i_{k1}, i_{k2}](\lambda_b)$, must be different.

Theorem 5.2. The solutions of PFL (1) for two different values of λ, $x^*(\lambda_a)$ and $x^*(\lambda_b)$, are equal if and only if $\lambda_a$ and $\lambda_b$ are in the same λ-range in $\Lambda_n$.

Proof. It follows from Lemma 5.1 that $\lambda_a$ and $\lambda_b$ are in the same λ-range in $\Lambda_n$ if and only if $T_j(\lambda_a) = T_j(\lambda_b)$ for all $j = 0, 1, \ldots, n$.

The threshold theorem implies that, if $T_j(\lambda_a) = T_j(\lambda_b)$ for $j = 0, \ldots, n$, then $x^*(\lambda_a) = x^*(\lambda_b)$.

If $T_j(\lambda_a) \ne T_j(\lambda_b)$ for some $j \in \{0, \ldots, n\}$, then there is a node $i$ such that $i \in T_j(\lambda_a)$ but $i \notin T_j(\lambda_b)$ (or $i \notin T_j(\lambda_a)$ but $i \in T_j(\lambda_b)$). Suppose $i \in T_j(\lambda_a)$ but $i \notin T_j(\lambda_b)$. It follows that $x_i^*(\lambda_a) \le a_j < x_i^*(\lambda_b)$. Hence, $x^*(\lambda_a) \ne x^*(\lambda_b)$.

The bounds on the number of solutions to $\ell_1$-PFL and PFL across all $\lambda \ge 0$ are given in Theorems 5.3 and 5.4.

Theorem 5.3. The number of different optimal solutions of $\ell_1$-PFL (4) across all λ values is at most $\frac{n^3 + 3n^2 + 2n + 2}{2}$, which is smaller than $n^3$ when $n \ge 4$.

Proof. Theorem 5.2 implies that the number of solutions is equal to the number of λ-ranges in $\Lambda_n$.

Iteration $k$ of the algorithm involves dividing the λ-ranges in $\Lambda_{k-1}$ into groups based on the maximal s-interval containing $i_k$ in $G_{k-1}$ and then solving for the node status change interval solution for each maximal s-interval containing $i_k$.

The node status change interval solution for each maximal s-interval containing $i_k$ may consist of multiple solutions, similar to how (7) consists of multiple cases. If there are $p$ cases of the node status change interval solution, there will be $p - 1$ λ-threshold values that cut into the set of λ-ranges that have the same maximal s-interval containing $i_k$. In Figure 6, for example, $p = 4$. In (7), $p = 3$. Each threshold contributes an increase of at most one additional λ-range. In Figure 6(c), there is an increase of 3 λ-ranges from Figure 6(b) after we use the λ-threshold values to split the λ-ranges in Figure 6(b).

Our summary in Table 1 shows that the number of cases of the node status change interval solution for any type of maximal s-interval is at most 4. This implies that for a set of λ-ranges in $\Lambda_{k-1}$ that have the same maximal s-interval containing $i_k$, there will be at most 3 threshold values of λ that cut through them, and the increase in the number of λ-ranges in $\Lambda_k$ due to this maximal s-interval can be at most 3.

Since the number of s-intervals that contain $i_k$, or the number of $[i_{kl}, i_{kr}]$ such that $1 \le i_{kl} \le i_k \le i_{kr} \le n$, is $i_k(n - i_k + 1)$, the increase in the number of λ-ranges from $\Lambda_{k-1}$ to $\Lambda_k$ is at most $3 i_k (n - i_k + 1)$.

$\Lambda_0 = \{[0, \infty)\}$ has only one λ-range. After $n$ iterations, the number of λ-ranges in $\Lambda_n$ is at most

$$
1 + \sum_{i_k=1}^{n} 3 i_k (n - i_k + 1) = 1 + \frac{n(n+1)(n+2)}{2} = \frac{n^3 + 3n^2 + 2n + 2}{2}.
$$

For $n \ge 4$, this bound is smaller than $n^3$.
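The closed form and the comparison with $n^3$ can be checked numerically. The snippet below is only a sanity check of the arithmetic in the proof; the function name `range_bound` is hypothetical.

```python
def range_bound(n):
    """Bound on the number of lambda-ranges after n iterations: 1 + sum of 3i(n-i+1)."""
    return 1 + sum(3 * i * (n - i + 1) for i in range(1, n + 1))


for n in range(1, 200):
    # Closed form (n^3 + 3n^2 + 2n + 2) / 2, written without division.
    assert 2 * range_bound(n) == n**3 + 3 * n**2 + 2 * n + 2
    # Over this tested range, the bound drops below n^3 exactly from n = 4 onward.
    assert (range_bound(n) < n**3) == (n >= 4)
```

For instance, the bound evaluates to 61 for $n = 4$, against $n^3 = 64$.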

Theorem 5.4. The number of different optimal solutions of PFL (1), with $q$ breakpoints, across all nonnegative λ is at most $1 + \frac{3q(n+1)^2}{4}$, which is smaller than $n^2 q$ for $n \ge 7$.

Proof. The proof is similar to that of Theorem 5.3. In each graph update, or each iteration, say iteration $k$ of the algorithm, the increase in the number of λ-ranges in $\Lambda_k$ from $\Lambda_{k-1}$ can be at most 3 for each maximal s-interval. When solving PFL with $q$ breakpoints, the number of iterations is $q$, as we update the graph from $G_0$ to $G_1$, and so forth, until $G_q$.

Suppose the $k$-th smallest breakpoint comes from the $i$-th fidelity function, $f_i^{pl}$. At iteration $k$, we do the computation on the maximal s-interval that contains node $i$. The increase in the number of λ-ranges in this iteration is then at most $3i(n - i + 1)$.

There are $q_i$ iterations where we consider the maximal s-interval that contains node $i$. Hence, this increase of at most $3i(n - i + 1)$ happens $q_i$ times. This implies that the number of λ-ranges in $\Lambda_q$ is bounded by $1 + \sum_{i=1}^{n} 3 q_i\, i (n - i + 1)$.

When $n$ is an odd number, $\max_{i=1,\ldots,n} i(n - i + 1) = \frac{(n+1)^2}{4}$. For an even number $n$, $\max_{i=1,\ldots,n} i(n - i + 1) = \frac{n^2 + 2n}{4} < \frac{(n+1)^2}{4}$. Hence, the number of λ-ranges in $\Lambda_q$ is at most $1 + \sum_{i=1}^{n} 3 q_i \frac{(n+1)^2}{4} = 1 + \frac{3q(n+1)^2}{4}$, which is at most $n^2 q$ for $n \ge 7$.
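The last comparison can be made explicit with a short calculation, which uses only that $q \ge 1$:

$$
n^2 q - \left(1 + \frac{3q(n+1)^2}{4}\right) = \frac{q\,(n^2 - 6n - 3)}{4} - 1.
$$

For $n \ge 7$, $n^2 - 6n - 3 = n(n - 6) - 3 \ge 4$, so the right-hand side is at least $q - 1 \ge 0$. The bound is therefore at most $n^2 q$, and strictly smaller whenever $q \ge 2$.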

Theorem 5.5. PFL (1) is solved by PoS in

ICPRAM 2024 - 13th International Conference on Pattern Recognition Applications and Methods

116

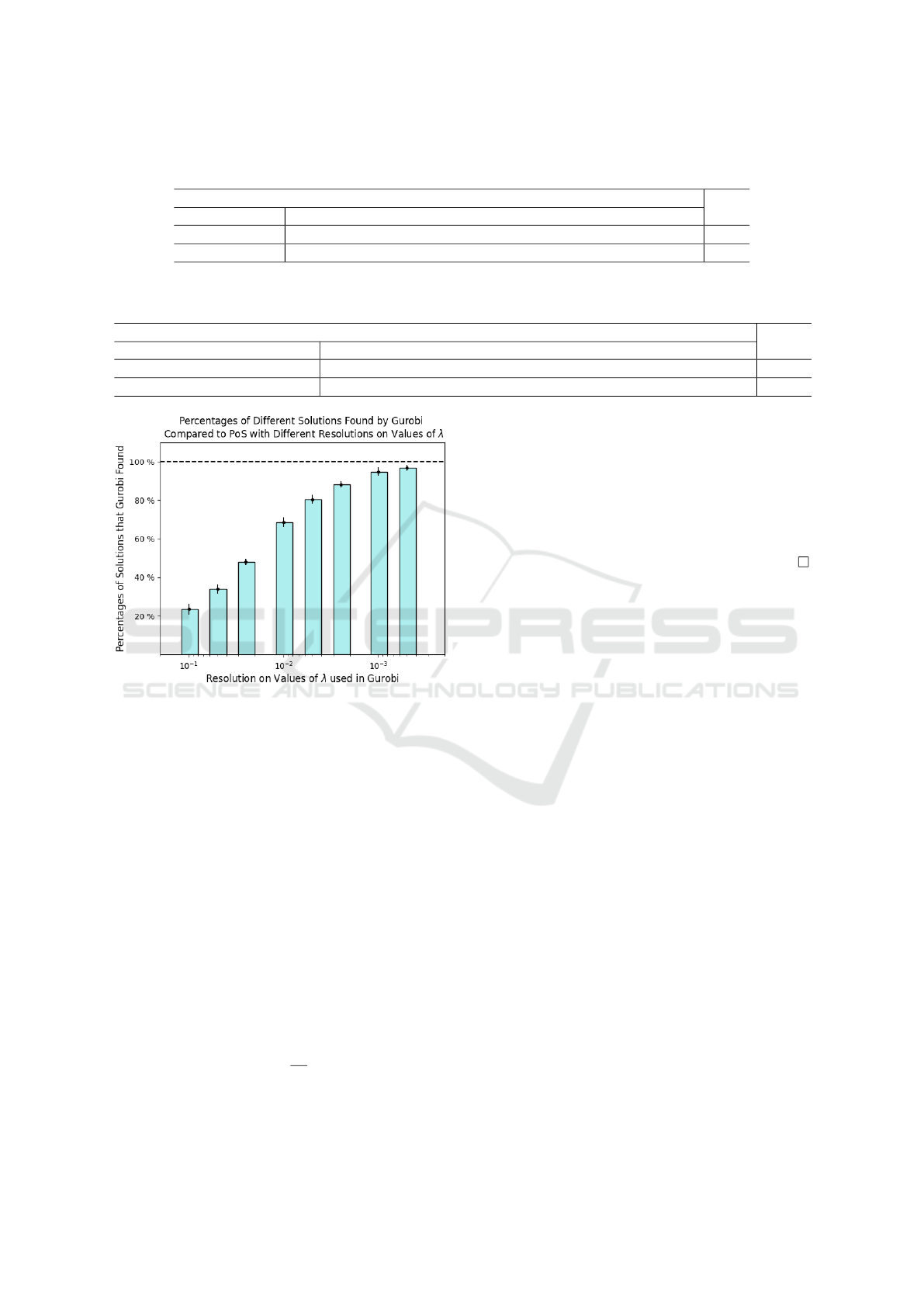

Table 2: The number of solutions found by our path of solutions algorithm and Gurobi as well as the computation times, taken

from one of the 10 experiments. For Gurobi, we vary the resolution of the list of values of λ that we provide to the solver.

Gurobi

PoS

Resolution (∆) 0.1 0.05 0.025 0.01 0.005 0.0025 0.001 0.0005

# Solutions 24 36 53 77 93 101 104 108 114

Time (s) 0.45 0.82 1.63 4.10 8.19 16.43 40.72 83.55 0.42

Table 3: Average percentage of solutions found by Gurobi compared to those found by our path of solutions algorithm as well

as the average relative computation time of Gurobi compared to the path of solutions algorithm, across 10 runs.

Gurobi

PoS

Resolution (∆) 0.1 0.05 0.025 0.01 0.005 0.0025 0.001 0.0005

Avg % solutions found 23% 34% 48% 69% 81% 88% 95% 97% 100%

Avg runtime compared to PoS 1.22 2.40 4.78 11.92 23.72 47.47 119.21 237.05 1

Figure 7: Percentages of different solutions of ℓ

1

-PFL

found by Gurobi with varying resolutions, averaged across

10 experiments. Error bars of 1 standard deviation are dis-

played atop the bar plots. PoS can find all solutions across

all λ, indicated by the dashed line at the 100% level.

O(n

2

qlogn) time where q is the number of break-

points of n convex piecewise linear fidelity functions.

Proof. Suppose the $k$-th smallest breakpoint comes from the $i$-th fidelity function, $f_i^{pl}$. At iteration $k$ of the algorithm, we compute the node status change interval for each group of λ-ranges in $\Lambda_{k-1}$ that have the same maximal s-interval containing $i$. There are $i(n - i + 1)$ such maximal s-intervals. This is also the number of node status change interval computations at iteration $k$, since we do one computation per maximal s-interval.

With the same reasoning as in the proof of Theorem 5.4, the number of node status change interval computations across the $q$ iterations is $\sum_{i=1}^{n} q_i\, i (n - i + 1)$, which is bounded by $\frac{n^2 q}{3}$ as shown earlier.

Hochbaum and Lu (2017) provided a method that performs a computation of the node status change interval in $O(\log n)$. By relying on the same method and the data structures used in that work, the total time complexity due to the node status change interval computation throughout the algorithm is $O(n^2 q \log n)$.

Other steps, such as the sorting of the breakpoints and the updates of the data structures, are dominated by the node status change interval computation time. Therefore, the time complexity of PoS in solving PFL for all $\lambda \ge 0$ is $O(n^2 q \log n)$.

6 EXPERIMENTS

To demonstrate the performance of PoS in finding different solutions for all $\lambda \ge 0$, we compare it to Gurobi, a state-of-the-art linear programming solver (Gurobi Optimization, LLC, 2023). We implemented PoS in Python and ran both PoS and Gurobi on the same laptop with an Apple M2 CPU and 16GB of RAM.

We evaluate both PoS and Gurobi on $\ell_1$-PFL (4), where the number of samples, $n$, is 100, and the weights $w_1, \ldots, w_n$ and the breakpoints $a_1, \ldots, a_n$ are sampled from a uniform distribution between 0 and 1.

PoS generates the solutions for all λ. However,

Gurobi solves the problem only for a specific value of

λ, which needs to be specified, one at a time. We pro-

vide a list of values of λ to Gurobi: [0, ∆, 2∆, ...,U].

∆ is the resolution for the grid search on the values of

λ, and U is the upper bound of λ. We take the value

of U from the result of PoS. We vary the resolution ∆

and report the result for all resolutions that we tested.
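The grid-search baseline can be mimicked on small instances without a commercial solver. The sketch below is not the Gurobi setup used in the experiments: it assumes that $\ell_1$-PFL has the form $\min_x \sum_i w_i |x_i - a_i| + \lambda \sum_i |x_i - x_{i+1}|$, uses a simple dynamic program over the candidate values $\{a_i\}$ (an optimal solution with values in this set exists for this form), and the function names are hypothetical. When several optima tie, the dynamic program returns one of them.

```python
def solve_l1_fused(a, w, lam):
    """Solve the assumed l1 fused lasso for one lam by DP over breakpoint values."""
    vals = sorted(set(a))
    m = len(vals)
    cost = [w[0] * abs(v - a[0]) for v in vals]
    back = []
    for i in range(1, len(a)):
        new_cost, arg = [], []
        for v in vals:
            # Best value for x_{i-1} given that x_i = v.
            j = min(range(m), key=lambda j: cost[j] + lam * abs(vals[j] - v))
            new_cost.append(cost[j] + lam * abs(vals[j] - v) + w[i] * abs(v - a[i]))
            arg.append(j)
        cost = new_cost
        back.append(arg)
    j = min(range(m), key=lambda j: cost[j])
    x = [vals[j]]
    for arg in reversed(back):  # trace the optimal path backwards
        j = arg[j]
        x.append(vals[j])
    return tuple(reversed(x))


def count_grid_solutions(a, w, delta, upper):
    """Number of distinct solutions found on the grid 0, delta, 2*delta, ..., upper."""
    steps = int(round(upper / delta))
    return len({solve_l1_fused(a, w, k * delta) for k in range(steps + 1)})
```

On the toy instance `a = [0, 1, 0]` with unit weights, the solution changes only once, near $\lambda = 0.5$, so a grid with $\Delta = 0.3$ up to $0.9$ already finds both solutions. A coarse grid can miss solutions whose λ-range is narrower than $\Delta$, which is the effect the experiments measure.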

We run the experiment 10 times. In each run, the weights and breakpoints are resampled. We measure the computation time of PoS and Gurobi as well as the number of different solutions that they found. The results for one particular run, as an example, are reported in Table 2. In this table, we report the number of different solutions found by both algorithms and their runtimes. The reported number of solutions for PoS is the true number of different solutions since it finds all solutions for all nonnegative λ.

As shown in Table 2, Gurobi, with a low resolution (large ∆), fails to find some solutions that correspond to values of λ not included in the list. With ∆ = 0.1, PoS and Gurobi have about the same computation time. However, Gurobi found only 24 different solutions while PoS was able to produce all 114 different solutions. As ∆ decreases, the list of λ for Gurobi becomes finer. Gurobi is able to find more different solutions, but it also takes longer. At the resolution of 0.0005, Gurobi found 108 solutions, which is close to the total number of different solutions. However, this takes Gurobi more than 80 seconds, while PoS achieves a better result in 0.42 seconds.

In Table 3 and Figure 7, we report the percent-

ages of solutions found by Gurobi compared to PoS,

averaged across 10 runs. A reported number of 100%

implies that we find all possible solutions. We also re-