A Concept for Daily Assessments During Nutrition Intake: Integrating Technology in the Nursing Process

Sandra Hellmers(1,a), Tobias Krahn(2,b), Martina Hasseler(3) and Andreas Hein(1,c)

(1) Assistance Systems and Medical Device Technology, Carl von Ossietzky University Oldenburg, Oldenburg, Germany

(2) OFFIS e.V. – Institute for Information Technology, Oldenburg, Germany

(3) Faculty of Health Sciences, Ostfalia University of Applied Sciences, Wolfsburg, Germany

Keywords:

Nursing Documentation, Nursing Process, Generative AI, Nutrition, Body Tracking, Functional Assessment,

Nursing Language.

Abstract:

The nursing process involves a cyclic sequence of functional and cognitive assessments and diagnosis, care

planning, implementation, and evaluation of care. Ideally, this process should be performed regularly and

documented in a standardized nursing language. However, due to the high workload of nurses, this approach

is not systematically followed. Therefore, we developed a concept that enables a daily, technology-supported

assessment during the activity of nutrition intake. For this purpose, we used camera-based body tracking

to derive the hand and relevant object trajectories to analyze the movements regarding assistance needs and

functional changes over time. We tested the approach of using generative AI to create training data sets. Our

feasibility study has shown that trajectories can be derived and analyzed regarding assistance requirements.

Although the quality is not yet satisfactory, generative AI can be used to create training data. Considering the

rapid pace of further developments in generative AI, the approach seems to be promising. In conclusion, we

believe that the technical support and documentation of the nursing process have the potential to increase the

quality of care while reducing the workload of nurses.

1 INTRODUCTION

Due to demographic change, the number of people reaching old age is increasing. This development

will also result in an increasing demand for health

and care services. The number of people in need of

care in Germany will increase by 37% by 2055 due

to ageing alone (Statistisches Bundesamt (Destatis),

2023). There is already a gap between the supply

of carers and the demand for care. Additionally, it

was shown that the patient-to-caregiver ratio has mea-

surable negative effects on patient mortality rates and

the stress experienced by caregivers (Höhmann et al.,

2016; Aiken et al., 2012).

To realize qualified, patient-centered, and needs-

based care, the nursing process was established,

which is a systematic approach to organizing nurs-

ing practice, nursing knowledge, and nursing care

(Hojdelewicz, 2021; Doenges and Moorhouse, 2012).

a

https://orcid.org/0000-0002-1686-6752

b

https://orcid.org/0009-0001-5619-8138

c

https://orcid.org/0000-0001-8846-2282

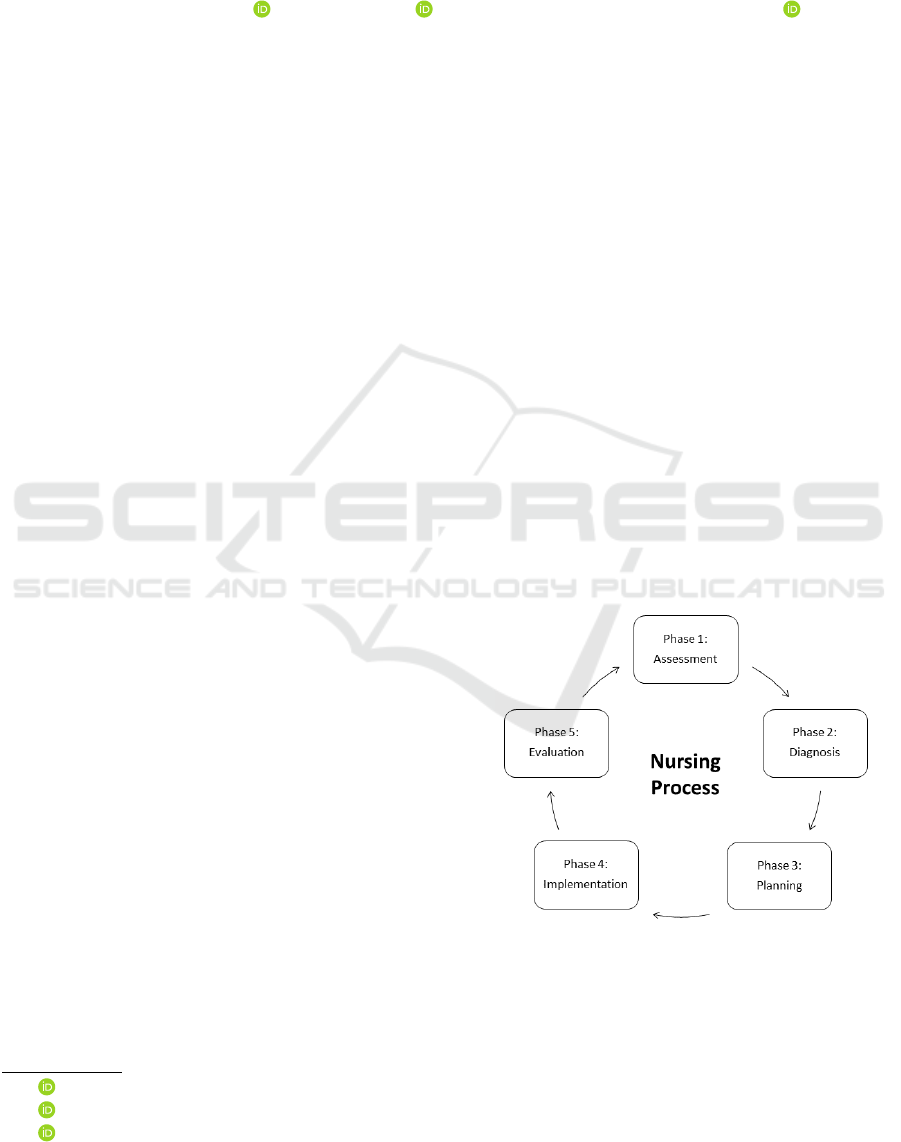

Figure 1: Five phases of the nursing process.

The five-phases model of the nursing process (Do-

enges and Moorhouse, 2012) is shown in Figure 1 and

starts with an assessment to collect information about

the patient, the diseases, and the functional status. In

phase two a diagnosis is made on the basis of the as-

sessment results. Phase three is the treatment and care

planning. The treatments and nursing actions are im-

Hellmers, S., Krahn, T., Hasseler, M. and Hein, A.

A Concept for Daily Assessments During Nutrition Intake: Integrating Technology in the Nursing Process.

DOI: 10.5220/0012428700003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 2, pages 613-619

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

613

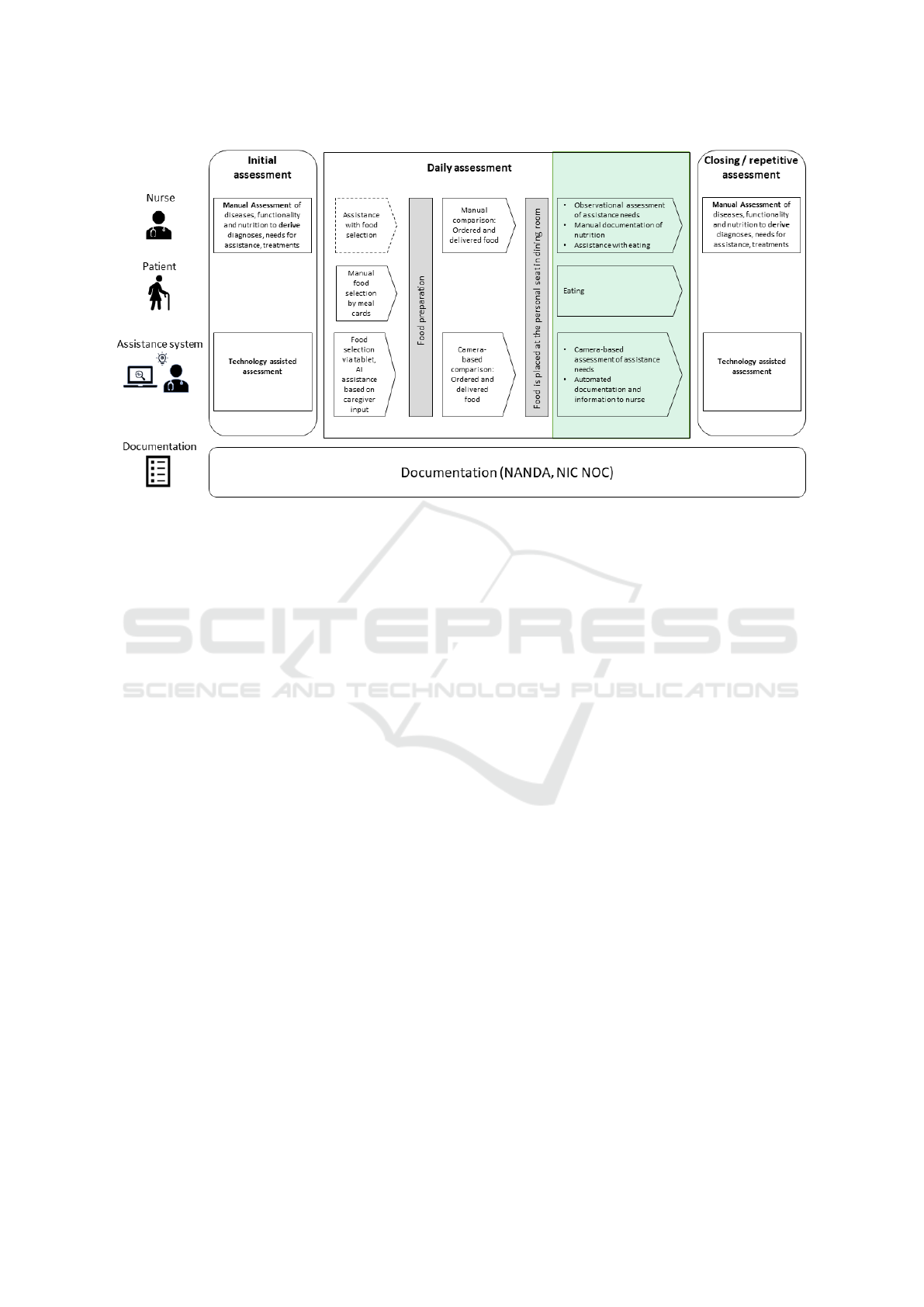

Figure 2: Assessment phases in a care process should be performed regularly. They can be done manually by a nurse or

technology assisted by an assistance system. The focus of this paper is to assess the activity of eating (green box).

plemented in phase four and evaluated in phase five.

Since the care process is of continuous nature, it starts

again with phase one.

Due to the high workload of nurses, there is of-

ten a lack of time to implement the nursing process

regularly at short intervals and, above all, to docu-

ment nursing care adequately. The use of a standard-

ized nursing language is particularly important for the

implementation of electronic health records (EHR)

(Lunney, 2006). Standardized nursing language in-

cludes NANDA (Ackley and Ladwig, 2010) for nurs-

ing diagnoses, the Nursing Interventions Classifica-

tion (NIC) (Bulechek et al., 2012), which describes

the activities that nurses perform as a part of the plan-

ning phase and the Nursing Outcomes Classification

(NOC) (Moorhead et al., 2023) to evaluate the effects

of nursing care.

Ongoing technological development is increasingly

finding its way into the care sector and can sup-

port nurses in their daily work. There are already

a number of approaches to technology-assisted as-

sessments (Hellmers, 2021), technical systems to re-

duce the physical strain on nurses (Brinkmann et al.,

2022), and applications of extended reality (XR) in

the care context (Carroll et al., 2020; Wüller et al., 2019). Artificial intelligence (AI) in nursing is mainly used for early disease detection and clinical decision-making, support systems for patient monitoring and workflow optimisation, and nursing training and education (Martinez-Ortigosa et al., 2023). Newer developments such as generative AI (GenAI) also offer interesting possibilities, as they are able to generate synthetic data, which can be used to augment training

data and create diverse datasets for research and med-

ical training (Lancet Regional Health-Europe, 2023).

Therefore, we will focus in this article on a holistic

approach to the technical support of the care process,

considering the application of GenAI. Figure 2 shows

the assessment phase of the care process and the ac-

tions of the nurse, the patient, and the assistance sys-

tem in this phase. This article focuses on the daily

assessment (green box). However, an initial assess-

ment is performed upon admission to a care facility to

determine the patient’s functional status, identify any

assistance needed, and plan care. For short-term stays, a closing assessment is usually performed before discharge to determine the success of treatment. For

longer stays, the assessment should be repeated regu-

larly as part of the repetitive care process. The assess-

ments can be done manually by a nurse or technology-

assisted.

High-frequency assessments can also be realized by

monitoring and analyzing daily activities like nutri-

tion intake. Inadequate nutritional and hydration sta-

tus in older people with healthcare needs has rele-

vant negative effects on the immune system, cogni-

tive function, and physical mobility. It is also a risk

factor for susceptibility to infection, delayed wound

healing, falls, delirium, altered metabolism of medi-

cations, deterioration of physical and cognitive func-

tion, and other adverse reactions (Volkert et al., 2022;

Feldblum et al., 2009). The high relevance of nutri-

tion and hydration for maintaining functional status,

autonomy, and quality of life (Volkert et al., 2022) is

HEALTHINF 2024 - 17th International Conference on Health Informatics


a major motivation for this work.

The food selection is often done by the patients

via meal cards and can be assisted by nurses if nec-

essary. Technological systems can also assist the pa-

tient with the food selection based on the individual

nurses’ input for each patient. After food preparation,

the nurses check if the ordered and delivered food

matches. This comparison is relevant for disease-

specific diets, allergies, or if the patient can only eat

soft food. Object recognition by assistance systems

can also be used for this comparison. Since food intake is the focus of this paper (green box in Figure 2),

the phase of eating is relevant to derive requirements

for assistance needs and changes in the functional

status. The assessment can be conducted through

observation and manual documentation by a nurse.

This observational assessment can also be supported

by a camera-based assessment, in which the assis-

tance systems derive assistance needs and functional

changes based on activity recognition and the analy-

sis of the activities, for example, the hand trajectories

while eating. The results can be automatically doc-

umented in a standardized language (NANDA, NIC,

NOC). In this case, the nurses can screen the informa-

tion and plan the nursing care regarding the nursing

process.

In summary, we concentrate on the overall con-

cept of a technology-assisted daily assessment using

new technological developments and focus on the fol-

lowing research questions:

• What can a high-frequency assessment look like in

which the caregiver and the assistance system sup-

port each other in a meaningful way?

• How can new technological developments such as

generative AI be used?

• How can a standardized care language be imple-

mented in this concept?

2 METHODS

2.1 Concept of Daily Assessments

One point of criticism of monitored assessments is

that they only represent selective measurements and

often no progressions are recorded. The aim is there-

fore to derive care-relevant assessment parameters

from complex everyday activities. To do this, com-

plex activities must first be recognized and relevant

parameters derived on the basis of their performance.

Deficits in self-care result for example from lack of

hand functionality and coordination skills. For sen-

sory recognition of activities, aspects such as con-

textualization and parallelism must be taken into ac-

count (actions have a certain duration and sequence

and may also involve interaction with objects). The

digital information should be collected uniformly and

documented in a standardized language. Nursing professionals assess the information collected through technology, extend it with their own observations, and may in the future use a decision support system to determine and implement practical interventions for nutrition and hydration. With regard to activating care,

nursing interventions are also examined in order to

derive and document suitable strategies for food and

fluid intake. Based on the successful strategies, self-

help can be supported in a targeted manner and the

care staff can be relieved.

2.2 AI-Generated Norm-Trajectories

and Real Measurements

As a specific use case we concentrated on eating soup

in this paper, since holding and moving a spoon with-

out spilling can be quite challenging for people with

functional disabilities. Three study participants took

part in the study to demonstrate the feasibility of our

concept. We used the Azure Kinect DK RGB-D camera and its Azure Kinect Body Tracking SDK in the DirectML processing mode. The trajectories of the right hand, right wrist, and right hand tip were calculated. Since the mouth cannot be tracked with the Azure Kinect Body Tracking SDK, we calculated the

trajectories of the key points head and nose. The tra-

jectories were filtered with a first-order Butterworth

filter with a cutoff frequency of 5 Hz to reduce noise.
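The filtering step can be illustrated with a minimal sketch. It implements a first-order Butterworth low-pass via the bilinear transform in plain Python. The 5 Hz cutoff is taken from the text; the sampling rate of 30 Hz, the function names, and the steady-state initialisation are assumptions made for illustration and are not stated in the paper.

```python
import math


def butterworth_lowpass_1st(samples, cutoff_hz=5.0, sample_rate_hz=30.0):
    """First-order Butterworth low-pass (bilinear transform, pre-warped cutoff).

    sample_rate_hz is an assumed value; cutoff_hz must be below half of it.
    """
    if not samples:
        return []
    k = math.tan(math.pi * cutoff_hz / sample_rate_hz)
    b = k / (1.0 + k)            # feed-forward coefficients, b0 == b1
    a1 = (k - 1.0) / (k + 1.0)   # feedback coefficient
    # Start in steady state so a constant input shows no start-up transient.
    x_prev = samples[0]
    y_prev = samples[0]
    filtered = []
    for x in samples:
        y = b * x + b * x_prev - a1 * y_prev
        filtered.append(y)
        x_prev, y_prev = x, y
    return filtered


def filter_trajectory(points, cutoff_hz=5.0, sample_rate_hz=30.0):
    """Filter each coordinate axis of a list of (x, y, z) key points independently."""
    axes = list(zip(*points))
    smoothed = [butterworth_lowpass_1st(list(a), cutoff_hz, sample_rate_hz) for a in axes]
    return [tuple(p) for p in zip(*smoothed)]
```

This causal form introduces a small phase delay. Whether a zero-phase (forward-backward) variant was used in the study is not stated, so the sketch keeps the simpler single pass.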

We also generated AI-based videos with the RunwayML Gen-2 text-to-video tool (RunwayML, 2023) using the prompt: "Old man is sitting at a table and eats soup. He holds a spoon in the right hand". We

performed a manual body and object tracking of the

AI-generated videos. However, machine learning so-

lutions like MediaPipe (Lugaresi et al., 2019) can be

used for automatic tracking.

3 RESULTS

3.1 Concept of Daily Assessment

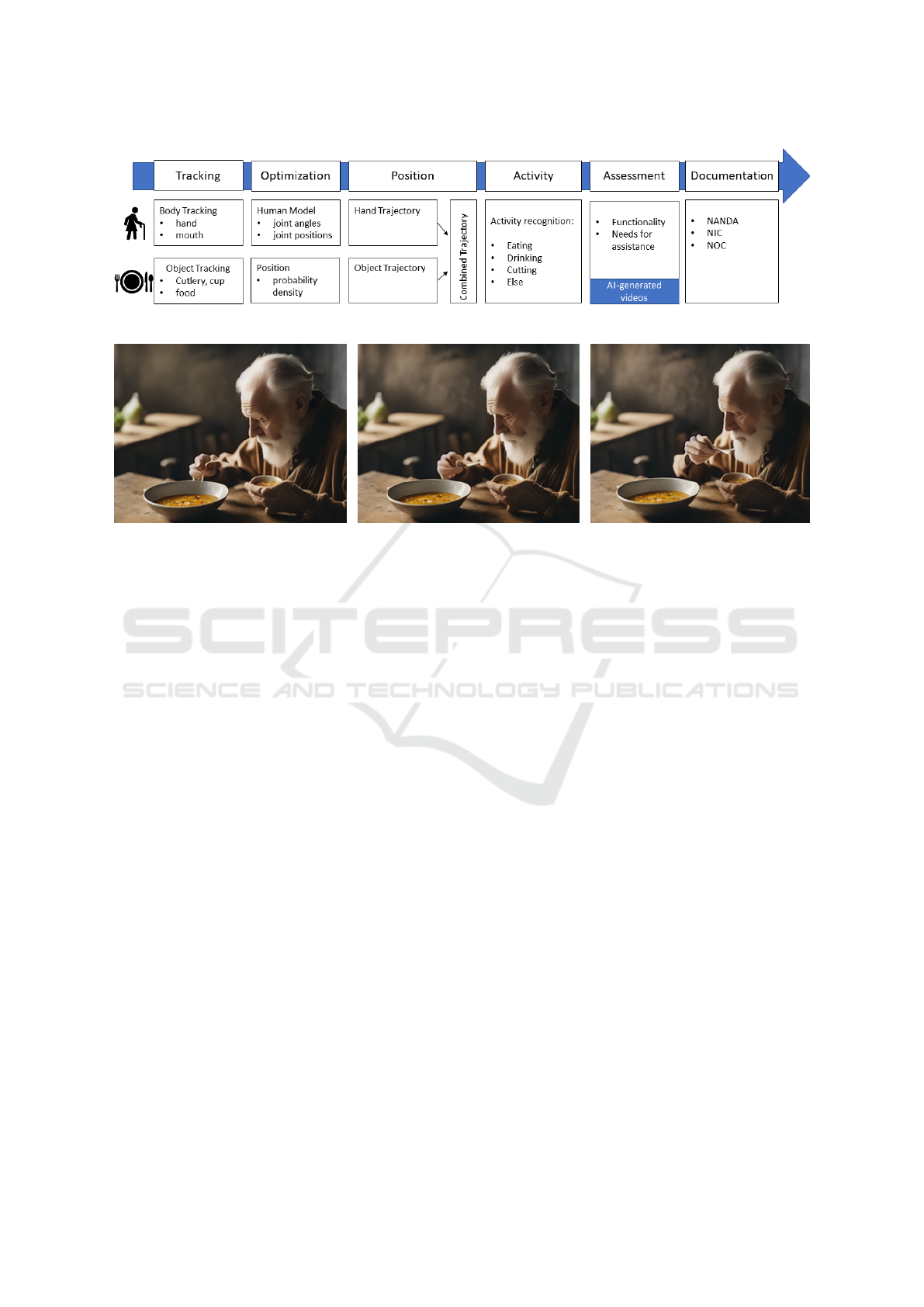

Figure 3: Technical process of activity recognition and assessment.

Figure 4: Screenshots of an AI-generated video of an old man eating soup (RunwayML, 2023).

Figure 3 shows the concept of a camera-based technology-supported assessment. The main aspect of realizing such an assessment is the recognition of the human and the food. Therefore, body tracking is used to recognize the position of the mouth and the hand. Object recognition is used to determine the position of the food and other relevant objects like cutlery or cups. For optimization of the body tracking, a

human body model should be considered. This model

consists of valid joint angles and relative positions so

that unrealistic positions are automatically removed.

For objects a position probability density should be

considered, to remove outliers and unrealistic posi-

tions as well as the problem of suddenly disappearing

objects for example due to occlusions. Based on the

optimized body tracking results the hand and object

trajectories can be calculated and related to each other

to get a combined trajectory. Analyzing the combined

trajectories leads to activity recognition. Machine

learning based methods for activity recognition can

be divided into two categories: Direct classification

and sequential modeling (Poppe, 2010). When the

temporal sequence of an activity is important, sequen-

tial models are required to represent this sequence in

the form of state models. Eating and drinking corre-

spond to a sequential activity: the glass must first be

grasped, then brought to the mouth and tilted slightly

in order to drink. Then the glass is put down again.

In the next step the activity can be analyzed and as-

sessed regarding functional and assistance needs. A

focus of inquiry could be the shapes of the trajecto-

ries (intentional movement, movement disorders such

as tremors) and the effectiveness of food consump-

tion, exemplified by the ability to hold and use cutlery

successfully.
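The sequential view of eating described above can be sketched as a small state machine over the combined hand-to-mouth trajectory. The example below is only an illustration: it uses a two-state model with hysteresis instead of a learned sequential model, and the state names and the distance thresholds `near_m` and `far_m` are hypothetical values, not parameters from the study.

```python
import math


def hand_to_mouth_distance(hand, mouth):
    """Euclidean distance between two (x, y, z) key points."""
    return math.dist(hand, mouth)


def count_intake_cycles(hand_track, mouth_track, near_m=0.10, far_m=0.30):
    """Count bowl -> mouth -> bowl cycles with a two-state machine.

    near_m and far_m are hypothetical hysteresis thresholds in metres.
    """
    state = "AWAY"   # hand is away from the mouth, e.g. at the bowl
    cycles = 0
    for hand, mouth in zip(hand_track, mouth_track):
        d = hand_to_mouth_distance(hand, mouth)
        if state == "AWAY" and d <= near_m:
            state = "AT_MOUTH"   # the hand has reached the mouth
        elif state == "AT_MOUTH" and d >= far_m:
            state = "AWAY"       # the hand has returned: one full cycle
            cycles += 1
    return cycles
```

Using two thresholds rather than one avoids counting several cycles when the hand hovers near a single boundary. The segmented cycles could then be passed on to the analysis of trajectory shape.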

Machine learning approaches often require a huge

set of training data of normal and pathological ac-

tivities. The ongoing development of generative AI

might be a game changer in this field. We proved the

concept of using AI-generated videos to create train-

ing data. Since the AI models are trained on many

videos mostly without pathological findings, these

videos are used as norm trajectories. The last step

includes the documentation of the assessment with a

standardized nursing language.

3.2 AI Generated Norm-Trajectories

We created AI-generated videos with textual input.

Figure 4 shows three screenshots of one video. This

video fits the description very well. From the patient

information (old man) and the delivered food (soup)

as well as the context (eating food while sitting at the

table), the correct objects (bowl, spoon) as well as

the correct trajectories (spoon to mouth) and hand ori-

entations (horizontal posture) are derived. However,

there are also some contextual errors or curiosities.

For example, the man is also holding a second plate

of soup in his left hand. The manual body and ob-

ject tracking is shown in Figure 5. The trajectories of

the mouth (green), the knuckles (blue), and the tip of

the spoon (orange) are visualized. The man sits in a

slightly bent posture during the video. There is almost

no movement of the upper body and the head. There-

fore, the position of the mouth varies only in a small

range. The trajectory of the hand starts with an arc to

fill the spoon with soup, followed by a direct trajec-

tory to the mouth. The video stops before the spoon


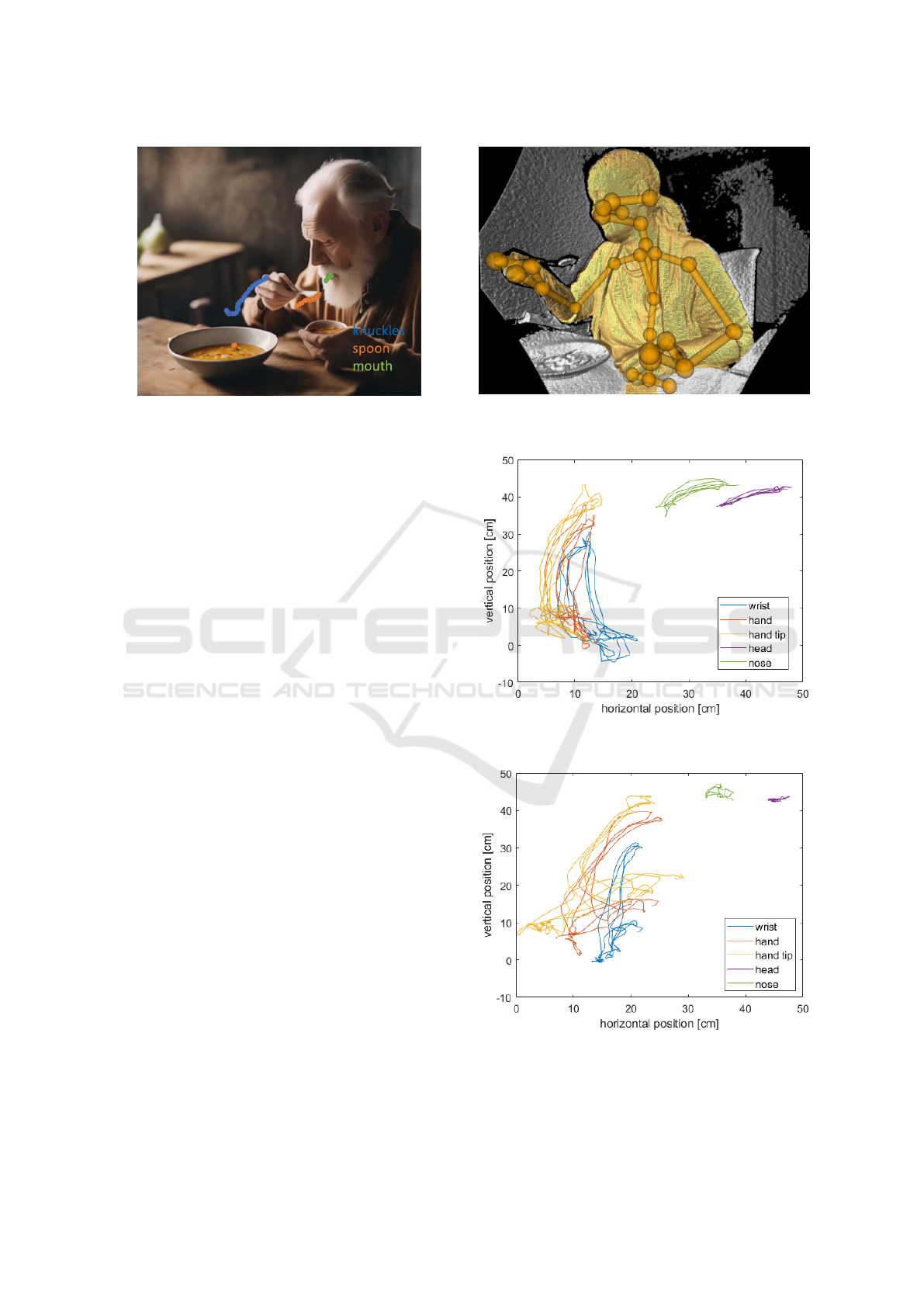

Figure 5: Manual tracking of mouth, knuckles, and spoon

in the AI-generated video (RunwayML, 2023).

reaches the mouth. The measurement of the trajectory

of the spoon (orange) is interrupted quite extensively

due to the disappearance of the spoon in the middle

of the video. The presented video is one of the best-fitting videos. The challenges and further observations in generating AI-based videos are described in the discussion (Section 4). However, the feasibility of

using generative AI to create training data for activity

recognition could be confirmed. A selection of some

further videos with similar textual prompts as input is

uploaded here (AI-generated Videos, 2023).
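The interrupted spoon trajectory mentioned above is a typical case for gap handling. As a minimal sketch, short runs of missing detections can be bridged by linear interpolation while long gaps are left open. The function name and the `max_gap` limit of five frames are assumptions for illustration; the study itself does not describe such a step for the manually tracked video.

```python
def fill_short_gaps(track, max_gap=5):
    """Linearly interpolate runs of None that are at most max_gap frames long.

    track is a list of (x, y) tuples, with None for frames in which the
    object was not detected. Longer gaps and gaps at either end stay None.
    """
    filled = list(track)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is not None:
            i += 1
            continue
        start = i                        # first missing frame of this gap
        while i < n and filled[i] is None:
            i += 1
        end = i                          # first valid frame after the gap, or n
        gap = end - start
        if start == 0 or end == n or gap > max_gap:
            continue                     # gap cannot or should not be bridged
        before, after = filled[start - 1], filled[end]
        for j in range(start, end):
            t = (j - start + 1) / (gap + 1)
            filled[j] = tuple(b + t * (a - b) for b, a in zip(before, after))
    return filled
```

Leaving long gaps as `None` keeps an extended disappearance of the spoon visible to later analysis instead of hiding it behind invented positions.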

3.3 Measurement of Real Trajectories

Figure 6 shows the body tracking results of one par-

ticipant based on depth data using the Azure Kinect

SDK. Similar to the tracking in the AI-generated

video, the trajectories of the relevant key points were

recorded and presented in Figure 7. For the right

hand, the key points are the wrist, the hand itself as

well as the hand tip. Since the mouth can’t be tracked

with the Azure Kinect SDK, the key points head and

nose are visualized. For better comparison and in-

terpretation of the trajectories with the video screen-

shot in Figure 6, a 2D representation was chosen.

However, the positions of the key points are avail-

able in 3D so that the perspective can be adjusted.

The trajectories for three cycles (spoon to mouth) are

shown. The trajectories are similar for each cycle.

The hand position is stable while bringing the spoon

to the mouth. The head is moved in the direction of

the spoon.

Figure 8 shows the trajectories of the same person

while eating with a fork. The trajectories differ from

eating with a spoon. There is a 90-degree tilt of the

hand while using a fork. It can also be seen that the

head movement varies in a smaller range than while

Figure 6: Body tracking of a real measurement of a person

eating with a spoon.

Figure 7: Trajectories of relevant key points of a person

eating with a spoon.

Figure 8: Trajectories of relevant key points of a person

eating with a fork.

eating with a spoon. Comparisons with trajectories of

the other study participants (not shown here) indicate


that each person has an individual trajectory, but the

trajectories stay similar during several cycles.
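One way to quantify how similar the trajectories stay across cycles is sketched below. Each cycle is resampled to the same number of points and the mean point-wise distance between two cycles is computed. This measure, the function names, and the choice of 20 resampling points are assumptions for illustration; the paper reports the similarity qualitatively.

```python
import math


def resample(cycle, n=20):
    """Linearly resample a list of (x, y) points to n points, evenly spaced in sample index."""
    if len(cycle) < 2 or n < 2:
        raise ValueError("need at least two points")
    last = len(cycle) - 1
    out = []
    for k in range(n):
        pos = k * last / (n - 1)      # fractional index into the original cycle
        i = min(int(pos), last - 1)
        t = pos - i
        p, q = cycle[i], cycle[i + 1]
        out.append(tuple(a + t * (b - a) for a, b in zip(p, q)))
    return out


def mean_cycle_distance(cycle_a, cycle_b, n=20):
    """Mean Euclidean distance between corresponding resampled points of two cycles."""
    ra, rb = resample(cycle_a, n), resample(cycle_b, n)
    return sum(math.dist(p, q) for p, q in zip(ra, rb)) / n
```

A small value for cycles of the same person, together with larger values between persons, would match the observation that trajectories are individual but repeatable.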

3.4 Documentation

The use of a standardized nursing language in daily work is particularly important for communication about patients and those in need of care between the various actors in health and nursing care (Bernhart-Just et al., 2010).

The nursing diagnosis, which can be derived from

the presented daily assessment is ”feeding self-care

deficit”, which includes for example the inability to

bring food to mouth, get food onto utensils, handle

utensils, open containers, pick up a cup or a glass

(Wilkinson, 2014; Herdman et al., 2021). These inabilities can be derived from the evaluated videos and the object and hand trajectories. Consider the example of eating soup: if the measured trajectory is similar to the (AI-)generated norm trajectories, no deficit in eating is coded. However, if the trajectory deviates from the norm trajectories, inabilities such as bringing food to the mouth or handling utensils can be assumed. In this case, a feeding deficit is documented.
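The comparison with norm trajectories can be made concrete with a small sketch. It uses dynamic time warping (DTW) as the similarity measure, which is a substitute chosen here for illustration and not a method named in the paper. The decision rule (distance to the closest norm trajectory) and the `threshold` value are likewise hypothetical.

```python
import math


def dtw_distance(traj_a, traj_b):
    """Dynamic time warping cost between two point sequences, normalised by their combined length."""
    n, m = len(traj_a), len(traj_b)
    if n == 0 or m == 0:
        raise ValueError("trajectories must not be empty")
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(traj_a[i - 1], traj_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m] / (n + m)


def feeding_deficit_suspected(measured, norm_trajectories, threshold=0.05):
    """Return True if the measured trajectory deviates from every norm trajectory.

    threshold is a hypothetical value that would need clinical calibration.
    """
    best = min(dtw_distance(measured, norm) for norm in norm_trajectories)
    return best > threshold
```

DTW tolerates differences in eating speed, so a slow but well-formed movement is not flagged only because it takes longer. A flagged result would still be a suggestion for the nurse to review, not a diagnosis.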

A relevant NOC outcome for these patients is

”Self-Care: Eating”, which is related to the patient’s

ability to prepare and ingest food and fluid indepen-

dently with or without assistive device. Relevant NIC

interventions include ”Feeding” for a patient who is

unable to feed him- or herself, or ”Self-Care Assis-

tance: Feeding,” which means assisting a person to

eat. ”Nutrition monitoring” as another intervention

involves the collection and analysis of patient data to

prevent or minimize malnutrition (Wilkinson, 2014).

Nursing activities also include ”Eating Techniques In-

struction” to demonstrate the proper use of utensils,

assistive devices, and adaptive activities to teach pa-

tients alternative methods of eating and drinking.
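How an assessment result might be written in the standardized terms named above is sketched below. The diagnosis, outcome, and intervention labels are taken from the text; the record layout, the field names, and the function are hypothetical and do not represent an existing EHR interface. No classification codes are included because the text gives none.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class AssessmentRecord:
    """Hypothetical record layout; field names are illustrative only."""
    patient_id: str
    activity: str
    nanda_diagnosis: Optional[str] = None
    noc_outcome: Optional[str] = None
    nic_candidates: List[str] = field(default_factory=list)
    reviewed_by_nurse: bool = False   # the nurse screens every entry


def document_eating_assessment(patient_id, deficit_suspected):
    """Build a record for the eating assessment using the labels from the text."""
    record = AssessmentRecord(patient_id=patient_id, activity="eating")
    if deficit_suspected:
        record.nanda_diagnosis = "Feeding self-care deficit"
        record.noc_outcome = "Self-Care: Eating"
        # Candidate interventions for the nurse to choose from, not a care plan.
        record.nic_candidates = [
            "Feeding",
            "Self-Care Assistance: Feeding",
            "Nutrition monitoring",
            "Eating Techniques Instruction",
        ]
    return record


def to_json(record):
    """Serialise the record, e.g. for transfer to a documentation system."""
    return json.dumps(asdict(record), ensure_ascii=False)
```

The `reviewed_by_nurse` flag reflects the concept that the system only prepares the documentation, while the nurse screens the information and plans the care.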

4 DISCUSSION

We developed a concept that enables a daily,

technology-supported assessment during the activity

of nutrition intake. For this purpose, we used camera-

based body tracking to derive the hand and rele-

vant object trajectories to analyze the movements re-

garding assistance needs and functional changes over

time. Additionally, we tested the feasibility of using

generative AI to create training data sets.

We were able to demonstrate the general imple-

mentation of the assessment concept in this paper

in a pilot study, although only the nursing diagnosis

of feeding self-care deficit can be included. Other nutrition-relevant nursing diagnoses like frailty syndrome, unbalanced diet, impaired swallowing, fluid imbalance, and inadequate fluid intake have not yet been considered. In particular, assessing the diagnoses of impaired swallowing and unbalanced diet could be realized in the next step via video analyses and digital before-and-after plate protocols.

Generative AI seems to be a promising approach

to creating training data, especially when considering the high pace of further developments. We expect that the quality of videos generated by text-to-video functions will increase considerably in the coming years. This makes it possible to generate training data sets without involving and burdening patients. It also holds the possibility of generating videos of patients with specific symptoms like tremors or paralysis in the future. However, in addition to the still poor match between text input and generated videos, and contextual or physical errors or abnormalities (second cup of soup, oversized spoon, spoon disappears or seems to melt), we also observed ethical issues. The videos with an "old person" as input often generated a clichéd video background with dark old wooden furniture. Stereotypes are also reproduced, e.g. by generating videos in which, instead of a woman eating soup, an old woman spills the soup and it runs down her chin.

This also shows the dangers of artificial intelligence in

terms of prejudice and stigmatization. Therefore, syn-

thetically generated data should be used with caution

and it is important to bear in mind that the expertise

of care professionals is required for the meaningful

training of AI and the integration of meaningful data.

This is particularly important if the AI is to take over

clinical pathways, disease progression, or the pre-

diction of deterioration and thus prepare the ground

for professional action for example in clinical deci-

sion support systems. According to the literature, IT-

and AI-based processes in nursing can support clini-

cal decisions or even generate automatic warning sys-

tems and thus also systematically support the nursing

workflow and enable personalized patient care (Sens-

meier, 2017; Buchanan et al., 2020). But new tech-

nologies in nursing influence the interaction between

the actors (caregivers and care recipients), the organizational processes in the nursing setting, and the associated information relationships between the actors (Zerth et al., 2021). Therefore, we suggest that decisions need to be made with the actors, especially the

nurses, about what data needs to be collected and in-

tegrated, for what reasons, and with what purpose, so

that it is adequate for the care process and decision-

making.


ACKNOWLEDGEMENTS

This work was funded by the Lower Saxony Ministry

of Science and Culture under grant number 11-76251-

12-10/19 ZN3491 within the Lower Saxony “Vorab”

of the Volkswagen Foundation and supported by the

Center for Digital Innovations (ZDIN). We would like

to thank Linda Büker and Lea Ortmann for their valuable input and feedback.

REFERENCES

Ackley, B. J. and Ladwig, G. B. (2010). Nursing diagnosis

handbook-e-book: An evidence-based guide to plan-

ning care. Elsevier Health Sciences.

AI-generated Videos (2023). https://cloud.uol.de/s/bKcKGy3QKG7LdEm.

Aiken, L. H., Sermeus, W., Van den Heede, K., Sloane,

D. M., Busse, R., McKee, M., Bruyneel, L., Raf-

ferty, A. M., Griffiths, P., Moreno-Casbas, M. T., et al.

(2012). Patient safety, satisfaction, and quality of hos-

pital care: cross sectional surveys of nurses and pa-

tients in 12 countries in europe and the united states.

BMJ, 344:e1717.

Bernhart-Just, A., Lassen, B., and Schwendimann, R.

(2010). Representing the nursing process with nursing

terminologies in electronic medical record systems: a

swiss approach. CIN: Computers, Informatics, Nurs-

ing, 28(6):345–352.

Brinkmann, A., Böhlen, C. F.-v., Kowalski, C., Lau, S.,

Meyer, O., Diekmann, R., and Hein, A. (2022).

Providing physical relief for nurses by collaborative

robotics. Scientific Reports, 12(1):8644.

Buchanan, C., Howitt, M. L., Wilson, R., Booth, R. G., Ris-

ling, T., and Bamford, M. (2020). Predicted influences

of artificial intelligence on the domains of nursing:

scoping review. JMIR nursing, 3(1):e23939.

Bulechek, G. M., Butcher, H. K., Dochterman, J. M. M.,

and Wagner, C. (2012). Nursing interventions classi-

fication (NIC). Elsevier Health Sciences.

Carroll, W. M. et al. (2020). Emerging technologies for

nurses: Implications for practice. Springer Publishing

Company.

Doenges, M. E. and Moorhouse, M. F. (2012). Application

of nursing process and nursing diagnosis: an interac-

tive text for diagnostic reasoning. FA Davis.

Feldblum, I., German, L., Bilenko, N., Shahar, A., Enten,

R., Greenberg, D., Harman, I., Castel, H., and Sha-

har, D. R. (2009). Nutritional risk and health care use

before and after an acute hospitalization among the el-

derly. Nutrition, 25(4):415–420.

Hellmers, S. (2021). Technikgestütztes klinisches Assessment der körperlichen Funktionalität für ältere Menschen. Verlag Dr. Hut.

Herdman, T. H., Kamitsuru, S., and Lopes, C. (2021).

NANDA International Nursing Diagnoses: Defini-

tions and Classification 2021-2023. New York:

Thieme, 12th edition.

Höhmann, U., Lautenschläger, M., and Schwarz, L. (2016).

Belastungen im pflegeberuf: Bedingungsfaktoren, fol-

gen und desiderate. Pflege-Report, pages 73–89.

Hojdelewicz, B. M. (2021). Der Pflegeprozess:

Prozesshafte Pflegebeziehung. Wien: facultas.

Lancet Regional Health-Europe, T. (2023). Embracing gen-

erative ai in health care. The Lancet Regional Health-

Europe, 30.

Lugaresi, C., Tang, J., Nash, H., McClanahan, C., Uboweja,

E., Hays, M., Zhang, F., Chang, C.-L., Yong, M., Lee,

J., et al. (2019). Mediapipe: A framework for per-

ceiving and processing reality. In Third workshop on

computer vision for AR/VR at IEEE computer vision

and pattern recognition (CVPR), volume 2019.

Lunney, M. (2006). Helping nurses use nanda, noc, and

nic: Novice to expert. JONA: The Journal of Nursing

Administration, 36(3):118–125.

Martinez-Ortigosa, A., Martinez-Granados, A., Gil-Hernández, E., Rodriguez-Arrastia, M., Ropero-Padilla, C., Roman, P., et al. (2023). Applications of

artificial intelligence in nursing care: A systematic re-

view. Journal of Nursing Management, 2023.

Moorhead, S., Swanson, E., and Johnson, M. (2023). Nurs-

ing Outcomes Classification (NOC)-e-book: Mea-

surement of health outcomes. Elsevier Health Sci-

ences.

Poppe, R. (2010). A survey on vision-based human action

recognition. Image and vision computing, 28(6):976–

990.

RunwayML (2023). https://runwayml.com. accessed:

2023-11-15.

Sensmeier, J. (2017). Harnessing the power of artificial in-

telligence. Nursing management, 48(11):14–19.

Statistisches Bundesamt (Destatis) (2023). Pflegevorausberechnung: 1,8 millionen mehr pflegebedürftige bis zum jahr 2055 zu erwarten. Pressemitteilung Nr. 124 vom 30. März 2023, https://www.destatis.de/DE/Presse/Pressemitteilungen/2023/03/PD23_124_12.html.

Volkert, D., Beck, A. M., Cederholm, T., Cruz-Jentoft, A.,

Hooper, L., Kiesswetter, E., Maggio, M., Raynaud-

Simon, A., Sieber, C., Sobotka, L., et al. (2022). Es-

pen practical guideline: Clinical nutrition and hydra-

tion in geriatrics. Clinical Nutrition, 41(4):958–989.

Wilkinson, J. M. (2014). Pearson nursing diagnosis hand-

book with NIC interventions and NOC outcomes.

Pearson.

Wüller, H., Behrens, J., Garthaus, M., Marquard, S., and

Remmers, H. (2019). A scoping review of augmented

reality in nursing. BMC nursing, 18(1):1–11.

Zerth, J., Jaensch, P., and Müller, S. (2021). Technik,

pflegeinnovation und implementierungsbedingungen.

In Jacobs, K., Kuhlmey, A., Greß, S., Klauber, J., and

Schwinger, A., editors, Pflege-Report 2021: Sicher-

stellung der Pflege: Bedarfslagen und Angebotsstruk-

turen, pages 157–172, Berlin, Heidelberg. Springer

Berlin Heidelberg.
