On Feasibility of Transferring Watermarks from Training Data to GAN-Generated Fingerprint Images

Venkata Srinath Mannam, Andrey Makrushin and Jana Dittmann

Department of Computer Science, Otto von Guericke University, Magdeburg, Germany

Keywords:

Watermarking, Biometrics, Synthetic Fingerprints, Synthetic Data Detection, GAN, Pix2pix.

Abstract:

Due to the rise of high-quality synthetic data produced by generative models and a growing mistrust in images

published in social media, there is an urgent need for reliable means of synthetic image detection. Passive

detection approaches cannot properly handle images created by “unknown” generative models. Embedding

watermarks in synthetic images is an active detection approach which transforms the task from fake detection

to watermark extraction. The focus of our study is on watermarking biometric fingerprint images produced

by Generative Adversarial Networks (GAN). We propose to watermark images used for training of a GAN

model and study the interplay between the watermarking algorithm, GAN architecture, and training hyper-

parameters to ensure the watermark transfer from training data to GAN-generated fingerprint images. A

hybrid watermarking algorithm based on discrete cosine transformation, discrete wavelet transformation, and

singular value decomposition is shown to produce transparent logo watermarks which are robust to pix2pix

network training. The pix2pix network is applied to reconstruct realistic fingerprints from minutiae. The

watermark imperceptibility and robustness to GAN training are validated by peak signal-to-noise ratio and bit

error rate respectively. The influence of watermarks on reconstruction success and realism of fingerprints is

measured by Verifinger matching scores and NFIQ2 scores respectively.

1 INTRODUCTION

Since the invention of Generative Adversarial Net-

works (GANs) (Goodfellow et al. 2014), there has

been a significant rise in related research from six

papers in 2015 to 762 in 2020 (Farou et al. 2020).

GANs can generate realistic synthetic samples which

are not tied to real persons, making such data very

useful in areas with limited real data or strict restric-

tions on private data use such as medical research or

biometrics. Synthetic images are often referred to as

deepfakes because deep learning techniques are uti-

lized for their production. Due to the security threats

that may be caused by deepfakes (read synthetic im-

ages), the Chinese government banned production of

deepfakes that are not watermarked (Edwards 2022).

The same might happen in other countries soon.

The primary concern of our initial study was the synthesis of realistic biometric fingerprint images. It has been shown in (Bahmani et al. 2021, Bouzaglo and Keller 2022, Makrushin et al. 2023) that GANs are a valid approach for this purpose. Despite all the benefits, GAN-generated biometric fingerprints can be misused, e.g., for identity fraud. The study in (Bontrager et al. 2018) shows that synthetic fingerprints can mimic multiple identities without requiring a specific individual’s fingerprint. Another example of malicious use of synthetic fingerprints is a fingerprint morphing attack (Makrushin et al. 2021b). Hence, our current concern is the active protection of synthetic fingerprints by watermarking them.

Indeed, the passive protection approach, that is, a “blind” detection of synthetic images, has its natural limits when it comes to the detection of fakes produced by “unknown” generative models. Moreover, synthesis techniques and the corresponding generative models constantly improve over time. Hence, embedding a watermark in all images produced by GANs is seen as a remedy to the problem of growing fake media. Watermarks enable active protection, transforming the task of fake detection into the task of watermark extraction.

In contrast to the most common goal of water-

marking generative models which is intellectual prop-

erty rights (IPR) protection, our motivation is linking

synthetic fingerprints to a particular generative model.

We currently disregard the fingerprint’s integrity veri-

fication due to the technical aspects of our embedding

Mannam, V., Makrushin, A. and Dittmann, J.

On Feasibility of Transferring Watermarks from Training Data to GAN-Generated Fingerprint Images.

DOI: 10.5220/0012418100003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 4: VISAPP, pages

435-445

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

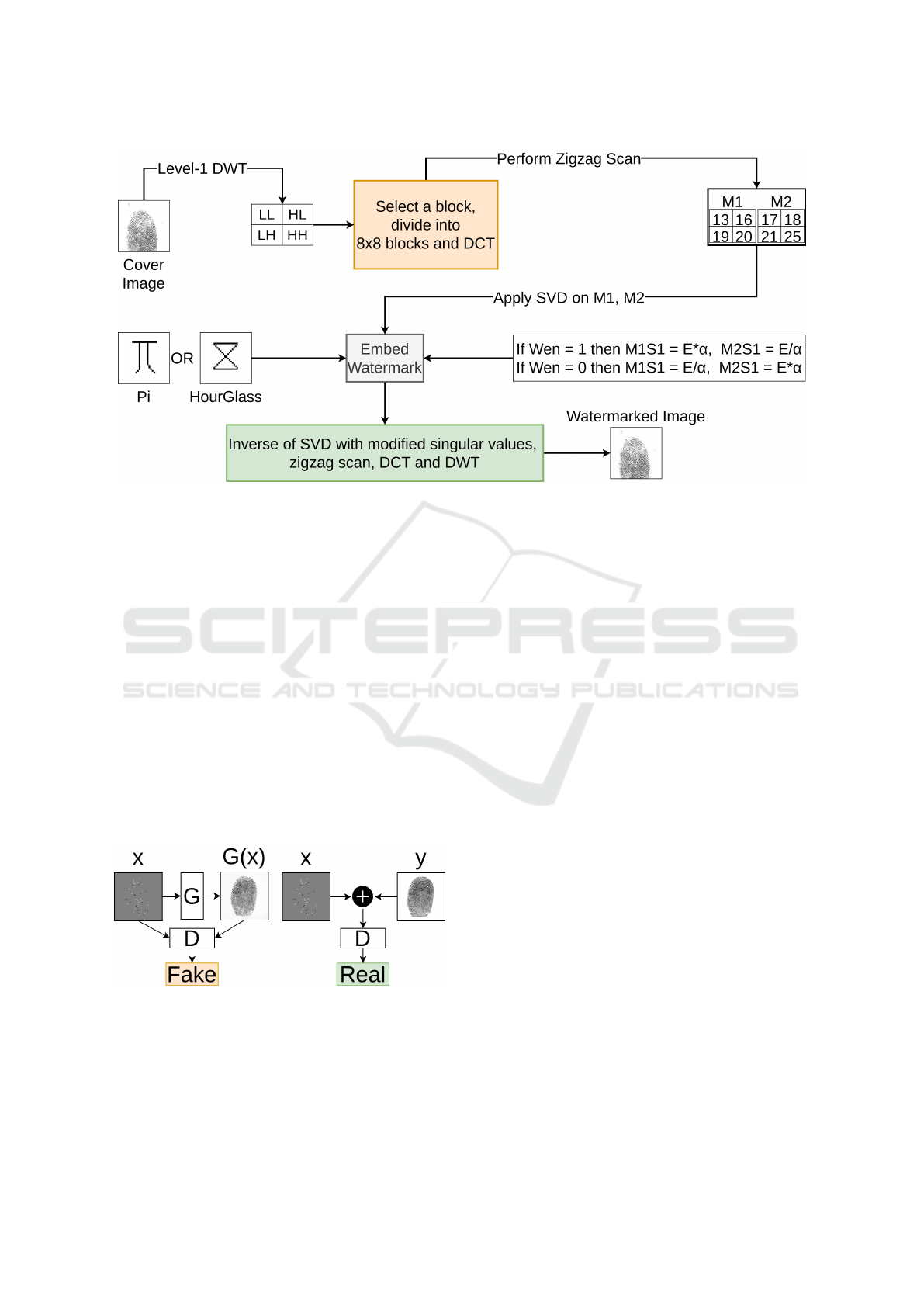

Figure 1: Overall schematic process.

algorithm. It means that our approach is applicable

mainly for annotation purposes. In other words, if we

have successfully extracted the watermark, we know

that the fingerprint image has been produced by our

model. If we cannot extract the watermark, we can

say nothing about the origin of the fingerprint image.

We see it also as a protection of the authors of a gen-

erative model. If the watermark has been removed by

perpetrators, the sample is not authentic anymore and

the model creators take no responsibility for its mali-

cious use. The study of watermark security is subject

to future research.

Note that the watermarking mechanism needs to

be an integral part of the GAN model, because after

sharing the model, the image generation is not con-

trolled by model creators and there is no chance to

watermark synthetic images. Hence, we propose to

embed transparent and robust watermarks into train-

ing images so that after the model training the gener-

ated fingerprint images contain the same transparent

watermark and are still of high utility. Figure 1 pro-

vides an overview of our experimental process.

Our evaluation addresses two objectives: assess-

ing the retrieved watermark and evaluating the qual-

ity of the fingerprints generated by the GAN. For

the former, we utilize the Peak Signal-to-Noise Ratio

(PSNR) to measure imperceptibility and the Bit Error

Rate (BER) to calculate the watermark’s robustness.

For the latter, we employ NIST Fingerprint Image

Quality scores (NFIQ2) (NIST 2023) to determine the

realistic appearance of the fingerprints, and Verifinger

matching scores (Neurotechnology 2023) to evaluate

the fingerprint reconstruction success.

Our contributions can be summarized as follows:

• We introduce a novel combination of a traditional

watermarking algorithm based on DCT, SVD and

DWT (Kang et al. 2018) and the pix2pix net-

work for fingerprint generation (Makrushin et al.

2023) which ensures the transfer of logo water-

marks from training to GAN-generated fingerprint

images;

• We derive the optimal parameters of the water-

marking algorithm along with optimal pix2pix hy-

perparameters;

• We extensively evaluate the proposed combina-

tion showing that our GAN models generate de-

cent fingerprint images from which watermarks

can be extracted.

Hereafter, the paper is structured as follows: Sec-

tion 2 introduces relevant literature, followed by a de-

tailed description of our concept and implementation

in Section 3. Section 4 summarizes our experiments,

Section 5 contains results and discussion and Section

6 concludes the paper offering a summary and future

work.

2 RELATED WORK

2.1 Deepfake Detection

Our focus is on synthesis of fingerprint images via

GANs. GANs are currently the state of the art in im-

age generation, creating high-resolution, photorealis-

tic images (Karras et al. 2018, Isola et al. 2017), and,

most importantly, the generated images are deepfakes.

These deepfakes are a threat if misused. A study re-

ported in (Marra et al. 2019) addresses this issue by

detecting GAN-generated images through the unique

noise residual patterns left behind by generative mod-

els. The study in (Yu et al. 2019) introduces a neural

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

network classifier capable of identifying the origin of

image generation. These detection approaches can be characterized as “passive”.

2.2 Watermarks

The ease of copying, storing, or modifying data has

also led to increased malicious activities. To combat

such activities, watermarking techniques have been

introduced, where visible or invisible information is

embedded into a carrier signal. Further, watermarks

are seen as additional information with which the ori-

gin is annotated. Imperceptibility, robustness, secu-

rity, and recovery are the most important characteris-

tics of watermarking. Various forms of watermarks,

including text, images, audio, or video, can be em-

bedded. Here, we focus on embedding text and im-

ages into images. Embedding a watermark into im-

ages can be done via spatial or frequency domain-

based methods, each with its own advantages and

drawbacks. Spatial domain techniques such as LSB, correlation-based techniques, spread spectrum techniques, and patchwork manipulate pixel values and bitstreams directly and offer computational simplicity. Frequency domain techniques such as Discrete Wavelet Transform (DWT), Discrete Cosine Transform (DCT), Discrete Fourier Transform (DFT), and Singular Value Decomposition (SVD) are more complex but robust against attacks such as resizing or cropping.

As per (Kumar et al. 2018), using a specific water-

marking method may only satisfy one or two char-

acteristics of watermarking. To address this, hybrid

techniques like DCT+SVD (Tian et al. 2020) and

DWT+DCT+SVD (Kang et al. 2018) have been in-

troduced. We use a hybrid technique based on the

frequency domain.

2.3 Fingerprint Synthesis via GANs

Biometrics is a field that greatly benefits from

high-quality data generated by GANs. The rea-

son is that acquiring biometric data from real per-

sons is challenging due to high costs and privacy

concerns caused by data protection regulations like

the European Union (EU) General Data Protection

Regulation (GDPR). Current open-source fingerprint

databases are limited in quality and number of sam-

ples.

To address this, the Anguli (Ansari 2011) syn-

thetic fingerprint generator, based on the SFinGe al-

gorithm (Cappelli 2004), has been developed. How-

ever, the patterns generated by Anguli lack realism

and therefore can be easily recognized as such. To

generate more realistic synthetic fingerprints, GANs

have been employed in (Bouzaglo and Keller 2022,

Makrushin et al. 2023) showing their ability to create

convincing synthetic fingerprints.

Fingerprint synthesis can be achieved through var-

ious approaches, including physical, statistical, or

data-driven (GAN) modeling. Current statistical and

physical modeling approaches tend to produce fin-

gerprints that lack realism. They are usually visu-

ally distinguishable from real fingerprints. In con-

trast, GAN-based approaches usually produce realis-

tic synthetic fingerprints. Modeling approaches can

be combined by, for instance, applying CycleGAN

that makes outcomes of a model-based generator ap-

pear realistic (Wyzykowski et al. 2020). Another

technique in (Bahmani et al. 2021) uses StyleGAN

to generate a fingerprint from a random latent vec-

tor. Also, the pix2pix network can be employed to

reconstruct a fingerprint from a given minutiae tem-

plate (Makrushin et al. 2023).

2.4 Watermarking Generative Models

The trend of watermarking Deep Neural Networks (DNNs) has recently gained prominence. Here, the watermarks are embedded not in media but in functions. Given this paradigm shift and the urgent need for Intellectual Property Rights (IPR) protection in DNNs, research in (Barni et al. 2021) states the similarities, challenges, and errors to avoid in DNN watermarking in comparison to traditional watermarking. A study in (Chen et al. 2019) proposes a

watermarking approach in which the watermark is di-

rectly integrated into the weights of specific layers of

the network. Unlike many other DNN watermarking

methods primarily focused on IPR protection, this ap-

proach also tackles the challenge of uniquely tracking

users. A study in (Wu et al. 2021) employs a dual-

DNN-network approach. They train a GAN model

and its output is fed to another network tasked with re-

constructing a predefined watermark. Their key nov-

elty includes an objective function that calculates the

watermark loss and also, a secret key that is needed to

decode the watermark.

The first study addressing the transferability of artificially embedded watermarks from training images to outputs produced by a GAN (Yu et al. 2021) proposes a four-step approach. First, an encoder-decoder network is trained. Second, the trained encoder network is utilized to embed a watermark into the training dataset. Third, a GAN is trained using the watermarked dataset. Fourth, the decoder is employed to extract the watermark from the GAN-created deepfakes.

Another study by (Fei et al. 2022) offers GAN Intellectual Property Rights (IPR) protection using a supervised method. The methodology begins with the

training of a deep learning-based image watermark-

ing network that incorporates an imperceptible wa-

termark into an image employing an encoder-decoder

network. Following the successful training of the wa-

termarking network, the decoder component remains

fixed and is leveraged in the GAN training process

to ensure the integration of the watermark within the

images generated by the GAN. The novelty lies in

a combined loss function comprising both the con-

ventional GAN loss and the watermark loss. They

have also introduced an image processing layer capa-

ble of performing data augmentation operations, en-

suring the robustness of the embedded watermark.

In contrast to (Wu et al. 2021, Yu et al. 2021, Fei

et al. 2022), our approach combines traditional wa-

termarking with a pix2pix-based fingerprint genera-

tor, requiring no training of the watermarking part and

no watermark loss function during the GAN training.

The main novelty is in finding a viable combination

of a GAN-based fingerprint generator and a water-

marking algorithm that produces watermarks robust

to GAN training.

3 OUR CONCEPT

In this research, we present a novel approach that

aims to watermark the images produced by the gen-

erator of a trained GAN model using traditional dig-

ital watermarking techniques applied to GAN train-

ing images. We first embed a watermark into the

training dataset with the selected digital watermark-

ing method. Next, the data pre-processing step per-

forms minutiae map creation from the fingerprint.

Finally, training with the modified pix2pix network

from (Makrushin et al. 2023) is performed. The reason for selecting pix2pix as a generative model is its ability to produce high-quality realistic fingerprint images. The main criterion for the selection of the watermarking algorithm is its conformity with the pix2pix model, implying that the watermark survives the GAN training process. The repositories containing the watermarking algorithm and the GAN model code are available at https://github.com/mannam95/dct_svd_in_dwt_watermark and https://gitti.cs.uni-magdeburg.de/Andrey/gensynth-pix2pix respectively.

3.1 Watermarking Techniques

For our goal of annotating the model, we choose

watermarking methods that ensure imperceptibility

(making the watermarked image indistinguishable

from the original) and robustness (withstanding the

GAN training process).

We employ two hybrid watermarking approaches,

namely DCT-SVD-in-DWT (Kang et al. 2018) and

DWT-DCT-SVD (guofei 2022) to embed the water-

mark into the training data. The former enables the

embedding of images, while the latter can embed

text. During our initial studies, the text watermarking

had poor results, so we focused on the DCT-SVD-

in-DWT (Kang et al. 2018) method (hereafter, it is

referred to as IWA). IWA involves watermark embed-

ding and extraction. The watermark information or

payload, a 2-bit 32x32 binary image, is embedded

into a cover image. The binary logo size varies de-

pending on the cover image size. For more details see

(Kang et al. 2018). IWA extracts the watermark di-

rectly from the watermarked image requiring no orig-

inal cover image or watermark logo/text. Note that

some algorithms may require the original cover im-

age.

In our approach, the embedding key is not given explicitly. It is rather implicit in the embedding algorithm, so that the embedding key can be seen as a combination of the watermark’s location, size and pattern. More precisely, our embedding key is a tuple of logo size, logo shape, and the coordinates of the top left pixel of the logo. Although the watermarking algorithm is publicly known, decoding the logo without this tuple is extremely challenging. In essence, it requires an exhaustive search over all possible combinations. Randomization of the watermark location and encryption of the watermark pattern would introduce strong security into our watermark embedding scheme. For the sake of simplicity, we currently work with a particular watermark logo at a fixed location.

At a high level, the process of watermark embed-

ding and extraction is given by Equations 1, 2 and 3.

$$K = (\text{coordinates}, \text{size}, \text{shape}) \tag{1}$$

$$I^{*} = \mathrm{IWA}_{emb}(I, W, K) \tag{2}$$

$$W^{*} = \mathrm{IWA}_{ext}(I^{*}, K) \tag{3}$$

In Equation 1, coordinates, size, and shape are those of the watermark logo. $I$ represents the original cover image, which is a fingerprint in our case. $W$ denotes the watermark information being embedded. The watermarked image is represented by $I^{*}$, while $W^{*}$ refers to the recovered watermark. $\mathrm{IWA}_{emb}$ and $\mathrm{IWA}_{ext}$ denote the watermark embedding and extraction functions respectively. Given $I$ and $W$, $\mathrm{IWA}_{emb}$ produces $I^{*}$. Subsequently, given a watermarked image $I^{*}$, $\mathrm{IWA}_{ext}$ extracts the watermark $W^{*}$.

Figure 2: Watermarking process adopted from (Kang et al. 2018). “Wen” stands for the watermark image bit. “M1S1” and “M2S1” are the largest singular values obtained by applying SVD to the respective matrices “M1” and “M2”. “E” is the mean of the largest singular values. α is the embedding strength. The cover image is from the Neurotechnology CrossMatch dataset (Neurotechnology 2023).

The overall watermarking process is depicted in

Figure 2. First, the cover image undergoes a trans-

formation called 2D-DWT. From the resulting sub-

bands (LL, HL, LH, HH), one is chosen. This selected

sub-band is then divided into non-overlapping blocks

of size 8x8. For each block, another transformation

called 2D-DCT is applied. From the resulting coef-

ficient matrix, 8 elements are selected based on their

index using zig-zag scanning. These 8 elements are

arranged in two matrices. Both matrices go through

SVD, and the largest singular values are modified ac-

cordingly as shown in Figure 2. To extract the water-

mark, the same steps are applied. Instead of modify-

ing the singular values, the watermark information is

extracted. For more details see (Kang et al. 2018).
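The coefficient selection step can be illustrated with a minimal sketch. It builds the zig-zag scan order of an 8x8 block and arranges eight selected coefficients into two matrices. The starting index `start=1` (skipping the DC coefficient) and the 2x2 shape of the two matrices are assumptions made for illustration only; the actual indices and layout are defined in (Kang et al. 2018).

```python
def zigzag_indices(n=8):
    """Return the (row, col) pairs of an n x n block in JPEG-style zig-zag order."""
    order = []
    # Cells on the same anti-diagonal share the same row + col sum.
    for s in range(2 * n - 1):
        diagonal = [(r, s - r) for r in range(n) if 0 <= s - r < n]
        # Even diagonals run from bottom-left to top-right, odd ones the other way.
        order.extend(reversed(diagonal) if s % 2 == 0 else diagonal)
    return order


def select_coefficients(block, start=1):
    """Pick 8 coefficients in zig-zag order and arrange them in two 2x2 matrices.

    `start` and the 2x2 layout are hypothetical choices, not taken from the paper.
    """
    scan = zigzag_indices(len(block))[start:start + 8]
    picked = [block[r][c] for r, c in scan]
    m1 = [picked[0:2], picked[2:4]]
    m2 = [picked[4:6], picked[6:8]]
    return m1, m2


# Toy "DCT block" whose entries encode their own position (row * 8 + col).
block = [[r * 8 + c for c in range(8)] for r in range(8)]
m1, m2 = select_coefficients(block)
```

In a full pipeline, each of the two matrices would then be passed to an SVD routine, and only the largest singular values would be modified or read out.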

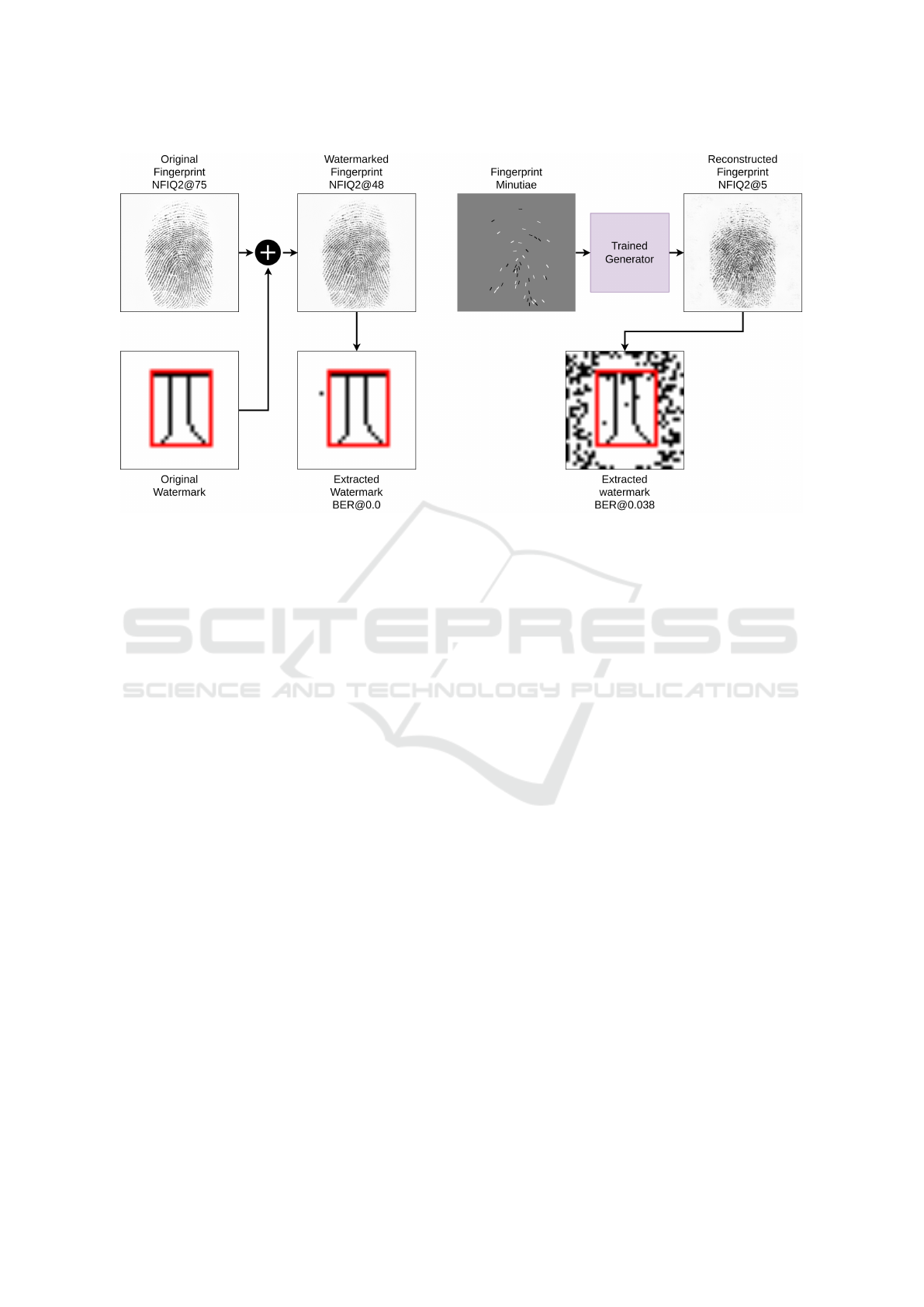

Figure 3: A high-level overview of the pix2pix architecture.

G stands for generator, and D for discriminator. x is the

minutiae map, y is the watermarked fingerprint, and G(x) is

a fingerprint synthesized by G.

3.2 Fingerprint Generative Models

Our experimental approach utilizes the pix2pix net-

work, specifically a conditional generative adversar-

ial network (CGAN). The CGAN consists of two key

components: a generator and a discriminator, which

undergo adversarial training. On the one hand, the

generator is based on U-Net architecture proposed by

(Ronneberger et al. 2015) and adapted by (Isola et al.

2017), which is responsible for image-to-image trans-

lation. On the other hand, the discriminator functions

as a patch-based binary classifier. The initial design

of the pix2pix architecture (Isola et al. 2017) was intended for 256x256 pixel images. In our study, the fingerprint images have a native resolution of 500 ppi and are depicted on 512x512 pixel images. Hence, we

adopt the modified version of the pix2pix network de-

veloped by (Makrushin et al. 2023). At a high level,

the pix2pix network can be seen in Figure 3. For more

details see (Isola et al. 2017, Makrushin et al. 2023).

The pix2pix network (Isola et al. 2017) genera-

tor requires two images: one for conditioning and the

other as the true target. Here, the conditioning im-

age is the minutiae map of the fingerprint, while the

true targets are the watermarked fingerprints. To cre-

ate the minutiae map, we extract minutiae using the

Neurotechnology VeriFinger SDK v12.0 (Neurotech-

nology 2023). The extracted minutiae are then en-

coded into a minutiae map. The encoding methods

for minutiae are directed lines and pointing minutiae

as introduced in (Makrushin et al. 2023).

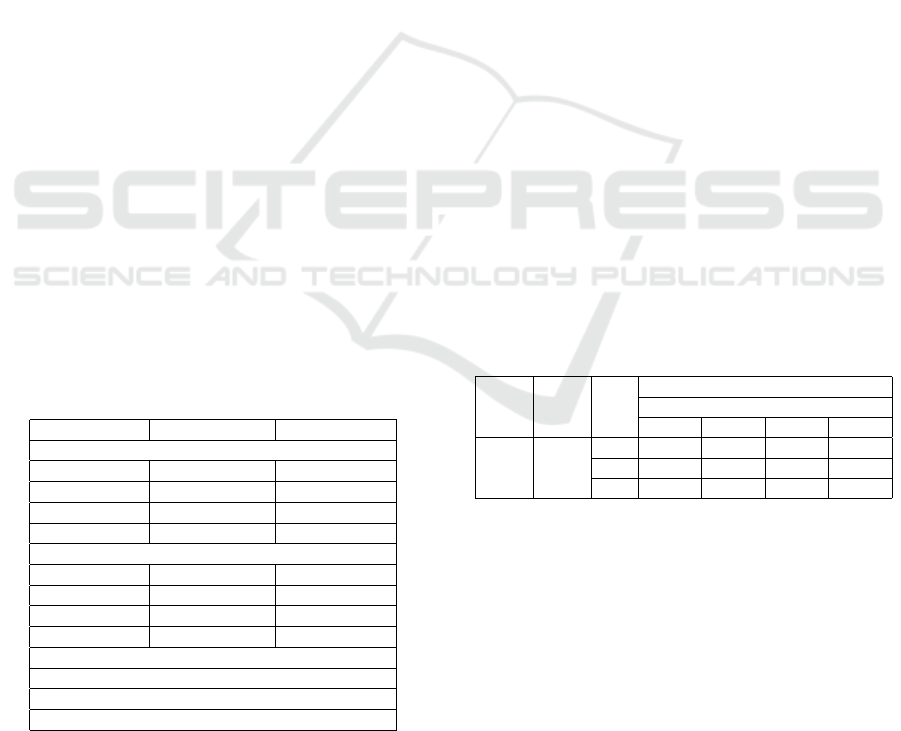

Figure 4: The process of embedding the watermark into the training dataset and extracting the watermark from the recon-

structed fingerprint.

Since we focus on the transferability of water-

marks and not on comparing different minutiae en-

codings, we simply adopt the directed lines for en-

coding the minutiae. The discriminator receives a

concatenated tensor as input, which consists of the

minutiae map supplied to the generator and the origi-

nal fingerprint. The discriminator is utilized solely for

training. Post-training, the generator is used alone to

reconstruct fingerprints. The overall process of em-

bedding the watermark into the training dataset and

extracting the watermark from the reconstructed fin-

gerprint is depicted in Figure 4.

4 EVALUATION

4.1 Evaluation Metrics

The watermark can be assessed via several metrics:

Peak Signal to Noise Ratio (PSNR), Structural Simi-

larity (SSIM), Bit Error Rate (BER), Mean Absolute

Error (MAE), and Normalized Correlation (NC). For

evaluating the imperceptibility, we can use PSNR or

SSIM. In contrast, the robustness of the watermarked

image can be evaluated using MAE, BER, or NC. In

this study, we use PSNR and BER to evaluate imper-

ceptibility and robustness, respectively.
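For reference, the two metrics used here can be sketched in a few lines. This is a minimal pure-Python illustration that assumes 8-bit grayscale images and binary logos given as lists of rows; the function names are hypothetical and not taken from the authors' code.

```python
import math


def psnr(original, distorted, peak=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    squared_errors = [
        (a - b) ** 2
        for row_a, row_b in zip(original, distorted)
        for a, b in zip(row_a, row_b)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    # Identical images have zero error, i.e. an unbounded PSNR.
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def bit_error_rate(embedded, extracted):
    """Fraction of watermark bits that differ between two binary logos."""
    pairs = [
        (a, b)
        for row_a, row_b in zip(embedded, extracted)
        for a, b in zip(row_a, row_b)
    ]
    return sum(a != b for a, b in pairs) / len(pairs)
```

A higher PSNR indicates a less visible watermark, while a lower BER indicates a more faithfully recovered watermark.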

Following the ideas from (Makrushin et al. 2021a)

we measure the realistic appearance of fingerprints by

NFIQ2 scores (NIST 2023) yielding values from 0 to

100. The higher, the better utility and realism.

For fingerprint reconstruction, the True Accep-

tance Rate (TAR) is obtained by comparing the recon-

structed and original fingerprints using the fingerprint

matcher from VeriFinger SDK v12.0 (Neurotechnol-

ogy 2023) which returns similarity scores from 0 to

infinity. The higher, the more similar the fingerprints

are. The decision thresholds are set according to False Acceptance Rate (FAR) levels: 36, 48, and 60 for FAR levels of 0.1%, 0.01%, and 0.001%, respectively.
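The TAR computation can be illustrated as follows. The sketch takes the score thresholds from the text and assumes that a reconstruction counts as accepted when its matching score is greater than or equal to the threshold; that comparison rule and the function name are assumptions.

```python
# FAR level in % mapped to the matching-score threshold given in the text.
THRESHOLDS = {0.1: 36, 0.01: 48, 0.001: 60}


def true_acceptance_rates(genuine_scores):
    """Return the TAR in % at each FAR level for a list of genuine matching scores."""
    total = len(genuine_scores)
    return {
        far: 100.0 * sum(score >= threshold for score in genuine_scores) / total
        for far, threshold in THRESHOLDS.items()
    }


# Toy scores for four reconstructed/original fingerprint pairs.
rates = true_acceptance_rates([10, 36, 50, 70])
```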

4.2 Training and Test Datasets

Our study utilizes a dataset of 50,000 fingerprints

generated by a StyleGAN2-ada model (Karras et al.

2020) trained with 408 Neurotechnology (Neurotech-

nology 2023) fingerprint samples. These samples

were captured using a CrossMatch Verifier 300 scan-

ner at 500 ppi. The images were padded to 512x512

pixels prior to training. We select subsets of 2,000,

100, and 10,000 samples for training, validation, and

test respectively. To ensure the diversity of dataset

splits, we computed Mean Absolute Error (MAE) for

all combinations, identifying a diverse range of MAE

values, approximately between 30 and 230. Calculat-

ing the Verifinger scores could also help us to identify

the diversity. However, we omit it in this study due to

time constraints.

Further, the training dataset is watermarked with the IWA algorithm, followed by a two-step filtration process. First, we extract the embedded watermark and compute the BER score between the extracted watermark logo and the original. Second, we keep the watermarked fingerprint samples for which the BER score is less than or equal to 0.03. The purpose of this filtering is to include only fingerprints with recoverable watermarks, ensuring their utility for our GAN training objectives. It is important to note that this filtration step is applied to the training dataset only. From this filtered dataset, 1,000 images were randomly selected for training, while the validation and test sets remained unchanged at 100 and 10,000 samples, respectively.
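The filtration and sampling step can be sketched as below. The sketch assumes the BER of every watermarked training sample has already been computed and stored in a dictionary keyed by a sample name; the function name, the dictionary layout, and the fixed seed are hypothetical.

```python
import random


def filter_training_set(ber_by_sample, max_ber=0.03, n_train=1000, seed=0):
    """Keep samples with BER <= max_ber, then randomly draw up to n_train of them."""
    kept = sorted(name for name, ber in ber_by_sample.items() if ber <= max_ber)
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    return rng.sample(kept, min(n_train, len(kept)))


# Toy example with five samples; only "a", "b" and "e" pass the BER filter.
bers = {"a": 0.0, "b": 0.03, "c": 0.031, "d": 0.5, "e": 0.01}
selected = filter_training_set(bers, n_train=2)
```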

4.3 Experiments

We validate the proposed approach of reconstructing

the watermarked fingerprints from the given minutiae

maps via the following four experiments:

• Exp1: Find initial watermarking parameters.

• Exp2: Find optimal watermarking + GAN param-

eters.

• Exp3: Train with a different watermark logo.

• Exp4: Train with un-watermarked fingerprints.

Table 1 contains the set of parameters that are

tested for the optimal performance of the watermark-

ing algorithm IWA along with the fingerprint gener-

ative model. The optimal watermarking parameters

identified in the first experiment (Exp1) include the

watermark’s embedding strength and the embedding

level. We explore the embedding strengths of 5, 8,

and 10 and assess all four wavelet subbands for em-

bedding level: LL, HL, LH, and HH.

Table 1: Watermarking and GAN experiment configurations with training hyperparameters.

| Experiment | Parameter Type | Parameter Name | Selected Values |
|---|---|---|---|
| Exp1 | Watermarking | α (Alpha) | 5, 8, 10 |
| Exp1 | Watermarking | Embedding Level | LL, HL, LH, HH |
| Exp1 | GAN | Learning Rate | 0.001 |
| Exp1 | GAN | Epochs | 1200 |
| Exp2 | Watermarking | α (Alpha) | α1, α2 |
| Exp2 | Watermarking | Embedding Level | EL1, EL2 |
| Exp2 | GAN | Learning Rate | 0.001, 0.0007 |
| Exp2 | GAN | Epochs | 1200, 1600, 2000 |
| Exp3 | The best hyperparameters from Exp2 | | |
| Exp4 | The best hyperparameters from Exp2 | | |

In our second experiment (Exp2) we select the

best (in terms of highest recovery rates) two param-

eters for the embedding strength (α1, α2) and the em-

bedding level (EL1, EL2). Here, we explore the GAN

parameters with learning rates of 1e-3 and 7e-4 with

1200, 1600, and 2000 training epochs.
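The Exp2 search grid can be enumerated with a short sketch. It plugs in the concrete values that Table 3 later reports for α1, α2 (8, 10) and EL1, EL2 (HL, LH); the dictionary keys are hypothetical names chosen for illustration.

```python
from itertools import product

alphas = [8, 10]                 # embedding strengths carried over from Exp1
levels = ["HL", "LH"]            # embedding levels carried over from Exp1
learning_rates = [0.001, 0.0007]
epochs = [1200, 1600, 2000]

# The rightmost factor varies fastest, which reproduces the Id order of Table 3.
configs = [
    {"id": i, "alpha": a, "level": el, "lr": lr, "epochs": ep}
    for i, (a, el, lr, ep) in enumerate(
        product(alphas, levels, learning_rates, epochs), start=1
    )
]
```

The grid has 2 x 2 x 2 x 3 = 24 entries, matching the 24 models trained in Exp2.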

Additionally, we conduct two ablation studies in Exp3 and Exp4 to assess the impact of the watermark itself. In Exp3, we embed a different watermark logo into the training data. Please note that in Exp1 and Exp2 we embed “Logo-Pi” as the standard watermark, whereas in Exp3 we embed “Logo-HourGlass”. Both watermarks can be seen in Figure 2. In Exp4, the generative model is trained with raw fingerprints without any watermarks. For both Exp3 and Exp4, the best model hyperparameters are selected from the results of Exp2.

In total, we conducted 12 experiments for Exp1, trained 24 models for Exp2, and one model each for Exp3 and Exp4. All training setups use the Adam optimizer, batch normalization, and a batch size of 64; dropout layers are excluded. In all training runs, the learning rate decays linearly to zero after the model completes half of its training.
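One plausible reading of this schedule is sketched below. It assumes the learning rate stays at its base value during the first half of training and then falls linearly, reaching zero at the final epoch; the constant first half is an assumption, and the function name is hypothetical.

```python
def learning_rate(epoch, total_epochs, base_lr):
    """Constant for the first half of training, then linear decay to zero."""
    half = total_epochs // 2
    if epoch < half:
        return base_lr
    # Fraction of the decay phase that is still ahead, from 1 down to 0.
    remaining = (total_epochs - epoch) / (total_epochs - half)
    return base_lr * remaining


# Example for a 1200-epoch run with a base learning rate of 0.001.
schedule = [learning_rate(e, 1200, 0.001) for e in (0, 600, 900, 1200)]
```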

Notice that our GAN watermarking scheme is

specified for biometric fingerprint images only. To

the best of our knowledge, this is the very first study

that attempts to watermark GAN-generated finger-

print images making a fair comparative study to other

generic GAN watermarking approaches hardly possi-

ble.

5 RESULTS AND DISCUSSION

Table 2: Exp1 results: watermarking recovery rates. The scores (in %) represent the share of samples out of all the test data for which “BER < 0.1”. LR: learning rate, EP: epochs, α: embedding strength. The columns LL, HL, LH, and HH give the watermark recovery rate in % for each embedding level.

| LR | EP | α | LL | HL | LH | HH |
|---|---|---|---|---|---|---|
| 0.001 | 1200 | 5 | 88.99 | 24.78 | 22.12 | 0 |
| 0.001 | 1200 | 8 | 78.99 | 78.11 | 78.99 | 0.23 |
| 0.001 | 1200 | 10 | 74.60 | 90.25 | 91.86 | 7.47 |

The metric used in our Exp1 is the Bit Error Rate (BER) between the original and recovered watermark from the pix2pix reconstructed fingerprint, specifically within the bounding box with coordinates ($x_1 = 9$, $y_1 = 6$, $x_2 = 24$, $y_2 = 25$). We adopted this approach as GANs, including pix2pix, are known to generate noise around the produced images.
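The bounding-box evaluation can be sketched as follows. The sketch assumes that logos are 32x32 lists of rows, that x indexes columns and y indexes rows, and that both box corners are inclusive; these conventions and the function names are assumptions. The recovery threshold of 0.1 is the one stated in the caption of Table 2.

```python
BOX = (9, 6, 24, 25)  # (x1, y1, x2, y2) as given in the text


def crop(logo, box=BOX):
    """Cut the bounding box out of a logo (corners assumed inclusive)."""
    x1, y1, x2, y2 = box
    return [row[x1:x2 + 1] for row in logo[y1:y2 + 1]]


def cropped_ber(original, extracted, box=BOX):
    """BER between two binary logos, computed only inside the bounding box."""
    a, b = crop(original, box), crop(extracted, box)
    total = sum(len(row) for row in a)
    errors = sum(p != q for ra, rb in zip(a, b) for p, q in zip(ra, rb))
    return errors / total


def is_recovered(original, extracted, threshold=0.1):
    """A watermark counts as recovered if the cropped BER is below the threshold."""
    return cropped_ber(original, extracted) < threshold


# Toy example: a bit error outside the box does not affect the score.
original = [[0] * 32 for _ in range(32)]
extracted = [row[:] for row in original]
extracted[0][0] = 1
```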

We consider the watermark recovered if the BER is less than 0.1. This threshold was determined manually by visual inspection. We have found that embedding the watermark in the pix2pix network training images is effective. However, recovery rates vary for each embedding level, as shown in Table 2. HH subband embedding results in near-zero recovery rates, presumably due to its sensitivity to filtering operations. Conversely, the LL subband shows promising recovery rates, but the quality of the original image degrades. The reason is that the LL band contains high-level image information, so changing it directly impacts the image content. The HL and LH subbands contain a mix of image frequencies and show high recovery rates. Thus, we select them for further experiments. Assessing the importance of the embedding strength (α factor), we found that increasing α improves watermark robustness in the HL and LH subbands, with a recovery rate exceeding 90% for both if α is set to 10. However, an α of 8 also yields an almost 80% recovery rate. Therefore, we proceed with combinations of embedding strengths 8 and 10 and embedding levels HL and LH in our further investigations.

Table 3: Exp2 results: Evaluation of watermarking and GAN parameters in 24 training configurations. EL: embedding level, LR: learning rate, EP: epochs, and “BER < 0.1”: the share of samples (in %) recovered at this threshold. TAR values are given in %, PSNR in dB.

| Id | α | EL | LR | EP | TAR at FAR 0.1% | TAR at FAR 0.01% | TAR at FAR 0.001% | Avg. NFIQ2 | Watermark Recovery Rate at BER < 0.1 (%) | Avg. PSNR |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 8 | HL | 0.001 | 1200 | 48.64 | 24.89 | 11.27 | 13.70 | 78.11 | 31.15 |
| 2 | 8 | HL | 0.001 | 1600 | 52.31 | 26.58 | 11.46 | 15.47 | 85.69 | 31.22 |
| 3 | 8 | HL | 0.001 | 2000 | 54.64 | 29.57 | 14.79 | 14.75 | 86.44 | 31.20 |
| 4 | 8 | HL | 0.0007 | 1200 | 58.15 | 33.64 | 17.85 | 14.89 | 79.93 | 31.25 |
| 5 | 8 | HL | 0.0007 | 1600 | 58.21 | 34.16 | 17.54 | 15.42 | 86.21 | 31.22 |
| 6 | 8 | HL | 0.0007 | 2000 | 52.60 | 29.55 | 14.52 | 14.07 | 84.32 | 31.29 |
| 7 | 8 | LH | 0.001 | 1200 | 49.01 | 24.40 | 10.30 | 08.49 | 78.99 | 31.13 |
| 8 | 8 | LH | 0.001 | 1600 | 45.60 | 20.65 | 07.85 | 10.23 | 86.18 | 31.13 |
| 9 | 8 | LH | 0.001 | 2000 | 48.61 | 23.78 | 10.11 | 09.90 | 83.13 | 31.10 |
| 10 | 8 | LH | 0.0007 | 1200 | 59.45 | 35.58 | 18.62 | 11.61 | 81.51 | 31.20 |
| 11 | 8 | LH | 0.0007 | 1600 | 54.59 | 29.64 | 14.40 | 13.19 | 83.61 | 31.14 |
| 12 | 8 | LH | 0.0007 | 2000 | 59.69 | 35.77 | 19.60 | 11.05 | 82.25 | 31.20 |
| 13 | 10 | HL | 0.001 | 1200 | 52.80 | 27.50 | 12.58 | 12.25 | 90.25 | 31.23 |
| 14 | 10 | HL | 0.001 | 1600 | 50.01 | 26.29 | 14.40 | 12.08 | 92.65 | 31.10 |
| 15 | 10 | HL | 0.001 | 2000 | 59.80 | 36.27 | 19.17 | 10.62 | 89.03 | 31.20 |
| 16 | 10 | HL | 0.0007 | 1200 | 48.55 | 26.31 | 12.36 | 10.40 | 86.52 | 31.21 |
| 17 | 10 | HL | 0.0007 | 1600 | 48.22 | 25.64 | 11.65 | 09.63 | 88.60 | 31.17 |
| 18 | 10 | HL | 0.0007 | 2000 | 46.46 | 23.91 | 11.01 | 10.11 | 84.47 | 31.21 |
| 19 | 10 | LH | 0.001 | 1200 | 49.48 | 24.71 | 10.32 | 06.27 | 91.86 | 31.04 |
| 20 | 10 | LH | 0.001 | 1600 | 45.89 | 22.17 | 08.69 | 06.13 | 89.74 | 31.13 |
| 21 | 10 | LH | 0.001 | 2000 | 63.45 | 39.29 | 20.49 | 09.25 | 92.92 | 31.23 |
| 22 | 10 | LH | 0.0007 | 1200 | 51.64 | 26.93 | 12.26 | 07.33 | 87.41 | 31.12 |
| 23 | 10 | LH | 0.0007 | 1600 | 57.67 | 33.13 | 17.23 | 09.16 | 87.24 | 31.22 |
| 24 | 10 | LH | 0.0007 | 2000 | 57.21 | 32.68 | 16.45 | 10.87 | 91.21 | 31.22 |

In Exp2, we employ the top-performing watermarking parameters from Exp1 to refine the GAN parameters across 24 training runs, as outlined in Table 3. We assess the robustness and imperceptibility of watermarked fingerprints via BER and PSNR scores, with models 14 and 21 demonstrating superior robustness with the α value of 10. The watermarks are sufficiently imperceptible, as all models exceed the acceptable PSNR threshold of 30 dB. The poor visual quality and low reconstruction rates, measured by NFIQ2 and Verifinger matching scores respectively, reveal that our approach has room for improvement. Fingerprints of good quality are represented by NFIQ2 scores exceeding 35, whereas scores under 6 suggest ineffective patterns. Our average NFIQ2 scores lie around 10, suggesting that the visual quality of the fingerprints is poor. Fingerprint reconstruction rates reported in (Makrushin et al. 2023) are over 80%, 70%, and 60% at FAR levels of 0.1%, 0.01%, and 0.001% respectively. Our models demonstrate significantly lower reconstruction rates, with the two highest values of 63.45% and 59.80% at 0.1% FAR achieved by models 21 and 15 respectively, both with the α value of 10.
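For reference, the two watermark metrics used above can be computed as in the following minimal sketch. It uses the standard definitions of bit error rate and peak signal-to-noise ratio for 8-bit images; the function names and the toy values are illustrative assumptions and do not reproduce our evaluation code.

```python
import math


def bit_error_rate(embedded_bits, extracted_bits):
    """Fraction of watermark bits that differ between embedding and extraction."""
    if not embedded_bits or len(embedded_bits) != len(extracted_bits):
        raise ValueError("bit sequences must be non-empty and of equal length")
    errors = sum(1 for a, b in zip(embedded_bits, extracted_bits) if a != b)
    return errors / len(embedded_bits)


def psnr(original, distorted, peak=255.0):
    """PSNR in dB between two equally sized flat sequences of pixel intensities."""
    if not original or len(original) != len(distorted):
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((o - d) ** 2 for o, d in zip(original, distorted)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical toy data: an 8-bit watermark and a 4-pixel image.
embedded = [1, 0, 1, 1, 0, 0, 1, 0]
extracted = [1, 0, 0, 1, 0, 0, 1, 1]
print(bit_error_rate(embedded, extracted))  # 2 of 8 bits differ -> 0.25

cover = [100, 120, 140, 160]
watermarked = [103, 117, 143, 157]
print(round(psnr(cover, watermarked), 2))   # MSE = 9 -> about 38.59 dB
```

Under these definitions, a lower BER indicates a more robust watermark, and a PSNR above the 30 dB threshold mentioned above indicates that the embedding distortion is small relative to the intensity range.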

Table 4: Exp3 results. The “Model Id” column corresponds to the configurations (“Id”) reported in Table 3.

| Model Id | Logo | Avg BER |
|---|---|---|
| 21 | Pi | 0.044 |
| 21 | HourGlass | 0.052 |

The results of Exp3 are reported in Table 4. We see that watermarking capacity affects watermarking robustness. On average, the model trained with “Logo-Pi” embedded data outperforms the one trained with “Logo-HourGlass” embedded data in terms of BER scores (0.044 vs. 0.052).

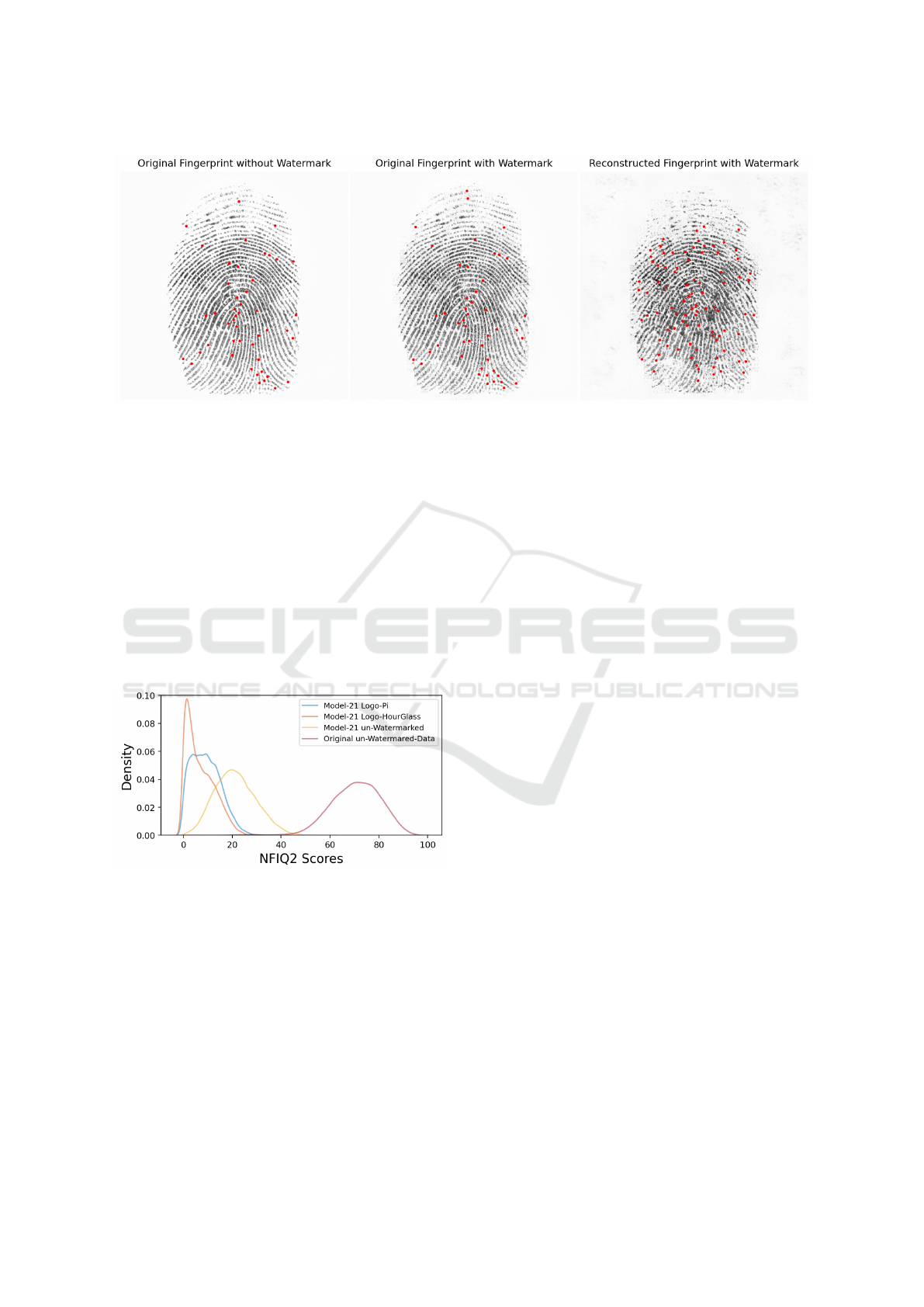

The results of Exp4 are visualized in Figure 6. The original un-watermarked data has an average NFIQ2 score of around 75. The models trained with “Logo-Pi”, “Logo-HourGlass”, and un-watermarked data have average NFIQ2 scores of approximately 10, 5, and 20, respectively. We see that watermarking has an impact on the visual quality of reconstructed fingerprints, but the largest degradation of fingerprints is due to the reconstruction process, as the model trained with un-watermarked data achieves an average NFIQ2 score of only 20, compared to the original raw scores of around 75. We suspect that the GAN parameters obtained may not be optimal and require further exploration.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

Figure 5: Visualization of a fingerprint image with and without a watermark. The red dots in the figure denote the locations of minutiae.

Figure 6: Distributions of NFIQ2 scores plotted for Exp3

and Exp4: Comparison of watermarks to each other and to

the training with un-watermarked data.

All in all, the configurations with the embedding

strength of 10, embedding levels HL or LH, the learn-

ing rate of 1e-3, and 1600 or 2000 epochs demonstrate

better performance than the remaining configurations.

The low fingerprint reconstruction scores could be at-

tributed to GAN noise or suboptimal GAN parame-

ters. A visual sample result can be seen in Figure 5.
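The ranking behind this summary can be reproduced with a short sketch over the rows for configurations 8 to 24 of Table 3. The field names are assumptions: the column meanings (reconstruction rate at 0.1% FAR, watermark recovery rate, PSNR) are inferred from the discussion above, and only three of the reported numeric columns are included.

```python
from typing import NamedTuple


class Run(NamedTuple):
    model_id: int
    alpha: int
    level: str
    learning_rate: float
    epochs: int
    recon_rate: float     # assumed: reconstruction rate in % at 0.1% FAR
    recovery_rate: float  # assumed: watermark recovery rate in %
    psnr_db: float        # assumed: PSNR in dB


# Excerpt of Table 3 (configurations 8 to 24).
RUNS = [
    Run(8, 8, "LH", 0.001, 1600, 45.60, 86.18, 31.13),
    Run(9, 8, "LH", 0.001, 2000, 48.61, 83.13, 31.10),
    Run(10, 8, "LH", 0.0007, 1200, 59.45, 81.51, 31.20),
    Run(11, 8, "LH", 0.0007, 1600, 54.59, 83.61, 31.14),
    Run(12, 8, "LH", 0.0007, 2000, 59.69, 82.25, 31.20),
    Run(13, 10, "HL", 0.001, 1200, 52.80, 90.25, 31.23),
    Run(14, 10, "HL", 0.001, 1600, 50.01, 92.65, 31.10),
    Run(15, 10, "HL", 0.001, 2000, 59.80, 89.03, 31.20),
    Run(16, 10, "HL", 0.0007, 1200, 48.55, 86.52, 31.21),
    Run(17, 10, "HL", 0.0007, 1600, 48.22, 88.60, 31.17),
    Run(18, 10, "HL", 0.0007, 2000, 46.46, 84.47, 31.21),
    Run(19, 10, "LH", 0.001, 1200, 49.48, 91.86, 31.04),
    Run(20, 10, "LH", 0.001, 1600, 45.89, 89.74, 31.13),
    Run(21, 10, "LH", 0.001, 2000, 63.45, 92.92, 31.23),
    Run(22, 10, "LH", 0.0007, 1200, 51.64, 87.41, 31.12),
    Run(23, 10, "LH", 0.0007, 1600, 57.67, 87.24, 31.22),
    Run(24, 10, "LH", 0.0007, 2000, 57.21, 91.21, 31.22),
]


def top_runs(runs, key, n=2):
    """Return the n runs with the largest value of the given key."""
    return sorted(runs, key=key, reverse=True)[:n]


most_robust = top_runs(RUNS, key=lambda r: r.recovery_rate)
best_recon = top_runs(RUNS, key=lambda r: r.recon_rate)

print([r.model_id for r in most_robust])       # [21, 14]
print([r.model_id for r in best_recon])        # [21, 15]
print(all(r.psnr_db > 30.0 for r in RUNS))     # True
```

All four top-ranked rows use an embedding strength of 10, a learning rate of 1e-3, and 1600 or 2000 epochs, which matches the configuration summary given above.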

6 CONCLUSION

Watermarking of synthetic images produced by a gen-

erative model is an important step towards protecting

the creators of generative models. This paper intro-

duces a novel approach to watermarking the training

images with DCT-SVD-in-DWT (Kang et al. 2018)

and training the pix2pix network with these water-

marked images. Our primary goal is to create real-

istic synthetic fingerprints and protect the GAN au-

thors by watermarking the model-generated images.

We achieve the former one using the pix2pix model

and the latter by transferring the watermark from the

training dataset to the model’s generated images. We

experiment with various parameters of the selected

watermarking technique in conjunction with training

hyperparameters of GAN. For watermarking, an em-

bedding strength of 10 results in superior outcomes,

primarily when the embedding level is either HL or

LH. Even though the optimal watermarking and GAN parameters enable watermark extraction from the vast majority of the reconstructed fingerprints so that the fingerprints do not lose their utility, there is room for algorithm tuning to improve the NFIQ2 and Verifinger matching scores. The watermark recovery rate in our experiments is not high enough to consider our GAN watermarking scheme mature for application in a practical scenario. Note that fingerprint images, due to their limited information content with black and white lines, have a very low watermark capacity. Applying our approach to colored, more informative images that offer more watermark capacity may lead to significantly higher watermark recovery rates. An adversarial attack might find minutiae setups that lead to vanishing watermarks in synthetic fingerprint images. Hence, improvement of the practical effectiveness and robustness of our approach is subject to future work. All in all, given a robust

watermarking algorithm, we confirm that the water-

marks can be transferred from the GAN training im-

ages to the GAN-generated images. Future work will

include a thorough study of watermark transferability

across various generative network architectures and

extend the study to other domains like video. Further-

more, the embedding of encrypted watermarks will be

studied to address the security aspect.

ACKNOWLEDGEMENTS

This research has been funded in part by the Deutsche

Forschungsgemeinschaft (DFG) through the research

project GENSYNTH under the number 421860227.

REFERENCES

Ansari, A. H. (2011). Generation and storage of large syn-

thetic fingerprint database. M.E. Thesis, Indian Insti-

tute of Science Bangalore.

Bahmani, K., Plesh, R., Johnson, P., Schuckers, S., and

Swyka, T. (2021). High fidelity fingerprint genera-

tion: Quality, uniqueness, and privacy. In Proc. of the

IEEE Int. Conf. on Image Processing (ICIP). IEEE.

Barni, M., Pérez-González, F., and Tondi, B. (2021). DNN

watermarking: Four challenges and a funeral. In Pro-

ceedings of the 2021 ACM Workshop on Information

Hiding and Multimedia Security, IH&MMSec ’21,

page 189–196, New York, NY, USA. Association for

Computing Machinery.

Bontrager, P., Roy, A., Togelius, J., Memon, N., and Ross,

A. (2018). DeepMasterPrints: Generating master-

prints for dictionary attacks via latent variable evolu-

tion. In Proc. BTAS, pages 1–9.

Bouzaglo, R. and Keller, Y. (2022). Synthesis and recon-

struction of fingerprints using generative adversarial

networks. CoRR, abs/2201.06164.

Cappelli, R. (2004). SFinGe: an approach to synthetic fin-

gerprint generation. In Proc. of the Int. Workshop on

Biometric Technologies.

Chen, H., Rouhani, B. D., Fu, C., Zhao, J., and Koushanfar,

F. (2019). DeepMarks: A secure fingerprinting frame-

work for digital rights management of deep learning

models. In Proceedings of the 2019 on International

Conference on Multimedia Retrieval, ICMR ’19, page

105–113, New York, NY, USA. Association for Com-

puting Machinery.

Edwards, B. (2022). China bans AI-generated media without watermarks. https://arstechnica.com/information-technology/2022/12/china-bans-ai-generated-media-without-watermarks/, last check 14.7.2023.

Farou, Z., Mouhoub, N., and Horváth, T. (2020). Data

generation using gene expression generator. Lecture

Notes in Computer Science (including subseries Lec-

ture Notes in Artificial Intelligence and Lecture Notes

in Bioinformatics), 12490:54–65.

Fei, J., Xia, Z., Tondi, B., and Barni, M. (2022). Supervised

GAN watermarking for intellectual property protec-

tion. In 2022 IEEE International Workshop on Infor-

mation Forensics and Security (WIFS), pages 1–6.

Goodfellow, I. et al. (2014). Generative adversarial nets. In Ghahramani, Z. et al., editors, Advances in Neural Information Processing Systems (NIPS’14), volume 27, pages 2672–2680. Curran Associates, Inc.

guofei (2022). Blind watermark based on DWT-DCT-SVD. https://github.com/guofei9987/blind_watermark, last check 14.7.2023.

Isola, P., Zhu, J.-Y., Zhou, T., and Efros, A. A. (2017).

Image-to-image translation with conditional adversar-

ial networks. In Proc. CVPR.

Kang, X., Zhao, F., Lin, G., and Chen, Y. (2018). A novel

hybrid of DCT and SVD in DWT domain for robust

and invisible blind image watermarking with optimal

embedding strength. Multimedia Tools and Applica-

tions, 77:13197–13224.

Karras, T., Aila, T., Laine, S., and Lehtinen, J. (2018). Pro-

gressive growing of GANs for improved quality, sta-

bility, and variation. In Proc. of the International Con-

ference on Learning Representations (ICLR).

Karras, T., Aittala, M., Hellsten, J., Laine, S., Lehtinen, J.,

and Aila, T. (2020). Training generative adversarial

networks with limited data. CoRR, abs/2006.06676.

Kumar, C., Singh, A. K., and Kumar, P. (2018). A recent

survey on image watermarking techniques and its ap-

plication in e-governance. Multimedia Tools and Ap-

plications, 77:3597–3622.

Makrushin, A., Kauba, C., Kirchgasser, S., Seidlitz, S.,

Kraetzer, C., Uhl, A., and Dittmann, J. (2021a). Gen-

eral requirements on synthetic fingerprint images for

biometric authentication and forensic investigations.

In Proc. IH&MMSec’21, page 93–104. ACM.

Makrushin, A., Mannam, V. S., and Dittmann, J. (2023).

Data-driven fingerprint reconstruction from minutiae

based on real and synthetic training data. In Proc.

VISIGRAPP 2023 - Volume 4: VISAPP, pages 229–

237.

Makrushin, A., Trebeljahr, M., Seidlitz, S., and Dittmann,

J. (2021b). On feasibility of GAN-based fingerprint

morphing. In Proc. of the IEEE Int. Workshop on Mul-

timedia Signal Processing (MMSP), pages 1–6.

Marra, F. et al. (2019). Do GANs leave artificial fingerprints? In Proc. of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), pages 506–511. IEEE.

Neurotechnology (2023). Neurotechnology Verifinger SDK. https://www.neurotechnology.com/verifinger.html, last check 14.7.2023.

NIST (2023). NIST Fingerprint Image Quality (NFIQ) 2. https://www.nist.gov/services-resources/software/nfiq-2, last check 14.7.2023.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-

Net: Convolutional networks for biomedical image

segmentation. CoRR, abs/1505.04597.


Tian, C., Wen, R. H., Zou, W. P., and Gong, L. H. (2020).

Robust and blind watermarking algorithm based on

DCT and SVD in the contourlet domain. Multimedia

Tools and Appl., 79:7515–7541.

Wu, H., Liu, G., Yao, Y., and Zhang, X. (2021). Watermark-

ing neural networks with watermarked images. IEEE

Transactions on Circuits and Systems for Video Tech-

nology, 31(7):2591–2601.

Wyzykowski, A. B. V., Segundo, M. P., and de Paula Lemes,

R. (2020). Level three synthetic fingerprint genera-

tion.

Yu, N., Davis, L., and Fritz, M. (2019). Attributing fake

images to GANs: Learning and analyzing GAN fin-

gerprints. In Proc. of the IEEE/CVF Int. Conference

on Computer Vision (ICCV), pages 7555–7565.

Yu, N., Skripniuk, V., Abdelnabi, S., and Fritz, M. (2021).

Artificial fingerprinting for generative models: Root-

ing deepfake attribution in training data. In Proc. of

the IEEE Int. Conference on Computer Vision (ICCV).
