An Image Sharpening Technique Based on Dilated Filters and 2D-DWT Image Fusion

Victor Bogdan (1, a), Cosmin Bonchiș (1, b) and Ciprian Orhei (2, c)

1 West University of Timișoara, Bd. V. Pârvan 4, 045B, Timișoara, RO-300223, Romania

2 Polytechnic University of Timișoara, Timișoara, RO-300223, România

Keywords:

Dilated Filters, Image Sharpening, Unsharp Masking, Image Contrast Enhancement, Image Processing.

Abstract:

Image sharpening techniques are pivotal in image processing, serving to accentuate the contrast between darker

and lighter regions in images. Building upon prior research that highlights the advantages of dilated kernels in edge detection algorithms, our study introduces a multi-level dilation wavelet scheme. This novel approach to Unsharp Masking involves processing the input image through a low-pass filter with varying dilation factors, followed by wavelet fusion. The visual outcomes of this method demonstrate marked improvements

in image quality, notably enhancing details without introducing any undesirable crisping effects. Given the

absence of a universally accepted index for optimal image sharpness in current literature, we have employed a

range of metrics to evaluate the effectiveness of our proposed technique.

1 INTRODUCTION

Image enhancement, widely considered one of the most crucial operations in image processing, encompasses various techniques aimed at improving the quality, clarity, and perceptibility of images. Digital images often suffer from poor quality due to factors like inadequate contrast, undesirable shading, artifacts, or poor focus. Numerous enhancement techniques have been developed; they are reviewed in (Archana and Aishwarya, 2016) and (Qi et al., 2021).

Unsharp Masking (UM) (Ramponi and Polesel,

1998) is a well-established technique for sharpening

images that relies on subtracting a blurred version

of the image from the original. Variants of UM,

as discussed in (Polesel et al., 2000), control fea-

ture enhancement in specific areas of the image. In

(Bilcu and Vehvilainen, 2008), UM is combined with

sigma filters to simultaneously highlight noise reduc-

tion and edge enhancement. Furthermore, a general-

ized framework for UM is proposed in (Deng, 2010),

which combines blocks for edge-preserving filters,

contrast enhancement, and adaptive gain control.

a https://orcid.org/0000-0003-1810-234X

b https://orcid.org/0000-0001-6660-282X

c https://orcid.org/0000-0002-0071-958X

Contrast enhancement is crucial in many appli-

cations for highlighting image features. A classi-

cal method for achieving this is histogram equaliza-

tion (HE) (Jain et al., 1995), which involves adjust-

ing the grey levels of an image to achieve a uniform

distribution of pixel values. Various variants of this

method exist, such as the sub-regions HE analyzed in

(Ibrahim and Kong, 2009), which partitions the in-

put image and smoothens intensity values. In (Somal,

2020), the UM technique is applied to an image be-

fore implementing the HE technique.

The usage of dilated filters in image sharpening

algorithms was proposed in (Orhei and Vasiu, 2022)

and extended in (Orhei, 2022) or (Orhei and Vasiu,

2023). The extended spatial domain of the kernels

has improved the performance of basic and complex

enhancement algorithms.

The two-dimensional Discrete Wavelet Transform (2D-DWT) is a powerful algorithm for image enhancement, leveraging wavelet theory to analyze and process images in both the spatial and frequency domains. This method involves decomposing an image into a set of wavelet coefficients, representing different levels of detail. This approach was explored in the algorithms proposed by (Demirel and Anbarjafari, 2011), (Papamarkou et al., 2014), and (Zafeiridis et al., 2016).

In recent years, the problem of image sharpening has been approached using Convolutional Neural Networks (CNNs). (Kinoshita and Kiya, 2019) defines a CNN architecture that utilizes local and global features for image sharpening. (Li et al., 2021) proposes a more complex progressive-recursive image enhancement network for low-light images.

Bogdan, V., Bonchiș, C. and Orhei, C.

An Image Sharpening Technique Based on Dilated Filters and 2D-DWT Image Fusion.

DOI: 10.5220/0012416600003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 3: VISAPP, pages 591-598

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

In this paper, we present a novel multi-level di-

lation wavelet scheme designed to enhance details at

various scales while being robust to noise and com-

putationally efficient. Our sharpening algorithm pro-

cesses the input image using a cluster of UM tech-

niques with varying dilation factors, followed by post-

processing through a wavelet fusion mechanism. The

visual results obtained using our approach demon-

strate clear enhancements in image quality.

The proposed algorithm analyzes the image at

multiple scales by using dilated UM, which means it

can effectively sharpen both subtle details and promi-

nent features while being selective about what fea-

tures to enhance. By using the wavelet transform,

edge preservation and noise reduction are increased

along with the enhancement process. The fusion pro-

cess in the wavelet domain allows the algorithm to

adaptively combine information from different scales

and orientations.

This algorithm is designed to leverage the

strengths of both UM and 2D-DWT, using dilation

to capture more detailed information and wavelet fu-

sion to effectively combine these details into a single,

enhanced image. The fusion process is particularly

significant as it determines the final quality and char-

acteristics of the sharpened image.

2 METHODOLOGY

2.1 Linear Unsharp Masking

In classical (linear) UM, the term 'unsharp' refers to the subtraction of a blurred image, which effectively enhances edges. This is described by Equation 1, where $I(x,y)$ is the original image, $I_{um}(x,y)$ the output image, $I_{lpf}$ the result of filtering $I$ with a Low Pass Filter (LPF), $I_{hpf}$ the result of filtering $I$ with a High Pass Filter (HPF), and $\lambda$ is the positive scaling factor controlling the level of contrast enhancement. However, this approach has downsides, including high sensitivity to noise and unintended enhancements in high-contrast areas of the image (Ramponi and Polesel, 1998), (Polesel et al., 2000), (Deng, 2010).

$$
\begin{aligned}
I_{um}(x,y) &= I(x,y) + \lambda I_{hpf}(x,y) \\
            &= I(x,y) + \lambda \left( I(x,y) - I_{lpf}(x,y) \right)
\end{aligned}
\tag{1}
$$

For our work, we employ the standard Laplacian operator as the high-pass filter, defined by Equation 2.

$$
I_{hpf}(x,y) = 4I(x,y) - I(x-1,y) - I(x+1,y) - I(x,y-1) - I(x,y+1)
\tag{2}
$$
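As a minimal sketch of Equations 1 and 2, the snippet below uses the 4-neighbour Laplacian response as the high-pass term of Equation 1. The function names, the replicated-border handling, the value $\lambda = 0.5$, and the clipping to the 8-bit range are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def laplacian_response(img: np.ndarray) -> np.ndarray:
    """4-neighbour Laplacian of Equation 2 (borders replicated: an assumption)."""
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    center = p[1:-1, 1:-1]
    return (4.0 * center
            - p[:-2, 1:-1] - p[2:, 1:-1]    # neighbours above and below
            - p[1:-1, :-2] - p[1:-1, 2:])   # neighbours left and right

def unsharp_mask(img: np.ndarray, lam: float = 0.5) -> np.ndarray:
    """Equation 1: I_um = I + lambda * I_hpf, clipped to [0, 255] (assumption)."""
    out = img.astype(np.float64) + lam * laplacian_response(img)
    return np.clip(out, 0, 255)

# A vertical step edge: flat regions are untouched, the edge is exaggerated.
step = np.array([[10, 10, 200, 200]] * 4, dtype=np.float64)
sharp = unsharp_mask(step, lam=0.5)   # each row becomes [10, 0, 255, 200]
```

The step example also illustrates the downside mentioned above: at a high-contrast edge the correction is large enough to saturate both sides.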

2.2 Dilated Filters

In our approach, we utilize dilated filters, a technique

presented in (Bogdan et al., 2020). The core concept

is to leverage a larger neighborhood around a pixel

to capture more information. This is achieved by in-

serting additional rows and columns into the kernels,

which act as gaps and are assigned a value of 0.

Consider $K$ as a 2-D kernel with dimensions $w \times h$, where $h$ is the height and $w$ is the width of the filter. Introducing a dilation factor $d$, we can construct a dilated kernel $K'$ with dimensions $(2d + h) \times (2d + w)$, as described in Equation 3. The Kronecker delta function $\delta$ is used to selectively include elements from the original kernel $K$ in $K'$. Specifically, $\delta$ ensures that only the elements at positions satisfying the dilation condition are retained from $K$, while all other positions are set to zero. This approach effectively expands the kernel, introducing zeros between the original kernel elements, as detailed in (Orhei and Vasiu, 2023) and (Orhei et al., 2021a).

$$
K'(i,j) = K\left(\frac{i}{d+1}, \frac{j}{d+1}\right) \cdot \delta\big(i \bmod (d+1)\big) \cdot \delta\big(j \bmod (d+1)\big)
\tag{3}
$$

The underlying hypothesis of the technique is that

dilation, as opposed to expansion, covers a larger area

in terms of pixel distance rather than pixel density.

Thus, it incorporates more information without in-

creasing the number of pixels. Using a dilated filter

is akin to applying the original filter to a downscaled

image, an experiment explored in (Orhei et al., 2020).
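The construction in Equation 3 can be sketched in a few lines of NumPy. The helper name `dilate_kernel` is hypothetical. The output size used here is $(h-1)(d+1)+1$ per axis, which coincides with $2d + h$ for the 3 × 3 kernels typical of this setting.

```python
import numpy as np

def dilate_kernel(kernel: np.ndarray, d: int) -> np.ndarray:
    """Insert d zero rows/columns between neighbouring kernel elements."""
    h, w = kernel.shape
    step = d + 1
    out = np.zeros(((h - 1) * step + 1, (w - 1) * step + 1), dtype=kernel.dtype)
    # Positions with i % (d + 1) == 0 and j % (d + 1) == 0 keep K(i/(d+1), j/(d+1)).
    out[::step, ::step] = kernel
    return out

laplace = np.array([[0, -1, 0],
                    [-1, 4, -1],
                    [0, -1, 0]])
laplace_5x5 = dilate_kernel(laplace, d=1)   # 3x3 kernel spread over a 5x5 support
laplace_7x7 = dilate_kernel(laplace, d=2)   # 3x3 kernel spread over a 7x7 support
```

The number of non-zero weights is unchanged, so the cost per output pixel stays the same while the kernel reaches pixels that are further away.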

2.3 2D Discrete Wavelet Transform

The DWT has established itself as a prominent tool in signal and image processing, with notable applications since Mallat's foundational work (Mallat, 1989), which introduced the concept of multi-resolution signal analysis based on wavelet decomposition. DWT is known for its ability to localize information simultaneously in time and frequency across the various pyramid levels of an image, a concept elaborated in (Acharya and Chakrabarti, 2006).

Consider S

j,k

as the scaling (approximation) coef-

ficients and W

k

j,k

as the wavelet coefficients at scale j

for a signal f (t), where k ∈ {1,2,3} corresponds to

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

592

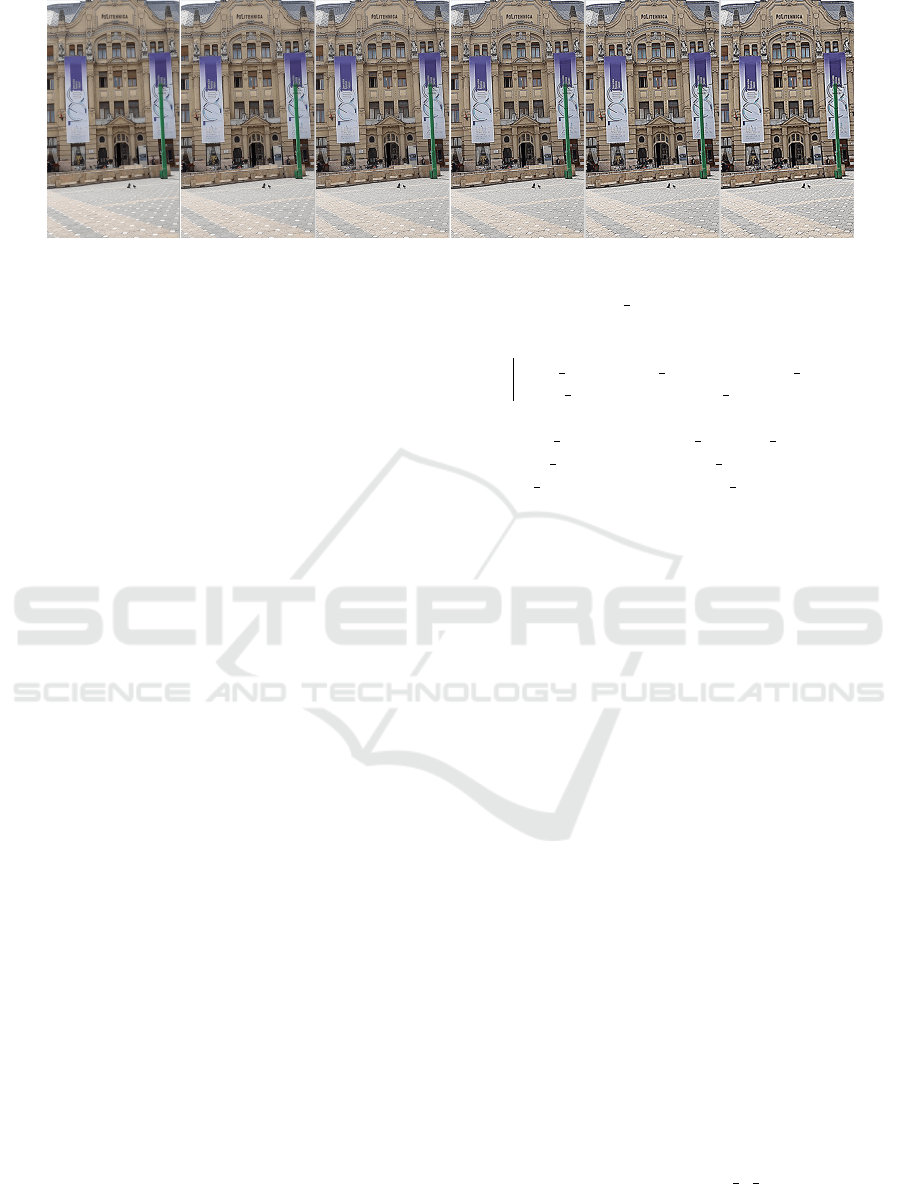

Figure 1: Example of resulting images from dilated UM phase, left to right the dilated factor increases from 0 to 5.

the type of detail (horizontal, vertical, or diagonal),

and v and u are indices for the rows and columns, re-

spectively. The indices l and m are used for summa-

tion. In the context of 2D signal processing with or-

thonormal filters, these coefficients can be expressed

as products of a LPF (h ) and a HPF (g). The coeffi-

cients at scale j can be derived from the coefficients

at the subsequent scale, j + 1. The approximation co-

efficients are calculated using Equation 4 and 5.

$$
S_{j,v,u} = \sum_{l} \sum_{m} h(l - 2v)\, h(m - 2u)\, S_{j+1,l,m}
\tag{4}
$$

$$
W^{k}_{j,v,u} = \sum_{l} \sum_{m} \mathit{filter}_{type}(l - 2v,\, m - 2u)\, S_{j+1,l,m}
\tag{5}
$$

For our use case, we choose the Daubechies-4

(Db4) wavelets variant, which is one of the most pop-

ular (Daubechies, 1992).
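To make the separable filter-and-downsample structure of Equations 4 and 5 concrete, the sketch below implements a single decomposition level with NumPy. It deliberately swaps in the two-tap Haar filters ($h = [1/\sqrt{2}, 1/\sqrt{2}]$, $g = [1/\sqrt{2}, -1/\sqrt{2}]$) instead of Db4, because they keep the code short and need no border extension; this is an illustrative substitution, not the transform used in the paper. The function names are hypothetical and the input is assumed to have even height and width.

```python
import numpy as np

R2 = np.sqrt(2.0)

def haar_dwt2(img: np.ndarray):
    """One 2D-DWT level with Haar filters: returns cA and (cH, cV, cD)."""
    a = img.astype(np.float64)
    lo = (a[:, 0::2] + a[:, 1::2]) / R2   # low-pass along each row, keep every 2nd sample
    hi = (a[:, 0::2] - a[:, 1::2]) / R2   # high-pass along each row
    cA = (lo[0::2] + lo[1::2]) / R2       # low/low: approximation (Equation 4)
    cH = (lo[0::2] - lo[1::2]) / R2       # differences between rows: horizontal edges
    cV = (hi[0::2] + hi[1::2]) / R2       # differences between columns: vertical edges
    cD = (hi[0::2] - hi[1::2]) / R2       # high/high: diagonal detail
    return cA, (cH, cV, cD)

def haar_idwt2(cA: np.ndarray, details) -> np.ndarray:
    """Exact inverse of haar_dwt2."""
    cH, cV, cD = details
    lo = np.empty((2 * cA.shape[0], cA.shape[1]))
    hi = np.empty_like(lo)
    lo[0::2], lo[1::2] = (cA + cH) / R2, (cA - cH) / R2
    hi[0::2], hi[1::2] = (cV + cD) / R2, (cV - cD) / R2
    out = np.empty((lo.shape[0], 2 * lo.shape[1]))
    out[:, 0::2], out[:, 1::2] = (lo + hi) / R2, (lo - hi) / R2
    return out

img = np.arange(16, dtype=np.float64).reshape(4, 4)
cA, details = haar_dwt2(img)
restored = haar_idwt2(cA, details)        # reproduces img up to rounding
```

In practice a wavelet library would normally be used for Db4; PyWavelets, for example, provides `pywt.dwt2(data, 'db4')` and `pywt.idwt2`.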

2.4 Proposed UM

The UM algorithm we propose is based on multi-scale analysis and wavelet fusion; the scheme was inspired by two distinct sharpening approaches. The first approach involves UM using dilated kernels, as presented in (Orhei and Vasiu, 2022), where the authors propose the use of kernel dilation techniques for an expanded scale factor. The second approach combines 2D-DWT with UM, as detailed in (Papamarkou et al., 2014). Here, the authors propose a 2D wavelet transform sharpening algorithm that varies the UM and incorporates an image fusion concept.

Our novel sharpening method, outlined in Algorithm 1, is divided into two stages. In the first stage, to exploit important image details, we process the initial image using UM with varying dilation factors. In the second stage, the resulting sharpened images are aggregated through a wavelet fusion technique, producing the final enhanced image.

Our method adopts a multiscale approach designed to accentuate image information across various levels of abstraction by utilizing dilated filters at different dilation factors. This is evident in Figure 1, where each level of dilation reveals more details, albeit with a trade-off in increased artifact size and a potential over-crisp effect.

Algorithm 1: UM Dilated 2D-DWT Approach.

    Data: original image, octaves
    Result: processed image
    for k in octaves do
        um_res[k] ← UM_dilated(original_img, k)
        img_coeff[k] ← 2dwt(um_res[k])
    end
    coeff_fusion ← fusion_rule(img_coeff)
    i2dwt_out ← i2dwt(coeff_fusion)
    img_out ← normalize(i2dwt_out)

By integrating information from different dila-

tion levels, referred to as octaves, our method places

greater emphasis on the dominant features in the im-

age that are accentuated by dilation. However, caution

is necessary regarding the number of octaves used to

avoid an undesirable state of over-crisping.

The second stage of our method introduces a

wavelet fusion technique that enhances the sharpen-

ing features processed in the first stage. This fusion

process aims to integrate features from the set of pro-

cessed images to create a single, improved image.

The decision to use wavelet fusion is driven by its

proven effectiveness in the spatial domain, as demon-

strated in various applications.
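The two stages of Algorithm 1 can be sketched end to end as follows. Several choices here are assumptions made only to keep the example short and self-contained: a one-level Haar transform stands in for Db4, the dilated Laplacian is applied by shifting the image by $d + 1$ pixels, the fusion weights grow linearly with the octave index, and `normalize` is interpreted as dividing by the total weight and clipping to [0, 255]. None of the function names come from the paper or from EECVF.

```python
import numpy as np

R2 = np.sqrt(2.0)

def um_dilated(img, d, lam=0.5):
    """Laplacian UM whose four neighbours lie d + 1 pixels away."""
    s = d + 1
    p = np.pad(img, s, mode="edge")
    hp = (4.0 * img - p[:-2 * s, s:-s] - p[2 * s:, s:-s]
          - p[s:-s, :-2 * s] - p[s:-s, 2 * s:])
    return img + lam * hp

def dwt2(a):
    """One Haar level; returns the four sub-bands stacked as (4, H/2, W/2)."""
    lo, hi = (a[:, ::2] + a[:, 1::2]) / R2, (a[:, ::2] - a[:, 1::2]) / R2
    return np.stack([(lo[::2] + lo[1::2]) / R2, (lo[::2] - lo[1::2]) / R2,
                     (hi[::2] + hi[1::2]) / R2, (hi[::2] - hi[1::2]) / R2])

def idwt2(c):
    """Inverse of dwt2."""
    lo = np.empty((2 * c.shape[1], c.shape[2]))
    hi = np.empty_like(lo)
    lo[::2], lo[1::2] = (c[0] + c[1]) / R2, (c[0] - c[1]) / R2
    hi[::2], hi[1::2] = (c[2] + c[3]) / R2, (c[2] - c[3]) / R2
    out = np.empty((lo.shape[0], 2 * lo.shape[1]))
    out[:, ::2], out[:, 1::2] = (lo + hi) / R2, (lo - hi) / R2
    return out

def sharpen(img, octaves=(0, 1, 2), lam=0.5):
    """Stage 1: dilated UM per octave. Stage 2: weighted fusion of DWT coefficients."""
    img = img.astype(np.float64)
    coeffs = [dwt2(um_dilated(img, k, lam)) for k in octaves]
    n = len(octaves) - 1                      # requires at least two octaves
    weights = [k / n for k in range(n + 1)]   # weight grows with the octave index
    fused = sum(w * c for w, c in zip(weights, coeffs))
    out = idwt2(fused) / sum(weights)         # assumed meaning of normalize()
    return np.clip(out, 0, 255)

# Step edge between two flat regions (even height and width are assumed).
step = np.tile(np.array([50.0] * 4 + [150.0] * 4), (8, 1))
result = sharpen(step)
```

On the step image the flat areas far from the edge keep their grey level, while the pixels next to the edge are pushed apart, which is the expected behaviour of a sharpening operator.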

As defined in Algorithm 1, the final block in our process is fusion. The aggregation rule applied here is crucial for determining the optimal combination of different coefficients to produce the best output image. In this context, we have explored various fusion rules, detailed below. In these rules, $I_F$ represents the fused image, $I_0, \ldots, I_n$ the images produced by the first stage, and $D_{I_F}(x)$ denotes the 2D-DWT coefficient for image location $x$:

1. Maximum Fusion Rule (UM_P_MAX):

$$
D_{I_F}(x) = \max\big(|D_{I_0}|(x), |D_{I_1}|(x), \ldots, |D_{I_n}|(x)\big)
\tag{6}
$$


Figure 2: Results on the 00109 image: (a) Original image; (b) UM_STD; (c) UM_STD_LAP; (d) UM_5D; (e) UM_7D; (f) UM_2DWT; (g) UM_P_MAX; (h) UM_P_AVG_1; (i) UM_P_AVG_2; (j) UM_P_AVG_3.

2. Direct Proportional with Dilation Average Rule (UM_P_AVG_1):

$$
D_{I_F}(x) = \frac{\sum_{k=0}^{n} k \cdot D_{I_k}(x)}{n}
\tag{7}
$$

3. Inverse Proportional with Dilation Average Rule (UM_P_AVG_2):

$$
D_{I_F}(x) = \frac{\sum_{k=0}^{n} (n - k) \cdot D_{I_k}(x)}{n}
\tag{8}
$$

4. Average Rule (UM_P_AVG_3):

$$
D_{I_F}(x) = \frac{\sum_{k=0}^{n} D_{I_k}(x)}{n}
\tag{9}
$$
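The four fusion rules can be written compactly once the coefficient arrays of all octaves are stacked along a new axis. The sketch below follows Equations 6 to 9 literally, including the division by $n$ and the use of absolute values in the maximum rule; the function name `fuse` and the rule labels are hypothetical.

```python
import numpy as np

def fuse(coeffs, rule):
    """Fuse coefficient arrays D_{I_0}..D_{I_n} (same shape) with one of Eqs. 6-9."""
    stack = np.stack(coeffs).astype(np.float64)   # shape (n + 1, ...)
    n = len(coeffs) - 1                           # n >= 1 is required by Eqs. 7-9
    k = np.arange(n + 1).reshape((-1,) + (1,) * (stack.ndim - 1))
    if rule == "max":                             # Equation 6
        return np.abs(stack).max(axis=0)
    if rule == "avg_direct":                      # Equation 7
        return (k * stack).sum(axis=0) / n
    if rule == "avg_inverse":                     # Equation 8
        return ((n - k) * stack).sum(axis=0) / n
    if rule == "avg":                             # Equation 9
        return stack.sum(axis=0) / n
    raise ValueError(f"unknown rule: {rule}")

# Three tiny coefficient maps standing in for octaves 0, 1 and 2.
d0 = np.array([[1.0, -4.0]])
d1 = np.array([[2.0, 2.0]])
d2 = np.array([[-3.0, 1.0]])
fused_max = fuse([d0, d1, d2], "max")             # [[3., 4.]]
fused_direct = fuse([d0, d1, d2], "avg_direct")   # [[-2., 2.]]
```

Taken literally, Equation 6 returns magnitudes and therefore discards the sign of the winning coefficient; whether an implementation keeps the sign is not stated in the text, so the sketch does not assume it.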

The integration step in the fusion process is crucial for the performance of the dilated filter. Image fusion has become a significant operation in image processing, particularly when combined with multi-scale analysis. Wavelet-based techniques are widely recognized as being among the most effective for image fusion and have been successfully implemented in numerous applications. Their ability to blend features from multiple images makes them a suitable choice.

2.5 Evaluation Metrics

Evaluating the sharpening quality is challenging due to the lack of objective measures for image quality or sharpness, so we report several metrics.

Entropy (H) serves as a measure of uncertainty, rising as disorder increases. In other words, an increase in H corresponds to an increase in the level of detail.

Spatial Frequency (SF), which expresses the overall activity level in an image, is defined by Equation 10, where RF is the row frequency (Equation 11) and CF is the column frequency (Equation 12), both computed for an $M \times N$ image block $F$ of grey values.

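$$
SF = \sqrt{(RF)^2 + (CF)^2}
\tag{10}
$$

$$
RF = \sqrt{\frac{1}{MN} \sum_{m=1}^{M} \sum_{n=2}^{N} \left[ F(m,n) - F(m,n-1) \right]^2}
\tag{11}
$$

$$
CF = \sqrt{\frac{1}{MN} \sum_{n=1}^{N} \sum_{m=2}^{M} \left[ F(m,n) - F(m-1,n) \right]^2}
\tag{12}
$$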

Root Mean Square Contrast (RMSC), a pixel-based metric where higher values indicate better contrast, is presented in Equation 13, where $M \times N$ are the dimensions of image $F$ and $\bar{F}$ is the mean intensity.

$$
RMSC = \sqrt{\frac{1}{MN} \sum_{m=0}^{M-1} \sum_{n=0}^{N-1} \left( F(m,n) - \bar{F} \right)^2}
\tag{13}
$$


The Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) is a no-reference image quality assessment metric that operates in the spatial domain. It evaluates 18 statistical features at each of two image scales, contributing to distortion evaluation and quality verification through a two-stage classification algorithm (Mittal et al., 2012). Lower BRISQUE scores indicate better perceived quality.
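The three pixel-based metrics can be computed directly with NumPy, as sketched below. SF and RMSC follow Equations 10 to 13; for H, which the text does not define by formula, the sketch assumes the Shannon entropy of the 8-bit grey-level histogram. The function names are hypothetical, and BRISQUE is omitted because it depends on a trained model.

```python
import numpy as np

def entropy(img: np.ndarray) -> float:
    """Shannon entropy (bits) of the 8-bit grey-level histogram (assumed definition of H)."""
    hist = np.bincount(img.astype(np.uint8).ravel(), minlength=256)
    p = hist[hist > 0] / img.size
    return float(-(p * np.log2(p)).sum())

def spatial_frequency(img: np.ndarray) -> float:
    """Equations 10-12: combined row and column activity."""
    f = img.astype(np.float64)
    m, n = f.shape
    rf = np.sqrt(((f[:, 1:] - f[:, :-1]) ** 2).sum() / (m * n))   # Equation 11
    cf = np.sqrt(((f[1:, :] - f[:-1, :]) ** 2).sum() / (m * n))   # Equation 12
    return float(np.hypot(rf, cf))                                # Equation 10

def rms_contrast(img: np.ndarray) -> float:
    """Equation 13: standard deviation of the grey levels around their mean."""
    f = img.astype(np.float64)
    return float(np.sqrt(((f - f.mean()) ** 2).mean()))

# A 2x2 checkerboard: maximal local activity for an 8-bit image.
board = np.array([[0, 255], [255, 0]], dtype=np.uint8)
h_val, sf_val, rmsc_val = entropy(board), spatial_frequency(board), rms_contrast(board)
```

For the checkerboard, H is 1 bit (two equally likely grey levels), SF is 255 and RMSC is 127.5; a constant image gives 0 for all three.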

3 RESULTS

In this section, we present the results obtained using the proposed approach, as described in Section 2. Our approach introduces four distinct fusion rules for the UM algorithm, as detailed in Equations 6 to 9. To evaluate the effectiveness of our proposed algorithm variants, we compare them against the classical UM method (Ramponi and Polesel, 1998) (UM_STD) and its variant using a Laplace kernel (UM_STD_LAP); the dilated UM approach (Orhei and Vasiu, 2022) (UM_5D and UM_7D); and the 2D-DWT technique from (Papamarkou et al., 2014) (UM_2DWT).

Our evaluation is two-fold. First, we use a small

dataset extracted from TMBuD (Orhei et al., 2021c).

This initial evaluation aims to provide a focused anal-

ysis of the algorithms’ performance in diverse scenar-

ios. Second, we extend our evaluation to the entire

TMBuD dataset, which allows us to observe metric

trends across a significant number of scenarios and

gain a comprehensive understanding of the effective-

ness in a broader context.

In the interest of reproducible research, we ran our simulations in EECVF (End-to-End Computer Vision Framework) (Orhei et al., 2021b), a Python open-source solution that aims to support researchers. The experiments in this paper can be reproduced by running the main_dilated_2dwt_sharpening_dilated.py module.

3.1 Small Dataset

In Figure 3, we showcase the images selected for our small dataset analysis. This dataset comprises 10 images, each representing a challenge commonly encountered in real-life photography scenarios. Notably, the dataset includes images captured in low-light conditions (images 00005 and 00511) and in adverse weather conditions (images 00109 and 07403). Additionally, the dataset encompasses images that are blurred, a common issue resulting from incorrect camera usage or the limitations of the capturing device. These diverse examples provide a broad range of scenarios for assessing the performance of our proposed image processing techniques.

Figure 3: Small dataset used for evaluation, created from TMBuD (Orhei et al., 2021c).

Figure 4: Proposed UM_P_AVG_1 example where: (a) Original image; (b) Proposed UM result; (c) Difference between (a) and (b); (d) Zoom on (a); (e) Zoom on (b).

In Figure 2, we showcase the visual outcomes of

the various sharpening algorithms evaluated, as pre-

viously outlined. However, it’s important to note that

in some instances, the sharpening process has led to

an over-enhancement effect, akin to ”burning” of the

image. This is especially evident when examining im-

ages f and g in contrast to the others. Another in-

teresting aspect observed is the benefit that dilation

brings, particularly evident in images d, e, and h to

An Image Sharpening Technique Based on Dilated Filters and 2D-DWT Image Fusion

595

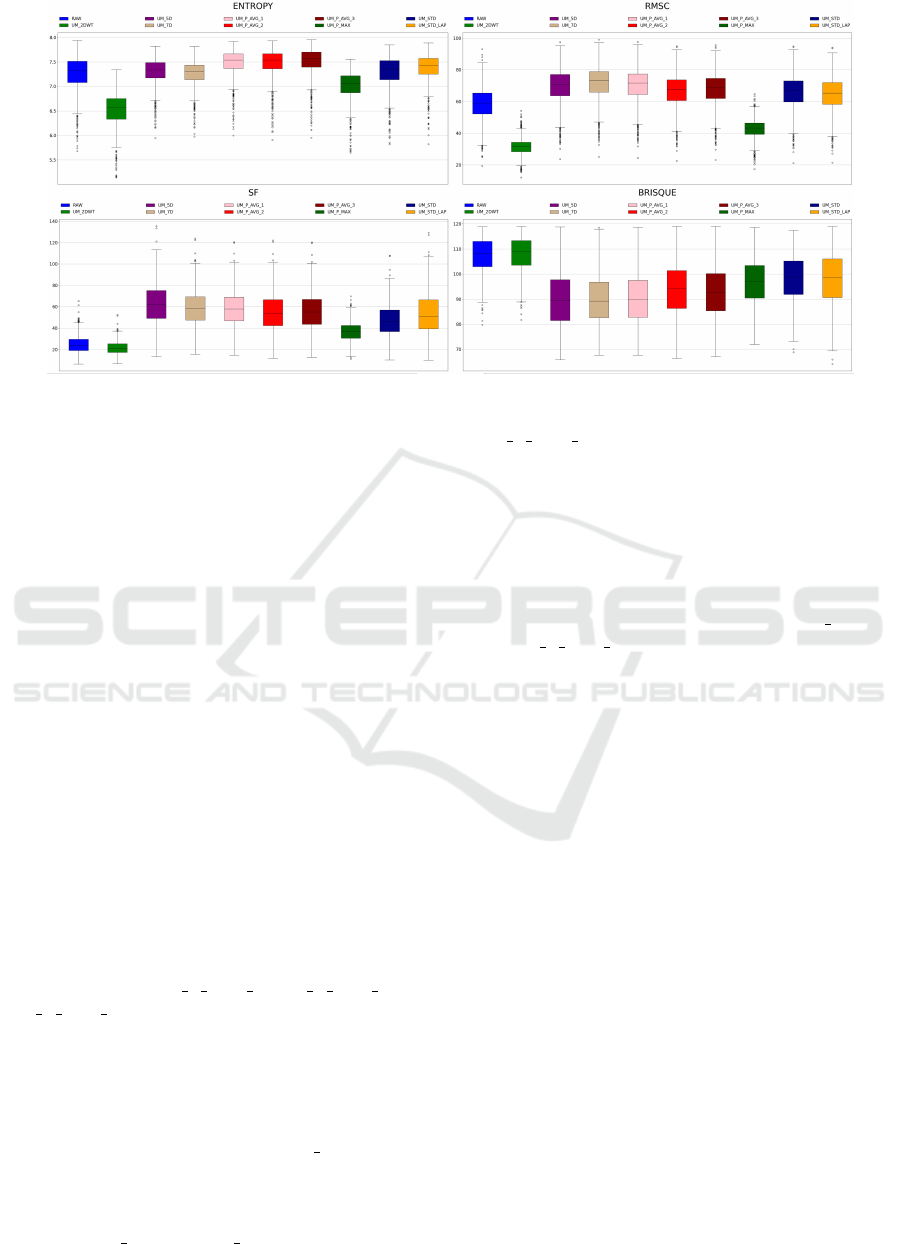

Figure 5: H, RMSC, SF and BRISQUE metrics on the small dataset.

j, in terms of contrast enhancement. This enhance-

ment is especially apparent in the computation of the

weighted average, as demonstrated in U M P AV G 1.

In Figure 4, we observe the enhanced results achieved by applying our proposed UM algorithm with the direct proportional average fusion rule, as defined in Equation 7. Specifically, in the zoomed-in sections of the original image (Figure 4 d) and the sharpened image (Figure 4 e), enhancements are evident in areas such as the text on top of the building and the contours of the windows and wall ornaments.

In Figure 5, the outcomes of the H, SF, RMSC, and BRISQUE metrics on the small dataset are presented. An analysis of these results indicates that the proposed UM algorithms, particularly those utilizing average fusion rules, exhibit a slight overshooting across all evaluation methods, with a pronounced effect in the H assessment. Conversely, the UM algorithm employing the maximum fusion rule (UM_P_MAX) demonstrates values closer to the median in the H and SF evaluations, and slightly below the median for RMSC. Notably, the UM algorithms using the inverse proportional average rule (UM_P_AVG_2) and the simple average rule (UM_P_AVG_3) show comparable results in the RMSC and SF metrics, indicating a consistency in their performance across these evaluation methods.

Further insights can be drawn from Figure 5. The average fusion rule-based methods (UM_P_AVG_1, UM_P_AVG_2, UM_P_AVG_3) show elevated H values, suggesting an increase in information content or image variability. This could indicate enhanced image details but may also imply the introduction of noise. In terms of SF, the dilated UM variants (UM_5D and UM_7D), along with UM_P_AVG_1, demonstrate the highest values, indicating a marked improvement in image sharpness and edge definition. The RMSC results align with the SF findings, where the dilated UM methods and UM_P_AVG_1 score highest, implying enhanced image contrast. However, this contrast enhancement could potentially lead to the overemphasis of features or noise. Finally, the BRISQUE scores reveal that the dilated UM methods, especially UM_7D, and the UM_P_AVG_1 method enhance perceived image quality compared to the original.

3.2 TMBuD Dataset

Encouraged by the promising results achieved with the smaller dataset, we extended our analysis to the entire TMBuD dataset, which comprises 1120 images. This dataset is a good choice for further evaluation due to the diverse range of conditions represented in the images and the fact that all images were captured using mobile devices.

Figure 6: H, RMSC, SF and BRISQUE metrics on the entire TMBuD dataset.

The results of the evaluation on the entire TMBuD dataset are presented in Figure 6.

A notable observation is the prevalence of outliers in

the H metric, even among the original images. This

trend suggests the presence of images with compara-

tively lower information content within the dataset.

Such a characteristic could be attributed to images

having more uniform pixel distributions, repetitive

textures, or a dominance of similar objects across the

dataset. This hypothesis is further supported by the

presence of low outliers in the RMSC evaluation, indicating a subset of images with lower contrast. Interestingly, the lack of a significant number of outliers in the BRISQUE metric suggests that these characteristics are inherent to the images themselves, rather

than being a result of the applied algorithms. This ob-

servation underscores the importance of considering

the intrinsic properties of images when evaluating the

performance of image processing techniques.

The proposed methods utilizing average fusion rules, specifically UM_P_AVG_1, UM_P_AVG_2, and UM_P_AVG_3, consistently show the highest H values. This suggests they are particularly effective in introducing additional information or variability into the images, potentially enhancing detail. However, this enhancement might come with the trade-off of increased noise. In contrast, the dilated UM with dilation factor 2 (UM_7D) maintains H values close to those of the original images, indicating a more conservative approach.

In terms of SF and RMSC, the dilated UM methods (UM_5D and UM_7D) and the proposed UM_P_AVG_1 method stand out with significantly higher values. These results suggest a substantial enhancement in image sharpness, edge definition, and contrast, which are crucial for visual clarity and detail perception.

The BRISQUE scores, which provide a measure of image quality without reference, further complement these findings. The lower BRISQUE scores for the dilated UM methods, particularly UM_7D, and the UM_P_AVG_1 method suggest an improvement in perceived image quality compared to the original. This aligns with their higher SF and RMSC values, indicating that these methods not only enhance sharpness and contrast but also do so in a way that is generally perceived as an improvement in image quality.

In summary, the proposed methods with average

fusion rules generally enhance image details and con-

trast more aggressively than other methods, as indi-

cated by higher H, SF, and RMSC values. However,

this might come at the cost of potentially introducing

noise. The dilated UM methods also show strong per-

formance in enhancing image quality, particularly in

terms of sharpness and perceived quality.

4 CONCLUSIONS AND FUTURE

WORK

In this paper, we proposed an image sharpening technique that integrates wavelet fusion with multiple dilated UM algorithms. This approach stands out for its computational efficiency without compromising the quality of image enhancement.

The performance metrics for our proposed approach align with our initial hypothesis: images processed with our algorithm exhibit improved sharpness while avoiding common issues such as overshooting or the introduction of noticeable artifacts.

The proposed UM algorithms utilizing average fusion rules (especially UM_P_AVG_1) performed well both on the small dataset and across the full TMBuD dataset.

Despite the sophisticated processing involved,

wavelet transforms are computationally efficient, and

multiscale analysis can be performed quickly.

As future work we might consider other fusion

rules and also other multi-scale fusion methods, such

as a pyramidal approach in order to enhance the re-

sults of the image sharpening.

REFERENCES

Acharya, T. and Chakrabarti, C. (2006). A survey on lifting-

based discrete wavelet transform architectures. Jour-

nal of VLSI signal processing systems for signal, im-

age and video technology, 42(3):321–339.

Archana, J. and Aishwarya, P. (2016). A review on the image sharpening algorithms using unsharp masking. International Journal of Engineering Science and Computing, 6(7).

Bilcu, R. C. and Vehvilainen, M. (2008). Constrained un-

sharp masking for image enhancement. In Interna-

tional Conference on Image and Signal Processing,

pages 10–19. Springer.

Bogdan, V., Bonchiș, C., and Orhei, C. (2020). Custom dilated edge detection filters. Computer Science Research Notes - CSRN, CSRN 3001:161–168.

Daubechies, I. (1992). Ten lectures on wavelets. SIAM.

Demirel, H. and Anbarjafari, G. (2011). Discrete wavelet

transform-based satellite image resolution enhance-

ment. IEEE Transactions on Geoscience and Remote

Sensing, 49(6):1997–2004.

Deng, G. (2010). A generalized unsharp masking algorithm.

IEEE transactions on Image Processing, 20(5):1249–

1261.

Ibrahim, H. and Kong, N. S. P. (2009). Image sharpen-

ing using sub-regions histogram equalization. IEEE

Transactions on Consumer Electronics, 55(2):891–

895.

Jain, R., Kasturi, R., Schunck, B. G., et al. (1995). Machine

vision, volume 5. McGraw-hill New York.

Kinoshita, Y. and Kiya, H. (2019). Convolutional neural

networks considering local and global features for im-

age enhancement. In 2019 IEEE International Confer-

ence on Image Processing (ICIP), pages 2110–2114.

IEEE.

Li, J., Feng, X., and Hua, Z. (2021). Low-light image en-

hancement via progressive-recursive network. IEEE

Transactions on Circuits and Systems for Video Tech-

nology, 31(11):4227–4240.

Mallat, S. G. (1989). A theory for multiresolution signal de-

composition: the wavelet representation. IEEE trans-

actions on pattern analysis and machine intelligence,

11(7):674–693.

Mittal, A., Moorthy, A. K., and Bovik, A. C. (2012).

No-reference image quality assessment in the spatial

domain. IEEE Transactions on image processing,

21(12):4695–4708.

Orhei, C. (2022). Urban Landmark Detection Using Computer Vision. PhD thesis, Universitatea Politehnica Timișoara. Politehnica Publishing House, "PhD theses of UPT", series 7: "Electronic and Telecommunication Engineering", ISBN 978-606-35-0513-3.

Orhei, C., Bogdan, V., and Bonchiș, C. (2020). Edge map response of dilated and reconstructed classical filters. In 2020 22nd International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), pages 187–194. IEEE.

Orhei, C., Bogdan, V., Bonchiș, C., and Vasiu, R. (2021a). Dilated filters for edge-detection algorithms. Applied Sciences, 11(22):10716.

Orhei, C. and Vasiu, R. (2022). Image sharpening using di-

lated filters. In 2022 IEEE 16th International Sympo-

sium on Applied Computational Intelligence and In-

formatics (SACI), pages 000117–000122.

Orhei, C. and Vasiu, R. (2023). An analysis of extended and

dilated filters in sharpening algorithms. IEEE Access,

11:81449–81465.

Orhei, C., Vert, S., Mocofan, M., and Vasiu, R. (2021b).

End-to-end computer vision framework: An open-

source platform for research and education. Sensors,

21(11):3691.

Orhei, C., Vert, S., Mocofan, M., and Vasiu, R. (2021c).

TMBuD: A dataset for urban scene building detection.

In International Conference on Information and Soft-

ware Technologies, pages 251–262. Springer.

Papamarkou, I., Papamarkos, N., and Theochari, S. (2014).

A novel image sharpening technique based on 2d-dwt

and image fusion. In 17th International Conference

on Information Fusion (FUSION), pages 1–8. IEEE.

Polesel, A., Ramponi, G., and Mathews, V. J. (2000). Im-

age enhancement via adaptive unsharp masking. IEEE

transactions on image processing, 9(3):505–510.

Qi, Y., Yang, Z., Sun, W., Lou, M., Lian, J., Zhao, W., Deng,

X., and Ma, Y. (2021). A comprehensive overview of

image enhancement techniques. Archives of Compu-

tational Methods in Engineering, pages 1–25.

Ramponi, G. and Polesel, A. (1998). Rational unsharp

masking technique. Journal of Electronic Imaging,

7(2):333–338.

Somal, S. (2020). Image enhancement using local and

global histogram equalization technique and their

comparison. In First International Conference on Sus-

tainable Technologies for Computational Intelligence,

pages 739–753. Springer.

Zafeiridis, P., Papamarkos, N., Goumas, S., and Seimenis,

I. (2016). A new sharpening technique for medical

images using wavelets and image fusion. Journal of

Engineering Science & Technology Review, 9(3).
