DKCDF: Dual-Kernel CNN with Dual Feature Fusion for Lung Cancer Detection

Wariyo G. Arero¹ᵃ, Yaqin Zhao¹ᵇ, Longwen Wu¹ᶜ and Yi Wang²

¹ School of Electronics and Information Engineering, Harbin Institute of Technology, Harbin, China

² Fushan Environmental Monitoring Center, Yantai, China

Keywords: Multi-Feature Fusion, CNN, Lung Cancer, HOG, LBP.

Abstract: Lung cancer is one of the main causes of cancer-related deaths worldwide. Early diagnosis is essential for improving patient outcomes and lowering mortality rates. Deep learning-based approaches have recently shown promising results in medical image analysis applications such as lung cancer identification. To improve lung cancer detection, this paper proposes a method that combines a dual-kernel convolutional neural network (DKC) with dual-feature fusion of Histogram of Oriented Gradients (HOG) and Local Binary Pattern (LBP) features. Convolutional neural networks are effective at extracting and detecting features. However, CNN features are built on low-level features from the first convolution layer, which may capture local features only partially and lose crucial details such as edges and contours. HOG is effective at describing the shape of objects, because the distribution of local intensity gradients or edge directions characterizes an object's shape and local appearance. LBP captures local structure and spatial texture information. Because lung CT images contain bone, air, blood, water and other substances that make them noisy, we adopt HOG and LBP feature fusion for lung cancer detection.

1 INTRODUCTION

Lung cancer is a primary global health concern, and early identification can significantly improve patients' prognosis. Traditional lung cancer screening relies on the manual analysis of medical images, which can be time-consuming and prone to human error. Therefore, it is crucial to create automated and reliable lung cancer detection technologies. In recent years, the convergence of computer vision and medical imaging has become a promising frontier in the pursuit of more accurate and efficient diagnostic tools (Han et al., 2019; Liang et al., 2023). Within this realm, combining dual-kernel techniques with features such as Histogram of Oriented Gradients (HOG) and Local Binary Patterns (LBP) has shown significant promise for raising the sensitivity and specificity of lung cancer detection systems. In this study, we introduce a dual-kernel CNN-based method that improves lung cancer detection by combining HOG and LBP features.

a https://orcid.org/0009-0001-9074-4948

b https://orcid.org/0000-0002-0167-0597

c https://orcid.org/0000-0002-6914-6695

Cancer is characterized by the uncontrolled growth of cells in the body; lung cancer specifically involves the formation of malignant cells within the lungs. Especially in developing nations, it is the most prevalent cancer among both men and women, and it is the second most frequently diagnosed cancer. Smoking, exposure to air pollution and insufficient nutrition are believed to be the main contributors to lung cancer. Globally, the number of lung cancer cases and deaths has grown considerably (Bade & Cruz, 2020). Each year, the American Cancer Society estimates new cancer cases and deaths in the United States by compiling the latest data on population-based cancer occurrence and outcomes. This information is derived from incidence data gathered by central cancer registries and mortality data collected by the National Center for Health Statistics. For 2023, 1,958,310 new cancer cases and 609,820 cancer-related deaths were projected in the United States (Siegel, Miller, Wagle, & Jemal, 2023).

Arero, W., Zhao, Y., Wu, L. and Wang, Y.

DKCDF: Dual-Kernel CNN with Dual Feature Fusion for Lung Cancer Detection.

DOI: 10.5220/0012406100003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 1, pages 54-64

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

As scientists explore the complexities of medical imaging, the need for advanced algorithms capable of discerning meaningful patterns in intricate datasets has surged (Ma, Wan, Hao, Cai, & Liu, 2023). The dual-kernel approach, a robust concept in machine learning, uses multiple kernels to capture different facets of the data representation. This paper applies the dual-kernel methodology to lung cancer detection, seeking to harness the complementary information contained in different feature spaces.

A critical element of our proposed approach is the fusion of two distinct descriptors, HOG and LBP. The HOG descriptor captures the local distribution of gradient orientations, emphasizing the gradient information that is crucial for delineating structural nuances in medical images. LBP, known for encoding local texture patterns, contributes a complementary layer of information that enriches the overall feature representation.

The rationale behind this fusion strategy is that different feature descriptors may highlight different aspects of the underlying pathology. By combining the strengths of HOG and LBP within a dual-kernel framework, we aim to construct a more comprehensive and discriminative feature set, thereby improving the robustness of our lung cancer detection system.

Additionally, lung cancer is typically diagnosed at an advanced stage, when curative treatment is often no longer possible (Soerjomataram et al., 2023). To improve overall survival, detect lung cancer at its earliest stages while effective therapies are still viable, and reduce the side effects of systemic treatments, it is vital to develop diagnostic techniques that improve the accuracy of early diagnosis (Shah, Malik, Muhammad, Alourani, & Butt, 2023). Examining computed tomography (CT) images is one of the crucial steps in the pre-diagnosis of lung cancer. The pre-diagnosis that follows X-ray or CT scanning costs the radiologist considerable time and effort and demands a very high level of focus and proficiency. In particular, when interpretation relies heavily on prior expertise, less experienced radiologists have highly variable detection rates, which increases the rate of false-positive detections (S. Shen, Han, Aberle, Bui, & Hsu, 2019).

Low-dose helical computed tomography (LDCT) (Fang Lei, 2019) (Fedewa et al., 2021) is currently used for lung cancer screening (Jonas et al., 2021). To increase diagnostic accuracy in lung cancer classification, several efforts are under way to develop computer-aided diagnosis and detection systems, motivated by the need for trustworthy and impartial analysis. The purpose of this research is to determine whether an image is cancerous and to extract features for detection (Ani Brown Mary & Dejey, 2018).

The detection, segmentation, and classification of benign and malignant pulmonary nodules are the core topics of research on deep learning-based lung imaging. To enhance the performance of deep learning models, researchers mainly concentrate on designing new network architectures and loss functions. Several research groups have recently published reviews of deep learning approaches (Mandal & Vipparthi, 2021) (Hamedianfar, Mohamedou, Kangas, & Vauhkonen, 2022) (Higham & Higham, 2019). However, deep learning techniques advance quickly and numerous new approaches and applications appear every year, so this study addresses topics that earlier reviews did not cover.

To address these challenges, we combine HOG and LBP feature fusion with a dual-kernel CNN to enhance lung cancer identification. The primary contributions of our work are as follows:

1. We propose a dual-path CNN whose paths have different receptive fields (dual-kernel).

2. We fuse HOG and LBP features with the output of our dual-kernel CNN to supplement the edge, contour and spatial texture information of lung images.

3. We address class imbalance through data augmentation.

In the subsequent sections, we describe the technical foundations of our methodology, namely the dual kernels and the fusion of multiple features, and illustrate its potential through experimental results and comparative analyses. These results indicate that combining diverse features with dual-kernel processing improves the accuracy of lung cancer detection. In summary, this paper contributes to medical image analysis by showing the effectiveness of a dual-kernel framework combined with HOG and LBP feature fusion for improved lung cancer detection. Our study emphasizes the value of harnessing diverse information sources and shows how advanced machine learning techniques can contribute to early cancer diagnosis.

The remainder of this paper is organized as follows: Section 2 describes related work, Section 3 the method and dataset, Section 4 the experiments, and Section 5 the ablation study; Section 6 concludes the paper.

2 RELATED WORK

Image processing methods have long been studied for lung cancer detection (Gurcan et al., 2002). Neural networks and deep learning techniques have recently been adopted in medical imaging (Fakoor, Ladhak, Nazi, & Huber, 2013) (Greenspan, Van Ginneken, & Summers, 2016) (D. Shen, Wu, & Suk, 2017). A number of researchers (Cai et al., 2015) (Al-Absi, Belhaouari, & Sulaiman, 2014) (Gupta & Tiwari, 2014) (Penedo, Carreira, Mosquera, & Cabello, 1998) (Taher & Sammouda, 2011) (Kuruvilla & Gunavathi, 2014) have used machine learning and neural networks to categorize and diagnose lung cancer. Deep learning methods have not been used frequently to identify lung cancer because large datasets of medical images, particularly of lung cancer, are scarce. Shimizu et al. (Shimizu et al., 2016) use urine samples to identify lung cancer.

A literature search reveals a sizable number of studies that support the rapid detection of lung cancer. Wang and colleagues (Wang et al., 2018) proposed a CNN-based method to classify tissue as cancerous or non-cancerous. Because whole-slide images (WSIs) are typically on the order of a gigapixel, much smaller image patches extracted from WSIs are frequently used as input; this 2018 study used 300×300-pixel patches from lung adenocarcinoma (ADC) WSIs. The proposed model achieved a success rate of 89.8%.

Deng and Chen (DENG & CHEN, 2019) propose a pulmonary nodule detection technique based on a deep convolutional neural network that incorporates deep supervision of partial CNN layers. (S. Chen, Han, Lin, Zhao, & Kong, 2020) combine a balanced CNN with classic candidate detection to create a computer-aided detection (CADe) strategy. Masud et al. (Masud et al., 2020) propose an automatic CNN-based pulmonary nodule detection and classification system with only four convolutional layers.

Tong et al. (Tong, Li, Chen, Zhang, & Jiang, 2018) propose a pulmonary nodule segmentation algorithm based on an improved U-Net with an added residual network, which achieves a Dice similarity coefficient (DSC) of 73.6% for nodule segmentation.

(Guo et al., 2014) propose a lung cancer prediction system based on a convolutional neural network to overcome the problems of manual cancer prediction. In this procedure, CT scan images are collected and processed by neural network layers that automatically extract image features; deep learning then uses these features, learned from a large volume of images, to predict the features associated with cancer.

The system developed by these authors assists decision-making when analysing a patient's CT scan report. Lung nodules have also been predicted from CT scan images with the aid of convolutional neural networks (El-Baz et al., 2013). To classify lung cancer-related features as benign or malignant, images from the LIDC-IDRI database are fed into a stacked autoencoder (SAE), a convolutional neural network (CNN) and a deep neural network (DNN). The technique developed by the authors achieves an accuracy of up to 84.32%. Because most deep learning networks are limited to a fixed receptive field size, in our work we introduce a dual-kernel CNN that captures both local and global range dependencies. We also propose a HOG and LBP feature fusion mechanism to obtain more comprehensive lung features and improve the ability to describe and identify lung cancer images.

3 METHODS

3.1 Data Set and Pre-Processing

We use the Kaggle Data Science Bowl 2017 (KDSB, 2017) (Kaggle, 2017) database of medical images. The dataset includes 2101 images labelled 0 for patients without cancer and 1 for patients with cancer, stored in the Digital Imaging and Communications in Medicine (DICOM) format. In this dataset, 70% of the data are labelled 0 and the remaining 30% are labelled 1. Each patient's CT scan consists of a variable number of images (often 100–400; each image is a 2-D axial slice) with a resolution of 512×512 pixels. Nodules in this dataset are not labelled.

BIODEVICES 2024 - 17th International Conference on Biomedical Electronics and Devices

Because tumours lie within the lung tissue, the rest of the image consists of unimportant parts that must be removed through segmentation. These include bone, air, blood, water and other substances, which must be excluded because they add noise to the data and interfere with nodule learning. CT scans measure radiodensity in Hounsfield units (HU). Researchers employ various segmentation techniques to remove irrelevant data, including clustering (Rao, Pereira, & Srinivasan, 2016), k-means (Gurcan et al., 2002), watershed (Ronneberger, Fischer, & Brox, 2015) and thresholding (Alakwaa et al., 2017). In our work, we apply thresholding at -600 HU to our 2D images.

First, the pixel values of each CT scan are converted into Hounsfield units (HU), a quantitative measure of the radiodensity of substances in lung CT images. For example, lung tissue, bone, blood, kidney and water have radiodensities of -500 HU, 700 HU, 0 HU, 30 HU and 0 HU, respectively. After this conversion, each CT scan comprises multiple slices whose pixel values are expressed in HU and fall within the range [-1024, 3071].
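The HU conversion can be sketched as below. The paper does not give the formula; we assume the standard DICOM rescale, HU = raw × slope + intercept, where slope and intercept would normally be read from the Rescale Slope and Rescale Intercept attributes of each slice (for example `ds.RescaleSlope` and `ds.RescaleIntercept` with pydicom). The function name is hypothetical, and clipping to [-1024, 3071] simply enforces the range stated above.

```python
import numpy as np

HU_MIN, HU_MAX = -1024, 3071  # HU range stated in Section 3.1


def to_hounsfield(raw: np.ndarray, slope: float, intercept: float) -> np.ndarray:
    """Map stored CT pixel values to Hounsfield units: HU = raw * slope + intercept.

    With pydicom, `raw`, `slope` and `intercept` would typically come from
    ds.pixel_array, ds.RescaleSlope and ds.RescaleIntercept (assumed source).
    """
    hu = raw.astype(np.float32) * np.float32(slope) + np.float32(intercept)
    # Keep values inside the [-1024, 3071] range used throughout the paper.
    return np.clip(hu, HU_MIN, HU_MAX)


# Hypothetical example: a scanner storing HU + 1024 (slope 1, intercept -1024).
raw = np.array([[0, 524], [1024, 5000]], dtype=np.int16)
print(to_hounsfield(raw, slope=1.0, intercept=-1024.0))
```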

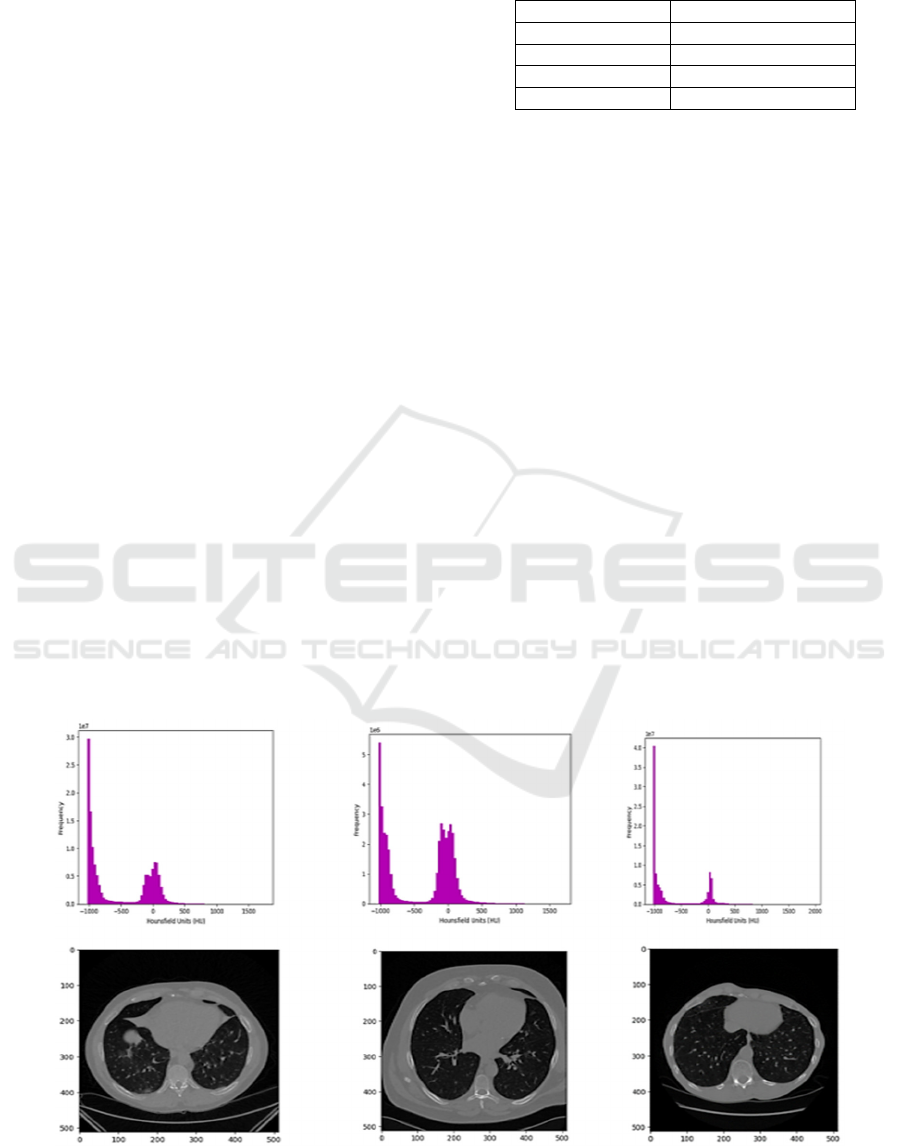

Table 1: Typical radiodensities in HU of various substances in a CT scan (Alakwaa, Nassef, & Badr, 2017).

| Substance | Radiodensity (HU) |
|---|---|
| Air | -1000 |
| Lung tissue | -500 |
| Water and blood | 0 |
| Bone | 700 |

The next step is the removal of specific tissues, which researchers commonly address with the methods described above; in our study, we use thresholding. To do so, a Gaussian filter is applied, pixel values are normalized to the [0, 1] range, and a threshold of -600 HU is used. Figure 2 depicts a CT scan slice of a patient alongside its thresholding-based segmentation.
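The smoothing, thresholding and normalization steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the Gaussian width (sigma = 1), truncation at 3 sigma, edge padding, and the convention that pixels below -600 HU form the mask are our choices, and all function names are hypothetical. A library filter such as `scipy.ndimage.gaussian_filter` would normally replace the hand-written blur; note also that a bare threshold keeps air outside the body, which practical pipelines usually remove with extra steps omitted here.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian truncated at 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_smooth(img: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian blur with edge padding (numpy-only stand-in for a library filter)."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(np.float64), r, mode="edge")
    # Filter along rows, then columns; 'valid' mode trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def lung_mask(hu: np.ndarray, threshold: float = -600.0, sigma: float = 1.0) -> np.ndarray:
    """True where the smoothed HU value lies below the threshold (assumed lung/air side)."""
    return gaussian_smooth(hu, sigma) < threshold


def normalize01(hu: np.ndarray, lo: float = -1024.0, hi: float = 3071.0) -> np.ndarray:
    """Linearly rescale HU values to [0, 1], clipping anything outside [lo, hi]."""
    return np.clip((hu - lo) / (hi - lo), 0.0, 1.0)
```

Smoothing before thresholding suppresses isolated noisy pixels, so the resulting mask is less speckled than one obtained by thresholding raw HU values.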

To feed the slices to the proposed network, we convert the HU values of each slice to UINT8; that is, the raw data in [-1024, 3071] are linearly mapped to [0, 255]. The mask used for lung tissue segmentation is then multiplied with these values, and pixels outside the mask are set to 170, representing a standard tissue luminance.
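A small NumPy sketch of this UINT8 conversion and masking step follows. Rounding to the nearest integer is our assumption (the paper only says the mapping is linear), and the function names are hypothetical. Selecting with `np.where` gives the same result as multiplying by a 0/1 mask and then overwriting the outside pixels with 170.

```python
import numpy as np

HU_MIN, HU_MAX = -1024.0, 3071.0
FILL_VALUE = 170  # "standard tissue luminance" used outside the lung mask


def hu_to_uint8(hu: np.ndarray) -> np.ndarray:
    """Linearly map [-1024, 3071] HU onto [0, 255] and round to UINT8."""
    scaled = (np.clip(hu, HU_MIN, HU_MAX) - HU_MIN) / (HU_MAX - HU_MIN) * 255.0
    return np.round(scaled).astype(np.uint8)


def apply_lung_mask(img_u8: np.ndarray, mask: np.ndarray, fill: int = FILL_VALUE) -> np.ndarray:
    """Keep pixels inside the boolean mask and set every other pixel to `fill`."""
    return np.where(mask, img_u8, np.uint8(fill)).astype(np.uint8)


img = hu_to_uint8(np.array([[-1024.0, 0.0], [3071.0, -500.0]]))
mask = np.array([[True, False], [False, True]])
print(apply_lung_mask(img, mask))
```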

We used thresholding to segment the CT scan images. Table 1 lists the typical radiodensities of the unnecessary parts of a CT scan; based on these values, pixels near -1000 HU and pixels greater than -320 HU are masked. Figure 2 shows 3D plots of resampled images of sample patients thresholded at -600 HU.

Figure 1: Histograms of pixel values in HU (Hounsfield units) for patient 601 at slice 180, patient 801 at slice 70 and patient 1001 at slice 120, each with the corresponding 2D axial slice.


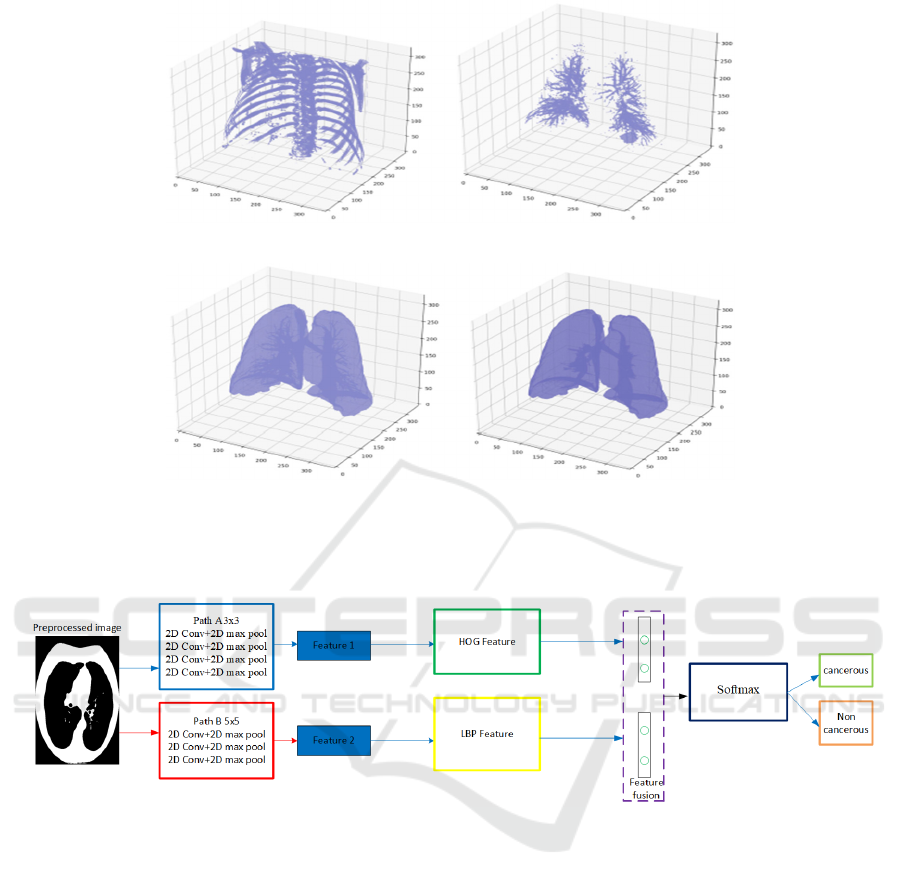

Figure 2: (a) Resampled 3D image thresholded at 600 HU, uncovering the bone segment; (b) resampled sample patient including the lung bronchioles; (c) resampled sample patient with the air mask applied; (d) resampled sample patient with the bronchioles included in the final mask.

Figure 3: Our proposed dual-kernel with dual-feature fusion model.

3.2 Dual-Kernel CNN

Most existing models use a single receptive field within a single path, which limits how well they capture both neighboring-pixel detail and high-level information during feature extraction. To address this challenge, we propose a dual-path CNN with different receptive field sizes, combined with a dual-feature fusion mechanism. We call the paths path A and path B, with receptive field sizes of 3×3 and 5×5, respectively. The output of the fourth convolution layer of path A is concatenated with the output of the first convolution layer of path B. Path A has four convolution layers and four max pooling layers, and path B has three convolution layers and three max pooling layers. We restrict our study to these two kernel sizes and two paths in order to address the problems described above. Table 2 lists the model parameters. The proposed model also incorporates a feature fusion strategy. Figure 3 shows the network of the proposed model.

3.3 Feature Fusion

The objective of feature extraction is typically to

portray the raw data as a condensed set of features that

more accurately captures its essential characteristics

and attributes. By doing so, we can lower the original

input's dimensionality and train pattern recognition

and classification algorithms using the new features

as input. In our study, we make use of two types of

features, HOG and LBP, which we will go through

individually below.
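The paper states that HOG and LBP features are fused with the output of the dual-kernel CNN but does not specify the fusion operator. A common choice, assumed here, is to flatten each feature block, scale it to unit L2 norm so that no block dominates by magnitude, and concatenate the results into one vector for the classification layers. The sketch below illustrates that assumed rule; the function names are hypothetical.

```python
import numpy as np


def l2_normalize(v: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Flatten a feature block and scale it to unit L2 norm."""
    v = np.asarray(v, dtype=np.float64).ravel()
    return v / (np.linalg.norm(v) + eps)


def fuse_features(cnn_map: np.ndarray, hog_vec: np.ndarray, lbp_hist: np.ndarray) -> np.ndarray:
    """Concatenate CNN, HOG and LBP features after per-block normalization (assumed fusion rule)."""
    return np.concatenate([l2_normalize(cnn_map), l2_normalize(hog_vec), l2_normalize(lbp_hist)])


# Hypothetical sizes: a 32-channel 4x4 CNN map, a 36-D HOG vector, a 256-bin LBP histogram.
fused = fuse_features(np.random.rand(32, 4, 4), np.random.rand(36), np.random.rand(256))
print(fused.shape)
```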


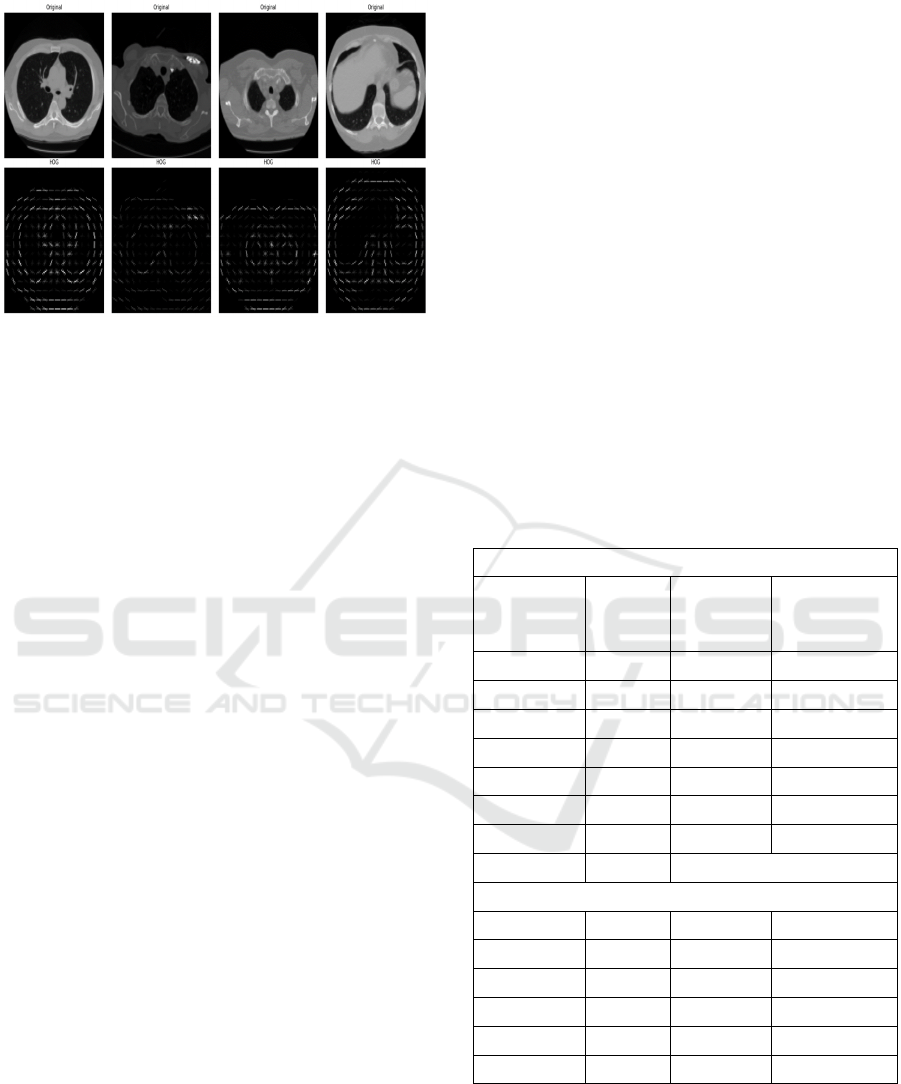

3.3.1 Histogram of Oriented Gradients (HOG) Feature Fusion

The HOG descriptor highlights an object's structure or shape, which distinguishes it from the edge features used in image feature extraction. Whereas edge features only determine whether a pixel is part of an edge, HOG also provides the edge direction. It extracts the gradients and orientations (magnitude and direction) of edges by dividing the image into smaller regions and computing the gradient and orientation within each region. HOG then builds a separate histogram for each region; the name "Histogram of Oriented Gradients" refers to these histograms of pixel gradients and orientations. The HOG approach (Dalal & Triggs, 2005) evaluates locally normalized histograms of image gradient orientations in a dense grid, characterizing an object's shape and local appearance through the distribution of edge directions or local intensity gradients. This makes it valuable for describing lung characteristics, particularly for identifying lung cancer, because it provides orientation information about the lung boundary and texture details of the surrounding area. Because the lung contains extraneous elements such as air, bone, tissue and water in its low-attenuation region, it benefits from the detailed information provided by HOG feature extraction.

Because the lung appears as a low-attenuation region filled with such extraneous components, the main information available is the orientation of the lung boundary and the texture of the surrounding area. Therefore, in this study, we favor HOG features for identifying lung cancer. Figure 5 shows a sample lung image with HOG features.

$$
\begin{aligned}
G_x(r,c) &= I(r,c+1) - I(r,c-1)\\
G_y(r,c) &= I(r-1,c) - I(r+1,c)
\end{aligned}
\tag{1}
$$

After calculating $G_x$ and $G_y$, the magnitude and angle of each pixel are calculated using the formulae below.

$$
\text{Magnitude}(\mu) = \sqrt{G_x^2 + G_y^2}, \qquad
\text{Angle}(\theta) = \tan^{-1}\!\left(\frac{G_y}{G_x}\right)
\tag{2}
$$
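Eqs. (1)–(2) and the per-region histogramming described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the 9 orientation bins over unsigned angles [0°, 180°), hard (non-interpolated) binning and zero gradients on the image border are our assumptions, and `np.arctan2` stands in for $\tan^{-1}(G_y/G_x)$ so that $G_x = 0$ does not cause a division by zero. Block normalization, the other half of HOG, is omitted, and the function names are hypothetical.

```python
import numpy as np


def gradients(img: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Central differences of Eq. (1); border rows/columns are left at zero."""
    I = img.astype(np.float64)
    gx = np.zeros_like(I)
    gy = np.zeros_like(I)
    gx[:, 1:-1] = I[:, 2:] - I[:, :-2]  # G_x(r, c) = I(r, c+1) - I(r, c-1)
    gy[1:-1, :] = I[:-2, :] - I[2:, :]  # G_y(r, c) = I(r-1, c) - I(r+1, c)
    return gx, gy


def magnitude_angle(gx: np.ndarray, gy: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Eq. (2): gradient magnitude and unsigned orientation in degrees, in [0, 180)."""
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx)) % 180.0
    return mag, ang


def cell_histogram(mag: np.ndarray, ang: np.ndarray, n_bins: int = 9) -> np.ndarray:
    """Magnitude-weighted orientation histogram of one cell (hard binning, an assumption)."""
    bin_width = 180.0 / n_bins
    idx = np.minimum((ang // bin_width).astype(int), n_bins - 1)
    return np.bincount(idx.ravel(), weights=mag.ravel(), minlength=n_bins)


# A vertical step edge: all gradient energy should fall into the 0-degree bin.
cell = np.zeros((5, 5))
cell[:, 3:] = 10.0
print(cell_histogram(*magnitude_angle(*gradients(cell))))
```

A full HOG descriptor would compute such histograms for every cell of a dense grid, normalize them over overlapping blocks, and concatenate the results.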

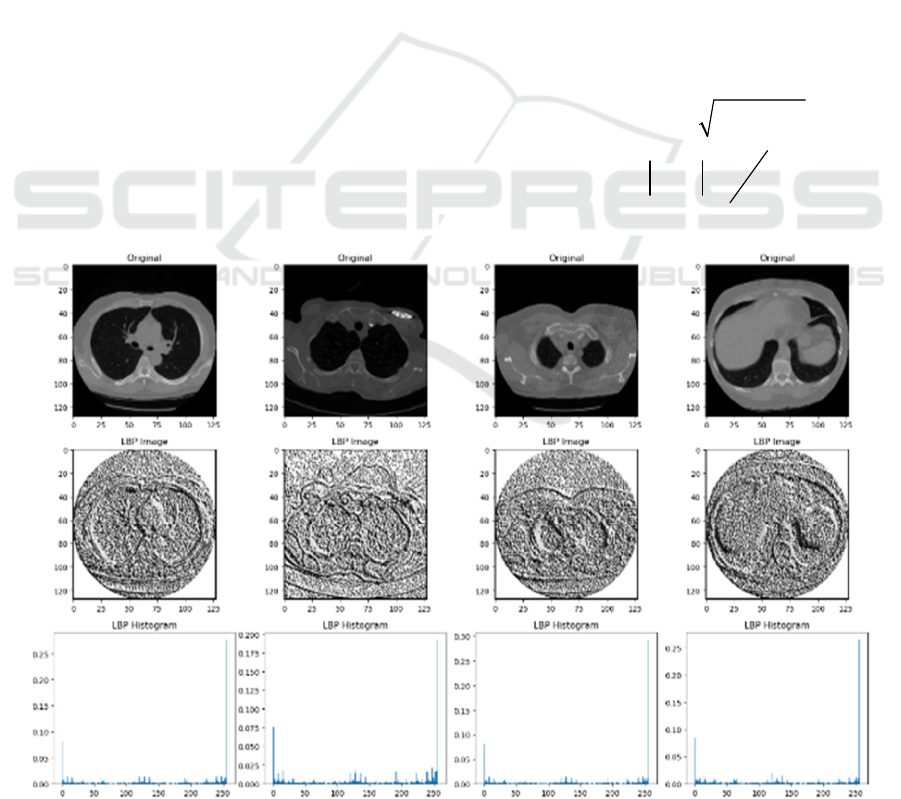

Figure 4: A sample lung image with LBP features and its histogram.


Figure 5: A sample lung image with HOG feature.

3.3.2 Local Binary Pattern (LBP)

Two-dimensional texture analysis is crucial in biomedical image analysis. LBP (Ojala, Pietikäinen, & Harwood, 1996) is a practical and multiresolution method for processing grayscale images. It is a grayscale-invariant texture descriptor built on nonparametric sample discrimination and local binary patterns. Lung imaging signs carry two kinds of distinguishing information: (1) edge orientation and grayscale gradient information, and (2) background texture. Because the lung is packed with extraneous components such as air, bone, tissue, water and other substances, its edge information is only auxiliary and generic for class differentiation. The background information of a lung cancer imaging sign is crucial for its recognition, which makes LBP a helpful texture descriptor for lung cancer diagnosis.

$$
LBP_{P,R}(x_c, y_c) = \sum_{p=0}^{P-1} s(g_p - g_c)\,2^{p}
\tag{3}
$$

$$
s(x) =
\begin{cases}
1, & x \ge 0\\
0, & x < 0
\end{cases}
\tag{4}
$$

where $x_c$ and $y_c$ are the coordinates of the center pixel, $p$ indexes the circular sampling points, $P$ is the number of sampling points (neighborhood pixels), $R$ is the radius of the sampling circle, $g_p$ is the grayscale value of sampling point $p$, $g_c$ is the grayscale value of the center pixel, and $s$ is the sign (threshold) function. For classification, the LBP values are represented as a histogram, as shown in Figure 4.
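A minimal NumPy sketch of Eqs. (3)–(4) with $P = 8$ is given below. For simplicity it samples the eight pixels of the 3×3 neighborhood directly (the basic operator described next) rather than interpolated points on a circle of radius $R$, and the clockwise neighbor order starting at the top-left pixel is an assumption; any fixed order yields a valid LBP code. Function names are hypothetical, and the 256-bin normalized histogram is one common way to turn the codes into a feature vector.

```python
import numpy as np

# Neighbor offsets (dr, dc) for P = 8, listed clockwise from the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_8_1(img: np.ndarray) -> np.ndarray:
    """LBP codes of all interior pixels: sum over p of s(g_p - g_c) * 2^p."""
    I = img.astype(np.int64)
    h, w = I.shape
    center = I[1:-1, 1:-1]
    codes = np.zeros_like(center)
    for p, (dr, dc) in enumerate(OFFSETS):
        neighbor = I[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes += (neighbor >= center).astype(np.int64) << p  # s(g_p - g_c) * 2^p
    return codes


def lbp_histogram(codes: np.ndarray, n_bins: int = 256) -> np.ndarray:
    """Normalized histogram of LBP codes, used as the texture feature vector."""
    hist = np.bincount(codes.ravel(), minlength=n_bins).astype(np.float64)
    return hist / hist.sum()


patch = np.array([[5, 9, 1], [4, 6, 7], [2, 3, 8]])
print(lbp_8_1(patch))
```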

LBP (Ojala et al., 1996), a powerful tool for characterizing texture properties, has been used extensively in applications including face recognition (Ahonen, Hadid, & Pietikainen, 2006) and medical image analysis (Tian, Fu, & Feng, 2008). The original LBP operator was introduced by Ojala et al. (Ojala et al., 1996). LBP is a straightforward approach that creates binary codes by comparing each neighboring pixel, for instance the eight neighbors in a 3×3 window, with the value of the central pixel. If a neighboring pixel's value is less than the center pixel's, the binary code is 0; otherwise, it is 1. The LBP code is obtained by multiplying the binary codes by their respective weights and summing the results, as given in Eq. (5):

$$
LBP_{P,R}(x_c, y_c) = \sum_{i=0}^{P-1} S(g_i - g_c)\,2^{i}
\tag{5}
$$

$$
S(g_i - g_c) =
\begin{cases}
1, & \text{if } g_i \ge g_c\\
0, & \text{otherwise}
\end{cases}
\tag{6}
$$
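As a worked example of Eqs. (5)–(6), consider a hypothetical 3×3 patch with center value $g_c = 5$, reading the eight neighbors clockwise from the top-left pixel (an ordering convention we assume; any fixed order works). Note that $g_3 = 5$ equals the center value and therefore contributes a 1, because of the $\ge$ in Eq. (6).

$$
\begin{bmatrix} 3 & 7 & 2\\ 8 & \mathbf{5} & 5\\ 1 & 6 & 4 \end{bmatrix}
\;\Rightarrow\;
(g_0,\dots,g_7) = (3,\,7,\,2,\,5,\,4,\,6,\,1,\,8)
$$

$$
\bigl(S(g_i - g_c)\bigr)_{i=0}^{7} = (0,\,1,\,0,\,1,\,0,\,1,\,0,\,1)
$$

$$
LBP_{8,1} = 2^{1} + 2^{3} + 2^{5} + 2^{7} = 2 + 8 + 32 + 128 = 170
$$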

Table 2: Parameters of our multi-kernel model.

| Path | Layer | Window size / #weights | Activation | Input |
|---|---|---|---|---|
| A | Conv | 3×3/32 | ReLU | 128×128×1 |
| A | Max pool | 2×2 | | 32×126×126 |
| A | Conv | 3×3/32 | ReLU | 32×125×125 |
| A | Max pool | 2×2 | | 32×123×123 |
| A | Conv | 3×3/32 | ReLU | 32×122×122 |
| A | Max pool | 2×2 | | 32×120×120 |
| A | Conv | 3×3/32 | ReLU | 32×119×119 |
| A | Max pool | 4×4 | | 32×115×115 |
| B | Conv | 5×5/32 | ReLU | 128×128×1 |
| B | Max pool | 2×2 | | 32×124×124 |
| B | Conv | 5×5/32 | ReLU | 32×123×123 |
| B | Max pool | 2×2 | | 32×119×119 |
| B | Conv | 5×5/32 | ReLU | 32×118×118 |
| B | Max pool | 2×2 | | 32×115×115 |
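The Input column of Table 2 can be checked with the usual output-size formula, $\lfloor (n + 2p - k)/s \rfloor + 1$. The sketch below assumes stride 1 (as stated in Section 4) and no padding (inferred from the shrinking sizes), with window sizes read from Table 2; the helper names are hypothetical. Under these assumptions the computed sizes match Table 2 for every layer except the input to each path's final pooling layer, where the formula gives 117 (path A) and 114 (path B) against the listed 115, a difference that may stem from details not stated in the paper.

```python
def output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_path(input_size: int, kernels: list[int]) -> list[int]:
    """Input size of every layer in a path, followed by the final output size."""
    sizes = [input_size]
    for k in kernels:
        sizes.append(output_size(sizes[-1], k))
    return sizes


# Window sizes in layer order (conv, pool, conv, pool, ...) as read from Table 2.
PATH_A = [3, 2, 3, 2, 3, 2, 3, 4]
PATH_B = [5, 2, 5, 2, 5, 2]

print("Path A:", trace_path(128, PATH_A))
print("Path B:", trace_path(128, PATH_B))
```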

4 EXPERIMENTS

We implement our model in Keras with the TensorFlow backend, which supports graphics processing unit (GPU) acceleration and thus speeds up the training of deep learning algorithms. Table 2 lists the parameters of our model, such as kernel sizes and the convolution and pooling layers; these are the settings with which our model achieved the best performance on the validation set. The training hyperparameters, namely initial momentum, final momentum, learning rate and weight decay, were set to 0.5, 0.8, 0.001 and 0.001, respectively. A stride of 1 was used for the convolution and max-pooling layers to maintain per-pixel precision. The filters of all layers were randomly initialized from a uniform distribution over (-0.005, 0.005), except the softmax layer, whose parameters were initialized to the label's log. The network biases were initialized to zero. Table 3 shows our results.
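The training settings above correspond to SGD with momentum and L2 weight decay. The paper gives only the initial and final momentum values, so the linear ramp over `ramp_epochs` epochs below is an assumption, as are all function names. This framework-free sketch shows one update rule and the stated uniform initialization; it is not the authors' Keras configuration.

```python
import numpy as np

LEARNING_RATE = 0.001
WEIGHT_DECAY = 0.001
MOMENTUM_START, MOMENTUM_END = 0.5, 0.8


def momentum_at(epoch: int, ramp_epochs: int = 10) -> float:
    """Ramp momentum linearly from 0.5 to 0.8 over ramp_epochs (ramp shape and length assumed)."""
    t = min(epoch / ramp_epochs, 1.0)
    return MOMENTUM_START + t * (MOMENTUM_END - MOMENTUM_START)


def init_weights(shape: tuple[int, ...], rng: np.random.Generator) -> np.ndarray:
    """Uniform(-0.005, 0.005) filter initialization as described; biases would start at zero."""
    return rng.uniform(-0.005, 0.005, size=shape)


def sgd_momentum_step(w: np.ndarray, grad: np.ndarray, velocity: np.ndarray,
                      momentum: float) -> tuple[np.ndarray, np.ndarray]:
    """One SGD step with momentum, folding L2 weight decay into the gradient."""
    velocity = momentum * velocity - LEARNING_RATE * (grad + WEIGHT_DECAY * w)
    return w + velocity, velocity


rng = np.random.default_rng(0)
w = init_weights((3, 3, 1, 32), rng)  # one 3x3 conv layer with 32 filters
v = np.zeros_like(w)
w, v = sgd_momentum_step(w, np.ones_like(w), v, momentum_at(epoch=0))
```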

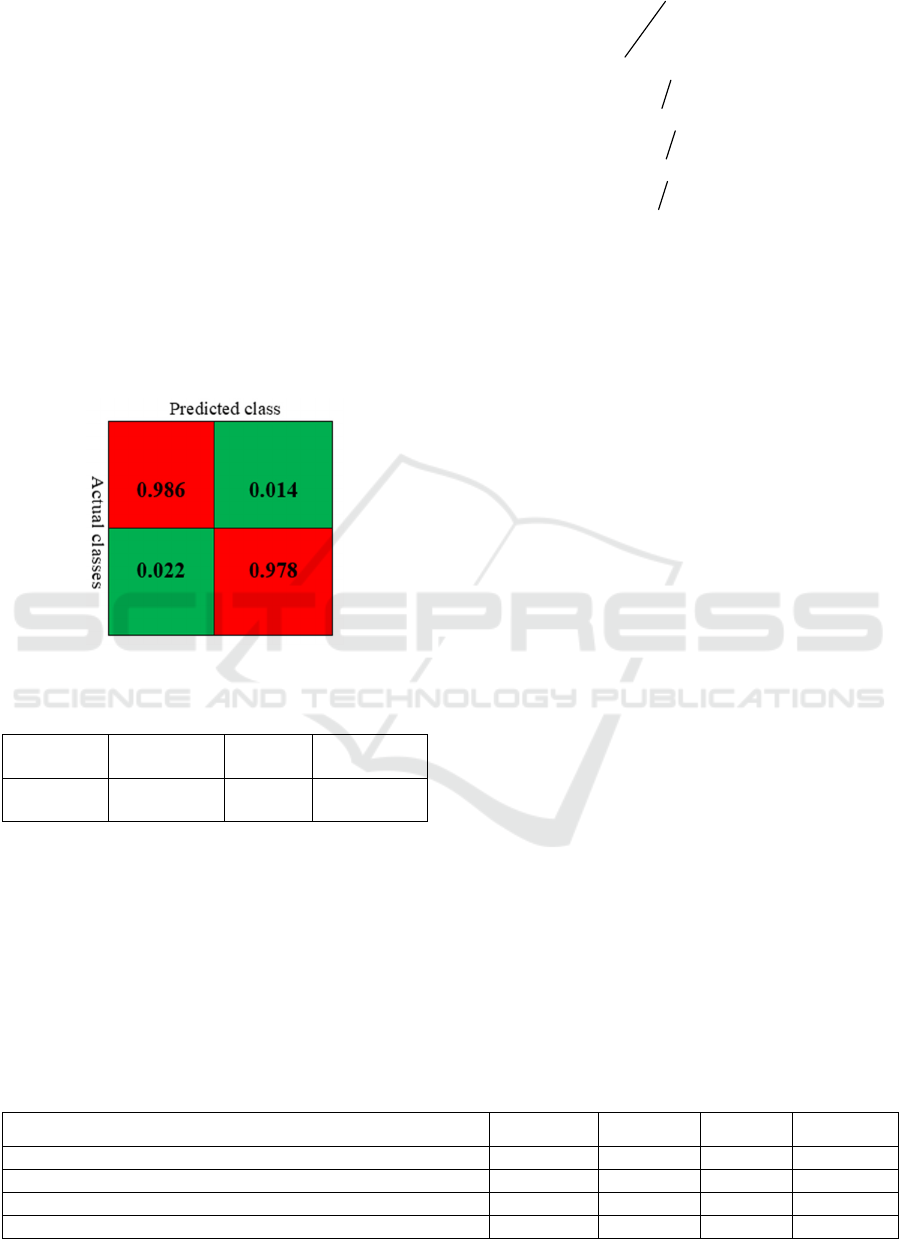

Figure 6: Confusion matrix of our model.

Table 3: Results achieved by our model.

| Accuracy | Precision | Recall | Specificity |
|---|---|---|---|
| 98.2% | 98.6% | 97.8% | 98.5% |

4.1 Performance Evaluation

Researchers have proposed a number of performance metrics for medical image identification, of which accuracy, recall and specificity are among the most frequently used. We use accuracy (A), recall (R), precision (P) and specificity (S) to gauge how well our model performs.

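$$
A = \frac{t_p + t_n}{t_p + f_p + f_n + t_n}
\tag{7}
$$

$$
S = \frac{t_n}{t_n + f_p}
\tag{8}
$$

$$
R = \frac{t_p}{t_p + f_n}
\tag{9}
$$

$$
P = \frac{t_p}{t_p + f_p}
\tag{10}
$$

where $t_p$, $t_n$, $f_p$ and $f_n$ denote the numbers of true positives, true negatives, false positives and false negatives, respectively.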
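Eqs. (7)–(10) translate directly into code. The function name is hypothetical, and the counts in the usage line are made up purely to illustrate the arithmetic; they are not the paper's confusion matrix.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Accuracy, specificity, recall and precision from confusion-matrix counts (Eqs. 7-10)."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),  # Eq. (7)
        "specificity": tn / (tn + fp),                # Eq. (8)
        "recall": tp / (tp + fn),                     # Eq. (9)
        "precision": tp / (tp + fp),                  # Eq. (10)
    }


# Hypothetical counts, for illustration only.
print(classification_metrics(tp=90, tn=80, fp=10, fn=20))
```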

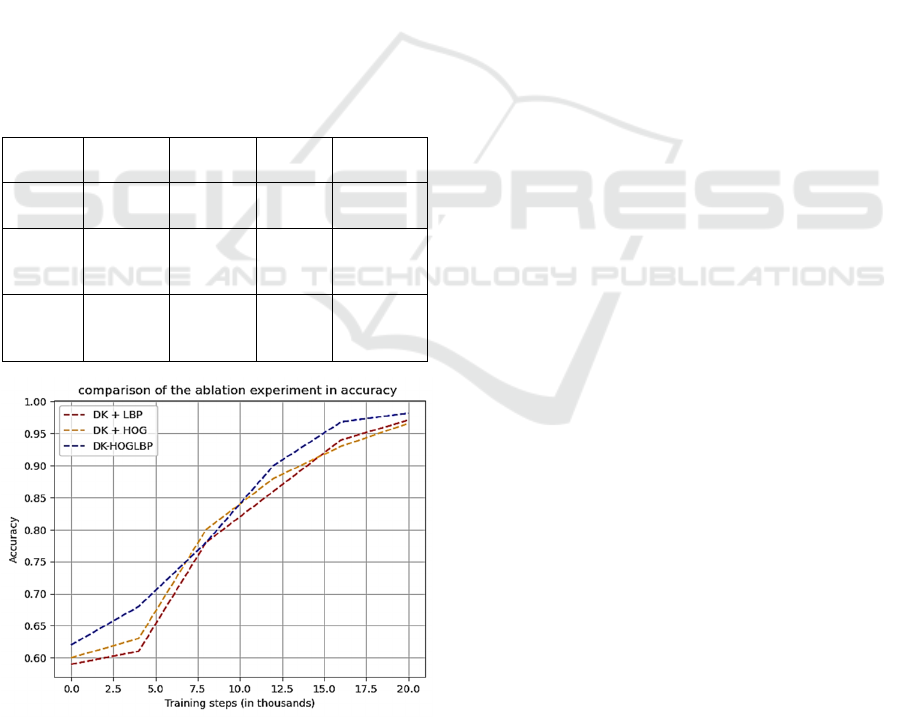

5 ABLATION STUDY

We conduct an ablation study on our proposed lung cancer detection model, which uses a dual-kernel technique with the fusion of two feature types, Histogram of Oriented Gradients (HOG) and Local Binary Patterns (LBP), to examine the influence of its individual components. The primary goal of this study is to understand how each feature type contributes to the performance of the entire model.

Our foundational model for lung cancer detection, the dual-kernel with dual-feature fusion, combines both HOG and LBP features. Keeping all other model elements and hyperparameters fixed, we then systematically assess how well the model performs when one of these feature types is removed. We consider three experimental conditions:

1. Dual kernel with HOG and LBP (DKCDF): the combination of dual kernels and both feature types, i.e., our entire model architecture.

2. Dual kernel with LBP only (DKC-LBP): we use only LBP features for classification and exclude HOG features from the model.

3. Dual kernel with HOG only (DKC-HOG): we use only HOG features for classification and omit LBP features from the model.

With both HOG and LBP features included, the dual-kernel with dual-feature fusion achieves the highest accuracy, 98.2%. This demonstrates that the dual-kernel technique with feature fusion effectively improves the model's performance in detecting lung cancer. When HOG features are removed and only LBP features are used, the accuracy falls to 96.7%, which shows that HOG features strongly influence the model's capacity to identify lung cancer. This accuracy decline (1.5 percentage points) highlights the significance of HOG features in our model.

Table 4: Comparison of our method with other methods.

| Methods | Accuracy | Precision | Recall | Specificity |
|---|---|---|---|---|
| DCLCCST (Y. Chen et al., 2022) | 94.7% | 95.6% | 93.9% | 95.5% |
| MLBLCDMIF (Nazir, AlQahtani, Jadoon, & Dahshan, 2023) | 97.1% | 97.8% | 96.4% | 97.7% |
| GLCDGM (Salama, Shokry, & Aly, 2022) | 97.6% | 98.4% | 96.8% | 98.3% |
| DKCDF | 98.2% | 98.6% | 97.8% | 98.5% |

Conversely, when we exclude LBP features and use only HOG features, the accuracy stays high at 97.1%. Although this setup outperforms using only LBP features, it still falls short of the full dual-kernel with dual-feature fusion model, which shows that LBP features provide additional information, albeit to a lesser extent than HOG features. Our ablation study thus indicates that both HOG and LBP features improve the overall accuracy of our lung cancer detection model. HOG features are the more crucial of the two, since removing them causes a larger accuracy loss than removing LBP features. Our results therefore highlight the significance of feature fusion within the dual-kernel framework for improving deep learning models for lung cancer detection.

Table 5: Results of the ablation experiment.

| Methods | Accuracy | Precision | Recall | Specificity |
|---|---|---|---|---|
| DKCDF | 98.2% | 98.6% | 97.8% | 98.5% |
| DKC-HOG | 97.1% | 97.8% | 96.4% | 97.7% |
| DKC-LBP | 96.6% | 97.0% | 96.4% | 96.9% |

Figure 7: Accuracy graph from the ablation experiment.

6 CONCLUSIONS

This article presents an approach to lung cancer detection that employs a dual-kernel CNN with dual-feature fusion of Histogram of Oriented Gradients and Local Binary Pattern features. Our evaluation on the Kaggle Data Science Bowl 2017 (KDSB, 2017) dataset shows better results than recent methods in accuracy, recall, precision and specificity. Specifically, our model achieves an accuracy of 98.2%, a precision of 98.6%, a recall of 97.8% and a specificity of 98.5%.

These improved findings emphasize the potential

impact of our approach on enhancing lung cancer

detection, with implications for early diagnosis and

treatment strategies. In our forthcoming research, we

aim to investigate transfer learning methods to further

refine the accuracy of our proposed model. This

strategic approach seeks to leverage the insights

gained from our current model and apply them to new

data, fostering ongoing enhancements in lung cancer

detection.

ACKNOWLEDGEMENTS

This work is supported by The National Natural

Science Foundation of China under Grant Numbers

61671185 and 62071153.

REFERENCES

Ahonen, T., Hadid, A., & Pietikainen, M. (2006). Face description with local binary patterns: Application to face recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(12), 2037-2041.

Al-Absi, H.R., Belhaouari, S.B., & Sulaiman, S. (2014). A computer aided diagnosis system for lung cancer based on statistical and machine learning techniques. J. Comput., 9(2), 425-431.

Alakwaa, W., Nassef, M., & Badr, A. (2017). Lung cancer detection and classification with 3D convolutional neural network (3D-CNN). International Journal of Advanced Computer Science and Applications, 8(8).

Ani Brown Mary, N., & Dejey, D. (2018). Classification of coral reef submarine images and videos using a novel Z with tilted Z local binary pattern (Z⊕TZLBP). Wireless Personal Communications, 98, 2427-2459.

Bade, B.C., & Cruz, C.S.D. (2020). Lung cancer 2020: Epidemiology, etiology, and prevention. Clinics in Chest Medicine, 41(1), 1-24.

BIODEVICES 2024 - 17th International Conference on Biomedical Electronics and Devices


Cai, Z., Xu, D., Zhang, Q., Zhang, J., Ngai, S.-M., & Shao, J. (2015). Classification of lung cancer using ensemble-based feature selection and machine learning methods. Molecular BioSystems, 11(3), 791-800.

Chen, S., Han, Y., Lin, J., Zhao, X., & Kong, P. (2020). Pulmonary nodule detection on chest radiographs using balanced convolutional neural network and classic candidate detection. Artificial Intelligence in Medicine, 107, 101881.

Chen, Y., Feng, J., Liu, J., Pang, B., Cao, D., & Li, C. (2022). Detection and classification of lung cancer cells using Swin Transformer. Journal of Cancer Therapy, 13(7), 464-475.

Dalal, N., & Triggs, B. (2005). Histograms of oriented gradients for human detection. Paper presented at the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05).

Deng, Z., & Chen, X. (2019). Pulmonary nodule detection algorithm based on deep convolutional neural network. Journal of Computer Applications, 39(7), 2109.

El-Baz, A., Elnakib, A., El-Ghar, A., Gimel'farb, G., Falk, R., & Farag, A. (2013). Automatic detection of 2D and 3D lung nodules in chest spiral CT scans. International Journal of Biomedical Imaging, 2013.

Fakoor, R., Ladhak, F., Nazi, A., & Huber, M. (2013). Using deep learning to enhance cancer diagnosis and classification. Paper presented at the Proceedings of the International Conference on Machine Learning.

Fang Lei, B. (2019). Barriers to lung cancer screening with low-dose computed tomography. Paper presented at the Oncology Nursing Forum.

Fedewa, S.A., Kazerooni, E.A., Studts, J.L., Smith, R.A., Bandi, P., Sauer, A.G., . . . Silvestri, G.A. (2021). State variation in low-dose computed tomography scanning for lung cancer screening in the United States. JNCI: Journal of the National Cancer Institute, 113(8), 1044-1052.

Greenspan, H., Van Ginneken, B., & Summers, R.M. (2016). Guest editorial deep learning in medical imaging: Overview and future promise of an exciting new technique. IEEE Transactions on Medical Imaging, 35(5), 1153-1159.

Guo, Y., Feng, Y., Sun, J., Zhang, N., Lin, W., Sa, Y., & Wang, P. (2014). Automatic lung tumor segmentation on PET/CT images using fuzzy Markov random field model. Computational and Mathematical Methods in Medicine, 2014.

Gupta, B., & Tiwari, S. (2014). Lung cancer detection using curvelet transform and neural network. International Journal of Computer Applications, 86(1).

Gurcan, M.N., Sahiner, B., Petrick, N., Chan, H.P., Kazerooni, E.A., Cascade, P.N., & Hadjiiski, L. (2002). Lung nodule detection on thoracic computed tomography images: Preliminary evaluation of a computer-aided diagnosis system. Medical Physics, 29(11), 2552-2558.

Hamedianfar, A., Mohamedou, C., Kangas, A., & Vauhkonen, J. (2022). Deep learning for forest inventory and planning: A critical review on the remote sensing approaches so far and prospects for further applications. Forestry, 95(4), 451-465.

Han, G., Liu, X., Zhang, H., Zheng, G., Soomro, N.Q., Wang, M., & Liu, W. (2019). Hybrid resampling and multi-feature fusion for automatic recognition of cavity imaging sign in lung CT. Future Generation Computer Systems, 99, 558-570.

Higham, C.F., & Higham, D.J. (2019). Deep learning: An introduction for applied mathematicians. SIAM Review, 61(4), 860-891.

Jonas, D.E., Reuland, D.S., Reddy, S.M., Nagle, M., Clark, S.D., Weber, R.P., . . . Armstrong, C. (2021). Screening for lung cancer with low-dose computed tomography: Updated evidence report and systematic review for the US Preventive Services Task Force. JAMA, 325(10), 971-987.

Kaggle. KDSB (2017). Data Science Bowl 2017: Lung cancer detection (DSB3).

Kuruvilla, J., & Gunavathi, K. (2014). Lung cancer classification using neural networks for CT images. Computer Methods and Programs in Biomedicine, 113(1), 202-209.

Liang, H., Hu, M., Ma, Y., Yang, L., Chen, J., Lou, L., . . . Xiao, Y. (2023). Performance of deep-learning solutions on lung nodule malignancy classification: A systematic review. Life, 13(9), 1911.

Ma, L., Wan, C., Hao, K., Cai, A., & Liu, L. (2023). A novel fusion algorithm for benign-malignant lung nodule classification on CT images. BMC Pulmonary Medicine, 23(1), 474.

Mandal, M., & Vipparthi, S.K. (2021). An empirical review of deep learning frameworks for change detection: Model design, experimental frameworks, challenges and research needs. IEEE Transactions on Intelligent Transportation Systems, 23(7), 6101-6122.

Masud, M., Muhammad, G., Hossain, M.S., Alhumyani, H., Alshamrani, S.S., Cheikhrouhou, O., & Ibrahim, S. (2020). Light deep model for pulmonary nodule detection from CT scan images for mobile devices. Wireless Communications and Mobile Computing, 2020, 1-8.

Nazir, I., AlQahtani, S.A., Jadoon, M.M., & Dahshan, M. (2023). Machine learning-based lung cancer detection using multiview image registration and fusion. Journal of Sensors, 2023.

Ojala, T., Pietikäinen, M., & Harwood, D. (1996). A comparative study of texture measures with classification based on featured distributions. Pattern Recognition, 29(1), 51-59.

Penedo, M.G., Carreira, M.J., Mosquera, A., & Cabello, D. (1998). Computer-aided diagnosis: A neural-network-based approach to lung nodule detection. IEEE Transactions on Medical Imaging, 17(6), 872-880.

Rao, P., Pereira, N.A., & Srinivasan, R. (2016). Convolutional neural networks for lung cancer screening in computed tomography (CT) scans. Paper presented at the 2016 2nd International Conference on Contemporary Computing and Informatics (IC3I).

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. Paper presented at the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18.

Salama, W.M., Shokry, A., & Aly, M.H. (2022). A generalized framework for lung cancer classification based on deep generative models. Multimedia Tools and Applications, 81(23), 32705-32722.

Shah, A.A., Malik, H.A.M., Muhammad, A., Alourani, A., & Butt, Z.A. (2023). Deep learning ensemble 2D CNN approach towards the detection of lung cancer. Scientific Reports, 13(1), 2987.

Shen, D., Wu, G., & Suk, H.-I. (2017). Deep learning in medical image analysis. Annual Review of Biomedical Engineering, 19, 221-248.

Shen, S., Han, S.X., Aberle, D.R., Bui, A.A., & Hsu, W. (2019). An interpretable deep hierarchical semantic convolutional neural network for lung nodule malignancy classification. Expert Systems with Applications, 128, 84-95.

Shimizu, R., Yanagawa, S., Monde, Y., Yamagishi, H., Hamada, M., Shimizu, T., & Kuroda, T. (2016). Deep learning application trial to lung cancer diagnosis for medical sensor systems. Paper presented at the 2016 International SoC Design Conference (ISOCC).

Siegel, R.L., Miller, K.D., Wagle, N.S., & Jemal, A. (2023). Cancer statistics, 2023. CA: A Cancer Journal for Clinicians, 73(1), 17-48.

Soerjomataram, I., Cabasag, C., Bardot, A., Fidler-Benaoudia, M.M., Miranda-Filho, A., Ferlay, J., . . . Znaor, A. (2023). Cancer survival in Africa, Central and South America, and Asia (SURVCAN-3): A population-based benchmarking study in 32 countries. The Lancet Oncology, 24(1), 22-32.

Taher, F., & Sammouda, R. (2011). Lung cancer detection by using artificial neural network and fuzzy clustering methods. Paper presented at the 2011 IEEE GCC Conference and Exhibition (GCC).

Tian, G., Fu, H., & Feng, D.D. (2008). Automatic medical image categorization and annotation using LBP and MPEG-7 edge histograms. Paper presented at the 2008 International Conference on Information Technology and Applications in Biomedicine.

Tong, G., Li, Y., Chen, H., Zhang, Q., & Jiang, H. (2018). Improved U-Net network for pulmonary nodules segmentation. Optik, 174, 460-469.

Wang, S., Chen, A., Yang, L., Cai, L., Xie, Y., Fujimoto, J., . . . Xiao, G. (2018). Comprehensive analysis of lung cancer pathology images to discover tumor shape and boundary features that predict survival outcome. Scientific Reports, 8(1), 10393.
