Heuristic Feedback for Generator Support in Generative Adversarial Network

Dawid Połap (a) and Antoni Jaszcz (b)

Faculty of Applied Mathematics, Silesian University of Technology, Kaszubska 23, 44-100 Gliwice, Poland

Keywords:

Generative Adversarial Network, Neural Network, Heuristic, Image Processing.

Abstract:

Generative adversarial networks (GANs) offer enormous possibilities because they can generate new data that can deceive a classifier. The zero-sum game between two networks is a solution used on an increasingly large scale in today's world. In this paper, we focus on expanding the model of generative adversarial networks by introducing a block with a selected heuristic algorithm. The additional block allows for creating a set of features extracted from the discriminator. The heuristic algorithm is based on the analysis of feature maps and extracting the positions of selected pixels. These are then clustered into averaged sets of features and applied to the images created by the generator. If the number of points matched within any set of features is higher than the threshold value, then the generator performs classical training. Otherwise, the loss function is subject to the penalty function. The proposed mechanism affects the operation of the GAN through an additional analysis of whether samples contain specific features. To analyze the solution and the impact of the proposed heuristic feedback, tests were performed on known data sets.

1 INTRODUCTION

Recent years have brought new neural classifiers and

new methods of training including federated learn-

ing. The huge development is caused by the need for

fast and accurate analysis in the applications of these

methods (Yang et al., 2022; Salankar et al., 2023). Examples include the Internet of Things, Web 3.0, and Industry 4.0 (Zhang et al., 2022). In addition,

neural networks are trained on huge data sets, which

contributes to high efficiency, as well as networks that

enable the generation of new data. Of course, the

basic application of such data may be augmentation,

however, the achievements of artificial intelligence

have allowed the creation of solutions that enable the

generation of data that can be mistaken for the works

of people. Examples of this are image generators and

different types of CNN-based models (Shahriar, 2022;

Artiemjew and Tadeja, 2022; Xu et al., 2023), etc.

(a) https://orcid.org/0000-0003-1972-5979
(b) https://orcid.org/0000-0002-8997-0331

The described possibilities are a consequence of creating a zero-sum game where the players are two neural networks. One trains to classify data as real or fake, and the other generates new samples to deceive the first network. Such a rivalry is called a generative adversarial network (GAN) (Cai et al., 2022). The very wide application of such a solution

has contributed to increasing the capabilities of neural

networks in the industry. On the other hand, scientific

research on this type of network and their rivalry is

one of the main trends in improving their shortcom-

ings as well as increasing the accuracy or speed of

achieving high values of evaluation metrics. Augmentation allows the creation of artificial data to

increase the number of samples that train neural net-

works. In the case of dedicated applications, creating

a large amount of data in training databases may be

unattainable in a short time. Hence, generators are

used to streamline the base building process as well

as to enable faster achievement of higher efficiency.

Such a solution was presented in (Scarpiniti et al.,

2022), where the authors used GAN to generate au-

dio data presented in graphical form. For this pur-

pose, the focus was on spectral representation, which

enabled the correct further classification of the data.

A similar application was the use of augmentation to

obtain equinumerous training sets in the problem of

diagnosing bearing faults (Liu et al., 2022a). GAN

training involves a pre-prepared database for identi-

fying true and false images. Hence, in (Hung and

Gan, 2022) the modification of the generator architec-

ture with a double encoder and decoder was shown.


Połap, D. and Jaszcz, A.

Heuristic Feedback for Generator Support in Generative Adversarial Network.

DOI: 10.5220/0012405800003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 862-869

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The purpose of the modification was to increase the

amount of data in the training set, which in the end

allowed to obtain a higher accuracy of the classifier

by more than 10%. GAN can contribute to network

learning by generating synthetic images indicative of

segmentation. Such an example of use is shown in

(Ali and Cha, 2022). The authors proposed a pro-

prietary model of the GAN network and performed

tests based on images of damage to concrete elements.

Based on the presented research results, the authors

indicate that GAN-type networks can surpass other

neural networks in accuracy. The topic of data gener-

ation and their reliability is discussed in (Mozo et al.,

2022). The researchers presented the methodology of

using a heuristic algorithm to select the best generator

during GAN training.

An interesting solution is the use of GAN net-

works to generate super-resolution images (Jia et al.,

2022). To enable this, the generator architecture

in GAN has been refined through three processing

blocks: a convolution block, an upsampling block and

an attention fusion block. Another solution is to use

the GAN model for the classification or labeling of

samples. An example of such use is the development

of the architecture with the addition of additional dis-

criminators (Liu et al., 2022b). The described so-

lution operates on a proprietary middle-generation

module, which is introduced between the generator

and the combined discriminator. The conducted research showed that it is an effective and universal solution in terms of adaptability to data sets. Another

use of GAN is to generate data to attack intrusion de-

tection systems (Lin et al., 2022). The proposed so-

lution is based on the use of a generator to modify

the original data. The authors of the research, based

on the results, indicate the superiority of the proposal

over other methods due to the much greater general-

ization of attacks. Again, in (Mira et al., 2022), GAN

was used to increase the reality of video-to-speech

models. The model is based on video-to-speech con-

version, and then the result is evaluated by a critic.

This solution increases the credibility and realism of

the obtained measurements. Such solutions are also

analyzed through the safety and correctness of the results. An example of research in this area is the implementation of an evaluation mechanism using a clustering algorithm (Venugopal et al., 2022). The authors

drew attention to the cosine similarity of the data as-

suming the preservation of statistical properties.

Based on the analysis of the literature, it can be

seen that GAN-type architectures are a very important

element used in generating various data. This is a so-

lution to increase the accuracy of the network during

training the classifier due to the creation of new train-

ing data. Based on these motivations, in this research

paper, we present the architecture of the GAN net-

work extended with a dedicated analysis of results us-

ing a heuristic algorithm. The analysis of the heuristic

algorithm enables better adaptability of the generated

images to increase the efficiency of the generator. In

addition, the use of heuristic feedback speeds up the generator's operation. The main contributions

of this research are:

• a heuristic feedback mechanism for image analysis,
• an extension of the GAN architecture with an additional block that supports the generator,
• an evaluation of the proposed GAN on known datasets and a comparison with the state of the art.

2 PROPOSED METHODOLOGY

The GAN architecture is based on two networks: a discriminator $D$ and a generator $G$. The discriminator is trained using a database $X = \{x_0, x_1, \ldots, x_{|X|-1}\}$. The result of the classification of the $i$-th sample is denoted $D(x_i)$. The generator receives a random latent vector $z$ as input, so the created sample is denoted $G(z)$. The evaluation of the generated sample by the discriminator is $D(G(z))$. Moreover, let $Z$ denote the set of generated vectors and $E(\cdot, \cdot)$ an error function between two given values. The loss function for the discriminator is defined as:

$$L_D = E(D(x), 1) + E(D(G(z)), 0), \tag{1}$$

and for the generator, it will be:

$$L_G = E(D(G(z)), 1). \tag{2}$$

Applying a binary cross-entropy formula, the above loss functions will be changed as:

$$L_D = -\sum_{x \in X,\, z \in Z} \left[ \log(D(x)) + \log(1 - D(G(z))) \right] \tag{3}$$

and for the generator, it will be:

$$L_G = -\sum_{z \in Z} \log(D(G(z))). \tag{4}$$
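The two cross-entropy losses in Eqs. (3) and (4) can be illustrated with a minimal NumPy sketch. It is only an illustration under stated assumptions: the function names `bce`, `discriminator_loss` and `generator_loss`, the clipping constant `eps`, and the example discriminator outputs are hypothetical and do not come from the paper.

```python
import numpy as np

def bce(pred, target, eps=1e-12):
    """Binary cross-entropy summed over a batch (plays the role of E)."""
    pred = np.clip(pred, eps, 1.0 - eps)  # assumption: clip to avoid log(0)
    return -np.sum(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))

def discriminator_loss(d_real, d_fake):
    # Eq. (3): real samples should be scored 1, generated samples 0.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # Eq. (4): the generator wants D(G(z)) to be scored 1.
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs for two real and two generated samples.
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.2, 0.1])
loss_d = discriminator_loss(d_real, d_fake)
loss_g = generator_loss(d_fake)
```

In this toy case the discriminator is already fairly confident, so `loss_d` is small while `loss_g` is large, which is the pressure that drives the generator update.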

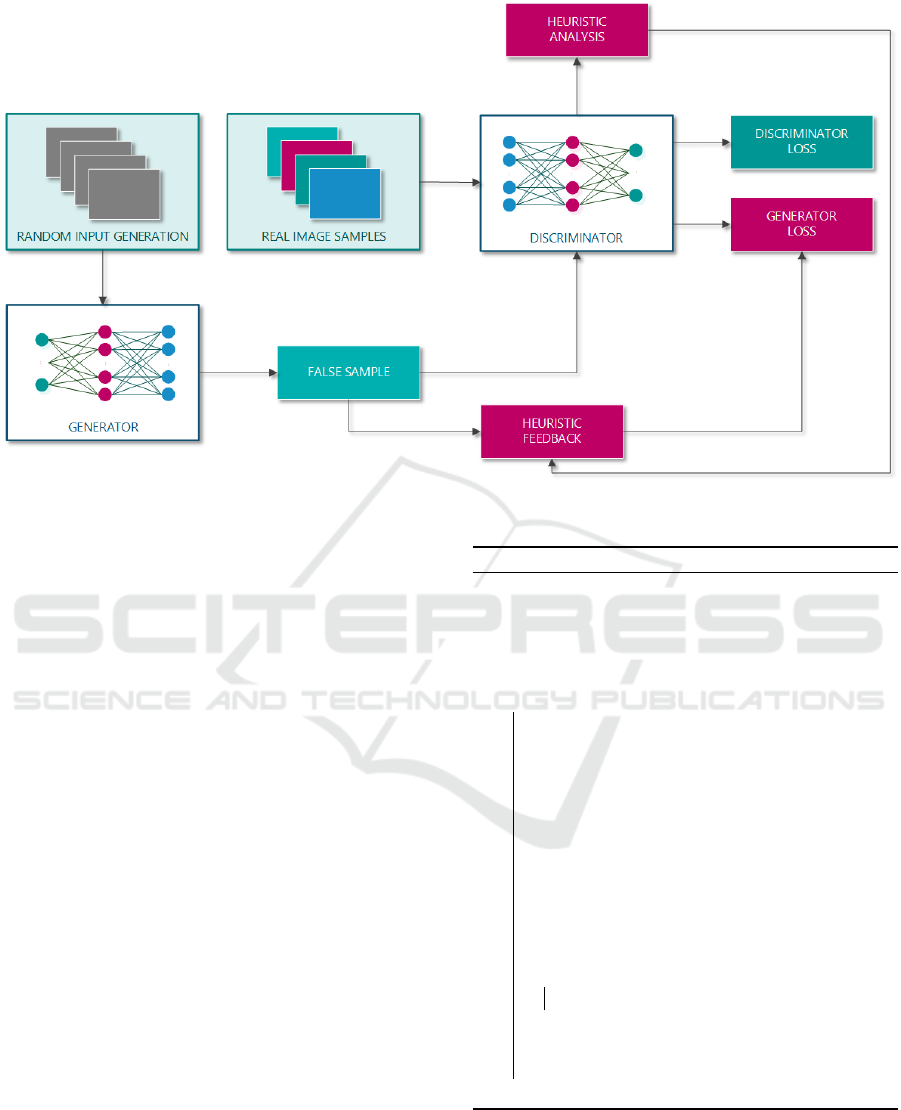

2.1 Heuristic Feature Extractor

In GAN architecture, the training process is per-

formed separately for the discriminator and the gener-

ator. Hence, we can consider training a discriminator.

The idea is to train the network to classify the incom-

ing sample as true or false. In the case of real sam-

ples, the convolutional neural network will extract the

features of images occurring in a specific class. To


analyze them, we propose the use of a heuristic algo-

rithm that will enable the detection of those features

that the classifier treats as significant. To access these

features, feature maps from the last convolution layer

will be extracted after the training process ends in the

current iterations. The obtained feature maps for a given image will be presented as a set of $n$ images $S = \{s_1, s_2, \ldots, s_n\}$.

The application of the heuristic algorithm is based

on finding selected features. For this purpose, the al-

gorithm performs two stages of action: environment

recognition, i.e. pixel analysis to identify the back-

ground of the image, and then analysis of pixels of

the opposite color. If the background of the image

is black, the heuristic analyzes white pixels. How-

ever, it should be noted that individual pixels do not

indicate a specific feature. To enable the heuristic to

analyze the image and return specific patterns repre-

senting the feature, a two-stage displacement is pro-

posed. The first is the location of the most intensive

areas, followed by a search and neighborhood analy-

sis that indicates the area’s surroundings. The exact

operation of the heuristics will be described on the

example of the selected algorithm, which is the red fox optimization algorithm (RFOA) (Połap and Woźniak, 2021). It is an algorithm inspired by the behavior of foxes when hunting

prey. We assume that each heuristic algorithm has two

basic parameters, which are the number of individu-

als N and the number of iterations T . The number

of individuals determines the number of points (here

pixels) that will be analyzed in one iteration. Within

each iteration, the algorithm moves the points accord-

ing to two mechanisms, which are global and local

displacement. Assume that the initial population of foxes is generated at random, so there is a set of pixel coordinates $P = \{p_1, p_2, \ldots, p_N\}$, where $p_i = (x_i, y_i)$ lies on image $I$, so the $i$-th pixel value is $I(p_i)$ and $x_i \in \langle 0, w \rangle$, $y_i \in \langle 0, h \rangle$ ($w$ and $h$ are the width and height of the image $I$). At the beginning of each iteration, the best individual $p_{best}$ in the entire population is found; the selection depends on the given fitness function $F(\cdot)$. Each pixel in the $t$-th iteration is moved according to a global movement, which allows the evaluation of pixels much further away from the current position. It is made using the following equation:

$$p_i^t = p_i^{t-1} + \alpha \cdot \mathrm{sign}\left(p_{best}^t - p_i^{t-1}\right), \tag{5}$$

where $\alpha \in \left\langle 0, d(p_i^{t-1}, p_{best}^t) \right\rangle$ is a random coefficient in the given range and $d(\cdot)$ is the Euclidean metric.
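The global displacement of Eq. (5) can be sketched in a few lines of NumPy. This is a hedged illustration, not the authors' implementation: the function name `global_move`, the rounding with the ceiling function, and the clipping to valid pixel indices `0..w-1`, `0..h-1` are assumptions made so that the result can index an image.

```python
import numpy as np

def global_move(p_prev, p_best, width, height, rng):
    """One global displacement step in the spirit of Eq. (5)."""
    p_prev = np.asarray(p_prev, dtype=float)
    p_best = np.asarray(p_best, dtype=float)
    dist = np.linalg.norm(p_best - p_prev)      # Euclidean d(p_i, p_best)
    alpha = rng.uniform(0.0, dist)              # random coefficient in <0, d>
    moved = p_prev + alpha * np.sign(p_best - p_prev)
    # Assumption: round up and clip so the result is a valid pixel index.
    moved = np.ceil(moved).astype(int)
    return np.clip(moved, [0, 0], [width - 1, height - 1])

rng = np.random.default_rng(0)
new_p = global_move((2, 3), (10, 20), 28, 28, rng)   # moves towards the best
same_p = global_move((5, 5), (5, 5), 28, 28, rng)    # already at the best
```

Because the same step `alpha` is applied to both coordinates through the sign function, an individual can overshoot the best position along one axis; the clipping only guarantees that it stays inside the image.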

Then, each fox $p_i$ makes a selection regarding further movement according to a random parameter $\mu \in \langle 0, 1 \rangle$:

$$\begin{cases} \text{Move closer} & \text{if } \mu > 0.75 \\ \text{Stay and disguise} & \text{if } \mu \le 0.75 \end{cases}. \tag{6}$$

If the choice is the movement, then the value of the

subject’s field of view is determined by:

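$$r = \begin{cases} a \dfrac{\sin(\varphi_0)}{\varphi_0} & \text{if } \varphi_0 \neq 0 \\ \mathrm{rand}(0, 1) & \text{if } \varphi_0 = 0 \end{cases}, \tag{7}$$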

which allows the individual to be moved to a better

position in the immediate vicinity as:

$$\begin{cases} x_i^t = \lceil a r \cdot \cos(\varphi_1) \rceil + x_i^{t-1} \\ y_i^t = \lceil a r \cdot \sin(\varphi_1) \rceil + \lceil a r \cdot \cos(\varphi_2) \rceil + y_i^{t-1} \end{cases}, \tag{8}$$

where $\varphi_1, \varphi_2 \in \langle 0, 2\pi \rangle$ and $a \in \langle 0, 1 \rangle$. If the choice is to stay (Eq. (6)), the individual does not perform a local displacement.
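The local displacement described by Eqs. (6)-(8) can be sketched as follows. The sketch follows the equations as printed; the function name `local_move` and the sampling range of $\varphi_0$ (taken here to be the same as for $\varphi_1$ and $\varphi_2$) are assumptions, since the text does not state them.

```python
import math
import random

def local_move(x_prev, y_prev, rng):
    """Local displacement of one individual following Eqs. (6)-(8)."""
    mu = rng.random()                        # random parameter in <0, 1>
    if mu <= 0.75:
        return x_prev, y_prev                # "stay and disguise": no move
    a = rng.uniform(0.0, 1.0)
    phi0 = rng.uniform(0.0, 2.0 * math.pi)   # assumption: same range as phi1, phi2
    # Eq. (7): field-of-view radius
    r = a * math.sin(phi0) / phi0 if phi0 != 0.0 else rng.random()
    phi1 = rng.uniform(0.0, 2.0 * math.pi)
    phi2 = rng.uniform(0.0, 2.0 * math.pi)
    # Eq. (8): the ceiling keeps the coordinates integer-valued
    x_new = math.ceil(a * r * math.cos(phi1)) + x_prev
    y_new = (math.ceil(a * r * math.sin(phi1))
             + math.ceil(a * r * math.cos(phi2)) + y_prev)
    return x_new, y_new

rng = random.Random(0)
moves = [local_move(10, 10, rng) for _ in range(1000)]
```

Since $a \le 1$ and $|\sin(\varphi_0)/\varphi_0| \le 1$, each ceiling term is at most one pixel in magnitude, so under these assumptions the local step only probes the immediate neighborhood of the current position.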

Figure 1: Visualization of the heuristic algorithm (from left to right): the original image, the important pixels located by the individuals of the heuristic algorithm, and the returned set of features in the given image.

For feature extraction, we propose to analyze fea-

ture maps from the discriminator. The first iteration of

heuristic algorithms analyzes the environment which

is the image. This is to determine the color of the

features you are looking for. To this end, the first it-

eration of the heuristic is evaluated against a simple

fitness function that returns the average value of the

components of the RGB model, that is:

$$F_1(I, p_i) = \frac{1}{3} \sum_{k \in \{R, G, B\}} I_k(p_i). \tag{9}$$

It should be also noted, that in the case of a

grayscale image, the values of all three components

are the same, hence we can assume that the above av-

erage is equal to the value of any of the components.

For this function, there is no preferred best value, so

the best individual in the population will be selected

at random. After the first iteration, each individual

is evaluated and the average values of all individuals

are determined. If this value is less than 128 (half of

the color range in the RGB model), the background

color will be black. However, when the average value

is higher than 128, the background will be white and

the searched features will be black.
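The background test based on Eq. (9) can be sketched as below. This is a minimal sketch under assumptions: the image is taken to be an `H x W x 3` array with values in 0..255, the helper names `f1` and `background_is_black` are hypothetical, and an average of exactly 128 (a case the text leaves open) is treated as a white background.

```python
import numpy as np

def f1(image, p):
    """Eq. (9): mean of the R, G, B components at pixel p = (x, y)."""
    x, y = p
    return float(np.mean(image[y, x, :]))    # assumption: image is H x W x 3

def background_is_black(image, n_points, rng):
    """Sample n_points random pixels and compare their mean value with 128."""
    h, w = image.shape[:2]
    xs = rng.integers(0, w, size=n_points)
    ys = rng.integers(0, h, size=n_points)
    mean_value = np.mean([f1(image, (x, y)) for x, y in zip(xs, ys)])
    return bool(mean_value < 128)            # assumption: 128 counts as white

rng = np.random.default_rng(0)
black_image = np.zeros((28, 28, 3), dtype=np.uint8)
white_image = np.full((28, 28, 3), 255, dtype=np.uint8)
black_result = background_is_black(black_image, 50, rng)
white_result = background_is_black(white_image, 50, rng)
```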

Figure 2: Visualization of the operation of the GAN architecture with the proposed heuristic algorithm.

Subsequent iterations can analyze the image by looking for features that may be significant for the classifier. For this purpose, individuals are evaluated using the second fitness function (the higher the value, the more important the pixel):

$$F_2(I, p_i, \xi) = \sum_{j=-\xi}^{\xi} \sum_{l=-\xi}^{\xi} F_3(I, (x_i, y_i), (x_i + j, y_i + l)), \tag{10}$$

where $F_3(\cdot)$ is an auxiliary function that evaluates a given pixel by determining whether it is a pixel indicating a difference in neighboring values. This is important because the boundary points will be interesting, i.e.:

$$F_3(I, p_1, p_2) = \begin{cases} 1 & \text{if } I(p_1) > 128 \text{ and } I(p_2) < 128, \\ 0 & \text{otherwise.} \end{cases} \tag{11}$$

The above equations search on a white background, but in the opposite case, the greater-than and less-than signs will be reversed.
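The second fitness function can be sketched directly from Eqs. (10) and (11). The sketch assumes a grayscale `H x W` image and uses hypothetical names `f3` and `f2`; how neighbors outside the image are handled is not stated in the text, so here they simply contribute zero.

```python
import numpy as np

def f3(image, p1, p2, threshold=128):
    """Eq. (11): 1 if p1 is bright and its neighbour p2 is dark, else 0."""
    (x1, y1), (x2, y2) = p1, p2
    h, w = image.shape
    if not (0 <= x2 < w and 0 <= y2 < h):
        return 0   # assumption: neighbours outside the image contribute nothing
    return int(image[y1, x1] > threshold and image[y2, x2] < threshold)

def f2(image, p, xi):
    """Eq. (10): count contrasting neighbours in a (2*xi+1)^2 window around p."""
    x, y = p
    return sum(
        f3(image, (x, y), (x + j, y + l))
        for j in range(-xi, xi + 1)
        for l in range(-xi, xi + 1)
    )

# A single bright pixel on a dark background is a maximal boundary point.
image = np.zeros((5, 5), dtype=np.uint8)
image[2, 2] = 255
score_center = f2(image, (2, 2), 1)
score_corner = f2(image, (0, 0), 1)
```

With `xi = 1` the isolated bright pixel scores 8, one for each dark neighbor, while a dark pixel scores 0 regardless of its surroundings.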

When the algorithm reaches the iteration limit, the obtained points are clustered to group them according to a certain number of features. The algorithm performs the same operation on all other feature maps and then selects those features that are duplicated in more than half of the maps. In discriminator training, such features are extracted from samples. When all analyses are completed, the sets of features for each sample are compared to combine features for the same classes. It is done by clustering into κ classes (when this number is large, more feature sets remain to be analyzed by the generator). Within each class, the sets are combined and clustered to the initial number of points, which makes it possible to obtain averaged values of features within a given class. A simplified visualization of the operation of the heuristic algorithm is shown in Fig. 1.

Algorithm 1: GAN with heuristic support.
Input: Generator, Discriminator, T_GAN training iterations, T heuristic iterations, N heuristic population size, γ matching value
i := 0;
while i < T_GAN do
    Train discriminator;
    Get feature maps;
    Use the heuristic to locate features in T iterations with N points;
    Combine all features into one set;
    Reduce the number of feature sets using a clustering algorithm;
    Generate random sample;
    Evaluate sample by discriminator and heuristic matching algorithm;
    if the sample is not covered by any heuristic class in more than γ% of points then
        Apply penalty function;
    end
    Modify weights and filters in generator;
    i += 1;
end
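The clustering step that reduces a set of located points to a fixed number of averaged points can be sketched as follows. The text does not name the clustering algorithm, so plain k-means is used here purely as an assumption; the function name `kmeans_points` and the toy coordinates are hypothetical.

```python
import numpy as np

def kmeans_points(points, k, n_iter=20, seed=0):
    """Reduce 2-D points to k averaged points with plain k-means (assumed)."""
    rng = np.random.default_rng(seed)
    points = np.asarray(points, dtype=float)
    # Start from k distinct points chosen at random.
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance of every point to every centre, then nearest-centre labels.
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        for c in range(k):
            members = points[labels == c]
            if len(members) > 0:
                centers[c] = members.mean(axis=0)   # averaged feature point
    return centers, labels

# Two well-separated groups of located pixels collapse to two averaged points.
located = [(0, 0), (0, 1), (1, 0), (20, 20), (20, 21), (21, 20)]
centers, labels = kmeans_points(located, k=2)
ordered = centers[np.argsort(centers[:, 0])]
```

Any other clustering method with a fixed number of clusters could be substituted; the point of the sketch is only that each cluster is replaced by the mean of its members, which gives the averaged feature positions described above.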


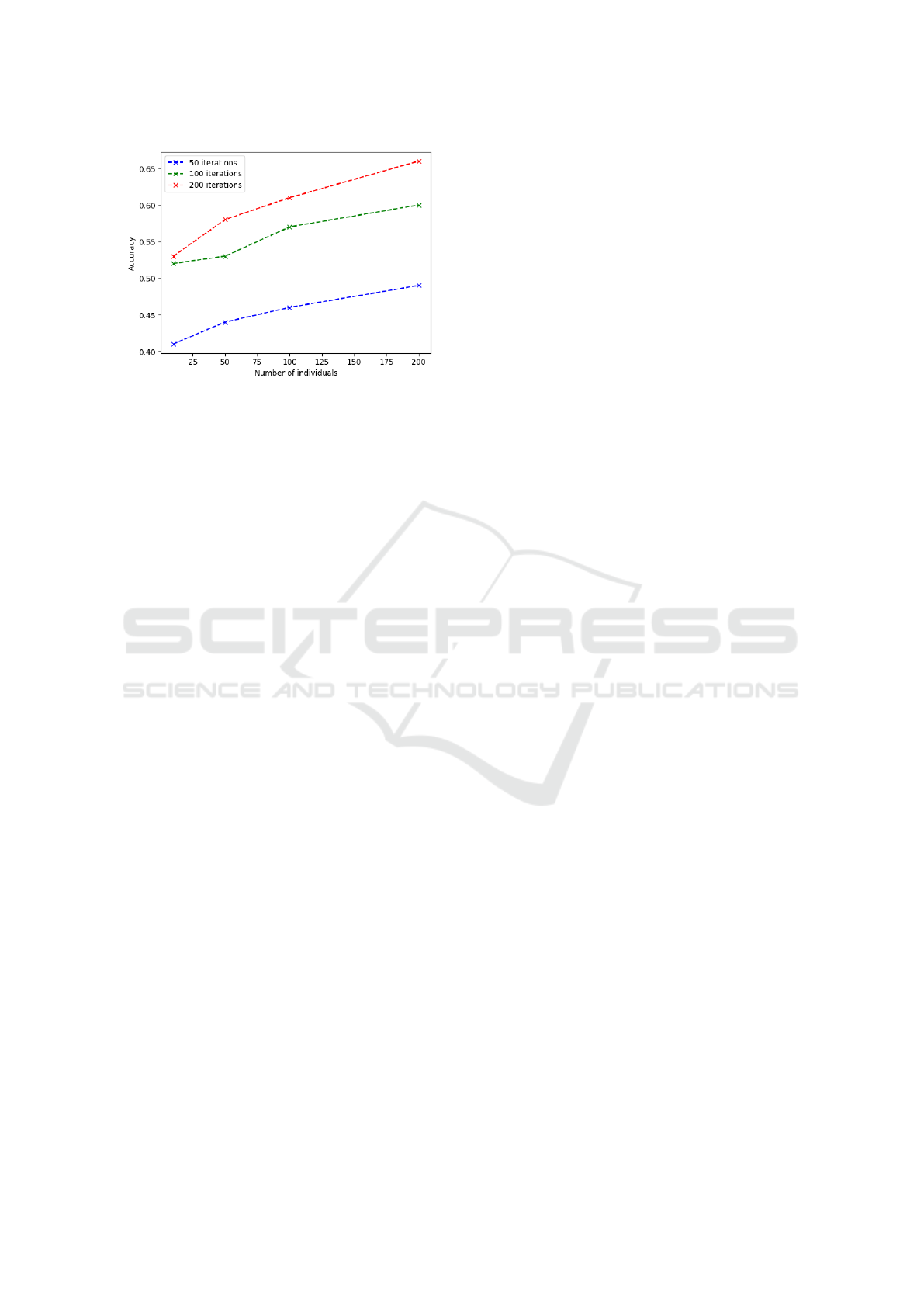

Figure 3: Accuracy of the GAN model depending on the parameters of the heuristic algorithm.

2.2 Heuristic Feedback Mechanism for

Generator

The sets of features prepared by heuristics during the

training of the discriminator are finally used during

the training of the generator. After the sample is cre-

ated, it is first checked by feature sets of heuristics.

The check consists of superimposing points from a

single set on the generated image. The pixel values

under these points are then checked. The check consists of verifying the white color, i.e. if the pixel has a value greater than 128, the pixel is passed. This operation is performed for all matrices. If more than γ% of the points from any set are covered, the sample is considered to contain features of one of the classes.

According to the adopted training model of the

generator, the sample is evaluated by the discrimina-

tor and the value of the loss function is determined.

If the comparison with the sets obtained by the heuristic assigns no features to the sample, the loss function is increased by 10%, which is understood as the penalty function. A simplified scheme of operation is presented in Alg. 1.
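The coverage check and the 10% penalty can be sketched as below. This is an illustration under assumptions: the names `coverage` and `penalized_generator_loss` are hypothetical, feature points are stored as `(x, y)` pairs, γ is expressed as a fraction, and the comparison is strict ("more than γ"), which is one reading of the text.

```python
import numpy as np

def coverage(image, feature_points, threshold=128):
    """Fraction of feature points that land on 'white' pixels of the image."""
    pts = np.asarray(feature_points, dtype=int)
    values = image[pts[:, 1], pts[:, 0]]     # points are (x, y) pairs
    return float(np.mean(values > threshold))

def penalized_generator_loss(loss, image, feature_sets, gamma=0.5, penalty=0.10):
    """Return the loss unchanged if any feature set is covered, else add 10%."""
    matched = any(coverage(image, fs) > gamma for fs in feature_sets)
    return loss if matched else loss * (1.0 + penalty)

# Toy generated image with two bright pixels at (x=0, y=0) and (x=1, y=0).
image = np.zeros((4, 4), dtype=np.uint8)
image[0, 0] = 255
image[0, 1] = 255
set_a = [(0, 0), (1, 0), (2, 2)]   # two of three points covered
set_b = [(2, 2), (3, 3)]           # no point covered
loss_matched = penalized_generator_loss(1.0, image, [set_a, set_b])
loss_penalized = penalized_generator_loss(1.0, image, [set_b])
```

Scaling the loss value scales its gradient by the same factor, so in this sketch an unmatched sample simply produces a proportionally larger generator update.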

3 EXPERIMENTS

To evaluate the proposed method and analyze the co-

efficients, two classical databases, MNIST (LeCun,

1998) and Fashion-MNIST (Xiao et al., 2017), were

selected. Databases contain images of size 28 × 28.

The DCGAN (Dewi et al., 2022) model implemented

in Keras was used as the GAN architecture. All

tests were performed on the Intel Core i7-8750H with

24GB RAM and NVIDIA GeForce GTX 1050 Ti.

3.1 Coefficients Analysis

In the beginning, the heuristic coefficients were an-

alyzed with the assumption of obtaining the best

possible results. For this purpose, we used only

the MNIST dataset with the following parameters:

three different iteration values T = {50, 100, 200} and

the number of individuals in the population as N =

{10, 50, 100, 200}. The first analysis was to find out

the best value for the mentioned heuristic parame-

ters. Therefore, a constant value of γ = 0.5 was cho-

sen. This γ value means that at least 50% of the lo-

calized features (in one of the ten classes - it was

set up to create only ten clustered classes) should be

covered on the generated samples in order not to apply the penalty function. The number of training iterations was set to 1000. All tests were performed ten times and all results were averaged. The obtained charts are shown in Fig. 3. The obtained results indicate that the greater the number of individuals and iterations, the higher the accuracy. Even with only 50 iterations, the accuracy of the GAN with the proposed methodology increases. However, even with 200 individuals, the accuracy remained below 50%. Doubling the number of iterations gave nearly 15% better results when using 10 individuals. Increasing the number of individuals to 200 raised the accuracy to 60%. The best results were seen with 200 iterations, where the accuracy increased rapidly and was able to exceed 65%. However, it should be noted that using the minimum number of iterations or individuals yields rather random values because too few image areas are analyzed. This is especially true when these features are clustered, which with high randomness may result in generating sets of random features. Hence, the use of a large number of individuals and iterations is an important element.

In the next part of the conducted experiments, we used 100 individuals with 200 iterations to analyze the effect of the gamma coefficient. For this purpose, the generator was trained with different values of this parameter: γ = {0.1, 0.2, . . . , 0.9, 1}. A coefficient value of 0 means that the proposed heuristic mechanism is not used. Fig. 4 presents the relationship between the average accuracy of the GAN and the applied value of the coverage coefficient between the set of heuristic features and the generated images. For coefficient values below 0.4, the proposed mechanism achieves results similar to its absence. This is because such a small point coverage value is quickly exceeded, so the value of the generator loss function does not change. Hence, at values equal to or greater than 0.4, a difference can be seen. The best results were obtained with γ = 0.6, where, even with such a small number of training iterations (1000), the accuracy was 5% higher than without the proposed method. Higher values lead to frequent use of the penalty function, which in turn manifests itself in increasingly worse accuracy.

Table 1: Comparison of the accuracy on GAN models with and without the proposed mechanism.

| Dataset | Model | Accuracy | Discriminator loss | Generator loss |
|---|---|---|---|---|
| MNIST | DCGAN | 0.6934 | 0.59 | 0.96 |
| MNIST | DCGAN with RFOA | 0.7445 | 0.58 | 0.82 |
| Fashion-MNIST | DCGAN | 0.5234 | 0.63 | 1.03 |
| Fashion-MNIST | DCGAN with RFOA | 0.6639 | 0.52 | 0.83 |

Figure 4: Graph of the average accuracy values against the used gamma parameter.

3.2 Heuristic Analysis

The next stage of evaluating the proposal was the use of other heuristic algorithms and the indication of differences between them. Three heuristics were implemented, namely RFOA (presented in this paper), the artificial rabbits optimization algorithm (AROA) (Wang et al., 2022) and the white shark optimizer (WSO) (Braik et al., 2022). All algorithms were identically modified to analyze the images by adding the ceiling function to the equations and using the presented fitness functions. A variant of one hundred individuals in each population and different numbers of iterations were used. Each of the tests for the selected algorithm was performed ten times, and the results were averaged, as shown in Fig. 5. All selected algorithms obtained similar results, differing from each other at the level of 0.2%. It should be noted that AROA is the only one whose accuracy fluctuates as the number of iterations increases. For the other two algorithms, the accuracy increases almost linearly. It is worth noting that each heuristic algorithm works on almost identical operations, with the difference related to the modeled equations. Considering that the same coefficient values were used in the tested algorithms, the difference is that with smaller numbers of iterations, RFOA can locate image features faster based on the declared fitness functions.

Figure 5: Dependence of accuracy on the number of iterations for 100 individuals in selected heuristic algorithms with γ = 0.6.

3.3 GAN Analysis

The best parameters in previous tests turned out to be RFOA with 200 iterations, 200 individuals and the parameter γ = 0.6. To reduce the number of operations, the number of individuals was reduced to 100, but the number of GAN training iterations was increased threefold. Using these values, we trained the GAN model with and without the proposed heuristic mechanism for two datasets: MNIST and Fashion-MNIST. The obtained results are shown in Tab. 1. In the case of the MNIST dataset, the accuracy for the original GAN model was 69.34% with a generator loss of 0.96. With the proposed mechanism, the accuracy was higher by more than 5 percentage points, as it reached 74.45%. A more important factor is the generator loss, which is significantly lower at 0.82. Compared to the original model, this is a difference of 0.14. For the second database, the results turned out to be much weaker due to the analysis of more complex objects in terms of features. The original GAN model achieved an accuracy of 52.34% and the proposed algorithm 66.39%. This is a much higher result, which is also visible in the values of the loss function. For the discriminator, there is a difference of 0.11 (while for the previous database, it was 0.01), and for the generator, it was 0.2. Based on the obtained results, it can be concluded that the proposed mechanism of analyzing images for the presence of certain features is an effective solution that can significantly affect the operation of GAN models.
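The differences quoted above can be recomputed directly from the values in Tab. 1. The short check below is only a convenience sketch; the dictionary layout and the helper name `deltas` are hypothetical, while the numbers are those reported in the table.

```python
# (accuracy, discriminator loss, generator loss) as reported in Tab. 1
results = {
    "MNIST": {
        "DCGAN": (0.6934, 0.59, 0.96),
        "DCGAN with RFOA": (0.7445, 0.58, 0.82),
    },
    "Fashion-MNIST": {
        "DCGAN": (0.5234, 0.63, 1.03),
        "DCGAN with RFOA": (0.6639, 0.52, 0.83),
    },
}

def deltas(dataset):
    """Difference (proposed minus baseline) for each reported metric."""
    base = results[dataset]["DCGAN"]
    ours = results[dataset]["DCGAN with RFOA"]
    return tuple(round(o - b, 4) for b, o in zip(base, ours))

mnist_delta = deltas("MNIST")
fashion_delta = deltas("Fashion-MNIST")
```

The accuracy gain is about 5.1 percentage points on MNIST and about 14.1 percentage points on Fashion-MNIST, with generator loss reductions of 0.14 and 0.20 respectively.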

4 CONCLUSIONS

In this paper, we presented a modification of the GAN learning model. We extended its operation with a heuristic algorithm that builds a set of essential features from the feature maps returned by the discriminator. The set is used to check whether the image created by the generator has the features essential for the discriminator. If not, the loss function is subject to the penalty mechanism. Based on the test results, it was noticed that the proposed methodology allows for a significant increase in the accuracy of the generator, especially when the database was much more diverse. It was also noticed that the modification of the value of the loss function affects the training of the network due to the learning algorithm, which is ADAM. In addition, the implemented heuristic algorithm can achieve good results with lower parameter values. The presented method is important for enabling more efficient training of the generator in GAN models.

REFERENCES

Ali, R. and Cha, Y.-J. (2022). Attention-based generative

adversarial network with internal damage segmenta-

tion using thermography. Automation in Construction,

141:104412.

Artiemjew, P. and Tadeja, S. K. (2022). Using convnet for

classification task in parallel coordinates visualization

of topologically arranged attribute values. In ICAART

(3), pages 167–171.

Braik, M., Hammouri, A., Atwan, J., Al-Betar, M. A., and

Awadallah, M. A. (2022). White shark optimizer: A

novel bio-inspired meta-heuristic algorithm for global

optimization problems. Knowledge-Based Systems,

243:108457.

Cai, J., Li, C., Tao, X., and Tai, Y.-W. (2022). Image multi-

inpainting via progressive generative adversarial net-

works. In Proceedings of the IEEE/CVF Conference

on Computer Vision and Pattern Recognition, pages

978–987.

Dewi, C., Chen, R.-C., Liu, Y.-T., and Tai, S.-K. (2022).

Synthetic data generation using dcgan for improved

traffic sign recognition. Neural Computing and Appli-

cations, 34(24):21465–21480.

Hung, S.-K. and Gan, J. Q. (2022). Boosting facial emotion

recognition by using gans to augment small facial ex-

pression dataset. In 2022 International Joint Confer-

ence on Neural Networks (IJCNN), pages 1–8. IEEE.

Jia, S., Wang, Z., Li, Q., Jia, X., and Xu, M. (2022). Multi-

attention generative adversarial network for remote

sensing image super resolution. IEEE Transactions

on Geoscience and Remote Sensing.

LeCun, Y. (1998). The mnist database of handwritten digits.

http://yann.lecun.com/exdb/mnist/.

Lin, Z., Shi, Y., and Xue, Z. (2022). Idsgan: Genera-

tive adversarial networks for attack generation against

intrusion detection. In Pacific-Asia Conference on

Knowledge Discovery and Data Mining, pages 79–91.

Springer.

Liu, Y., Jiang, H., Liu, C., Yang, W., and Sun, W.

(2022a). Data-augmented wavelet capsule generative

adversarial network for rolling bearing fault diagnosis.

Knowledge-Based Systems, 252:109439.

Liu, Y., Wei, X., Lu, Y., Zhao, C., and Qiao, X. (2022b).

Source free domain adaptation via combined discrim-

inative gan model for image classification. In 2022

International Joint Conference on Neural Networks

(IJCNN), pages 1–8. IEEE.

Mira, R., Vougioukas, K., Ma, P., Petridis, S., Schuller,

B. W., and Pantic, M. (2022). End-to-end video-

to-speech synthesis using generative adversarial net-

works. IEEE Transactions on Cybernetics.

Mozo, A., González-Prieto, Á., Pastor, A., Gómez-Canaval, S., and Talavera, E. (2022). Synthetic flow-based cryptomining attack generation through generative adversarial networks. Scientific reports, 12(1):1–27.

Połap, D. and Woźniak, M. (2021). Red fox optimization algorithm. Expert Systems with Applications, 166:114107.

Salankar, N., Koundal, D., Chakraborty, C., and Garg, L.

(2023). Automated attention deficit classification sys-

tem from multimodal physiological signals. Multime-

dia Tools and Applications, 82(4):4897–4912.

Scarpiniti, M., Mauri, C., Comminiello, D., Uncini, A., and

Lee, Y.-C. (2022). Coval-sgan: A complex-valued

spectral gan architecture for the effective audio data

augmentation in construction sites. In 2022 Interna-

tional Joint Conference on Neural Networks (IJCNN),

pages 1–8. IEEE.

Shahriar, S. (2022). Gan computers generate arts? a sur-

vey on visual arts, music, and literary text generation

using generative adversarial network. Displays, page

102237.

Venugopal, R., Shafqat, N., Venugopal, I., Tillbury, B.

M. J., Stafford, H. D., and Bourazeri, A. (2022). Pri-

vacy preserving generative adversarial networks to

model electronic health records. Neural Networks,

153:339–348.

Wang, L., Cao, Q., Zhang, Z., Mirjalili, S., and Zhao, W. (2022). Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Engineering Applications of Artificial Intelligence, 114:105082.

Xiao, H., Rasul, K., and Vollgraf, R. (2017). Fashion-

mnist: a novel image dataset for benchmark-

ing machine learning algorithms. arXiv preprint

arXiv:1708.07747.

Xu, L., Song, Z., Wang, D., Su, J., Fang, Z., Ding, C.,

Gan, W., Yan, Y., Jin, X., Yang, X., et al. (2023).

Actformer: A gan-based transformer towards general

action-conditioned 3d human motion generation. In

Proceedings of the IEEE/CVF International Confer-

ence on Computer Vision, pages 2228–2238.

Yang, W., Xiang, W., Yang, Y., and Cheng, P. (2022).

Optimizing federated learning with deep reinforce-

ment learning for digital twin empowered industrial

iot. IEEE Transactions on Industrial Informatics,

19(2):1884–1893.

Zhang, T., Gao, L., He, C., Zhang, M., Krishnamachari,

B., and Avestimehr, A. S. (2022). Federated learning

for the internet of things: Applications, challenges,

and opportunities. IEEE Internet of Things Magazine,

5(1):24–29.
