Speech Recognition for Indigenous Language Using Self-Supervised

Learning and Natural Language Processing

Satoshi Tamura, Tomohiro Hattori, Yusuke Kato and Naoki Noguchi

Gifu University, 1-1 Yanagido, Gifu, Japan

Keywords:

Speech Recognition, Self-Supervised Learning, Neural Machine Translation, Transformer, HuBERT,

Indigenous Language, Under-Resourced Language.

Abstract:

This paper proposes a new concept to build a speech recognition system for an indigenous under-resourced

language, by using another speech recognizer for a major l anguage as well as neural machine translation and

text autoencoder. D eveloping the recognizer for minor languages suffers from the lack of training speech data.

Our method uses natural language processing techniques and text data, to compensate the lack of speech data.

We focus on the model based on self-supervised learning, and utilize its sub-module as a feature extractor. We

develop the recognizer sub-module for indigenous languages by making translation and autoencoder models.

We conduct evaluation experiments for every systems and our paradigm. It is consequently found that our

scheme can build the recognizer successfully, and improve the performance compared to the past works.

1 INTRODUCTION

Automatic Speech Recognition (ASR) is a technique

to transcribe human speech. In recent yea rs, speech

recogn ition technology has been widely used in a lot

of environments, improving work efficiency and en-

hancing quality of life. Voice assistance and voice

input are ofte n employed on smartphones and smart

speakers. ASR is also used to take minutes in on-

line meetings and on-site conferences. Spee ch trans-

lation based on ASR is expected to make our inter-

national com munication richer and easier. ASR has

made remarkable progress for this decade, with the

rapid development of Deep Learning (DL). Many re-

searchers have attempted to build DL models, result-

ing in significant improvements in recognition accu-

racy. Several languages with large populations, such

as English, Mandarin, and Spanish, now have high-

performance ASR technolo gy, as DL requires huge

data sets, and it is relatively easier to do so. Develop-

ers are also interested in having such the techniques

for languages spoken in emerging markets, such as

Hindi, Bahasa Indonesia, and so on.

It is k nown that there a re more than 7,000 lan-

guages on this plane t. However, only a few lan-

guages have been well studied for DL-based ASR,

while most indigenous languages having small pop-

ulations used in limited area s have not yet, due to

the lack of spoken data. To discuss this issue, UN -

ESCO, ELRA (European Language Resource Asso-

ciation) and several societies jointly organized a con-

ference on L anguage Technologies for All (LT4All)

in 2019 (UNESCO, 2019). We need to strongly en-

courag e researcher s and developers to build ASR sys-

tems for these minor languages. And in order to do

so, we should develop and improve DL techn iques in

under-resource d conditions.

Recently, Self-Supe rvised Le a rning (SSL) has

been fo cused on in the DL field. SSL allows us to uti-

lize unla beled data or low-quality data, and to obtain

effective feature representation for subsequent tasks.

In ASR resear c h works, several SSL techniq ues such

as HuBERT (Hsu et al., 2021) and wav2vec (Baevski

et al., 2020) are o ften employed. Focusing on the Hu-

BERT model, we have developed a speech recogni-

tion scheme for under-resourced languages (Hattori

and Tamura, 2023). In our previous work, we firstly

prepare d an English ASR system consisting of a pre-

trained HuBERT and a shallow DL model for recog-

nition. We secondly applied fine-tuning to the system

using Japanese speec h data, to obtain the recognizer

for Japanese.

This paper proposes a new paradigm of ASR for

indigenous languages, enhan cing the recognition per-

formance. In our work, we employ several Natu-

ral Language Processing (NLP) techniques, such as

Neural Machine Translation (NMT) and text autoen-

coder. We assume that the HuBERT-based English

Tamura, S., Hattori, T., Kato, Y. and Noguchi, N.

Speech Recognition for Indigenous Language Using Self-Supervised Learning and Natural Language Processing.

DOI: 10.5220/0012396300003654

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 13th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2024), pages 779-784

ISBN: 978-989-758-684-2; ISSN: 2184-4313

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

779

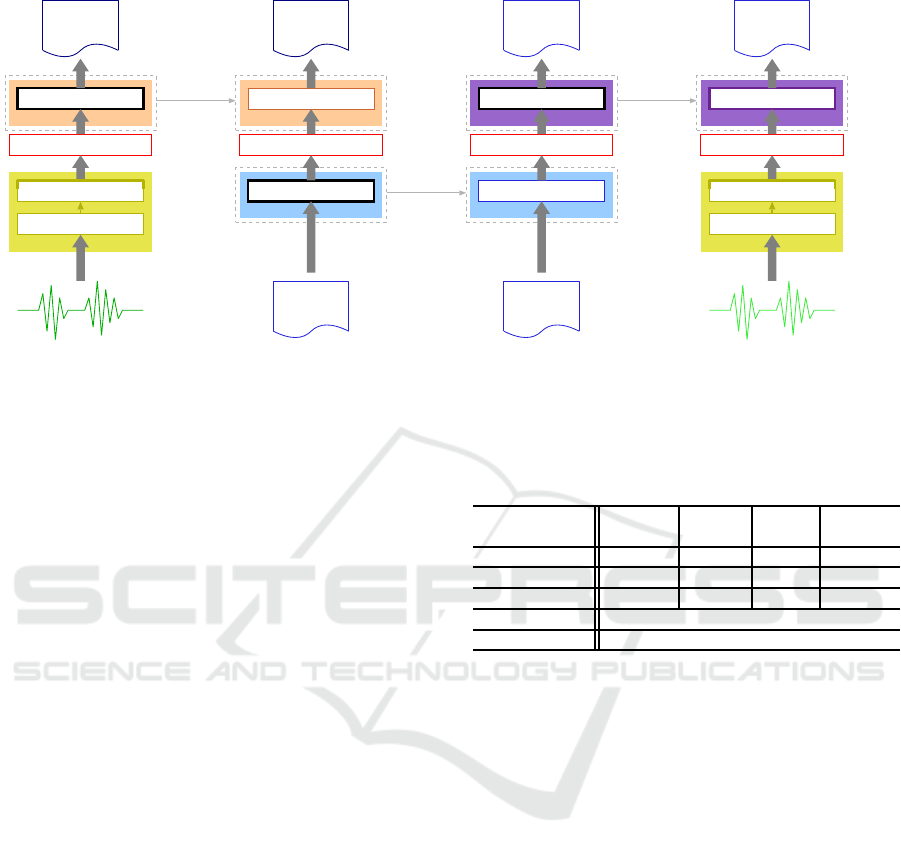

Feature representation

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

Transformer

Japanese encoder

Japanese text

(c) Japanese autoencoder

Transformer

Japanese decoder

Japanese text

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

Feature representation

Transformer

English decoder

English text

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

Transformer

Japanese text

encoder

Japanese text

(b) Japanese-English NMT

English speech signal

CNN Encoder

Feature representation

Transformer

Transformer

Pre-trained HuBERT-

based encoder

English decoder

English transcription

(a) English ASR

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

Japanese speech signal

CNN Encoder

Feature representation

Transformer

Transformer

Pre-trained HuBERT-

based encoder

Japanese decoder

Japanese transcription

(d) Japanese ASR

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

. . . . . . . . . . . . . .

(Japanese as an indigenous language and English as a major language. Bold indicates model training or fine-tuning)

Figure 1: Our proposed concept to build an AS R method for an indigenous language.

ASR can be divided into two sub -modules: a fe a ture

extraction module based on HuBERT and a recogni-

tion module. By using the rec ognition module as a n

English decoder and adding a new Japanese encoder,

we build a Japanese-English NMT system. Subse-

quently, we choose the Japanese encoder and pre-

pare a new Japane se decoder to make a text autoen-

coder. Finally, we can develop a Japanese ASR sys-

tem by employing the HuBERT feature extractor and

the Japanese dec oder. The novelty of this work is that,

we can build a n ASR system using le ss or ideally no

speech data of a target indigenous language, thanks

to state-of-the-art SSL and NLP technology. In addi-

tion, using NLP techniques is expected to enhance the

semantic fea ture representation, improving the ASR

performance. As far as the authors know, there is no

other work regarding indigenou s ASR incorporating

HuBERT, NMT and text autoencoder.

The rest of this paper is o rganized as follows. Sec-

tion 2 explains o ur concept in detail. Experiments are

reported in Section 3. Section 4 concludes this work.

2 METHODOLOGY

Figure 1 illustrates our proposed scheme to build an

ASR sysem for an indigenous language. In this pa-

per, we use Japanese as an indigenous minor language

and English as a major language; though Japanese has

a large population, th e reason why Japanese is cho-

sen in this work is that, we can compare our scheme

with conventional D L-based ASR methods as large-

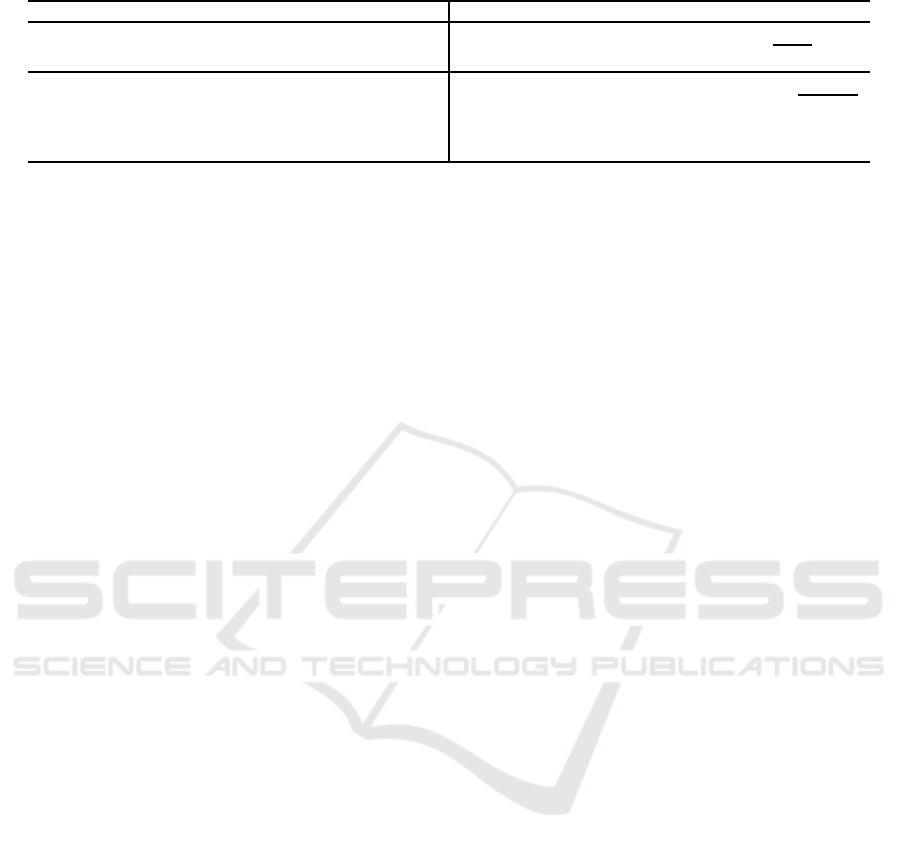

Table 1: Training and fine-tuning settings.

(a) E. (b) J-E (c) J. (d) J.

ASR NMT AE ASR

# epochs 40 50 50 40

Batch size 8 256 256 8

Optimizer RAdam Adam Adam RAdam

Learning rate 1e-4

Loss function Cross entropy loss

size Japanese corpora are available, and the authors

can easily conduct subjective evaluation to the results.

(a) First of all, a DL-based SSL model is prepared,

that is trained using a number of speec h data of

the major language. The model is used as a fea-

ture extractor from speech data. We employ a

pre-train ed English HuBERT mode l in this work.

We then build an English ASR system using the

model followed by a transformer-based decoder.

We believe that a feature vector extracted by the

first part includes contextua l or semantic informa-

tion, and the second model can be performed as a

speech recognizer to generate English sente nces.

(b) Suppose a text encoder for an indigenous lan-

guage, in this case Japanese. Using the en-

coder and the decoder introduced above, we build

an NMT system from the indigenous language

(Japanese) to the major language (English). Whe n

training the sy stem, all the para meters in the En-

glish decod er are fixed, while the model parame-

ters in the Japanese encoder are optimized using

Japanese-English parallel text data.

ICPRAM 2024 - 13th International Conference on Pattern Recognition Applications and Methods

780

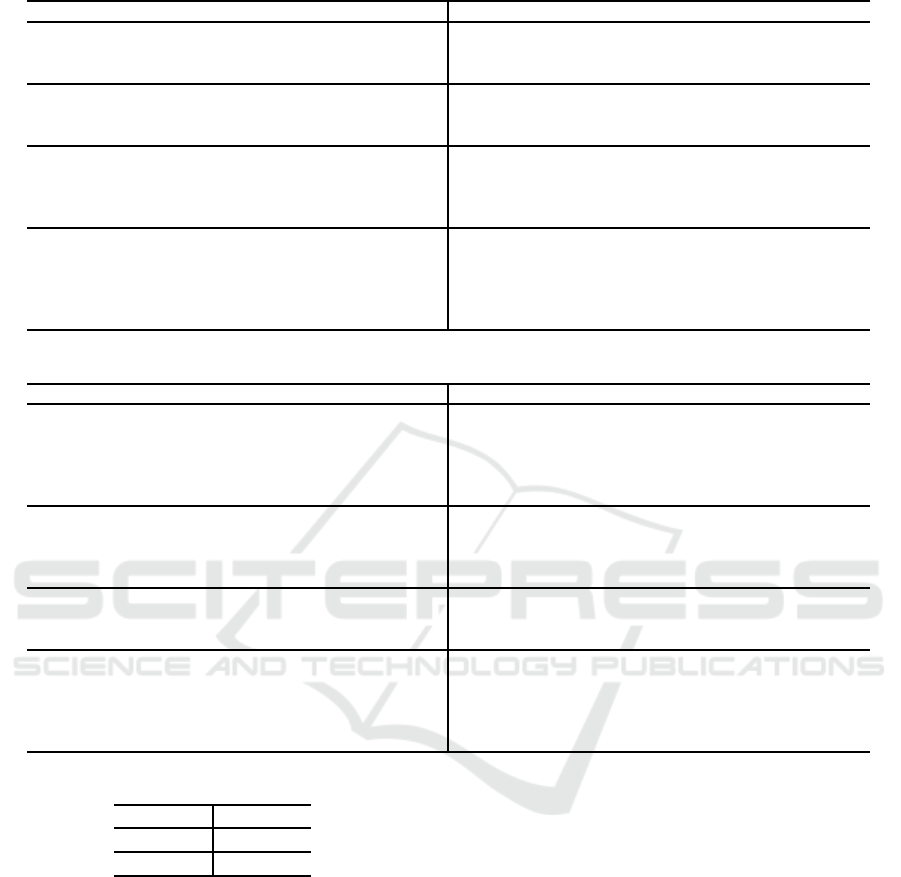

Table 2: Examples of English ASR results in Figure 1 (a). Underlined words mean recognition errors.

Reference Recognized characters

THE WHOLE CAMP WAS COLLECTE D BEFORE A ROT CABIN

ON THE OUTER EDGE OF THE CLEARING

THE WHOLE CAMP WAS COLLECTED BEFORE A RUDE CAB IN

ON THE OUTER EDGE OF THE CLEARING

INTO THE LAND BEYOND THE SYRIAN DESERT BUT EITHER

OF THEM DREAMED THAT THE SCATTERED AND DISUNITED

TRIBES OF ARABIA WOULD E V ER COMBINE OR BECOME A

SERIOUS DANGER

INTO THE LAND BEYOND THE SYRIAN DESERT BUT NEITHER

OF THEM DREAMED THAT THE SCATTERED AND DISUNITED

TRIBES OF ARABIA WOULD E V ER COMBINE OR BECOME A

SERIOUS DANGER

(c) We further introduce a new text decoder for the

indigenous language. Next, we make an text au-

toencod er for the m inor language, consisting of

the above encoder as well as the decoder. We ap-

ply model train ing using the text data written in

the indigenous language, only to the decoder.

(d) Finally, we use the HuBERT encoder as a feature

extractor and the text decoder for the indigenous

languag e, to build an ASR system for the mino r

languag e. It is said that English phonemes fully

cover Japanese vowels and consonants, therefore,

the English feature extractor is expected to also

work for Japanese speech data as well. Note that

in this paper, to improve the p erformance we ap-

ply fine-tuning not only to the decoder but also to

a part of the encoder.

The ad vantage of this scheme is that, ideally we do

not need any speech data for the indigenous la nguage,

or only a few data may be significant for fine-tuning

to finalize the A SR model. As mentioned above, it

is har d to collect speech data for such a minor lan-

guage with a small population . In our scheme we uti-

lize a pre-trained SSL-based feature extractor, that is

originally built for different languages, because a hu-

man speech production system is language inde pen-

dent . Furthermo re, for indigen ous languages, it is rel-

atively easier to collect text data than speech data; w e

can obtain text data from official government docu-

ments, textbooks, news sites and internet articles such

as Wikipedia.

3 EXPERIMENT

We conducted exper iments to evaluate the effective-

ness of our proposed approach . First, we report

preliminar y experime ntal results about training data

size for an indigenous langua ge. Second, we exam-

ine our NMT and autoencoder performance to check

Japanese encoder and decoder. Finally, we evaluate

our Japanese ASR. Table 1 shows model training an d

fine-tunin g settings in the following experiments.

3.1 Preliminary Experiments

3.1.1 Machine Translation

In our pr evious work, we investigated the influence of

training data size and model complexity in NMT. We

used the M ultiUN and Wikipedia data sets provided in

OPUS ( Tiedemann, 201 2), in order to obtain par allel

sentences. We then chose German-English sentence

pairs as a training data set. Though German has a

large population, in this experiment German is treated

as an indigenous language, while English was a ma-

jor language . We employed a pr e-trained NMT model

provided by OpenNMT (Klein et al. , 2017), that was

based on a tiny transformer; the encoder and decoder

had six layers respectively. A transformer model was

then explored w ith different settings, such as the num-

ber of layers in the encoder and decoder parts, and the

number of training sente nces.

It turns out that, with the small data set, we can

build an NM T model, which achieves roughly the

same performance as the pre-trained model, by ad-

justing the hyperparameters; we should make an en-

coder for indigenous language smaller to m a intain

the translation performance, while the decoder should

still be large because it directly affects the perfor-

mance. It is well known that the larger the training

data set becomes, the better NMT performance is. On

the other hand, it is sometimes hard to obtain larger

data sets. Acco rding to our preliminary results, in this

work we decided to use 10,000 sentences in the fol-

lowing experiments, which is quite small compared to

the data set used in existing works.

3.1.2 English Speech Recognition

Next, we tested an English ASR shown in Figure 1

(a). We adopted an English Hu BERT model provid ed

by Facebook, which was trained using 960 -hour spo-

ken data f rom Librispeech (Panayotov et al., 2015).

The transformer consisted of a CNN encoder a nd a

12-layer transfor mer. As an En glish text decoder, we

employed a two-layer transformer, each having 12 at-

tention heads. When building the ASR system, in the

encoder transformer, we fixed the eight layers on the

Speech Recognition for Indigenous Language Using Self-Supervised Learning and Natural Language Processing

781

Table 3: Examples of input, reference and t r anslated sentences in Figure 1 (b).

Input Japanese text Reference text Tr anslated English text

かれにあいたいの

i — w a n t — t o — s e e — h i m i — — — — — m e e t — h i m

わたしのむすこがちょうど

ぴあのをはじめたんです

m y — s o n — s t a r t e d —

p l a y i n g — p i a n o

m y — s o n — — — — —

— — — — — p i a n o

よかったらつかってください

p l e a s e — u s e — i t u s e — — — — — — — — — —

† ’—’ indicates space.

Table 4: Examples of Japanese autoencoder results in Figure 1 (c).

Reference Reconstructed characters

かれにあいたいの かれはあううすす

わたしのむすこがちょうどぴあのをはじめたんです わたしのののぴあのはちかかがはじめたです

よかったらつかってください よかかかつかうういい

input side, while fine-tuning the remaining four lay-

ers on the output side. The decoder was trained from

scratch. We used the Librispeech test-clean-100 data

set to re-train the model, a nd a one-hou r subset of Lib-

riLightLimited (Liu et al., 2019) for evaluation.

Table 2 shows reco gnition result examples. As a

result, the English ASR achieved a word error rate

of 11.04%. We finally confirmed that the feature en-

coder and the English text decoder were properly pre-

pared for the following exper iments.

3.2 Experiments in NLP

3.2.1 Japanese-English Machine Translation

First, we checked our machine translation model, de-

picted in Figure 1 (b). We utilized a JESC corpus

(Pryzant et al., 2018 ), consisting o f Japanese-English

subtitle pairs. From the c orpus, we ran domly se-

lected 10,000 pairs for model training, 1,000 for vali-

dation, and 1,0 00 for evaluation. As explained in Sec-

tion 2 , only the Japanese enco der was trained, while

the decoder was derived from the E nglish ASR sys-

tem. The architecture of the Japan e se encoder was

the same as that of the English decoder. Note that

our Japanese encode r only accepted Japanese hira-

gana characters. Japanese characters were firstly con-

verted to IDs, followed by the embedding pr ocess to

obtain a 768-dimensional vecto r for each character,

the size of whic h was the same as the input/output

size of th e English decoder.

Table 3 shows examples of translation results,

where the BLEU score is 0.20. Although it was

not sufficient, it can be seen that our model can cor-

rectly translate several words that probably app eared

in the training data set. It is generally acceptable that

words which did not appear in the training data set

can hardly be translated correctly, because the model

did not know the terms. It is fina lly concluded that

the translation system was trained, and can tran slate

Japanese sentences into English sentences to some ex-

tent.

3.2.2 Japanese Text Autoencoder

Second, we investigated our Japanese text autoen-

coder, illustrate d in Figure 1 (c). We used the same

data set as in the NMT experimen t above; only

Japanese sentences were used this time. The encoder

was the same as the Japanese enco der in NMT, which

was fixed thro ughout this experiment. The decoder

had the same model architecture, and was op timized

using the Japa nese sentences.

Table 4 indic a te s th e results, and the BLEU score

is 0. 24. Similar to the N MT results, some words could

be reconstructed correctly, while the other parts could

not. In spite that the output sentences seem to be in-

appropriate perha ps due to the lack of vocabulary in

the data set, it can be said that semantic information

may still be retained in our model.

In NMT and autoencoder experiments, we ob-

served still lower BLEU performance, mainly due to

the lack of vocabulary. It is of course needed to im-

prove the scores, however, it was unknown that such

the systems could contribute to o ur final goal, to build

a better ASR system. We then moved to the next ASR

experiment usin g the above NLP models.

3.3 Experiments in ASR

3.3.1 Our Proposed Method

Finally, we evaluated our Japanese ASR system,

shown in Figure 1 (d ). For fine-tuning, we prepared

5,880 Japanese spoken sentences from the Commo n

Voice 7 .0 Japanese data set (Ardila et al., 2019). Re-

garding a recognition model, the English HuBERT-

based feature extractor wa s chosen, in addition to the

Japanese text decoder. The whole decoder and the

four layers on the output side in the encoder were

ICPRAM 2024 - 13th International Conference on Pattern Recognition Applications and Methods

782

Table 5: Examples of recognition results of our proposed method in Figure 1 (d).

Reference (English translation) Recognized characters

がめんがこうこくだらけでみにくてしょうがない

(The screen is full of ads, making it difficult to see.)

がめんがこうぽくだらけでみにくてしょうがない

こまっているひとはほっておけないせいかく

(I have a personality that cannot leave people in need.)

こまっているひとはほうっておうけないせいかく

う

かれらはゆうびんはいたついんにわいろをわたし

なんとかそのてがみをてにはいれました

(They bribed the postman and managed to get the letter.)

かれらはゆうびんはいったついんにわいろをわた

しなんとかそんてがめをてにいでました

おなじないようのちしきでもじょうしきとかがく

とではありかたがちがっている

(Even for the same content knowledge, common sense

and science have different ways of being.)

おなじないようのちしきでもじょうしきとかがく

とではありかたがちがっている

Table 6: Examples of recognition results of the competitive method.

Reference (English translation) Recognized characters

りかはにがてだがどっぷらーこうかだけはおぼえ

てる

(I’m not good at science, but I only remember the

Doppler effect)

でぃかーはにがてだがどっぷらーこうかだけはお

ぼえてる

なぜならさいこうのゆうじんはかれしかいないか

ら

(Because he is the best friend I have.)

なぜならさいこうのゆうじんはかでしかいないか

ら

ふたりはたけーなといった

(Two said it was expensive.)

ふたりはたけいなとをいった

どうめいのすーぱーでもおみせごとにしなぞろえ

にとくちょうがある

(Even within the same chain of supermarkets, each

store has its own unique selection of products.)

どうめいのすーぱーでもおみせごとにしなぞくち

ょうがある

Table 7: Character err or rates of Japanese ASR systems.

Model CER [%]

Proposed 20.18

Baseline 27.97

then fine-tuned. We also obtained test da ta , i.e. 1,928

Japanese spoken sentences, from the same data set.

Table 5 shows examples of Japanese rec ognition

results. The who le character err or rate is 20.18%. It

is found that the recognition re sults seem to be accept-

able; they are not perfect, but in many cases we can

easily guess the meaning.

3.3.2 Competitive Baseline Method

For comparison, we also built another Japanese ASR

model as a baseline, which was similar to ( H a ttori

and Tamura, 2023). We prepared a model consist-

ing of the same architectu re as our proposed scheme:

the En glish HuBERT-ba sed feature extractor and the

Japanese recognizer. Similar to our previous work,

we carried out fine -tuning to the four laye rs in the en-

coder, and trained the decoder fro m scratch. Table 6

indicates rec ognition examples. We then obtained a

character erro r rate of 27.9 7%, which means that our

scheme achieved a 7.79% absolute improvement or a

27.85% relative error reduction over the baseline.

Table 7 summa rizes both accu racy. Still, the per-

formance needs to be improved fo r practical use.

However, as mentioned it is hard to collect speech

data for any minor language, and the lack of the data

set causes low ASR accuracy. It is found that o ur

scheme could comp e nsate the performance by em-

ploying NLP technology and text data. Consequently,

we believe that the effectivene ss of our proposed con-

cept to build a better indigenous ASR method using

another SSL-based ASR for a major language as well

as NLP techniques is clarified.

Speech Recognition for Indigenous Language Using Self-Supervised Learning and Natural Language Processing

783

4 CONCLUSION

This pape r proposed a novel framework to build an

ASR system for under-resourced languages by utiliz-

ing an SSL-b ased ASR system for a major language,

as well as NLP technology such as NMT and text au-

toencod er. First, we made English ASR, Japanese-

English NMT and Japanese text autoencoder. We

checked their performance, and confirmed that we

had developed th e m well. Second, we built a Japanese

ASR using sub-modules of the above systems. We

condu c te d evaluation exp eriments, and it is found that

we could successfully develop the system with ac-

ceptable accuracy.

As our future works, we need to improve mod-

els to achieve higher BLEU scores using larger data

sets. Investigating the relationship between the da ta

size and the performance is also useful, sin ce col-

lecting a larger database for indigenous languages re-

quires higher costs. We will also compare our scheme

with the state-of-the-art NLP and ASR system so that

we could know the performanc e upper limit, which is

useful for future improvement. It is also obvious that

applying the proposed technique to real indigenous

languag es is included in our future tasks.

REFERENCES

Ardila, R., Branson, M., Davis, K., Henretty, M., Kohler,

M., Meyer, J., Morais, R., Saunders, L., Tyers,

F. M., and Weber, G. (2019). Common voice: A

massively-multilingual speech corpus. arXiv preprint

arXiv:1912.06670.

Baevski, A., Zhou, H., Mohamed, A., and Auli, M.

(2020). wav2vec 2.0: A framework for self-

supervised learning of speech representations. arXiv

preprint arXiv:2006.11477.

Hattori, T. and Tamura, S. (2023). Speech recognition for

minority languages using hubert and model adapta-

tion. In International Conference on Pattern Recog-

nition Applications and Methods (ICPRAM), pages

350–355.

Hsu, W.- N ., Bolte, B., Tsai, Y.-H. H., Lakhotia, K.,

Salakhutdinov, R., and Mohamed, A. (2021). Hu-

bert: Self-supervised speech representation learning

by masked prediction of hidden units. IEEE/ACM

Transactions on Audio, Speech, and Language Pro-

cessing.

Klein, G., Kim, Y., Deng, Y., Senellart, J., and Rush, A. M.

(2017). Opennmt: Open-source toolkit for neural ma-

chine translation. arXiv preprint arXiv:1701.02810.

Liu, L., Jiang, H., He, P., Chen, W., Liu, X., Gao,

J., and Han, J. (2019). On the variance of the

adaptive learning rate and beyond. arXiv preprint

arXiv:1908.03265.

Panayotov, V., Chen, G., Povey, D., and Khudanpur, S.

(2015). Librispeech: an asr corpus based on public

domain audio books. In International Conference on

Acoustics, Speech and Signal Processing (ICASSP).

Pryzant, R., Chung, Y., Jurafsky, D., and Britz, D.

(2018). JESC: Japanese-english subtitle corpus. arXiv

preprint arXiv:1710.10639.

Tiedemann, J. (2012). Parallel data, tools and interfaces

in OPUS. In International Conference on Language

Resources and Evaluation (LREC), pages 2214–2218.

UNESCO (2019). LT4All. https://lt4all.org/en/index.html.

ICPRAM 2024 - 13th International Conference on Pattern Recognition Applications and Methods

784