Large Language Models in Enterprise Modeling: Case Study and

Experiences

Leon G

¨

orgen

1

, Eric M

¨

uller

1

, Marcus Triller

1 a

, Benjamin Nast

1 b

and Kurt Sandkuhl

1,2 c

1

Institute of Computer Science, Rostock University, Albert-Einstein-Str. 22, 18059 Rostock, Germany

2

School of Engineering, J

¨

onk

¨

oping University, Gjuterigatan 5, 55111 J

¨

onk

¨

oping, Sweden

Keywords:

Enterprise Modeling, Large Language Model, ChatGPT, Artificial Intelligence, Proxy Domain Expert,

Process Modeling.

Abstract:

In many engineering disciplines, modeling is considered an essential part of the development process. Exam-

ples are model-based development in software engineering, enterprise engineering in industrial organization,

or digital twin engineering in manufacturing. In these engineering disciplines, the application of modeling

usually includes different phases such as target setting, requirements elicitation, architecture specification,

system design, or test case development. The focus of the work presented in this paper is on the early phases

of systems development, specifically on requirements engineering (RE). More specifically, we address the

question of whether domain experts can be substituted by artificial intelligence (AI) usage. The aim of our

work is to contribute to a more detailed understanding of the limits of large language models (LLMs). In this

work, we widen the investigation to include not only processes but also required roles, legal frame conditions,

and resources. Furthermore, we aim to develop not only a rough process overview but also a detailed pro-

cess description. For this purpose, we use a process from hospitality management and compare the output of

ChatGPT, one of the most popular LLMs currently, with the view of a domain expert.

1 INTRODUCTION

In many engineering disciplines, modeling is consid-

ered an essential part of the development process. Ex-

amples are model-based development in software en-

gineering, enterprise engineering in industrial orga-

nization, or digital twin engineering in manufactur-

ing. In these engineering disciplines, the application

of modeling usually includes different phases such

as target setting, requirements elicitation, architec-

ture specification, system design, or test case develop-

ment. The focus of the work presented in this paper is

on the early phases of systems development, specifi-

cally on requirements engineering (RE). More specif-

ically, we address the question of whether domain ex-

perts can be substituted by artificial intelligence (AI)

usage.

The aim of our work is to contribute to a more

detailed understanding of the limits of large language

models (LLMs).

a

https://orcid.org/0000-0002-7709-0353

b

https://orcid.org/0000-0003-4659-9840

c

https://orcid.org/0000-0002-7431-8412

In previous work (Sandkuhl et al., 2023), we fo-

cused on retrieving domain knowledge from Chat-

GPT regarding processes common in an application

domain or general tasks to be performed. The core

result of this previous study was that the more spe-

cific the domain knowledge required, the less suitable

LLMs seem to be.

In this work, we widen the investigation to include

not only processes but also required roles, legal frame

conditions, and resources. Furthermore, we aim to

develop not only a rough process overview but also a

detailed process description. For this purpose, we use

a process from hospitality management and compare

the output of ChatGPT, one of the most popular LLMs

currently, with the view of a domain expert.

The paper is structured as follows: section 2 de-

scribes the background for our work from enterprise

modeling (EM), LLMs, and the application potential

of LLMs in EM. Section 3 introduces the research

method applied in our work, followed by a systematic

literature review (SLR) in section 4. Section 5 de-

scribes the experiment and discusses the results. Sec-

tion 6 gives a conclusion and implications for future

work.

74

Görgen, L., Müller, E., Triller, M., Nast, B. and Sandkuhl, K.

Large Language Models in Enterprise Modeling: Case Study and Experiences.

DOI: 10.5220/0012387000003645

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 12th International Conference on Model-Based Software and Systems Engineering (MODELSWARD 2024), pages 74-85

ISBN: 978-989-758-682-8; ISSN: 2184-4348

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2 BACKGROUND AND RELATED

WORK

2.1 Large Language Models

LLMs belong to the broader category of deep learning

models, address the area of natural language process-

ing, and are designed to interpret and generate human-

like text. Essential concepts of LLMs and their evo-

lution have been widely documented, for example, in

the publication by Brown et al. (Brown et al., 2020).

The most influential architecture in recent times

for building LLMs is the Transformer. It uses at-

tention mechanisms (Vaswani et al., 2017) to weigh

the importance of different words or tokens in a se-

quence when producing an output. LLMs are trained

on vast amounts of text data to be able to gener-

ate coherent and contextually relevant text across a

wide range of topics. For instance, models like Ope-

nAI’s GPT (Generative Pre-trained Transformer) se-

ries have been trained on books, articles, and web

pages. The pre-training of LLMs is supposed to be

task-agnostic (Huang et al., 2022).

Capabilities of LLMs include tasks such as trans-

lation, question-answering, summarization, and text

generation without needing task-specific training

data. One of the most important features of mod-

els like GPT is their ability to generate coherent, di-

verse, and contextually relevant text over long pas-

sages. One of the currently most popular LLMs, Ope-

nAI’s GPT-4 with its Chatbot frontend - ChatGPT

1

can also be used for translation, grammar correction,

or email composition (Floridi and Chiriatti, 2020).

While LLMs are powerful, they can sometimes pro-

duce incorrect or nonsensical answers, which often

are termed “hallucinations”.

The use of LLMs starts from inputs (called

prompts) stating the task to be completed by the

LLM. LLMs are sensitive to the input phrasing.

Thus, prompt engineering and prompting methods

(Liu et al., 2023) have developed into a critical topic

of study for LLMs as they investigate the techniques

by which end-users can use LLMs to perform tasks.

2.2 Enterprise Modeling

EM is addressing the “systematic analysis and mod-

eling of processes, organization structures, products

structures, IT-systems or any other perspective rel-

evant for the modeling purpose” (Vernadat, 2003).

The role of EM is usually to provide methods, tools,

and practices for capturing and visualizing the current

1

https://chat.openai.com

(“as-is”) situation and developing the future (“to-be”)

situation. In particular, a model of the current situa-

tion forms one of the fundamentals for supporting the

future development of organizations. Without knowl-

edge of the “as-is”, a systematic design and develop-

ment of future capabilities, products, or services are

usually difficult.

The variety and dynamics of methods, languages,

and tools supporting EM are visible in work on re-

search roadmaps and future directions, originating

both from the information systems community (see,

e.g., (Sandkuhl et al., 2018)) and from scholars in in-

dustrial organizations (e.g., (Vernadat, 2020)).

Given the complexity of enterprises, in the course

of modeling an enterprise, there is the need to under-

stand, analyze, capture, and represent what is relevant

for different stakeholders and/or modeling purposes.

In this context, there seems to be an agreement in the

academic literature related to enterprise modeling that

a key feature of an enterprise model is that it includes

various perspectives. (Frank, 2014), e.g., states that “a

perspective as a psychological construct constitutes a

conception of reality, comparable to a particular view-

point in spatial perception [. . . ], which helps to reduce

complexity by constituting sense [. . . ].” EM projects

can have different purposes.

In some cases, making an EM activity is help-

ful when capturing, delimiting, and analyzing the

initial problem situation and deciding on a course

of action. In such cases, EM is mostly used as a

problem-solving and communication tool. The en-

terprise model created during this type of modeling

is used for documenting the discussion and the deci-

sions made. The main characteristics of this purpose

are that the company does not intend to use the models

for further development work and that the modeling

activity has been planned to be only a single iteration.

2.3 Application Potential of LLMs in

EM for Requirements Engineering

The potential of LLMs as a proxy for domain ex-

perts has been investigated by (Sandkuhl et al., 2023)

starting from the expected contribution of domain ex-

perts to EM. We consider this perspective as also suit-

able for our investigation of EM use in RE. (Stirna

and Persson, 2018) describe the role of domain ex-

perts in EM in general as “supplying domain knowl-

edge, knowledge about organization units involved

[. . . ]; examining and evaluating the results of enter-

prise modeling, and integration of modeling results of

different teams into a consistent whole.” In RE, these

contributions are required for different aspects of the

system to be developed, for example, the required

Large Language Models in Enterprise Modeling: Case Study and Experiences

75

Table 1: Potential application areas of LLMs in EM for Requirements Engineering.

Task of Domain Experts in RE

EM support for RE Supply of Domain Knowledge Integrate Modeling Results Evaluate Results

Model of current perspectives relevant for the models developed for the individual models

situation scope (e.g., goals, organisation, perspectives and inter-model

structure, process, products, integration

IT, resources)

Required changes potential changes; how realistic

and alternatives and accepted are they?

Model of future all perspectives relevant models developed for individual models

situation for the change specifying the change and inter-model

integration

functionality or organizational integration. For or-

ganizational integration, the different perspectives of

EM (see section 2.2) are useful. An analysis by (Ver-

nadat, 2020) showed that frequently used perspectives

are goals, organization structure, process, products,

and IT and resources.

In addition to different contributions expected

from domain experts and various perspectives, the

different modeling tasks in the course of RE require

different ways of participation from the domain ex-

perts. (Krogstie, 2016) concludes that the most rele-

vant modeling phases to be distinguished in this con-

text are scoping of the project, modeling of the current

situation, analysis of required changes and potential

alternatives, and modeling of the future situation. As

scoping usually has to be finished before starting RE,

we exclude this phase from our investigation.

The results of modeling the current situation have

to be examined by the domain expert for accuracy and

completeness. In the process of analysis and find-

ing alternatives, creativity in designing feasible and

acceptable changes is most important. In modeling

the future situation, the domain experts have to make

sure that the different perspectives add to a consistent

whole.

The above considerations result in a variety of

tasks that could potentially be supported by LLM. Ta-

ble 1 summarizes these tasks by showing the phases

of EM support for RE as rows and the different con-

tributions of domain experts as columns.

3 RESEARCH METHOD

The starting point of our work is the question pre-

sented in the introduction and the decision to focus

on supporting domain experts in the task of model-

ing the current situation. Based on this, two research

questions (RQ) were defined for the paper:

• RQ 1: How consistent and complete is the out-

put of ChatGPT compared to the knowledge of a

domain expert in the context of an EM project?

• RQ 2: How can prompt pattern improve the output

of ChatGPT?

The overall research strategy for work presented

in this paper is of an explorative nature, i.e., we aim

to gather new knowledge by exploring the potential of

ChatGPT use in EM. More concretely, the work com-

bines literature studies with quasi-experiments and

argumentative-deductive work.

The literature review objective was to identify rel-

evant studies and findings from other researchers to

consider when exploring the potential of LLMs for

use in EM. Kitchenhams’s SLR approach (Kitchen-

ham, 2004) was utilized for this purpose. Six steps

are suggested, which we briefly introduce below and

document in detail in section 4.

The first step is to develop the research questions

(RQ) to be answered by the SLR. The process of

paper identification begins with the definition of the

overall search space (step 2), which basically consists

of determining the literature sources to be considered

in light of the research questions. Paper identifica-

tion continues with the population phase (step 3). In

this step, the search string is developed and applied by

searching the literature sources. This is followed by

the paper selection step, in which inclusion and exclu-

sion criteria are defined, and relevant papers found in

the population phase (step 4) are manually selected.

The data collection phase (step 5) focuses on extract-

ing the information relevant to answering the research

question from the set of identified relevant papers.

The final step is data analysis and interpretation, i.e.,

answering the research question defined in step 1 us-

ing the collected data from relevant papers.

We structured the field of EM along with the tasks

to be performed during a project (see section 2.2).

This is the argumentative-deductive part of our work.

In our work, we conduct a quasi-experiment us-

ing ChatGPT and domain experts as the study objects.

MODELSWARD 2024 - 12th International Conference on Model-Based Software and Systems Engineering

76

The treatment is the task of eliciting required roles, le-

gal frame conditions, and resources for a process from

hospitality management. A quasi-experiment is “an

experiment in which units are not assigned to condi-

tions randomly” (Cook et al., 2002). The purpose of

the experiment is to conduct exploratory research to

answer the defined research questions rather than to

test a specific hypothesis. The experiment design is

described in detail in section 5.1.

4 SYSTEMATIC LITERATURE

REVIEW

Related work was identified through an SLR follow-

ing the six-step method proposed by Kitchenham (see

section 3). The research questions (step 1) were al-

ready introduced in section 3. Scopus, IEEE Xplore,

and AISeL databases constituted the search space

(step 2). The search string used in these databases

combines the term “Enterprise Modeling” with “large

language model” and its synonyms, such as “Process

Modeling”, “LLM”, “neural text”, and “ChatGPT”.

The final search string used was (“Enterprise Mod-

eling” OR “Process Modeling”) AND (“Large Lan-

guage Model” OR “LLM” OR “Neural Text” OR

“ChatGPT”). The search in title, abstract, and key-

words yielded 18 papers. The inclusion criterion (step

4) required that the papers discuss LLM use in the

context of EM.

Of the 6 results found in Scopus, 2 were excluded

due to their status as conference proceedings that con-

tained papers on either EM or LLM, but no papers

covered both topics in the same work. 2 papers used

synonyms in the title or abstract but did not address

the topic of the use of LLM in EM, and 1 paper ap-

peared but meant something else by the abbreviation

“LLM”. 1 paper was relevant to our work: (Simon

et al., 2023) describe systematic experiments on using

ChatGPT-3 to interpret a textual process description

and to convert it into a formal representation. This

work is not intended to substitute an expert or assist

in creating a new process description or model. How-

ever, an LLM is also used to support certain modeling

phases.

No results were found in IEEE Xplore.

In AISeL, the query interface only allowed for

search within all metadata. 11 of the 12 results did

not mention EM and LLM or synonyms for them to-

gether in title, abstract, or keywords. One paper was

found that is relevant to our work: In our previous

work (Sandkuhl et al., 2023), we investigated the po-

tential of LLMs as a proxy for domain experts, start-

ing from the role of domain experts in EM and their

expected contribution. The focus was on the prepara-

tion of RE in EM and the identification of alternatives

for change. While the scope was limited to support-

ing the role of the domain expert, the research in this

paper is expanded to include legal frame conditions

and resources of the process. The previous results

show that ChatGPT can work with domain experts

to improve productivity, completeness, and accuracy.

ChatGPT can assist during the preparation phase by

gathering comprehensive information on the applica-

tion domain as well as on general business processes

and their flow of information. However, the results

should not be considered complete, and an expert is

always needed for the specifics of a company.

Table 2 summarizes the number of papers found in

the different databases and the relevant ones. In con-

clusion, the SLR returned 1 paper (Sandkuhl et al.,

2023) addressing LLM use in EM, focusing on the

same phases of EM projects as our work. However,

we expanded the scope to include more aspects (le-

gal frame conditions and resources). (Simon et al.,

2023) are focusing on creating models based on ex-

isting knowledge. For this reason, we decided not to

consider the identified work to improve the output of

ChatGPT with prompt pattern (RQ2). However, parts

of our previous work (Sandkuhl et al., 2023) aim to

answer similar questions as we want to answer in this

work (RQ1). It was also identified that future work

should consider investigating tasks that cover a range

of specificity, from general to specific. This work in-

cludes an experiment with such tasks, so a compari-

son of the results could be interesting.

Table 2: Results of the SLR.

Database Results Relevant Papers

Scopus 6 (Simon et al., 2023)

IEEE Xplore none none

AISeL 12 (Sandkuhl et al., 2023)

5 EXPERIMENT

5.1 Experiment Design

This section presents the experiment design of this

work. First, an overview of the modeling task to be

solved by ChatGPT is given. In this context, how the

results of ChatGPT are compared to those of the do-

main expert is also outlined. Then, the sequence of

prompts identified in this work to obtain a business

process model of ChatGPT is shown.

Large Language Models in Enterprise Modeling: Case Study and Experiences

77

5.1.1 Modeling Task

The task described below is intended to explore the

potential of using ChatGPT in the context of EM.

Specifically, the task involves the planning of a hypo-

thetical corporate event. Targeted and precise ques-

tions will be posed to ChatGPT in order to gain an

in-depth understanding of the diversity and complex-

ity of existing processes, roles, resources, and legal

frame conditions within an enterprise. The hypotheti-

cal event used as a testing ground is a corporate meet-

ing. The meeting is scheduled to start at noon and

end with a celebration in the evening. This experi-

ment focuses on supporting the domain experts’ role

in modeling the current situation (see section 2.3).

It starts with the survey of ChatGPT. The answers

obtained serve as the basis for developing an initial

business process model. In a subsequent step, a do-

main expert is consulted and confronted with the same

questions. Together with the expert, a new business

process model is then developed for the same event.

To conclude the investigation and to fully round

out the experiment, the model generated by ChatGPT

is subjected to a thorough analysis. Under the guid-

ance and with the technical support of the domain ex-

pert, the model will be examined for possible errors

or weaknesses. Here, not only is a comprehensive er-

ror analysis to be performed but also the uncovering

and highlighting of potential improvement opportuni-

ties is of central importance. This phase of the exper-

iment thus forms a comprehensive evaluation of the

suitability of ChatGPT as a proxy for a domain expert

in EM.

5.1.2 Comparison of ChatGPT and Domain

Expert

The comparison of the business process models is per-

formed in a systematic and controlled manner. This

ensures that a direct and meaningful analysis is pos-

sible between the model created using ChatGPT and

the model developed in collaboration with the domain

expert.

In the first step, the domain expert is interviewed.

The same questions that were previously asked in

ChatGPT are used here. The domain expert’s answers

serve as the basis for developing a business process

model that addresses the same context as the model

created with ChatGPT. After both models have been

created, the domain expert evaluates them. Here, the

two models (ChatGPT vs. domain expert) are jux-

taposed and evaluated with the help of various met-

rics. This systematic approach enables an objective

and comprehensive evaluation and provides valuable

insights into the strengths and weaknesses of model

development using ChatGPT compared to traditional

model development of a domain expert.

The following section presents the methodology

for evaluating and comparing the business process

model developed using ChatGPT with a business pro-

cess model created by a domain expert. Here, four

key metrics are applied to evaluate different aspects

of the models:

• Accuracy aims to determine the correctness of

the information provided by ChatGPT and the do-

main expert with respect to several issues (e.g.,

correct, out of scope, or hallucination). It is an

important indicator of the reliability of the gener-

ated business process models.

• Completeness is intended to determine the extent

to which the responses provided comprehensively

cover all necessary information. If any missing

information is identified, it is checked to see if it

is required or optional. A high level of complete-

ness ensures that all relevant aspects of the busi-

ness process are included in the model.

• Comprehensibility assesses the ease with which

the responses can be interpreted and understood

by those conducting the experiment. This is cru-

cial to ensure that the models are clear and com-

prehensible to all participants.

• Time captures the duration that both the domain

expert and ChatGPT need to provide the required

information. This is an important indicator of the

efficiency of model building and may have an im-

pact on the practicality of the approach.

5.1.3 Prompt Engineering

Achieving meaningful and optimal results in working

with ChatGPT requires careful design and formula-

tion of appropriate input requirements (prompts). An

input prompt represents a sequence of instructions or

directives that are used to guide and control the LLM.

By specifically formulating these input prompts, the

model can be programmed to generate certain re-

sponses or to improve and refine its response capa-

bilities in specific ways (Liu et al., 2023).

The focus of the following section is on the area of

“prompt engineering”, a process by which LLMs can

be programmed and controlled by providing carefully

designed prompts (White et al., 2023). This is fol-

lowed by a presentation of input prompts, which in-

cludes the context of the situation as well as the issues

involved in developing a business process model. The

prompts were entered in German.

The prompt engineering in this work can be di-

vided into three phases:

MODELSWARD 2024 - 12th International Conference on Model-Based Software and Systems Engineering

78

1. Input Refinement: Using ChatGPT-4, we en-

riched our formulated questions to improve the

output.

2. Output of the Process Description: We used the

enriched questions in ChatGPT-4 to get the infor-

mation about the process.

3. Textual Description of the Model: Finally, we

asked ChatGPT-4 to provide a textual description

of the model.

The foundation of the discussed prompt stems

from the work outlined in (White et al., 2023). To

enhance the understanding of the subject matter and

emphasize the significance of the research findings,

this summary highlights the patterns utilized here to

enhance communication with an LLM:

• Question Refinement: ChatGPT is actively in-

volved in the prompt engineering process. The

pattern enables ChatGPT to optimize the ques-

tions asked by the user in order to obtain addi-

tional information or to fill any gaps in under-

standing.

• Cognitive Verifier: Helps ChatGPT to better un-

derstand the intention of the question. The goal

is to encourage ChatGPT to decompose the cur-

rent question into additional questions to provide

a more precise answer.

• Persona: Allows the LLM to be given a specific

point of view or perspective (in this case, an expert

in EM).

• Reflection: This pattern is used to provide an au-

tomatic reasoning for the given answers. This al-

lows for a better assessment of the validity of the

output and provides insight into how ChatGPT ar-

rived at a particular answer.

Applying the patterns has equipped ChatGPT with

the requisite communication methods necessary for

more nuanced and precise interactions with users.

This includes the ability to refine inquiries, take vary-

ing perspectives, reflect on response processes, and

manage contextual reference points within ongoing

conversations.

After integrating these patterns, it becomes criti-

cal to specify the most relevant context possible. This

context allows the system to develop a clear under-

standing of the current situation and lays the founda-

tion for the subsequent conversation. In this context,

providing a precise and comprehensive description of

the situation plays a vital role in enabling ChatGPT

to concentrate on relevant aspects and generate a suit-

able response.

During the 1. Input Refinement phase, our aim

is to gain precise comprehension of ChatGPTs eval-

uations and perspectives to develop a sophisticated

business process model. It is critical to use carefully

worded questions to obtain an accurate assessment

of ChatGPT’s capabilities and responses. With the

intent of conducting such an in-depth assessment, a

series of specific questions were formulated and di-

rected to ChatGPT. These questions were designed

to explore various aspects of ChatGPT’s performance

and behavior, highlighting the nuances and complexi-

ties of the interaction. The questions were extensively

edited and expanded using two of the above patterns

(marked with square brackets in the prompt) within

an earlier dialog with ChatGPT. This methodological

adaptation served to ensure a better formulation and

selection of questions, thus generating better results

in the subsequent research:

“[Question Refinement:] If I ask a question and

you find a better wording that could avoid possible

misunderstandings, suggest this improved version of

the question. Also, think of additional questions that

could help me design a more accurate business pro-

cess model. [Cognitive Verifier:] Think of an addi-

tional one to three questions that will help you pro-

vide a more accurate answer. After answering the ad-

ditional questions, combine the answers to provide a

final answer to my original main question. [Ques-

tions:] (...)”

We then created the main prompt for the 1. Input

Refinement phase, which only required one interac-

tion in the dialog with ChatGPT:

“[Persona:] You are now acting as a domain ex-

pert in the field of enterprise modeling. I would like

to design a business process model for an event. Use

your expert knowledge as an enterprise modeler to

improve the questions I ask you. [Question Refine-

ment:] If I ask a question and you find a better word-

ing that could avoid possible misunderstandings, sug-

gest this improved version of the question. Also, think

of additional questions that could help me design a

more accurate business process model. [Context:]

Here is the context for the event: A company wants

to plan a conference in an exhibition hall that starts

at 12 noon. The conference should last until 6 pm and

be rounded off with an appropriate evening event after

6 pm until 11 pm at the latest. Answer the following

questions from the perspective of the most responsible

person. Around 200 guests are expected. During the

conference and the celebration, a catering company

will be hired to take care of all the food and drinks.

An average budget is available for the event. [Ques-

tions:]

1. What are the specific roles and responsibilities in

the planning and execution of such an event? Who

is typically responsible for what?

Large Language Models in Enterprise Modeling: Case Study and Experiences

79

2. What resources are typically required to success-

fully execute such an event?

3. What are the processes involved in such a meet-

ing?

4. What is a typical schedule for the day of the event?

What happens at what time, and who is responsi-

ble?

5. How will the number and type of personnel re-

quired be determined? How are the work sched-

ules and tasks of the staff organized?

6. How will the process of procuring ingredients and

materials for the event be controlled?

7. What special regulations and safety requirements

must be observed in the planning and execution of

such an event?

8. What follow-up activities are necessary after the

event (e.g., cleaning, evaluation, accounting with

suppliers and partners)?”

The results of this query can be seen in the prompt

for the creation of the business process model. Some

questions were rephrased (e.g., questions 1 and 8) or

made more specific (e.g., questions 3 and 4 ) in this

process. In addition, two new questions were added

(questions 2 and 10). We used an additional dialog

with ChatGPT for the phase 2. Output of the Process

Description, which required four interactions:

User (1): “[Persona:] You are now acting as a

domain expert in the field of enterprise modeling. Use

your expert knowledge as an enterprise modeler to

model a company based on the following situation

and answer my questions to the best of your abil-

ity. [Reflection:] When answering a main question,

please explain the reasoning and assumptions behind

your answer. You should also address any ambiguities

or limitations in your answer. Answer the questions

in as much detail as possible. Do not answer in gen-

eral terms, but be specific to the given context for the

planned event. In the next message, I will give you the

context of the event that you are supposed to model.

Did you understand that?”

ChatGPT: “Yes, I have understood that. I look

forward to modeling the proposed company based on

the situation provided. Please provide the context for

the company you would like modeled.”

User (2): “[Context:] A company would like to

plan a conference in an exhibition hall that starts at

12 noon. The conference is to last until 6 p.m. and be

rounded off with an appropriate evening event after 6

p.m. until 11 p.m. at the latest. Answer the following

questions from the perspective of the most responsi-

ble person. 200 participants are expected. During

the conference and the celebration, a catering com-

pany will be hired to take care of all the food and

drinks. An average budget is available for the event.

Your task is to find out which players, resources, and

processes are needed for such an event. Your task is to

answer my questions about these players, resources,

and processes. In the next message, I will ask you

questions about the event, and we will try to create a

business process model together. Did you understand

that?”

ChatGPT: “Yes, I have understood that. It sounds

like an interesting task, and I am ready to answer

your questions and create a business process model.

Please ask your questions, and we can start the mod-

eling process.”

User (3): Here are my questions:

1. What specific responsibilities must be taken into

account when planning and implementing such an

event, and what tasks usually fall within their ar-

eas of responsibility?

2. Which actors are still present in addition to the

main responsible roles? Which processes fall

within their areas of responsibility?

3. What resources are needed to successfully plan

and execute an event of this size and nature?

4. What specific processes and procedures should be

considered during the planning, execution, and

follow-up of the event?

5. What might a detailed schedule for the day of the

event look like, including responsibilities and tim-

ing for the various activities?

6. On the basis of which criteria is the required num-

ber and type of personnel determined, and how

are the work plans and tasks of the personnel or-

ganized and coordinated?

7. How is the process of procuring resources for the

event efficiently managed and coordinated?

8. What special legal regulations and safety require-

ments must be observed and complied with when

planning and holding such an event?

9. Which post-event activities are necessary, and

how can they be carried out effectively and effi-

ciently (e.g., cleaning, evaluation, billing suppli-

ers and partners)?

10. How is communication and coordination between

the various stakeholders (e.g., staff, catering com-

panies, guests) ensured?”

Using the Persona and Reflection Pattern in con-

junction with the extended questions provides a sys-

tematic approach to building and reflecting on the

model to effectively align it with the requirements of

the business process.

MODELSWARD 2024 - 12th International Conference on Model-Based Software and Systems Engineering

80

5.2 Results

This section presents the results of the experiment.

First, the output of ChatGPT is given in section 5.2.1,

followed by the comparison of the business process

models in section 5.2.2. Section 5.2.3 is a discussion

on the use of ChatGPT in the area of EM.

5.2.1 ChatGPT Output

This section describes the output of ChatGPT using

the developed prompt described in section 5.1.3 (2.

Output of the Process Description). The output is

displayed and divided according to the questions

regarding the process, roles, resources, and legal

frame conditions. We then asked for the textual

description of the process model.

1. Specific Responsibilities and Tasks

• Event Manager: Is responsible for the overall or-

ganization and planning of the event. This in-

cludes setting the budget, booking the venue, hir-

ing service providers (such as catering, security,

and cleaning), creating the schedule, and coor-

dinating communication between all parties in-

volved.

• Technical Director: Is responsible for the techni-

cal aspects of the event, such as the sound sys-

tem, lighting, video technology, and stage con-

struction.

• Security Director: Responsible for creating and

implementing safety plans, including evacuation

plans, fire code compliance, and general partici-

pant safety.

• Marketing: Responsible for promoting the event,

creating marketing materials, maintaining social

media and website, and communicating with par-

ticipants.

2. Other Players

• Catering Company: Responsible for providing

food and drinks during the meeting and evening

event.

• Cleaning Company: Responsible for cleaning be-

fore, during, and after the event.

• Suppliers: Supply necessary equipment, such as

furniture, technology, decoration, etc.

• Participants: Are the target audience of the event.

3. Required Resources

• Venue: An exhibition hall large enough to accom-

modate 200 participants.

• Technical Equipment: Audio-visual equipment,

microphones, lighting, stage, etc.

• Staff: Technicians, security staff, cleaning staff,

event managers, PR/marketing staff.

• Food and Drinks: Provided by the catering com-

pany.

• Decoration: On the design of the venue.

4. Specific Processes and Procedures

• Planning Phase: Selecting and booking the

venue, setting the budget, hiring staff and service

providers, and creating the schedule.

• Implementation Phase: Setting up the equipment

and decorations, running the event, monitoring

the safety and well-being of participants, provid-

ing food and drinks, and ongoing cleaning.

• Follow-up Phase: Dismantling and cleaning, eval-

uation of the event, accounting with service

providers and partners.

5. Proposal for a Detailed Schedule

• 07:00 - 10:00: Setup Technology and Decoration

• 10:00 - 12:00: Last Checks and Safety Briefings

• 12:00 - 18:00: Implementation of the Event,

Catering Provides Food and Drinks

• 18:00 - 23:00: Implementation of the Evening

Event, Catering Continues to Provide Food and

Drinks

• 23:00 - 01:00: Dismantling and Cleaning

6. Number and Type of Staff

• Staffing levels and types are determined based on

the scope of the event, the number of participants,

and the specific requirements of the event. Staff

work schedules and tasks are organized and coor-

dinated by the event manager.

7. Resource Procurement

• The procurement process is managed by the event

manager and/or a dedicated procurement man-

ager. This includes selecting and negotiating with

suppliers, tracking deliveries, and managing stock

and inventory.

8. Legal Frame Conditions

• When planning and staging such an event, vari-

ous legal regulations must be observed, including

fire protection regulations, noise protection reg-

ulations, hygiene regulations (especially with re-

gard to catering services), data protection regu-

lations, and possibly regulations on serving and

consuming alcohol.

Large Language Models in Enterprise Modeling: Case Study and Experiences

81

9. Post-Event Activities

• Post-event activities include dismantling and

cleaning the venue, evaluating the event (e.g.,

through surveys of participants), settling accounts

with suppliers and partners, and documenting and

analyzing the event for future events.

10. Communication

• Communication and coordination between the

various stakeholders are mainly ensured by the

event manager, often supported by communica-

tion tools and platforms. This may include reg-

ular meetings and updates, clear communication

guidelines and channels, and dedicated communi-

cation or project management software where ap-

propriate.

Afterward, we asked for a textual description of a

business process model (3. Textual Description of the

Model) using the business process model and notation

(BPMN):

User (4): “Create a textual description for a busi-

ness process model for the entire event (before the

event, during the event, after the event) using BPMN.

Include all processes and actors in the event. There

are several actors in the notation. Each process in

the event must be associated with an actor. Repre-

sent each process of the event in this model. If some

processes also require resources, point them out in

the respective processes. The processes must also be

linked to other processes. Try to break down the pro-

cess as detailed as possible. In addition, show me the

connection to the further processes for each process

mentioned. Assign IDs to the processes so that your

assignment is unique. Each process must be linked to

at least one other process.”

The answers were divided into before, during,

and after the event, and the processes were assigned

to the different roles. Based on this, we were able to

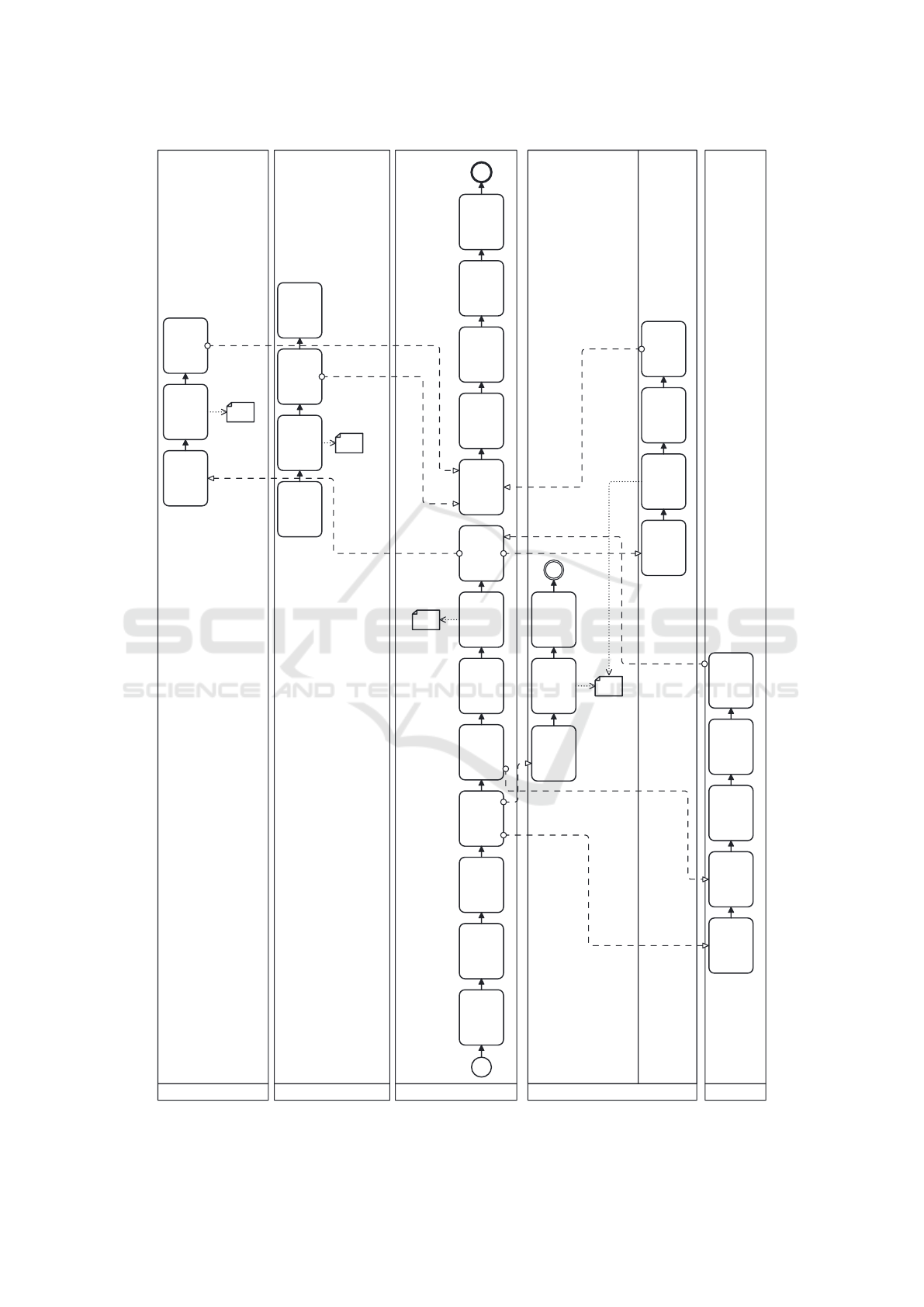

develop the business process model (see Figure 1).

The following is an example output for the role of

Event Manager before and after the event:

Before Event:

• Process 1.1: Event starts

• Process 1.2: Selection and booking of the venue

→ Connected with Process 1.3

• Process 1.3: Budget setting → Connected with

Process 1.4

• Process 1.4: Hiring staff and service providers →

Connected with Process 1.5, Process 2.1, Process

3.1

• Process 1.5: Creation of a schedule → Connected

with Process 1.6, Process 3.2

• Process 1.6: Coordination of communication with

service providers → Connected with Process 1.7

• Process 1.7: Organisation of procurement (Re-

source: Inventory) → Connected with Process 2.2

• Process 1.8: Event planning completed → Con-

nected with Process 4.1, Process 5.1

After Event:

• Process 7.1: Event ends → Connected with Pro-

cess 4.4, Process 5.3, Process 6.3, Process 7.2

• Process 7.2: Selection and booking of the venue

→ Connected with Process 1.3

• Process 7.3: Event evaluation → Connected with

Process 7.1, Process 7.3

• Process 7.4: Documentation and analysis of the

event → Connected with Process 7.3, Process 6.4

• Process 7.5: Event follow-up completed → Con-

nected with Process 7.4

5.2.2 Comparison of the Models

As described above, the assessment is based on four

essential parameters: Accuracy, Completeness, Com-

prehensibility, and Time.

Regarding the Accuracy of ChatGPT, significant

errors in the created connections are revealed. Pro-

cesses that, upon closer inspection, were considered

to have been initiated for no reason came to light (e.g.,

Process 6.1). In addition, it was recognized that Chat-

GPT neglected the temporal sequencing of processes,

which is a fundamental violation of BPMN. Despite

the identified flaws, the results of ChatGPT and the

model created were overall comprehensible.

In terms of Completeness, ChatGPT showed a

stronger focus on the execution of the event, while

the domain expert placed more weight on the plan-

ning phase, which usually makes up the bulk of such

an event. Further, the domain expert placed consid-

erable focus on communication between roles, par-

ticularly between customers and responsible parties,

while ChatGPT omitted customers or participants

from the model entirely. Additionally, the domain ex-

pert incorporated an additional role for support staff

that was left out of ChatGPT’s modeling. In gen-

eral, the model created by ChatGPT was less detailed,

which was reflected in the integration of the few re-

sources associated with the processes. In addition,

ChatGPT was strongly oriented toward the supporting

questions posed in the prompt and integrated fewer

branches for different alternatives and end states.

Both subjects showed high Comprehensibility, al-

though ChatGPT occasionally caused confusion due

to illogical links (e.g., Process 6.3 → Process 7.1),

MODELSWARD 2024 - 12th International Conference on Model-Based Software and Systems Engineering

82

Catering Company

Process 5.1

Event starts

Process 5.2

Provision of

food and

beverages

Process 5.3

Catering

completed

Event Manager

Process 1.1

Event planning

starts

Process 1.2

Selection and

booking of the

venue

Process 1.3

Budget setting

Process 1.4

Hiring staff and

service

provders

Process 1.5

Creation of a

schedule

Process 1.6

Coordination of

communication

with service

providers

Process 1.7

Organisation of

Procurement

Process 1.8

Event planning

completed

Process 7.1

Event ends

Process 7.2

Event

Evaluation

Process 7.3

Billing with

service

providers and

partners

Process 7.4

Documentation

and analysis of

the event

Process 7.5

Event follow-up

completed

Cleaning Staff

Process 6.2

Cleaning while

event

Process 6.3

Cleaning after

event

Process 6.1

Event starts

Process 6.4

Cleaning

completed

Technique

Technical Staff Technical Director

Process 4.1

Event starts

Process 2.1

Technical

planning starts

Process 4.2

Technique

build-up and

check

Process 4.3

Technical

support during

event

Process 4.4

Technique

dismantling

Process 2.2

Selection and

Procurent of

the technique

Process 2.3

Planning of

build-up and

technique

checks

Marketing

Process 3.1

Marketing

planning starts

Process 3.2

Creation of

marketing

materials

Process 3.3

Update website

and social

media

Process 3.4

Communication

with participants

Process 3.5

Marketing plan

completed

Start

Inventory

End

Cleaning

equipment

Technical Devices

Technical

planning

completed

Food and drinks

Figure 1: Business process model created with ChatGPT.

Large Language Models in Enterprise Modeling: Case Study and Experiences

83

elements, and contradictions. Nevertheless, model-

ing with ChatGPT was considered somewhat easier

because all processes, roles, and resources were ac-

curately described and linked. In contrast, the do-

main expert left more room for interpretation. Due

to the domain expert’s extensive knowledge, he be-

came aware of additional aspects of a previous topic

at a later stage, which made it slightly more difficult

to understand.

With regard to Time, the study showed that the ac-

tual modeling with the domain expert could be com-

pleted much more quickly while waiting for an ap-

pointment took more time. In contrast, ChatGPT is

available immediately. In addition, a significant time

advantage could be achieved through the use of pre-

built templates and targeted prompt engineering.

5.2.3 On the Use of ChatGPT in Enterprise

Modeling

The results of the comparison (see section 5.2.2) re-

flect many different facets. It was recognized that

the actual added value of ChatGPT depends on the

complexity of the modeling task and the prompt. Al-

though the generated model of ChatGPT is not as ex-

pressive, it still proves to be useful for simpler com-

prehension questions for those without expertise.

The use of a variety of patterns and prompts has

shown that this can increase the effectiveness of the

results. At the same time, however, difficulties in un-

derstanding the specific requirements became appar-

ent. In addition, it was found that ChatGPT is able

to generate process models even though they are not

yet available in graphical form and are not completely

semantically correct.

The experiment showed that the limitations of

ChatGPT are mainly in the following areas:

• There are limitations in verifying the accuracy of

information: ChatGPT is based on trained data

and can potentially provide false or misleading in-

formation. It is not able to verify facts or check

the accuracy of information like a knowledgeable

human. However, using the Input Refinement pat-

tern is a starting point to improve the output.

• There is limited understanding of context: Chat-

GPT may have difficulty grasping the full context

of a question or conversation. This can lead to

inconsistent or inaccurate responses, especially if

the context is complex or ambiguous.

• Sensitivity to input variations: The smallest

changes in the wording of a question can lead to

different answers. ChatGPT is sensitive to nu-

ances and word choice, potentially yielding incon-

sistent results.

• It is not possible to express uncertainty: ChatGPT

tends to present answers with some conviction,

even if it is uncertain. It cannot express uncer-

tainty or lack of knowledge, which can lead to

misleading or inaccurate information.

It is obvious that the results of this study indicate

that ChatGPT can already be used in EM as a sup-

porting tool. In summary, and with respect to RQ1, it

proves our assumption (see section 2.3 and (Sandkuhl

et al., 2023)) that it helps more with general ques-

tions than with specific ones. ChatGPT, for example,

can provide novices with a rudimentary understand-

ing when familiarizing themselves with a subject area.

However, it is important to emphasize that ChatGPT

can by no means completely replace a domain expert,

as the results of this study reveal.

The part 1. Input Refinement led to an overall im-

provement of ChatGPT’s outputs and thus provided

good insight into how to use prompt patterns effec-

tively (RQ 2). Nevertheless, more and more errors

or deficiencies occurred in detailed questions in the

2. Output of the Process Description phase. In ad-

dition, problems occurred in 3. Textual Description

of the Model, especially when it came to relations

or resources. Thus, it can be concluded that general

aspects are better supported in the modeling of the

current situation than specific ones regarding the EM

support for RE.

To use ChatGPT more effectively, targeted work

on prompts and providing even better and more sig-

nificant context is needed. This can be done by de-

veloping a specific guide in collaboration with do-

main experts, which can then be used repeatedly to in-

tegrate understanding and knowledge into ChatGPT.

Only then should consideration be given to which in-

teractions could be automated.

6 CONCLUSIONS AND FUTURE

WORK

This work has shown that ChatGPT can be a useful

tool in enterprise modeling. Especially for beginners,

ChatGPT offers the possibility to develop a basic un-

derstanding of different topics and help aspiring do-

main experts identify missing aspects. However, it is

important to note that ChatGPT cannot be seen as a

proxy for a domain expert in our specific case.

ChatGPT can assist in compensating for human

errors through a synthetic approach, working along-

side domain experts to complement their explana-

tions. The benefit is also a more efficient utilization of

the domain expert’s time. However, it is important to

MODELSWARD 2024 - 12th International Conference on Model-Based Software and Systems Engineering

84

critically scrutinize all statements provided by Chat-

GPT to ensure accuracy and objectivity. Although

ChatGPT provides valuable support, the expertise and

knowledge of human domain experts are still crucial.

While our research has led to a number of results,

it also has many limitations that identify some aspects

for future work. The development of the prompts is

mainly based on the application of existing patterns

rather than on systematic development. It is possible

that the prompts could be improved to provide a more

relevant and complete output. As ChatGPT was inten-

tionally used without prior knowledge of the domain

in this work, it would be interesting to investigate to

what extent the expert’s knowledge (e.g., the model)

can be emulated by subsequent prompt engineering.

Since our results are based on only one experi-

ment, further research is needed to make them gen-

eralizable. Future work should consider additional

patterns and focus more on evaluating the resulting

changes in responses. The ChatGPT response-based

process model is founded in textual descriptions. It

is recommended to try to generate the model in an

appropriate visual modeling language. In addition

to evaluating how responses vary based on different

prompts, future studies should also aim to investigate

their potential usefulness for other LLMs.

It is crucial to verify if other domain experts pro-

vide identical evaluations on this topic. Additionally,

there is a need to explore whether the quality of Chat-

GPT’s output changes when considering other phases

of an EM project or targeting other application areas

or model types.

Improving the accuracy and quality of ChatGPT

results is of great importance. This can be achieved

by developing methods for verifying correctness and

a better understanding of the context. In addition, col-

laboration with domain experts and optimization of

the interaction between humans and AI models offer

promising approaches for further improving ChatGPT

and enhancing its performance.

Overall, the use of ChatGPT in enterprise model-

ing opens promising opportunities but also presents

challenges and limitations. With further research and

consideration of the identified limitations, ChatGPT

can be better integrated into the enterprise context in

the future to provide valuable support. This paper’s

contribution highlights the significance of further re-

search in this area.

REFERENCES

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan,

J. D., Dhariwal, P., Neelakantan, A., Shyam, P., Sas-

try, G., and Askell, A. et al. (2020). Language models

are few-shot learners. In Advances in Neural Infor-

mation Processing Systems, volume 33, pages 1877–

1901. Curran Associates, Inc.

Cook, T. D., Campbell, D. T., and Shadish, W. (2002). Ex-

perimental and Quasi-experimental Designs for Gen-

eralized Causal Inference. Houghton Mifflin Boston,

MA.

Floridi, L. and Chiriatti, M. (2020). GPT-3: Its nature,

scope, limits, and consequences. Minds and Ma-

chines, 30:681–694.

Frank, U. (2014). Multi-perspective enterprise modeling:

Foundational concepts, prospects and future research

challenges. Software & Systems Modeling, 13(3):941–

962.

Huang, W., Abbeel, P., Pathak, D., and Mordatch, I. (2022).

Language models as zero-shot planners: Extracting

actionable knowledge for embodied agents. In In-

ternational Conference on Machine Learning, pages

9118–9147. PMLR.

Kitchenham, B. (2004). Procedures for performing sys-

tematic reviews. Keele, UK, Keele University,

33(2004):1–26.

Krogstie, J. (2016). Quality of Business Process Models.

Springer.

Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., and Neubig,

G. (2023). Pre-train, prompt, and predict: A system-

atic survey of prompting methods in natural language

processing. ACM Computing Surveys, 55(9):1–35.

Sandkuhl, K., Barn, B., and Barat, S. (2023). Neural text

generators in enterprise modeling: Can ChatGPT be

used as proxy domain expert? In 31st International

Conference on Information Systems Development.

Sandkuhl, K., Fill, H.-G., Hoppenbrouwers, S., Krogstie, J.,

Matthes, F., Opdahl, A., Schwabe, G., Uludag,

¨

O., and

Winter, R. (2018). From expert discipline to common

practice: A vision and research agenda for extending

the reach of enterprise modeling. Business & Infor-

mation Systems Engineering, 60:69–80.

Simon, C., Haag, S., and Zakfeld, L. (2023). Experiments

on GPT-3 assisted process model development. In

37th ECMS International Conference on Modelling

and Simulation (ECMS 2023), page 270 – 276.

Stirna, J. and Persson, A. (2018). Enterprise modeling.

Cham: Springer.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I.

(2017). Attention is all you need. Advances in Neural

Information Processing Systems, 30.

Vernadat, F. (2003). Enterprise modelling and integration:

From fact modelling to enterprise interoperability. En-

terprise inter- and intra-organizational integration:

Building International Consensus, pages 25–33.

Vernadat, F. (2020). Enterprise modelling: Research review

and outlook. Computers in Industry, 122:103265.

White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert,

H., Elnashar, A., Spencer-Smith, J., and Schmidt,

D. C. (2023). A prompt pattern catalog to enhance

prompt engineering with ChatGPT. arXiv preprint

arXiv:2302.11382.

Large Language Models in Enterprise Modeling: Case Study and Experiences

85