ConEX: A Context-Aware Framework for Enhancing Explanation Systems

Yasmeen Khaled and Nourhan Ehab

German University in Cairo, Cairo, Egypt

Keywords:

Context, Context-Sensitive Explainability, User-Centered Explainability.

Abstract:

Recent advances in Artificial Intelligence (AI) have led to the widespread adoption of intricate AI models, rais-

ing concerns about their opaque decision-making. Explainable AI (XAI) is crucial for improving transparency

and trust. However, current XAI approaches often prioritize AI experts, neglecting broader stakeholder re-

quirements. This paper introduces a comprehensive context taxonomy and ConEX, an adaptable framework

for context-sensitive explanations. ConEX includes explicit problem-solving knowledge and contextual in-

sights, allowing tailored explanations for specific contexts. We apply the framework to personalize movie rec-

ommendations by aligning explanations with user profiles. Additionally, we present an empirical user study

revealing diverse preferences for contextualization depth in explanations and underscoring the importance of

catering to these preferences to foster trust and satisfaction in AI systems.

1 INTRODUCTION

There is no doubt that the field of Artificial Intelli-

gence (AI) has witnessed remarkable advancements

in recent decades, resulting in the widespread deploy-

ment of complex AI models across various domains.

However, concerns about the black-box decision-

making processes of these models have escalated.

This has sparked a growing interest in Explainable AI

(XAI), essential for enhancing trust and transparency

within AI systems. Regulatory reforms, including the

General Data Protection Regulation (GDPR) in Eu-

rope and initiatives like DARPA’s Explainable AI re-

search program in the USA, have further accelerated

this interest (Gunning and Aha, 2019).

Explainability seeks to make AI outcomes under-

standable to users (Schneider and Handali, 2019).

Unfortunately, current XAI approaches often prioritize the needs of AI experts, neglecting a broader spectrum of stakeholders (Srinivasan and Chander, 2021). Different stakeholders

have diverse expectations for explanation complexity

and presentation formats. Recent research explores

question-driven designs in XAI systems to better cater

to users’ requirements (Liao et al., 2021). The idea

of delivering explanations in a conversational manner

through dialogue interfaces has also emerged as a so-

cial process (Malandri et al., 2023). Some approaches

focus on explainers extracting human-understandable

input features (Apicella et al., 2022). However, these

approaches tend to overlook the contextual dimension

of explanations. An explanation may be intelligible

in certain contexts but lack relevance in others. For

instance, a system predicting diabetes risk may pro-

vide an explanation that a nine-year-old user will de-

velop diabetes due to age, which is considered out-

of-context and not aligned with factual knowledge

about the disease. There is a critical need to concep-

tualize explainability considering context, audience,

and purpose (Robinson and Nyrup, 2022). Moreover,

existing approaches often propose new implementa-

tions for explainers instead of utilizing them in a user-

centric manner without altering their core functional-

ity. An explainer-agnostic approach to contextualiz-

ing explanations, adaptable to any existing explainer,

appears to be absent.

The paper argues that constructing a good ex-

planation involves two fundamental aspects: (1) ex-

plicit knowledge considered during problem-solving

for transparency and (2) contextual knowledge sur-

rounding the instance, providing a frame of reference

for tailoring explanations. Currently, there’s no com-

prehensive taxonomy outlining contextual knowledge

elements for explaining instances (Brézillon, 2012).

To address these gaps, we present a novel tax-

onomy of context, offering guidance on key consid-

erations for creating context-sensitive explanations.

We then introduce a general framework, "ConEX", as a roadmap for developing context-sensitive explanations using our context taxonomy with any state-of-the-art post-hoc explainer. Finally, we apply the ConEX design to build a prototype for context-sensitive explanations in movie recommendations.

Khaled, Y. and Ehab, N.

ConEX: A Context-Aware Framework for Enhancing Explanation Systems.

DOI: 10.5220/0012385300003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 699-706

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

This paper is structured as follows: Section 2

presents the proposed context taxonomy, Section 3 in-

troduces the ConEX framework, and Section 4 show-

cases its application in movie recommendations. In

Section 5, we present a user study measuring the ef-

fect of our prototype on different constructs of trust,

followed by concluding remarks in Section 6.

2 CONTEXT TAXONOMY

Context encompasses information characterizing the

situation of an entity, including people, places, or ob-

jects relevant to user-system interactions (Dey, 2009).

To reach context-sensitive explainability, we present

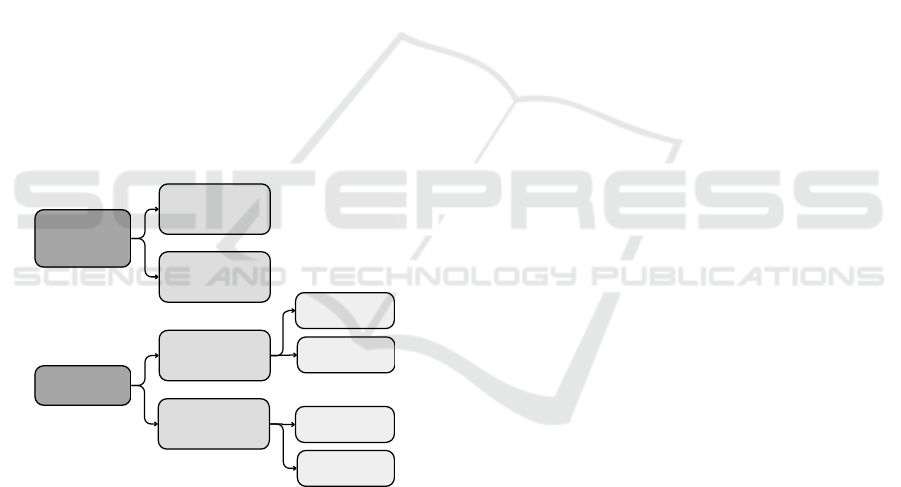

a systematic taxonomy, decipted in Figure 1, that dis-

sects various context dimensions serving as a concep-

tual roadmap for understanding context-sensitive ex-

planations and positing that context comprises static

and dynamic aspects.

Figure 1: Proposed Context Taxonomy. Context divides into Static (Pragmatic) aspects (Domain Knowledge, System Information) and Dynamic aspects (Stakeholder Model: Identification, Mental Model; Cognitive Context: Situational, Historical).

2.1 Static Aspects

Our exploration begins with static context, the un-

changing foundation for the problem at hand, consist-

ing of two key components: domain knowledge and

system information.

Domain knowledge, curated by experts, consti-

tutes a repository of facts within a domain, such as

details about movies, actors, directors, and relevant

information in a movie recommendation system. In a

diagnostic AI system, it includes critical information

about risk factors and disease interrelationships. This

static context ensures that explanations align with the

foundational knowledge of the domain.

System information encompasses definitions of

system features and the significance of user interac-

tions. For a movie rating system, it distinguishes the

importance of a 5-star rating from a 4-star one and

may include user attributes like age groups or postal

codes, facilitating user clustering. These insights en-

able the system to cater to the unique needs of differ-

ent user groups.

2.2 Dynamic Aspects

The dynamic context involves two entities: the stake-

holder model and the cognitive context.

The stakeholder model introduces dynamic per-

sonas, acknowledging that stakeholders evolve with

each interaction. It involves stakeholder identifica-

tion, capturing attributes like role and age, and un-

derstanding each stakeholder’s objectives for person-

alized explanations. The stakeholder’s mental model,

shaped by experiences, holds tacit knowledge derived

from past interactions and contextual cues.

The cognitive context presents a dynamic user

worldview, categorized into situational and histori-

cal. Situational cognitive context encompasses emo-

tions, the user’s companions, and real-world elements

such as date, time, and weather, enriching explana-

tions. Historical cognitive context delves into past

interactions, forming the backdrop against which the

current interaction unfolds. This taxonomy offers a

comprehensive framework for integrating context into

explanations, enhancing user-centric AI systems.

3 THE ConEX FRAMEWORK

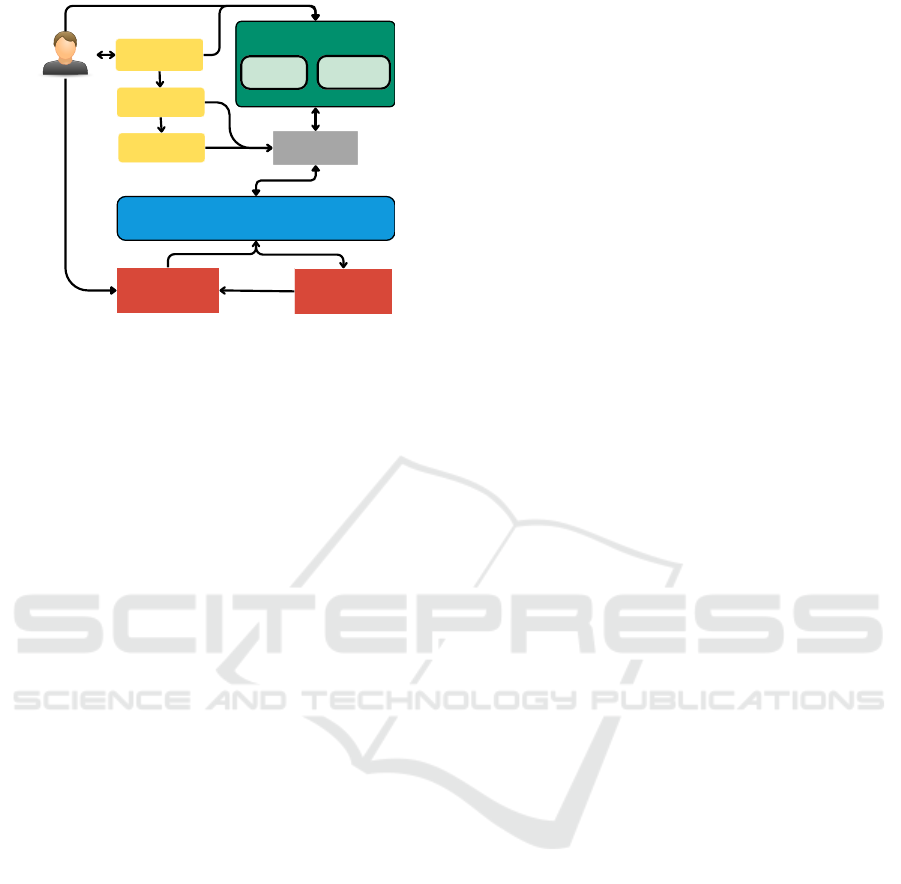

ConEX, depicted in Figure 2, provides a general

framework for generating context-sensitive explana-

tions while decoupling the model from the explana-

tion generation process, thus preserving its predictive

accuracy. ConEX comprises two distinct modules: (1)

a post-hoc explainer and (2) a context model, based on

the context taxonomy detailed in Section 2. The out-

puts of these modules are combined to create context-

sensitive explanations. ConEX serves as a guideline

for enhancing existing systems with context-sensitive

explainability or for designing new XAI systems. Be-

low, we delve into the roles of each module.

3.1 Task Model and Explainer

The AI model undergoes initial training for a specific

task within a defined domain. In our design, the task

model focuses solely on excelling at its designated task without the added responsibility of explaining its predictions. This deliberate separation enables the optimization of the task model for accuracy without compromising explainability.

Figure 2: The ConEX Framework. Components shown: Model, Explainer, Context Model (Static, Dynamic), System, Mediator, Adaptor, User Interface, and User's Feedback.

While state-of-the-art post-hoc explainers excel at

approximating task models, adapting explanations to

diverse stakeholder needs and contexts can be chal-

lenging (Apicella et al., 2022). In our design, the post-

hoc explainer is confined to providing fundamental

explanations of the task model’s results, emphasizing

feature importance and decision rules. These explanations indicate what contributed to the predictions and form the basis

for subsequent context-sensitive explanations.

3.2 Context Model

The context model is tasked with supplying all rele-

vant contextual information during ongoing interac-

tions, utilizing the context taxonomy outlined in Sec-

tion 2. It should provide pertinent domain knowl-

edge components, consider stakeholders’ expecta-

tions based on their role and mental models, and ac-

count for the complexity of explanation presentation

and content tailored to situational and historical con-

texts. In essence, the context model enhances the un-

derstanding of the current interaction, regardless of

the model’s inner workings. It’s crucial to note that

the context model doesn’t generate explanations; in-

stead, it serves as a knowledge reservoir.

3.3 Mediator

The mediator functions as a gateway between the ex-

plainer, the context model, and the adaptor. It pro-

cesses the output from the post-hoc explainer, queries

the context model to retrieve contextual information

about the instance of interest, and shapes the results

to the format expected by the adaptor.

3.4 Adaptor

Contextual knowledge plays a crucial role in filtering

and determining the relevant information to consider

during a given interaction. Therefore, we contend that

excluding irrelevant data from an explanation or sup-

plementing it with external information should not

be perceived as misleading. In different contexts,

the comprehensive disclosure of the entire decision-

making process may prove unnecessary. That is the

role of the adaptor module.

The adaptor module is crucial in crafting the final

explanation for the user. It integrates the primitive

explanation from the explainer with relevant static

and dynamic contexts through a two-step process: (1)

Pragmatic fitting and (2) Dynamic fitting. In the first

step, the primitive explanation aligns with domain

knowledge. In the second step, the resulting explana-

tion achieves a balance between complexity and com-

prehensibility, tailored to the user’s identity, mental

model, and situational context, facilitating informed

decision-making.

Additionally, the Adaptor verifies the prediction

itself. If the primitive explanation does not align with

domain knowledge, the adaptor may conclude that the

prediction should be excluded, ensuring the explana-

tion does not mislead the user.

3.5 User Interface and Feedback

The final step involves presenting the explanation to

the user and gathering feedback. The User Interface

module delivers explanations in the format suggested

by the Adaptor, which may include personalized text

templates, images, etc. Users can then provide feed-

back on various aspects of the explanation. This feed-

back is invaluable for refining the explanation, align-

ing it with user preferences and needs, and ensuring it

effectively serves its purpose.

Feedback not only improves the explanation but

also empowers users by providing a sense of control

over the explanation process. This, in turn, enhances

user satisfaction and trust in the system.

4 AN APPLICATION: MOVIE

RECOMMENDATION

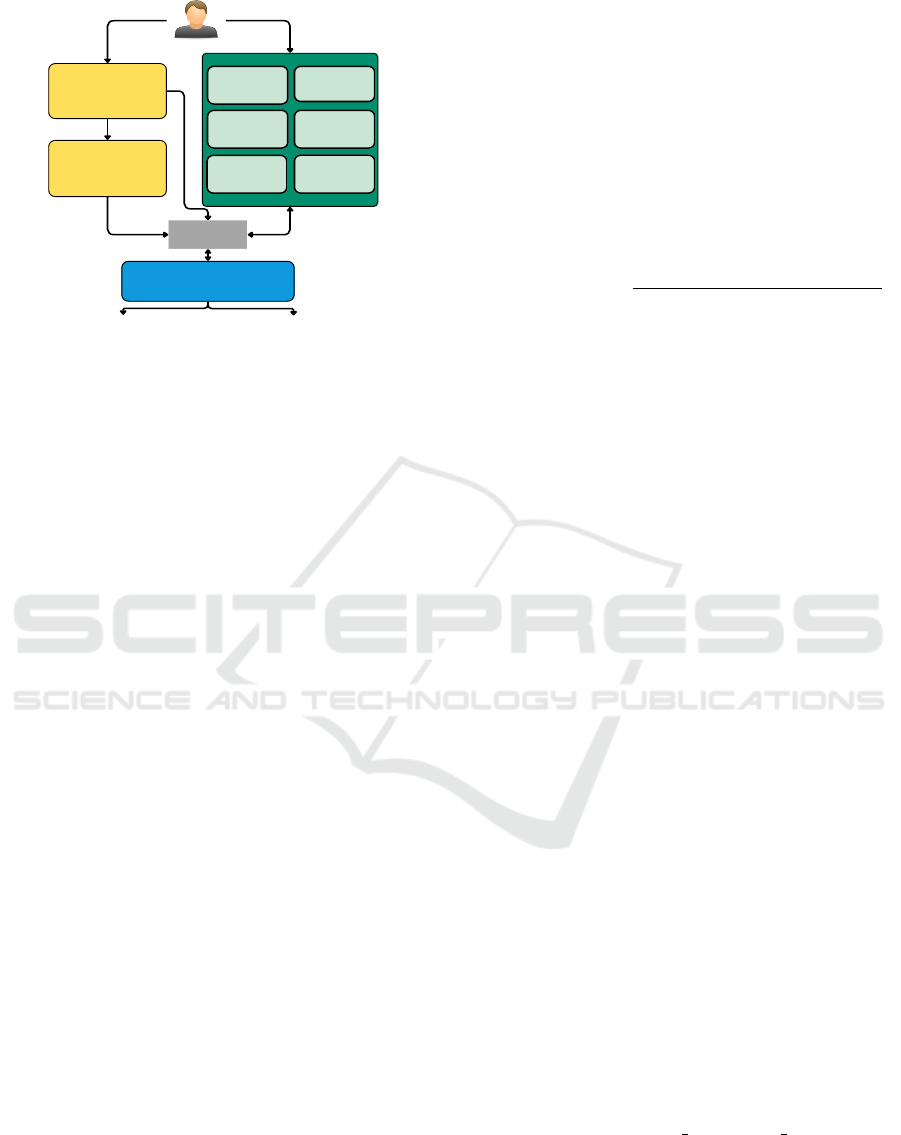

In this section, we unveil our movie recommenda-

tion prototype, developed following the design guide-

lines of ConEX to create context-sensitive explana-

tions, as depicted in Figure 3. It is worth noting that

ConEX can be used in various other applications, but

we chose this application due to data availability.


Figure 3: Prototype. Components shown: Recommender System (FM), Post-hoc Explainer (LIME-RS), MindReader KG, Users KG, User Identity, User Ratings, User Preferences, Situation, Mediator, and Adaptor. The output is either the movie and its context-sensitive explanation or a discarded recommendation.

4.1 Task Model and Explainer

The first two blocks are the task model, which is a rec-

ommender system responsible for creating the predic-

tions, and the post-hoc explainer used to extract prim-

itive explanations about the recommended instance.

4.1.1 Recommender System

The recommender system in our prototype utilized

Factorization Machines (FM) (Rendle, 2010). We

trained the model using the pyFM library with 50 latent factors and 10 iterations as the stopping criterion on

user-movie interactions, extended with movie genre

features. Other parameters followed default values as

per (Nóbrega and Marinho, 2019).

Training data was derived from the well-known

MovieLens 1M dataset, comprising user ratings on a

5-star scale for movies (Harper and Konstan, 2015).

Ratings were filtered by interaction frequency, con-

sidering users with at least 200 interactions. The re-

sulting data was chronologically split (based on rating

timestamps) into 70% training and 30% testing. Rel-

evant movies were those rated 3.5 or above. Accu-

racy results for the FM model were as follows: Preci-

sion@10: 0.57, Recall@10: 0.10, and RMSE: 1.21.
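As an illustration of how ranking metrics such as Precision@10 and Recall@10 are typically computed, the following minimal sketch evaluates them for a single user. The function name, the toy ranking, and the toy ratings are hypothetical; only the relevance threshold of 3.5 comes from the text above.

```python
def precision_recall_at_k(ranked_items, relevant_items, k=10):
    """Return (precision@k, recall@k) for one user's ranked recommendations."""
    top_k = ranked_items[:k]
    hits = sum(1 for item in top_k if item in relevant_items)
    precision = hits / k
    recall = hits / len(relevant_items) if relevant_items else 0.0
    return precision, recall


# Hypothetical test-set ratings for one user (movie id -> stars).
test_ratings = {"m1": 5, "m2": 4, "m3": 2, "m4": 3, "m5": 4, "m6": 1}

# Relevant movies are those rated 3.5 or above, as stated in the text.
relevant = {movie for movie, stars in test_ratings.items() if stars >= 3.5}

# Hypothetical ranking produced by the recommender for this user.
ranking = ["m1", "m3", "m2", "m7", "m8"]

precision, recall = precision_recall_at_k(ranking, relevant, k=5)
```

In practice the per-user values would be averaged over all test users to obtain figures comparable to those reported above.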

4.1.2 Post-Hoc Explainer

LIME for Recommender Systems (LIME-RS) (Nóbrega and Marinho, 2019) was chosen as the

post-hoc explainer. LIME-RS, a local post-hoc

explainer, provides feature-based explanations for

recommendations, drawing inspiration from the

concept of LIME (Ribeiro et al., 2016).

In contrast to LIME, LIME-RS generates sam-

ples by fixing the user and sampling movies based

on their empirical distribution, rather than perturbing

data points. A ridge regression model is then trained

on the data, outputting the top-n most important fea-

tures as explanations, with n set to be 20 (20 genres).

To measure the fidelity of the ridge regression

model with respect to the recommender, we utilized

the Model Fidelity metric (Peake and Wang, 2018),

as explained in Equation 1. The model was trained on

the top 30 predictions for each user from a list of pre-

dictions for all items. The average global fidelity was

0.429, indicating that the surrogate model can retrieve 42.9% of the recommended items.

$$
\text{ModelFidelity} = \frac{|\text{Explainable} \cap \text{Recommended}|}{|\text{Recommended}|} \tag{1}
$$
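Equation 1 can be read as a set computation per user. The sketch below is one way to express it, assuming the recommended and explainable items are available as lists of item identifiers; the function names and the toy data are hypothetical.

```python
def model_fidelity(recommended, explainable):
    """Share of recommended items that the surrogate explainer also retrieves."""
    recommended = set(recommended)
    if not recommended:
        return 0.0
    return len(recommended & set(explainable)) / len(recommended)


def average_fidelity(per_user_items):
    """Mean fidelity over users; per_user_items maps user -> (recommended, explainable)."""
    scores = [model_fidelity(rec, exp) for rec, exp in per_user_items.values()]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical item lists for two users.
toy = {
    "u1": (["m1", "m2", "m3", "m4", "m5"], ["m2", "m4", "m9"]),  # 2 of 5 retrieved
    "u2": (["m1", "m2"], ["m1", "m2"]),                          # 2 of 2 retrieved
}

fidelity_u1 = model_fidelity(*toy["u1"])
global_fidelity = average_fidelity(toy)
```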

4.2 Context Model

As shown in the previous results, there is room for im-

provement in both the recommender’s accuracy and

the explainer’s fidelity. However, using the context

model, the recommendations and the explanations can

still be grounded in their correct context. Below we

will describe how the context model was built for this

prototype based on the taxonomy in Section 2.

In the static context, we utilize knowledge graphs

(KGs) hosted on Neo4j to represent domain knowl-

edge and user-system information. The MindReader

KG (Brams et al., 2020) serves as our source of

movie-related entities, covering movies, actors, di-

rectors, genres, subjects, decades, and companies. It

consists of 18,133 movie-related entities built using a

subset of 9,000 movies from the MovieLens dataset,

sufficient for our prototype. For system informa-

tion, user connections and demographic data are rep-

resented in a knowledge graph using the demographic

data CSV file from MovieLens 1M. GraphXR is em-

ployed to create the knowledge graph.

In the situational part of the dynamic context, three factors (mood, company, and time of day) are considered. Mood options include happy, sad, angry,

and neutral; company options include partner, fam-

ily, and alone; time of day is categorized as morning

or night. Different combinations of these situational

factors were randomized and presented to the system

during the testing phase. The historical context of a

user includes all their ratings of movies in the train-

ing dataset. As MovieLens lacks situational data ac-

companying the ratings, the historical context is rep-

resented as a set of {user id, movie id, rating}

records without incorporating situational information.
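A minimal sketch of how randomized situations could be drawn from the three factors listed above. The option values come from the text; the variable names, the seeded generator, and the sampling helper are assumptions made for illustration.

```python
import itertools
import random

MOODS = ["happy", "sad", "angry", "neutral"]
COMPANY = ["partner", "family", "alone"]
TIME_OF_DAY = ["morning", "night"]

# Every possible (mood, company, time of day) combination: 4 * 3 * 2 = 24.
ALL_SITUATIONS = list(itertools.product(MOODS, COMPANY, TIME_OF_DAY))


def random_situation(rng):
    """Draw one situation uniformly at random."""
    return rng.choice(ALL_SITUATIONS)


rng = random.Random(0)  # seeded only so that runs are repeatable
situation = random_situation(rng)
```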

The stakeholder model’s identification is imple-

mented using a class Person with two sub-classes:

lay users (type: user) and developers (type: devel-

oper). Both have system-specific attributes, and lay


users have additional user-specific attributes like age.

This can be expanded with other stakeholder types.
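The class hierarchy described above might look as follows. This is only a sketch: the field names (`person_id`, `stakeholder_type`, `age_group`) are hypothetical stand-ins for the system-specific and user-specific attributes, which the text does not enumerate.

```python
from dataclasses import dataclass


@dataclass
class Person:
    person_id: int                    # hypothetical system-specific attribute
    stakeholder_type: str = "person"


@dataclass
class LayUser(Person):
    stakeholder_type: str = "user"
    age_group: str = "unknown"        # user-specific attribute, e.g. "25-34"


@dataclass
class Developer(Person):
    stakeholder_type: str = "developer"


user = LayUser(person_id=1004, age_group="25-34")
developer = Developer(person_id=1)
```

Further stakeholder types could be added as additional sub-classes of `Person` without changing the existing ones.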

For the stakeholder mental model, we focus on the

mental models of lay users. Users’ decision-making

is assumed to depend on the genres and actors of a

movie. The mental model is represented based on

genre and actor preferences derived from users’ historical cognitive context. Frequent-pattern growth (FP-Growth) (Han et al., 2004) is employed on the

genres and actor lists extracted from the user’s top-

rated movies to mine frequent itemsets. A subset of

the inferred genre and actor preferences of user 1004

is shown in Table 1 and Table 2, respectively.

Table 1: User 1004 Genre Preference.

| support | itemsets |
|---|---|
| 0.50 | (Action) |
| 0.41 | (Comedy) |
| 0.30 | (Adventure) |

Table 2: User 1004 Actor Preference.

| support | itemsets |
|---|---|
| 0.08 | (Harrison Ford) |
| 0.05 | (Mary Ellen Trainor) |
| 0.04 | (Mel Gibson) |

The higher the support the itemset has, the more

impact it has on the user’s decision. This helps determine which itemset to choose in an explanation to further personalize it. Hypothetical preferences for explanation content and presentation shape (text or images) were randomly assigned to the users’ mental models.
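To make the notion of support concrete, the sketch below counts itemset support over a user's top-rated movies. Note that it uses brute-force enumeration rather than FP-Growth itself; on small inputs it yields the same frequent itemsets and supports that FP-Growth would, only less efficiently. The function name, the `max_size` cap, and the toy genre lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def frequent_itemsets(transactions, min_support, max_size=2):
    """Return {itemset: support} for itemsets up to max_size meeting min_support."""
    n = len(transactions)
    counts = Counter()
    for transaction in transactions:
        items = sorted(set(transaction))
        for size in range(1, max_size + 1):
            for itemset in combinations(items, size):
                counts[itemset] += 1
    return {
        itemset: count / n
        for itemset, count in counts.items()
        if count / n >= min_support
    }


# Hypothetical genre lists of four top-rated movies for one user.
top_rated_genres = [
    ["Action", "Adventure"],
    ["Action", "Comedy"],
    ["Comedy"],
    ["Action", "Comedy", "Crime"],
]

supports = frequent_itemsets(top_rated_genres, min_support=0.5)
```

A support of 0.75 for `("Action",)` here means that three of the four top-rated movies carry that genre, which is the same reading as the support column in Tables 1 and 2.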

4.3 Generating Context-Aware

Explanations

We now illustrate how a context-sensitive explanation

unfolds for a specific instance. In this case, we’re fo-

cusing on the recommendation of the movie Lethal

Weapon 4 for a user, specifically User 1004, whose

preferences are listed in Table 1 and Table 2.

4.3.1 Generating Primitive Explanations

During the recommendation process, the movie

“Lethal Weapon 4” is suggested to user “1004.”

LIME-RS is then used to generate a primitive fea-

ture importance explanation. This explanation covers

positive and negative attributions for all genres in the

training data. As depicted in Figure 4, the top positive

genre is Film-Noir, which is not present in the genres

of the movie Lethal Weapon 4. In fact, in 88.4% of the

cases when running LIME-RS on the testing dataset,

the highest positively attributing genre did not exist in the genres of the corresponding movie. This discrepancy arises because LIME-RS does not consider context and treats all genres as separate features, including both movie-related and non-movie-related genres (Nóbrega and Marinho, 2019).

Primitive, non-contextualized explanation produced by LIME-RS for the movie recommended by the recommender system (FM):

"FILM-NOIR": 0.737, "THRILLER": 0.66, "FANTASY": 0.561, "MUSICAL": 0.471, "ADVENTURE": 0.448, "WAR": -0.438, "ANIMATION": 0.431, "ROMANCE": 0.383, "CRIME": -0.382, "WESTERN": 0.371, "DOCUMENTARY": 0.356, "MYSTERY": -0.351, "COMEDY": 0.322, "ACTION": 0.312, "DRAMA": 0.062

Figure 4: Primitive Explanation.

Presenting this genre as an explanation to a lay

user may not be meaningful, as this particular genre

might not even exist in the movie. Therefore, contex-

tualizing the primitive explanation becomes crucial to

make it comprehensible to the lay user.

4.3.2 Fetching the Requested Instance Context

When generating context-sensitive explanations, two

vital elements demand attention: the movie itself and

the user. Through the mediator, we retrieve various

context pieces, which encompass:

1. Movie Lethal Weapon 4 genres: Comedy, Crime,

Action, and Drama.

2. Movie Lethal Weapon 4 actors which are retrieved

from MindReader KG: Mel Gibson, Danny

Glover, Mary Ellen Trainor, etc.

3. User 1004 identification: Type: lay user, age: 25-

34, gender: male, occupation: clerical/admin.

4. User 1004 genre and actor preferences from the

user’s mental model: presented in Tables 1 and 2.

5. User 1004 preferred explanation content and pre-

sentation shape from the user’s mental model:

Level 2 - Image (this means the user prefers ex-

planations displayed as images).

6. User 1004 randomized current situation:

(Sad, With Partner, In the Morning).

4.3.3 Contextualized Explanation Generation

The mediator converts the data types of the primitive

explanation and the context data to the ones expected

by the adaptor. Subsequently, the adaptor takes

the reins in crafting the ultimate context-sensitive

explanation, using a three-level approach, with each

level building upon the preceding one.


Level 1: Pragmatic Fitting

In the initial contextualization step, termed Pragmatic

Fitting, the primitive explanation is harmonized with

domain knowledge. Specifically, the domain knowl-

edge comprises the actual movie genres acquired

through the mediator. The process first extracts from the primitive explanation (Figure 4) only the genres that belong to the movie Lethal Weapon 4. Subsequently, genres with negative attributions are filtered out, leaving

only the positive ones. The outcome is a subset of

genres, as demonstrated in Figure 5.

Extract Movie Genres (from the LIME-RS explanation): "CRIME": -0.382, "COMEDY": 0.322, "ACTION": 0.312, "DRAMA": 0.062

Extract Positive Genres: "COMEDY": 0.322, "ACTION": 0.312, "DRAMA": 0.062

Figure 5: Pragmatic Fitting of Movie Lethal Weapon 4.

This step results in a subset of genres that pos-

itively contributed to the recommendation, and are

consistent with the movie’s actual genres. These gen-

res serve as the basis for explanations directly con-

necting the recommendation to these genres. For in-

stance, one can select the highest attributing genre for

explanation, as illustrated in Figure 6.

-Movie Lethal Weapon 4 was recommended to you

because it has the genre Comedy.

Figure 6: Pragmatically Fit Explanation.
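The two filtering steps of pragmatic fitting can be sketched as follows, using the attributions from Figure 4 and the movie genres retrieved in Section 4.3.2. The function name and the dictionary representation are assumptions; the prototype's actual data types are not described here.

```python
def pragmatic_fit(primitive_explanation, movie_genres):
    """Keep genres that belong to the movie and have a positive attribution."""
    movie_genres = {genre.upper() for genre in movie_genres}
    in_movie = {
        genre: weight
        for genre, weight in primitive_explanation.items()
        if genre in movie_genres
    }
    return {genre: weight for genre, weight in in_movie.items() if weight > 0}


# LIME-RS attributions as listed in Figure 4.
primitive = {
    "FILM-NOIR": 0.737, "THRILLER": 0.66, "FANTASY": 0.561,
    "MUSICAL": 0.471, "ADVENTURE": 0.448, "WAR": -0.438,
    "ANIMATION": 0.431, "ROMANCE": 0.383, "CRIME": -0.382,
    "WESTERN": 0.371, "DOCUMENTARY": 0.356, "MYSTERY": -0.351,
    "COMEDY": 0.322, "ACTION": 0.312, "DRAMA": 0.062,
}
movie_genres = ["Comedy", "Crime", "Action", "Drama"]

fitted = pragmatic_fit(primitive, movie_genres)

# Highest-attributing remaining genre, used for the Level 1 explanation.
top_genre = max(fitted, key=fitted.get) if fitted else None
```

With these inputs the result matches Figure 5, and the top genre is Comedy, as in Figure 6.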

Level 2: Dynamic Fitting

In the second level of contextualization, the aim is to

enhance pragmatic alignment by incorporating addi-

tional user-related context, excluding situational de-

tails. The explanation intends to guide the user’s

decision on whether to watch the movie, aligning

with their decision-making process. To identify the

genre with the highest expected positive impact on

the user’s decisions, we retain only the genres from the pragmatic fitting results that are present in the user’s preferences (as detailed in Table 1). We then aggregate the attribution of the retained genres with the support these

genres have in the user’s preferences as shown in Fig-

ure 7. The selected genre with the highest expected

positive impact on the user’s decisions is Action.

Although Comedy has the highest attribution in the pragmatically fit result, Action was chosen because it carries more weight in the user’s preferences, giving it the higher aggregated score (0.81 versus 0.73 in Figure 7). Action also has a positive attribution in the pragmatically fit result, so choosing it over Comedy does not make the explanation misleading; it simply selects the most relevant piece of information for the user. The resulting explanation is shown in Figure 8.

Filter user's preference: "COMEDY": 0.322, "ACTION": 0.312

Aggregate attribution: "COMEDY": 0.73, "ACTION": 0.81

Figure 7: Dynamic Fitting of Movie Lethal Weapon 4.

-Movie Lethal Weapon 4 was recommended to you because it has the genre Action and 50% of the movies you highly rated had the same genre.

Figure 8: Dynamically Fit Explanation.
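A minimal sketch of dynamic fitting for genres. The aggregation function is not spelled out in the text; the values in Figure 7 are consistent with a simple sum of attribution and support (0.322 + 0.41 ≈ 0.73 and 0.312 + 0.50 ≈ 0.81), so the sketch assumes a sum. The function name is hypothetical.

```python
def dynamic_fit(pragmatic_result, genre_support):
    """Keep genres the user prefers and add their support to the attribution."""
    support = {genre.upper(): value for genre, value in genre_support.items()}
    return {
        genre: round(weight + support[genre], 2)  # assumed aggregation: sum
        for genre, weight in pragmatic_result.items()
        if genre in support
    }


# Output of pragmatic fitting (Figure 5) and genre preferences (Table 1).
pragmatic_result = {"COMEDY": 0.322, "ACTION": 0.312, "DRAMA": 0.062}
genre_support = {"Action": 0.50, "Comedy": 0.41, "Adventure": 0.30}

scores = dynamic_fit(pragmatic_result, genre_support)
best_genre = max(scores, key=scores.get) if scores else None
```

Drama is dropped because it does not appear in the user's preferences, and Action is selected because its aggregated score exceeds that of Comedy.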

Additional information that is not included in the

training of the recommender can be fetched from the

context and used to enrich the dynamically fit expla-

nation, as seen in Figure 9. The actors of Lethal

Weapon 4, fetched in Section 4.3.2, are matched against the user’s actor preferences in Table 2. Since Mary Ellen Trainor has a higher support value (0.05) than Mel Gibson (0.04), she was chosen to support the explanation.

Moreover, additional insights about similar users

can be inferred from the system information. For in-

stance, the percentage of users in the same age group

as user 1004 who highly rated Lethal Weapon 4 can

be derived. This external knowledge augments the ex-

planation, bringing it closer to the user’s context.

-Mary Ellen Trainor is starring in this movie and

this actor starred in 5% of the movies you highly

rated before.

-11% of the users that belong to your age group

rated this movie 4 stars or above.

Figure 9: Extra Information to support the Explanation.
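The actor matching described above can be sketched as follows, using the cast retrieved in Section 4.3.2 and the supports from Table 2. The function name and the sentence template are assumptions modeled on the wording in Figure 9.

```python
def pick_supporting_actor(movie_actors, actor_support):
    """Return (actor, support) for the cast member the user prefers most, or None."""
    matches = {
        actor: actor_support[actor]
        for actor in movie_actors
        if actor in actor_support
    }
    if not matches:
        return None
    return max(matches.items(), key=lambda pair: pair[1])


movie_actors = ["Mel Gibson", "Danny Glover", "Mary Ellen Trainor"]
actor_support = {
    "Harrison Ford": 0.08,
    "Mary Ellen Trainor": 0.05,
    "Mel Gibson": 0.04,
}

choice = pick_supporting_actor(movie_actors, actor_support)
if choice is not None:
    actor, support = choice
    sentence = (
        f"{actor} is starring in this movie and this actor starred in "
        f"{support:.0%} of the movies you highly rated before."
    )
```

Harrison Ford has the highest support overall but is not in the cast, so he is never a candidate.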

Level 3: Incorporating Situation

The third and final level focuses on contextualizing

the outcome of Level 2 with situational information.

When requesting an explanation, the user inputs their

current state, including mood, company, and time of

day. For testing purposes, randomized user states

were employed.

The goal is to further personalize the interaction,

using the resulting genre (and actor if available) from

Level 2 as the base information. The situational de-

tails are then incorporated to create different para-

graphs of explanations, each with different tones and

information to match the user’s current state. To

achieve this, the DeepAI text generator API, backed

by a large-scale unsupervised language model, is used

to generate paragraphs of text. Thus, the explanation of Lethal Weapon 4 for user 1004 should include the genre Action and the actress Mary Ellen Trainor.


Using the state of user 1004, which is (Sad, With Partner, In the Morning), the API outputs a result like the one shown in Figure 10.

If you want an action-packed movie to lift your

mood, Lethal Weapon 4 is the perfect choice. With

its intense fight scenes and thrilling plot, it's sure

to keep you on the edge of your seat. Plus, the

talented cast, including the late Mary Ellen Trainer,

will keep you engaged from start to finish. Trust

me, watching this movie in the morning with your

partner is the perfect way to shake off the blues

and start your day off right. Don't miss out!

Figure 10: DeepAI API result Example.
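How the Level 2 result and the situation are combined before text generation is not detailed in the text. The sketch below shows one plausible way to assemble them into a textual prompt; the prompt wording and the function name are entirely hypothetical, and the call to the text generator is deliberately omitted because its request format is not described here.

```python
def build_prompt(movie, genre, actor, situation):
    """Assemble a text-generation prompt from Level 2 output and the situation."""
    mood, company, time_of_day = situation
    parts = [
        f"Write a short paragraph recommending the movie {movie}.",
        f"Mention its genre {genre}.",
    ]
    if actor:  # the actor is optional, as in Level 2
        parts.append(f"Mention the actor {actor}.")
    parts.append(
        f"The reader feels {mood.lower()}, is watching "
        f"{company.lower()}, {time_of_day.lower()}."
    )
    return " ".join(parts)


prompt = build_prompt(
    movie="Lethal Weapon 4",
    genre="Action",
    actor="Mary Ellen Trainor",
    situation=("Sad", "With Partner", "In the Morning"),
)
```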

4.3.4 Explanation Reception and Feedback

The final step involves presenting the explanation to

users and gathering their feedback. Suppose that User

1004 prefers Level 2 contextualization with image-shaped presentation. The information derived from Level 2 can then be presented as shown in Figure 11.

Figure 11: Image presentation for Level 2 contextualizations.

Real users can have the option to choose their pre-

ferred level of contextualization, content, and presen-

tation format for explanations. The collected feed-

back is then utilized to refine the explanation style

to better match user expectations. Additionally, for

developers debugging recommendation instances, the

prototype logs primitive explanations and results from

each contextualization level. This comprehensive log

allows for a detailed analysis, facilitating system opti-

mization. By tailoring explanations to both users and

developers, our system provides a versatile and adapt-

able approach to context-sensitive insights, meeting

the specific needs of each user type.

4.3.5 Contextualization Failures

At Level 1 of the contextualization process, scenar-

ios may arise where no genres have positive attribu-

tions in the primitive explanation. In such cases, ex-

cluding the recommendation is advisable to avoid pre-

senting a misaligned, misleading explanation due to

negative attributions. Additionally, if a user prefers

Level 2 contextualization but none of the pragmati-

cally aligned genres match their preferences, the rec-

ommendation can either be omitted or explained us-

ing Level 1. Essentially, the role of the adaptor

extends beyond contextualization to act as a truth-

checker, filtering out incoherent explanations and en-

hancing the trustworthiness of the system.
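The failure handling described above can be summarized in a short decision routine. This sketch assumes the sum-based aggregation consistent with Figure 7 and, where the text allows either omitting the recommendation or falling back, it chooses the fallback to Level 1; the function name and return convention are hypothetical.

```python
def contextualize(primitive, movie_genres, genre_support, preferred_level):
    """Return (level_used, genre), or None if the recommendation is discarded."""
    movie = {genre.upper() for genre in movie_genres}
    level1 = {g: w for g, w in primitive.items() if g in movie and w > 0}
    if not level1:
        return None  # no positive, movie-consistent genre: discard

    if preferred_level >= 2:
        support = {g.upper(): s for g, s in genre_support.items()}
        level2 = {g: w + support[g] for g, w in level1.items() if g in support}
        if level2:
            return 2, max(level2, key=level2.get)
        # No pragmatically fit genre matches the preferences: fall back.

    return 1, max(level1, key=level1.get)


# Only negative attributions for the movie's genres: discarded.
discarded = contextualize({"CRIME": -0.382}, ["Crime"], {"Action": 0.5}, 2)

# Positive genres exist, but none is among the user's preferences.
fallback = contextualize(
    {"COMEDY": 0.322, "ACTION": 0.312}, ["Comedy", "Action"], {"Drama": 0.4}, 2
)

# Preferences match: Level 2 succeeds.
level2 = contextualize(
    {"COMEDY": 0.322, "ACTION": 0.312},
    ["Comedy", "Action"],
    {"Action": 0.50, "Comedy": 0.41},
    2,
)
```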

5 EMPIRICAL USER STUDY

In this paper, we hypothesized that diverse stake-

holders require tailored explanations to address the

same problem. Moreover, we posited that individu-

als within the same stakeholder category, particularly

lay users, prioritize distinct facets of an explanation.

To substantiate our theory, we conducted an em-

pirical user study that investigated the preferences of

lay users regarding contextualization levels 1, 2, and

3 with respect to different dimensions of trust. The

dimensions of trust were adapted from the work of Berkovsky et al. (2017), originally

designed for assessing trust in various recommender

systems. We rephrased these dimensions to pertain to

explanations, as seen below:

1. Competence: I think the explanation that is most

knowledgeable about the movies is...

2. Integrity: The explanation that provides the most

honest and unbiased reasons is...

3. Benevolence: The explanation that reflects my in-

terests in the best way is...

4. Transparency: The explanation that helps me

understand the recommendation reasons the best

is...

5. Re-Use: To select my next movie, I would use...

6. Overall: The most trustworthy explanation is...

We conducted a study using a survey featuring

three movies, each with corresponding explanations

labeled as Explanation A (level 1), Explanation B

(level 2), and Explanation C (level 3). Participants

were introduced to explanations generated by our pro-

totype and asked to respond to six multiple-choice

questions assessing the alignment of explanations

with the six trust dimensions.

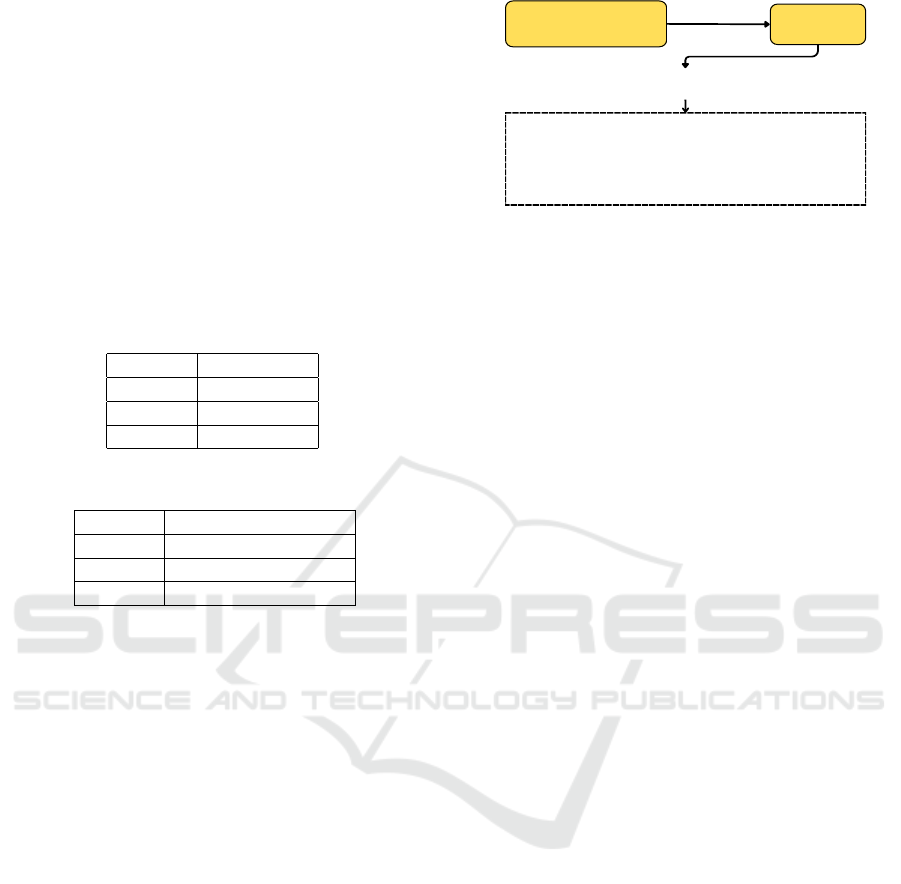

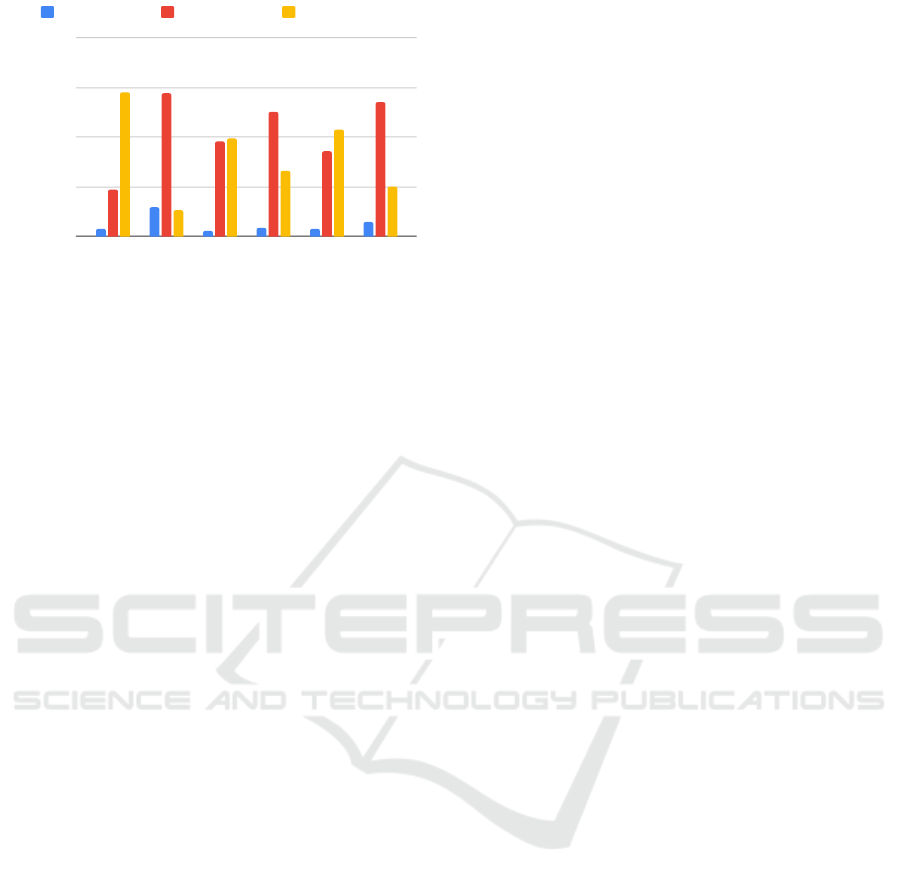

A total of 136 participants completed the survey, with results illustrated in Figure 12. Notably, Explanation A was the least favored across all trust dimensions, indicating a preference for more personalized explanations. Explanation B scored highest in Integrity, Transparency, and Overall trustworthiness, suggesting a preference for personalized, quantitatively structured explanations. Conversely, Explanation C excelled in Competence, Benevolence, and Re-use, implying that utilizing the DeepAI API enriched explanations with additional movie-related information, making them more adaptable and likely to be chosen. These findings support our hypothesis that diverse users prefer different explanations, underscoring the need to give users control over the choice of explanation in order to accommodate varied informational needs.

Figure 12: Experiment Results. [Plot of Users Percentage (0 to 100) per Construct of Trust (Competence, Integrity, Benevolence, Transparency, Re-use, Overall) for Explanation A, Explanation B, and Explanation C.]
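The per-dimension percentages discussed above can be derived from raw survey answers with a simple tally. The following is a minimal sketch, not the authors' analysis code: the function names `tally_preferences` and `top_choice`, the response format, and the four toy responses are all assumptions made for illustration, and the toy data is not taken from the study.

```python
from collections import Counter

# The six trust dimensions and three explanation options used in the survey.
DIMENSIONS = ["Competence", "Integrity", "Benevolence",
              "Transparency", "Re-use", "Overall"]
OPTIONS = ["A", "B", "C"]


def tally_preferences(responses):
    """Return {dimension: {option: percentage of respondents choosing it}}.

    `responses` is a list of dicts mapping a dimension name to the chosen
    explanation ("A", "B", or "C"). This format is a hypothetical one.
    """
    result = {}
    for dim in DIMENSIONS:
        counts = Counter(r[dim] for r in responses if dim in r)
        total = sum(counts.values())
        result[dim] = {
            opt: (100.0 * counts[opt] / total if total else 0.0)
            for opt in OPTIONS
        }
    return result


def top_choice(percentages):
    """Return the most-selected option per dimension.

    Ties are broken in favor of the option listed first in OPTIONS.
    """
    return {dim: max(OPTIONS, key=lambda opt: shares[opt])
            for dim, shares in percentages.items()}


# Hypothetical toy answers from four respondents (NOT the study's data).
toy_responses = [
    {d: "B" for d in DIMENSIONS},
    {d: "C" for d in DIMENSIONS},
    {"Competence": "C", "Integrity": "B", "Benevolence": "C",
     "Transparency": "B", "Re-use": "C", "Overall": "B"},
    {"Competence": "A", "Integrity": "B", "Benevolence": "C",
     "Transparency": "A", "Re-use": "C", "Overall": "B"},
]

percentages = tally_preferences(toy_responses)
top = top_choice(percentages)

for dim in DIMENSIONS:
    print(dim, percentages[dim], "most selected:", top[dim])
```

Each dimension's shares sum to 100 whenever at least one respondent answered it, so the output maps directly onto a grouped chart with one group per construct of trust and one bar per explanation.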

6 CONCLUDING REMARKS

In this paper, we have introduced a comprehensive taxonomy of context that is relevant across diverse domains and systems. To realize context-sensitive explanations in practice, we have presented ConEX, a general framework founded on our conceptualization of context and incorporating a post-hoc explainer. We presented an application of ConEX that leverages context-sensitive explanations to enhance the personalization of movie recommendations. Additionally, we conducted a user study to demonstrate empirically that context-sensitive explanations enhance user trust and satisfaction. Future work in this domain can include research into automated situation recognition, reducing users' input, and tracking their current state. Moreover, addressing the challenge of temporal changes in user preferences and maintaining the context model's accuracy over time is a promising avenue for future research.

REFERENCES

Apicella, A., Giugliano, S., Isgrò, F., and Prevete, R. (2022). Exploiting auto-encoders and segmentation methods for middle-level explanations of image classification systems. Knowledge-Based Systems, 255:109725.

Berkovsky, S., Taib, R., and Conway, D. (2017). How to

recommend? user trust factors in movie recommender

systems. In Proceedings of the 22nd International

Conference on Intelligent User Interfaces, IUI ’17,

page 287–300, New York, NY, USA. Association for

Computing Machinery.

Brams, A. H., Jakobsen, A. L., Jendal, T. E., Lissandrini,

M., Dolog, P., and Hose, K. (2020). Mindreader: Rec-

ommendation over knowledge graph entities with ex-

plicit user ratings. In Proceedings of the 29th ACM In-

ternational Conference on Information & Knowledge

Management, page 2975–2982, New York, NY, USA.

Association for Computing Machinery.

Brézillon, P. (2012). Context in artificial intelligence: I. a survey of the literature. COMPUTING AND INFORMATICS, 18(4):321–340.

Dey, A. (2009). Explanations in context-aware systems.

pages 84–93.

Gunning, D. and Aha, D. (2019). Darpa’s explainable

artificial intelligence (xai) program. AI Magazine,

40(2):44–58.

Han, J., Pei, J., Yin, Y., and Mao, R. (2004). Min-

ing frequent patterns without candidate generation:

A frequent-pattern tree approach. Data Mining and

Knowledge Discovery, 8(1):53–87.

Harper, F. M. and Konstan, J. A. (2015). The movielens

datasets: History and context. ACM Trans. Interact.

Intell. Syst., 5(4).

Liao, Q. V., Pribic, M., Han, J., Miller, S., and Sow, D.

(2021). Question-driven design process for explain-

able AI user experiences. CoRR, abs/2104.03483.

Malandri, L., Mercorio, F., Mezzanzanica, M., and Nobani,

N. (2023). Convxai: a system for multimodal inter-

action with any black-box explainer. Cogn. Comput.,

15(2):613–644.

Nóbrega, C. and Marinho, L. (2019). Towards explaining recommendations through local surrogate models. In Proceedings of the 34th ACM/SIGAPP Symposium on Applied Computing, SAC ’19, page 1671–1678, New York, NY, USA. Association for Computing Machinery.

Peake, G. and Wang, J. (2018). Explanation mining: Post

hoc interpretability of latent factor models for rec-

ommendation systems. In Proceedings of the 24th

ACM SIGKDD International Conference on Knowl-

edge Discovery & Data Mining, KDD ’18, page

2060–2069, New York, NY, USA. Association for

Computing Machinery.

Rendle, S. (2010). Factorization machines. In 2010 IEEE

International Conference on Data Mining, pages 995–

1000.

Ribeiro, M. T., Singh, S., and Guestrin, C. (2016). “Why should I trust you?”: Explaining the predictions of any classifier.

Robinson, D. and Nyrup, R. (2022). Explanatory pragma-

tism: A context-sensitive framework for explainable

medical ai. Ethics and Information Technology, 24(1).

Schneider, J. and Handali, J. (2019). Personalized explana-

tion in machine learning. CoRR, abs/1901.00770.

Srinivasan, R. and Chander, A. (2021). Explanation per-

spectives from the cognitive sciences—a survey. In

Proceedings of the Twenty-Ninth International Joint

Conference on Artificial Intelligence, IJCAI’20.

ICAART 2024 - 16th International Conference on Agents and Artificial Intelligence
