Robotics and Computer Vision in the Brazilian Electoral Context: A Case Study

Jairton da Silva Falcão Filho^a, Matheus Hopper Jansen Costa^b, Jonas Ferreira Silva^c, Cecília Virgínia Santos da Silva^d, Felipe Augusto da Silva Mendonça^e, Jefferson Medeiros Norberto^f, Riei Joaquim Matos Rodrigues^g and Marcondes Ricarte da Silva Júnior^h

Informatics Center, Universidade Federal de Pernambuco, Recife, Brazil

Keywords:

Electronic Ballot Box, Integrity Test, Robotic Arm, Computer Vision.

Abstract:

Since 2000, Brazil has held fully computerized elections using electronic ballot boxes. To demonstrate the correct functioning and security of these ballot boxes transparently, several tests are carried out, including the integrity test. This test is carried out throughout the national territory, at locations defined for each Brazilian state, and involves a considerable number of people. Here, an automation system for integrity testing is presented, consisting of a robotic arm and computer vision software, for the two versions of the electronic ballot box used in the 2022 presidential elections. Two days of tests simulating the integrity test were carried out in the laboratory, and one test was carried out during the real integrity test of the 2022 election. The system cast between 197 and 232 votes per test, with an average time per vote between 2 minutes 15 seconds and 2 minutes 43 seconds, depending on the version and test day, and error rates between 1.48% and 3.72%, including reading and typing errors. The system reduces the number of people needed per electronic ballot box and increases the transparency and efficiency of the integrity test.

1 INTRODUCTION

The electoral process in Brazil covers all the stages involved in organizing elections, followed by a short post-election period. The Electoral Justice is responsible for organizing, overseeing, and conducting elections, and for regulating the electoral process at the federal, state, and municipal levels. Its highest body is the Superior Electoral Court (TSE), headquartered in the country's capital, Brasília. In addition to the TSE, each state and the Federal District has a Regional Electoral Court (TRE), along with judges and electoral boards (Court, 2022e).

To ensure the normality of the elections, the security of the vote, and democratic freedom, the Brazilian electoral system employs numerous mechanisms, such as electronic ballot boxes and biometric voter identification, making it a global reference. In the 2022 elections, 577,000 electronic ballot boxes were used (Court, 2022g), distributed across 5,570 municipalities in Brazil and 181 cities overseas (Court, 2022f), with more than 123 million Brazilians exercising their right to vote (Court, 2022d).

^a https://orcid.org/0000-0001-6383-7551

^b https://orcid.org/0009-0004-8121-2204

^c https://orcid.org/0000-0002-2277-132X

^d https://orcid.org/0009-0002-8549-3205

^e https://orcid.org/0000-0002-4904-9986

^f https://orcid.org/0009-0005-2180-3587

^g https://orcid.org/0009-0002-0160-9849

^h https://orcid.org/0000-0003-0359-6113

The electronic ballot box is a specialized microcomputer designed for electoral use. It has two terminals: the polling station terminal and the voter's terminal. At the former, the polling officer identifies the voter, and a built-in biometric reader is used to confirm the voter's identity. The latter is where the vote is recorded; it comprises a memory device for storing the votes, a numeric keypad, and a liquid crystal display (Court, 2022c).

To ensure the fairness of the electoral process, a series of tests is conducted on the electronic ballot box, including the integrity test, which is used to validate its security and functionality. The Electoral Justice conducts this type of audit in every election in Brazil. The process compares the votes cast on paper ballots with those entered into the electronic ballot box (Court, 2022b). In the first round of the 2022 elections, 641 electronic ballot boxes were audited across Brazil (Court, 2022h).

Falcão Filho, J., Costa, M., Silva, J., Santos da Silva, C., Mendonca, F., Norberto, J., Rodrigues, R. and Silva Júnior, M.

Robotics and Computer Vision in the Brazilian Electoral Context: A Case Study.

DOI: 10.5220/0012379300003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 4: VISAPP, pages 516-523

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The system presented here was developed to automate the entire integrity test for the 2015 and 2020 versions of the electronic ballot box, the versions used in the 2022 elections. It consists of a robotic arm, application software, a module for extracting information from the paper ballots, and some custom hardware, and it requires only one operator per system.

In addition to this introduction, the article is divided into five sections: “Contextualization of the Problem”, which describes in detail how the integrity audit of electronic ballot boxes works; “Related Works”, which covers articles in which robotic arms were used to automate software testing; “Automation System for Integrity Testing of Electronic Ballot Boxes”, which describes all parts of the presented system; “Experiments and Results”, which presents the tests conducted in the laboratory and in a real-world setting; and “Conclusion”.

2 CONTEXTUALIZATION OF THE PROBLEM

The integrity testing of electronic ballot boxes begins one day before the elections. The polling stations whose voting machines will be collected for the integrity test are selected by random draw. The number of polling stations selected varies between 6 and 33, depending on the number of polling stations in each state and on the voting round (Fachin, 2021). These voting machines are removed from their original municipalities, replaced with new equipment, and installed in a monitored location with a significant presence of people, designated by the Regional Electoral Court of each state. Representatives from political parties, the Brazilian Bar Association (OAB), the Public Prosecutor's Office, other oversight entities, and interested citizens participate in this process (Court, 2022b).

For each selected voting machine, a number of paper ballots ranging from 75% to 82% of the registered voters at the respective polling station is filled out (Sansão, 2018). Representatives from political parties, federations, and coalitions take part in this step; if they do not appear, third parties, excluding Electoral Justice staff, fill out the ballots (Court, 2022a). The ballots are filled out by hand using a list of the candidates who can be voted for at the selected polling stations; in addition to candidate votes, they may contain party votes, blank votes, and invalid votes. The filled ballots are then deposited in canvas ballot boxes. On the day of the test, the ballots are entered into a digitizing system on a computer, which is used to digitize and tally the votes, and are then printed as paper ballots. The audit takes place on the same day and during the same hours as the regular election, from 08:00 AM to 05:00 PM. The procedures begin after the voting machine and the support system issue the “Zerésima” report, which certifies that no votes were counted before the start (Court, 2022a).
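As a worked example of the 75% to 82% rule, the Python sketch below computes the admissible number of paper ballots for one polling station. The text does not state how fractional values are rounded; rounding the lower bound up and the upper bound down, so that the count stays inside the range, is our assumption, and `ballot_range` is a hypothetical helper.

```python
def ballot_range(registered_voters):
    """Smallest and largest whole number of paper ballots within 75% to 82%
    of the registered voters (assumed rounding: lower bound up, upper bound down).

    Integer arithmetic is used so that float rounding cannot shift a bound.
    """
    low = (75 * registered_voters + 99) // 100  # ceil(0.75 * n)
    high = (82 * registered_voters) // 100      # floor(0.82 * n)
    return low, high


# 300 registered voters is a hypothetical figure.
print(ballot_range(300))  # (225, 246)
```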

Four individuals are involved in auditing each voting machine: the verifier, the data entry operator, the enabler, and the voter.

• The verifier retrieves each ballot from the canvas ballot box and checks its validity. Valid ballots are labeled with a sequential vote number. For a blank ballot, “EM BRANCO” (Portuguese for “BLANK”) is stamped next to each space.

• The data entry operator selects a valid voter ID and enters it into the auxiliary system. They compare the sequential number labeled on the ballot with the one displayed in the auxiliary system and input the votes from the ballot into the auxiliary system. They check what has been entered against what is on the ballot and then file the ballot. The vote remains on the auxiliary system's monitor until the voter completes the process on the electronic ballot box.

• The enabler enables the voter: they enter the voter ID at the polling station terminal and, if necessary, place a fingerprint on the biometric reader. The voting machine is then enabled for the voter.

• The voter enters each digit of the vote into the voting machine, announcing it aloud so that it is captured in the video recording. For each position, the candidate name displayed by the voting machine is announced, and after entering, there is a 3-second wait before confirming the vote (Court, 2022a).

At the close of voting, at 05:00 PM, the integrity test also ends, even if only some of the ballots have been entered. The Ballot Box Report (in Portuguese, “Boletim de Urna”, or “BU”) is printed with the vote totals to provide a physical record of the results (Court, 2022a). A storage medium containing the results is removed from the voting machine and inserted into the computer, and the system generates a comparative report between the BU and the ballots entered in the support system.

If the results match, a closing report is prepared. If there is a discrepancy between the BU and the support system's report, the discrepancies are identified, and the entries for the respective ballots are checked against the timestamps and video recordings. If the discrepancy persists, all entered ballots must be reviewed, and a detailed record of all discrepancies, even resolved ones, is included in the report (Court, 2022a).
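The comparison between the BU and the support system is, in essence, a reconciliation of two tallies. As an illustration only, the Python sketch below assumes that both sides can be reduced to vote counts per (position, vote) pair; this representation and the helper names `tally` and `find_discrepancies` are hypothetical, since the actual BU and support-system formats are not described here.

```python
from collections import Counter


def tally(ballots):
    """Count votes per (position, vote) pair; each ballot maps position -> vote."""
    totals = Counter()
    for ballot in ballots:
        for position, vote in ballot.items():
            totals[(position, vote)] += 1
    return totals


def find_discrepancies(bu_totals, entered_ballots):
    """Return {(position, vote): (bu_count, support_count)} for every pair
    whose count in the BU differs from the count in the support system."""
    support_totals = tally(entered_ballots)
    keys = set(bu_totals) | set(support_totals)
    return {
        key: (bu_totals.get(key, 0), support_totals[key])
        for key in sorted(keys)
        if bu_totals.get(key, 0) != support_totals[key]
    }


# Hypothetical example: a ballot entered as candidate 22 in the support
# system appears as candidate 13 in the BU, so both pairs are flagged.
bu = {("president", "13"): 2, ("president", "22"): 1}
entered = [{"president": "13"}, {"president": "22"}, {"president": "22"}]
print(find_discrepancies(bu, entered))
```

An empty result corresponds to the case in which a closing report is prepared; any flagged pair points to the ballots that would be checked against timestamps and video.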

3 RELATED WORKS

Robotic arms offer a way to simulate user interactions with touch screens and device movements, enhancing the precision and accessibility of software testing. This automation enables scenarios that are difficult for humans to reproduce and ensures consistent testing over extended sequences of movements.

In (Banerjee and Yu, 2018b), a six-axis articulated robot automates tests of facial recognition software for mobile phones. The robotic arm enables precise positioning of the phones, varying camera tilt angles, and simulation of real-world scenarios such as device shaking, improving the testing of the algorithm's accuracy, functionality, stability, and performance.

(Banerjee et al., 2018) applies a robotic arm to automate tests of image rectification software, improving efficiency and scalability by reducing the need for manual testing. Specific lighting and safety conditions are implemented for better coverage of configuration variations.

(Frister et al., 2020) proposes using an industrial robotic arm equipped with a capacitive stylus to test Android mobile applications through consistent, repetitive touchscreen interactions. This approach detects errors and unexpected behaviors with high precision and scales well across testing scenarios.

In (Banerjee and Yu, 2018a), a robotic arm automates tests of a motion-based image capture system, demonstrating superior performance in image capture latency, relative motion measurement, and precision compared to third-party solutions.

These studies show diverse applications of robotic arms in software testing, emphasizing precision, consistency, and scalability. Applying this technology to the integrity testing of electronic ballot boxes could offer an innovative and practical way to enhance the security and reliability of the electoral process.

4 AUTOMATION SYSTEM FOR INTEGRITY TESTING OF ELECTRONIC BALLOT BOXES

The proposed system aims to automate the integrity test. It extracts the voter ID and votes from each paper ballot and inputs them into the respective terminals, the polling station terminal and the voter's terminal, following the order of the political positions. The system consists of three main units: the Kinova Gen3 lite robotic arm (Kinova, 2020), the application software, and the module for extracting information from the paper ballots. In addition to these units, the customizations and the automation flow of the integrity test are presented.

4.1 Application Software

The application software serves as the user's interface to the robot and, through its database, as the system's information repository. It therefore enables user interaction and the handling of possible issues, such as forgetting to change the paper ballot. This part of the system can be subdivided into frontend, backend, and database components.

4.1.1 Frontend

The frontend was developed using Flutter, a cross-platform framework developed by Google (GOOGLE, 2017). First, the user selects the robot model to connect to and the model of electronic ballot box to be audited, either 2015 or 2020. The connection is established via a socket, and these choices can only be made if the server (backend) is online. The application offers four ways to interact with the robot and the electronic ballot box: manual voting, batch voting, OCR voting, and assistive voting.

In manual voting, the user enters the voter ID and each vote through the application, using an interface similar to that of the electronic ballot box; this mode was developed to allow voting from the keyboard. Batch voting is the mode used to automate the integrity test: the user starts the automation, and the robot runs in a loop, extracting information from each paper ballot, enabling the voter's terminal, and voting on the electronic ballot box, while the user is responsible for changing the paper ballots on the template. OCR voting works like batch voting, except that the user must verify each captured image, as well as the information extracted from it, at every execution.

In assistive voting (da Silva Mendonça et al., 2023), a head mouse driven by a camera assists individuals with reduced mobility while preserving the secrecy of their votes. The user moves the cursor with eye movements to the positions needed to cast the vote, and blinking acts as the click. The interface for this mode is designed to closely resemble the electronic ballot box interface.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

Batch voting also has some exceptions that the user handles manually. Visual feedback is provided when reading a paper ballot fails or when the user forgets to change the paper ballot: the application displays a message asking the user to check the paper ballot's position or indicating that the paper ballot has already been read.

4.1.2 Backend

In the backend, the connection with the robot is established via a socket on a localhost server. All information arriving from the extraction unit passes through the backend and is then sent to the robot. Each movement is executed using the joint values obtained by manual mapping, which are stored in a .json file for each position. The backend also adjusts the arm's movement timing according to the type of voter, because some voters have registered fingerprints and others do not, and there are other exceptions that do not follow the standard flow, such as visually impaired voters.
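The message format exchanged over the localhost socket is not described. As a minimal sketch, assuming newline-delimited JSON messages with hypothetical fields (`action`, `position`, `voter_type`), both ends of the connection could frame commands as below. `socket.socketpair()` stands in for the localhost TCP connection so the example runs without a server; it is not the authors' protocol.

```python
import json
import socket


def send_command(sock, command):
    """Send one command as a JSON object terminated by a newline."""
    sock.sendall((json.dumps(command) + "\n").encode("utf-8"))


def receive_commands(sock):
    """Yield decoded commands until the peer closes the connection.

    A stream socket does not preserve message boundaries, so messages are
    reassembled from a buffer using the newline delimiter instead of
    assuming one recv() call per message.
    """
    buffer = b""
    while True:
        chunk = sock.recv(4096)
        if not chunk:
            break
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            yield json.loads(line)


# Demo: the backend sends two hypothetical move commands to the robot side.
backend, robot = socket.socketpair()
send_command(backend, {"action": "move", "position": "voter_5_above"})
send_command(backend, {"action": "move", "position": "voter_5_pressed"})
backend.close()
for command in receive_commands(robot):
    print(command)
robot.close()
```

Delimiting messages explicitly matters because several small commands sent in quick succession may arrive in a single `recv` call.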

The image of the paper ballot captured by the camera on the robotic arm is sent to the backend, where all the information is extracted, as explained in Section 4.2. All extracted votes and voter IDs are saved in the database along with the insertion timestamp, as described in Section 4.1.3.

4.1.3 Database

The system's database was developed using MySQL because of its ease of use, scalability, security, and multi-platform support. The database has two main roles: supporting data retrieval and guiding the voting flow. Since the integrity test is conducted with voters from a fictitious electoral section, all voters in that section are known in advance. Initially, the database is populated with the voters with biometric recognition, the voters without biometrics, and the voters with visual impairments. These variations lead to different voting flows on the electronic ballot box.

During the experiments, the database stores the voter ID, votes, and timestamps. This makes it possible to check whether an extracted voter ID has already been used, so that the robotic arm does not attempt to enable the same voter again, avoiding lost time. At the end of the voting process, the information in the database is compared with the election results report to ensure the integrity of the votes. It is also checked against the vote recording media inside the electronic ballot box.
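The duplicate check described above amounts to a keyed lookup before a voter is enabled. The sketch below uses Python's built-in `sqlite3` module as a stand-in for MySQL so that it runs without a server; the table `cast_votes`, its columns, and the helper functions are hypothetical rather than the authors' schema, and porting it to MySQL would need minor adaptation (for example, `%s` placeholders and a bounded `VARCHAR` key column).

```python
import sqlite3
from datetime import datetime, timezone


def open_database(path=":memory:"):
    """Create (if needed) a table with one row per voter who has voted."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS cast_votes (
               voter_id    TEXT PRIMARY KEY,
               votes       TEXT NOT NULL,
               inserted_at TEXT NOT NULL
           )"""
    )
    return conn


def already_voted(conn, voter_id):
    """True if this voter ID is already stored, i.e. the ballot was not changed."""
    row = conn.execute(
        "SELECT 1 FROM cast_votes WHERE voter_id = ?", (voter_id,)
    ).fetchone()
    return row is not None


def record_vote(conn, voter_id, votes):
    """Store the voter ID, the votes in position order, and a UTC timestamp."""
    conn.execute(
        "INSERT INTO cast_votes (voter_id, votes, inserted_at) VALUES (?, ?, ?)",
        (voter_id, ",".join(votes), datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


conn = open_database()
print(already_voted(conn, "0000.0076.8762"))  # False
record_vote(conn, "0000.0076.8762", ["1234", "13"])  # hypothetical vote numbers
print(already_voted(conn, "0000.0076.8762"))  # True
```

Declaring the voter ID as the primary key also makes the database itself reject a second insertion for the same voter.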

4.2 Information Extraction from Paper Ballots

We followed the methodology proposed by (Silva et al., 2023), which was developed in conjunction with our system. It is based on Optical Character Recognition (OCR) applied to automating integrity tests in Brazilian elections.

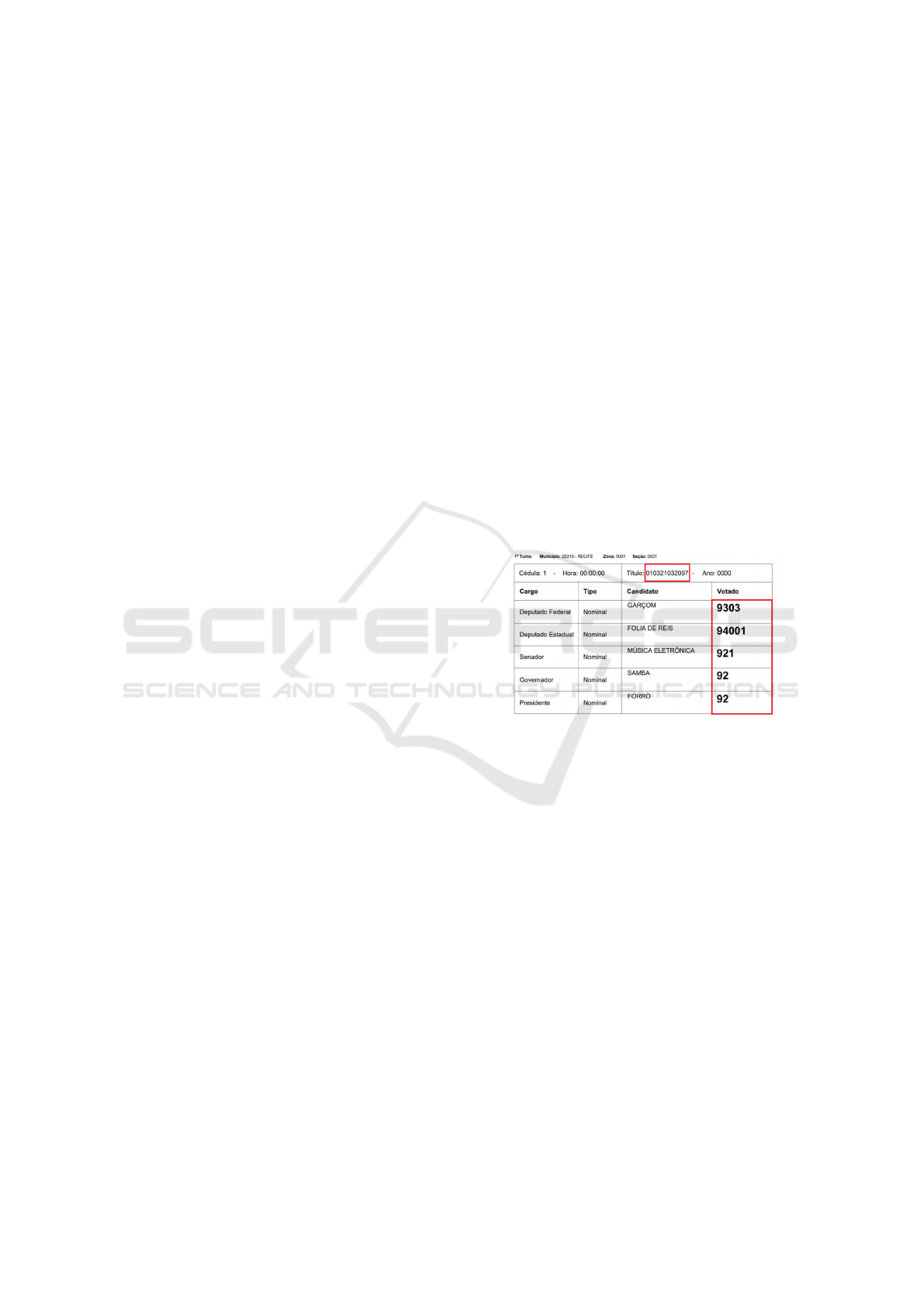

Using YOLOv4 (Bochkovskiy et al., 2020), two

regions of interest are cropped - one with the voter

ID and another with the votes, highlighted in red in

Figure 1. Then, EasyOCR (Awalgaonkar et al., 2021)

is used for information extraction. After extracting

the information from the paper ballots (voter ID and

vote numbers), the processing is performed to cor-

rect possible extraction errors, such as reading errors

when EasyOCR cannot extract the voter ID informa-

tion correctly or ballot misplacement errors when the

paper ballot is not correctly positioned in the physical

template. Then, the extracted information is sent to

the system’s backend, allowing the voting process to

continue.

Figure 1: Voting ballot.
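The post-processing step is not specified in detail. One plausible approach is sketched below, assuming the voter ID is printed as 12 digits in the ####.####.#### layout of the example 0000.0076.8762 given in Section 5, and using a hypothetical table of letters that OCR engines sometimes return in place of digits. The optional check against the section's voter list reflects the fact that the database already holds all voters of the section; `normalize_voter_id` is an illustrative name, not the authors' code.

```python
import re

# Hypothetical table of letters an OCR engine may return in place of digits.
OCR_DIGIT_FIXES = str.maketrans({"O": "0", "o": "0", "D": "0", "I": "1",
                                 "l": "1", "|": "1", "Z": "2", "S": "5",
                                 "G": "6", "B": "8"})
VOTER_ID_DIGITS = 12  # assumed from the ####.####.#### layout


def normalize_voter_id(raw_text, known_ids=None):
    """Return the voter ID as '####.####.####', or None if it is not plausible."""
    digits = re.sub(r"\D", "", raw_text.translate(OCR_DIGIT_FIXES))
    if len(digits) != VOTER_ID_DIGITS:
        return None  # would be reported to the operator as a reading error
    voter_id = f"{digits[:4]}.{digits[4:8]}.{digits[8:]}"
    if known_ids is not None and voter_id not in known_ids:
        return None  # not a voter of this section: treat as a misread
    return voter_id


print(normalize_voter_id("OOOO.0076.8762"))  # 0000.0076.8762
print(normalize_voter_id("0000.0076.876"))   # None (only 11 digits)
```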

4.3 Customizations

Before starting the process, it was necessary to obtain the spatial position of every point to which the robotic arm needed to move. Using a joystick to control the robotic arm and a Python script to monitor the joint values, the values of the six joints were obtained for each position. These values were recorded in a Google Sheets spreadsheet and then converted into a .json file that the software could read.
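The conversion from spreadsheet to .json can be sketched as follows, assuming the sheet is exported as CSV with one row per named position and one column per joint. The column names (`position`, `j1` to `j6`), the position names, and the joint values are all made up for illustration; the actual spreadsheet layout and file schema are not given in the text.

```python
import csv
import io
import json

JOINTS = 6  # six joint values are recorded per position


def sheet_to_positions(csv_text):
    """Map each named position to its list of six joint values."""
    positions = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        positions[row["position"]] = [float(row[f"j{i}"]) for i in range(1, JOINTS + 1)]
    return positions


# Illustrative export: column names and joint values are hypothetical.
exported = """position,j1,j2,j3,j4,j5,j6
voter_5_above,10.5,20.0,95.2,0.0,45.1,90.0
voter_5_pressed,10.5,22.3,97.8,0.0,42.6,90.0
"""
positions = sheet_to_positions(exported)
print(json.dumps(positions, indent=2))  # json.dump(positions, file) would save it
```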

The mapped positions included the keys of the polling station terminal, the keys of the voter's terminal, and the position for reading the paper ballot. All of these positions have associated safety positions to ensure safe arm movement. Each key has two positions: one slightly above the key and another with the key pressed.
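Given these mapped positions, typing a number reduces to a sequence of joint targets. The sketch below assumes one safety pose per terminal and a hypothetical naming convention (`<terminal>_safe`, `<terminal>_<key>_above`, `<terminal>_<key>_pressed`); for each key the arm goes above the key, presses it, and lifts back. The demo uses text labels in place of joint vectors so the order of moves is visible.

```python
def typing_path(positions, terminal, keys):
    """Ordered joint targets to type `keys` on one terminal.

    The sequence starts and ends at the terminal's safety pose (assumed to be
    one per terminal); each key is approached from above, pressed, and released.
    """
    safe = positions[f"{terminal}_safe"]
    path = [safe]
    for key in keys:
        above = positions[f"{terminal}_{key}_above"]
        pressed = positions[f"{terminal}_{key}_pressed"]
        path.extend([above, pressed, above])
    path.append(safe)
    return path


# Demo with labels instead of joint vectors, to show the order of moves.
demo = {"voter_safe": "SAFE"}
for k in "0123456789":
    demo[f"voter_{k}_above"] = f"{k}-above"
    demo[f"voter_{k}_pressed"] = f"{k}-pressed"
print(typing_path(demo, "voter", "13"))
```

Returning to the "above" pose after every press is what keeps the finger from dragging across neighboring keys between digits.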

To ensure correct positioning, a template was created to fix the positions of the terminals, the ballot box, and the paper ballot relative to the robotic arm. This template was 3D-printed, as shown in Figures 3 and 4.

In addition to the template, two other 3D-printed parts were developed. One is a base for a silicone finger that minimizes the effort exerted on the keys of the 2015 version of the voter terminal and the polling station terminal. For the 2020 version, which has a touch screen, the silicone finger is covered with a glove finger made of silver mesh and elastane, connected by a USB cable to the robot's base; this allows the robotic arm to interact with the touch screen by generating static electricity through the contact between the glove and the USB cable. Finally, a support was designed and printed to attach the camera to the robotic arm. These parts are shown in Figure 2.

Figure 2: Camera stand and silicone finger.

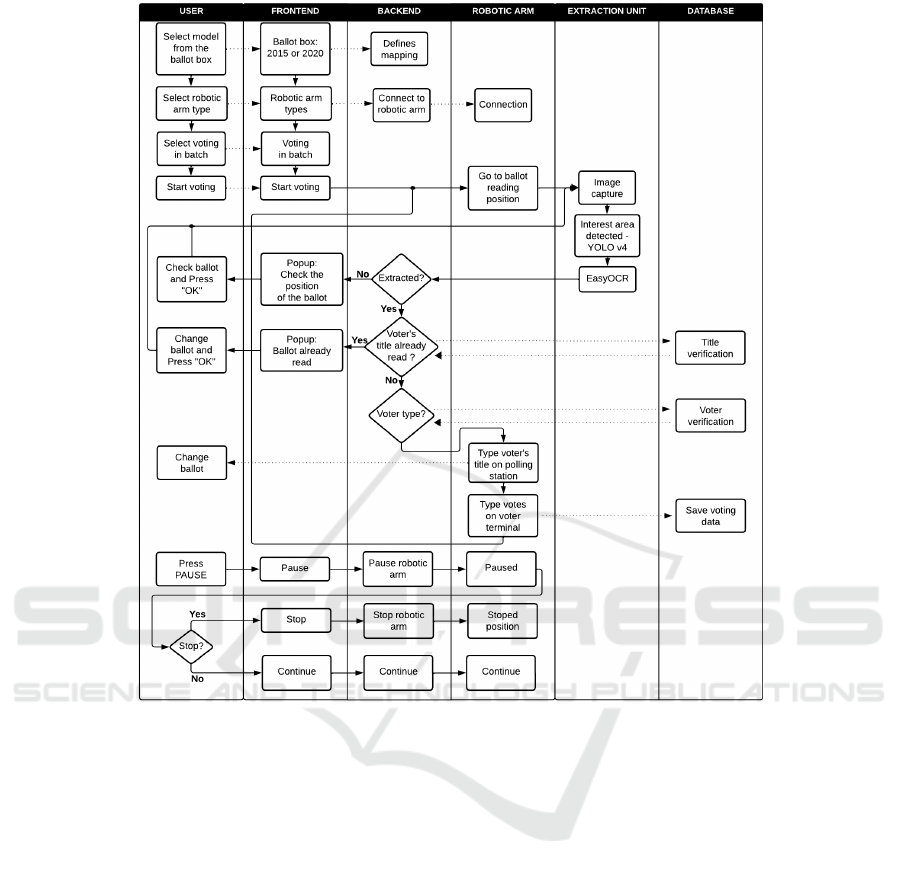

4.4 Automation Flow

The robot, fitted with the camera and silicone finger, is first secured to the table, and the template is assembled. Each terminal is placed in its position, and the key positions are obtained and saved in a .json file. The model of the voting machine and the type of robot used in the system are then selected.

When the integrity test starts, a voter ID is entered into the auxiliary system along with the previously filled-out votes, just as in the non-automated process. A ballot containing the votes and the voter ID is printed and placed in its position on the template. The user starts batch voting, and the robot moves to the ballot reading position, as shown in Figure 3. An image of the ballot is captured, and all necessary information is extracted. If the system cannot extract the necessary information, a warning is displayed on the frontend prompting the user to check the position of the ballot, since they may have forgotten to place one or some other external factor may be hindering extraction. If extraction succeeds, the system checks whether the voter ID is already in the database; if it is, the user has forgotten to change the ballot, and a warning on the frontend instructs them to do so. If the voter ID is not yet in the database, the system looks up the voter type in the database to determine the flow, which varies according to the type of voter.

Figure 3: Electronic ballot box test 2015.

Figure 4: Electronic ballot box test 2020.

The robot types the extracted voter ID into the polling station terminal and enables the voter's terminal. It then types each vote extracted from the paper ballot into the voter's terminal, as shown in Figure 4. Once all votes for that voter ID have been cast, the information is saved in the database, and the robot returns to the ballot reading position to start a new voting cycle.
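The decision logic of one batch-voting cycle can be summarized as a small function. The sketch below is hypothetical: `process_ballot`, the `Outcome` values, and the `cast_vote` callback are illustrative names, and an in-memory set stands in for the database lookup described in Section 4.1.3. It also assumes every extracted voter ID belongs to the section, so its type is always found.

```python
from enum import Enum


class Outcome(Enum):
    CHECK_BALLOT_POSITION = "could not extract data: check the ballot position"
    CHANGE_BALLOT = "voter ID already used: change the ballot"
    VOTED = "vote cast"


def process_ballot(extracted, voted_ids, voter_types, cast_vote):
    """Run one batch-voting cycle and report what the frontend should show.

    extracted   -- (voter_id, votes) from the extraction module, or None
    voted_ids   -- set of voter IDs already stored (stands in for the database)
    voter_types -- dict voter_id -> voter type, which selects the voting flow
    cast_vote   -- callback driving the arm: cast_vote(voter_id, votes, voter_type)
    """
    if extracted is None:
        return Outcome.CHECK_BALLOT_POSITION
    voter_id, votes = extracted
    if voter_id in voted_ids:
        return Outcome.CHANGE_BALLOT
    cast_vote(voter_id, votes, voter_types[voter_id])
    voted_ids.add(voter_id)  # saved only after all votes were typed
    return Outcome.VOTED


arm_log = []
voted = set()
types = {"0000.0076.8762": "biometric"}
ballot = ("0000.0076.8762", ["13"])
for extracted in (None, ballot, ballot):  # no ballot, new ballot, unchanged ballot
    outcome = process_ballot(extracted, voted, types,
                             lambda vid, votes, vtype: arm_log.append((vid, vtype)))
    print(outcome.value)
```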

While the robot is typing the voter ID, the user can begin entering another voter ID and its votes into the auxiliary system, print the ballot, and replace the ballot that has just been read, so that the process continues. If any issue arises, the user can pause the system and resume from where it stopped. Figure 5 gives a visual representation of the entire flow.

Table 1: Test Analysis.

| Model-day | 2015-01 | 2015-02 | 2015-03 | 2020-01 | 2020-02 | 2020-03 |
|---|---|---|---|---|---|---|
| Total time | 9 h | 9 h | 8 h 53 min | 8 h 47 min | 8 h 22 min | 8 h 53 min |
| Quantity of votes | 225 | 225 | 197 | 232 | 223 | 197 |
| Average time per vote | 2 min 24 s | 2 min 24 s | 2 min 43 s | 2 min 16 s | 2 min 15 s | 2 min 43 s |
| Electronic ballot box inspections | 7 | 6 | 6 | 4 | 4 | 4 |
| Reading errors | 10 | 10 | 2 | 3 | 12 | 4 |
| Ballot exchange errors | 0 | 2 | 0 | 3 | 0 | 0 |
| Typing errors | 0 | 0 | 3 | 1 | 1 | 1 |
| External errors | 0 | 0 | 0 | 1 | 0 | 0 |

5 EXPERIMENTS AND RESULTS

The experiments were designed to simulate a real integrity test. Two days of laboratory testing were performed for each electronic ballot box model, 2015 and 2020, followed by a third day during the real integrity test. For these tests, the robots were set up in a central area of the audit for greater visibility, and with a single system operator, we were able to use two robotic arms to audit two electronic ballot boxes simultaneously, one of the 2015 model and the other of the 2020 model, reducing the required workforce from six people to one per shift (morning/afternoon). A total of 235 ballots were used, with voter IDs registered in the electronic ballot box training software and votes randomly generated among fictitious candidates.

Three situations that require user interaction with the system may occur during the experiments. The first is when the extraction system cannot extract the voter ID, either because there is no paper ballot in the template or because the lighting is inadequate, so that the camera cannot capture the ballot's information due to occlusion or shadow. The second is when the ballot is not changed because the operator forgot to replace it in the template. The last is the voting machine inspection process. This inspection is already part of the standard procedure, occurs at random, and allows the election worker to check that the voting machine is working correctly; the worker performs quick procedures on the terminal and the voting machine to release the next voter.

Table 1 contains the data from all the experiments conducted in the laboratory (days 01 and 02) and on the day of the real integrity test, October 2, 2022 (day 03). The first row identifies the electronic ballot box model and the test day. The total time is the period the system spent voting, from the first ballot to the last. The quantity of votes is the number of ballots read and voted without errors. The average time per vote is the total time divided by the quantity of votes, so it also includes the time spent on electronic ballot box inspections and on handling errors. The next three rows give the number of occurrences of the three situations that required user interaction with the system, as described in the previous paragraph.
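Because the average time per vote is simply the total time divided by the quantity of votes, it can be recomputed from Table 1, as in the Python snippet below. Rounding to the nearest second is our choice. The day-01 and day-02 columns match the table, while both day-03 columns come out at 2 min 42 s instead of the reported 2 min 43 s, a one-second difference presumably due to rounding in the reported figures.

```python
# (total time in minutes, quantity of votes) per column of Table 1
TABLE_1 = {
    "2015-01": (9 * 60, 225),
    "2015-02": (9 * 60, 225),
    "2015-03": (8 * 60 + 53, 197),
    "2020-01": (8 * 60 + 47, 232),
    "2020-02": (8 * 60 + 22, 223),
    "2020-03": (8 * 60 + 53, 197),
}


def average_time_per_vote(total_minutes, votes):
    """Average time per vote as (minutes, seconds), rounded to the nearest second."""
    seconds = round(total_minutes * 60 / votes)
    return divmod(seconds, 60)


for column, (total_minutes, votes) in TABLE_1.items():
    minutes, seconds = average_time_per_vote(total_minutes, votes)
    print(f"{column}: {minutes} min {seconds} s")
```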

The 2015 model had an average time per vote of 2 minutes and 24 seconds on both laboratory days, and the 2020 model had 2 minutes and 16 seconds on the first day and 2 minutes and 15 seconds on the second day. For reading errors, out of a total of 940 readings across both models over the two laboratory days, there were only 35 errors, or 3.72%. Since the 2020 model has a touchscreen terminal, there was one typing error on each day in which the terminal registered two touches on the same number. An unforeseen error also occurred on the first day with the 2020 model, when the electronic ballot box test software crashed and had to be restarted. In addition, for the 2020 model there is a slight discrepancy in the number of votes cast between the first and second days. This was caused by a particular error in which some ballots with many leading zeros in the voter ID (e.g., 0000.0076.8762) could not be read on the second day of testing. As a result, the total time was also shorter, because fewer ballots were successfully read.

On “day 03”, the system was run on two electronic ballot boxes, models 2015 and 2020, at the designated integrity test location. The tests on that day highlighted the potential of the application: in number of votes, the system was surpassed by only one of the twenty-seven sections audited manually by people, and that section had far fewer registered voters than the section audited by the robotic arm.

Figure 5: Flow automation.

Both models took the same amount of time, 8 hours and 53 minutes, to complete the voting process, each processing 197 votes with an average time per vote of 2 minutes and 43 seconds. Regarding reading errors, out of 404 readings, there were only six errors, or 1.48%. In terms of typing errors, there were only four double-click errors. There were no errors caused by unmapped external agents, nor were there any ballot exchange errors.
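The reported error rates can be checked against Table 1: the reading errors in the laboratory columns sum to 35 and those of day 03 sum to 6, and dividing by the total readings stated in the text (940 and 404) reproduces the reported percentages. The totals of readings themselves are taken from the text, since they cannot be derived from Table 1 alone. Note that 6/404 is about 1.485%, which the text reports as 1.48%.

```python
# Reading errors per column of Table 1.
READING_ERRORS = {"2015-01": 10, "2015-02": 10, "2015-03": 2,
                  "2020-01": 3, "2020-02": 12, "2020-03": 4}
# Total readings as stated in the text; they are not derivable from Table 1 alone.
TOTAL_READINGS = {"laboratory": 940, "day 03": 404}


def error_rate(errors, readings):
    """Reading errors as a percentage of all readings."""
    return 100 * errors / readings


lab_errors = sum(n for col, n in READING_ERRORS.items() if col.endswith(("-01", "-02")))
day3_errors = sum(n for col, n in READING_ERRORS.items() if col.endswith("-03"))

for label, errors in (("laboratory", lab_errors), ("day 03", day3_errors)):
    readings = TOTAL_READINGS[label]
    print(f"{label}: {errors}/{readings} = {error_rate(errors, readings):.3f}%")
```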

6 CONCLUSION

The implementation of an automation system for electoral integrity auditing represents a significant advance in the context of Brazilian elections. Currently, this process involves 6 to 33 electronic ballot boxes and an average of 100 people to conduct the audits, depending on the state performing the audit. This study sought to evaluate the effectiveness of the developed system through laboratory tests and an integrity test conducted during the 2022 presidential elections. The results indicate that the system can handle different models of electronic ballot boxes and adverse situations, demonstrating robustness to ballot reading errors.

The system provides a way to mitigate typing errors caused by inattention or fatigue, which can be highly detrimental to ensuring a clean election: when such errors occur, one could argue that the fault lies with the electronic ballot box software rather than with human error, even though the entire process is recorded. The system also reduces the workload of the TRE teams, who would otherwise need to review the recordings to identify why a particular error occurred. This approach can increase the number of completed votes, reduce the number of people involved in the auditing process, and make the process more transparent.

As the audit is conducted across the entire Brazilian territory, there is no standard setup, which leads to numerous variables that an automation system must address, such as varying illumination. This study serves as a starting point for future research and development in electoral automation, emphasizing the importance of improving audit systems to ensure the integrity of the democratic process.

As future work, the automation system could be made more secure and more fully automated by verifying the data entered in both terminals through screen reading.

ACKNOWLEDGEMENTS

This work was supported by the research and innovation cooperation project between Softex (funded by the Ministry of Science, Technology and Innovation through Law 8.248/91 in the scope of the National Priority Program) and CIn-UFPE.

REFERENCES

Awalgaonkar, N., Bartakke, P., and Chaugule, R. (2021). Automatic license plate recognition system using SSD. In 2021 International Symposium of Asian Control Association on Intelligent Robotics and Industrial Automation (IRIA), pages 394–399. IEEE.

Banerjee, D. and Yu, K. (2018a). Integrated test automation for evaluating a motion-based image capture system using a robotic arm. IEEE Access, 7:1888–1896.

Banerjee, D. and Yu, K. (2018b). Robotic arm-based face recognition software test automation. IEEE Access, 6:37858–37868.

Banerjee, D., Yu, K., and Aggarwal, G. (2018). Image rectification software test automation using a robotic arm. IEEE Access, 6:34075–34085.

Bochkovskiy, A., Wang, C.-Y., and Liao, H.-Y. M. (2020). YOLOv4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934.

Court, R. E. C. (2022a). Como ocorre o teste de integridade das urnas eletrônicas. https://www.tre-ce.jus.br/eleicao/eleicoes-2022/auditoria-de-funcionamento-das-urnas-eletronicas/como-ocorre-a-auditoria-de-funcionamento-das-urnas-eletronicas. Accessed: 2023-09-10.

Court, R. E. S. (2022b). Auditoria dia da eleição (teste de integridade). https://www.tre-sp.jus.br/eleicoes/eleicoes-2022/auditoria-dia-da-eleicao-teste-de-integridade. Accessed: 2023-09-10.

Court, S. E. (2022c). Ballot box. https://international.tse.jus.br/en/electronic-ballot-box/presentation. Accessed: 2023-09-10.

Court, S. E. (2022d). Comparecimento/abstenção. https://sig.tse.jus.br/ords/dwapr/r/seai/sig-eleicao-comp-abst/home?session=204150010670548. Accessed: 2023-09-15.

Court, S. E. (2022e). Election process in Brazil. https://international.tse.jus.br/en/elections/election-process-in-brazil. Accessed: 2023-09-10.

Court, S. E. (2022f). Eleitorado da eleição - estatísticas. https://sig.tse.jus.br/ords/dwapr/r/seai/sig-eleicao-eleitorado/home?session=204150010670548. Accessed: 2023-09-15.

Court, S. E. (2022g). Justiça eleitoral recebe mais de 206 mil novas urnas que serão utilizadas nas eleições 2022. https://www.tse.jus.br/comunicacao/noticias/2022/Junho/justica-eleitoral-recebe-mais-de-206-mil-novas-urnas-que-serao-utilizadas-nas-eleicoes-2022. Accessed: 2023-09-12.

Court, S. E. (2022h). Testes de integridade - relatórios referentes ao 1º e 2º turnos. https://www.tse.jus.br/eleicoes/eleicoes-2022/testes-de-integridade-relatorios-referentes-ao-1o-e-2o-turnos. Accessed: 2023-09-15.

da Silva Mendonça, F. A., Teixeira, J. M. X. N., and da Silva Júnior, M. R. (2023). Includevote: Development of an assistive technology based on computer vision and robotics for application in the Brazilian electoral context. In Radeva, P., Farinella, G. M., and Bouatouch, K., editors, Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, VISIGRAPP 2023, Volume 4: VISAPP, Lisbon, Portugal, February 19-21, 2023, pages 755–765. SCITEPRESS.

Fachin, E. (2021). Resolução nº 23.674, de 16 de dezembro de 2021. https://www.tse.jus.br/legislacao/compilada/res/2021/resolucao-no-23-674-de-16-de-dezembro-de-2021. Accessed: 2023-09-10.

Frister, D., Oberweis, A., and Goranov, A. (2020). Automated testing of mobile applications using a robotic arm. In 2020 International Conference on Computational Science and Computational Intelligence (CSCI), pages 1729–1735. IEEE.

GOOGLE (2017). Flutter. https://flutter.dev/. Accessed: 2023-10-04.

Kinova (2020). Gen3 lite robot. https://www.kinovarobotics.com/product/gen3-lite-robots. Accessed: 2023-08-20.

Sansão, S. (2018). Resolução nº 23.674, de 16 de dezembro de 2021. https://www.tre-ro.jus.br/legislacao/compilada/resolucao/2018/resolucao-n-06-2018. Accessed: 2023-08-10.

Silva, J., Guerra, G., Rodrigues, Y., Veloso, P., Silva, C., Souza, P., and da Silva, M. (2023). OCRs for extracting characters from ballots in the process of automating the integrity test in the Brazilian elections. Proceedings of the Future Technologies Conference (FTC) 2023, Volume 1.
