Dynamic Path Planning for Autonomous Vehicles: A Neuro-Symbolic Approach

Omar Elrasas¹, Nourhan Ehab¹, Yasmin Mansy¹ and Amr El Mougy²

¹ Department of Computer Science and Engineering, German University in Cairo, Cairo, Egypt

² Department of Computer Science and Engineering, American University in Cairo, Cairo, Egypt

Keywords:

Neuro-Symbolic AI, Dynamic Path Planning, Autonomous Vehicles.

Abstract:

The rise of autonomous vehicles has transformed transportation, promising safer and more efficient mobility.

Dynamic path planning is crucial in autonomous driving, requiring real-time decisions for navigating complex

environments. Traditional approaches, like rule-based methods or pure machine learning, have limitations

in addressing these challenges. This paper explores integrating Neuro-Symbolic Artificial Intelligence (AI)

for dynamic path planning in self-driving cars, creating two regression models with the Logic Tensor Networks (LTN) Neuro-Symbolic framework. Tested in the CARLA simulator, the models effectively followed road lanes, avoided obstacles, and adhered to speed limits. Root mean square error (RMSE) gauged the

LTN models’ performance, revealing significant improvement, particularly with small datasets, showcasing

Neuro-Symbolic AI’s data efficiency. However, LTN models had longer training times compared to linear and

XGBoost regression models.

1 INTRODUCTION

Self-driving vehicles are a transformative technology

with the potential to revolutionize global transporta-

tion systems. The ability of autonomous cars to sense,

decide, and navigate dynamic environments relies on

sophisticated computational models and complex al-

gorithms. Among the challenges faced, dynamic path

planning is crucial for real-time decision-making and

adaptability to changing road conditions.

Conventional approaches to path planning in self-

driving cars include rule-based methodologies and

pure machine learning techniques (González et al.,

2015). However, rule-based methods struggle with

adaptability, while machine learning methods lack

transparency. Addressing these challenges requires

an approach that combines clear rule-based thinking

with machine learning capabilities. Neuro-Symbolic

approaches aim to achieve this integration by com-

bining symbolic reasoning with deep neural networks

(Sarker et al., 2021).

Our objective is to explore Neuro-Symbolic Com-

puting for dynamic path planning in autonomous ve-

hicles. The aim is to develop a path planning system

that can swiftly adapt to changing road conditions and

potential risks. The paper investigates the integration

of symbolic reasoning techniques with deep learning

models using the Logic Tensor Network framework

(Badreddine et al., 2022), resulting in a data-efficient

Neuro-Symbolic regression model.

The rest of the paper is organized as follows. Sec-

tion 2 provides background information, Section 3

discusses related research, and Section 4 presents the

proposed neuro-symbolic path planning system. Sec-

tion 5 explains model evaluation and presents results,

including comparisons with other machine learning

methods. Section 6 concludes the paper by summa-

rizing the work and suggesting future research direc-

tions.

2 BACKGROUND

In this section, we delve deeper into the background

of our research landscape, shedding light on crucial

aspects that underpin our exploration of Logic Tensor

Networks (LTN), path planning for autonomous vehi-

cles, and the CARLA simulator.

Elrasas, O., Ehab, N., Mansy, Y. and El Mougy, A.

Dynamic Path Planning for Autonomous Vehicles: A Neuro-Symbolic Approach.

DOI: 10.5220/0012374700003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 584-591

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2.1 Logic Tensor Networks

Logic Tensor Networks (LTN) is a novel Neuro-Symbolic Python framework that seamlessly combines key properties of both neural networks (for

training on data) and symbolic logic (for logical

reasoning). Two versions of LTN have been published, one built on TensorFlow and the other on PyTorch, both widely used Python machine learning frameworks. LTN operates on a first-order logic knowledge base made up of axioms, functions, predicates, and logical constants. It uses the logical axioms as a loss function, seeking solutions that maximally satisfy all the axioms in the knowledge base (Badreddine et al., 2022).

Real Logic, a fully differentiable logical language

introduced by LTN, facilitates learning by ground-

ing elements of a first-order logic signature onto data

through neural computational graphs and first-order

fuzzy logic semantics. Grounding, defined by Real

Logic, involves mapping logical domain elements

(constants, variables, and logical symbols) to tensors,

enabling the incorporation of data and logic. LTN

converts Real Logic formulae into PyTorch or Tensor-

Flow computational graphs, depending on the version

used (Figure 1) (Badreddine et al., 2022).

Figure 1: Real Logic Computational Graphs (Badreddine

et al., 2022).
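To make the idea of fuzzy first-order semantics concrete, the following is a minimal pure-Python sketch, not LTN code. It assumes a product t-norm for conjunction, the Reichenbach implication, and a p-mean-error aggregator for the universal quantifier; these are common choices in the Real Logic literature, but the paper does not state which operators it uses. The predicate `is_close` and all numeric values are hypothetical.

```python
import math
from typing import Sequence


def and_prod(a: float, b: float) -> float:
    """Fuzzy conjunction (product t-norm) of two truth values in [0, 1]."""
    return a * b


def implies_reichenbach(a: float, b: float) -> float:
    """Fuzzy implication a -> b (Reichenbach form)."""
    return 1.0 - a + a * b


def forall_pmean_error(truths: Sequence[float], p: float = 2.0) -> float:
    """Smooth universal quantifier: high only if every truth value is high."""
    mean_error = sum((1.0 - t) ** p for t in truths) / len(truths)
    return 1.0 - mean_error ** (1.0 / p)


def is_close(distance_m: float) -> float:
    """Hypothetical grounding of a predicate: truth decays with distance."""
    return math.exp(-distance_m / 10.0)


# Truth of "for all obstacles: is_close(d) -> must_brake", with made-up values.
distances = [2.0, 15.0, 40.0]
brake_truth = [0.9, 0.4, 0.1]
rule = forall_pmean_error(
    [implies_reichenbach(is_close(d), b) for d, b in zip(distances, brake_truth)]
)
```

Because every operator is differentiable almost everywhere, a truth value such as `rule` can be pushed towards 1 by gradient descent, which is what allows axioms to act as a loss function.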

2.2 Path Planning for Autonomous Vehicles

Path planning is vital for autonomous vehicles to nav-

igate safely and efficiently in complex environments,

prioritizing passenger comfort. It encompasses two

main types: local and global path planning. Local

path planning focuses on generating short-term tra-

jectories to avoid immediate obstacles, while global

path planning finds a path from the starting point

to the destination, considering long-term constraints

(González et al., 2015).

A popular local path planning approach is the Artificial Potential Field (APF) method, which creates a virtual potential field to guide the vehicle and avoid obstacles. This method, widely used in robotics, is also applied in autonomous vehicle path planning (González et al., 2015). For global path planning, graph-based methods represent the environment as a graph and use algorithms such as Dijkstra's or A* to find the shortest path. Another method is the Rapidly-exploring Random Tree (RRT) algorithm, which incrementally grows a tree through the search space to find a feasible path (González et al., 2015).
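As an illustration of the graph-based approach to global planning, here is a short sketch of Dijkstra's algorithm over a small weighted graph. The road graph, its node names, and its edge costs are invented for the example and do not come from the paper or from CARLA.

```python
import heapq


def dijkstra(graph, start, goal):
    """Return (cost, path) of the cheapest route, or (inf, []) if unreachable."""
    queue = [(0.0, start, [start])]  # (cost so far, current node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road graph: node -> {neighbor: edge length}.
roads = {
    "A": {"B": 4.0, "C": 1.0},
    "B": {"D": 3.0},
    "C": {"B": 2.0, "D": 7.0},
    "D": {},
}
cost, path = dijkstra(roads, "A", "D")
```

A* follows the same structure but orders the queue by cost plus a heuristic estimate of the remaining distance to the goal.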

Machine learning techniques like deep learn-

ing and reinforcement learning have recently en-

hanced both local and global path planning for au-

tonomous cars. These approaches, such as a super-

vised reinforcement learning-based method proposed

by Hebaish et al. (Hebaish et al., 2022), demonstrate

promising outcomes in navigating complex situations.

2.3 CARLA Simulator

The CARLA simulator, standing for Car Learning

to Act, is an open-source platform designed for test-

ing and validating autonomous driving systems in re-

search and development. Built on the Unreal Engine,

CARLA provides a realistic environment for algo-

rithm and model testing across various driving sce-

narios, featuring customizable elements such as ob-

stacles, autonomous traffic, and weather conditions

in different towns with complex layouts (Dosovitskiy

et al., 2017).

CARLA supports various sensors, including LiDAR, radar, collision detectors, and RGB cameras,

enabling simulation of diverse perception scenarios

and testing perception algorithms. Researchers, such

as Dworak et al. (Dworak et al., 2019), have utilized

CARLA to evaluate LiDAR object detection deep

learning architectures based on artificially generated

point cloud data from the simulator.

In addition to sensor support, CARLA allows

the integration of various control algorithms for autonomous vehicles. For instance, Vlachos and Lalos (Vlachos and Lalos, 2022) proposed a cooperative control

algorithm for platooning using CARLA, demonstrat-

ing its effectiveness in different scenarios to improve

stability and fuel efficiency. CARLA has also been

extensively used in various research tasks, including

path planning, motion control, and behavior model-

ing. Hebaish et al. (Hebaish et al., 2022) tested their

supervised reinforcement learning-based path plan-

ning algorithm for autonomous driving in CARLA,

demonstrating its ability to generate safe and efficient

driving trajectories in complex scenarios.

3 RELATED WORK

In examining related work, it is observed that many

research papers proposing machine learning ap-

proaches for self-driving vehicles predominantly em-

ploy reinforcement learning. Sallab et al. (Sallab

et al., 2017) introduced a deep reinforcement learning

framework that handles partially observable scenarios

through recurrent neural networks. This framework


integrates attention models, focusing on relevant in-

formation to reduce computational complexity on em-

bedded hardware. Effective in complex road curva-

tures and vehicle interactions, the framework demon-

strates maneuvering capabilities. Another example by

Zong et al. (Zong et al., 2017) utilizes reinforcement

learning for obstacle avoidance, incorporating vehi-

cle constraints and sensor information with a deep

deterministic policy gradients algorithm. Both tech-

niques, however, face challenges such as long train-

ing times for achieving acceptable reward scores and

a lack of crucial context in potentially life-threatening

situations. The features of Neuro-Symbolic Artifi-

cial Intelligence (AI), such as explainability and data-

efficiency, offer potential solutions to these draw-

backs.

Given that Neuro-Symbolic AI is a relatively new

research field, practical examples of its full potential

are limited. An application in healthcare, proposed

by Lavin (Lavin, 2022), involves a probabilistic programmed deep kernel learning tool predicting

cognitive decline in Alzheimer’s disease. Effective in

neurodegenerative disease diagnosis, the tool is spec-

ulated to perform well in other disease areas. This

work will utilize Neuro-Symbolic AI for path plan-

ning in self-driving vehicles to explore its potential

in this field, leveraging its advantages over other ma-

chine learning techniques.

4 A NEURO-SYMBOLIC PATH PLANNER

This section delves into the architecture, implemen-

tation, and dataset generation for Neuro-Symbolic

models with the main aim of facilitating dynamic nav-

igation for autonomous vehicles in complex scenar-

ios.

4.1 System Design

In the CARLA simulator, vehicle control involves

three main parameters: throttle (ranging from 0 to 1),

brake (ranging from 0 to 1), and steer (ranging from

-1 to 1). The objective of the LTN models is to predict

these parameters continuously based on the current

situation and environment. To implement these mod-

els in CARLA and control the vehicle, a main script

is required to connect to the CARLA server, load the

map, and spawn the vehicle.

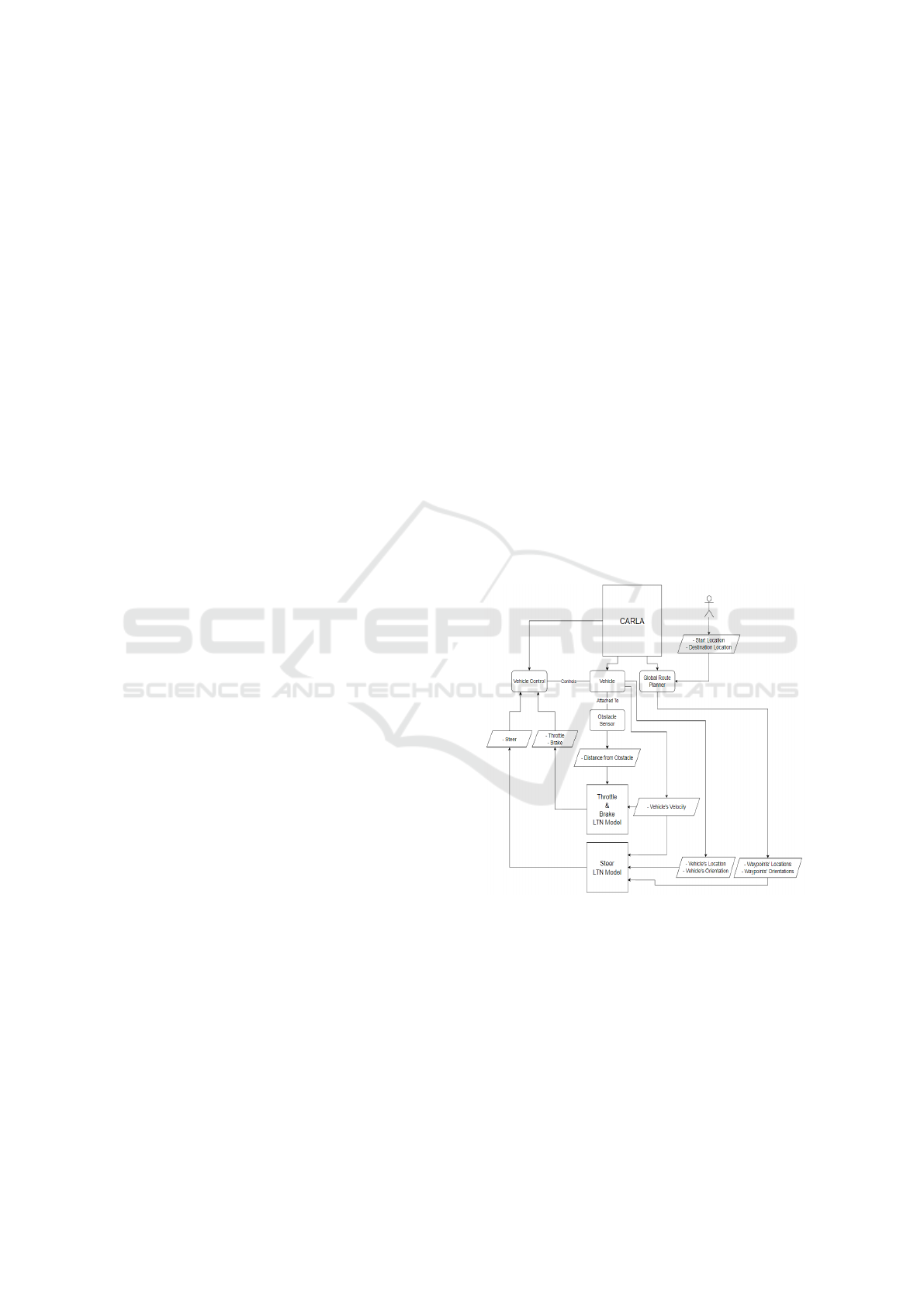

The project’s architecture, depicted in Figure 2,

comprises three main components: CARLA, the LTN

models, and the main script linking them together.

1. Route Planning: User-defined inputs, such as the

vehicle’s starting location and destination, initiate

CARLA’s global route planner. This planner cal-

culates an optimal route with a list of waypoints.

This stage occurs once at the script’s beginning.

2. Data Collection: Real-time information about

the vehicle and its environment is gathered in the

second stage. CARLA’s obstacle detection sensor

records distances to potential obstacles, while the

Python API retrieves details like speed, location,

orientation, and the next waypoint’s information

continuously.

3. Control and Prediction: The third stage involves

feeding the data collected in the second stage

into the Neuro-Symbolic LTN models. These

models predict throttle, brake, and steering com-

mands based on the current scenario using Neuro-

Symbolic techniques. The predictions control

the ego vehicle in CARLA continuously, ensur-

ing adaptability to unpredictable scenarios. The

models are trained on datasets captured within

CARLA, minimizing preprocessing and enhanc-

ing performance.

Figure 2: Detailed Architecture.
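The third stage can be sketched as a single function that maps one observation to a control command within the ranges given above. This is only an illustration under assumptions: the observation keys, the `Control` dataclass, and the two stand-in model functions are hypothetical, and no CARLA or LTN calls are shown.

```python
from dataclasses import dataclass


@dataclass
class Control:
    throttle: float  # expected range 0 to 1
    brake: float     # expected range 0 to 1
    steer: float     # expected range -1 to 1


def clamp(value, low, high):
    """Limit a predicted value to the range the simulator accepts."""
    return max(low, min(high, value))


def control_step(observation, throttle_brake_model, steer_model):
    """Turn one observation (stage 2) into a clamped control command (stage 3)."""
    throttle, brake = throttle_brake_model(
        observation["obstacle_distance"], observation["speed"]
    )
    steer = steer_model(observation["dx"], observation["dy"], observation["dyaw"])
    return Control(
        clamp(throttle, 0.0, 1.0), clamp(brake, 0.0, 1.0), clamp(steer, -1.0, 1.0)
    )


# Hypothetical stand-ins for the trained models.
def fake_throttle_brake(distance, speed):
    return (0.0, 1.2) if distance < 5.0 else (0.6, 0.0)


def fake_steer(dx, dy, dyaw):
    return 0.05 * dyaw


obs = {"obstacle_distance": 3.0, "speed": 8.0, "dx": 1.0, "dy": 0.5, "dyaw": -40.0}
command = control_step(obs, fake_throttle_brake, fake_steer)
```

Clamping is a defensive choice in this sketch: a regression model is not guaranteed to stay inside the valid control ranges.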

4.2 LTN Models Dataset Generation

Training data for both models was collected in CARLA by driving the ego vehicle automatically (with the autopilot for the throttle and brake model and with a PID controller for the steer model, as described below), and pandas was used to write the data to comma-separated values (CSV) files. Pandas, a Python library for data analysis, converts regular Python arrays into DataFrames, a two-dimensional data structure, which can then be written to CSV files using pandas' library functions.

4.2.1 Throttle and Brake Model

The Python script for the throttle and brake model

in CARLA begins by loading town 10, a medium-

sized urban city map, chosen for its diverse traffic

scenarios. It spawns 100 Non-player character (NPC)

vehicles randomly across the map, with one desig-

nated as the ego vehicle for data collection. Utiliz-

ing CARLA’s autopilot function, the script config-

ures the ego vehicle to ignore traffic lights and abstain

from lane switching, ensuring dataset consistency. An

obstacle detection sensor, configured for a 30-meter

range and a hit radius matching the vehicle’s width,

captures dynamic obstacle information. The script

runs for 15 minutes, collecting data on obstacle dis-

tances, vehicle speed, and autopilot-controlled throt-

tle and brake values. The gathered data is stored in

an array, converted to a CSV file using pandas (see

section 4.2), and saved on disk.

4.2.2 Steer Model

In the steer model script, town 4, a large map with a

small town and a substantial highway, is selected to

emphasize extended lane following without distrac-

tions. Unlike the throttle and brake model, only the

ego vehicle is deployed, and CARLA’s world debug

function visualizes spawn point locations for suitable

routes.

No NPC vehicles are spawned to maintain dataset clarity. Because the trajectory chosen by CARLA's autopilot is random, CARLA's vehicle Proportional-Integral-Derivative (PID) controller class is used instead to control the trajectory. This class is instantiated with parameters suitable for the chosen Tesla Model 3, allowing precise route following.
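For readers unfamiliar with PID control, the following generic sketch shows the computation such a controller performs at each time step. It is not CARLA's controller class, and the gains and time step in the usage lines are arbitrary illustrative values rather than the parameters used for the Tesla Model 3.

```python
class PID:
    """Textbook discrete PID controller acting on a scalar error signal."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def step(self, error):
        """Return the control output for the current error."""
        self.integral += error * self.dt                  # accumulated error
        derivative = (error - self.prev_error) / self.dt  # rate of change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Illustrative use: a purely proportional controller on a heading error.
lateral = PID(kp=1.0, ki=0.0, kd=0.0, dt=0.05)
steer_command = lateral.step(0.3)
```

In a lateral controller the error would typically be the angle between the vehicle's heading and the direction to the next waypoint.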

The script involves traversing two lengthy routes

around the highway, collecting vehicle and waypoint

coordinates, yaw angles, and steering data. Dataset

precision is maintained by adjusting waypoint yaw

and approximating small steer values. The collected

data is stored in an array, converted to a CSV file us-

ing pandas, and saved on disk, following the approach

used for the throttle and brake model.

Figure 3: Spawn Points.

4.3 LTN Models Architecture and Training

Both models are built on LTNtorch, the PyTorch version of LTN, and are Neuro-Symbolic regression models that use an equality predicate for training. This predicate measures the similarity between input tensors using the Euclidean similarity formula.

$$\mathrm{Eq}(x, y) = \frac{1}{1 + 0.5\sqrt{\sum_{i} (x_i - y_i)^2}} \tag{1}$$
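Equation (1) can be evaluated directly. The sketch below uses plain Python lists instead of tensors to keep it dependency-free; the function name `eq` and the parameter name `alpha` (for the factor 0.5) are chosen for illustration.

```python
import math


def eq(x, y, alpha=0.5):
    """Euclidean similarity from Equation (1): 1 when x == y, towards 0 as they diverge."""
    distance = math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)))
    return 1.0 / (1.0 + alpha * distance)


identical = eq([0.5, 0.1], [0.5, 0.1])  # zero distance
far_apart = eq([0.0, 0.0], [3.0, 4.0])  # Euclidean distance 5
```

The predicate returns a truth value in (0, 1], so a prediction that matches its target exactly is "fully true" and larger errors are progressively "less true".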

The loss is computed as 1 minus the output of a built-in satisfaction aggregation operator (SatAgg) applied to a universally quantified formula, expressed with the first-order logic (FOL) ForAll quantifier. Diagonal quantification binds the variables so that the formula is evaluated only on corresponding pairs of values, meaning that each input row is compared with its own target row in the CSV file rather than with every target. The loss equation is as follows.

$$\mathrm{Loss} = 1.0 - \mathrm{SatAgg}\big(\mathrm{Forall}(\mathrm{diag}(x, y),\ \mathrm{Eq}(f(x), y))\big) \tag{2}$$
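A dependency-free sketch of Equation (2) follows. It assumes that both the ForAll quantifier and SatAgg are implemented as a p-mean-error aggregator with p = 2; the paper does not state the aggregator or its parameter, so treat this as an illustration of the structure of the loss rather than a reproduction of the authors' configuration. The predictions stand in for f(x).

```python
import math


def eq(x, y, alpha=0.5):
    """Equality predicate from Equation (1)."""
    distance = math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)))
    return 1.0 / (1.0 + alpha * distance)


def pmean_error(truths, p=2.0):
    """Assumed aggregator: 1 - (mean((1 - t)^p))^(1/p)."""
    mean_error = sum((1.0 - t) ** p for t in truths) / len(truths)
    return 1.0 - mean_error ** (1.0 / p)


def ltn_loss(predictions, targets):
    """Loss = 1 - SatAgg(Forall(diag(x, y), Eq(f(x), y)))."""
    # Diagonal quantification: pair the i-th prediction with the i-th target only.
    pair_truths = [eq(pred, tgt) for pred, tgt in zip(predictions, targets)]
    forall_truth = pmean_error(pair_truths)
    # A single axiom in the knowledge base, so SatAgg aggregates one value.
    return 1.0 - pmean_error([forall_truth])


targets = [[0.6, 0.0], [0.0, 1.0]]
perfect = ltn_loss(targets, targets)
rough = ltn_loss([[0.5, 0.1], [0.2, 0.7]], targets)
worse = ltn_loss([[0.0, 1.0], [1.0, 0.0]], targets)
```

Minimising this loss is equivalent to maximising the satisfaction of the axiom "every prediction equals its target".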

Both models use pandas for loading and shuffling the CSV file data, a dataloader for batching, and the Adam optimizer.

4.3.1 Throttle and Brake Model

For the throttle and brake model (Figure 4), the neural network has an input layer with 2 neurons, 3 hidden layers, and an output layer with 2 neurons. The two inputs correspond to distance and speed, while the two outputs correspond to throttle and brake. The dataset, totaling approximately 30,000 rows, is batched into 256-row segments, with 85% allocated for training and 15% for testing. The learning rate is set to 0.001, and training spans 100 epochs.

Figure 4: Throttle and Brake Model Neural Network.


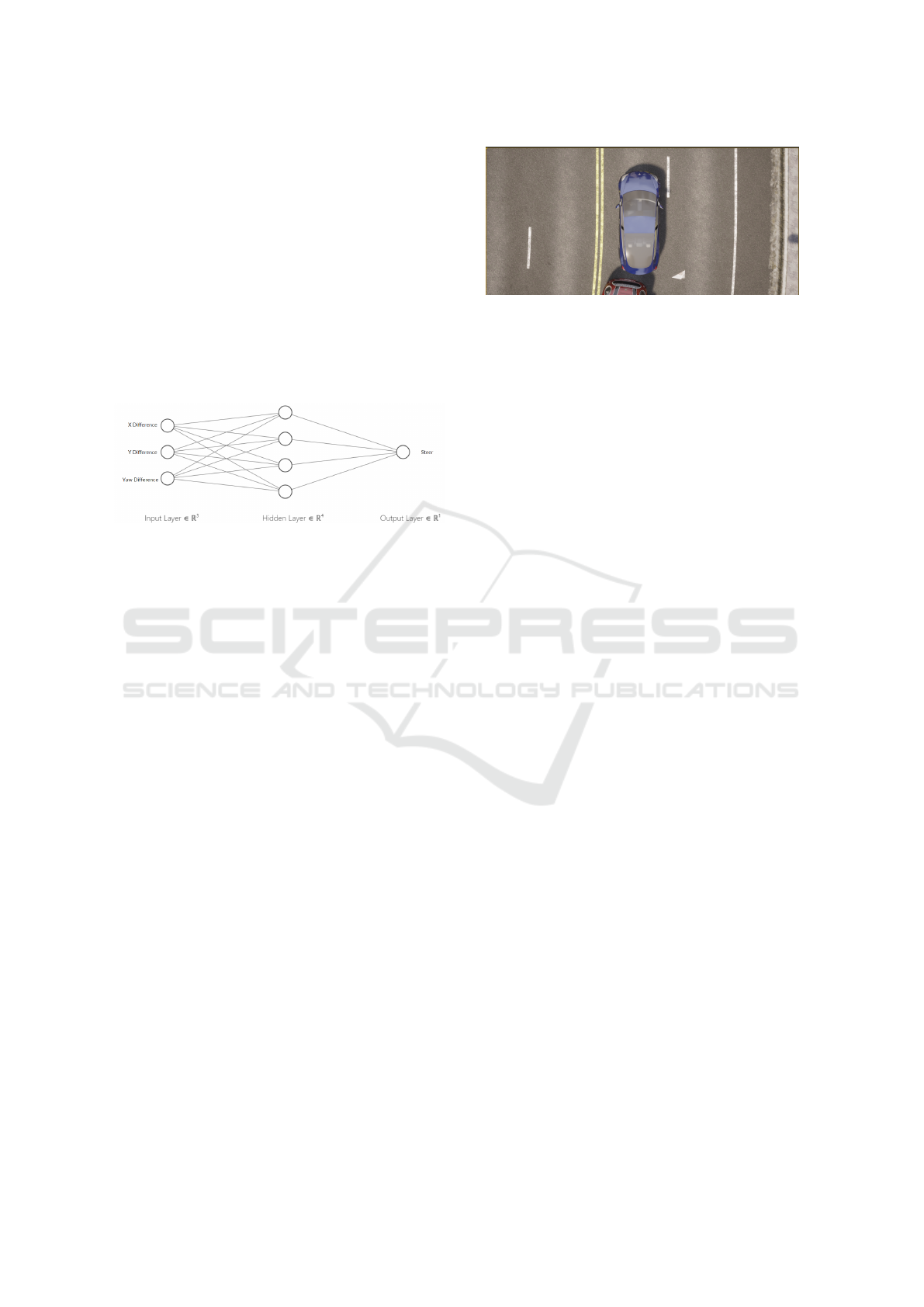

4.3.2 Steer Model

For the steer model (Figure 5), the neural network has an input layer with 3 neurons, 4 hidden layers, and an output layer with 1 neuron. The first two inputs capture the difference in x and y coordinates between the vehicle and the waypoint, while the third input represents the difference in yaw angle between the vehicle and the waypoint. The single output corresponds to steer. The dataset, totaling approximately 500,000 rows, is batched into segments of 19,000 rows, with 90% allocated for training and 10% for testing. The learning rate is set to 0.001, and training spans 20 epochs.

Figure 5: Steer Model Neural Network.
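The split and batch sizes reported for the two models imply the following row and batch counts. The sketch treats the approximate dataset sizes as exact and assumes that the split is applied before batching and that a final partial batch is kept; neither detail is stated in the paper.

```python
import math


def split_and_batches(total_rows, train_fraction, batch_size):
    """Return (training rows, test rows, training batches per epoch)."""
    train_rows = round(total_rows * train_fraction)
    test_rows = total_rows - train_rows
    batches_per_epoch = math.ceil(train_rows / batch_size)
    return train_rows, test_rows, batches_per_epoch


throttle_brake = split_and_batches(30_000, 0.85, 256)
steer = split_and_batches(500_000, 0.90, 19_000)
```

Under these assumptions the steer model performs far fewer optimizer updates per epoch than the throttle and brake model, despite its much larger dataset, because of its large batch size.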

Both LTN model scripts feature a function de-

signed for ease of use. This function takes necessary

inputs, processes them, and outputs the model’s pre-

dictions. The scripts commence by loading the CSV

files for the dataset, instantiating the predicates and

the neural network according to the specified architec-

ture. Subsequently, the training process begins. Once

trained, the models are ready for import and utiliza-

tion in the main script, as detailed in the next section

(4.4).

4.4 Main Script

CARLA operates on a client-server architecture, re-

quiring the creation of a Python script to connect

as a client to the running CARLA simulator server.

For this project, a local host connection was estab-

lished. Subsequently, both LTN models are imported

and instantiated. Town 10, chosen for its diversity and

medium size, is loaded for testing both models.

After loading the map, the user-specified spawn

location is used to spawn the ego vehicle, along with

the obstacle detection sensor. The spectator camera is

instantiated and transformed to hover over the vehicle,

providing the default camera view out of three im-

plemented views. The second camera view is a hood

view, and the third is a top view. As an example, the

top view can be seen in Figure 6.

Figure 6: Top Camera View.

Once the spectator camera is configured, 50 additional NPC vehicles are spawned to create moderate traffic in the map. Following the overview provided in section (4.1), the ego vehicle's spawn point

and destination are determined, supplying the global

route planner with this information to generate the

list of waypoints. CARLA’s world debug function

is employed to draw these waypoints, ensuring accu-

rate tracking by the ego vehicle (Figure 3). Subse-

quently, the ego vehicle begins moving, continuously

invoking the functions in the LTN models to predict

throttle, brake, and steer using the necessary inputs.

The predicted outputs of the LTN models are then uti-

lized to control the ego vehicle through CARLA’s ve-

hicle control function, as explained in section (4.1).

This process continues until the ego vehicle reaches

the specified destination. Upon reaching the destina-

tion, CARLA’s destroy actor command removes all

spawned vehicles, concluding the simulation.

5 RESULTS AND DISCUSSION

This section outlines the evaluation metrics employed

to assess the effectiveness and efficiency of the

Neuro-Symbolic regression models. Additionally, a

comparative analysis of the results with other regres-

sion models is presented.

5.1 Evaluation Metrics

To highlight the advantages of employing our pro-

posed neuro-symbolic approach, a statistical linear re-

gression model is established as a benchmark, along-

side another regression model using XGBoost (Chen

and Guestrin, 2016), which is an efficient implemen-

tation of gradient boosting suitable for regression pre-

dictive modeling. Both models are created to fa-

cilitate a performance comparison with the Neuro-

Symbolic LTN models. To ensure a fair compari-

son, the linear regression model utilizes the same ma-

chine learning framework (PyTorch), neural network

architecture, optimizer, etc., as the Neuro-Symbolic

LTN models. Additionally, both the linear and XG-

Boost models share the same learning rate, number

of epochs, etc., as the Neuro-Symbolic LTN models.

The only distinction lies in the loss function, where


the linear and XGBoost models rely on the mean

squared error criterion, a common choice for regres-

sion models, as opposed to the Neuro-Symbolic LTN

models based on LTN predicates. Evaluation metrics

such as Root Mean Square Error (RMSE), alongside

training and prediction times, are utilized to assess the

models’ performance.

5.1.1 Root Mean Square Error

Also known as the standard error of regression, the

RMSE stands out as one of the most widely utilized

metrics for assessing the performance of machine

learning regression models. Recognized as a proper

scoring rule by Gneiting and Raftery (Gneiting and Raftery,

2007), it provides an intuitive measure of how far pre-

dictions deviate from actual measured values, with

lower RMSE values indicating superior performance.

The RMSE is given by the following equation, where n represents the number of data rows, y(i) is the i-th output, and ŷ(i) is its corresponding prediction.

$$\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{n} \lVert y(i) - \hat{y}(i) \rVert^{2}}{n}} \tag{3}$$
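Equation (3) translates directly into code. The sketch below treats each output as a vector (for example, throttle and brake) and uses plain lists; the sample values are made up.

```python
import math


def rmse(targets, predictions):
    """Root mean square error over n rows of vector-valued outputs."""
    squared_norms = 0.0
    for y, y_hat in zip(targets, predictions):
        squared_norms += sum((a - b) ** 2 for a, b in zip(y, y_hat))
    return math.sqrt(squared_norms / len(targets))


exact = rmse([[0.6, 0.0]], [[0.6, 0.0]])
one_off = rmse([[0.0, 0.0], [0.0, 0.0]], [[3.0, 4.0], [0.0, 0.0]])
```

Here `one_off` has a single row with an error of norm 5 among two rows, so the result is the square root of 25/2.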

5.1.2 Training Time and Prediction Time

Training time refers to the duration required for the

model to complete all epochs and become prepared

for predictions, excluding the initial instantiation of

predicates and loading the CSV file. On the other

hand, prediction time is the duration taken by the

model to process an input and produce its prediction.

The RMSE and training time metrics are com-

puted for both Neuro-Symbolic LTN models and

compared to benchmark models across varying data

row sizes from the dataset. This analysis aims to

assess the models’ accuracy with different data vol-

umes, emphasizing one of Neuro-Symbolic AI’s key

advantages—data efficiency. Prediction time is com-

puted once at the end, with further details discussed

in the following section.
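One simple way to measure such durations is to wrap the call of interest with a monotonic clock. The helper below is a generic sketch using Python's standard library; the paper does not say which timing mechanism was used, and `sum` merely stands in for a training or prediction call.

```python
import time


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Stand-in workload; in practice fn would be the training loop or a prediction call.
result, elapsed = timed(sum, [1, 2, 3])
```

Starting the clock after the dataset is loaded and the predicates are instantiated matches the definition of training time given above.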

5.2 Results

5.2.1 Throttle and Brake Model RMSE

The RMSE of the throttle and brake LTN model and both benchmark models was calculated using 500, 1000, 2000, 5000, and 20000 data rows to train the models. The results are shown in Figures 7 and 8.

Figure 7: Throttle and Brake RMSE Table Comparison.

Figure 8: Throttle and Brake RMSE Graph Comparison.

5.2.2 Steer Model RMSE

The RMSE of the steer LTN model and both benchmark models was calculated using 50000, 125000, 250000, and 500000 data rows to train the models. The results are shown in Figures 9 and 10.

Figure 9: Steer RMSE Table Comparison.

Figure 10: Steer RMSE Graph Comparison.

5.2.3 Throttle and Brake Model Training Time

The training time of the throttle and brake LTN model and both benchmark models was measured using 500, 1000, 2000, 5000, and 20000 data rows to train the models. The results are shown in Figures 11 and 12.

Figure 11: Throttle and Brake Training Time Table Comparison.

Figure 12: Throttle and Brake Training Time Graph Comparison.

5.2.4 Steer Model Training Time

The training time of the steer LTN model and both benchmark models was measured using 50000, 125000, 250000, and 500000 data rows to train the models. The results are shown in Figures 13 and 14.

Figure 13: Steer Training Time Table Comparison.

Figure 14: Steer Training Time Graph Comparison.

5.3 Discussion

The outcomes presented in the preceding section sub-

stantiate one of the primary advantages of Neuro-

Symbolic AI—data efficiency. In contrast to the two

benchmark models, the Neuro-Symbolic LTN mod-

els exhibit notably lower RMSE values, particularly

when employing a smaller dataset. However, this effi-

ciency is accompanied by a trade-off: longer training

times, as elucidated in the ensuing discussion.

5.3.1 Throttle and Brake Model RMSE

As anticipated, Figures 7 and 8 illustrate that the

throttle & brake LTN model exhibits a 55.6% lower

RMSE than the linear model and a 12.1% lower

RMSE than the XGBoost model with a dataset size of

500 rows. Nevertheless, as the dataset size increases,

the disparity in RMSE between the throttle & brake

LTN model and the two benchmark models dimin-

ishes. For instance, with 1000 data rows, the LTN

model demonstrates a 6.9% reduction in RMSE com-

pared to the linear model and a 5.6% reduction com-

pared to the XGBoost model. While this reduction

may seem modest, it can hold significance in many

practical scenarios. The trend of diminishing differ-

ences is not consistent, as seen with a dataset size

of 2000 rows, where the RMSE difference expands

to 17.8% less than the linear model and 26.9% less

than the XGBoost model. However, as the dataset

size continues to grow from 2000 to 20000 rows, the

RMSE difference gradually decreases, becoming neg-

ligible between the LTN model and the linear model,

and reducing from 26.9% to 22.7% between the LTN

model and the XGBoost model.
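The percentages in this discussion read most naturally as relative reductions with respect to the benchmark model. The paper does not give the formula, so the following is an assumed definition, stated only to make the comparison explicit:

```latex
\text{Reduction}(\%) = \frac{\mathrm{RMSE}_{\text{benchmark}} - \mathrm{RMSE}_{\text{LTN}}}{\mathrm{RMSE}_{\text{benchmark}}} \times 100
```

Under this definition, a reduction of 50% would mean the LTN model's RMSE is half that of the benchmark, and a value near 0% would mean the two models perform equally.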

5.3.2 Steer Model RMSE

In line with the results observed in the throttle &

brake model, Figures 9 and 10 underscore a signif-

icant divergence in RMSE between the LTN model

and the two benchmark models. Commencing with

the 50,000 data rows dataset, the steer LTN model ex-

hibits a 65.2% reduction in RMSE compared to the

linear model and a substantial 79.1% reduction com-

pared to the XGBoost model. Similar to the pattern

seen with the linear model in the throttle & brake

model results, the gap gradually narrows when using

125,000 data rows. The LTN model displays a 49.2%

reduction in RMSE compared to the linear model, but

this difference increases again to 68% with 250,000

data rows. Subsequently, the difference gradually de-

creases until it becomes negligible for the expected

output of this model.

Unlike the throttle & brake model, the XGBoost

model performs poorly in the steer model compared

to the other two models, exhibiting a substantial dif-

ference in RMSE. This discrepancy suggests that the

XGBoost regression model’s performance is subopti-

mal when the number of epochs is relatively low, as

in the steer model, where it only undergoes training


for 20 epochs to expedite the process due to the larger

dataset size compared to the throttle & brake dataset.

5.3.3 Training Time

As anticipated, the training time for the LTN models

is longer than that of the benchmark models. Figures

11, 12, 13, and 14 illustrate that the training time for

the LTN models exceeds that of the linear model by an

average of 41.8% in the throttle & brake model and an

average of 19.8% in the steer model, with the differ-

ence generally increasing as the dataset grows larger.

A similar trend is observed for the XGBoost model,

where the LTN model’s training time surpasses that

of the XGBoost model by an average of 81.5% in the

throttle & brake model and an average of 61.5% in

the steer model. The longer training time for the LTN

models is expected due to the additional steps in the

LTN framework, as outlined in Section 2.1, which are

necessary to calculate the loss function described in

Section 4.3.

6 CONCLUSION AND FUTURE WORK

The paper aims to implement a practical application

for Neuro-Symbolic AI in dynamic path planning

for autonomous vehicles. Two Logic Tensor Net-

work (LTN) regression models were developed us-

ing the Neuro-Symbolic paradigm—one for control-

ling throttle and brake parameters, and another for

steering. These models were tested and evaluated in

the CARLA simulator, demonstrating effective vehi-

cle control in complex scenarios.

The Neuro-Symbolic models were then compared

with a linear regression model and an XGBoost re-

gression model using similar datasets and configu-

rations. Evaluation metrics, including Root Mean

Square Error and training time, were employed to

assess model performance. Results indicated a sig-

nificant improvement in the RMSE of the Neuro-

Symbolic models, particularly with smaller datasets.

However, this enhancement came at the expense of

longer training times compared to the linear and XG-

Boost models.

Limitations were encountered during develop-

ment, due to the computational demands of the

CARLA simulator, necessitating a constraint on the

number of simulated vehicles. Future studies should

explore additional Neuro-Symbolic features, such as

explainability, to enhance the analysis of decision-

making processes and provide drivers with valuable

insights. Additionally, it is worth delving deeper into

the symbolic aspects of Neuro-Symbolic AI, incor-

porating diverse predicates to further refine decision-

making by enforcing specific rules.

REFERENCES

Badreddine, S., Garcez, A. d., Serafini, L., and Spranger, M. (2022). Logic tensor networks. Artificial Intelligence, 303:103649.

Chen, T. and Guestrin, C. (2016). XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 785–794.

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., and Koltun, V. (2017). CARLA: An open urban driving simulator. In Proceedings of the 1st Annual Conference on Robot Learning.

Dworak, D., Ciepiela, F., Derbisz, J., Izzat, I., Komorkiewicz, M., and Wójcik, M. (2019). Performance of LiDAR object detection deep learning architectures based on artificially generated point cloud data from CARLA simulator. In 2019 24th International Conference on Methods and Models in Automation and Robotics (MMAR), pages 600–605.

Gneiting, T. and Raftery, A. E. (2007). Strictly proper scoring rules, prediction, and estimation. Journal of the American Statistical Association, 102(477):359–378.

González, D., Pérez, J., Milanés, V., and Nashashibi, F. (2015). A review of motion planning techniques for automated vehicles. IEEE Transactions on Intelligent Transportation Systems, 17(4).

Hebaish, M. A., Hussein, A., and El-Mougy, A. (2022). Supervised-reinforcement learning (SRL) approach for efficient modular path planning. In 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), pages 3537–3542. IEEE.

Lavin, A. (2022). Neuro-symbolic neurodegenerative disease modeling as probabilistic programmed deep kernels. In AI for Disease Surveillance and Pandemic Intelligence: Intelligent Disease Detection in Action, pages 49–64. Springer.

Sallab, A. E., Abdou, M., Perot, E., and Yogamani, S. (2017). Deep reinforcement learning framework for autonomous driving. arXiv preprint arXiv:1704.02532.

Sarker, M. K., Zhou, L., Eberhart, A., and Hitzler, P. (2021). Neuro-symbolic artificial intelligence. AI Communications, 34(3):197–209.

Vlachos, E. and Lalos, A. S. (2022). ADMM-based cooperative control for platooning of connected and autonomous vehicles. In ICC 2022 - IEEE International Conference on Communications, pages 4242–4247.

Zong, X., Xu, G., Yu, G., Su, H., and Hu, C. (2017). Obstacle avoidance for self-driving vehicle with reinforcement learning. SAE International Journal of Passenger Cars-Electronic and Electrical Systems, 11(07-11-01-0003):30–39.
