A Predictor for Triangle Mesh Compression Working in Tangent Space

Petr Vaněček^a, Filip Hácha^b and Libor Váša^c

Department of Computer Science and Engineering, Faculty of Applied Sciences, University of West Bohemia,

Univerzitní 8, 301 00 Plzeň, Czech Republic

Keywords:

Geometry Compression, Parallelogram Prediction, Tangent Space.

Abstract:

Triangle mesh compression has been a popular research topic for decades. Since a plethora of algorithms

has been presented, it is becoming increasingly difficult to come up with significant performance improve-

ments. Some of the recent advances in compression efficiency come at the cost of rather steep implementation

and/or computational expense, which has profound consequences on their practicality. Ultimately it becomes

increasingly difficult to come up with improvements that are reasonably easy to implement and do not harm

the computational efficiency of the compression/decompression procedure. In this paper, we analyze a com-

bination of two previously known techniques, namely using the local coordinates for expressing compression

residuals and weighted parallelogram prediction, which were not previously investigated together. We report

that such an approach outperforms the industry-standard Draco on a large set of test meshes in terms of rate/distortion

ratio, while retaining beneficial properties such as simplicity and computational efficiency.

1 INTRODUCTION

Triangle mesh compression is a common task in mesh

processing pipelines, and even the ever continuing

growth of commonly available computational power

has not diminished its importance. It is essential for

both efficient storage and transfer of highly detailed

meshes, since even the most basic approaches allow

for a quite drastic reduction of code length in com-

parison with plain encoding.

The first generation of compression algorithms

has focused on reducing mechanistic error measures

(MSE, PSNR, Hausdorff distance) while achieving a

low data rate. Later, the field has seen a renewed

interest when perceptual metrics (DAME, MSDM,

FMPD) came into focus and perceptual fidelity be-

came the main goal (Sorkine et al., 2003; Marras

et al., 2015). Now, after decades of research, a wide

variety of algorithms is available, each bringing a

certain improvement in compression efficiency. It is

therefore quite difficult to come up with an algorithm

that outperforms the state-of-the-art significantly.

On the other hand, many of the advanced tech-

niques seem to lack in terms of real-life applicabil-

ity. Compression is mostly used to save time for

^a https://orcid.org/0000-0002-1858-2411
^b https://orcid.org/0000-0001-8956-6411
^c https://orcid.org/0000-0002-0213-3769

users, trading the slow data transmission time for fast

processing (decompression). This purpose is often

neglected in research papers, where only the com-

pression efficiency is investigated, while the compu-

tational cost of advanced algorithms is often down-

played. Similarly, the ease of implementation is often

neglected, while in real-world applications, a small

compression efficiency gain can often be neglected

when facing a high implementation cost, since in

contrast with academic-level proof-of-concept test-

ing, real-world implementations have much higher

requirements on robustness and reliability. This is,

for example, demonstrated by the current de-facto

standard for industrial mesh compression, the Google

Draco library: out of the plethora of advanced com-

pression techniques, a rather modest subset of basic,

most efficient algorithms is chosen and implemented

at the industrial robustness level.

One of the evergreen approaches is the combina-

tion of traversal-based connectivity encoding (Edge-

Breaker (Rossignac, 1999) or valence-based cod-

ing (Alliez and Desbrun, 2001)) with the parallelo-

gram prediction rule used for encoding the vertex po-

sitions. This approach has been used as a baseline

for several advancements, such as weighted parallel-

ogram coding, double/multiple parallelogram coding,

angle-based coding, encoding of residuals in local co-

ordinates and many others. Without exhaustive test-

Vaněček, P., Hácha, F. and Váša, L.

A Predictor for Triangle Mesh Compression Working in Tangent Space.

DOI: 10.5220/0012352900003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 1: GRAPP, HUCAPP

and IVAPP, pages 236-243

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

ing, it is hard to tell which of these advancements

"eat the same piece of the pie", making each other

less efficient when combined, and which can be used

in conjunction, combining their advantages. This is

especially true when considering the performance in

terms of perceptual metrics.

In these circumstances, the contributions of the

presented paper are the following:

• we demonstrate that a combination of weighted

parallelogram prediction with encoding in local

coordinates (tangential + normal) leads to a com-

bined benefit in terms of rate-distortion perfor-

mance,

• we show that non-uniform quantization of local

coordinates leads to a considerable improvement

in rate-distortion performance in terms of percep-

tual error metrics,

• we provide a comparison with a state-of-the-art industrial compression library, showing that the

combined approach, in spite of its simplicity, pro-

vides a performance advantage at negligible addi-

tional cost in both implementation effort and exe-

cution computational expense.

2 RELATED WORK

In terms of single-rate triangle mesh compres-

sion, the most prominent approaches are driven

by connectivity encoding algorithms (e.g., Edge-

breaker (Rossignac, 1999), Valence coding (Touma

and Gotsman, 1998) or TFAN (Mamou et al., 2009)).

Such algorithms process a mesh during a connectiv-

ity traversal, which these approaches use to exploit

the spatial coherence of vertex positions by prediction

inferred from already encoded vertices. Their popu-

larity mainly stems from their simplicity.

While not the first, certainly the most influential

is the Parallelogram scheme proposed by Touma and

Gotsman (Touma and Gotsman, 1998). It forms a pla-

nar parallelogram from vertex positions of an adjacent

triangle to predict the position of the currently coded

vertex. Not only are most of the modern connectivity-

driven methods directly derived from it, but it is also

still being used in modern mesh compression software

(e.g., Google Draco (Galligan et al., 2018)).

The data rate of geometry can be improved by

averaging over multiple predictions where possible.

Dual parallelogram scheme proposed by Sim et

al. (Sim et al., 2003) uses a connectivity traversal

which quite often allows prediction of a single ver-

tex by two parallelograms. The FreeLence method

proposed by Kälberer et al. (Kälberer et al., 2005)

added an additional parallelogram prediction com-

puted from three incident vertices on the boundary of

the already processed area.

Another way of improvement is the adjustment

of the prediction itself. On curved surfaces, the

planar prediction might be inefficient in terms of

the normal direction. To this end, Gumhold and

Amjoun (Gumhold and Amjoun, 2003) proposed to fit

a higher-order surface to already encoded geometry to

estimate a dihedral angle. Ahn et al. (Ahn et al., 2006)

also predicted the dihedral angles but from neighbour-

ing triangles. By introducing weighting in the paral-

lelogram formula, some approaches were able to im-

prove the tangential part of the prediction. This was

discussed by Kälberer et al. (Kälberer et al., 2005)

and elaborated on by Courbet and Hudelot (Courbet

and Hudelot, 2011) who used Taylor expansion to de-

termine weights even for more complicated stencils

than parallelograms. More recently, Váša and Brunnett (Váša and Brunnett, 2013) estimated the weights

from vertex valences and already-known inner angles

to obtain state-of-the-art performance in predicting

tangential information.

The quantization and encoding of correction vec-

tors is also a crucial part of the problem. Most of

the methods (e.g., (Touma and Gotsman, 1998; Váša and Brunnett, 2013)) quantize the encoded values in

a global coordinate system, each coordinate quan-

tized with equal precision. As was pointed out by

Lee et al. (Lee et al., 2002), this, however, leads

to high normal distortion particularly visible on pla-

nar surfaces not aligned with one of the main coor-

dinate axes. Their proposal was to work in a local

frame either represented by two inner and one dihe-

dral angle, or by axes aligned with an adjacent tri-

angle. Both these representations enable the separa-

tion of normal and tangent information, thus exploit-

ing different distributions of values in entropy cod-

ing. Gumhold and Amjoun (Gumhold and Amjoun,

2003) combined these two representations and used

a dihedral angle for normal information, while tangent information was represented by planar coordinates. Kälberer et al. (Kälberer et al., 2005) improved

this approach by quantizing the normal and tangent

information with different precision.

All the listed methods aimed to optimize mech-

anistic distortion measures (e.g., MSE, Hausdorff

distance or PSNR), which give an upper bound in

the error of absolute coordinates. These metrics,

however, do not reflect the way the human brain

perceives distortion. This is better measured by

perception-oriented metrics (e.g., MSDM2 (Lavoué, 2011), DAME (Váša and Rus, 2012), FMPD (Wang et al., 2012), TPDM (Torkhani et al., 2014) and TPDMSP (Feng et al., 2018)). In the past, there were

multiple methods that optimized such criteria (e.g.,

(Karni and Gotsman, 2000; Sorkine et al., 2003; Mar-

ras et al., 2015)). These, however, are quite complex

and are usually outperformed by conventional methods in terms of mechanistic measures. More recently, a few methods have been proposed that aim at both criteria (Alexa and Kyprianidis, 2015; Lobaz and Váša, 2014; Váša and Dvořák, 2018), but they require additional complexity.

3 PRELIMINARIES

The problem that we address in this paper is the following: a triangle mesh is given, consisting of a connectivity $C = \{t_i\}_{i=0}^{T-1}$ and geometry $G = \{v_i\}_{i=0}^{V-1}$. Each $t_i$ is a triplet of integers, representing the indices of the vertices that form the $i$-th triangle, and each $v_i$ is a triplet of floats $(v_i^x, v_i^y, v_i^z)$, representing the Cartesian coordinates of the $i$-th vertex. The objective is to store this information into a binary stream of the shortest possible length so that a reconstruction can be built from the stream with the following properties:

1. the connectivity is reconstructed without loss,

up to an allowed reordering of triangles and re-

indexation of vertices, and

2. the geometry is reconstructed with a certain user-

controllable precision.

Many algorithms only work when additional conditions are met. In particular, a connectivity-manifold is a mesh in which each edge is part of at most two triangles (a mesh border is allowed). In the following, we assume that the input triangle mesh fulfills this property. In order to put our contribution into context, we briefly review the main building blocks of a

typical single-rate triangle mesh encoder. Next, we

are also going to discuss some extensions proposed

previously.
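The connectivity-manifold condition stated above can be checked directly on the triangle list. The following is a minimal Python sketch, not part of the described encoder; the function name `is_connectivity_manifold` and the sample triangles are hypothetical and serve only as an illustration.

```python
from collections import Counter


def is_connectivity_manifold(triangles):
    """Return True if every undirected edge belongs to at most two triangles."""
    edge_use = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so orientation is ignored.
            edge_use[(min(u, v), max(u, v))] += 1
    return all(count <= 2 for count in edge_use.values())


# Two triangles sharing the edge (1, 2): a valid patch with a border.
patch = [(0, 1, 2), (2, 1, 3)]
# A third triangle on the same edge makes that edge non-manifold.
fan = patch + [(1, 2, 4)]
```

Border edges are used by a single triangle and therefore pass the test, which matches the remark that a mesh border is allowed.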

The most common approach to static mesh com-

pression is to process the surface in a traversal driven

by the connectivity. In each traversal step, a single tri-

angle is attached to the part of the mesh that has been

already processed. The edge of the known part of the mesh to which the triangle is attached is called the gate, and the vertex opposite

the gate is either already part of the processed part of

the mesh, in which case it is the task of the connec-

tivity encoder to identify which one it is, or it has not

been processed yet: in that case, the vertex position is

encoded into the data stream in some efficient way.

The authors of the popular connectivity encoder EdgeBreaker have shown that for connectivity-manifold meshes, it suffices to store one of five symbols C, L, E, R or S per triangle, where the C symbol represents encountering a previously unknown vertex, while the remaining symbols encode which known vertex is the tip vertex of the current triangle. After each expansion step, the next gate is selected using certain implicit rules, i.e. without the need for storing any additional data.

Figure 1: Prediction stencil. [Labels in the figure: vertices $v_B$, $v_L$, $v_R$ and $v_O$, the prediction $p_O$, the residual, and the angles $\alpha$, $\beta$, $\gamma$ and $\delta$.]

A typical situation that arises when a previously

unvisited vertex is encountered during mesh traversal

is depicted in Fig. 1. The local configuration is also

known as the prediction stencil. The objective is to

store information that allows reconstructing the coordinates of the opposite vertex $v_O$ with as few bits as

possible. A common prediction-correction strategy is

usually applied: based on the known information, the

decoder builds a prediction of the following encoded

value(s); the encoder mimics the prediction and en-

codes the difference between the actual value(s) and

the prediction, usually quantized to some precision.

If the predictions are accurate, then the magnitudes of

the corrections are generally small in comparison with

original data, leading to a decrease in the entropy of

the data stream and ultimately to efficient compres-

sion.

A triangle adjacent to the gate in the processed part of the mesh (base triangle) consists of vertices $v_L$, $v_R$ and $v_B$. The coordinates of these vertices are known to both the encoder and the decoder (up to quantization error), and therefore the decoder can use them to estimate the position of the opposite vertex $v_O$. A popular choice of prediction scheme is the parallelogram predictor, formulated as

$$p_O = \bar{v}_L + \bar{v}_R - \bar{v}_B, \qquad (1)$$

where $p_O$ is the prediction and $\bar{v}$ stands for the coordinates of a vertex $v$ as known by the decoder, i.e. possibly affected by quantization.
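The prediction-correction strategy with the parallelogram predictor of Eq. (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the sample coordinates and the uniform quantization step `step` are hypothetical, and the entropy coding of the resulting symbols is omitted.

```python
def parallelogram_predict(v_l, v_r, v_b):
    """Eq. (1): p_O = v_L + v_R - v_B, applied per coordinate."""
    return tuple(l + r - b for l, r, b in zip(v_l, v_r, v_b))


def encode_residual(v_o, prediction, step):
    """Quantize the correction (actual minus predicted) to integer symbols."""
    return tuple(round((a - p) / step) for a, p in zip(v_o, prediction))


def decode_vertex(symbols, prediction, step):
    """Decoder side: add the dequantized correction to the same prediction."""
    return tuple(p + s * step for s, p in zip(symbols, prediction))


# Hypothetical stencil: gate (v_l, v_r), base vertex v_b, actual tip vertex v_o.
v_l, v_r, v_b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, -1.0, 0.0)
v_o = (0.6, 0.9, 0.1)
step = 0.01  # assumed uniform quantization step in world coordinates

p_o = parallelogram_predict(v_l, v_r, v_b)
symbols = encode_residual(v_o, p_o, step)
v_o_decoded = decode_vertex(symbols, p_o, step)
```

In a real codec the encoder would compute the prediction from the decoded (quantized) positions of the stencil vertices, so that the encoder and the decoder stay in sync.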

This basic scheme has been modified in different

ways, usually trying to incorporate additional infor-

mation available at the decoder in order to improve

the accuracy of the prediction. In particular, when a

new vertex is encountered, it can be predicted from

GRAPP 2024 - 19th International Conference on Computer Graphics Theory and Applications


two (Sim et al., 2003) or multiple (Cohen-Or et al.,

2002) gates. The predictions are usually averaged and

for practical meshes, this results in an improved per-

formance.

Alternatively, it is possible to transmit the mesh

connectivity first, processing the mesh geometry in

a separate subsequent traversal. This way, the infor-

mation from the connectivity, in particular vertex va-

lences, can be used for the prediction as well. With

the valences known, it is possible to estimate the inner

angles in the currently processed triangle as $2\pi/d_i$, where $d_i$ is the valence of the $i$-th vertex. The authors of the weighted parallelogram scheme have derived that after normalising the inner angles to sum up to $\pi$, so that they form a proper triangle, it is possible to predict the position of the vertex $v_O$ using a generalised parallelogram predictor

$$p_O = w_1 \bar{v}_L + w_2 \bar{v}_R + (1 - w_1 - w_2)\,\bar{v}_B, \qquad (2)$$

where the weights $w_1$ and $w_2$ are computed as

$$w_1 = \frac{\cot(\beta) + \cot(\delta)}{\cot(\delta) + \cot(\gamma)}, \qquad (3)$$

$$w_2 = \frac{\cot(\alpha) + \cot(\gamma)}{\cot(\delta) + \cot(\gamma)}, \qquad (4)$$

where $\alpha$ and $\beta$ are the known inner angles of the base triangle, and $\gamma$ and $\delta$ are the predicted inner angles of the newly attached triangle, as shown in Fig. 1. The three weights in Eq. (2) sum to one, and for $w_1 = w_2 = 1$ the predictor reduces to Eq. (1). By incorporating the inner angles around the vertices $v_L$ and $v_R$ in other triangles known to the decoder, it is possible to build an even more accurate prediction of the angles $\gamma$ and $\delta$, leading to further improvement of the data rate.
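The weighted parallelogram prediction can be sketched in a few lines of Python. The sketch makes two assumptions that are not spelled out in the text: $\alpha$ and $\gamma$ are taken as the angles at $v_L$, and $\beta$ and $\delta$ as the angles at $v_R$ (the assignment under which Eqs. (3) and (4) reproduce the requested angles), and the base vertex receives the weight $1 - w_1 - w_2$, so that the three weights sum to one and $w_1 = w_2 = 1$ gives the plain parallelogram of Eq. (1). All function names, valences and coordinates are hypothetical.

```python
import math


def cot(angle):
    return math.cos(angle) / math.sin(angle)


def inner_angle(apex, p, q):
    """Angle at `apex` in the triangle (apex, p, q)."""
    u = [a - b for a, b in zip(p, apex)]
    v = [a - b for a, b in zip(q, apex)]
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(a * a for a in v))
    return math.acos(dot / (norm_u * norm_v))


def estimate_new_angles(d_l, d_r, d_o):
    """Valence-based estimates 2*pi/d, rescaled so the three angles sum to pi."""
    raw = [2.0 * math.pi / d for d in (d_l, d_r, d_o)]
    scale = math.pi / sum(raw)
    return raw[0] * scale, raw[1] * scale  # gamma (at v_L), delta (at v_R)


def weights(alpha, beta, gamma, delta):
    """Weights of Eqs. (3) and (4)."""
    denom = cot(delta) + cot(gamma)
    return (cot(beta) + cot(delta)) / denom, (cot(alpha) + cot(gamma)) / denom


def weighted_predict(v_l, v_r, v_b, w1, w2):
    """Generalised parallelogram predictor; the three weights sum to one."""
    w3 = 1.0 - w1 - w2
    return tuple(w1 * l + w2 * r + w3 * b for l, r, b in zip(v_l, v_r, v_b))


# Hypothetical stencil with an asymmetric base triangle.
v_l, v_r, v_b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.3, -0.8, 0.0)
alpha = inner_angle(v_l, v_r, v_b)           # known angle at v_L
beta = inner_angle(v_r, v_l, v_b)            # known angle at v_R
gamma, delta = estimate_new_angles(6, 6, 6)  # assumed valences of v_L, v_R, v_O
w1, w2 = weights(alpha, beta, gamma, delta)
p_o = weighted_predict(v_l, v_r, v_b, w1, w2)
```

With all valences equal to six, the estimated new triangle is equilateral, so the prediction lands above the gate midpoint regardless of the shape of the base triangle; the plain parallelogram rule would instead mirror the asymmetry of the base triangle.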

Another modification to the basic algorithm has

been proposed by the authors of the Angle-Analyzer

algorithm (Lee et al., 2002). They proposed two independent ideas: first, rather than representing the

encoded vertex in terms of Cartesian coordinates (or

their corrections), they represent it in terms of inner

angles of the new triangle and a dihedral angle be-

tween the known adjacent triangle and the newly at-

tached triangle. Second, they propose separating the

normal and tangential components of the correction in

separate contexts of the entropy coder. This approach

can be manifested as either

• encoding the inner angles in a single context and

dihedral angles in another, when encoding the ver-

tex position in terms of angles, or

• separating the tangential and normal components

of the correction and encoding each in separate

contexts, when encoding the vertex position in

terms of coordinates.

In the latter case, the authors use a local coordinate frame as follows: $v_L$ is used as the origin, and the following orthonormal vectors are used as the basis:

$$x_1 = \frac{(v_L - v_R) \times (v_B - v_R)}{\|(v_L - v_R) \times (v_B - v_R)\|}, \qquad x_2 = \frac{v_L - v_R}{\|v_L - v_R\|}, \qquad x_3 = x_1 \times x_2. \qquad (5)$$

The coordinates of the opposite vertex $v_O$ are expressed in this coordinate system and encoded. This

way the first coordinate of the encoded vector rep-

resents the normal component, while the remaining

ones represent the tangential component. The main

benefit of this approach is that the statistical distribu-

tion of the normal component is different from that of

the tangential components, which is in turn exploited

by the used entropy coder.
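The construction of the gate-aligned frame of Eq. (5) and the change of coordinates can be sketched as follows. This is a minimal illustration with hypothetical function names and sample coordinates; degenerate base triangles (zero-length cross product) are not handled.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def gate_aligned_frame(v_l, v_r, v_b):
    """Eq. (5): x1 is the base triangle normal, x2 follows the gate."""
    x1 = normalize(cross(sub(v_l, v_r), sub(v_b, v_r)))
    x2 = normalize(sub(v_l, v_r))
    x3 = cross(x1, x2)
    return x1, x2, x3


def to_local(point, origin, frame):
    """Express `point` in the frame; the first coordinate is the normal part."""
    offset = sub(point, origin)
    return tuple(dot(offset, axis) for axis in frame)


def from_local(coords, origin, frame):
    """Inverse of to_local for an orthonormal frame."""
    return tuple(o + sum(c * axis[k] for c, axis in zip(coords, frame))
                 for k, o in enumerate(origin))


# Hypothetical stencil lying in the z = 0 plane; the tip is lifted by 0.1.
v_l, v_r, v_b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, -1.0, 0.0)
v_o = (0.6, 0.9, 0.1)
frame = gate_aligned_frame(v_l, v_r, v_b)
local = to_local(v_o, v_l, frame)  # origin at v_L, as in the scheme above
```

Here only the first local coordinate (0.1) reflects the out-of-plane displacement, while the other two describe where the vertex sits within the plane of the base triangle.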

Another approach to encoding vertices in local co-

ordinates has been taken by the authors of the Higher

Order Prediction scheme (Gumhold and Amjoun,

2003). They use a cylindrical coordinate system with

the origin at the center of the gate and axis-aligned

with the gate. A vertex in this coordinate system is

described by a triplet of scalars $(x, y, \alpha)$, and reconstructed as

$$\bar{v}_O = (\bar{v}_L + \bar{v}_R)/2 + x\,x_2 + \sin(\alpha)\,y\,x_1 + \cos(\alpha)\,y\,x_3, \qquad (6)$$

where $(x_1, x_2, x_3)$ represent the same local basis orientation as in Eq. (5). The local coordinates $x$ and $y$

can be predicted using the parallelogram rule, while

the angle α can be estimated using the higher order

fitting of already known vertices, at a non-negligible

computational cost. Note that in order to compensate

for different sampling rates at varying distances from

the coordinate system axis, rather than quantizing the

angle α directly, the quantity yα is quantized and encoded. In decoding, y is decoded first and used to

decode the actual value of α.
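The reconstruction from cylindrical coordinates and the quantization of the quantity yα can be illustrated with a short sketch. It assumes the origin at the gate midpoint, as described in the text, and a hard-coded frame that corresponds to a base triangle in the z = 0 plane; the function names, the step size and the sample values are hypothetical.

```python
import math


def reconstruct_cylindrical(v_l, v_r, frame, x, y, alpha):
    """Gate midpoint + x along the gate axis + radius y rotated by alpha."""
    x1, x2, x3 = frame
    return tuple(
        (l + r) / 2.0 + x * a2 + math.sin(alpha) * y * a1 + math.cos(alpha) * y * a3
        for l, r, a1, a2, a3 in zip(v_l, v_r, x1, x2, x3)
    )


def quantize_angle(alpha, y, step):
    """Quantize the arc length y * alpha instead of alpha itself."""
    return round(y * alpha / step)


def dequantize_angle(symbol, y_decoded, step):
    """Recover alpha from the arc-length symbol using the already decoded y."""
    return symbol * step / y_decoded


# Assumed frame (normal, gate direction, in-plane direction) and gate vertices.
frame = ((0.0, 0.0, 1.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0))
v_l, v_r = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)

step = 0.01  # assumed quantization step for the arc length
y = 2.0      # distance from the gate axis, assumed already decoded
alpha = math.pi / 2
symbol = quantize_angle(alpha, y, step)
alpha_decoded = dequantize_angle(symbol, y, step)
v_o = reconstruct_cylindrical(v_l, v_r, frame, 0.0, y, alpha_decoded)
```

Because the arc length is quantized, the angular error shrinks as y grows (it is bounded by `step / (2 * y)`), which keeps the positional error roughly independent of the distance from the axis.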

These approaches to encoding vertex positions us-

ing local coordinates suffer from serious flaws. The

approach of Lee et al. (Lee et al., 2002) uses the left

gate vertex as origin. This way the information from

known positions of the right gate vertex and base ver-

tex is essentially ignored, except for gate orientation

and base triangle normal, which influence the spatial

alignment of the basis. The approach by Gumhold

and Amjoun (Gumhold and Amjoun, 2003) on the

other hand uses a non-orthogonal, non-uniform cylin-

drical grid, which leads to a hard-to-predict behaviour

of the quantization procedure. In the following, we

propose a simple way to avoid both these problems.


4 PROPOSED ALGORITHM

We propose using the common connectivity-driven

traversal based approach, with corrections encoded

in a local orthonormal basis. The key insight is that the origin of the basis can be placed at the predicted position $p_O$ of the tip vertex. In terms of resulting

symbol entropy, this approach is equivalent to esti-

mating the local coordinates using some prediction

scheme. In contrast with the approach of Gumhold

and Amjoun (Gumhold and Amjoun, 2003), we use

the weighted parallelogram scheme for prediction.

Another design choice is the selection of the basis orientation. We have experimented with two possibilities. One is equivalent to the basis described by Eq. (5), i.e. aligned with the gate edge. However, a different basis with similar properties can be constructed as follows:

$$x'_1 = \frac{(v_L - v_R) \times (v_B - v_R)}{\|(v_L - v_R) \times (v_B - v_R)\|}, \qquad x'_2 = \frac{p_O - v_B}{\|p_O - v_B\|}, \qquad x'_3 = x'_1 \times x'_2. \qquad (7)$$

Rather than aligning the basis with the gate orientation, this basis is aligned with the direction towards the prediction from the known vertex $v_B$. Note

that, for a symmetric prediction stencil, the bases are

equivalent, and in both cases the bases separate the

normal information into the first component of the lo-

cal coordinate triplet.

Separating the normal has two profound conse-

quences: first, the normal component usually has a

significantly different statistical distribution than the

tangential components. While the tangential compo-

nents represent the sampling of the surface, which

may or may not be regular, the normal component es-

sentially carries the information about the shape.

This then implies the second consequence: having

the sampling and shape information separated, it is

possible to use a different precision in encoding each.

While for textured meshes, even the tangential posi-

tion of vertices may be important, from the point of

view of shape the sampling is mostly irrelevant, and

therefore it may be beneficial to sample the normal

component more precisely.
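The encoding of a correction in the prediction-aligned frame with a finer quantization of the normal component can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the plain parallelogram rule stands in for the weighted predictor to keep the example short, the halved normal step and all names and coordinates are hypothetical, and entropy coding is omitted.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def prediction_aligned_frame(v_l, v_r, v_b, p_o):
    """Normal of the base triangle, direction from v_B to p_O, and their cross product."""
    x1 = normalize(cross(sub(v_l, v_r), sub(v_b, v_r)))
    x2 = normalize(sub(p_o, v_b))
    return x1, x2, cross(x1, x2)


def encode_correction(v_o, p_o, frame, steps):
    """Project the correction onto the frame and quantize each axis with its own step."""
    offset = sub(v_o, p_o)
    return tuple(round(dot(offset, axis) / step) for axis, step in zip(frame, steps))


def decode_vertex(symbols, p_o, frame, steps):
    """Rebuild the vertex from the prediction and the dequantized local correction."""
    return tuple(
        p_o[k] + sum(q * step * axis[k] for q, step, axis in zip(symbols, steps, frame))
        for k in range(3)
    )


v_l, v_r, v_b = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, -1.0, 0.0)
v_o = (0.6, 0.9, 0.1)
# Stand-in predictor: plain parallelogram rule instead of the weighted one.
p_o = tuple(l + r - b for l, r, b in zip(v_l, v_r, v_b))

tangent_step = 0.01
steps = (tangent_step / 2, tangent_step, tangent_step)  # finer normal component

frame = prediction_aligned_frame(v_l, v_r, v_b, p_o)
symbols = encode_correction(v_o, p_o, frame, steps)
decoded = decode_vertex(symbols, p_o, frame, steps)
```

In an actual codec the frame and the prediction would have to be computed from decoded vertex positions only, so that the decoder can reproduce them exactly.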

5 EXPERIMENTS

As mentioned above, the distortion of normals plays

a significant role in perceiving the quality of a com-

pressed triangle mesh. Figure 2 shows the compar-

ison of mesh distortion while maintaining the same

compression ratio using the prediction corrections in

world coordinates and in coordinates aligned with the

tangential plane.

We have tested the compression distortion of a

planar surface in various orientations. The traditional

world-space prediction is very sensitive to the

orientation of the surface. This is significantly re-

duced when using the tangent space. While target-

ing a human perception metric (high sensitivity to

changes in dihedral angles close to flat), it is even pos-

sible to obtain better results by increasing the number

of quantization levels for the normal direction, while

reducing the number of quantization levels in tangent

directions to maintain the same compression ratio.

To compare the proposed method, we have se-

lected three existing algorithms. Draco (Galligan

et al., 2018) is a well-known and commonly used

library for mesh compression. In our tests, we

use the default settings. Error propagation control in Laplacian mesh compression (EPC) (Váša and Dvořák, 2018) is a compression method that focuses on achieving good results in both perceptual and mechanistic quality of the compressed meshes. Finally, we compare the results against the weighted parallelogram prediction (WP) (Váša and Brunnett, 2013) in world space.

To measure the quality of compressed models, we

have chosen three different metrics:

1. Mean squared error (MSE) is a widely used metric. Unfortunately, it only measures the distances between the original and distorted vertices. As in other areas, it is not very suitable for measuring the quality of compression with respect to shape preservation or visual quality, yet it is used very often.

2. The Metro metric (Cignoni et al., 1998) is another commonly used mechanistic error measure. It builds on analyzing the nearest point pairs on the two compared meshes, and it does not require the compared meshes to have the same connectivity.

3. Dihedral angle mesh error (DAME) (Váša and Rus, 2012) is one of the metrics designed to take human perception into account. We have used it because, when comparing artifacts introduced by compression, it is important to measure how these artifacts are perceived by humans.

For our tests we have used models from the Thingi10K archive (Zhou and Jacobson, 2016). In order to be able to compare all methods at the same data rate in bits per vertex (BPV), we use the subset of models fulfilling these criteria: the model (a) has more than 20,000 vertices, (b) has a single component, (c) is 2-manifold and (d) is watertight. Out of the available models, over 500 meet these criteria.


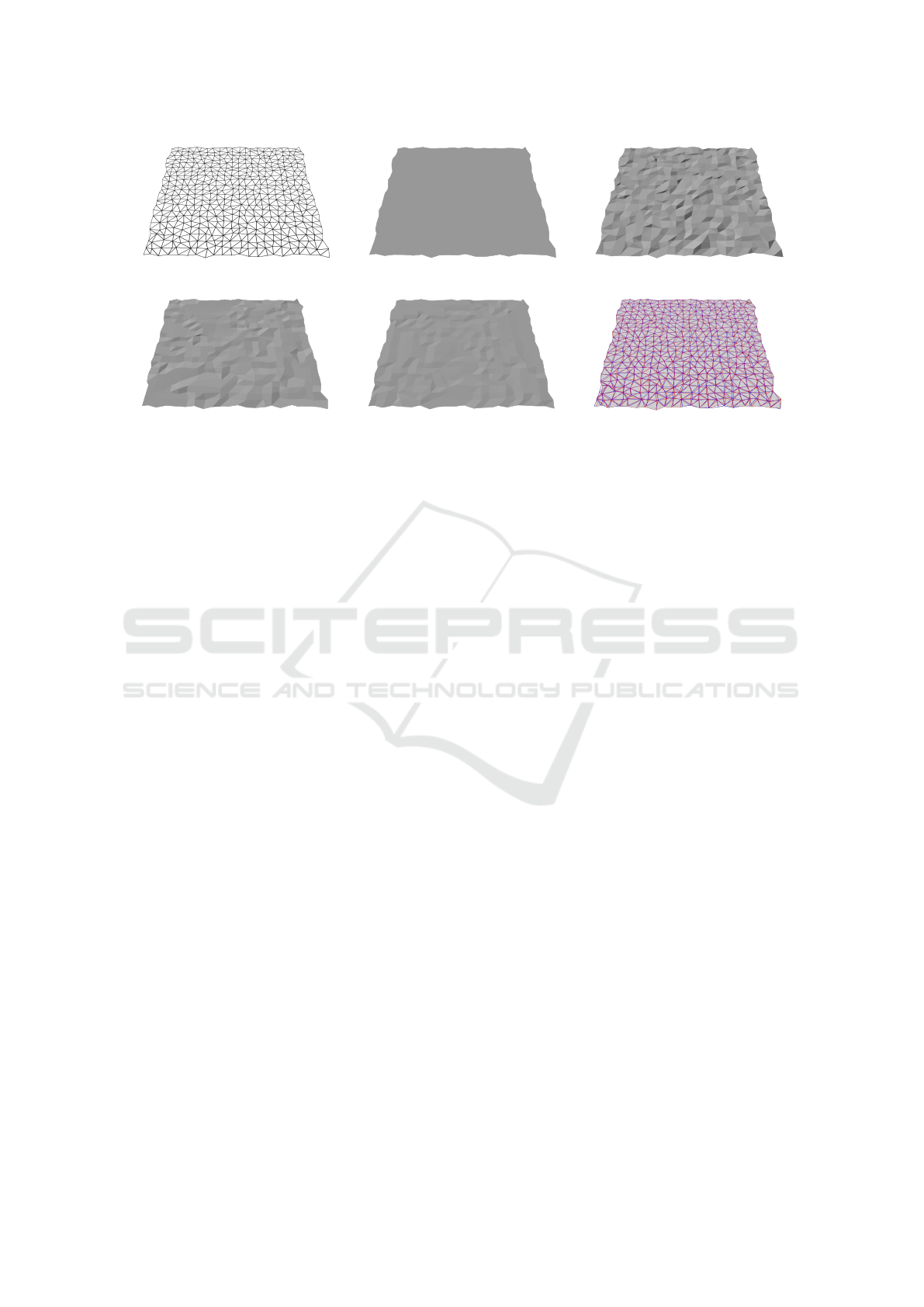

(a) Original mesh (wireframe). (b) Original mesh (shaded). (c) Compression in world space. (d) Compression in tangent space. (e) Finer quantization in normal direction. (f) Precise encoding of the first triangle.

Figure 2: The comparison of quantization in world space and tangent space. The original model is a plane rotated 17.5° around the x axis: (a) the wireframe and (b) the shaded model. Compressed versions have a data rate of approx. 13 BPV. (c) The compression in world space coordinates is very sensitive to rotation. (d) In tangent space, the errors are mainly introduced by the precision of the first triangle, but generally it is less sensitive to rotation than compression in world space. (e) It is even possible to increase the precision in the normal direction and reduce the precision in the tangent directions while maintaining the same data rate. (f) In this particular scenario, when all triangles have the same normal, the normal component should be zero. Due to the distortion of the first triangle, this is not generally true. Storing the first triangle (dark triangle in top right corner) without distortion allows a significant reduction of the data rate (to 8.6 BPV), while maintaining constant normal vectors. The triangles of the original mesh are blue, and those of the compressed mesh are red.

The proposed method based on weighted parallel-

ogram is presented in two variants - the standard tan-

gent space compression and compression with double

precision of the normal component.

We have tested two formulations of local basis.

The choices are the basis aligned with the prediction

gate, as defined in Eq. 5, and the basis aligned with

the direction towards the predicted tip vertex, as defined in Eq. 7. In

the experiments, the basis of Eq. 7 provides slightly

better results on average, but slightly worse in the me-

dian. For the rest of our experiments, we have used

this basis, but choosing the better of these two op-

tions for a particular model can in some cases lead to

a considerable reduction of the resulting distortion of

up to 45% in terms of MSE and up to 22% in terms of

Metro and DAME. Note, however, that for a majority

of models, the difference tends to be rather small.

Note that Draco does not allow fine control over

the data rate (measured in bits per vertex - BPV), but

only allows choosing an integer-valued quality con-

trol parameter. For this reason, we use Draco as the

baseline method and we compare the other methods

relative to Draco, since the other competing methods

allow fine tuning the resulting data rate and matching

the Draco result in terms of achieved data rate. There-

fore, in the figures, we present a quantity that relates

a particular error measure achieved by a particular al-

gorithm to the same error measure achieved by Draco

at the same data rate. A value of 1 indicates an equivalent result, while values smaller than 1 indicate a

performance gain w.r.t. Draco (lower distortion at the

same data rate), and values larger than 1 indicate a

performance loss.
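The relative quantity described above can be written compactly as follows. The notation is introduced here for illustration only and is an assumption: $E_A(M, b)$ denotes the value of an error measure $E$ (MSE, Metro or DAME) obtained by algorithm $A$ on mesh $M$ at data rate $b$, and $b_D(M)$ denotes the data rate produced by Draco on $M$.

$$r_A(M) = \frac{E_A\bigl(M,\, b_D(M)\bigr)}{E_{\mathrm{Draco}}\bigl(M,\, b_D(M)\bigr)}$$

With this convention, $r_A(M) < 1$ corresponds to a lower distortion than Draco at the same data rate, and $r_A(M) > 1$ to a higher one.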

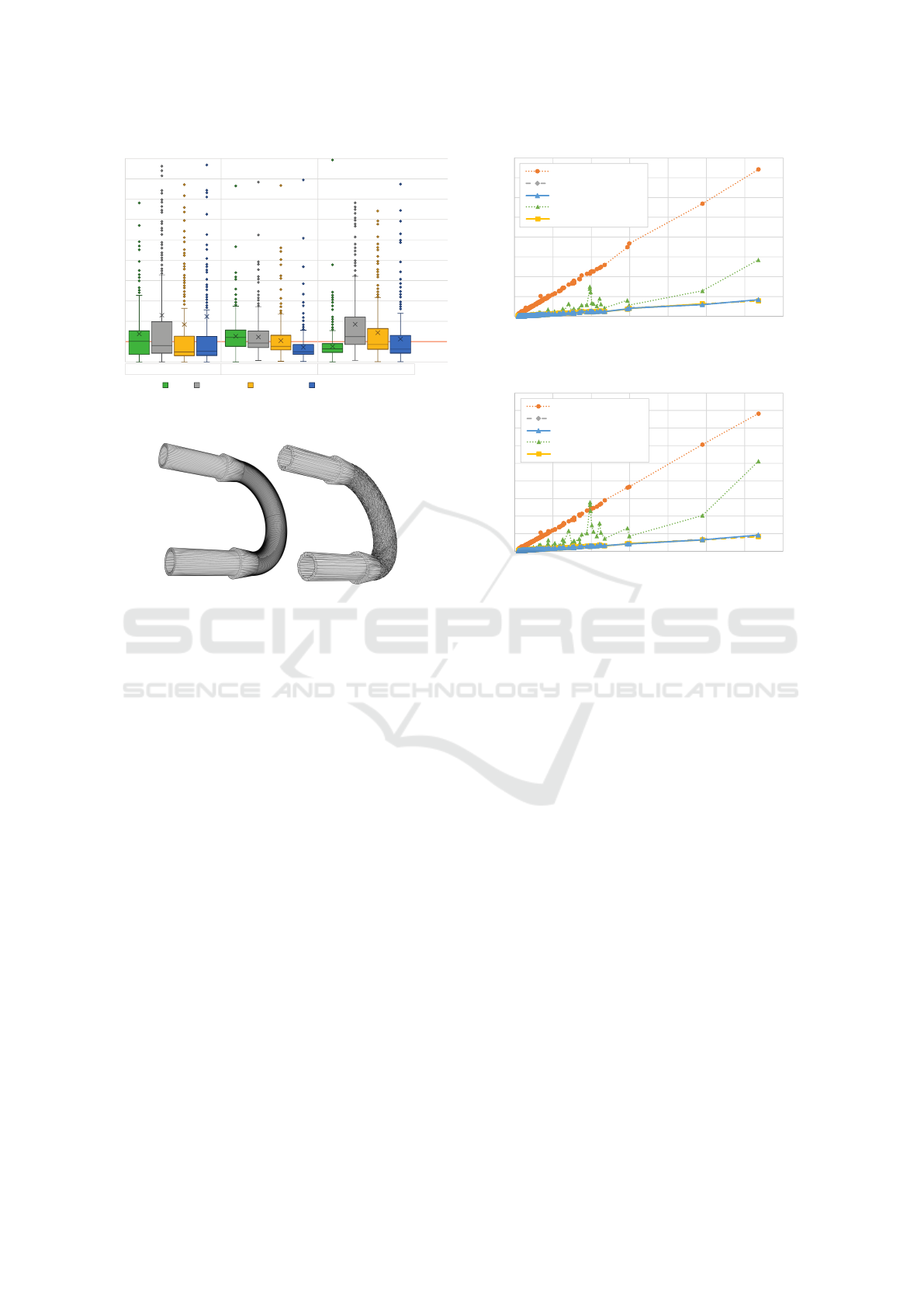

In terms of MSE, our method outperforms Draco

and EPC in most cases (Figure 3). On the other hand,

in the given dataset, there appears a number of models

where it performs rather poorly. One such example is

depicted in Figure 4. Its MSE is 151× higher than

for Draco, maintaining the same BPV (these outliers

are not in the plot). Due to these outliers, there is a

large difference between median and average cases. Common features of these outliers are usually a rather low data rate for Draco standard settings (5 - 6 BPV) and a high variability of triangle sizes. Using the Metro metric, the proposed algorithm is slightly better than Draco when using uniform quantization in all directions. It outperforms Draco when halving the size of quantization bins (doubling the number of levels) for the normal direction. For most of the models, the proposed method performs better in the DAME metric than

Draco. Similarly to MSE, the outliers are pushing the

average much higher than the median case.

The increasing computational power allows the processing of huge triangle meshes. The compression process itself, although not time-critical, should not exceed a user-acceptable limit, both in the compression and especially in the decompression phase. We have measured the compression and decompression time for all methods. Unfortunately, it is not possible to measure purely the processing time, as the process is usually combined with I/O operations. For this reason, we have measured the overall time of the process, including the reading and parsing of the input file and writing the result to the disk, targeting the same BPV. For the measurement, we have used commodity hardware: a notebook with an AMD Ryzen 7 5800H, 16 GB of RAM, and an SSD. Our method is implemented in .NET 6 as a single-threaded application, without any special optimization.

Figure 3: Comparison of compression methods relative to Draco using MSE, Metro and DAME. [Plot; series: Draco, EPC, World Space, Tangent Space, Tangent Space Subsampled; groups: MSE, Metro, DAME; vertical axis from 0 to 10.]

Figure 4: Original model (left) and model compressed by the proposed method (right) at 5.77 BPV. The MSE is 151 times higher than for the Draco compression at comparable BPV. The model consists of triangles with extremely varying sizes and shapes, from large narrow triangles to relatively small, nearly equilateral triangles.

The compression processes for Draco and our implementation exhibit a linear behavior in terms of the number of vertices, while EPC is less predictable (Figure 5), probably since the process involves solving a system of linear equations. Due to the simplicity of the compression algorithm, the weighted parallelogram prediction and our proposed modifications are much faster than Draco, even in the non-optimized developer version. There is also only a negligible impact of the proposed modification on the overall performance caused by the transformation of the coordinates into the tangent space. It is also worth noting that Draco compression exceeds 10 seconds for models with approximately 200,000 vertices.

Figure 5: Comparison of compression time. [Plot; horizontal axis: number of vertices in thousands (0 to 1400); vertical axis: time in seconds (0 to 80); series: Draco, World Space, Tangent Space Subsampled, EPC, Tangent Space.]

Figure 6: Comparison of decompression time. [Plot; horizontal axis: number of vertices in thousands (0 to 1400); vertical axis: time in seconds (0 to 45); series: Draco, World Space, Tangent Space Subsampled, EPC, Tangent Space.]

While the compression process usually affects only a limited group of users (the creators), decompression affects the user experience of the end users (Figure 6). The decompression process is faster than the compression process for all methods. While Draco and the parallelogram-based methods nearly halve the time, in the case of EPC, the difference is much smaller. Similarly to the compression process, our implementation is more than 8 times faster than Draco.

6 CONCLUSION

We proposed a modification of the weighted parallelogram compression method based on the transformation of vertex coordinates into tangent space. The transformation considerably improves the quality of compression in terms of objective and subjective metrics, while keeping the algorithm simple. According to our tests, this modification outperforms some well-known compression methods, such as Draco and EPC, in terms of rate-distortion ratio for most meshes, while being considerably faster.

GRAPP 2024 - 19th International Conference on Computer Graphics Theory and Applications


There are, however, some triangle configurations

that are not suitable for the proposed algorithm. In the

future, we plan to investigate the particular properties

that cause the drop in compression performance of our algorithm in these cases, and possible strategies to mitigate this performance loss.

ACKNOWLEDGEMENTS

This work was supported by the project 20-02154S

of the Czech Science Foundation. Filip Hácha was

partially supported by the University specific research

project SGS-2022-015, New Methods for Medical,

Spatial and Communication Data.

REFERENCES

Ahn, J.-H., Kim, C.-S., and Ho, Y.-S. (2006). Predictive

compression of geometry, color and normal data of 3-

d mesh models. IEEE Transactions on Circuits and

Systems for Video Technology, 16(2):291–299.

Alexa, M. and Kyprianidis, J. E. (2015). Error diffusion on

meshes. Computers & Graphics, 46:336–344. Shape

Modeling International 2014.

Alliez, P. and Desbrun, M. (2001). Valence-Driven Connec-

tivity Encoding for 3D Meshes. Computer Graphics

Forum.

Cignoni, P., Rocchini, C., and Scopigno, R. (1998). Metro:

Measuring error on simplified surfaces. Computer

Graphics Forum, 17:167 – 174.

Cohen-Or, D., Cohen, R., and Irony, R. (2002). Multi-way

geometry encoding. Tech. Rep., Tel Aviv University.

Courbet, C. and Hudelot, C. (2011). Taylor prediction for

mesh geometry compression. Computer Graphics Fo-

rum, 30(1):139–151.

Feng, X., Wan, W., Xu, R. Y. D., Chen, H., Li, P., and

Sánchez, J. A. (2018). A perceptual quality metric for

3d triangle meshes based on spatial pooling. Frontiers

of Computer Science, 12(4):798–812.

Galligan, F., Hemmer, M., Stava, O., Zhang, F., and Brettle,

J. (2018). Google/draco: a library for compressing

and decompressing 3d geometric meshes and point

clouds.

Gumhold, S. and Amjoun, R. (2003). Higher order pre-

diction for geometry compression. In Proceedings

of Shape Modeling International 2003, SMI ’03,

page 59, USA. IEEE Computer Society.

Karni, Z. and Gotsman, C. (2000). Spectral compression

of mesh geometry. In Proceedings of the 27th An-

nual Conference on Computer Graphics and Inter-

active Techniques, SIGGRAPH ’00, page 279–286,

USA. ACM Press/Addison-Wesley Publishing Co.

Kälberer, F., Polthier, K., Reitebuch, U., and Wardetzky, M.

(2005). Freelence - coding with free valences. Com-

puter Graphics Forum, 24(3):469–478.

Lavoué, G. (2011). A multiscale metric for 3d mesh visual quality assessment. Computer Graphics Forum,

30(5):1427–1437.

Lee, H., Alliez, P., and Desbrun, M. (2002). Angle-

analyzer: A triangle-quad mesh codec. Computer

Graphics Forum, 21(3):383–392.

Lobaz, P. and Váša, L. (2014). Hierarchical laplacian-based

compression of triangle meshes. Graphical Models,

76(6):682–690.

Mamou, K., Zaharia, T., and Prêteux, F. (2009). Tfan:

A low complexity 3d mesh compression algorithm.

Comput. Animat. Virtual Worlds, 20(2-3):343–354.

Marras, S., Váša, L., Brunnett, G., and Hormann, K. (2015).

Perception-driven adaptive compression of static tri-

angle meshes. Computer-Aided Design, 58:24–33.

Solid and Physical Modeling 2014.

Rossignac, J. (1999). Edgebreaker: Connectivity compres-

sion for triangle meshes. IEEE Transactions on Visu-

alization and Computer Graphics, 5(1):47–61.

Sim, J.-Y., Kim, C.-S., and Lee, S.-U. (2003). An efficient

3d mesh compression technique based on triangle fan

structure. Signal Processing: Image Communication,

18(1):17–32.

Sorkine, O., Cohen-Or, D., and Toledo, S. (2003). High-

pass quantization for mesh encoding. In Proceedings

of the 2003 Eurographics/ACM SIGGRAPH Sympo-

sium on Geometry Processing, SGP ’03, page 42–51,

Goslar, DEU. Eurographics Association.

Torkhani, F., Wang, K., and Chassery, J.-M. (2014). A

curvature-tensor-based perceptual quality metric for

3d triangular meshes. Machine GRAPHICS & VI-

SION, 23(1/2):59–82.

Touma, C. and Gotsman, C. (1998). Triangle mesh com-

pression. In Proceedings of the Graphics Interface

1998 Conference, June 18-20, 1998, Vancouver, BC,

Canada, pages 26–34.

Váša, L. and Rus, J. (2012). Dihedral angle mesh error: a

fast perception correlated distortion measure for fixed

connectivity triangle meshes. Computer Graphics Fo-

rum, 31(5):1715–1724.

Váša, L. and Brunnett, G. (2013). Exploiting connectivity to improve the tangential part of geometry prediction. IEEE Transactions on Visualization and Computer Graphics, 19(9):1467–1475.

Váša, L. and Dvořák, J. (2018). Error propagation control

in laplacian mesh compression. Computer Graphics

Forum, 37(5):61–70.

Wang, K., Torkhani, F., and Montanvert, A. (2012). A

fast roughness-based approach to the assessment of

3d mesh visual quality. Computers & Graphics,

36(7):808–818. Augmented Reality Computer Graph-

ics in China.

Zhou, Q. and Jacobson, A. (2016). Thingi10k: A

dataset of 10,000 3d-printing models. arXiv preprint

arXiv:1605.04797.
