Hand Movement Recognition Based on Fusion of Myography Signals

Shili Wala Eddine¹ᵃ, Youssef Serrestou², Slim Yacoub³, Ali H. Al-Timemy⁴ᵇ and Kosai Raoof⁴ᶜ

¹ ENSIM-LAUM ENISO, Le Mans University, University of Sousse (ENISO), France

² Le Mans University, Le Mans, France

³ INSAT-Carthage University, Tunis, Tunisia

⁴ Biomedical Eng. Department, Al-Khwarizmi College of Engineering, University of Baghdad, Iraq

Wala Eddine.Shili.Etu@univ-lemans.fr, youssef.serrestou@univ-lemans.fr, slim.yacoub@insat.ucar.tn, ali.altimemy@kecbu.uobaghdad.edu.iq, kosai.raoof@univ-lemans.fr

Keywords: Acousto Myography (AMG), Electromyography (EMG), Mechanomyography (MMG), Support Vector Machine (SVM).

Abstract: This article presents a hand movement classification system that combines acoustic myography (AMG), electromyography (EMG), and mechanomyography (MMG) signals. The system aims to accurately predict hand movements, with the potential to improve the control of hand prostheses. A dataset was collected from 9 individuals, each of whom repeated 4 hand movements (hand close, hand open, fine pinch, and index flexion) 10 times. Using a Support Vector Machine (SVM) classifier, the system achieved an accuracy of 97%, demonstrating its potential for real-time hand prosthesis control. The combination of AMG, EMG, and MMG signals proved effective for classifying hand movements.

1 INTRODUCTION

Enhancing quality of life for people with impaired hand mobility is a major public health challenge. Advanced real-time controlled hand prosthetics offer a promising solution (Smith, 2020). However, accurately predicting hand movements under real-life conditions remains an unmet need (Johnson and Chen, 2017).

Recent advances in machine learning have created new possibilities to address this challenge (Hastie et al., 2009). Prior studies have proposed prediction systems based on electromyography (EMG) (Li and Zhang, 2013), acoustic myography (AMG) (Gupta and Patel, 2022), or mechanomyography (MMG) signals (Castillo et al., 2021). However, single modalities have limitations: EMG is susceptible to electromagnetic noise, while AMG suffers from motion artifacts (Scheme and Englehart, 2011). Hybrid systems combining EMG and MMG have shown promise (Harrison et al., 2013) but have not fully mitigated these issues.

ᵃ https://orcid.org/0009-0004-3815-8361

ᵇ https://orcid.org/0000-0003-2738-8896

ᶜ https://orcid.org/0000-0002-9775-7485

To overcome these hurdles, we propose a novel multi-modal approach that fuses AMG, EMG, and MMG signals. This provides complementary information for robust movement prediction: AMG captures muscle vibrations revealing motor unit recruitment (Mamaghani et al., 2001); EMG measures electrical potentials with high temporal resolution (Shcherbynina et al., 2023); MMG detects limb accelerations indicating direction and speed (Al-Timemy et al., 2022). Furthermore, the multi-channel input gives machine learning algorithms more informative features to accurately discriminate movements (Farina et al., 2014a). Modality-specific artifacts also average out when the signals are combined, improving the overall signal-to-noise ratio (Lim et al., 2008). Finally, ensemble methods leveraging classifiers trained on each signal lead to higher accuracy than single modalities alone (Farina et al., 2014b).

In summary, the diversity of signal sources, the complementary nature of the information they provide, and the possibility of using ensemble methods all contribute to the potential for improved accuracy when combining AMG with EMG and MMG for hand movement classification. Extracting discriminative features from different signal channels and effectively combining them through machine learning techniques are essential for achieving high accuracy in this field.

In this paper, we detail the development of a machine learning model for classifying hand movements that exploits the synergistic fusion of AMG, EMG, and MMG signals. The proposed model could potentially be applied to real-time hand prosthesis control to improve accuracy, pending future implementation and testing. The results demonstrate promising performance towards more intuitive prosthetic control in the future.

Eddine, S., Serrestou, Y., Yacoub, S., Al-Timemy, A. and Raoof, K.

Hand Movement Recognition Based on Fusion of Myography Signals.

DOI: 10.5220/0012350000003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 1, pages 733-738

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2 DATA ACQUISITION

The dataset was collected from individuals performing four distinct hand movements: hand close, hand open, fine pinch, and index finger flexion. Integrating the AMG, EMG, and mechanomyography (MMG) signals resulted in a multi-dimensional dataset suitable for further processing.

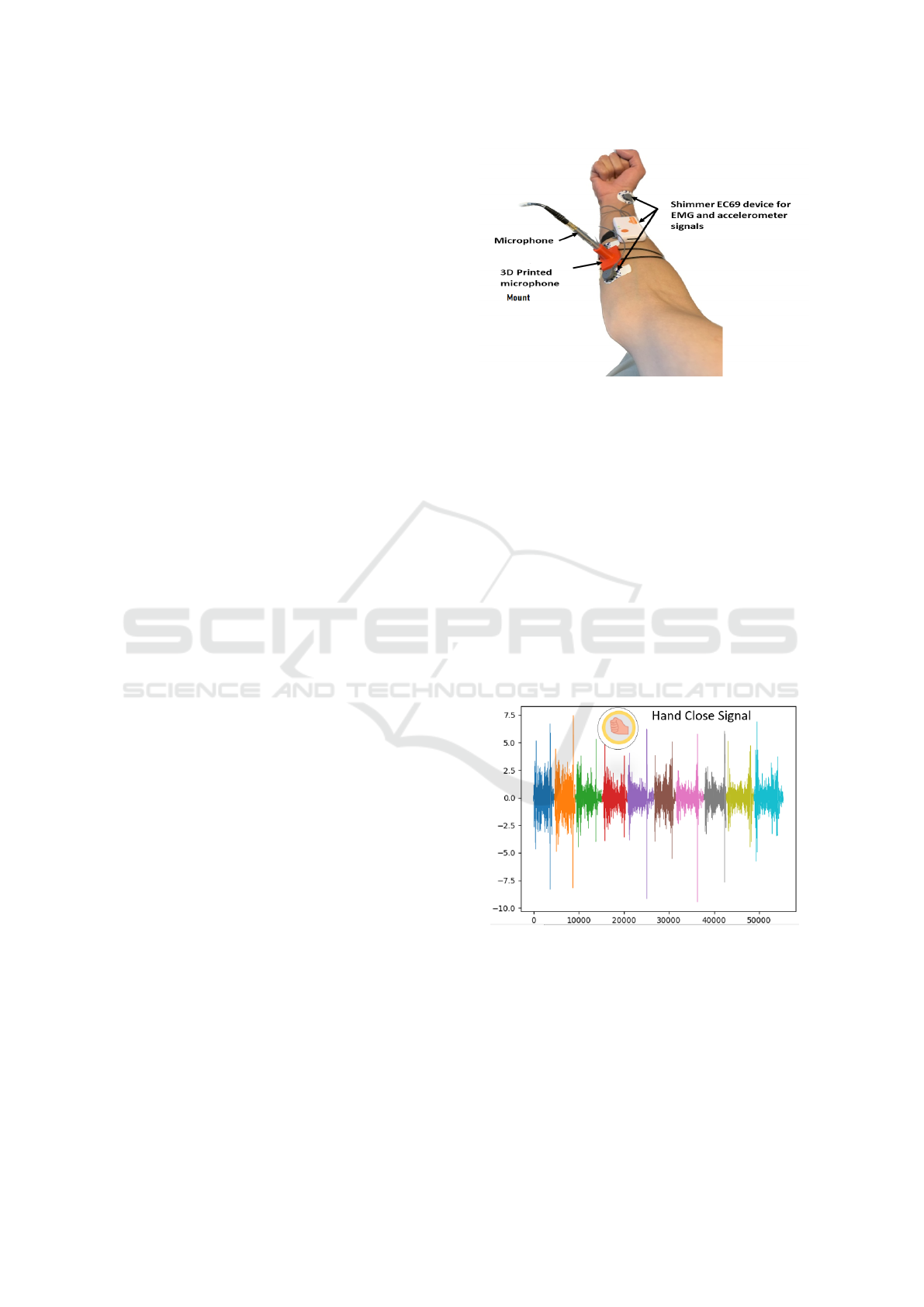

2.1 Materials

The acoustic myography (AMG) signal was recorded using an MPA416 microphone, with a frequency range of 20 Hz to 20 kHz and a sensitivity of 50 mV/Pa. Previous literature (Orizio et al., 1989; Beck et al., 2005) has shown that the frequency range of the AMG signal is 5-100 Hz. To securely position the microphone and reduce signal noise (see Fig. 1), a custom 3D-printed apparatus was developed to fix the microphone on the skin surface over the target forearm muscles (Harrison et al., 2013). This minimized motion artifacts and ensured consistent AMG capture during hand movements. AMG signals were sampled at 1024 Hz.

The 3D-printed microphone enclosure (Yacoub et al., 2023) was specifically designed to house the microphone. The base of the enclosure has a tapered shape, and the opposite end of the microphone fits snugly into the mount, thereby eliminating distortions caused by microphone movement. Inadequate fixation of the microphone can alter the recorded muscle signals.

The Shimmer EC69 device was used to record both the electromyography (EMG) and MMG signals. Sensors were strategically positioned on the upper arm to capture muscle activation potentials and limb acceleration. EMG signals were also sampled at 1024 Hz.

Fig. 1 shows the locations of the Shimmer device, which recorded both the EMG and MMG signals, and of the microphone, which recorded the AMG signal.

Figure 1: Channel locations on the upper forearm of the right hand of the subject.

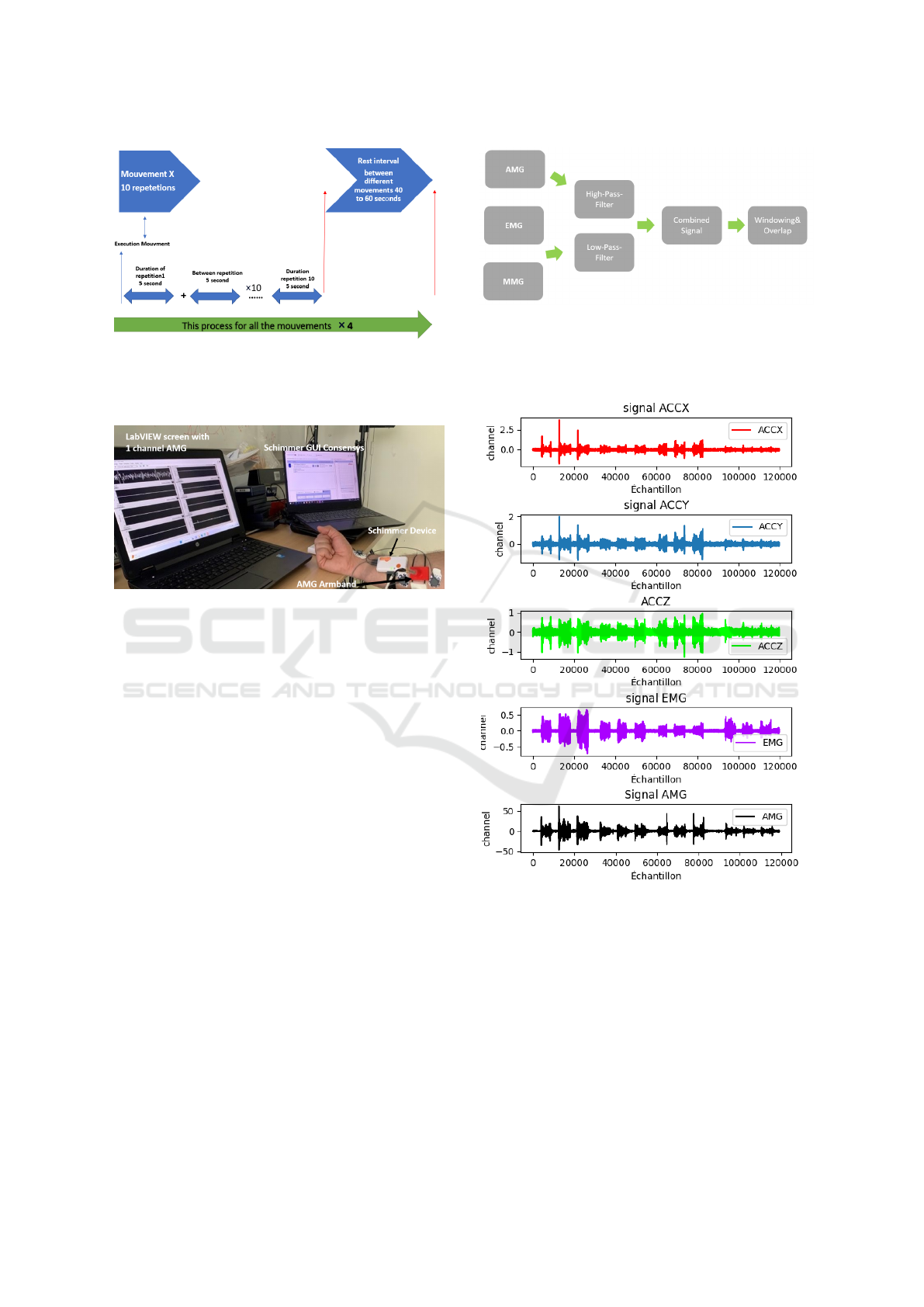

2.2 Data Collection

To evaluate the effectiveness of combining signals for hand movement classification, a dataset was collected from 9 subjects (all male). The mean age of the participants was 23 years. Prior to participating in the study, all participants provided informed consent and signed consent forms. The experimental protocol was conducted according to the Declaration of Helsinki. Fig. 1 illustrates the position of each sensor. Subjects were asked to repeat each of four distinct hand movements 10 times: hand close, hand open, fine pinch, and index finger flexion. For example, Fig. 2 shows the AMG signal recorded while one subject closed the hand 10 times.

Figure 2: Example of ten repetitions of hand closure AMG signal.

In total, the dataset comprises data from 9 subjects executing 4 distinct hand movements: close, open, fine pinch, and index finger flexion. Each movement was repeated 10 times and recorded across 5 channels (AMG, EMG, and 3 MMG) at a sampling rate of 1024 Hz. Each repetition lasted approximately 5 seconds, and there was a rest interval of 40 to 60 seconds between movements, as illustrated in Fig. 3.

BIOSIGNALS 2024 - 17th International Conference on Bio-inspired Systems and Signal Processing


Figure 3: Movement execution process.

Fig. 4 illustrates the full experimental setup, which integrates the AMG, EMG, and MMG signals.

Figure 4: The setup combining AMG with EMG and MMG signals.

3 FEATURE EXTRACTION

3.1 Data Processing

The AMG and EMG-MMG signals were acquired by different devices, so a synchronization step is needed to align them. As shown in Fig. 5, the AMG data and the MMG and EMG data (recorded by the Shimmer device) were first loaded separately. The EMG signal was then high-pass filtered, and the AMG, MMG, and EMG streams were brought to a common length to achieve synchronization. Finally, the synchronized signals were combined into a single matrix for further analysis.
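
The preprocessing steps described above can be sketched as follows. This is a minimal illustration, not the authors' code: the 20 Hz cutoff, the filter order, the function names, and the choice to align streams by trimming to the shortest one are all assumptions, since the paper does not specify them. SciPy's Butterworth design is used as one plausible high-pass filter.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 1024  # sampling rate in Hz, as stated for the AMG and EMG recordings


def highpass(signal, cutoff_hz=20.0, fs=FS, order=4):
    """Zero-phase Butterworth high-pass filter (cutoff and order are assumed)."""
    sos = butter(order, cutoff_hz, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, signal)


def combine_streams(amg, emg, mmg_xyz):
    """Filter the EMG, trim all streams to a common length, stack as columns.

    amg, emg: 1-D arrays; mmg_xyz: array of shape (n_samples, 3).
    Returns an array of shape (n_common, 5): AMG, MMG x/y/z, EMG.
    Trimming assumes the recordings start at the same instant (hypothetical).
    """
    emg = highpass(np.asarray(emg, dtype=float))
    n = min(len(amg), len(emg), len(mmg_xyz))  # shortest stream sets the length
    return np.column_stack([amg[:n], mmg_xyz[:n], emg[:n]])


# Synthetic stand-ins for the recordings (not real data).
rng = np.random.default_rng(0)
amg = rng.standard_normal(5200)
emg = rng.standard_normal(5120) + 3.0  # constant offset the filter should remove
mmg = rng.standard_normal((5150, 3))
combined = combine_streams(amg, emg, mmg)
print(combined.shape)  # (5120, 5)
```

If the two devices do not start recording at the same instant, trimming alone is not enough and an explicit alignment step (for example, a shared trigger) would be required.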

The signal stream was segmented into windows of 100 samples. Consecutive windows overlap by 50, so every part of the signal is covered by more than one segment.
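
A sliding-window segmentation of this kind might look like the sketch below. It assumes the overlap of 50 is counted in samples (which is also 50% of a 100-sample window); the function name `segment` is illustrative and not taken from the paper.

```python
import numpy as np


def segment(signal, window=100, overlap=50):
    """Split an array of shape (n_samples, n_channels) into overlapping windows.

    Returns an array of shape (n_windows, window, n_channels).
    `overlap` is interpreted as a number of samples (an assumption).
    """
    signal = np.asarray(signal)
    step = window - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than window")
    n_windows = (len(signal) - window) // step + 1
    if n_windows <= 0:
        # Signal shorter than one window: return an empty stack.
        return np.empty((0, window) + signal.shape[1:], dtype=signal.dtype)
    starts = np.arange(n_windows) * step
    return np.stack([signal[s:s + window] for s in starts])


# Example: one 5-second, 5-channel recording at 1024 Hz (synthetic values).
x = np.arange(5120 * 5).reshape(5120, 5)
windows = segment(x)
print(windows.shape)  # (101, 100, 5)
```

Trailing samples that do not fill a complete window are dropped in this sketch.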

Figure 5: Preprocessing signals.

Fig. 6 displays an example of the 5 channels (AMG, 3-channel MMG, and EMG) recorded simultaneously during a hand movement.

Figure 6: Example of the 5-channel AMG, EMG, and MMG signals.

3.2 Feature Extraction

Features were extracted by windowing the signals and computing simple statistics on each window: mean, standard deviation, minimum, and maximum. The window size was 100 samples and the overlap was 50, which increases the amount of data available for training. Each window was treated as one data sample.

Given that the signals were recorded from 5 channels (AMG, 3 MMG, EMG), and 4 features (mean, standard deviation, min, max) were extracted from each channel per window, the total number of features extracted per window was 5 channels × 4 features = 20 features.
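
The 5 × 4 = 20 feature computation can be illustrated with the short sketch below. The function name and the ordering of the features within the vector are assumptions, as is the use of the population standard deviation; the paper does not state these details.

```python
import numpy as np


def window_features(windows):
    """Compute mean, std, min, and max per channel for each window.

    windows: array of shape (n_windows, window_len, n_channels).
    Returns an array of shape (n_windows, 4 * n_channels), ordered as
    [means of all channels, stds, mins, maxs] (ordering is an assumption).
    """
    stats = [
        windows.mean(axis=1),
        windows.std(axis=1),  # population standard deviation (ddof=0)
        windows.min(axis=1),
        windows.max(axis=1),
    ]
    return np.concatenate(stats, axis=1)


# Synthetic example: 7 windows of 100 samples across 5 channels.
rng = np.random.default_rng(0)
demo_windows = rng.standard_normal((7, 100, 5))
features = window_features(demo_windows)
print(features.shape)  # (7, 20)
```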

Therefore, the final feature matrix has one row per sample (window) and one column per feature, that is, (number of windows) × 20 entries.

The features extracted from each window were the signal mean, standard deviation, minimum, and maximum values from each of the 5 channels. These 20 features per window for each person were then normalized using standard scaling to prepare them for the machine learning model.
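
Standard scaling subtracts the mean of each feature and divides by its standard deviation. A minimal sketch is given below; the helper names are hypothetical, and fitting the statistics on one subject's feature matrix follows the "for each person" wording above rather than any code published with the paper.

```python
import numpy as np


def fit_standard_scaler(X):
    """Return per-feature mean and standard deviation of X (n_samples, n_features)."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return mean, std


def apply_standard_scaler(X, mean, std):
    """Scale X to zero mean and unit variance using the fitted statistics."""
    return (X - mean) / std


# Synthetic feature matrix for one subject: 200 windows x 20 features.
rng = np.random.default_rng(0)
X_subject = rng.standard_normal((200, 20)) * 5.0 + 3.0
mean, std = fit_standard_scaler(X_subject)
X_scaled = apply_standard_scaler(X_subject, mean, std)
```

When a train/test split is used, fitting the statistics on the training windows only and reusing them for the test windows avoids leaking test information into the model.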

Summary of Feature Extraction Process

Feature extraction yields 20 features per window, so the feature matrix has one row per window and 20 columns. Each window's 20 features, capturing signal characteristics from each channel, were normalized using standard scaling in preparation for machine learning.

Dataset Specifications:

| Specification | Value |
|---|---|
| Number of Persons | 9 |
| Number of Movements | 4 |
| Number of Channels | 5 |
| Number of Features per Window per Movement | 20 |
| Number of Windows per Movement | 1000 |
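
As a rough consistency check on the window count, the arithmetic below estimates the number of windows for one movement of one subject. It assumes the ten repetitions of about 5 seconds are concatenated with rest periods excluded, and that the overlap of 50 is counted in samples; neither detail is stated explicitly in the paper.

```python
FS = 1024          # sampling rate in Hz
REP_SECONDS = 5    # approximate duration of one repetition
REPETITIONS = 10   # repetitions per movement
WINDOW = 100       # window length in samples
OVERLAP = 50       # overlap in samples (assumed unit)

total_samples = FS * REP_SECONDS * REPETITIONS   # 51200 samples
step = WINDOW - OVERLAP                          # 50 samples between window starts
n_windows = (total_samples - WINDOW) // step + 1
print(n_windows)  # 1023
```

Under these assumptions the estimate is close to the value of about 1000 windows per movement listed in the specifications.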

4 HAND MOVEMENT RECOGNITION SYSTEM

The methodology combines a sensitive microphone (AMG) with EMG and MMG sensors to obtain complementary signals. Each movement recording is divided into fixed-size windows, and features are extracted from each window. These features are used to train an SVM classifier, with grid search for hyperparameter tuning (Scheme and Englehart, 2011).

Fig. 7 summarizes the overall workflow. It shows the data acquisition described in Section 2, the pre-processing steps of filtering and segmenting the signals detailed in Section 3.1, the feature extraction covered in Section 3.2, and the classification model training. As described in Section 2, the dataset consists of signals from 9 subjects performing 4 hand movements repeated 10 times each. The data were divided into training and test sets for each person.

4.1 Machine Learning Model

The machine learning model used in this study is a support vector machine (SVM) classifier. The SVM classifier was chosen because it has proven effective in various classification tasks and can handle high-dimensional feature spaces. To optimize its performance, the hyperparameters C and gamma were tuned using grid search (Hastie et al., 2009). C is the regularization parameter that controls the trade-off between achieving a wide margin and minimizing the classification error. Gamma determines the influence of a single training example, with low values leading to a broader influence and high values causing localized influence. Grid search systematically evaluates every combination in a predefined hyperparameter grid and selects the one that yields the best performance. In our study, we experimented with a range of C and gamma values and retained the combination giving the highest accuracy score on our dataset. Fine-tuning the hyperparameters through grid search enhanced the classifier's accuracy, highlighting the significance of proper hyperparameter optimization when applying an SVM classifier to signal data.

Figure 7: Model Workflow.
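
A grid search over C and gamma for an RBF-kernel SVM could be set up as in the sketch below. The paper does not name its software, so scikit-learn is an assumption here, as are the grid values, the 80/20 split, the 5-fold cross-validation, and the synthetic 20-feature data that stands in for one subject's windows.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for one subject: 4 movement classes, 20 features per window.
rng = np.random.default_rng(0)
n_per_class, n_features, n_classes = 100, 20, 4
X = np.vstack([
    rng.standard_normal((n_per_class, n_features)) + 2.0 * k  # shift each class
    for k in range(n_classes)
])
y = np.repeat(np.arange(n_classes), n_per_class)

# Hold out a test set for this subject (split ratio is an assumption).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Hypothetical grid; the paper does not list the values it searched.
param_grid = {
    "svc__C": [0.1, 1, 10, 100],
    "svc__gamma": [0.001, 0.01, 0.1, 1],
}
model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
search = GridSearchCV(model, param_grid, scoring="accuracy", cv=5)
search.fit(X_train, y_train)

print(search.best_params_)
print(search.score(X_test, y_test))
```

Placing the scaler inside the pipeline means it is refitted on each cross-validation training fold, so the validation folds do not influence the scaling statistics.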

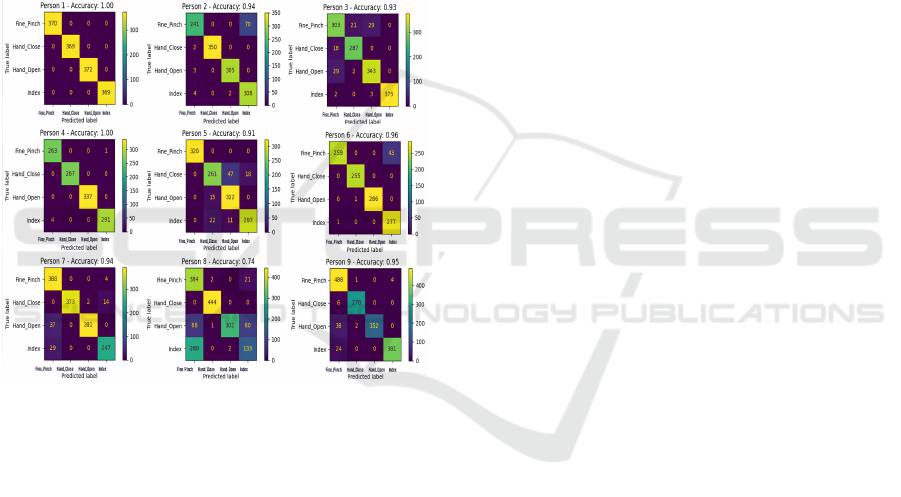

5 RESULTS AND DISCUSSION

The metrics used to evaluate the system's performance were accuracy, precision, recall, and F1 score. After training the SVM model with grid search, we obtained the following best hyperparameters: C = 10, kernel = 'rbf', and degree = 2 (the degree parameter applies only to polynomial kernels and has no effect with an RBF kernel). The model achieved an accuracy of 97%, a recall of 97%, an F1 score of 97%, and a well-balanced confusion matrix. The analysis of the results highlights the varying performance of the SVM model across individuals and movement classes. Some individuals were classified with high accuracy and F1 scores (for example, subjects 1 and 4 in Table 1), while others scored lower (subject 8 reached an accuracy of 0.74) and exhibited errors in specific classes. The strong results for certain individuals indicate that the model generalizes well for them, while the larger errors for others underscore the need for finer personalization. Overall, the SVM model showed promising performance for movement classification based on signal data. The confusion matrices and performance measures provide valuable insights into the strengths and limitations of the model. Detailed analyses for each individual offer specific directions for targeted model improvement, such as hyperparameter tuning or individual-specific preprocessing methods. This study underscores the importance of customizing and optimizing models for each user.
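
For reference, the four metrics can be derived from a confusion matrix as in the sketch below. The matrix values are hypothetical and are not the paper's results, and macro-averaging over the four movement classes is an assumption, since the averaging scheme is not stated.

```python
import numpy as np


def metrics_from_confusion(cm):
    """Accuracy and macro-averaged precision, recall, and F1 from a confusion matrix.

    cm[i, j] counts samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: samples predicted as each class
    actual = cm.sum(axis=1)     # row sums: samples truly in each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }


# Hypothetical 4-class matrix (close, open, fine pinch, index flexion).
example_cm = [
    [48, 1, 1, 0],
    [2, 47, 0, 1],
    [0, 1, 49, 0],
    [1, 0, 2, 47],
]
scores = metrics_from_confusion(example_cm)
print(scores)
```

When every class has the same number of test samples, as in this example, macro-averaged recall equals accuracy.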

Figure 8: Confusion Matrix for Hand Movement Classification Using AMG, EMG, and MMG Signals of 9 subjects.

Table 1 reports the accuracy, precision, recall, and F1 score obtained for each individual in the hand gesture recognition task. It provides a comparative view of the classifier's effectiveness across the 9 subjects.

Table 1: Performance Metrics for Hand Gesture Recognition.

| Person | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| 1 | 1.00 | 1.00 | 1.00 | 1.00 |
| 2 | 0.93 | 0.94 | 0.93 | 0.93 |
| 3 | 0.94 | 0.94 | 0.94 | 0.94 |
| 4 | 0.99 | 0.99 | 0.99 | 0.99 |
| 5 | 0.91 | 0.92 | 0.91 | 0.91 |
| 6 | 0.95 | 0.95 | 0.95 | 0.95 |
| 7 | 0.90 | 0.91 | 0.90 | 0.90 |
| 8 | 0.74 | 0.74 | 0.74 | 0.74 |
| 9 | 0.93 | 0.94 | 0.93 | 0.93 |

6 CONCLUSION

This study demonstrated the feasibility of classifying hand movements from a combination of AMG, EMG, and MMG signals using machine learning. An SVM model was able to effectively classify movements with high accuracy and precision of 97% on the test set.

The results validate the multi-modal approach of fusing complementary information from different sensors. By capturing muscle vibrations, electrical potentials, and limb movements, the combined signals provided rich discriminative features for the classification model. Individual signal streams were also shown to average out artifacts when combined, improving robustness.

Further optimization of the system is still needed. Personalizing the model for each individual could help address limitations in accuracy for some movement classes and subjects. Refining feature engineering techniques may also enhance classification performance.

A key future direction is to translate this work directly into intuitive prosthetic devices. Integrating the developed hand movement recognition system could enable more natural control for upper limb prosthetics. This has the potential to significantly improve quality of life for individuals with limb impairments.

REFERENCES

Al-Timemy, A., Serrestou, Y., Khushaba, S. R., Yacoub, S., and Raoof, K. (2022). Hand gesture recognition with acoustic myography signals and Wavelet scattering Transform. IEEE Access, 10:107526–107535.

Beck, T. W. et al. (2005). Mechanomyographic amplitude and frequency responses during dynamic muscle actions: a comprehensive review. Biomed. Eng. Online, 4(1):1–27.

Castillo, C., Wilson, S., Vaidyanathan, R., and Atashzar, S. (2021). Wearable MMG-Plus-One Armband: Evaluation of normal force on mechanomyography (MMG) to enhance human–machine interfacing. IEEE Trans. Neural Syst. Rehabil. Eng., 29:196–205.

Farina, D., Aszmann, O., Rosiello, E., and Pratesi, R. (2014a). Noninvasive rehabilitation technologies for upper extremity motor recovery in stroke patients: State of the art and future directions. Rev. Neurosci., 25(1):155–170.

Farina, D., Negro, F., and Dideriksen, J. (2014b). The effective neural drive to muscles is the common synaptic input to motor neurons. J. Physiol., 592(4):743–757.

Gupta, R. and Patel, M. (2022). Real-time hand prosthesis control using combined acoustic myography and MMG signals. In Proc. IEEE Int. Conf. Robot. Autom. (ICRA), pages 1234–1240.


Harrison, A., Danneskiold-Samsøe, B., and Bartels, E. (2013). Portable acoustic myography – a realistic noninvasive method for assessment of muscle activity and coordination in human subjects in most home and sports settings. Physiol. Rep., 1(2).

Hastie, T., Tibshirani, R., and Friedman, J. (2009). The Elements of Statistical Learning. Springer.

Johnson, R. and Chen, L. (2017). Acoustic myography for real-time prosthetic hand control. IEEE Trans. Biomed. Eng., 45(3):320–327.

Li, Y. and Zhang, L. (2013). Support vector machines for hand movement classification based on EMG and MMG data. J. Neuroeng. Rehabil., 10(11).

Lim, B., Lee, S., Jang, D., and Kim, Y. (2008). Classification of forearm EMG signals using time-domain feature and artificial neural network. Biomed. Eng. Online, 7(1).

Mamaghani, N., Shimomura, Y., Iwanaga, K., and Katsuura, T. (2001). Changes in surface EMG and acoustic myogram parameters during static fatiguing contractions until exhaustion: Influence of elbow joint angles. J. Physiol. Anthropol. Appl. Human Sci., 20(2):131–140.

Orizio, C., Perini, R., and Veicsteinas, A. (1989). Muscular sound and force relationship during isometric contraction in man. Eur. J. Appl. Physiol. Occup. Physiol., 58(5):528–533.

Scheme, E. and Englehart, K. (2011). Electromyogram pattern recognition for control of powered upper-limb prostheses: State of the art and challenges for clinical use. J. Rehabil. Res. Dev., 48(6):643–659.

Shcherbynina, M., Gladun, V., and Sarana, V. (2023). Acoustic Myography as a Noninvasive Technique for Assessing Muscle Function: Historical Aspects and Possibilities for Application in Clinical Practice. Physiol. Rep., 11(6).

Smith, J. (2020). Acoustic Myography: Principles and Applications. Springer.

Yacoub, S., Al-Timemy, A., Serrestou, Y., S.E., Khushaba, R., and Raoof, K. An Investigation of Acoustic Chamber Design for Recording AMG Signal. In 2023 IEEE International Conference on Advanced Systems and Emergent Technologies (IC_ASET).
