Classification of Fine-ADL Using sEMG Signals Under Different Measurement Conditions

Surya Naidu^a, Anish Turlapaty^b and Vidya Sagar^c

Biosignal Analysis Group, IIIT Sri City, Sri City, India

Keywords:

sEMG, Fine-ADL, Feature Extraction, Neural Networks, CNN Bi-LSTM, Class-Wise Analysis.

Abstract:

Most studies on surface electromyography (sEMG) related to finger activities have concentrated on grips, grasps and general arm movements, without examining how body postures and hand positions affect finger-centric activities. The main objective of the new dataset is to investigate activities of daily living (ADL) that require fine motor control under diverse measurement conditions. In this paper, we present a novel dataset of finger-centric activities of daily living comprising 5-channel sEMG signals collected under different body postures and hand positions. The dataset contains sEMG signals acquired from 10 subjects performing 10 distinct fine-ADLs in various body postures and hand positions. Further, a machine learning framework for the classification of fine-ADL is developed as follows. Each signal is segmented into 250 ms windows, and Time Domain (TD), Frequency Domain (FD), Wavelet Domain (WD) and eigenvalue features are extracted. Four classifier frameworks using these features are implemented for the analyses. The results reveal that, in the aggregate scenario, a hybrid CNN Bi-LSTM achieves an average test accuracy of 76.85%, followed by a 5-layer fully connected neural network (FCNN) with 72.42%. An average subject-wise test accuracy of 88% is achieved by the FCNN across all body postures and hand positions combined. Most importantly, under varying body postures, the CNN Bi-LSTM improves the average subject-wise test accuracy by 27.47 percentage points over the FCNN. The dependence of test accuracy on measurement conditions is analyzed. The Stand body posture is found to be the easiest to classify in the aggregate scenario, whereas Folded Knees is the most difficult. In the body posture combinations analysis, test accuracy is observed to increase as the amount of training data increases.

1 INTRODUCTION

1.1 Background

Activities of Daily Living (ADL) refer to the basic tasks and activities that individuals perform on a daily basis. ADL are particularly important for individuals with disabilities or chronic illnesses who may require assistance or accommodations to perform them. Furthermore, insights gained from studying ADL can inform the design of assistive technologies that help individuals with disabilities perform daily tasks independently.

The applications of ADL assessment are wide-ranging and include geriatric care, rehabilitation, disability evaluation (Chen et al., 2022), and assistive technology design. In geriatric care (Sandberg et al., 2012), ADL assessment can help identify functional decline and enable healthcare providers to implement interventions that maintain independence and quality of life (Faria et al., 2020). In rehabilitation care, it is important for establishing baselines, tracking progress (Dai et al., 2021), and developing effective treatment plans (He et al., 2021). In the design of assistive technology, it can help ensure that devices are tailored to the specific needs and abilities of a user (Park et al., 2020).

^a https://orcid.org/0009-0007-6390-8074

^b https://orcid.org/0000-0003-0078-3845

^c https://orcid.org/0009-0006-3464-9205

We propose Fine-ADL as a class of activities of daily living that require fine motor ability, as described in (Fauth et al., 2017), for precise control of the fingers and wrists. Examples include writing, typing, and using standard mechanical tools such as a kitchen knife. Assessment of Fine-ADL is important in evaluating the ADL score (Katz, 1983) and in various related applications. ADL are analyzed using motion sensors, visual information and sEMG signals (Toledo-Pérez et al., 2019).

Naidu, S., Turlapaty, A. and Sagar, V.

Classification of Fine-ADL Using sEMG Signals Under Different Measurement Conditions.

DOI: 10.5220/0012346000003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 1, pages 591-598

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Surface electromyography (sEMG) is a non-invasive measurement technique that records a muscle's electrical activity and provides valuable information about its contractions. Hence, this method is well suited to examining these finer activities. Pattern recognition of sEMG signals is a promising approach for wearable robotic control. For such devices to achieve widespread real-world adoption, it is important to develop control technologies that can perform Fine-ADL for their users (Castellini et al., 2009). Toward this goal, a fundamental requirement is a pattern recognition framework that can reliably classify the intended actions of a user based on multi-channel sEMG signals.

One major challenge is the limited performance of pattern recognition algorithms under measurement conditions that differ from those of controlled laboratory experiments. One reason for this limitation is that tasks performed in controlled circumstances, with limited trials and specific instructions, may not reflect the variability and complexity of Fine-ADL in everyday life. Rosenburg and Seidel (1989) observed a significant correlation between sEMG signals and body postures. However, the underlying mechanisms driving this relationship have remained elusive. The observed variability has been attributed to biomechanical factors such as the lever arm of muscles, force distribution across muscles, and gravity, among others. Consequently, it is important to incorporate diverse measurement conditions when conducting sEMG studies.

A few studies in the literature have examined the impact of measurement conditions, specifically body and hand positions, on the classification of ADL and other limb movements using different measurement modalities. Song and Kim (2018) proposed a classification algorithm that uses a single inertial sensor to categorize three fundamental gait activities. Their experiments included measurements both in a gait lab and on an outdoor walking course, allowing analysis under varying conditions. Another study (Williams et al., 2022) explored control strategies for myoelectric prostheses that incorporate position awareness; by considering the positional information of the prosthetic hand, more natural and intuitive control can be achieved. Yang et al. (2015) and the references therein demonstrate the impact of upper limb positions and dynamic movements on the classification of finger motions, which usually contribute to Fine-ADL. However, to our knowledge, no existing study or sEMG dataset focuses on Fine-ADL under different measurement conditions. Our objective is therefore to collect sEMG signals during Fine-ADL from a group of Indian subjects and to analyze the impact of measurement conditions on ADL classification.

Table 1: List of activities and their action-phase durations.

| Activity Name (Class) | Action Duration |
|---|---|
| Swiping on a Phone (C1) | 5 s |
| Zoom In on a Phone (C2) | 5 s |
| Zoom Out on a Phone (C3) | 5 s |
| Pressing Button using Thumb (C4) | 5 s |
| Flipping a Switch (C5) | 5 s |
| Cutting a fruit (knife) (C6) | 7 s |
| Eating With Spoon (C7) | 7 s |
| Flipping a Bottle cap (C8) | 5 s |
| Writing on paper with pen (C9) | 7 s |
| Using Scissors (C10) | 7 s |

Table 2: Body Postures and Hand Positions.

| Body Postures | Hand Positions |
|---|---|
| Folded Knees (b1) | P1 |
| Folded Legs (b2) | P2 |
| Sit (b3) | |
| Sit to Stand (b4) | |
| Stand (b5) | |

1.2 Contributions

• A novel sEMG dataset is developed that corresponds to Fine-ADL under different body postures and hand positions.

• The impact of different body postures and arm positions on the classification of Fine-ADL is studied through various experiments, together with a class-wise analysis.

• Several classical machine learning (ML) frameworks and a hybrid CNN Bi-LSTM are implemented for the classification of Fine-ADL.

2 METHODOLOGY

2.1 Fine-ADL sEMG Dataset

In this work, a new sEMG dataset corresponding to

Fine-ADL is presented. The data is collected from 10

subjects who have no abnormalities or impairments in

their upper limbs. The group of subjects have diverse

demographics, consisting of 7 males and 3 females,

with 2 left-handed and 8 right-handed individuals. In

terms of age distribution, there are 8 subjects in the

17 − 20 age group, 1 subject above 30, and 1 subject

above 40. The research study was approved by the

BIOSIGNALS 2024 - 17th International Conference on Bio-inspired Systems and Signal Processing

592

10 Subjects

5 Body Postures

2 Hand Positions

10 Activities

Labels

Experiments:

Aggregate Analysis

Subject

-wise Analysis

Body

-Postures Combinations

ML Models Training:

FCNN

RF

kNN

EMGHandNet

Classification

Analysis

Class

-wise

Analysis

5-channels sEMG signals

Preprocessing:

20Hz HPF, 50Hz BSF

Time Domain

– 16

Frequency Domain

– 8

Wavelet

Domain

-3

Eigenvalues

- 5

Feature Extraction

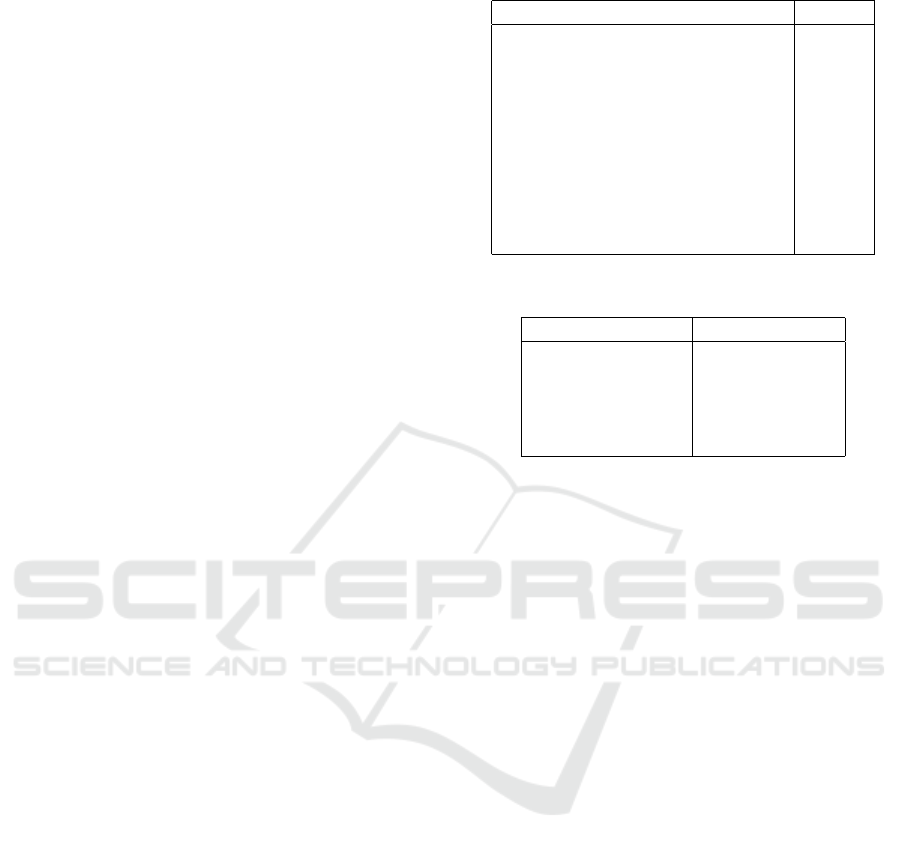

Figure 1: Block Diagram.

ethics committee at the Indian Institute of Information

Technology, Sri City (No. IIITS/EC/2022/01) and is

in accordance with the principles of the Declaration of

Helsinki. The data acquisition process is non-invasive

and prior to a measurement session, each subject gave

a written informed consent and was introduced to the

experimental protocol.

In this study, each subject performed a series of

10 activities of different durations as listed in Table

1. Further, to ensure accurate measurements, these

activities in the selected body postures and hand po-

sitions were demonstrated to the subjects. The EMG

signal from the hand is recorded using a wireless 5-

channel Noraxon Ultium sEMG sensor configuration.

The electrical contact is made with dual Ag/AgCl

self-adhesive electrodes at the densest region of the

selected forearm muscle sites (Criswell, 2010) given

in Table 3. Prior to electrode placement, the hands are

cleaned using an alcohol-based wet wipe. Each sub-

ject is asked to perform each of the fine-activities of

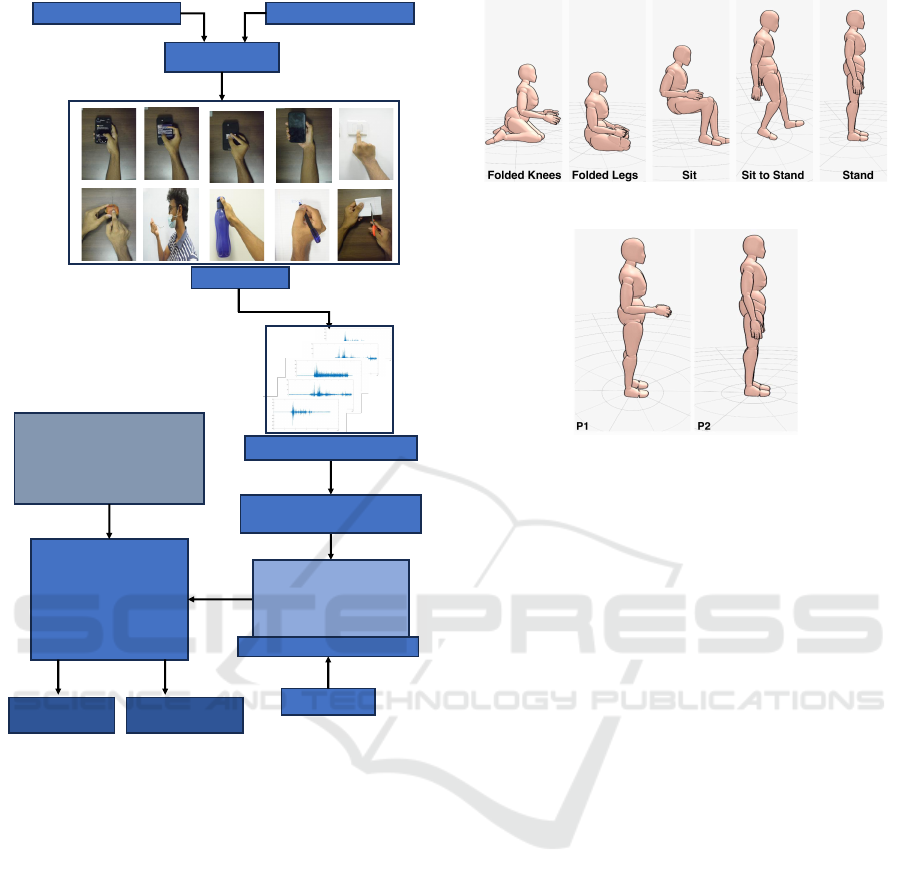

Figure 2: Body Postures.

Figure 3: Hand Positions.

daily living in three phases: rest, action, and release.

Subjects start with the rest phase, where they relax the

muscles and refrain from any physical activity. Dur-

ing the action phase, the activity is executed and the

release phase denotes a smooth transition from the ac-

tion state to the rest state.

During a measurement session, the subject performs the 10 Fine-ADL in 5 different body postures, as shown in Fig. 2, and 2 different hand positions, as shown in Fig. 3. Thus, the total number of measurement conditions is 5 × 2 = 10. The signal specifications are as follows: 1) sampling rate: 4000 samples/s, 2) duration of a trial: 11 s or 13 s, and 3) break between two consecutive body postures: 5 minutes. Each activity in a given measurement condition is repeated 5 times. Hence, the total number of trials is 10 subjects × 5 postures × 2 positions × 10 activities × 5 trials = 5000.
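The trial count above is a full factorial over subjects, postures, hand positions, activities and repetitions. A minimal Python sketch (the identifiers, and the use of the Table 1 and Table 2 codes as labels, are illustrative assumptions) enumerates this design and converts the stated trial durations into sample counts at 4000 samples/s:

```python
from itertools import product

# Experimental design as described in Section 2.1 (labels follow Tables 1 and 2).
SUBJECTS = range(1, 11)                        # 10 subjects
POSTURES = ["b1", "b2", "b3", "b4", "b5"]      # 5 body postures
POSITIONS = ["P1", "P2"]                       # 2 hand positions
ACTIVITIES = [f"C{k}" for k in range(1, 11)]   # 10 Fine-ADL classes
REPETITIONS = range(1, 6)                      # 5 repetitions per condition
FS = 4000                                      # sampling rate in samples/s

# A measurement condition is one (posture, hand position) pair.
conditions = list(product(POSTURES, POSITIONS))

# One tuple per trial: (subject, posture, position, activity, repetition).
trials = list(product(SUBJECTS, POSTURES, POSITIONS, ACTIVITIES, REPETITIONS))

def samples_per_trial(duration_s: float, fs: int = FS) -> int:
    """Number of samples in a trial of the given duration."""
    return int(round(duration_s * fs))

print(len(conditions))                                # 10
print(len(trials))                                    # 5000
print(samples_per_trial(11), samples_per_trial(13))   # 44000 52000
```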

2.2 Methodology

The methodology for classifying the sEMG signals corresponding to Fine-ADL consists of the following stages: 1) data preparation, 2) segmentation, 3) feature extraction, 4) ML model training, and 5) testing and analysis, as shown in Fig. 1.

2.2.1 Data Preparation

In this phase, all the 5-channeled sEMG signals are

processed by two digital filters. Initially, the sEMG

signals are high-pass filtered with a lower cut off fre-

quency of 20Hz to remove any motion artifacts. The

output of this filter is processed by a 50Hz notch fil-

ter to remove any electric line noise. From the filter

Classification of Fine-ADL Using sEMG Signals Under Different Measurement Conditions

593

Folded Knees Folded Legs Sit Sit to Stand Stand P1 P2 All

Poses

CNN Bi-LSTM

FCNN

Random Forest

kNN

Subjects

0.795

0.688

0.771

0.693

0.756

0.668

0.768

0.651

0.598

0.839

0.764

0.712

0.748

0.713

0.646

0.713

0.73

0.715

0.671

0.794

0.852

0.847

0.782

0.43

0.466

0.517

0.491

0.478

0.531

0.551 0.476

0.416

0.45

0.5

0.55

0.6

0.65

0.7

0.75

0.8

0.85

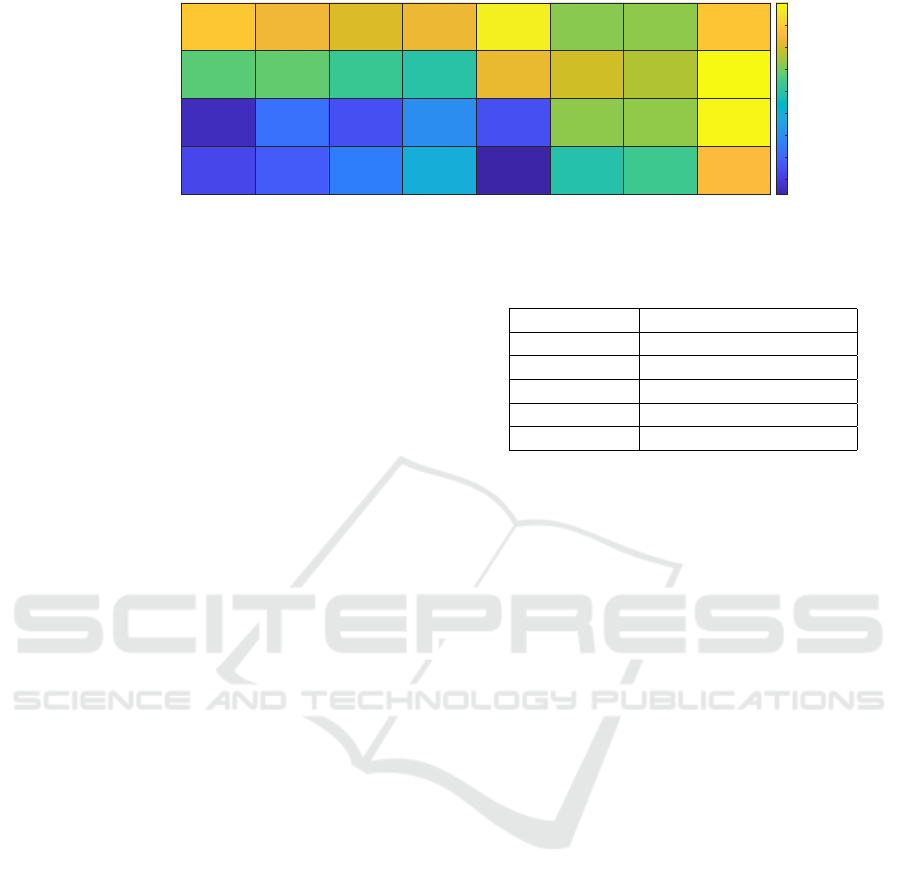

Figure 4: Test accuracies from classification under various Conditions using different Classifiers in the aggregate scheme.

output, the rest phase and the samples from the final

one second of the release phase are discarded to retain

the action and 2 seconds of the release phase. These

processed sEMG signals are annotated with the rele-

vant class labels corresponding to the Fine-ADL cat-

egories.
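The preprocessing of Section 2.2.1 can be sketched with SciPy as below. Only the 20 Hz and 50 Hz frequencies and the 4000 samples/s rate come from the text; the Butterworth design, the filter order, the notch quality factor, zero-phase (forward-backward) filtering, and the rest-phase length `rest_s` used for cropping are assumptions, and `preprocess` and `crop_trial` are hypothetical helper names.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

FS = 4000  # samples/s, as stated in Section 2.1

def preprocess(emg: np.ndarray, fs: int = FS, hp_order: int = 4,
               notch_q: float = 30.0) -> np.ndarray:
    """Filter an (n_samples, n_channels) sEMG array channel-wise.

    hp_order and notch_q are assumed values; the paper specifies only
    the 20 Hz high-pass cut-off and the 50 Hz notch frequency.
    """
    # 20 Hz Butterworth high-pass, applied forward and backward (zero phase)
    sos = butter(hp_order, 20.0, btype="highpass", fs=fs, output="sos")
    x = sosfiltfilt(sos, emg, axis=0)
    # 50 Hz IIR notch to suppress power-line interference
    b, a = iirnotch(50.0, notch_q, fs=fs)
    return filtfilt(b, a, x, axis=0)

def crop_trial(emg: np.ndarray, fs: int = FS, rest_s: float = 3.0,
               drop_end_s: float = 1.0) -> np.ndarray:
    """Drop the rest phase (rest_s is a hypothetical value) and the final
    second of the release phase, keeping action + early release."""
    start = int(round(rest_s * fs))
    stop = emg.shape[0] - int(round(drop_end_s * fs))
    return emg[start:stop]

# Usage on a synthetic 11 s, 5-channel trial
rng = np.random.default_rng(0)
raw = rng.standard_normal((11 * FS, 5))
kept = crop_trial(preprocess(raw))
print(kept.shape)  # (28000, 5) under the assumed 3 s rest phase
```

The 3 s rest phase is only a placeholder consistent with an 11 s trial whose 5 s action and retained 2 s of release leave 7 s; the actual rest duration should be taken from the recording protocol.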

2.2.2 Feature Extraction

To improve classification performance, the preprocessed sEMG signals are segmented into windows of 250 ms duration. For each of these sEMG segments, 16 time domain features (Sapsanis et al., 2013; Karnam et al., 2021), 8 frequency domain features, 3 wavelet domain features and eigenvalue features are computed. The features from all segments and all 5 channels of a signal are concatenated to build the full feature vector. This particular combination of features was chosen after analyzing various feature combinations.
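The segmentation and feature concatenation can be illustrated with the NumPy sketch below. It does not reproduce the paper's feature set: it computes a small, commonly used subset (mean absolute value, RMS, waveform length, zero crossings and slope sign changes in time; mean and median frequency in frequency), three Haar-wavelet detail energies, and the eigenvalues of each window's inter-channel covariance. Non-overlapping windows, the Haar wavelet and this eigenvalue definition are all assumptions, and every function name is hypothetical.

```python
import numpy as np

FS = 4000
WIN = int(0.250 * FS)  # 250 ms window -> 1000 samples

def segment(emg, win=WIN):
    """Split (n_samples, n_channels) into non-overlapping windows (assumed)."""
    n = emg.shape[0] // win
    return emg[: n * win].reshape(n, win, emg.shape[1])

def td_features(x):
    """Illustrative time-domain subset for one channel window."""
    dx = np.diff(x)
    mav = np.mean(np.abs(x))             # mean absolute value
    rms = np.sqrt(np.mean(x ** 2))       # root mean square
    wl = np.sum(np.abs(dx))              # waveform length
    zc = np.sum(x[:-1] * x[1:] < 0)      # zero crossings (no threshold)
    ssc = np.sum(dx[:-1] * dx[1:] < 0)   # slope sign changes
    return np.array([mav, rms, wl, zc, ssc], dtype=float)

def fd_features(x, fs=FS):
    """Mean and median frequency of the one-sided power spectrum."""
    psd = np.abs(np.fft.rfft(x)) ** 2
    f = np.fft.rfftfreq(x.size, d=1.0 / fs)
    mnf = np.sum(f * psd) / np.sum(psd)
    cum = np.cumsum(psd)
    mdf = f[np.searchsorted(cum, cum[-1] / 2.0)]
    return np.array([mnf, mdf])

def haar_energies(x, levels=3):
    """Energy of the detail coefficients at each level of a Haar DWT."""
    a = np.asarray(x, dtype=float)
    energies = []
    for _ in range(levels):
        a = a[: a.size // 2 * 2]                   # even length
        d = (a[0::2] - a[1::2]) / np.sqrt(2.0)     # detail coefficients
        a = (a[0::2] + a[1::2]) / np.sqrt(2.0)     # approximation
        energies.append(np.sum(d ** 2))
    return np.array(energies)

def eig_features(window):
    """Eigenvalues (descending) of the channel covariance of one window."""
    cov = np.cov(window, rowvar=False)
    return np.sort(np.linalg.eigvalsh(cov))[::-1]

def feature_vector(emg):
    """Concatenate features over all windows and all channels."""
    parts = []
    for w in segment(emg):
        for ch in range(w.shape[1]):
            x = w[:, ch]
            parts.extend([td_features(x), fd_features(x), haar_energies(x)])
        parts.append(eig_features(w))
    return np.concatenate(parts)

# Usage: a 7 s, 5-channel segment gives 28 windows x (5 x 10 + 5) features
emg = np.random.default_rng(1).standard_normal((7 * FS, 5))
print(feature_vector(emg).shape)  # (1540,)
```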

2.2.3 Classification Framework

The next stage consists of training and testing four classifiers: Fully Connected Neural Networks (FCNN), Random Forests (RF), k-Nearest Neighbors (k-NN), and the CNN Bi-LSTM (EMGHandNet; Karnam et al., 2022) with minor modifications. Note that, for the deep learning model, feature extraction is implicit within the network. The modifications to the CNN Bi-LSTM include changing the dropout rate to 0.3, the CNN window size to 4×7, and the batch size to 8. The FCNN consists of five dense layers with 256, 128, 64, 16, and 10 neurons, respectively. The ReLU activation function is used in the first four layers and the softmax function in the output layer.
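For reference, the FCNN layer stack described above corresponds to the NumPy forward pass below. This is a sketch only: the input dimension, He initialization and the absence of dropout are assumptions, `init_fcnn` and `forward` are hypothetical names, and training (loss, optimizer, epochs) is not specified in the text and is not shown.

```python
import numpy as np

LAYER_SIZES = [256, 128, 64, 16, 10]  # dense layer widths from Section 2.2.3

def init_fcnn(n_in, sizes=LAYER_SIZES, seed=0):
    """Random weights for the five dense layers (He init is an assumption)."""
    rng = np.random.default_rng(seed)
    params = []
    for fan_in, fan_out in zip([n_in] + sizes[:-1], sizes):
        W = rng.standard_normal((fan_in, fan_out)) * np.sqrt(2.0 / fan_in)
        b = np.zeros(fan_out)
        params.append((W, b))
    return params

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(params, X):
    """ReLU on the first four layers, softmax on the 10-way output layer."""
    h = X
    for W, b in params[:-1]:
        h = relu(h @ W + b)
    W, b = params[-1]
    return softmax(h @ W + b)

# Usage with a hypothetical 1540-dimensional feature vector and a batch of 8
params = init_fcnn(1540)
probs = forward(params, np.random.default_rng(2).standard_normal((8, 1540)))
print(probs.shape)  # (8, 10)
```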

3 IMPLEMENTATION

In this paper, three experiments are conducted: 1) the aggregate scheme, 2) the subject-wise scheme, and 3) the impact analysis of body posture combinations. These experiments investigate the impact of the body postures and the hand positions on classification performance.

Table 3: Sensor placement on hand muscles.

| Channel No. | Muscle Name |
|---|---|
| 1 | Abductor digiti minimi |
| 2 | Extensor pollicis brevis |
| 3 | First dorsal interosseous |
| 4 | Abductor pollicis brevis |
| 5 | Brachioradialis |

3.1 Aggregate Scheme

This experiment uses the four aforementioned classifiers to classify the feature data from all 10 subjects. The objective of this scheme is to investigate the general impact of different measurement conditions on the classification of Fine-ADL and to analyze whether the classifiers can learn and classify across different individuals. The classifiers are evaluated under the following three conditions.

Body Postures. In this case, the trials of the 10 subjects from any set of four body postures are used for training, while the trials from the left-out body posture are used for testing. This process is repeated until each of the five body postures has been tested separately (Fougner et al., 2011). This methodology is referred to as Leave-One-Posture-Out analysis.

Hand Positions. In this case, the trials of all subjects from one hand position are used for training, while the trials from the other hand position are used for testing. This process is repeated for both hand positions (Fougner et al., 2011).

All Positions. In this case, 80% of the trials from each combination of a body posture and a hand position, aggregated across all subjects, are used for training. The remaining 20% of the trials are used for testing.
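The three aggregate evaluation conditions can be expressed as train/test splits over a trial index. In this sketch, the dictionary layout, splitting whole trials (rather than individual windows), and an unstratified random 80/20 split within each condition are assumptions; `leave_one_out` and `per_condition_split` are hypothetical helpers.

```python
import random
from itertools import product

POSTURES = ["b1", "b2", "b3", "b4", "b5"]
POSITIONS = ["P1", "P2"]

# Hypothetical trial index: 10 subjects x 5 postures x 2 positions x 10 activities x 5 reps.
trials = [
    {"subject": s, "posture": b, "position": p, "activity": a, "rep": r}
    for s, b, p, a, r in product(range(1, 11), POSTURES, POSITIONS,
                                 range(1, 11), range(1, 6))
]

def leave_one_out(trials, key, values):
    """Hold out one value of `key` (a posture or a hand position) for testing."""
    for held_out in values:
        train = [t for t in trials if t[key] != held_out]
        test = [t for t in trials if t[key] == held_out]
        yield held_out, train, test

def per_condition_split(trials, train_frac=0.8, seed=0):
    """Random 80/20 split inside each (posture, position) condition."""
    rng = random.Random(seed)
    train, test = [], []
    for posture, position in product(POSTURES, POSITIONS):
        group = [t for t in trials
                 if t["posture"] == posture and t["position"] == position]
        rng.shuffle(group)
        k = int(round(train_frac * len(group)))
        train.extend(group[:k])
        test.extend(group[k:])
    return train, test

# Leave-One-Posture-Out: five folds of 4000 training and 1000 test trials
for held_out, train, test in leave_one_out(trials, "posture", POSTURES):
    print(held_out, len(train), len(test))
```

For the subject-wise scheme of Section 3.2, the same helpers can be applied to the trials of a single subject.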


[Figure 5 heatmap: rows are subjects 1 to 10 and their average; columns are Folded Knees, Folded Legs, Sit, Sit to Stand, Stand, P1, P2 and All Poses; cells give the test accuracy (color scale 0.5 to 1).]

Figure 5: Subject-wise test accuracies, and their average across subjects, under various conditions using the hybrid CNN Bi-LSTM.

3.2 Subject-Wise Analysis

In the subject-wise experiment, a model's learning capability is evaluated at the level of individual subjects. Only the results from the hybrid CNN Bi-LSTM are reported for this experiment. For the signals from each of the ten subjects, training, testing, and performance analysis are carried out under the three sets of conditions described previously. Hence, in the subject-wise scheme, each classification analysis uses only one tenth of the data used in the aggregate scheme. Finally, the results averaged across subjects are also reported.

3.3 Body Postures Combinations Analysis

In the body posture combinations analysis, the impact of diverse body postures on Fine-ADL classification is investigated using data from each of the 10 subjects. The model is trained on different sets of body postures and tested on the remaining body postures (Fougner et al., 2011). When $i$ postures are used for training, the number of possible training combinations is $\binom{5}{i}$. Since $i$ ranges from 1 to 4, the total number of scenarios examined is

$$\sum_{i=1}^{4} \binom{5}{i} = 5 + 10 + 10 + 5 = 30.$$

The FCNN results, averaged within each of the 4 categories (one, two, three or four training postures), and the class-wise F1 scores are reported.
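The 30 scenarios can be enumerated directly. The sketch below lists each training set together with its held-out test postures, in the same lexicographic order as the rows of Fig. 6; the variable names are illustrative.

```python
from itertools import combinations
from math import comb

POSTURES = ["b1", "b2", "b3", "b4", "b5"]

# (training postures, test postures) for i = 1..4 training postures
scenarios = [
    (train, tuple(p for p in POSTURES if p not in train))
    for i in range(1, 5)
    for train in combinations(POSTURES, i)
]

counts = {i: comb(5, i) for i in range(1, 5)}
print(counts)          # {1: 5, 2: 10, 3: 10, 4: 5}
print(len(scenarios))  # 30
```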

4 RESULTS AND ANALYSIS

4.1 Aggregate Analysis

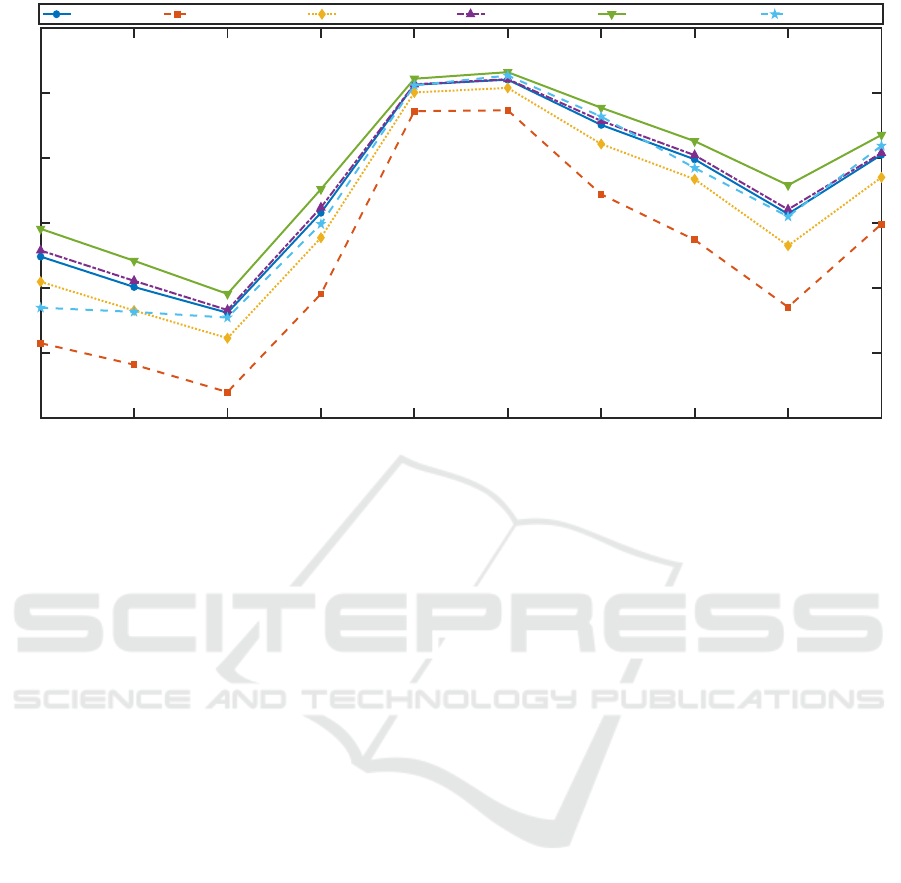

Fig. 4 illustrates the results of the aggregate scheme. Averaged across the various body postures and hand positions, the hybrid CNN Bi-LSTM performs best, achieving an average test accuracy of 76.89%, followed by the FCNN with 72.42%. In the classification analysis across body postures, Fig. 4 shows that Stand is the least challenging posture for the model, with a test accuracy of 83.93% for the hybrid CNN Bi-LSTM, while Sit is the most difficult, with 75.62%. The difference of about 8.3 percentage points between the Stand and Sit postures indicates an impact of body posture on Fine-ADL classification. In the hand position analysis, however, the FCNN is the top-performing classifier, with an average test accuracy of 73.9%. Moreover, reducing the training data to 50% has little impact on the accuracy of most classifiers. The hand positions appear to be less influential in the aggregate analysis, as they produce similar accuracies. In the third condition, where all positions are considered, the FCNN outperforms the other classifiers with an accuracy of 85.2%, followed by the RF with 84.7% and the hybrid CNN Bi-LSTM with 79.4%.

4.2 Subject-Wise Analysis

Fig. 5 illustrates the subject-wise test accuracy of

the hybrid CNN Bi-LSTM across the different pos-

tures and positions. CNN Bi-LSTM outperforms the

FCNN in the body posture analysis, achieving an av-

erage test accuracy of 78.93% compared to FCNN’s

51.46%. In terms of variations across subjects, the

data from subjects 3 and 7 have the highest test ac-

curacies averaged across the different conditions at

89.8% and 88.2% respectively. The lowest average

performance is observed for data from subject 8 at

68.9%. In the body postures analysis, Folded Knees

prove to be the most challenging posture for the clas-

Classification of Fine-ADL Using sEMG Signals Under Different Measurement Conditions

595

C1 C2 C3 C4 C5 C6 C7 C8 C9 C10

Class

b1

b2

b3

b4

b5

b1, b2

b1, b3

b1, b4

b1, b5

b2, b3

b2, b4

b2, b5

b3, b4

b3, b5

b4, b5

b1, b2, b3

b1, b2, b4

b1, b2, b5

b1, b3, b4

b1, b3, b5

b1, b4, b5

b2, b3, b4

b2, b3, b5

b2, b4, b5

b3, b4, b5

b1, b2, b3, b4

b1, b2, b3, b5

b1, b2, b4, b5

b1, b3, b4, b5

b2, b3, b4, b5

P1

P2

All Conditions

Combination

0.588

0.602

0.653

0.601

0.594

0.608

0.612

0.624

0.638

0.666

0.672

0.619

0.685

0.609

0.658

0.659

0.689

0.666

0.657

0.767

0.637

0.659

0.644

0.751

0.637

0.826

0.609

0.633

0.643

0.659

0.588

0.658

0.615

0.626

0.619

0.587

0.719

0.598

0.631

0.595

0.668

0.606

0.752

0.618

0.599

0.606

0.714

0.661

0.744

0.606

0.598

0.587

0.625

0.687

0.688

0.729

0.649

0.667

0.669

0.7

0.707

0.704

0.728

0.778

0.698

0.732

0.677

0.73

0.672

0.752

0.756

0.719

0.774

0.706

0.791

0.703

0.788

0.698

0.7

0.843

0.88

0.879

0.845

0.864

0.895

0.881

0.919

0.925

0.892

0.916

0.892

0.884

0.884

0.907

0.912

0.942

0.926

0.858

0.938

0.905

0.914

0.917

0.92

0.907

0.91

0.966

0.914

0.884

0.92

0.927

0.913

0.911

0.949

0.854

0.878

0.875

0.852

0.909

0.907

0.894

0.894

0.924

0.907

0.932

0.914

0.878

0.905

0.926

0.915

0.948

0.919

0.884

0.917

0.936

0.925

0.909

0.947

0.917

0.947

0.905

0.964

0.914

0.932

0.935

0.92

0.954

0.72

0.795

0.737

0.69

0.78

0.827

0.817

0.804

0.803

0.865

0.837

0.82

0.768

0.844

0.833

0.881

0.862

0.791

0.845

0.858

0.831

0.896

0.881

0.851

0.879

0.927

0.875

0.807

0.861

0.918

0.838

0.891

0.902

0.688

0.697

0.635

0.623

0.73

0.786

0.732

0.812

0.754

0.747

0.76

0.762

0.757

0.763

0.806

0.799

0.847

0.778

0.836

0.747

0.835

0.793

0.771

0.813

0.828

0.884

0.742

0.834

0.834

0.838

0.768

0.802

0.914

0.59

0.599

0.623

0.651

0.724

0.702

0.657

0.663

0.667

0.645

0.715

0.655

0.771

0.742

0.605

0.757

0.772

0.708

0.695

0.733

0.683

0.745

0.851

0.751

0.657

0.789

0.745

0.685

0.736

0.859

0.728

0.708

0.653

0.668

0.736

0.773

0.808

0.802

0.775

0.788

0.761

0.746

0.713

0.793

0.748

0.847

0.815

0.753

0.846

0.861

0.793

0.784

0.824

0.764

0.795

0.895

0.842

0.758

0.869

0.815

0.814

0.824

0.912

0.511

0.434

0.527

0.541

0.564

0.58

0.503

0.527

0.507

0.388

0.458

0.532

0.555

0.551

0.54

0.566

0.572

0.483

0.572

0.577

0.558

0.562

0.522

0.472

0.394

0.47

0.393

0.472

0.526

0.524

0.541

0.47

0.563

0.506

0.529

0.508

0.53

0.535

0.507

0.485

0.525

0.584

0.586

0.585

0.572

0.531

0.523

0.528

0.544

0.566

0.54

0.578

0.534

0.51

0.575

0.4

0.5

0.6

0.7

0.8

0.9

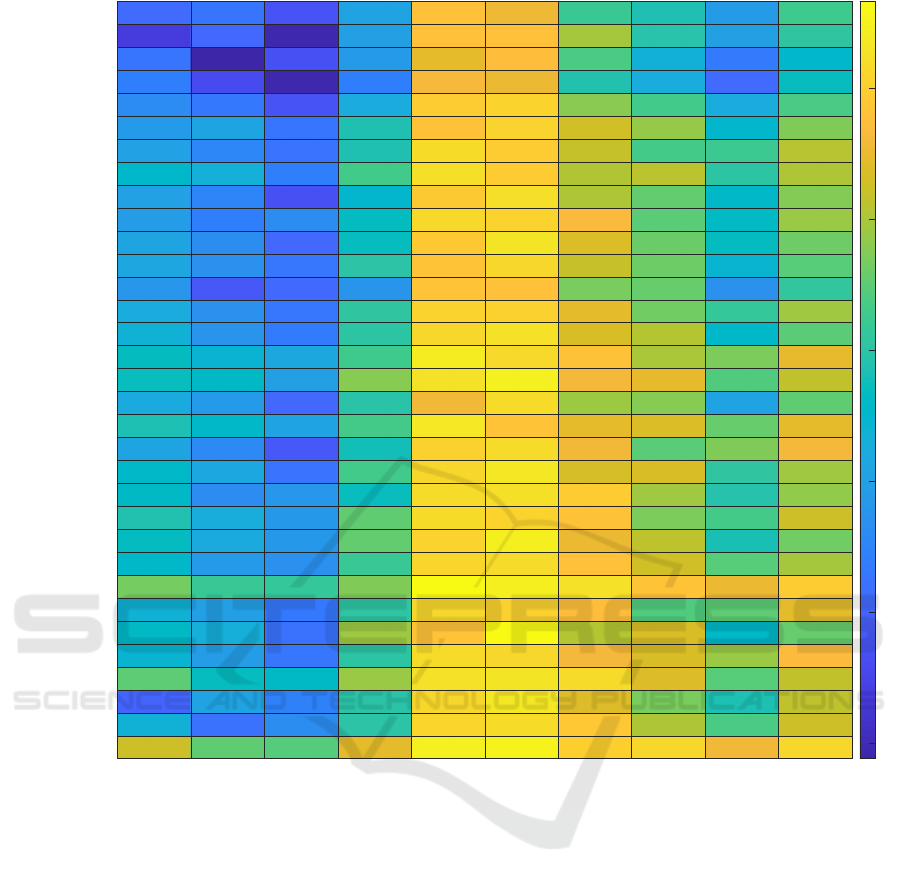

Figure 6: Class-wise F1 score averaged across the test conditions as function of combinations of training conditions.

sifier, with an average test accuracy of 72.39%. In

contrast, Stand posture yields the highest accuracy of

83.78%, exhibiting an 11.39% difference. The re-

sults show that the body postures have a significant

influence among the subject level results. On aver-

age, there is a 23% gap in accuracy between the high-

est and lowest performing postures. This distinction

is particularly pronounced in subjects 9 and 6, with

a substantial 42.42% and 48.48% disparity between

Folded Knees and Stand, respectively. Interestingly,

while Stand posture boasts the highest average accu-

racy, Folded Knees and Folded Legs emerge as the top

performers in three subjects each, while Stand pre-

vails in only two subjects. These results suggest that

the impact of body postures is notably intricate on a

subject-specific basis.

In the hand positions analysis, as shown in Fig. 5, the hybrid CNN Bi-LSTM has an average accuracy (across subjects) close to 76% for both hand positions, with standard deviations across subjects of 7.55% and 6.3%, respectively. Subjects 3, 8, 7, and 6 exhibit considerable discrepancies in accuracy between the two hand positions, highlighting distinct subject-specific trends. Overall, the performance closely aligns with that of the aggregate analysis. Finally, in the all positions case, the hybrid CNN Bi-LSTM achieves an average test accuracy of 86.99%, with a minimum of 78.39% and a maximum of 94.44%.

4.3 Body Postures Combinations Analysis

Figs. 6 and 7 illustrate class-wise F1 scores when different combinations of body postures are used for training. Fig. 6 shows the test F1 scores for each of the classes (columns) when a specific combination of conditions is used for training (rows). Fig. 7 shows the test F1 scores further averaged across the $\binom{5}{i}$ combinations for each $i$, indicating the average performance when any $i$ postures are used for training. These figures show that the F1 scores increase as the number of body postures used for training increases. On average, using one posture yields an F1 score of 64.64%, while using two, three, and four postures yields F1 scores of 72.11%, 75.87%, and 78.3%, respectively. The combination {b1, b2, b3, b4}, which leaves only b5 for testing, exhibits the highest F1 score, reaching 84.5%. It is worth noting that b5 proves to be the easiest posture to learn, even without explicit training on the same condition. Interestingly, as the number of postures used for training increases, the gain in F1 score diminishes: the successive gains are 7.47, 3.76, and 2.43 percentage points. The class-wise analysis reinforces the observation that C3 has the lowest F1 score, while C5 and C6 consistently demonstrate the highest scores across all combinations of body postures.

[Figure 7 plot: class-wise test F1 score (y-axis, 0.4 to 1) for classes 1 to 10, with curves for Overall Average, Single, Double, Triple and Quadruple body posture training sets, and Hand Postures.]

Figure 7: Class-wise test F1 score averaged across a group of training conditions when a given number of conditions is used for training.
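The class-wise F1 scores and their grouping by the number of training postures can be sketched as follows. The text does not state how F1 is computed, so the standard per-class definition F1 = 2TP / (2TP + FP + FN) is assumed, and `classwise_f1` and `average_by_group_size` are hypothetical names. The last lines recompute the diminishing gains from the averages reported in this section.

```python
import numpy as np

def classwise_f1(y_true, y_pred, n_classes=10):
    """Per-class F1 = 2TP / (2TP + FP + FN); 0 if the class never occurs."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    f1 = np.zeros(n_classes)
    for c in range(n_classes):
        tp = np.sum((y_true == c) & (y_pred == c))
        fp = np.sum((y_true != c) & (y_pred == c))
        fn = np.sum((y_true == c) & (y_pred != c))
        denom = 2 * tp + fp + fn
        f1[c] = 2 * tp / denom if denom else 0.0
    return f1

def average_by_group_size(f1_by_scenario):
    """Average class-wise F1 rows over scenarios with the same number
    of training postures (as in the curves of Fig. 7)."""
    groups = {}
    for train_set, f1 in f1_by_scenario.items():
        groups.setdefault(len(train_set), []).append(f1)
    return {i: np.mean(rows, axis=0) for i, rows in sorted(groups.items())}

# Reported average F1 (%) for 1, 2, 3 and 4 training postures
reported = [64.64, 72.11, 75.87, 78.30]
gains = np.round(np.diff(reported), 2)
print(gains)  # [7.47 3.76 2.43]
```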

Moreover, the average for hand postures falls between that of the single-posture combinations and those of all other combinations. This suggests that, while hand postures do contribute to accuracy, they are not as influential as combined body postures. Furthermore, activities C2 (Zoom In) and C3 (Zoom Out), being very similar in nature, may cause substantial confusion for the model. Surprisingly, a dip in F1 score is observed for C9 (Writing), which could be attributed to similarities with other activities that lead to misclassification.

4.4 Discussion

The hybrid CNN Bi-LSTM model consistently outperforms the other models in both the subject-wise scenario and the body posture analysis within the aggregate scenario. Moreover, the FCNN demonstrates superior classification ability specifically in the body posture combinations analysis. Consistent trends are observed in both the aggregate and subject-wise analyses: the Stand posture is always the easiest to classify on average. Additionally, test accuracy improves noticeably as the amount of training data increases, as is evident from comparing the hand position analysis, which uses 50% of the data for training, with the body posture analysis, which uses 80%. This incremental trend is also apparent in the analysis of body posture combinations. The consistent shape of the curves in Fig. 7 indicates that the ranking of class performance remains stable across the various combinations. The subject-wise analysis further emphasizes the impact of measurement conditions and the subject-specific nature of Fine-ADL. Overall, these findings underscore the impact of measurement conditions on Fine-ADL classification.

5 CONCLUSION AND FUTURE WORK

This paper presents a new sEMG dataset of 10 Fine-ADL performed under various measurement conditions. The dataset includes data captured in different body postures and hand positions. The dataset is analyzed from two perspectives, aggregate and subject-wise, each considering three cases (body postures, hand positions, and all positions), along with a class-wise analysis of the impact of body postures. Among the classifiers examined, the hybrid CNN Bi-LSTM demonstrates the best performance, successfully recognizing Fine-ADL even under diverse measurement conditions. The most challenging body posture for the classifier is Folded Knees, while the least challenging is the Stand posture. Interestingly, the two hand positions considered yield similar accuracies. Overall, the current outcomes highlight the potential of the proposed framework for real-time Fine-ADL recognition and demonstrate the impact of various measurement conditions on Fine-ADL. In terms of future work, the model could be further enhanced through fine-tuning. Additionally, the effect on test accuracy of the amount of training data from a specific measurement condition needs to be investigated. Furthermore, the feature selection analysis requires further improvement, and the generalization ability of the model to new subjects needs to be explored.

REFERENCES

Castellini, C., Fiorilla, A. E., and Sandini, G. (2009). Multi-subject/daily-life activity EMG-based control of mechanical hands. Journal of NeuroEngineering and Rehabilitation, 6(1):1–11.

Chen, M., Dutt, A. S., and Nair, R. (2022). Systematic review of reviews on activities of daily living measures for children with developmental disabilities. Heliyon, 8(6):e09698.

Criswell, E. (2010). Cram's Introduction to Surface Electromyography. Jones & Bartlett Publishers, Burlington.

Dai, S., Wang, Q., and Meng, F. (2021). A telehealth framework for dementia care: an ADLs patterns recognition model for patients based on NILM. In 2021 International Joint Conference on Neural Networks (IJCNN), pages 1–8.

Faria, A. L., Pinho, M. S., and Bermúdez i Badia, S. (2020). A comparison of two personalization and adaptive cognitive rehabilitation approaches: a randomized controlled trial with chronic stroke patients. Journal of NeuroEngineering and Rehabilitation, 17(1):78.

Fauth, E. B., Schaefer, S. Y., Zarit, S. H., Ernsth-Bravell, M., and Johansson, B. (2017). Associations between fine motor performance in activities of daily living and cognitive ability in a nondemented sample of older adults: Implications for geriatric physical rehabilitation. Journal of Aging and Health, 29(7):1144–1159.

Fougner, A., Scheme, E., Chan, A. D. C., Englehart, K., and Stavdahl, Ø. (2011). Resolving the limb position effect in myoelectric pattern recognition. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 19(6):644–651.

He, C., Xiong, C.-H., Chen, Z.-J., Fan, W., Huang, X.-L., and Fu, C. (2021). Preliminary assessment of a postural synergy-based exoskeleton for post-stroke upper limb rehabilitation. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 29:1795–1805.

Karnam, N. K., Dubey, S. R., Turlapaty, A. C., and Gokaraju, B. (2022). EMGHandNet: A hybrid CNN and Bi-LSTM architecture for hand activity classification using surface EMG signals. Biocybernetics and Biomedical Engineering, 42(1):325–340.

Karnam, N. K., Turlapaty, A. C., Dubey, S. R., and Gokaraju, B. (2021). Classification of sEMG signals of hand gestures based on energy features. Biomedical Signal Processing and Control, 70:102948.

Katz, S. (1983). Assessing self-maintenance: activities of daily living, mobility, and instrumental activities of daily living. J Am Geriatr Soc, 31(12):721–727.

Park, J., Zahabi, M., Kaber, D., Ruiz, J., and Huang, H. (2020). Evaluation of activities of daily living testbeds for assessing prosthetic device usability. In 2020 IEEE International Conference on Human-Machine Systems (ICHMS), pages 1–4.

Rosenburg, R. and Seidel, H. (1989). Electromyography of lumbar erector spinae muscles–influence of posture, interelectrode distance, strength, and fatigue. Eur J Appl Physiol Occup Physiol, 59(1-2):104–114.

Sandberg, M., Kristensson, J., Midlöv, P., Fagerström, C., and Jakobsson, U. (2012). Prevalence and predictors of healthcare utilization among older people (60+): Focusing on ADL dependency and risk of depression. Archives of Gerontology and Geriatrics, 54(3):e349–e363.

Sapsanis, C., Georgoulas, G., and Tzes, A. (2013). EMG based classification of basic hand movements based on time-frequency features. In 21st Mediterranean Conference on Control and Automation, pages 716–722.

Song, M. and Kim, J. (2018). An ambulatory gait monitoring system with activity classification and gait parameter calculation based on a single foot inertial sensor. IEEE Transactions on Biomedical Engineering, 65(4):885–893.

Toledo-Pérez, D. C., Rodríguez-Reséndiz, J., Gómez-Loenzo, R. A., and Jauregui-Correa, J. (2019). Support vector machine-based EMG signal classification techniques: A review. Applied Sciences, 9(20):4402. doi: 10.3390/app9204402.

Williams, H., Shehata, A. W., Dawson, M., Scheme, E., Hebert, J., and Pilarski, P. (2022). Recurrent convolutional neural networks as an approach to Position-Aware myoelectric prosthesis control. IEEE Trans Biomed Eng, 69(7):2243–2255.

Yang, D., Yang, W., Huang, Q., and Liu, H. (2015). Classification of multiple finger motions during dynamic upper limb movements. IEEE Journal of Biomedical and Health Informatics, 21(1):134–141.
