Increasing User Engagement with a Tracking App

Through Data Visualizations

Daniela Nickmann and Victor Adriel de Jesus Oliveira

a

St. Pölten University of Applied Sciences, St. Pölten, Austria

Keywords:

Infovis, Engagement, Mobile Applications, Food Waste.

Abstract:

According to the United Nations, 17 percent of global food production is wasted, causing economic losses

and significant environmental impact. Digital solutions like food storage management apps can raise aware-

ness and combat this issue. However, their effectiveness relies on consistent user engagement. Therefore, this

paper proposes and evaluates data visualization designs to enhance user engagement in mobile applications

for tracking food waste. The study involves three steps: discussing the domain situation supported by relevant

literature, outlining the process of creating two sets of four data visualization designs and conducting quan-

titative user surveys to validate the designs. The first experiment assesses user experience, while the second

determines user engagement. Results indicate a preference for design approach two (Chart Set B), which also

provides more accuracy and higher user engagement when the design aligns with users’ sustainability inter-

ests. These findings emphasize the potential of engaging data visualizations to curb food waste and contribute

to a more sustainable future.

1 INTRODUCTION

Self-tracking tools empower individuals or groups

to monitor biological, physical, behavioral, or envi-

ronmental metrics. The data obtained through self-

tracking efforts can be summarized as the quantified

self (QS) (Swan, 2013). When designing effective

and accessible user interfaces for tracking applica-

tions, the use of data visualization techniques is es-

sential. They enable users to see and understand

trends, outliers, and patterns in data (Shneiderman

et al., 2016). Furthermore, users can quickly gain in-

sights into their behavior, as well as identify and influ-

ence their behavioral trends and patterns (Peters et al.,

2018).

Bridging the domains of QS, data visualization,

and user-centered design, we outline the development

of data visualization designs for an application that in-

tends to help users identify and improve their house-

holds’ food waste trends. The objective is to create

data visualizations that are not only informative but

also engaging. Peters et al. define engagement in a

human-computer interaction (HCI) context as atten-

tional and emotional involvement (Peters et al., 2009).

The model of engagement proposed by O’Brien and Toms highlights that emotions are closely tied to engagement (O’Brien and Toms, 2008). Finding appropriate characteristics to measure and quantify the level of engagement poses different challenges. Concepts such as PENS (Peters et al., 2018) and contributions by Hung and Parsons (Hung and Parsons, 2017), Edwards (Edwards, 2016) and Kennedy et al. (Kennedy et al., 2016) emphasize that understanding user engagement requires looking beyond technical metrics to capture the complexity of the user experience.

a https://orcid.org/0000-0002-8477-0453

Increased engagement can help motivate users to

maintain their attention and interest in the application

and update it more consistently. This provides users

with more data to explore, a more detailed representa-

tion of their QS, and subsequently more insights into

their behavior, which could potentially lead to positive behavioral changes. The two experiments conducted aim, first, to assess user experience using the Single Ease Question (SEQ) (Rotolo, 2023) and the User Experience Questionnaire (UEQ) (Laugwitz et al., 2008) and, second, to measure engagement with the different visualization designs using the VisEngage questionnaire by Hung and Parsons (Hung and Parsons, 2017).

Nickmann, D. and Oliveira, V.

Increasing User Engagement with a Tracking App Through Data Visualizations.

DOI: 10.5220/0012322800003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 1: GRAPP, HUCAPP

and IVAPP, pages 637-644

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


2 DATA VISUALIZATION

Munzner introduces the nested model for visualiza-

tion design and evaluation. The domain situation level

aims to define the target users, while the abstraction

level helps identify what data is shown and why it is

relevant for the user. The goal on the idiom level is to

define how the data is shown, and the algorithm level

is concerned with effective computation of visual en-

coding and interaction design (Munzner, 2009). This

model inspired the design process of the visualiza-

tions for the tracking application. The following sub-

sections represent levels 1 through 3 of the nested

model, focusing on the visual encoding.

2.1 Domain Situation

A study conducted in 2012 by Stenmarck et al. shows

that 20% of the total food produced in the EU in 2011

was wasted (Stenmarck et al., 2016). It also defines

five different sectors in which food waste occurs:

primary production, processing, wholesale and retail,

food service, and households. With about 46.5%,

the households sector contributes the most to food

waste. This behavior not only results in economic

losses but has a significant impact on the environ-

ment and causes loss of resources such as land, wa-

ter, and energy (Institute of Technology Assessment

(ITA), 2015).

These issues inspired a group of participants in an

interdisciplinary project semester program to find a

solution to help people with their food management

(St. Pölten University of Applied Sciences, 2023).

After going through an iterative design thinking pro-

cess, the project team proposed an app that guides

the user through creating a shopping list, the grocery

shopping process, and the use (or waste) of the pur-

chased products: the What a Waste app. The target

group of the application was defined as people be-

tween the ages of 25 and 50 living in Austrian house-

holds with at least one other person. The application

was tested and adapted by conducting user interviews

and field studies using low-fidelity mockups and, in

later stages, a high-fidelity prototype.

The What a Waste application’s interface consists

of three main tabs. The shopping list view lets the

user create a shopping list and is intended to help

the user before and during their purchasing process.

The storage tab provides the user an overview of their

storage’s current inventory with additional informa-

tion about the product such as expiry date and status

(full, half, empty) along with the option to add it to

the shopping list. Tracking whether an item was used

or wasted is done by a popup asking the user how

they used each item they remove from the storage

list. Lastly, the household view shows an overview

of the household’s statistics and enables the user to

view and edit a household member’s profile, which

contains information about the user’s diet, allergies,

and preferences. Heightened awareness of the user’s food-related behavior ideally results in more conscious shopping habits, better meal planning, and ultimately less food waste. The overall goal is that the user becomes more aware of their habits and reduces their use of resources, which positively impacts the environment.

2.2 Visualization Requirements

Gaining a deeper understanding of the user’s possi-

ble goals and tasks when using the application was

crucial for defining the visualizations’ requirements.

Finding out more about people’s motivations to re-

duce their food waste (Giordano et al., 2019), under-

standing more about the action-cognition-perception-

loop (Peters et al., 2009), and matching the different

kinds of engagement (Doherty and Doherty, 2019) to

it provided a good base understanding for defining the

following requirements:

1. The visualization interface should be easy to use,

so the everyday user can focus on the data rather

than the interaction itself.

2. The visualization being mobile friendly is crucial

since the application is designed for mobile de-

vices. A seamless interaction without scaling or

readability issues enhances overall usability, re-

duces possible user frustration, and supports the

previous requirement.

3. Hierarchy: Showing how data or objects are

ranked and ordered together in a system can help

the user identify certain counterproductive behav-

iors and patterns.

4. Comparison: Showing the differences and simi-

larities between values can provide the opportu-

nity to gain insights into patterns and trends.

5. Data over time: Showing data over a time period can help identify trends or changes over time (Ribecca, 2023).

2.3 Visual Encoding

To select appropriate visualization methods for the

application, gaining an overview of the different vi-

sualization methods and their features, advantages,

and disadvantages was important. 42 visualization

methods from different sources (Ribecca, 2023; Zoss,

IVAPP 2024 - 15th International Conference on Information Visualization Theory and Applications

638

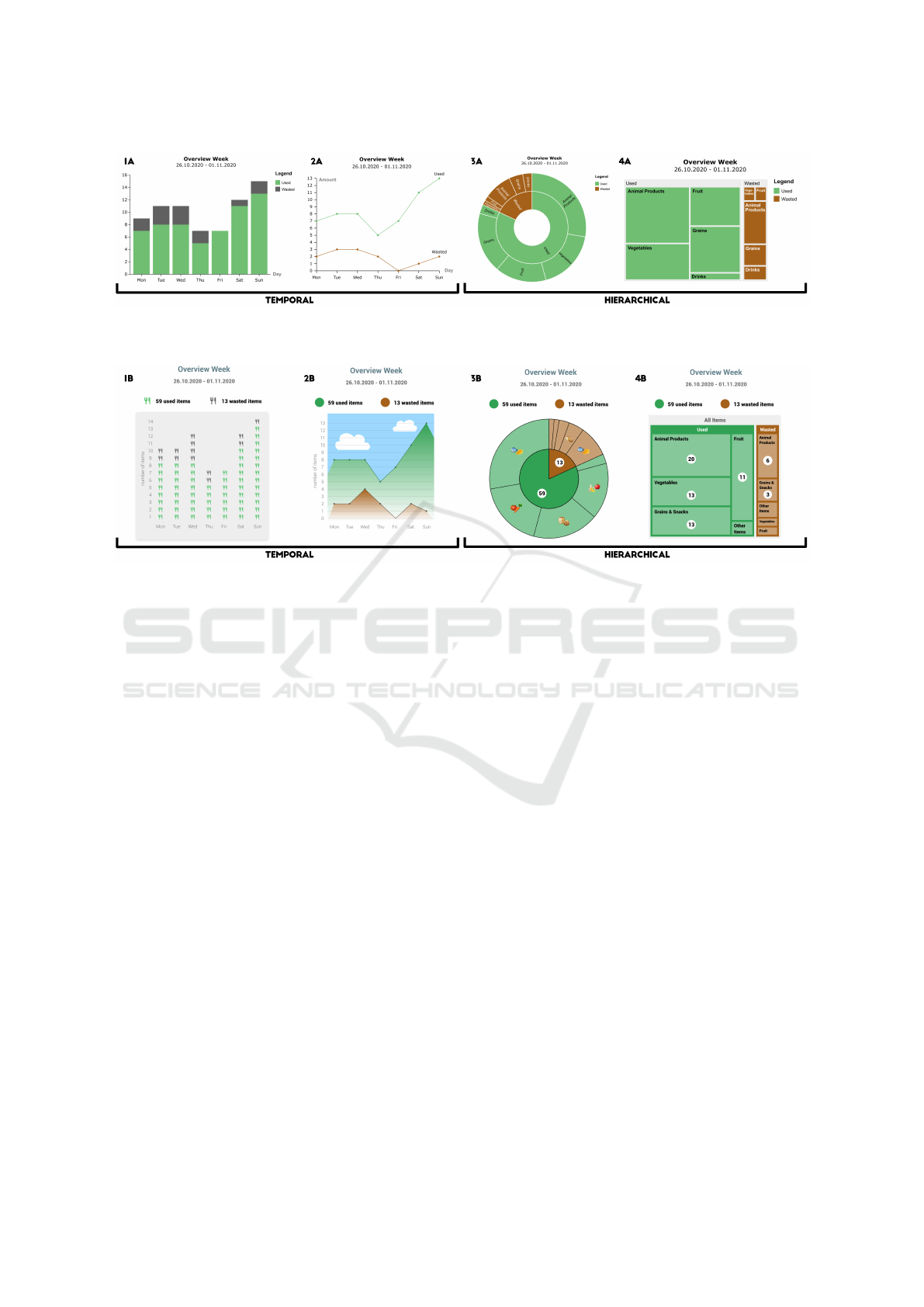

Figure 1: Visualization Group A. The charts in A include two temporal representations encoded as (1A) a bar chart and (2A)

a line chart, as well as two hierarchical representations encoded as (3A) a sunburst diagram and (4A) a treemap.

Figure 2: Visualization Group B. The charts in B also include two temporal representations, encoded as (1B) a pictogram

chart and (2B) a stylized area chart, as well as two hierarchical representations encoded as (3B) a stylized sunburst diagram

and (4B) a stylized treemap.

2019; Datalabs, 2014; Valcheva, 2020; Munzner and

Maguire, 2015) were gathered and analyzed by iden-

tifying which visualization method meets which of

the previously defined requirements and which tech-

niques were appropriate for the application’s data set.

The following four methods met four out

of five requirements: histograms, line graphs,

stacked/grouped area graphs, and treemaps. The fol-

lowing eight methods met three out of five require-

ments: area graphs, bar charts, multi-set bar charts,

pictogram charts, pie charts, radial bar charts, stacked

bar graphs, and sunburst diagrams.

After drafting initial concepts, we decided to cre-

ate two temporal and two hierarchical visualization

designs to convey consumption and waste over time

and (sub)categories of consumed and wasted prod-

ucts. For the following experiments, two sets of

four visualizations each were designed. The first set

(Charts A) includes conventional data representations

of each of the chosen types. The second set (Charts

B) proposes alternatives to improve the charts from

the first set, providing icons and metaphors that could

foster engagement. The charts are designed as fol-

lows:

1. Bar and Pictogram Charts. Both charts visu-

alize data that represents a state in time, which

makes them temporal (Esri, 2023). Chart 1A is

a bar chart that is used to show discrete numer-

ical comparisons across categories. Chart 1B is

an Isotype (Doris, 2020) or pictogram chart. The

use of icons gives a more engaging overview of

small sets of discrete data, where an icon can rep-

resent one or any number of units. This tech-

nique makes comparison of different categories

easy and also overcomes barriers created by lan-

guage, culture, or education. The two charts are

not directly comparable; however, we agreed that

a bar chart would be the best option to compare

against the pictogram chart.

2. Line and Area Charts. Charts 2A and 2B are

also temporal visualizations, and both use the line

chart visualization method. Chart 2A is a sim-

ple line chart visualization that displays quantita-

tive values over a continuous interval or time pe-

riod. A line graph is most frequently used to show

trends and analyze how the data has changed over

time. Chart 2B uses the grouped area graph visu-

alization method. It is a version of the line graph

technique, with the area below the line filled in

with a certain color or texture and the graphs start-

ing from the same zero axis.

3. Sunburst Diagram. Charts 3A and 3B both use

the sunburst diagram visualization method, which

shows hierarchy through a series of rings that are


sliced for each category node. Each ring corre-

sponds to a level in the hierarchy, with the central

circle representing the root node and the hierarchy

moving outwards from it.

4. Treemap. Charts 4A and 4B are both treemaps,

which are used to visualize hierarchical structure

while also displaying quantities for each category

via area size. Each category is assigned a rectan-

gle area, with the subcategory rectangles nested

inside (Ribecca, 2023).
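Both hierarchical idioms rest on the same aggregation: the size of a node (ring segment in the sunburst diagram, rectangle area in the treemap) is proportional to the sum of the quantities beneath it. The following Python sketch illustrates that aggregation under stated assumptions. The nested-dictionary data model, the category names, and the counts are hypothetical and are not taken from the What a Waste app.

```python
# Hypothetical weekly waste data (item counts); names and values are invented.
hierarchy = {
    "name": "wasted items",
    "children": [
        {"name": "produce", "children": [
            {"name": "fruit", "value": 3},
            {"name": "vegetables", "value": 5},
        ]},
        {"name": "dairy", "children": [
            {"name": "milk", "value": 2},
        ]},
    ],
}


def total(node):
    """Sum the leaf values below a node (a leaf carries its own 'value')."""
    if "children" not in node:
        return node["value"]
    return sum(total(child) for child in node["children"])


def shares(node, parent_total=None, depth=0, out=None):
    """Collect (depth, name, total, share of parent) for every node."""
    if out is None:
        out = []
    node_total = total(node)
    share = 1.0 if parent_total is None else node_total / parent_total
    out.append((depth, node["name"], node_total, share))
    for child in node.get("children", []):
        shares(child, node_total, depth + 1, out)
    return out


rows = shares(hierarchy)
for depth, name, node_total, share in rows:
    print("  " * depth + f"{name}: {node_total} ({share:.0%} of parent)")
```

The depth of a row corresponds to the ring index in a sunburst diagram or the nesting level in a treemap, and the share determines how much of the parent's angle or area the node receives.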

To create the first set of data visualizations (Charts

A) the software RAWGraphs (Mauri et al., 2017) was

used. More detailed changes, such as the placement of labels and colors, were made in an SVG editor.

The second set of visualizations (Charts B) was

created using an iterative design process. Keeping

Experiment II in mind, interactive/clickable mockups

with illustrated gamification elements (Trivedi, 2021)

and emoji (Bai et al., 2019) were created using Adobe

XD. There were three versions in total and each ver-

sion was tested with two experts from the fields of

HCI, data visualization, and usability. With each ver-

sion, the feedback from the expert interviews—which

concerned color encoding, legends, categorization,

readability, and labeling—was implemented.

3 EXPERIMENT I

The study’s goal was to test the user experience and

performance with two different types of visualization

approaches (Charts A and Charts B) and to identify

users’ preferences for one of the design types. Only

static visualizations of the weekly overview of used

and wasted items were used for this study.

3.1 User Study Setup

To compare the different visualization approaches, a

survey was created. First, the participants’ demo-

graphics were collected, followed by questions about

their households, food waste habits, and digital lit-

eracy. The order of the visualization sets was coun-

terbalanced (Lazar et al., 2017). Each visualization

was followed by a question about the visualized data

and the Single Ease Question (SEQ) (Rotolo, 2023)

about the task using a Likert scale from 1 (very diffi-

cult) to 7 (very easy). Each set of charts was followed

by the User Experience Questionnaire (UEQ) (Laug-

witz et al., 2008) to assess the user experience of the

data visualizations. At the end, the participants se-

lected their preferred visualization for each type and

one overall favorite.
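Counterbalancing the order of the two chart sets can be sketched as a simple rotation over the possible orders. The paper does not state how participants were assigned to an order, so the alternating scheme and the function name `counterbalance` below are assumptions for illustration only.

```python
from itertools import cycle


def counterbalance(participant_ids, orders=(("A", "B"), ("B", "A"))):
    """Assign presentation orders in rotation so each order is used equally often."""
    return {pid: order for pid, order in zip(participant_ids, cycle(orders))}


# 24 participant IDs, matching the sample size of Experiment I.
assignment = counterbalance(range(1, 25))
for pid in (1, 2, 3):
    print(pid, " then ".join(assignment[pid]))
```

With an even number of participants, half see Charts A first and half see Charts B first, which keeps learning or fatigue effects from favoring one set.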

3.2 Results and Analysis

Success rates for each question asked about the data

visualized in the charts were calculated, as well as the

mean (M) and standard deviation (SD) for each task’s

SEQ. To calculate the results of the UEQ, the UEQ

data analysis tools were used. A statistical analysis

using R was conducted to provide further insights into

the obtained data of the experiment. An alpha level of

.05 was used for all statistical tests.

3.2.1 Test Subjects

24 people took part in the study. The participants were aged between 25 and 58 years (M = 30.29). 10 of them identified as male, 11 as female, and the remaining 3 preferred not to indicate their gender. 21 lived in Austria and 3 did not. 9 lived alone and 15 lived in a shared household with between 1 and 4 other adults and/or children. When asked how much attention they pay to their household’s amount of food waste on a scale of 1 (no attention) to 7 (a lot of attention), the participants indicated a mean of 5.41 (SD = 1.38).

3.2.2 Success Rates and Easiness

The success rates and the SEQ results for Charts A

and Charts B are summarized in Table 1.

Table 1: Success rate (SR), mean (M) and standard deviation (SD) of SEQ.

| Chart | Charts A: SR | Charts A: SEQ M (SD) | Charts B: SR | Charts B: SEQ M (SD) |
|---|---|---|---|---|
| 1 | 91.67% | 3.87 (1.3) | 95.83% | 6.29 (1.1) |
| 2 | 79.17% | 4.75 (1.5) | 87.50% | 6.08 (1.3) |
| 3 | 100.00% | 4.96 (1.5) | 100.00% | 5.79 (1.3) |
| 4 | 100.00% | 6.46 (0.6) | 91.67% | 6.79 (0.5) |
| Overall SR | 92.70% | | 93.75% | |

The results show that success rates for Charts A were generally very high, with Chart 2A (79.17%) being the lowest. The SEQ results show

that answering questions for Charts 1A and 2A was

harder compared to questions for Charts 3A and 4A.

Success rates for Charts B were also high, and the

SEQ results show that answering questions for Charts

B was fairly easy (see Figure 3).
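The success rates in Table 1 can be reproduced with simple arithmetic. The sketch below assumes that all 24 participants answered every question and infers the number of correct answers per chart from the reported percentages (for example, 22 of 24 gives 91.67%). These counts are an assumption and are not stated in the paper.

```python
N = 24  # participants in Experiment I

# Correct answers per chart (1 to 4), inferred from the reported percentages.
# The counts themselves are an assumption, not reported data.
correct = {
    "A": [22, 19, 24, 24],
    "B": [23, 21, 24, 22],
}


def success_rates(counts, n=N):
    """Success rate per chart in percent."""
    return [100 * c / n for c in counts]


def overall_rate(counts, n=N):
    """Share of correct answers over all charts of one set, in percent."""
    return 100 * sum(counts) / (n * len(counts))


for chart_set, counts in correct.items():
    per_chart = ", ".join(f"{rate:.2f}%" for rate in success_rates(counts))
    print(f"Charts {chart_set}: {per_chart}; overall {overall_rate(counts):.2f}%")
```

Under these assumptions the overall rate for Charts A is 89 of 96 correct answers, which agrees with the reported 92.70% up to rounding, and the overall rate for Charts B is 90 of 96, or 93.75%.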

Spearman’s rank correlation was computed to as-

sess the relationship between success rates for ques-

tions about Charts B and the participant’s level of

attention paid to food waste. There was a positive

correlation between the two variables, r(22) = .51,

p = .011. This suggests that the higher the level of at-

tention paid to food waste, the better the success rates

when answering questions about the visualized data

in Charts B.
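Spearman's rank correlation is the Pearson correlation of the ranked values, with tied values sharing the mean of their ranks. The study used R for this analysis. The dependency-free Python sketch below only illustrates the computation, and the two input lists are hypothetical because the raw survey responses are not published in the paper.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j map to ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: attention rating (1 to 7) and number of correct answers.
attention = [3, 5, 6, 7, 4, 6]
correct_answers = [2, 3, 3, 4, 3, 4]
print(f"rho = {spearman(attention, correct_answers):.2f}")
```

The reported degrees of freedom, 22, correspond to n − 2 for the 24 participants.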


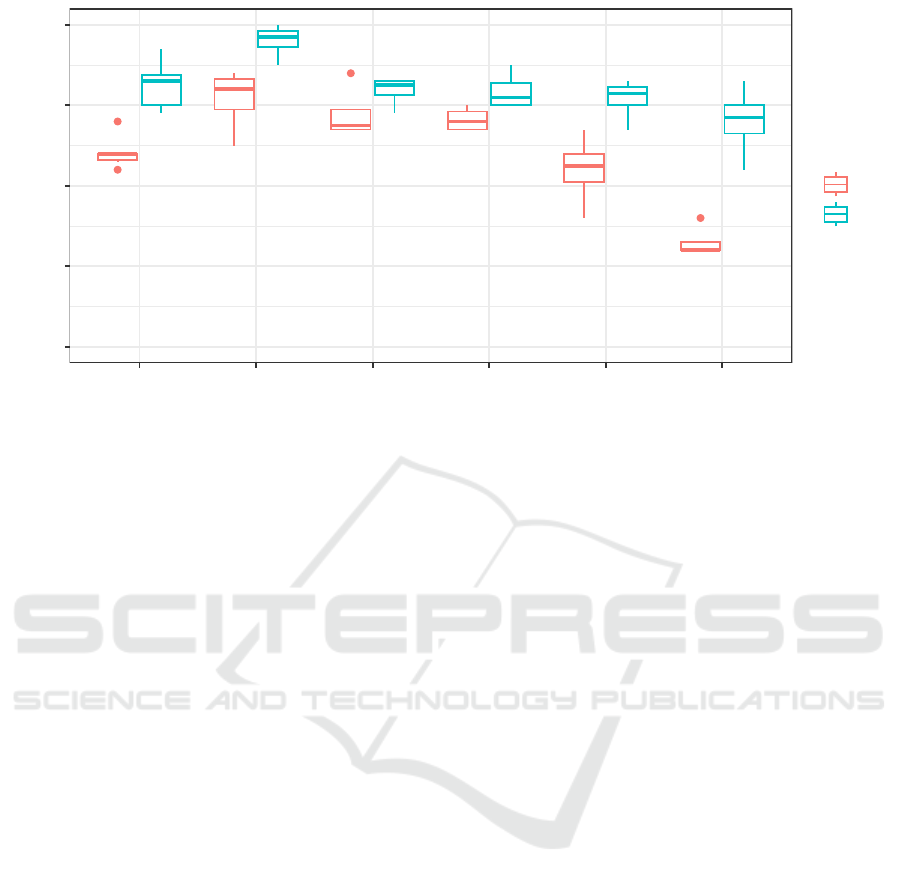

Figure 3: Overall SEQ results and results of each SEQ.

3.2.3 User Experience

Table 2 shows the UEQ results divided into 6 different dimensions, with their corresponding mean (M) and variance (V). Values between −0.8 and 0.8 represent a more or less neutral evaluation of the corresponding scale, values > 0.8 represent a positive evaluation, and values < −0.8 represent a negative evaluation (Laugwitz et al., 2008) (see Figure 4).

Table 2: Mean (M) and variance (V) for each dimension of the UEQ.

| Scale | Charts A: M | Charts A: V | Charts B: M | Charts B: V |
|---|---|---|---|---|
| Attractiveness | 0.410 | 1.72 | 1.243 | 1.40 |
| Perspicuity | 1.073 | 1.17 | 1.823 | 1.26 |
| Efficiency | 0.875 | 1.46 | 1.167 | 1.66 |
| Dependability | 0.823 | 0.74 | 1.177 | 1.25 |
| Stimulation | 0.198 | 2.12 | 1.063 | 1.53 |
| Novelty | -0.698 | 2.11 | 0.823 | 1.92 |

The results for the UEQ for Charts A show a pos-

itive evaluation of the perspicuity, efficiency, and de-

pendability of the charts. Comparing the data to a

benchmark data set, however, shows that the results

for all scales are either below average (50% of results

better, 25% worse) or bad (in the range of 25% worst

results). Charts B, on the other hand, yield a positive

evaluation for all scales, with novelty being the low-

est and perspicuity the highest. Comparing the data

to a benchmark data set shows that the results for all

scales are either above average (25% of results better,

50% worse) or good (10% of results better, 75% of

results worse).
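The interpretation rule for UEQ scale means (above 0.8 positive, below −0.8 negative, otherwise neutral) can be applied mechanically to the means in Table 2. The sketch below does only that. It does not reproduce the benchmark comparison, which relies on the benchmark data set shipped with the UEQ data analysis tools, and the function name `evaluate` is chosen here for illustration.

```python
# Scale means from Table 2 as (Charts A, Charts B).
UEQ_MEANS = {
    "Attractiveness": (0.410, 1.243),
    "Perspicuity": (1.073, 1.823),
    "Efficiency": (0.875, 1.167),
    "Dependability": (0.823, 1.177),
    "Stimulation": (0.198, 1.063),
    "Novelty": (-0.698, 0.823),
}


def evaluate(mean, threshold=0.8):
    """Classify a UEQ scale mean as positive, neutral, or negative."""
    if mean > threshold:
        return "positive"
    if mean < -threshold:
        return "negative"
    return "neutral"


evaluation = {
    scale: (evaluate(mean_a), evaluate(mean_b))
    for scale, (mean_a, mean_b) in UEQ_MEANS.items()
}
for scale, (eval_a, eval_b) in evaluation.items():
    print(f"{scale:15s} A: {eval_a:8s} B: {eval_b}")
```

Applied to Table 2, the rule marks perspicuity, efficiency, and dependability as positive for Charts A and all six scales as positive for Charts B, in line with the description above.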

A Shapiro-Wilk normality test for each scale of

each visualization type shows that the data for UEQ

results for Charts A are normally distributed. The

data for Charts B are also normally distributed apart

from the data for perspicuity and novelty. A two-way

ANOVA was performed to analyze the effect of the

scale and the visualization type on the corresponding

mean scores. The test revealed that there was a statis-

tically significant effect of scale (F(5) = 14.20, p <

.001) and visualization type (F(1) = 62.23, p < .001)

on user experience, though the interaction between

these terms was not significant. This suggests that

participants had a better user experience with Charts

B compared to Charts A.

3.2.4 Preferred Visualizations

Each of the 24 participants was asked to indicate their

favorite chart for each category and an overall favorite

visualization.

• Of Charts 1, 21 people prefer the pictogram chart (1B) and 3 the bar chart (1A).

• Of Charts 2, 20 participants prefer line chart B (2B) and 4 line chart A (2A).

• Of Charts 3, 16 people prefer sunburst diagram B (3B) and 8 sunburst diagram A (3A).

• Of Charts 4, 22 participants prefer treemap B (4B) and 2 treemap A (4A).

• Overall, 8 people indicated the pictogram chart (1B), 7 line chart B (2B), 4 treemap B (3B), 2 treemap A (3A), 2 sunburst B (4B), and 1 sunburst A (4A) as their favorites.

The results show that participants tend to prefer Charts B over Charts A. The overall favorite is the pictogram chart (1B), closely followed by line chart B (2B), both of which are temporal visualization methods.
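To make the size of the preference explicit, the per-type counts listed above can be converted into the share of participants who preferred the Charts B variant. The sketch uses only the counts reported in this section, and the helper name `share_preferring_b` is introduced here for illustration.

```python
# (votes for the Charts A variant, votes for the Charts B variant) per chart type.
preferences = {
    "Charts 1": (3, 21),
    "Charts 2": (4, 20),
    "Charts 3": (8, 16),
    "Charts 4": (2, 22),
}


def share_preferring_b(votes_a, votes_b):
    """Fraction of participants who preferred the Charts B variant."""
    return votes_b / (votes_a + votes_b)


for chart_type, (votes_a, votes_b) in preferences.items():
    print(f"{chart_type}: {share_preferring_b(votes_a, votes_b):.1%} prefer B")
```

The preference for the stylized variant is weakest for the sunburst diagrams, where two thirds of the participants chose 3B.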

4 EXPERIMENT II

The goal of this study was to determine engagement

with the interactive versions of Charts B only, and to

identify which variables have a (positive) effect on

user engagement.

4.1 User Study Setup

Two different surveys were used for evaluation, one

for the temporal visualizations and the other for the

hierarchical visualizations. Visualizations were coun-

terbalanced for controlling the assignment of treat-

ments and allowing a clean comparison between the

experimental conditions (Lazar et al., 2017). More-

over, the users were instructed to test the visualiza-

tions on a mobile device, since the application and

therefore the visualizations are designed for mobile

devices.

Demographic data such as age and gender were collected, as well as details about the participant’s household and their awareness of their food waste. Moreover, data about possible motivations for reducing food waste based on Giordano was collected (Giordano et al., 2019). After the exploration of each visualization, the user was asked to answer three questions about the visualized data and to complete the VisEngage questionnaire (Hung and Parsons, 2017), which focuses on engagement with data visualizations and covers eleven different engagement characteristics with two items each, to determine their engagement score. After going through the same process with the second visualization, the participant had to select their preferred visualization design and had the option to leave feedback.

[Plot: mean score (y-axis "Mean", −2 to 2) per UEQ scale (x-axis "Scale": Attractiveness, Perspicuity, Efficiency, Dependability, Stimulation, Novelty), grouped by type (A, B).]

Figure 4: UEQ scores for each user experience dimension and for each visualization set (Charts A and Charts B).

4.2 Results and Analysis

Temporal and hierarchical visualizations were ana-

lyzed separately in a between-subjects design. A test

of normality was performed on the two main variables

of the data set. The overall engagement score was cal-

culated based on the seven-point Likert scale, which

ranges from strongly disagree (1) to strongly agree

(7). The sum of the values indicates the engagement

score, which ranges from 22 to 154 (Hung and Par-

sons, 2017). The performance was calculated based

on the number of correct answers to the questions

about the visualization designs. The mean and stan-

dard deviation of the participants’ ages, the overall en-

gagement score and the engagement scores per visual-

ization method were calculated, as well as overall per-

formance and performance per visualization method.
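The scoring described above can be summarized in a short sketch: the engagement score is the sum of 22 item ratings (eleven characteristics with two items each) on a seven-point scale, and performance is the percentage of correctly answered questions. The function names and the validation behavior are assumptions for illustration and are not part of the VisEngage questionnaire itself.

```python
ITEMS = 22                   # eleven engagement characteristics, two items each
SCALE_MIN, SCALE_MAX = 1, 7  # strongly disagree (1) to strongly agree (7)


def engagement_score(responses):
    """Sum the item ratings; the result ranges from 22 to 154."""
    if len(responses) != ITEMS:
        raise ValueError(f"expected {ITEMS} responses, got {len(responses)}")
    if any(not SCALE_MIN <= r <= SCALE_MAX for r in responses):
        raise ValueError("ratings must lie between 1 and 7")
    return sum(responses)


def performance(answers_correct):
    """Percentage of correctly answered questions about a visualization."""
    return 100 * sum(answers_correct) / len(answers_correct)


print(engagement_score([1] * ITEMS), engagement_score([7] * ITEMS))
print(f"{performance([True, True, False]):.2f}%")
```

The two extreme response patterns give the bounds of the score range, 22 and 154.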

To determine the correlation of selected parame-

ters, the Pearson method was used. A chi-squared

test as well as parametric tests, which included

ANOVA and a t-test, were conducted. The Wilcoxon and Kruskal–Wallis tests were used as non-parametric tests. An alpha level of .05 was used for all tests.

4.2.1 Temporal Visualizations

14 people, of whom 6 identified as female and 8 as

male, took part in the survey. The participants of

the survey were rather young, with an average age

of 26.14 (SD = 8.33). The overall average of en-

gagement scores was 95.54 (SD = 22.14) with the

line graph method showing a slightly higher average

(M = 100.64, SD = 24.44) than the pictogram method

(M = 90.43, SD = 19.08). Overall performance of

the participants was relatively high, with an average

of 84.52% (SD = 23.10%). Performance when in-

teracting with the line graph visualization was higher

(M = 90.48, SD = 15.63) compared to the pictogram

visualization (M = 78.57, SD = 28.06).

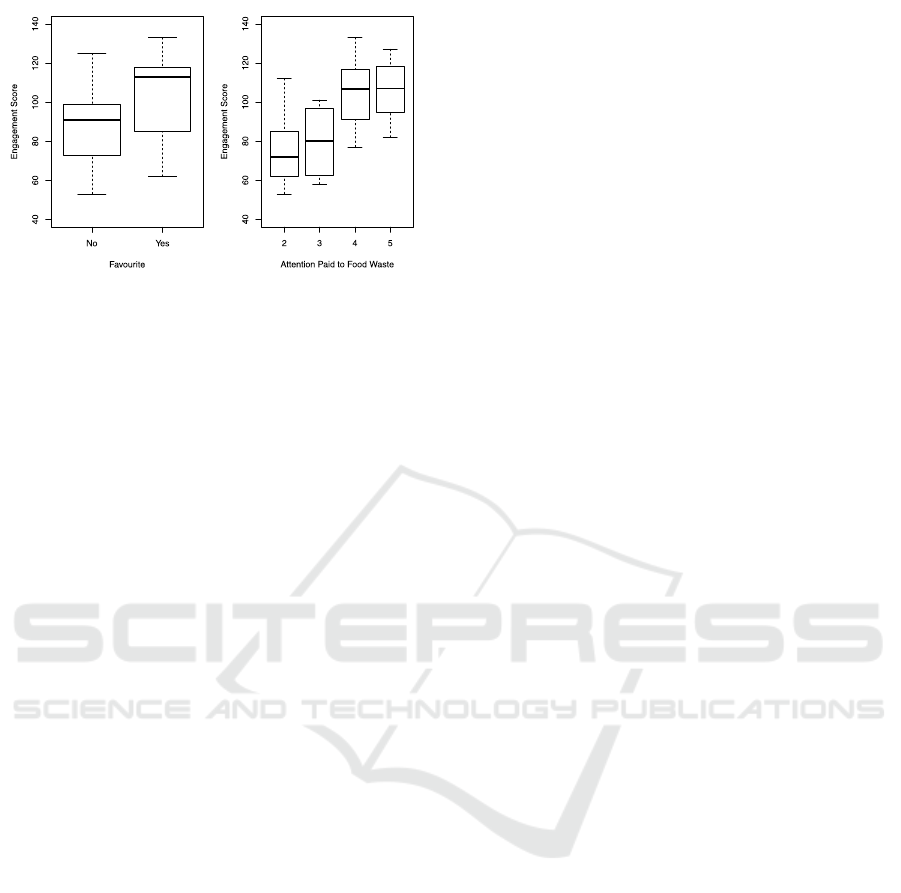

Based on the data set, it was possible to con-

clude that the users had a higher engagement score

on their favorite visualization design since a Pear-

son correlation coefficient showed a positive corre-

lation, r(26) = .41, p = .031. A t-test showed that

there was a significant effect for the user’s favorite

visualization, t(25.52) = −2.29, p = .03, with vi-

sualizations which were marked as favorite showing

higher engagement scores (M = 104.43, SD = 21.95)

than visualizations which were not marked as favorite

(M = 86.64, SD = 19.11) (see Figure 5).
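The fractional degrees of freedom indicate a Welch (unequal variances) t-test. The sketch below recomputes the statistic from the reported means and standard deviations. It assumes 14 ratings per group, since each of the 14 participants rated both temporal visualizations and marked one of them as their favorite. That group size is inferred and not stated explicitly in the paper.

```python
from math import sqrt


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (m1 - m2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Not marked as favorite vs. marked as favorite; n = 14 per group is an assumption.
t, df = welch_t(86.64, 19.11, 14, 104.43, 21.95, 14)
print(f"t({df:.2f}) = {t:.2f}")
```

Under this assumption the summary statistics reproduce the reported result, t(25.52) = −2.29.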

Lastly, a Pearson correlation coefficient was com-

puted to assess the linear relationship between the en-

gagement score and the attention a user pays to how

much food their household wastes. A strong positive

correlation was detected, r(26) = .57, p = .001 (see

Figure 5). A one-way ANOVA test showed that the effect of the attention paid to food waste in a household was significant, F(3, 24) = 5.02, p = .008. A post hoc pairwise comparison using the Tukey method showed that the average engagement score of the 5 participants who selected 4 (M = 105.10, SD = 18.21) and of the 4 participants who selected 5 (M = 106.13, SD = 15.81) on a five-point Likert scale, when asked how much attention they pay to food waste in their household, was significantly higher than that of the 3 participants who selected 2 (M = 76.00, SD = 20.67) on the same scale (p < .05).

Figure 5: Engagement for (not) preferred temporal visualizations and engagement scores by attention paid to food waste for temporal visualizations.

4.2.2 Hierarchical Visualizations

14 people, of whom 8 identified as female and 6 as

male, took part in the survey. The participants of

the survey were middle aged with an average age

of 31.29 (SD = 14.78). The overall average of en-

gagement scores was 100.00 (SD = 22.71) with the

sunburst method showing a slightly higher average

(M = 103.14, SD = 21.73) than the treemap method

(M = 96.86, SD = 24.04). The overall performance

of the participants was relatively high, with an aver-

age of 83.33% (SD = 24.84%). Performance when

interacting with the sunburst visualization was higher

(M = 88.10, SD = 16.57) compared to the treemap

visualization design (M = 78.57, SD = 30.96).

The data set’s values for engagement scores and

performance showed a strong positive correlation

when computing a Pearson correlation coefficient,

r(26) = .60, p < .001. This suggests that higher engagement scores are associated with better performance in the survey’s data.

A Pearson correlation coefficient showed a

marginally significant positive correlation, r(26) =

.38, p = .046, of the engagement score with the at-

tention a user pays to how much food their household

wastes. A one-way ANOVA test did not show an ef-

fect of the attention paid to food waste in a household,

F(3, 24) = 1.53, p = .23.

5 CONCLUSION

The results of Experiment I show that the user perfor-

mance with the two visualization sets was comparable. However, participants perceived the tasks as easier with the new stylized charts (Charts B) than with the basic ones (Charts A). The overall favorite in the set

was the pictogram chart (1B), closely followed by the

stylized area chart (2B). These findings are supported

by the results of the statistical analysis for the UEQ,

which suggest that participants preferred the attrac-

tiveness, perspicuity, efficiency, dependability, stimu-

lation, and novelty of visualizations in Charts B com-

pared to Charts A.

While it was not possible to identify a single visu-

alization method that unequivocally led to increased

user engagement in Experiment II, the results show

that the engagement score was higher for the visu-

alization the user marked as their favorite. More-

over, people who pay more attention to food waste

in their households had a higher engagement score

when interacting with the temporal visualization designs. These findings can be recognized in the engagement model by O’Brien and Toms. The user’s general

interest in the topic can be linked to the parameters of

interest, motivation, and specific goal for the point of

engagement (and reengagement) thread. Heightened

engagement scores when interacting with the user’s

favorite visualization can be traced back to the pa-

rameters aesthetic and sensory appeal, interactivity,

novelty, interest, and positive affect for the period of

engagement thread (O’Brien and Toms, 2008).

Limitations of the study to keep in mind are the

use of the treemap visualization method (Group,

2023) and the impact of the chosen colors on the user.

Moreover, providing a summary of the data only in

Charts B might limit the comparability of the two sets

of charts.

The study illustrates that by stylizing conventional

data representations, users can perform tasks more

easily and enjoy a better user experience with the vi-

sualizations. Taking these findings into account when

designing data visualizations for mobile tracking apps

can potentially lead to users updating the apps more

consistently, resulting in more accurate results and

potentially influencing changes in behavioral trends

and patterns. Further studies, however, including

longitudinal ones, must be conducted to comprehen-

sively assess the effect of user engagement with visu-

alizations and mobile tracking apps in bringing about

effective behavioral change.


REFERENCES

Bai, Q., Dan, Q., Mu, Z., and Yang, M. (2019). A sys-

tematic review of emoji: Current research and future

perspectives. Front. Psychol., 10:2221.

Datalabs (2014). 15 Most Common Types of Data Visual-

ization — Datalabs.

Doherty, K. and Doherty, G. (2019). Engagement in HCI:

Conception, Theory and Measurement. ACM Comput.

Surv., 51(5):1–39.

Doris, G. (2020). Isotype – Entstehung, Entwicklung, Erbe.

Edwards, A. (2016). Engaged or Frustrated?: Disambiguat-

ing engagement and frustration in search. ACM SIGIR

Forum, 50(1):88–89.

Esri (2023). What is temporal data? – ArcMap — Docu-

mentation.

Giordano, C., Alboni, F., and Falasconi, L. (2019). Quan-

tities, Determinants, and Awareness of Households’

Food Waste in Italy: A Comparison between Di-

ary and Questionnaires Quantities. Sustainability,

11(12):3381.

Group, N. N. (2023). Treemaps: Data Visualization of

Complex Hierarchies — nngroup.com. https://www.

nngroup.com/articles/treemaps/.

Hung, Y.-H. and Parsons, P. (2017). Assessing User En-

gagement in Information Visualization. In Proceed-

ings of the 2017 CHI Conference Extended Abstracts

on Human Factors in Computing Systems - CHI EA

’17, pages 1708–1717, Denver, Colorado, USA. ACM

Press.

Institute of Technology Assessment (ITA) (2015). Reducing

food waste. ITA dossier no. 14en.

Kennedy, H., Hill, R. L., Allen, W. L., and Kirk, A. (2016).

Engaging with (big) data visualizations: Factors that

affect engagement and resulting new definitions of ef-

fectiveness.

Laugwitz, B., Held, T., and Schrepp, M. (2008). Construc-

tion and evaluation of a user experience questionnaire.

In Holzinger, A., editor, HCI and Usability for Ed-

ucation and Work, pages 63–76, Berlin, Heidelberg.

Springer Berlin Heidelberg.

Lazar, J., Feng, J. H., and Hochheiser, H. (2017). Research

Methods in Human-Computer Interaction. Morgan

Kaufmann, Cambridge, MA.

Mauri, M., Elli, T., Caviglia, G., Uboldi, G., and Azzi, M.

(2017). RAWGraphs. CHItaly ’17: Proceedings of the

12th Biannual Conference on Italian SIGCHI Chap-

ter.

Munzner, T. (2009). A Nested Model for Visualization De-

sign and Validation. IEEE Transactions on Visualiza-

tion and Computer Graphics, 15(6):921–928.

Munzner, T. and Maguire, E. (2015). Visualization Analysis

& Design. CRC Press, Taylor & Francis Group, Boca

Raton, FL.

O’Brien, H. L. and Toms, E. G. (2008). What is user en-

gagement? A conceptual framework for defining user

engagement with technology. J. Assoc. Inf. Sci. Tech-

nol., 59(6):938–955.

Peters, C., Castellano, G., and de Freitas, S. (2009). An ex-

ploration of user engagement in HCI. In Proceedings

of the International Workshop on Affective-Aware Vir-

tual Agents and Social Robots - AFFINE ’09, pages

1–3, Boston, Massachusetts. ACM Press.

Peters, D., Calvo, R. A., and Ryan, R. M. (2018). Designing

for Motivation, Engagement and Wellbeing in Digital

Experience. Front. Psychol., 9.

Ribecca, S. (2023). The Data Visualization Catalogue.

Rotolo, T. (2023). Measuring task usability: The Single

Ease Question (SEQ) - The Trymata blog.

Shneiderman, B., Plaisant, C., Cohen, M., Jacobs, S.,

Elmqvist, N., and Diakopoulos, N. (2016). Designing

the User Interface: Strategies for Effective Human-

Computer Interaction. Pearson, 6th edition.

St. Pölten University of Applied Sciences (2023). Interdisciplinary Lab – iLab. https://www.fhstp.ac.at/en/onepager/ilab.

Stenmarck, Å., Jensen, C., Quested, T., Moates, G., Buksti, M., Cseh, B., Juul, S., Parry, A., Politano, A., Redlingshofer, B., Scherhaufer, S., Silvennoinen, K., Soethoudt, H., Zübert, C., and Östergren, K. (2016). Estimates of European food waste levels.

Swan, M. (2013). The Quantified Self: Fundamental Dis-

ruption in Big Data Science and Biological Discovery.

Big Data, 1(2):85–99.

Trivedi, R. (2021). Exploring Data Gamification. PhD the-

sis, Northeastern University.

Valcheva, S. (2020). 21 Data Visualization Types: Exam-

ples of Graphs and Charts.

Zoss, A. (2019). Visualization Types - Data Visualization -

LibGuides at Duke University.
