Leveraging VR and Force-Haptic Feedback for an Effective Training with Robots

Panagiotis Katranitsiotis^a, Panagiotis Zaparas^b, Konstantinos Stavridis^c and Petros Daras^d

The Visual Computing Lab, Centre for Research and Technology Hellas, Information Technologies Institute, Thessaloniki, Greece

Keywords: Training Framework, Virtual Environment, Force Feedback, Robot Manipulation, Kuka Robot, Insect Farming.

Abstract: In the current era, industrial automation across many fields, including insect farming, is defined by the use of robots for numerous tasks. As a result of this progression, human-robot collaboration is becoming increasingly prevalent. Because robots are high-value machines with precise and delicate handling requirements, industrial workers must receive adequate training to guarantee optimal operational efficiency and to reduce the risks connected with their use. Accordingly, we propose a framework that integrates Virtual Reality (VR) technologies with force and haptic feedback equipment. The framework simulates real-world scenarios and human-robot collaboration tasks, with the goal of familiarizing users with these technologies, mitigating the risks that may arise, and enhancing the effectiveness of their training. It was designed for the insect farming automation domain, with the objective of facilitating human-robot collaboration for workers in this field. An experiment was designed and conducted to measure the efficiency and impact of the proposed framework, and the questionnaires given to participants were analyzed to extract valuable insights.

1 INTRODUCTION

Due to globalization and the growing demand for distinctive products, new challenges have emerged in the industrial sector. To maintain their competitiveness in the mass production model, industries needed to redesign their manufacturing systems (Bragança et al., 2019). Robots therefore became a key component, automating a variety of tasks and thus handling the constant increase in demand, which also corresponds to rising annual revenue. Consequently, a need has emerged for humans to collaborate with robots at an industrial level to boost overall productivity and enhance efficiency. This gave rise to the concept of Industry 4.0 (Robla-Gómez et al., 2017).

Industry 4.0 is envisioned as the fourth industrial revolution and characterizes today's industrial sector, in which technological leaps have a tremendous impact on the growth and evolution of industries. Mechanization (1st industrial revolution), the widespread use of electricity (2nd industrial revolution) and widespread digitalization (3rd industrial revolution) characterized the industrial sector in earlier eras (Lasi et al., 2014). Progress in technology and artificial intelligence brought automation capabilities and more robust decision-making mechanisms to industries, supported by real-time performance management systems, leading to Industry 4.0 (Aoun et al., 2021).

a https://orcid.org/0009-0004-6509-6338
b https://orcid.org/0009-0001-5407-7295
c https://orcid.org/0000-0002-3244-2511
d https://orcid.org/0000-0003-3814-6710

Companies are progressively employing sensors and wireless technologies to gather data on the entire lifecycle of a product, achieving smart manufacturing. These data increasingly contribute to product design and manufacturing. In addition, big data analytics is used to identify causes of failure, optimize product performance and improve production efficiency (Kusiak, 2017). A key challenge for smart manufacturing is to connect the physical and virtual spaces. The rapid evolution of simulation, data communication and other cutting-edge technologies has created a new era in the interactions between physical and virtual spaces (Tao et al., 2019). Digital Twins integrate physical and virtual data throughout a product lifecycle. Combined with the analysis of the collected data, they can be used to improve the performance of a process in the physical space and are hence a vital feature of Industry 4.0 (Qi and Tao, 2018).

Katranitsiotis, P., Zaparas, P., Stavridis, K. and Daras, P.
Leveraging VR and Force-Haptic Feedback for an Effective Training with Robots.
DOI: 10.5220/0012319200003654
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 13th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2024), pages 651-660
ISBN: 978-989-758-684-2; ISSN: 2184-4313
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Figure 1: (a) Spatial Setup (b) SenseGlove (c) HTC Vive VR Headset and two Vive Trackers.

Industry 4.0 is characterized by humans and robots having the same goal and following a sequence of shared actions to achieve it (Weiss et al., 2021). For collaborative assignments between humans and robots, humans need to be trained to ensure smooth cooperation. Because workers are often unfamiliar with robots, which are expensive equipment requiring precise manipulation, and because the risks to human safety during cooperation must be mitigated, proper and efficient training is mandatory.

Robots have already become a key component of Industry 4.0: they have replaced many procedures that were previously performed manually and have started to move out of laboratory and manufacturing environments into more complex human working environments (Bauer et al., 2008). Collaboration between humans and robots has become an efficient way for enterprises to increase their productivity while reducing production costs, thereby increasing their annual revenue (Matheson et al., 2019). Collaborative robots, often referred to as cobots (Colgate et al., 1996), are designed to work alongside human workers on a specific task in a more productive and safer environment.

In robotic environments, a novel form of training is emerging that avoids the risks associated with real equipment and ensures the safety of the trainee. Human-robot collaboration can be dangerous due to the high-speed movements and massive forces generated by industrial robots (Oyekan et al., 2019). Therefore, digital training has emerged through technologies such as Virtual Reality (VR), force and haptic feedback, and digital twins. In particular, a digital twin, a virtual replica of real-life objects that simulates their behavior, not only enhances the training process but can also enable the integration of cyber-physical systems (Hochhalter et al., 2014).

Based on a comprehensive review of several training mechanisms that use VR technologies, this paper proposes an innovative and efficient training framework for a robotic environment. Contrary to the majority of VR training techniques, which rely on the controllers of the respective VR headset, this framework uses force and haptic feedback gloves, specifically SenseGlove, to enable the user's interactions within the virtual environment. These gloves not only allow users to see their own hands in VR but also provide realistic manipulation within the environment by applying force and haptic feedback whenever the user interacts with a VR object, which cannot be achieved with the controllers of a VR headset alone. Additionally, force-feedback gloves have mainly been integrated in the healthcare and military domains, whereas the presented framework provides a novel industrial robotic environment and training workflow in which users can freely manipulate the robot with their hands and be trained by manually programming a virtual industrial robot.

Since the simplest way to program a robot is to move it manually by hand, this framework allows users not only to observe a VR environment containing a 7-joint Kuka robot but also to interact with virtual objects and the robot itself through force and haptic feedback gloves, in order to train them efficiently. The proposed VR training framework thereby avoids the issues associated with highly expensive equipment (i.e., the Kuka robot) and enables users with no prior robotics knowledge to learn the kinematics and limitations of the robot's joints and to understand how the robot must be moved to perform a specific task, thus achieving appropriate training. After completing the VR training, users will be able to interact with the real robot more efficiently and safely.

ICPRAM 2024 - 13th International Conference on Pattern Recognition Applications and Methods

To evaluate the realism of the VR environment and of the hand interactions with the robot, an experiment was conducted in which participants had to set up the robot for a specific task in an insect farm robotic environment. After completing the task, participants answered questionnaires evaluating the realism of the VR experience, moving the robot with their own hands, and the training framework workflow. Statistical analysis and correlation methods were applied to extract insights, together with Cronbach's alpha test to ensure the reliability of the questionnaires, in order to assess the overall contribution of the proposed haptic-based VR framework and its impact on an effective and safer human-robot collaboration environment.

2 RELATED WORK

Virtual Reality technology has immense significance in various domains such as gaming, education, healthcare and industry. It revolutionizes the user experience by transporting users into a computer-generated simulation, while also redefining traditional training methods in a safer and more efficient manner.

In healthcare, for instance, VR aids in patient therapy and surgeon training. Previous work indicated that simulation-based training can remarkably decrease the mistakes of healthcare workers and improve patient safety (Salas et al., 2005). VR-based training is a promising area that can assess task-specific clinical skills and simulate multifarious medical procedures and clinical cases (O'Connor, 2019). These cases include orthopedic surgery (Laith K Hasan and Petrigliano, 2021), neuroradiology procedures (Magnus Sundgot Schneider et al., 2023), gunshot wounds (Dascal et al., 2017), and mental illnesses such as schizophrenia, for which a VR experience places participants on a city bus with additional surroundings such as sights and sounds (Mantovani et al., 2003). Consequently, a healthcare VR training program that allows trainees to interact realistically with the necessary equipment avoids substantial risks and provides the essential skills before they are applied to a real patient.

An efficient VR training mechanism comprises multiple critical elements. The most vital component is the VR headset, which determines the display quality and the tracking of the user's head motion, and thus the quality of the experience. At present, the most popular VR hardware devices are Oculus VR (Yao et al., 2014), HTC Vive, Valve Index (Valve, 2019), and Samsung HMD Odyssey (Samsung, 2021; Yildirim, 2020). The most important features that distinguish VR headsets are display quality, motion tracking and the responsiveness of the VR controllers. For instance, research indicates that an increased level of visual realism improves the sense of presence (Bowman and McMahan, 2007). All the aforementioned VR headsets achieve a high level of image quality using LCD and AMOLED displays, provide an excellent three-dimensional environment with a remarkable tracking system, and are equipped with VR controllers (Angelov et al., 2020).

Furthermore, in all training procedures, the trainee's hands must be visible within VR to achieve the desired educational level when performing a specific task. VR controllers, which are available with most VR headsets, provide this capability, but they lack realistic interaction because they merely map the user's hand to the controller and fail to deliver a sufficient hand simulation within VR.

In contrast, force and haptic feedback gloves enable realistic user interaction in VR. In (Whitmire et al., 2018), a haptic revolver was introduced that allows the user to interact with surfaces and perform tasks such as picking and placing objects. Nevertheless, it does not map the fingers into VR and is more similar to a VR controller equipped with haptic capability. Moreover, research efforts in (Kim et al., 2017) led to the development of a low-cost portable hand haptic system designed as an Arduino-based sensor architecture for each finger. Similar work was presented in (Martinez-Hernandez and Al, 2022), in which researchers proposed a wearable fingertip device for sliding and vibration feedback in VR. The device comprised an array of servo motors and 3D-printed components. However, these studies focus on applications outside of training workflows, and not on cases where hand movements must be precise and accompanied by the corresponding force feedback, as in industrial robotic domains. Notably, in the literature these gloves are employed primarily in healthcare, whereas integrating them into the industrial robotic field could be very beneficial for simulating realistic worker training in programming robotic tasks.

In (Pérez et al., 2019), researchers proposed a system with a VR interface connected to the robot controller that makes it possible to control the virtual robot. This system can be used for training, simulation and integrated robot control, all in a cost-effective manner. They employed the HTC Vive headset and used its controllers to interact with VR buttons that move the robot. It must be noted that the HTC Vive controllers cannot simulate the haptic experience realistically.

Figure 2: Virtual Environment for Training.

Similarly, (Garcia et al., 2019) proposed Virtual and Augmented Reality as a means of optimizing training time and reducing cost. Their application, created with Unity Pro, helped users become familiar with their system and procedures. The META2 Development Kit, an Augmented Reality device, was selected as equipment. Again, no emphasis was given to the haptic experience.

Most research on virtual training relies on the controllers of each VR headset. However, such training is intrinsically limited, since the trainee cannot manipulate machines realistically but only through the controllers. Our proposed framework uses not only VR but also force and haptic feedback gloves, enabling trainees to interact with and realistically manipulate VR objects in a more intuitive manner. In particular, SenseGloves provide the force and haptic feedback capability, combined with the HTC Vive VR headset and Vive Trackers that map the hands into the VR environment. The proposed framework thereby contributes an industrial learning mechanism that allows trainees to freely view the virtual environment, interact with it using their own hands as if it were a real physical environment, learn from it, kinesthetically interact with the VR robot, and subsequently learn how to program a robot efficiently.

3 THE PROPOSED FRAMEWORK

The proposed framework was developed with the game development engine Unity3D, which offers numerous features that facilitate the integration of multiple independent modules into a cohesive environment (Haas, 2014). Additionally, the framework uses the SenseGlove force and haptic feedback gloves, which enable natural interaction in VR environments and manipulation of VR objects.

Unlike traditional controllers, SenseGlove allows users to feel the size, stiffness and resistance of virtual objects, providing a more realistic experience when pushing, holding and touching them (SenseGlove, 2018). SenseGloves are exoskeleton gloves equipped with servo motors and tires that can block the movement of the fingers. Whenever the user interacts with a virtual object, the servo actuators block the tires, preventing the user from closing the hand further. The gloves thereby convey the sense of touching the object while applying the appropriate force and haptic feedback. Finally, a Vive Tracker was attached to each glove to allow free hand movement within the VR environment.
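The blocking behavior described above can be illustrated with a minimal sketch. It assumes, hypothetically, that each finger reports a normalized flexion value (0 = open, 1 = fully closed) and that the physics engine reports, per finger, the flexion at which the fingertip first touches a grasped object. The names `FingerState`, `contact_flexion` and `brake_commands` are illustrative only; they are not part of the SenseGlove SDK, and the source does not describe the control loop at this level.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class FingerState:
    """Hypothetical per-finger state for one update step."""
    flexion: float                    # measured flexion, 0.0 = open, 1.0 = fully closed
    contact_flexion: Optional[float]  # flexion at which the fingertip touches an object; None if no contact


def brake_commands(fingers: Dict[str, FingerState], tolerance: float = 0.02) -> Dict[str, bool]:
    """Return, per finger, whether its actuator should block further closing.

    A finger is blocked once it has closed to (or past) the point where it
    would penetrate the virtual object, which produces the sensation of a
    rigid surface under the fingertip.
    """
    commands = {}
    for name, state in fingers.items():
        if state.contact_flexion is None:
            commands[name] = False  # nothing to touch: the finger moves freely
        else:
            commands[name] = state.flexion >= state.contact_flexion - tolerance
    return commands


hand = {
    "thumb": FingerState(flexion=0.40, contact_flexion=0.41),
    "index": FingerState(flexion=0.30, contact_flexion=0.55),
    "pinky": FingerState(flexion=0.80, contact_flexion=None),
}
print(brake_commands(hand))  # {'thumb': True, 'index': False, 'pinky': False}
```

The small `tolerance` (an assumed value) engages the actuator slightly before contact so that the finger does not visibly sink into the object between update steps.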

The HTC Vive headset, which is also supported by Unity, is used in the framework to enable free viewing and movement within the VR environment (HTC, 2011). Moreover, two VR base stations improve the motion tracking of the headset and the SenseGloves. The complete hardware and spatial setup is illustrated in Figure 1.

3.1 Virtual Training Environment

The virtual environment was developed to provide effective training on automation in the insect farming domain. It is a virtual training room designed to simulate real-world scenarios. In particular, an industrial-grade robot, the Kuka IIWA 7, was chosen to carry out robotic tasks in the VR training room, as depicted in Figure 2. A 3D crate containing animated worms and substrate was placed in front of the Kuka robot, which assists in quality management tasks (i.e., scanning the crate). In addition, SenseGlove technology allows users to see a virtual representation of their hands and to manipulate the Kuka robot freely within the designated area while respecting the robot's joint limitations.
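Respecting the joint limitations while the user drags the robot amounts to clamping each commanded joint angle to its allowed range before it is applied. The sketch below is a minimal illustration, not the framework's implementation. The limit values are assumptions modelled on the commonly quoted axis ranges of the KUKA LBR iiwa 7 and should be checked against the manufacturer's datasheet; `clamp_joints` and `at_limit` are hypothetical helpers.

```python
from typing import List, Sequence, Tuple

# Assumed joint limits in degrees for axes A1..A7 (verify against the KUKA datasheet).
IIWA7_LIMITS_DEG: List[Tuple[float, float]] = [
    (-170.0, 170.0),  # A1
    (-120.0, 120.0),  # A2
    (-170.0, 170.0),  # A3
    (-120.0, 120.0),  # A4
    (-170.0, 170.0),  # A5
    (-120.0, 120.0),  # A6
    (-175.0, 175.0),  # A7
]


def clamp_joints(requested_deg: Sequence[float],
                 limits: Sequence[Tuple[float, float]] = IIWA7_LIMITS_DEG) -> List[float]:
    """Clamp each requested joint angle into its [min, max] range."""
    if len(requested_deg) != len(limits):
        raise ValueError("expected one angle per joint")
    return [min(max(angle, lo), hi) for angle, (lo, hi) in zip(requested_deg, limits)]


def at_limit(angles_deg: Sequence[float],
             limits: Sequence[Tuple[float, float]] = IIWA7_LIMITS_DEG) -> List[bool]:
    """Flag joints sitting on a limit (hypothetical use: stiffen the glove feedback there)."""
    return [angle <= lo or angle >= hi for angle, (lo, hi) in zip(angles_deg, limits)]


# A hand drag that would push A2 and A5 past their ranges is held at the limits.
print(clamp_joints([0, 130, -10, 0, 200, 0, 0]))  # [0, 120.0, -10, 0, 170.0, 0, 0]
```

In a physics-based Unity model the same effect is usually obtained by configuring limits on the joints themselves; the explicit clamp is shown only because it makes the constraint easy to read.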

Furthermore, a table with two VR buttons has been placed within the VR interaction area. These buttons record the robot's movement and display it on the corresponding VR screen, so that users, together with their trainers, can evaluate the task and identify the optimal robot manipulation movement for it.

Figure 3: Kuka Robot Manipulation in VR.
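The record-and-display workflow can be sketched as a small recorder that starts sampling joint angles when the green button is pressed and stops on the red one. This is an assumed design for illustration only: `MotionRecorder`, `press_green`, `press_red` and `on_physics_step` are hypothetical names, and the source does not state how the framework stores trajectories.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Sample = Tuple[float, Tuple[float, ...]]  # (timestamp in seconds, joint angles)


@dataclass
class MotionRecorder:
    """Hypothetical recorder driven by the green (start) and red (stop) VR buttons."""
    recording: bool = False
    samples: List[Sample] = field(default_factory=list)

    def press_green(self) -> None:
        # Starting a new recording discards the previous attempt,
        # so a user can simply press green again to retry the task.
        self.samples = []
        self.recording = True

    def press_red(self) -> List[Sample]:
        # Stop recording and return a copy of the trajectory for the VR screen.
        self.recording = False
        return list(self.samples)

    def on_physics_step(self, t: float, joints: Sequence[float]) -> None:
        # Called once per simulation step; samples are kept only while recording.
        if self.recording:
            self.samples.append((t, tuple(joints)))
```

Only the steps between the two button presses end up in the returned trajectory; movements made before pressing green or after pressing red are ignored.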

Overall, this virtual environment is designed to provide users with a practical, hands-on experience in a simulated training room. It incorporates cutting-edge technologies such as VR, SenseGlove and a 3D model of the Kuka IIWA 7 robot to simulate real-world scenarios and enable users to acquire practical skills that can be applied in real-world situations. The virtual robot is modeled in Unity3D as an exact representation of the real 7-joint Kuka robot, respecting its mass, torque, stiffness, acceleration and all other attributes that affect its movement.

3.2 Training Procedure

Many tasks require a robot to be moved manually, as in insect farming, where the robot must be guided by hand to scan a crate of insects. Such manual guidance is valuable for workers lacking prior expertise in robot manipulation, particularly for programming a robotic task: the robot records the user's manual movement and replicates the exact trajectory. A significant application of this method in insect farming is the visual inspection of the crate, where a camera attached to the robot's end-effector must be positioned above the crate to capture images and perform the corresponding visual inspection (e.g., using AI algorithms).
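Replicating a recorded trajectory requires evaluating the recorded joint angles at arbitrary playback times. A minimal sketch follows, assuming the trajectory is a time-ordered list of `(t, joints)` samples and that linear interpolation between samples is acceptable; the source does not state how the replay is implemented, and `joints_at`, `replay` and the 50 Hz default rate are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

Sample = Tuple[float, Tuple[float, ...]]  # (timestamp in seconds, joint angles)


def joints_at(trajectory: Sequence[Sample], t: float) -> List[float]:
    """Linearly interpolate the joint angles of a recorded trajectory at time t.

    Times before the first sample or after the last are clamped to the end points.
    """
    if not trajectory:
        raise ValueError("empty trajectory")
    times = [s[0] for s in trajectory]
    if t <= times[0]:
        return list(trajectory[0][1])
    if t >= times[-1]:
        return list(trajectory[-1][1])
    i = bisect_right(times, t)  # index of the first sample strictly after t
    (t0, q0), (t1, q1) = trajectory[i - 1], trajectory[i]
    alpha = (t - t0) / (t1 - t0)  # fraction of the way from sample i-1 to sample i
    return [a + alpha * (b - a) for a, b in zip(q0, q1)]


def replay(trajectory: Sequence[Sample], rate_hz: float = 50.0) -> List[List[float]]:
    """Resample the trajectory at a fixed playback rate, from its first to its last timestamp."""
    start, end = trajectory[0][0], trajectory[-1][0]
    steps = int(round((end - start) * rate_hz))
    return [joints_at(trajectory, start + k / rate_hz) for k in range(steps + 1)]
```

Resampling at a fixed rate decouples playback from the (possibly irregular) rate at which samples were recorded.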

The movements necessary for this task require precise and careful handling of the robot, which in turn requires realistic hand manipulation within VR. Therefore, SenseGlove was integrated into the VR training framework, allowing users not only to see their hands in VR but also to touch and move the robot realistically through force and haptic feedback. To train effectively for this task, the user, wearing the aforementioned equipment as depicted in Figure 4, manually moves and interacts with the robot in a natural manner, receives the appropriate force feedback and thereby becomes familiar with its kinematics. Finally, using the VR recording button, the user can record the robot's movement and display it on the screen for evaluation.

Figure 4: Framework Setup.

In particular, the user is first able to move freely within the VR environment and interact with both the robot and the other components of the environment, as depicted in Figure 3. Once confident in moving the robot manually, the user starts recording the robot's movements with the green VR button and, after completing the task, stops the recording with the red one. The recorded movement is then displayed on the VR screen, as depicted in Figure 5. It can also be evaluated together with a trainer for precision and accuracy, and further analyzed to determine the optimal movements for the assigned task.

For instance, in insect farming, the worker can be trained in this virtual environment to lower the end-effector of the Kuka robot above the crate and scan it, assisting the quality management of the insects, before executing the task with the real robot.

3.3 Experiment

An experiment was conducted to evaluate the realism and efficiency of the proposed VR training environment and of the manipulation of the Kuka robot. The setup followed Figure 1: the two HTC Vive Base Stations were positioned to configure the VR space and to track the movement of both the headset and the SenseGloves (via a Vive Tracker attached to each hand). The experiment application was created in Unity with the SteamVR plugin and ran on an NVIDIA GeForce RTX 3090 GPU with 24 GB of memory to maximize the frame rate (frames per second, FPS) and avoid potential motion sickness.
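The link between frame rate and motion sickness can be made concrete as a per-frame time budget: at a display refresh rate of f Hz, each frame must be rendered within 1000/f ms. Assuming the original HTC Vive's 90 Hz refresh rate, the budget is about 11.1 ms. The helpers below are a hypothetical sketch for checking measured frame times against that budget; they are not part of the described setup.

```python
from typing import Sequence


def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def late_frame_ratio(frame_times_ms: Sequence[float], refresh_hz: float = 90.0) -> float:
    """Fraction of frames that exceeded the budget and risk visible judder."""
    if not frame_times_ms:
        return 0.0
    budget = frame_budget_ms(refresh_hz)
    late = sum(1 for ft in frame_times_ms if ft > budget)
    return late / len(frame_times_ms)


print(round(frame_budget_ms(90.0), 1))  # 11.1
```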

Figure 5: VR Screen to evaluate the robot's recorded movement.

The experiment involved 25 participants with no prior expertise in robot manipulation. Each participant was equipped with the necessary hardware and was first asked to become familiar with the VR environment and move freely within it. Afterwards, participants manually manipulated the robot with their hands to learn the kinematics and limitations of each joint. Each participant was then assigned the same task, consisting of the following sequence of actions:

• Lower the robot's end-effector and position it above the crate
• Press the green button to record the upcoming movement
• Move the robot to scan the crate
• Press the red button to stop the recording

After the recording was stopped, the movement was shown on the VR screen so that participants could evaluate whether their robot manipulation was optimal for the given task. If it was not, they could press the green button again and repeat the procedure.

After the experiment, each participant completed a questionnaire evaluating the realism of the experience, including the hand manipulation of the robot, in order to extract valuable insights. The questionnaire items are detailed in Table 1.

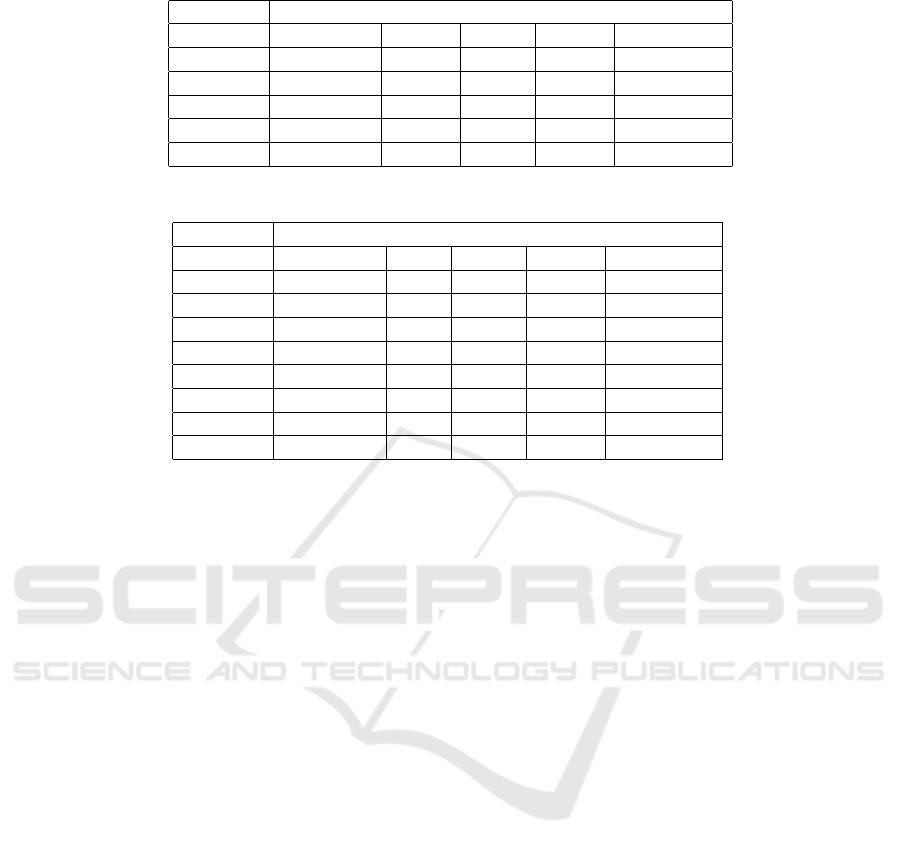

Table 1: Questions.

| ID | Question |
|---|---|
| Q1 | Do you have any prior experience with VR? |
| Q2 | Were the SenseGloves easy to use? |
| Q3 | Was the VR headset easy to use? |
| Q4 | Did you experience nausea or motion sickness? (1: Not at all) |
| Q5 | How would you rate the force feedback while interacting with the Kuka robot? |
| Q6 | Was the process of the training framework easy to understand? |
| Q7 | Was the VR interface easy to understand? |
| Q8 | How impactful was moving the robot for your training? |
| Q9 | How impactful was the recording process of the robot movement? |
| Q10 | How would you rate the reliability of the training framework? |
| Q11 | Do you consider that this framework leads to a safer human-robot collaboration scheme? |
| Q12 | Do you consider the training framework to be a useful tool in your work? |
| Q13 | Would you recommend using a similar VR framework in other jobs similar to yours? |

3.4 Results

The experiment lasted approximately 20 minutes per participant, including the time required to put on the equipment. Users received detailed instructions on the use of the SenseGloves and the VR headset, along with comprehensive guidance during the experiment, to ensure that the procedures were executed properly.

The questionnaire consisted of 13 items divided into two categories: five on the realism of the robotic manipulation (Q1-Q5) and eight on the reliability of the training framework (Q6-Q13). Participants answered on a scale from 1 to 5, where the lowest value corresponded to 0% and the highest to 100%.
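The percentage reading of the scale is linear: a response x corresponds to 100·(x − 1)/4 %, so answers 2, 3 and 4 map to 25%, 50% and 75%. The sketch below applies this mapping and also computes the share of participants choosing each answer, the form used in the answer-distribution tables (Tables 2 and 3). The function names and example responses are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def likert_to_percent(x: int, low: int = 1, high: int = 5) -> float:
    """Map a Likert response linearly so that `low` -> 0% and `high` -> 100%."""
    if not low <= x <= high:
        raise ValueError(f"response {x} outside {low}..{high}")
    return 100.0 * (x - low) / (high - low)


def answer_distribution(responses: Sequence[int], low: int = 1, high: int = 5) -> Dict[int, float]:
    """Percentage of respondents giving each answer, rounded to one decimal."""
    counts = Counter(responses)
    n = len(responses)
    return {a: round(100.0 * counts.get(a, 0) / n, 1) for a in range(low, high + 1)}


print([likert_to_percent(x) for x in range(1, 6)])  # [0.0, 25.0, 50.0, 75.0, 100.0]
```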

Table 2: Evaluation of the manipulation's realism.

| Question | 1 (Lowest) | 2 | 3 | 4 | 5 (Highest) |
|---|---|---|---|---|---|
| Q1 | 26.1% | 47.9% | 17.4% | 8.6% | 0% |
| Q2 | 0% | 4.3% | 21.7% | 26.2% | 47.8% |
| Q3 | 0% | 0% | 4.3% | 39.1% | 56.6% |
| Q4 | 97.9% | 2.1% | 0% | 0% | 0% |
| Q5 | 0% | 3.4% | 11.4% | 64.8% | 20.4% |

Table 3: Reliability of the VR Training Framework.

| Question | 1 (Lowest) | 2 | 3 | 4 | 5 (Highest) |
|---|---|---|---|---|---|
| Q6 | 0% | 0% | 0% | 41.7% | 58.3% |
| Q7 | 0% | 0% | 4.1% | 29.2% | 66.7% |
| Q8 | 0% | 0% | 20.8% | 37.5% | 41.7% |
| Q9 | 0% | 0% | 15.4% | 30.4% | 54.2% |
| Q10 | 0% | 0% | 0% | 58.3% | 41.7% |
| Q11 | 0% | 0% | 25% | 41.7% | 33.3% |
| Q12 | 0% | 8.7% | 8.7% | 26.1% | 56.5% |
| Q13 | 0% | 4.2% | 8.3% | 20.8% | 66.7% |

To ensure that the questionnaire produces accurate and reliable results, its reliability must be tested. Therefore, Cronbach's alpha (Bland and Altman, 1997) was computed, in particular for questions Q11, Q12 and Q13. Cronbach's alpha is 0.749 between Q11 and Q12 and 0.879 between Q12 and Q13. Both values exceed the commonly used threshold of 0.7, indicating good reliability and internal consistency.
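For reference, Cronbach's alpha for k items is α = k/(k − 1) · (1 − Σᵢ σᵢ² / σ_T²), where σᵢ² is the variance of item i and σ_T² is the variance of each participant's total score. A minimal standard-library sketch follows; the source does not say which software was used, and the example responses are made up.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a list of items, each a list of scores per participant.

    Sample variances (n - 1 denominator) are used consistently for items and totals.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per participant")
    totals = [sum(scores) for scores in zip(*items)]  # total score per participant
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


# Two made-up items answered by four participants.
print(round(cronbach_alpha([[2, 3, 3, 4], [2, 4, 3, 5]]), 3))  # 0.923
```

With two items, as in the pairwise values reported above, k/(k − 1) equals 2, so alpha is driven entirely by how much the two items co-vary relative to their own variances.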

Both the training framework and the robotic manipulation yielded positive outcomes. As shown in Table 2, most participants had little or no prior experience with Virtual Reality technologies. Nevertheless, they found the SenseGloves and the VR headset easy to use, and 97.9% of them did not experience any nausea or motion sickness. The force and haptic feedback during interactions with the robot determines the realism of the manipulation, because it conveys the sense of touching and moving objects as in the real world. The majority of participants (85.2%) rated the force feedback with 4 or 5, indicating a highly realistic interaction with the robot.
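The 85.2% figure is the share of participants who answered 4 or 5, i.e. the sum of the last two columns of the Q5 row in Table 2 (64.8% + 20.4%). A small helper for this top-two-box share, with a hypothetical name, reproduces it:

```python
from typing import Dict


def top_two_box(distribution: Dict[int, float], high: int = 5) -> float:
    """Share (in %) of answers falling on the two highest scale points."""
    return round(distribution.get(high, 0.0) + distribution.get(high - 1, 0.0), 1)


q5 = {1: 0.0, 2: 3.4, 3: 11.4, 4: 64.8, 5: 20.4}  # Q5 row of Table 2
print(top_two_box(q5))  # 85.2
```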

Regarding the competence and impact of the proposed framework, the majority of participants rated the training workflow at 75% or higher, i.e., they scored the corresponding questions with 4 or 5, as shown in Table 3. As the descriptive statistics in Table 4 show, all mean values regarding the competency of the framework are above 3, suggesting that the proposed VR training mechanism is efficient. In particular, apart from Q5, all variables have a score above 4. A possible reason is that SenseGlove may not provide feedback effective enough to create the ultimate manipulation experience in VR. Nevertheless, the 3.83 mean score of the SenseGlove is sufficiently high to provide adequate training to inexperienced users, as the mean value of Q10 illustrates. Consequently, all participants found our framework easy to understand and reliable enough to provide sufficient training to users lacking robotic expertise. They also rated both the robot task and the recording procedure as intuitive for effective training.
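Table 4 reports count, mean, standard deviation, minimum, quartiles and maximum per question, the same columns produced by pandas' `DataFrame.describe()`. The standard-library sketch below computes this summary for one item, assuming a sample standard deviation and linearly interpolated quartiles (the pandas defaults); `describe` here is a hypothetical helper and the responses are made up.

```python
from statistics import mean, quantiles, stdev
from typing import Dict, Sequence


def describe(responses: Sequence[float]) -> Dict[str, float]:
    """Summary statistics in the layout of Table 4 (needs at least two responses)."""
    # method="inclusive" interpolates linearly between order statistics, like pandas.
    q1, median, q3 = quantiles(responses, n=4, method="inclusive")
    return {
        "count": len(responses),
        "mean": mean(responses),
        "std": stdev(responses),  # sample standard deviation (n - 1 denominator)
        "min": min(responses),
        "25%": q1,
        "50%": median,
        "75%": q3,
        "max": max(responses),
    }


print(describe([1, 2, 3, 4])["25%"])  # 1.75
```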

Additionally, the Pearson correlation (Sedgwick, 2012) was used to examine how participants' previous experience with VR and with Kuka robot manipulation relates to the reliability of the proposed framework. The Pearson correlation between past VR experience (Q1) and the competence of the robotic movement (Q7) is 0.36, indicating only a weak relationship between them. Moreover, the Pearson correlation between past expertise with the Kuka robot and the training framework is 0.0017, indicating that these variables are practically uncorrelated.
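Pearson's r divides the covariance of two variables by the product of their standard deviations: r = Σ(xᵢ − x̄)(yᵢ − ȳ) / √(Σ(xᵢ − x̄)² · Σ(yᵢ − ȳ)²). It captures only linear association, so a value near 0 indicates the absence of a linear relationship rather than strict statistical independence. A minimal sketch with made-up responses follows; on Python 3.10+ the standard library's `statistics.correlation` computes the same quantity.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Made-up answers of four participants to two questions.
print(round(pearson_r([1, 2, 3, 4], [1, 3, 2, 4]), 3))  # 0.8
```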

The independence of these variables is vital for the

realism and particularly for the impact of our frame-

work since otherwise the efficiency of the proposed

framework will be biased by users who already ex-

perienced similar technologies. On the contrary, the

primary objective is to train inexperienced users to

manipulate Kuka Robot by utilizing VR technologies.

Therefore, it is concluded that the prior knowledge

and manipulation of Kuka Robot and the prior ex-

perience with VR do not affect the intuition of the

Leveraging VR and Force-Haptic Feedback for an Effective Training with Robots

657

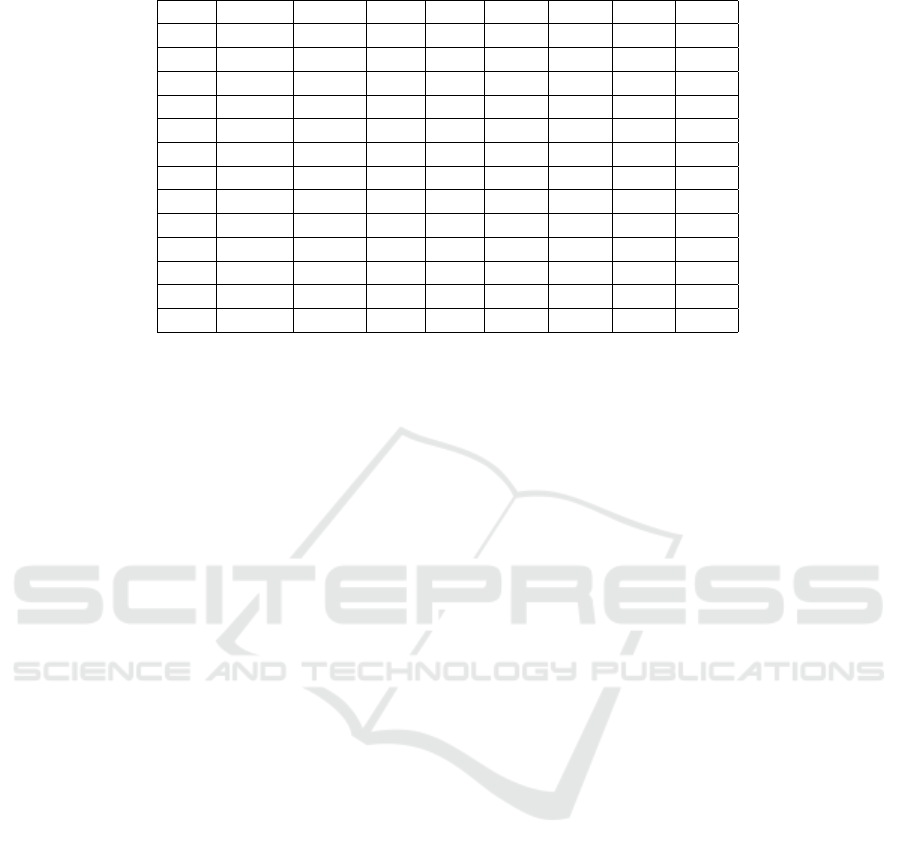

Table 4: Descriptive Statistics.

Count Mean std Min 25% 50% 75% Max

Q1 25 2.16 0.96 1 1.75 2 3 4

Q2 25 1.79 1.35 1 1 1 2 5

Q3 25 4 0.83 2 3.75 4 5 5

Q4 25 4.54 0.58 3 4 5 5 5

Q5 25 1.04 0.20 1 1 1 1 2

Q6 25 4.41 0.65 3 4 4.5 5 5

Q7 25 4.62 0.57 3 4 5 5 5

Q8 25 4.21 0.78 3 4 4 5 5

Q9 25 4.33 0.86 2 4 4 5 5

Q10 25 4.16 0.63 3 4 4 5 5

Q11 25 4.08 0.77 3 3.75 4 5 5

Q12 25 4.29 0.95 2 4 5 5 5

Q13 25 4.5 0.83 2 4 5 5 5

framework, hence the proposed training framework is

highly valuable and efficient. Moreover, the Pearson

Correlation comparing the reliability of the frame-

work (Q10) and its impact on a safer human-robot

collaboration environment (Q11) stands at 0.67 sug-

gesting their notable relation between. Accordingly,

apart from effective training, the proposed framework

additionally results in a more secure human-robot col-

laboration scheme.

Consequently, this mechanism efficiently simulates the real movement and manipulation of the robot, thereby providing sufficient and secure training to new users in a robotic environment.

4 CONCLUSION AND FUTURE WORK

The presented framework provides an innovative training methodology, implemented in VR, that enables users to interact naturally with robots, to analyze and understand their kinematics and joint limitations, and to avoid possible risks when operating real-world robots. It therefore contributes to the effective training of employees in human-robot collaboration schemes and promotes caution when the actual task has to be performed on the real robot.

Our experimental work resulted in a highly realistic VR environment for the insect farming domain, including a 7-joint Kuka IIWA robot, and provided a realistic simulation of hand interaction with, and manipulation of, the robot. Therefore, the proposed framework, which combines VR with force and haptic feedback technologies, achieves effective and safe training for non-expert employees by overcoming the potential risks of handling highly expensive equipment, i.e., robots. Additionally, regular use of this mechanism is expected to raise the confidence and safety of employees working with robots while reducing uncertainty when the real robot task takes place. Consequently, it is a vital component that allows new robot users, once they have received fundamental training in VR, to learn how to program a robot task safely according to their needs.

Most virtual training methods rely on the controllers supplied with the corresponding VR headset, which limits hands-on experience and realistic interaction within VR and therefore fails to simulate reality effectively. In contrast, our proposed framework, which utilizes force and haptic feedback gloves, overcomes this limitation and makes the user's experience more realistic. In the literature, VR force-feedback gloves have been integrated for training purposes in only a few cases, primarily in the healthcare domain. Our training framework, by comparison, acts as an educational tool for inexperienced users (providing the ability to learn the kinematics and constraints of robotic joints) and is an innovative approach to programming robotic tasks that could be further expanded into a digital twin of an actual robot.

For future work, robotic joint data, together with their timing characteristics, will be gathered from the real robot and transferred to the VR robotic digital twin in order to provide a more realistic experience. Once a realistic digital twin of the Kuka robot has been constructed, the proposed framework will further be utilized by non-technical workers to program the actual robot. Specifically, the ultimate goal is for the robot’s movement in the Virtual Environment to correspond to the movement of the real robot. Using the VR training room, the user can analyze the recorded movements of the robot and determine the optimal trajectory. The user can then transfer the corresponding movement to the physical robot by pressing a designated VR button. This process can enhance existing human-robot collaboration schemes in terms of human safety and robot integrity (avoiding damage).
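The planned pipeline (record timestamped joint data from the real robot, replay it in the digital twin, review it, then transfer it to the physical robot) could be organized roughly as follows. This is a hypothetical Python sketch, not the authors' implementation: the `JointSample` record, the `interpolate` and `limit_violations` helpers, and the joint limits are all assumptions. Linear interpolation is one simple way to resample recorded joint angles at the headset's frame times, and the limit check illustrates a safety gate before a motion is sent to the physical robot. The limits are placeholders modelled on published LBR iiwa axis ranges and would have to be checked against the datasheet of the specific robot.

```python
"""Hypothetical sketch: timestamped joint samples for a 7-joint arm,
linear interpolation for replay in a VR digital twin, and a joint-limit
check before a recorded motion would be sent to the physical robot.

JOINT_LIMITS_DEG are PLACEHOLDERS modelled on published LBR iiwa axis
ranges; verify them against the datasheet of the actual robot.
"""
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Placeholder symmetric limits (degrees) for joints A1..A7.
JOINT_LIMITS_DEG: Tuple[float, ...] = (170, 120, 170, 120, 170, 120, 175)


@dataclass(frozen=True)
class JointSample:
    t: float              # seconds since the start of the recording
    q: Tuple[float, ...]  # joint angles in degrees, one per joint


def interpolate(samples: Sequence[JointSample], t: float) -> Tuple[float, ...]:
    """Joint angles at time t, linearly interpolated between recorded samples.

    Assumes samples are sorted by time; times outside the recording are
    clamped to the first or last sample.
    """
    times = [s.t for s in samples]
    if t <= times[0]:
        return samples[0].q
    if t >= times[-1]:
        return samples[-1].q
    i = bisect_right(times, t)  # guarantees samples[i-1].t <= t < samples[i].t
    a, b = samples[i - 1], samples[i]
    w = (t - a.t) / (b.t - a.t)
    return tuple(qa + w * (qb - qa) for qa, qb in zip(a.q, b.q))


def limit_violations(samples: Sequence[JointSample]) -> List[Tuple[float, int]]:
    """(time, zero-based joint index) pairs where an angle exceeds its limit."""
    return [
        (s.t, j)
        for s in samples
        for j, (angle, limit) in enumerate(zip(s.q, JOINT_LIMITS_DEG))
        if abs(angle) > limit
    ]


if __name__ == "__main__":
    # Hypothetical two-second recording followed by an out-of-range sample.
    rec = [
        JointSample(0.0, (0, 0, 0, 0, 0, 0, 0)),
        JointSample(2.0, (10, 20, 0, -30, 0, 40, 0)),
        JointSample(3.0, (0, 125, 0, 0, 0, 0, 0)),
    ]
    print(interpolate(rec, 1.0))   # midpoint of the first two samples
    print(limit_violations(rec))   # flags joint A2 at t = 3.0 s
```

In such a design, the VR transfer button would only be enabled when `limit_violations` returns an empty list, so the trajectory reviewed in the twin is checked before it ever reaches the physical robot.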

ACKNOWLEDGMENTS

The work presented in this paper was supported by the European Commission under contract H2020 - 101016953 CoRoSect.

REFERENCES

Angelov, V., Petkov, E., Shipkovenski, G., and Kalushkov, T. (2020). Modern virtual reality headsets. In 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), pages 1–5. IEEE.

Aoun, A., Ilinca, A., Ghandour, M., and Ibrahim, H. (2021). A review of Industry 4.0 characteristics and challenges, with potential improvements using blockchain technology. Computers & Industrial Engineering, 162:107746.

Bauer, A., Wollherr, D., and Buss, M. (2008). Human–robot collaboration: a survey. International Journal of Humanoid Robotics, 5(01):47–66.

Bland, J. M. and Altman, D. G. (1997). Statistics notes: Cronbach’s alpha. BMJ, 314(7080):572.

Bowman, D. A. and McMahan, R. P. (2007). Virtual reality: How much immersion is enough? Computer, 40(7):36–43.

Bragança, S., Costa, E., Castellucci, I., and Arezes, P. M. (2019). A brief overview of the use of collaborative robots in Industry 4.0: human role and safety. Occupational and Environmental Safety and Health, pages 641–650.

Colgate, J. E., Wannasuphoprasit, W., and Peshkin, M. A. (1996). Cobots: Robots for collaboration with human operators. In ASME International Mechanical Engineering Congress and Exposition, volume 15281, pages 433–439. American Society of Mechanical Engineers.

Dascal, J., Reid, M., IsHak, W. W., Spiegel, B., Recacho, J., Rosen, B., and Danovitch, I. (2017). Virtual reality and medical inpatients: A systematic review of randomized, controlled trials. Innov. Clin. Neurosci., 14(1-2):14–21.

Garcia, C. A., Naranjo, J. E., Ortiz, A., and Garcia, M. V. (2019). An approach of virtual reality environment for technicians training in upstream sector. IFAC-PapersOnLine, 52(9):285–291.

Haas, J. K. (2014). A history of the Unity game engine.

Hochhalter, J., Leser, W. P., Newman, J. A., Gupta, V. K., Yamakov, V., Cornell, S. R., Williard, S. A., and Heber, G. (2014). Coupling damage-sensing particles to the digitial twin concept. NASA Technical Reports Server (NTRS).

HTC (2011). HTC Vive. Accessed on April 2023.

Kim, M., Jeon, C., and Kim, J. (2017). A study on immersion and presence of a portable hand haptic system for immersive virtual reality. Sensors, 17(5).

Kusiak, A. (2017). Smart manufacturing must embrace big data. Nature, 544:23–25.

Laith K Hasan, Aryan Haratian, M. K. I. K. B. A. E. W. and Petrigliano, F. A. (2021). Virtual reality in orthopedic surgery training. Advances in Medical Education and Practice, 12:1295–1301.

Lasi, H., Fettke, P., Kemper, H.-G., Feld, T., and Hoffmann, M. (2014). Industry 4.0. Bus. Inf. Syst. Eng., 6(4):239–242.

Magnus Sundgot Schneider, K. O. S., Kurz, K. D., Dalen, I., Ospel, J., Goyal, M., Kurz, M. W., and Fjetland, L. (2023). Metric based virtual simulation training for endovascular thrombectomy improves interventional neuroradiologists’ simulator performance. Interventional Neuroradiology, 29(5):577–582.

Mantovani, F., Castelnuovo, G., Gaggioli, A., and Riva, G. (2003). Virtual reality training for health-care professionals. Cyberpsychology & behavior: the impact of the Internet, multimedia and virtual reality on behavior and society, 6:389–95.

Martinez-Hernandez, U. and Al, G. A. (2022). Wearable fingertip with touch, sliding and vibration feedback for immersive virtual reality. In 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pages 293–298.

Matheson, E., Minto, R., Zampieri, E. G., Faccio, M., and Rosati, G. (2019). Human–robot collaboration in manufacturing applications: A review. Robotics, 8(4):100.

Oyekan, J. O., Hutabarat, W., Tiwari, A., Grech, R., Aung, M. H., Mariani, M. P., López-Dávalos, L., Ricaud, T., Singh, S., and Dupuis, C. (2019). The effectiveness of virtual environments in developing collaborative strategies between industrial robots and humans. Robotics and Computer-Integrated Manufacturing, 55:41–54.

O’Connor, S. (2019). Virtual reality and avatars in health care. Clinical Nursing Research, 28(5):523–528.

Pérez, L., Diez, E., Usamentiaga, R., and García, D. F. (2019). Industrial robot control and operator training using virtual reality interfaces. Computers in Industry, 109:114–120.

Qi, Q. and Tao, F. (2018). Digital twin and big data towards smart manufacturing and Industry 4.0: 360 degree comparison. IEEE Access, 6:3585–3593.

Robla-Gómez, S., Becerra, V. M., Llata, J. R., González-Sarabia, E., Torre-Ferrero, C., and Pérez-Oria, J. (2017). Working together: A review on safe human-robot collaboration in industrial environments. IEEE Access, 5:26754–26773.

Salas, E., Wilson, K. A., Burke, C. S., and Priest, H. A. (2005). Using simulation-based training to improve patient safety: what does it take? Jt. Comm. J. Qual. Patient Saf., 31(7):363–371.

Samsung (2021). Samsung. Accessed on October 2023.

Sedgwick, P. (2012). Pearson’s correlation coefficient. BMJ, 345.

SenseGlove (2018). SenseGlove. Accessed on April 2023.

Tao, F., Zhang, H., Liu, A., and Nee, A. Y. C. (2019). Digital twin in industry: State-of-the-art. IEEE Transactions on Industrial Informatics, 15(4):2405–2415.

Valve (2019). Valve. Accessed on October 2023.

Weiss, A., Wortmeier, A.-K., and Kubicek, B. (2021). Cobots in Industry 4.0: A roadmap for future practice studies on human–robot collaboration. IEEE Transactions on Human-Machine Systems, 51(4):335–345.

Whitmire, E., Benko, H., Holz, C., Ofek, E., and Sinclair, M. (2018). Haptic revolver: Touch, shear, texture, and shape rendering on a reconfigurable virtual reality controller. pages 1–12.

Yao, R., Heath, T., Davies, A., Forsyth, T., Mitchell, N., and Hoberman, P. (2014). Oculus VR best practices guide. Oculus VR, 4:27–35.

Yildirim, C. (2020). Don’t make me sick: investigating the incidence of cybersickness in commercial virtual reality headsets. Virtual Reality, 24(2):231–239.
