Contextual Online Imitation Learning (COIL): Using Guide Policies in Reinforcement Learning

Alexander Hill, Marc Groefsema, Matthia Sabatelli, Raffaella Carloni∗ and Marco Grzegorczyk∗

Faculty of Science and Engineering, Bernoulli Institute for Mathematics, Computer Science and Artificial Intelligence,

University of Groningen, The Netherlands

Keywords:

Machine Learning.

Abstract:

This paper proposes a novel method of utilising guide policies in Reinforcement Learning problems: Contextual Online Imitation Learning (COIL). This paper demonstrates that COIL can offer improved performance

over both offline Imitation Learning methods such as Behavioral Cloning, and also Reinforcement Learning

algorithms such as Proximal Policy Optimisation which do not take advantage of existing guide policies. An

important characteristic of COIL is that it can effectively utilise guide policies that exhibit expert behavior in

only a strict subset of the state space, making it more flexible than classical methods of Imitation Learning.

This paper demonstrates that through using COIL, guide policies that achieve good performance in sub-tasks

can also be used to help Reinforcement Learning agents looking to solve more complex tasks. This is a sig-

nificant improvement in flexibility over traditional Imitation Learning methods. After introducing the theory

and motivation behind COIL, this paper tests the effectiveness of COIL on the task of mobile-robot navigation

in both a simulation and real-life lab experiments. In both settings, COIL gives stronger results than offline

Imitation Learning, Reinforcement Learning, and also the guide policy itself.

1 INTRODUCTION

Imitation Learning (IL) is a technique of solving Re-

inforcement Learning (RL) problems where instead

of directly training an agent using the rewards col-

lected through exploration of an environment, an ex-

pert guide policy provides the learning agent with a

set of demonstrations of the form $(s, \pi_{\text{guide}}(s))$ where $\pi_{\text{guide}}(s)$ is the action the guide policy takes in state $s$ (Halbert, 1984). The guide policy $\pi_{\text{guide}}$ is defined

as any mapping from states in the environment to

actions that exhibit the desired behavior. The agent

then tries to learn the optimal policy by imitating

the expert’s decisions. In recent years, research in the field of Imitation Learning has become very topical, with many new methods being developed such as Confidence-Aware Imitation Learning (Zhang et al., 2021), Coarse-to-Fine Imitation Learning (Edward, 2021), Jump-Start Reinforcement Learning (Uchendu et al., 2022), and Adversarially Robust Imitation Learning (Wang et al., 2022). Furthermore, applications of Imitation Learning are very broad, ranging from self-driving car software (Chen et al., 2019) and industrial robotics (Fang et al., 2019), to financial applications such as stock market trading (Liu et al., 2020). In general, Imitation Learning is a powerful technique when one has access to an expert guide policy able to show the desired behaviour in the environment. Influential examples of this methodology are Behavioral Cloning (Pomerleau, 1998), DAgger (Ross et al., 2011), and Generative Adversarial Imitation Learning (Ho and Ermon, 2016).

∗ These authors contributed equally to this work and share last authorship.

Imitation Learning methods can utilise expert

guide policies in many different ways, such as esti-

mating the underlying reward function of a task di-

rectly from guide policy demonstrations such as in

Inverse Reinforcement Learning, through minimiz-

ing a carefully crafted loss function representing the

relative distance between the learning policy and the

guide policy, or even through using the guide policy

for a more sophisticated sampling of states for the

learning agent (Uchendu et al., 2022). Contextual Online Imitation Learning (COIL) utilises the guide policy in a different way: the agent’s observations of the environment are modified to include the guide policy’s ‘suggestions’ for what action to take next. From here, the agent is able to learn complex relationships between the guide policy’s actions and the underlying state of the environment. This allows the agent to extract value from the guide policy on a state-by-state basis, which is fundamentally different to most current methods of Imitation Learning (Osa et al., 2018). In COIL, the agent can learn for itself exactly how and where in the environment it is advantageous to mimic the guide policy. This potentially allows researchers and engineers to use guide policies that only offer high rewards in a strict subset of states in the environment. Furthermore, COIL can be easily generalised to using multiple guide policies.

Hill, A., Groefsema, M., Sabatelli, M., Carloni, R. and Grzegorczyk, M.

Contextual Online Imitation Learning (COIL): Using Guide Policies in Reinforcement Learning.

DOI: 10.5220/0012312700003636

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Agents and Artificial Intelligence (ICAART 2024) - Volume 3, pages 178-185

ISBN: 978-989-758-680-4; ISSN: 2184-433X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

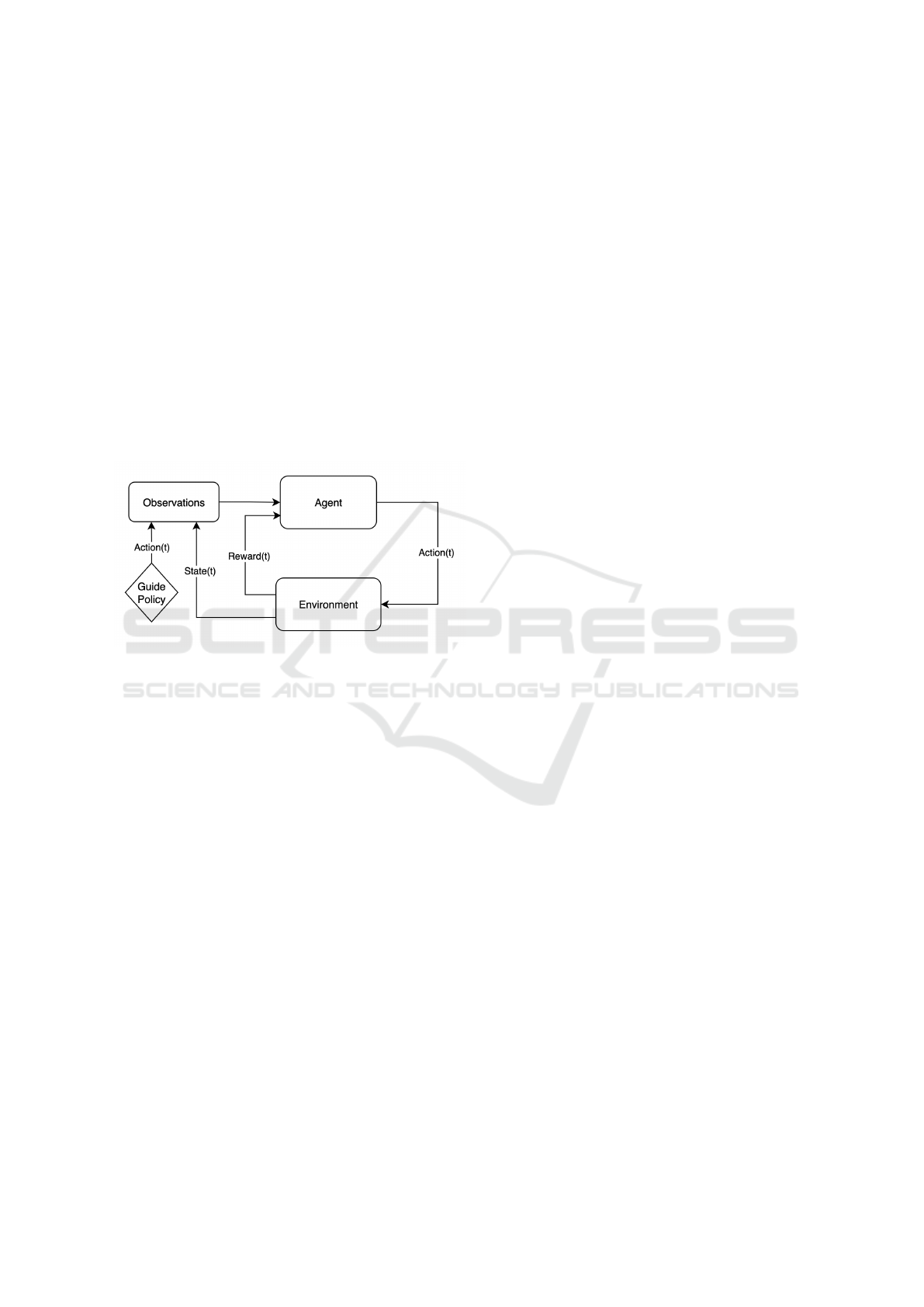

In COIL, at each time step t and state s during

training of the agent, the action of the guide policy $\pi_{\text{guide}}(s)$ is provided as an additional observation to

the agent, along with any other useful observations

that are given by the environment. This proposed

methodology is shown in Fig. 1.

Figure 1: Illustration of the Contextual Online Imitation

Learning (COIL) methodology.

Additionally, if we have a set of guide policies

$\pi_{\text{guide}_1}, \ldots, \pi_{\text{guide}_n}$, then we can generalise this framework to include the actions of all guide policies at

time step t as observations to the learning agent.
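The observation augmentation described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function name `coil_observation` and the two toy guide policies are hypothetical, and actions are assumed to be scalar.

```python
from typing import Callable, List, Sequence

# A guide policy maps a state (sequence of floats) to a scalar action.
GuidePolicy = Callable[[Sequence[float]], float]


def coil_observation(state: Sequence[float],
                     guide_policies: Sequence[GuidePolicy]) -> List[float]:
    """Return the state followed by each guide policy's suggested action."""
    suggestions = [float(guide(state)) for guide in guide_policies]
    return list(state) + suggestions


# Two hypothetical guide policies, used purely for illustration.
def steer_to_zero(state: Sequence[float]) -> float:
    return -state[0]


def do_nothing(state: Sequence[float]) -> float:
    return 0.0


# The learning agent would receive this augmented vector instead of the raw state.
obs = coil_observation([0.5, -1.0], [steer_to_zero, do_nothing])
```

With a single guide policy the list passed in has length one, which corresponds to the basic COIL setting; the agent's own Reinforcement Learning algorithm is left unchanged.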

Now that the agent is able to see what the guide

policy would do given the current context of the en-

vironment, it is able to learn through exploration how

best to use this information in order to maximise its

expected future reward. In other words, the agent is

able to learn for itself in which states within the en-

vironment it should ‘listen’ to the guide policy’s sug-

gestion, and in which states within the environment

it should ‘ignore’ the guide policy’s suggestion. This

methodology is therefore a form of contextual Imita-

tion Learning, as the agent is not directly encouraged

to follow the actions of the guide policy in all possi-

ble states. Furthermore, this method is online as it is

trained using continuous interaction with the environ-

ment. Therefore, we named this method Contextual

Online Imitation Learning.

It is important to note that COIL makes no explicit assumption about the type of Reinforcement Learning algorithm that is equipped with this additional observation. COIL can be used equally well with Value-based methods as with Policy-gradient methods.

A major advantage of COIL in comparison to

other methods of utilising guide policies is that there

is no requirement for the guide policy to be an expert

at all states within the learning environment. Even

if the guide policy is only able to exhibit success in

some strict subset of the total states within the envi-

ronment, the agent is able to learn this fact through

enough exploration of the environment, and then ap-

propriately use this information to its advantage. Ad-

ditionally, if the guide policy exhibits only moderate

success across all states in the environment, the agent

could also learn to fine-tune the actions of the guide

policy in order to maximise reward.

This method can therefore be seen from two per-

spectives. The first is that COIL allows us to take a

non-optimal hand-crafted guide policy, and use Rein-

forcement Learning methods to encode additional de-

sired behaviours into the policy via the reward func-

tion. The other perspective is that this method allows

us to utilise existing guide policies to aid the agent

during training, and in the process the agent can learn

to use the suggested actions of the guide policy in

whatever way maximises the total expected reward.

In COIL, there is no explicit penalty for deviating from the guide policy. This gives the Reinforcement Learning agent complete flexibility to use the guide policy in complex and situational ways.

2 CONTEXTUAL ONLINE

IMITATION LEARNING

In many tasks in Reinforcement Learning there are

multiple competing objectives that determine the

overall success of a given policy. Let $\Omega_1, \ldots, \Omega_n$ be

the set of objectives that we want the agent to learn

during training, where n is the total number of objec-

tives.

Then let $\Omega_I$ be the set of objectives for which the guide policy is capable of achieving near-optimal performance. This guide policy could be hand-crafted or extracted from data via Imitation Learning. We have that $I \subset \{1, \ldots, n\}$.

Now, we shall denote the part of the reward function associated with the objective $\Omega_i$ at time step $t$ as $r_{\Omega_i}(s_t, a_t)$ where $s_t$ is the state at time $t$ and $a_t$ is the action at time $t$. Then, the overall reward function taking into account each objective is defined as:

$$r(s_t, a_t) = \sum_{i=1}^{n} C_i \cdot r_{\Omega_i}(s_t, a_t) \tag{1}$$

and additionally the reward function for which the guide policy achieves near-optimal performance is given by

$$r_{\Omega_I}(s_t, a_t) = \sum_{i \in I} C_i \cdot r_{\Omega_i}(s_t, a_t) \tag{2}$$

where $C_i$ is the coefficient which determines the priority of objective $\Omega_i$. Some objectives are more important than others, therefore these coefficients are very important for encoding the desired behavior for the agent to learn.
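As a small illustration of Equations (1) and (2), the sketch below computes the weighted reward over all objectives and over a subset $I$. The function name, the example values, and the use of 0-based indices are assumptions made for this example only.

```python
from typing import Iterable, Optional, Sequence


def weighted_reward(objective_rewards: Sequence[float],
                    coefficients: Sequence[float],
                    indices: Optional[Iterable[int]] = None) -> float:
    """Sum C_i * r_i over the given indices (all objectives if None)."""
    if len(objective_rewards) != len(coefficients):
        raise ValueError("need exactly one coefficient per objective")
    if indices is None:
        indices = range(len(objective_rewards))
    return sum(coefficients[i] * objective_rewards[i] for i in indices)


# Hypothetical per-objective rewards and priority coefficients (0-based indices).
rewards = [1.0, -2.0, 0.5]
coeffs = [1.0, 0.5, 2.0]

total = weighted_reward(rewards, coeffs)                  # Equation (1)
guide_part = weighted_reward(rewards, coeffs, {0, 2})     # Equation (2) with I = {0, 2}
```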

So far, the policy of the agent π has been a func-

tion of the current state s and action a. In practice,

the state is represented by a finite dimensional vector

of data points $s = \{s_1, \ldots, s_m\}$ which gives the agent as much information about the context of the environment as possible. Therefore, we have

$$\pi(s, a) = \pi(s_1, \ldots, s_m, a) \tag{3}$$

and in COIL we also provide the action taken by the

guide policy $\pi_{\text{guide}}$ in that same state:

$$\pi(s, a) = \pi(s_1, \ldots, s_m, \pi_{\text{guide}}(s), a). \tag{4}$$

Many modern policy-based Reinforcement Learn-

ing algorithms use neural networks to model the pol-

icy of the agent. A result of the universal approxi-

mation theorem is that neural networks are capable of

approximating any continuous function given enough

units within the hidden layers (Hornik et al., 1989).

It is consequently possible that a neural network with

sufficient training can learn the simple rule of ‘copy-

ing’ a single input parameter:

$$\pi(s, a) = \pi(s_1, \ldots, s_m, \pi_{\text{guide}}(s), a) \approx \pi_{\text{guide}}(s) \tag{5}$$

where this approximation can become arbitrarily accurate (Hornik et al., 1989). In this case the policy of the agent trained via COIL $\pi_{\text{COIL}}$ is capable of achieving at least the same performance as the guide policy $\pi_{\text{guide}}$. However, this result is not guaranteed as in

many cases training data can be sparse or access to an

adequate training environment can be limited. If Q-

learning is used, then it is guaranteed that if the guide

policy is not the optimal policy then the COIL agent

will surpass the guide policy in expected reward given

sufficient exploration of the environment. This is a

result of Watkins’ proof of Q-learning convergence in

1992 (Watkins and Dayan, 1992).

Following this, it is interesting to investigate

whether the policy learned via COIL can be shown

to achieve better results than the policy learned via

Reinforcement Learning methods that do not utilise

the guide policy. The success of COIL is likely de-

pendent on the environment, the task, and the quality

of the guide policy utilised. Later in this paper we

will apply COIL to various applications and clearly

demonstrate the capabilities of the new method.

3 EXPERIMENTAL SET UP

3.1 Simulation

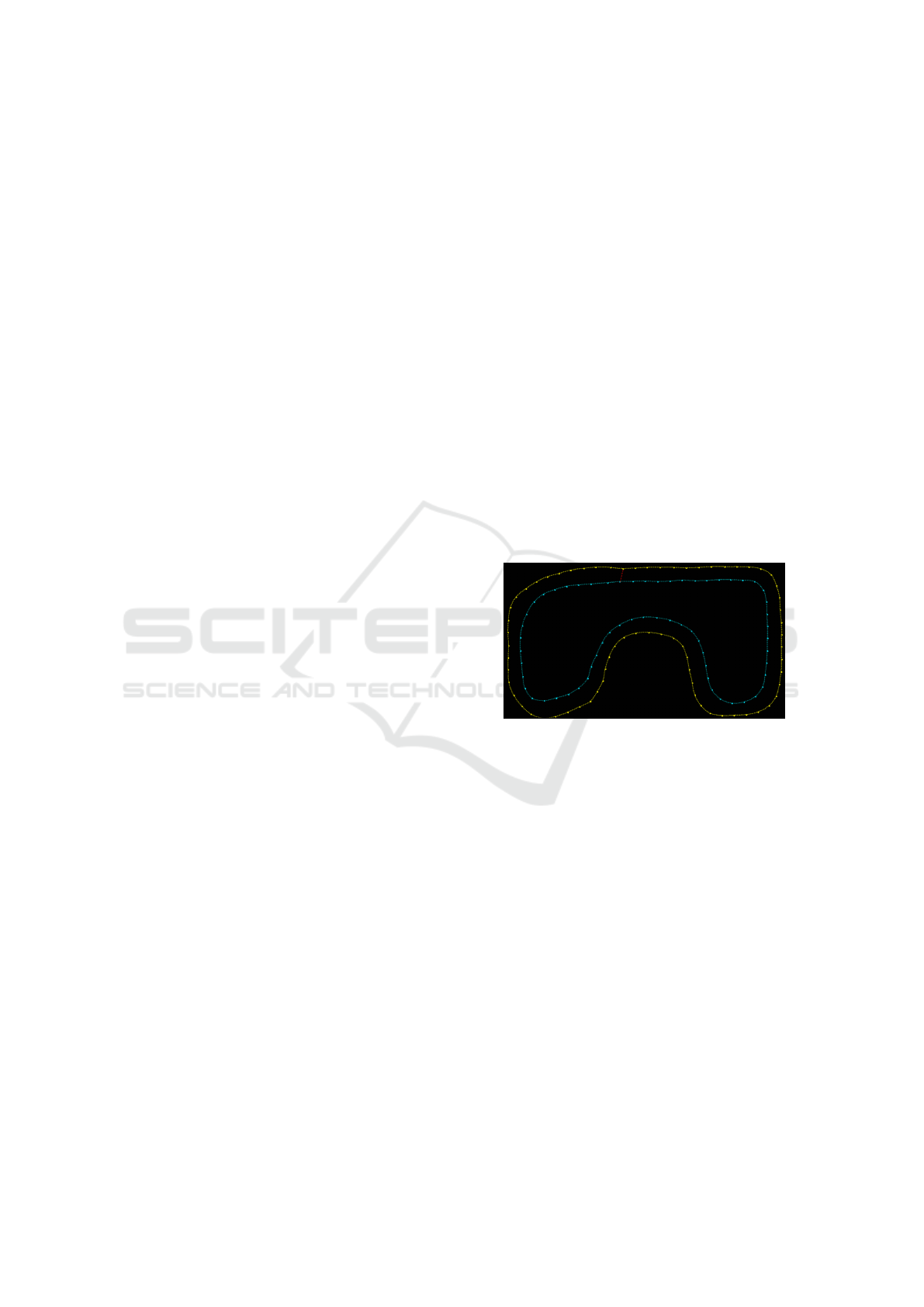

A mobile robot simulation was designed in Python3

using the package Pygame in order to test the effec-

tiveness of COIL. In the simulation, the car is able

to drive around on a 2D plane where its movement

is governed by a dynamic model referred to as a bi-

cycle model. Within the simulation, left cones and

right cones specify the sides of a track for the car to

drive through. An example of such a track can be

seen in Fig. 2.

Figure 2: Image of the mobile robot simulation programmed using Python.

In order to turn this simulation into a Reinforcement Learning problem, an action set A, an observation set O, and a reward function R must be decided.
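For readers unfamiliar with bicycle models, the following is a minimal sketch of one update step of the common kinematic variant. It is not taken from the simulation's source code: the wheelbase, time step, speed, and the choice of a kinematic rather than a fully dynamic formulation are all assumptions made for illustration.

```python
import math


def bicycle_step(x, y, heading, speed, steering_deg, wheelbase=1.0, dt=0.1):
    """Advance a kinematic bicycle model by one time step.

    x, y         : position of the rear axle
    heading      : orientation of the car in radians
    speed        : forward speed
    steering_deg : steering angle of the front wheel in degrees
    wheelbase, dt: hypothetical values chosen for this sketch
    """
    steering = math.radians(steering_deg)
    x_new = x + speed * math.cos(heading) * dt
    y_new = y + speed * math.sin(heading) * dt
    heading_new = heading + (speed / wheelbase) * math.tan(steering) * dt
    return x_new, y_new, heading_new


# Driving straight ahead leaves the heading unchanged.
straight = bicycle_step(0.0, 0.0, 0.0, speed=2.0, steering_deg=0.0)
# A positive steering angle increases the heading in this sign convention.
turning = bicycle_step(0.0, 0.0, 0.0, speed=2.0, steering_deg=20.0)
```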

To construct the observation, a cubic spline was

first applied to both the left side and right side of the

car’s track. This cubic spline acts as a boundary es-

timation for each side of the track. This cubic spline

boundary estimation can be seen in Fig. 2 as grey dotted lines between detected cones. For more information on cubic splines the reader is directed to Durrleman and Simon’s paper on the topic (Durrleman and Simon, 1989).

Finally, to turn the boundary estimates into a

fixed-size observation for the agent, we can take n

equidistant sample points along each side of the track.

For this practical implementation n = 5 was an ap-

propriate choice given the computational constraints.

Therefore, we end up with 10 sample points in total, each of which comprises two pieces of information: the distance to the car $r$, and the relative angle of the sample point with respect to the car axis, $\theta$. These 20 values are what we shall use as our observation to the agent, thus we have

$$O = \{r_1, \theta_1, \ldots, r_{10}, \theta_{10}\}$$

where the first five (r, θ) pairs represent the boundary

sample points on the left side of the track, and the last


five (r, θ) pairs represent the boundary sample points

on the right side of the track.
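The construction of this 20-value observation can be sketched as follows, assuming the boundary sample points are already available as Cartesian coordinates. The function names are hypothetical, and the use of radians wrapped to $[-\pi, \pi)$ for the relative angle is an assumption, since the units are not stated above.

```python
import math


def polar_features(car_x, car_y, car_heading, points):
    """Convert (x, y) sample points into a flat [r, theta, r, theta, ...] list."""
    features = []
    for px, py in points:
        dx, dy = px - car_x, py - car_y
        r = math.hypot(dx, dy)
        # Angle of the point relative to the car axis, wrapped to [-pi, pi).
        angle = math.atan2(dy, dx) - car_heading
        angle = (angle + math.pi) % (2 * math.pi) - math.pi
        features.extend([r, angle])
    return features


def build_observation(car_x, car_y, car_heading, left_points, right_points):
    """Left-boundary pairs first, then right-boundary pairs."""
    return (polar_features(car_x, car_y, car_heading, left_points)
            + polar_features(car_x, car_y, car_heading, right_points))


# Hypothetical straight track: five samples on each side of a car at the origin.
left = [(float(i), 1.0) for i in range(1, 6)]
right = [(float(i), -1.0) for i in range(1, 6)]
obs = build_observation(0.0, 0.0, 0.0, left, right)
```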

For the set of actions A we simply allow the agent

to decide the steering angle (in degrees) of the car in

the interval [−80, 80]. Thus

A = [−80, 80].

The mobile robot in our simulation has three main objectives: $\Omega_1$: Survival, $\Omega_2$: Speed, and $\Omega_3$: Smoothness. Four properties were chosen in order to encode these objectives within the reward function:

i) A positive reward for successfully progressing along the track ($\Omega_1$)

ii) A negative reward for crashing ($\Omega_1$)

iii) A positive reward for driving fast ($\Omega_2$)

iv) A negative reward for unstable driving ($\Omega_3$)

Using these four motivations, our reward function to impose these objectives can be:

• $r_{\Omega_1} = 70 \cdot \mathbb{1}\{\text{Car finishes track}\} - 100 \cdot \mathbb{1}\{\text{Car crashes}\} + 2.5 \cdot \mathbb{1}\{\text{New cone detected}\}$

• $r_{\Omega_2} = -0.8T \cdot \mathbb{1}\{\text{Car finishes track}\}$

• $r_{\Omega_3} = -\lVert \theta - \tilde{\theta} \rVert$

where $\mathbb{1}$ is the indicator function, $T$ is the time taken for the car to finish the track, $\theta$ is the direction the car is facing, and $\tilde{\theta}$ is an exponentially weighted moving average of previous car directions. The coefficients preceding the indicator functions in the reward function (70, -100, 2.5, -0.8) were decided in order to incentivise the agent to learn the desired behaviour with respect to the three competing objectives. Our final reward function for the entire task is then defined as

$$r = \sum_{i=1}^{3} r_{\Omega_i}.$$
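A minimal sketch of this reward as a Python function is given below. The function and argument names are hypothetical, the heading is treated as a scalar so that the norm reduces to an absolute value, and angle wrap-around is ignored for simplicity.

```python
def step_reward(finished, crashed, new_cone, lap_time, heading, heading_ewma):
    """Sum of the three objective rewards for a single time step.

    finished, crashed, new_cone : booleans acting as indicator functions
    lap_time                    : T, time taken to finish the track
    heading, heading_ewma       : current direction and its moving average
    """
    r_survival = 70.0 * finished - 100.0 * crashed + 2.5 * new_cone
    r_speed = -0.8 * lap_time * finished
    r_smooth = -abs(heading - heading_ewma)
    return r_survival + r_speed + r_smooth


# Detecting a new cone while driving perfectly smoothly.
cone_reward = step_reward(False, False, True, 0.0, 0.0, 0.0)
# Finishing the track after T = 50 time units: 70 - 0.8 * 50.
finish_reward = step_reward(True, False, False, 50.0, 0.0, 0.0)
```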

Following this, in order to use COIL with this simulation, we needed a guide policy $\pi_{\text{guide}}$. A guide policy was therefore designed that aims to keep the car as ‘safe’ as possible at all times. The guide policy first calculates an estimate for the centre path that goes through the middle of the track, and then takes the steering angle best suited to follow this path. We can call this guide policy the ‘safety policy’ as it encodes our safety objective $\Omega_1$.
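One simple way to realise a centre-following policy of this kind is sketched below. It is a stand-in rather than the guide policy used in the experiments: the centre path is approximated by the midpoint of one pair of left and right boundary samples, and the `lookahead` index, the sign convention (positive angles to the left), and the use of radians in the observation are all assumptions.

```python
import math


def safety_policy(observation, lookahead=2, max_steer_deg=80.0):
    """Steer towards the midpoint between matching left/right boundary samples.

    observation : [r_1, theta_1, ..., r_10, theta_10], left side first
    lookahead   : index of the sample pair to aim for (hypothetical choice)
    """
    pairs = [(observation[i], observation[i + 1])
             for i in range(0, len(observation), 2)]
    half = len(pairs) // 2
    r_left, th_left = pairs[:half][lookahead]
    r_right, th_right = pairs[half:][lookahead]

    # Convert both boundary samples to Cartesian coordinates in the car frame.
    lx, ly = r_left * math.cos(th_left), r_left * math.sin(th_left)
    rx, ry = r_right * math.cos(th_right), r_right * math.sin(th_right)

    # Aim at the midpoint, then clip to the allowed steering interval.
    mid_x, mid_y = (lx + rx) / 2.0, (ly + ry) / 2.0
    steer_deg = math.degrees(math.atan2(mid_y, mid_x))
    return max(-max_steer_deg, min(max_steer_deg, steer_deg))
```

On a straight, symmetric track the midpoint lies on the car axis, so the suggested steering angle is zero; large target angles are clipped to the action interval [−80, 80].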

This guide policy is a great example of a non-

expert guide policy as it achieves optimal perfor-

mance on only certain subsets of the state space. If the

track is straight, driving directly down the middle optimises all three objectives, but on corners it exhibits sub-optimal performance for the speed objective $\Omega_2$ as ‘corner-cutting’ can reduce the lap time.

Proximal Policy Optimisation (PPO), designed in

2017 by OpenAI (Schulman et al., 2020), is a power-

ful Reinforcement Learning technique which has seen

significant success in solving Reinforcement Learn-

ing tasks with greater stability and reliability than pre-

vious state of the art algorithms (Wang et al., 2020; Yu

et al., 2021). For this reason PPO (with an MLP network architecture for both the actor and critic networks, 2 hidden layers of 64 units each, the Adam optimizer, a learning rate of $10^{-5}$, and tanh activation function) was chosen as the Reinforcement Learning algorithm for our agent, and also when applying the COIL methodology.

To train the RL and COIL agents, 10 tracks were

created in the simulation. For training, 7 of the tracks were provided to the agent randomly during each episode of the simulation; this was to ensure the agent would not simply memorise a single track. An episode of our simulation is defined by 3 full laps around the randomly selected training track. The remaining 3 tracks were reserved for testing generalisation ability. An example of one of the simulated tracks can be seen in Fig. 3.

Figure 3: Example of one of the seven tracks used to train

the Reinforcement Learning agent to drive autonomously.

As a final mobile robot navigation method to compare with COIL, an Offline Imitation Learning (OIL) method is applied to mimic the behavior of the guide policy discussed previously; in particular, Behavioral Cloning is applied. As the behavior of the guide policy is simple, well-defined, consistent across the entire state space, and by design a non-expert policy, we found that it is sufficient in this case to use Behavioral Cloning over a more complex method. However, in future research more advanced Offline Imitation Learning algorithms could also be compared to COIL.

At each time step $t$, the observation (boundary estimate samples) $(r_1, \theta_1, \ldots, r_{10}, \theta_{10})$ is fed into a Machine Learning model, with the objective of predicting the action of the guide policy $\pi_{\text{guide}}(s)$. In order to train the model, a dataset of demonstrations was collected by the guide policy driving around each of the training tracks for 3 laps, and at each time step $t$ the sample

$$\big( (r_1(t), \theta_1(t), \ldots, r_{10}(t), \theta_{10}(t)),\ \pi_{\text{guide}}(s_t) \big)$$

was stored. In total, this generated a dataset with 16510 data points. The Machine Learning model that we used was a Random Forest Regression model. This was chosen as it offered a very low Mean Squared Error (0.01489) for this task compared to other methods we tested. For details on Random Forests the reader is directed to the original 2001 paper by Breiman (Breiman, 2001).
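The data collection and evaluation steps of Behavioral Cloning can be sketched independently of the regression model. The helper names below are hypothetical, and the toy guide policy and toy model merely stand in for the safety policy and the fitted Random Forest, which are not reproduced here.

```python
def collect_demonstrations(observations, guide_policy):
    """Pair every visited observation with the guide policy's action."""
    return [(list(obs), guide_policy(obs)) for obs in observations]


def mean_squared_error(model, dataset):
    """Average squared difference between model output and guide action."""
    errors = [(model(obs) - action) ** 2 for obs, action in dataset]
    return sum(errors) / len(errors)


# Toy stand-ins: a guide that doubles the first feature, and an imperfect model.
def toy_guide(obs):
    return 2.0 * obs[0]


def toy_model(obs):
    return 2.0 * obs[0] + 1.0


dataset = collect_demonstrations([[0.0, 1.0], [1.0, 0.0], [2.0, 3.0]], toy_guide)
mse = mean_squared_error(toy_model, dataset)
```

In practice `toy_model` would be replaced by the prediction function of whichever regressor is fitted to `dataset`.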

The Python code for this mobile robot simula-

tion can be found on Github at: https://github.com/

alex21347/Self Driving Car. Furthermore, the simulation was run using a 2.3 GHz Intel Core i5 CPU, an Intel Iris Plus Graphics card, and 8GB of RAM.

3.2 Mobile Robot

COIL was also tested on a real life mobile robot in

order to answer the following research questions:

i) Does COIL still work effectively with a mobile

robot with real sensors?

ii) How robust are the algorithms with regards to

transfer learning?

Transfer learning is the method of using a pre-trained

model on a different task than the one that was used

for training the model (Pan and Yang, 2009). Trans-

fer learning has become an important research topic

in recent years (Ruder et al., 2019; Wan et al., 2021;

Aslan et al., 2021), and in this paper COIL will also be tested for its robustness to transfer learning.

The robot can be seen in Fig. 4 below. On top

of the robot there is a single Lidar detector (RPLi-

dar A1M8) which is the only sensor available for au-

tonomous driving.

Figure 4: Photograph of the robot car used for comparing

autonomous driving algorithms.

Using only this robot and the Lidar sensor, the

self-driving algorithms trained in the simulation can

now be compared using the task of navigating a real-

life track made out of cones. This test track can be

seen in Fig. 5.

Figure 5: Photograph of the test track used for comparing

autonomous driving algorithms.

4 RESULTS

The COIL and RL agents were trained in the simu-

lation over 6 runs for 2 million time steps each, and

the maximum average episode reward¹ achieved during training was recorded for each run. The average

maximum reward for COIL and RL can be seen in

Table 1.

Table 1: Table of training results for Reinforcement Learning (RL) and Contextual Online Imitation Learning (COIL).

| Method | Mean Max Reward |
|---|---|
| COIL | 477.6 |
| RL | 414.2 |

It can be seen in Table 1 that COIL finds higher-reward policies during training, giving a 15.3% increase in the average max reward. A one-sided t-test on the mean maximum reward between COIL and RL gave $p = 0.034$, indicating a statistically significant improvement over regular RL during training.
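For reference, the relative increase follows directly from the two values in Table 1:

$$\frac{477.6 - 414.2}{414.2} = \frac{63.4}{414.2} \approx 0.153 = 15.3\%$$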

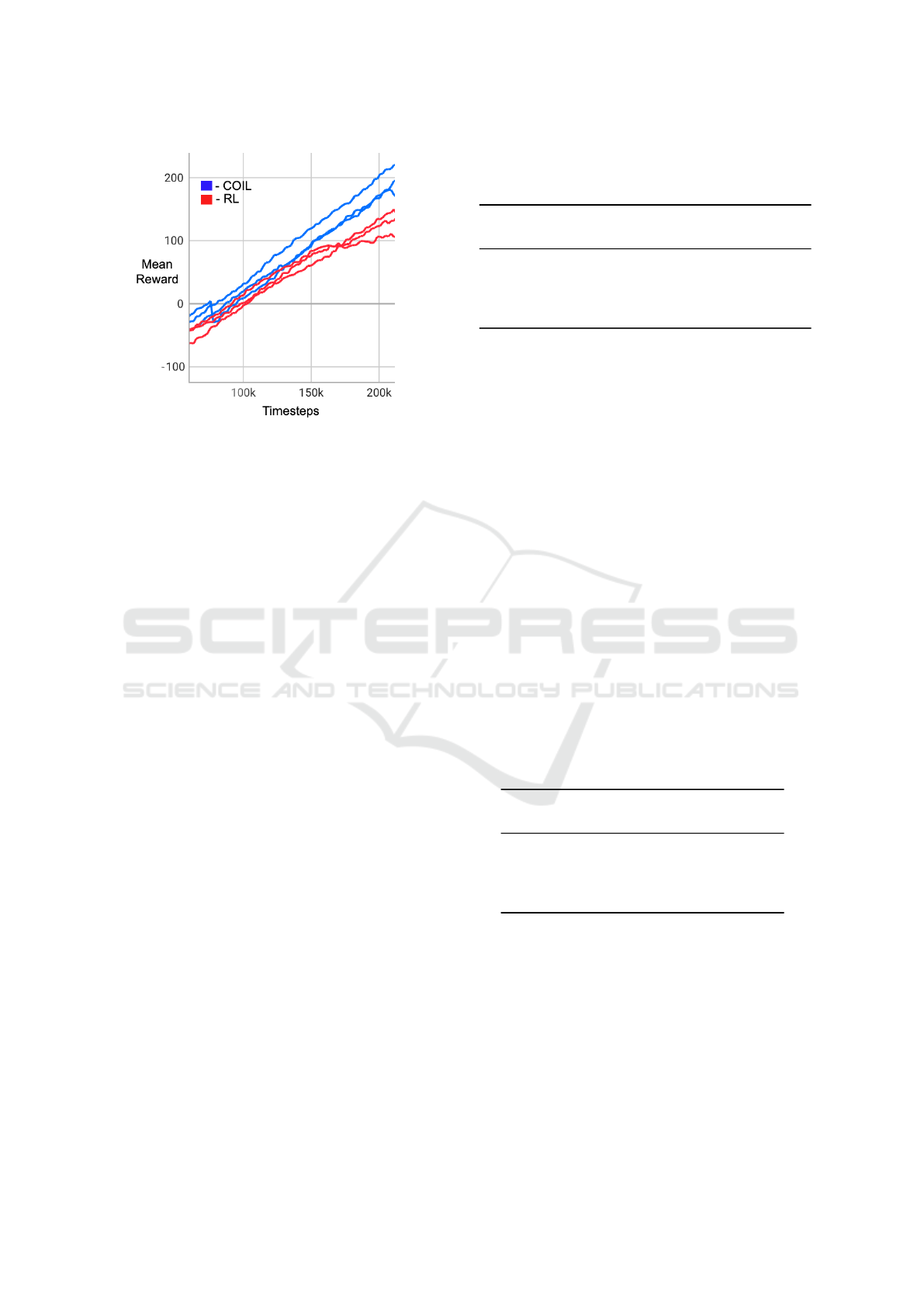

An additional trait that was noticed during training

was that the COIL agent consistently trained ‘quicker’

than the Reinforcement Learning agent, especially in

the earlier stages of training. This can be seen in

Fig. 6.

Figure 6: The first 200k timesteps of agents trained via PPO with and without COIL. COIL can be seen to offer faster increases in average reward in these early stages of training. For clarity, only 3 runs of each algorithm were selected for this figure.

Therefore, there is evidence that by providing the actions of the guide policy to the agent during training in COIL, we achieve both quicker training and a higher maximum reward. In the next step of our analysis, the different algorithms are tested on 3 unseen test tracks in order to investigate generalisation capabilities.

¹ Average over all training episodes in the given iteration.

Table 2 contains the results for the different poli-

cies on the unseen test tracks (10 trials with 3 laps

each). It should be noted that for COIL and RL, the

policies that were chosen for testing were the policies

that achieved the highest average episode reward dur-

ing training. Table 2 shows that COIL achieved the

highest mean reward of all four methods when tested

on the test tracks. This provides evidence that COIL

has strong generalisation ability, as its performance is

highly competitive both on the training tracks and the

test tracks. A one-sided t-test on the mean reward between COIL and RL gave $p = 4.79 \cdot 10^{-8}$, indicating

statistically significant improvement over regular RL

on the unseen test tracks. COIL is also the fastest of

the policies, achieving the lowest average lap time of

the four methods. However, COIL is also the least

smooth of the policies. This might be because the

agent has learned to prioritise speed over smoothness

in order to achieve the higher rewards.

The smoothness is calculated by taking the average magnitude of all of the $r_{\Omega_3}$ values generated during the tests. To recap, we have that

$$r_{\Omega_3} = -\lVert \theta - \tilde{\theta} \rVert$$

where $\theta$ is the direction the car is facing, and $\tilde{\theta}$ is an exponentially weighted moving average of previous car directions. Therefore, the larger the average magnitude of $r_{\Omega_3}$, the less smooth the journey is.

Lastly, we will use the results from the real-life robot to analyse how the four methods hold up in a more complex situation with a significant change in incoming sensor data.

Table 2: Table of results for four mobile robot driving algorithms tested in a Python simulation on 3 unseen test tracks. For each track, 30 laps were completed by each algorithm.

| Method | Mean Lap Time | Mean Smoothness | Mean Reward |
|---|---|---|---|
| Guide Policy | 48.81s | 0.121 | 609.4 |
| OIL | 48.82s | 0.061 | 620.8 |
| RL | 48.58s | 0.557 | 614.5 |
| COIL | 48.48s | 0.606 | 632.9 |

The reward function designed for the simulation

cannot be directly applied in real life because we lack

the necessary information to calculate the reward at

each time step. Instead, the lap time of the robot on the test track can be used, and by recording the steering angle of the car over time we can also determine the smoothness of the car’s journey. The smoothness of the car’s journey was calculated by the same method as in the simulation, by taking the difference between the current steering angle and the exponentially weighted moving average of previous steering angles.

For each mobile robot algorithm, three attempts at

driving around the track were made and the lap times

and angular velocities were recorded. The results can

be seen in Table 3. Table 3 shows that COIL offers the

fastest and smoothest journey of all four mobile robot

algorithms. This provides evidence that COIL is ca-

pable of effectively solving more complex and diffi-

cult challenges. Despite the change of environment,

agent, and observations, COIL still achieves strong

results as a mobile robot algorithm.

Table 3: Table of results for four mobile robot driving algorithms tested by a robot. The algorithms are judged on speed, smoothness, and safety.

| Method | Mean Smoothness | Mean Lap Time |
|---|---|---|
| Guide Policy | 0.265 | 43.67s |
| OIL | 0.104 | 43.32s |
| RL | 0.102 | 40.14s |
| COIL | 0.082 | 39.53s |

It must be noted that the success of the real-life

mobile-robot navigation algorithm is highly depen-

dent on how realistic the simulation is during train-

ing. Aligning the dynamics of a simulation to real-

ity is very important when testing algorithms. The

accuracy and complexity of the simulation was lim-

ited by the resources at hand and scope of the project,

and thus the results for the real-life robot have additional components to consider. By switching from simulation to reality, we are introducing a significant shift between the training data (from the simulation) and the test data (from the Lidar sensor); therefore these results allow us to examine the effect of transfer learning on our algorithms. Given this additional difficulty in the task, the results in this section are indicative that each algorithm exhibits robustness to transfer learning. Given more resources and time, the

alignment between reality and simulation can be fur-

ther improved, and the results will be more suitable

for direct comparison.

These results show that COIL offers a method of effectively utilising guide policies even when the guide policy is non-expert and when faced with transfer learning. In this case, not only is the task much more difficult because of the transfer learning, but COIL is faced with the additional difficulty that the actions from the guide policy are also affected by the transfer learning. Despite the degradation of the guide policy, COIL remains the strongest algorithm at the task, demonstrating that COIL is capable of utilising non-expert guide policies even when withstanding the effect of transfer learning. Consequently, there is evidence that COIL is a robust and flexible method of effectively incorporating guide policies into Reinforcement Learning problems.

5 DISCUSSION

The computational cost of running the COIL agent in

the environment is greater than Reinforcement Learn-

ing methods that do not utilise the guide policy be-

cause it is necessary to compute the action of the

guide policy at each time step (on top of the other

computations in the Reinforcement Learning algorithm). In practice, computing the guide policy’s action is typically lightweight and thus using COIL is only marginally more computationally expensive than Reinforcement Learning methods that do not use the guide policy. However, in rare cases where calculation of the guide policy’s action is computationally heavy, the available computing power should be taken into account when using COIL. In this

case, it might be more feasible for researchers to ap-

ply Imitation Learning techniques in order to achieve

a more lightweight approximation of the guide policy.

Additionally, a potential shortcoming of COIL is

the requirement of a present guide policy. If the guide

policy cannot be queried during the exploration of the

environment then it is not possible to use it in COIL.

However, there is a suitable alternative given a guide

policy that is not present. In this case researchers can

add a preliminary step of applying Imitation Learning

techniques to generate a corresponding present guide

policy as an approximation of the original non-present

guide policy. In theory, any Offline Imitation Learning algorithm could be used for this purpose. Therefore, although the requirement of a present guide policy is certainly a limitation of the proposed COIL method, COIL can still be used given a successful implementation of Offline Imitation Learning. Furthermore, as COIL does not require an expert guide policy, it is still applicable even if this Offline Imitation Learning approximation is limited in capacity. Thus theoretically, even a non-present and non-expert guide policy can still be utilised with COIL, which reinforces the notion that COIL is a highly flexible technique.

On the basis of the discussed findings, the first results in the research of COIL seem very promising. However, a limitation of our present analysis is that the results in this initial paper are focused solely on the task of mobile-robot navigation. In future research, COIL must also be tested on other Reinforcement Learning tasks in order to get a deeper understanding of its capabilities.

6 CONCLUSIONS

Contextual Online Imitation Learning (COIL) offers

researchers a promising new method of incorporating

guide policies into the learning process of Reinforcement Learning algorithms in a practical way. In this paper, COIL has been demonstrated to provide significant improvement over other methods in the context of mobile-robot navigation, in both the simulation and the real-life experiments. Additionally, the results from the lab experiment demonstrated

that COIL also has the ability to withstand the ef-

fect of transfer learning. Furthermore, COIL demon-

strated consistently faster policy training than the cor-

responding agent trained without COIL.

This paper has introduced the COIL methodology

and two applications in which it offers improved re-

sults over competing methods. However, future re-

search must still be done to more thoroughly inves-

tigate this novel method. Future research on COIL

could focus on four interesting topics. Firstly, re-

search could be conducted to analyse the effect of us-

ing multiple guide policies with COIL. In particular, it

would be interesting to analyse the deviation between

the agents policy and the guide policies during explo-

ration of the environment to see which of the guide

policies are being ‘listened to’ and in which states.

Secondly, additional research could focus on compar-

ing the COIL methodology for different Reinforce-

ment Learning algorithms such as Deep Q-Networks

ICAART 2024 - 16th International Conference on Agents and Artificial Intelligence

(Mnih et al., 2016b) or A2C (Mnih et al., 2016a), to

see if similar success is achieved. Thirdly, the effect

of treating the parameters of the guide policy as addi-

tional trainable parameters within the Reinforcement

Learning algorithm in order to fine-tune the actions

of the guide policy might also be an interesting av-

enue for future research. Lastly, COIL could be fur-

ther tested and compared to other Imitation Learning

algorithms in various tasks in order to get a broader

understanding of how it compares to other existing

methods.
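The first topic above, identifying which guide policy the agent is "listening to" in each state, could be explored with a simple per-state deviation measure. The following is only a minimal sketch under stated assumptions: actions are continuous vectors, deviation is measured by Euclidean distance, and all names (`action_distance`, `closest_guide_per_state`, the toy policies) are hypothetical illustrations rather than part of the COIL implementation used in this paper.

```python
import math
from typing import Callable, Dict, List, Sequence

State = Sequence[float]
Action = Sequence[float]
Policy = Callable[[State], Action]


def action_distance(a: Action, b: Action) -> float:
    """Euclidean distance between two continuous actions (an assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def closest_guide_per_state(
    agent: Policy, guides: Dict[str, Policy], states: List[State]
) -> List[str]:
    """For each visited state, name the guide whose action is nearest the agent's."""
    result = []
    for s in states:
        a = agent(s)
        deviations = {name: action_distance(a, g(s)) for name, g in guides.items()}
        result.append(min(deviations, key=deviations.get))
    return result


# Toy, hypothetical policies on a 1-D state; not the policies from the paper.
guides = {
    "go_left": lambda s: [-1.0],
    "go_right": lambda s: [1.0],
}
agent = lambda s: [0.8] if s[0] < 0 else [-0.9]

states = [[-2.0], [-0.5], [1.5]]
labels = closest_guide_per_state(agent, guides, states)
print(labels)
```

Aggregating such labels over the states visited during exploration would give a rough picture of where in the state space each guide policy influences the agent; other deviation measures, such as a divergence between action distributions, may be more appropriate for stochastic policies.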

REFERENCES

Aslan, M. F., Unlersen, M. F., Sabanci, K., and Durdu,

A. (2021). CNN-based transfer learning–BiLSTM network:

A novel approach for COVID-19 infection detection.

Applied Soft Computing, 98:106912.

Breiman, L. (2001). Random forests. Machine Learning,

45(1):5–32.

Chen, J., Yuan, B., and Tomizuka, M. (2019). Deep imita-

tion learning for autonomous driving in generic urban

scenarios with enhanced safety. In 2019 IEEE/RSJ In-

ternational Conference on Intelligent Robots and Sys-

tems (IROS), pages 2884–2890.

Durrleman, S. and Simon, R. (1989). Flexible regression

models with cubic splines. Statistics in Medicine,

8(5):551–561.

Edward, J. (2021). Coarse-to-fine imitation learning: Robot

manipulation from a single demonstration. In 2021

IEEE International Conference on Robotics and Au-

tomation (ICRA), pages 4613–4619.

Fang, B., Jia, S., Guo, D., Xu, M., Wen, S., and Sun,

F. (2019). Survey of imitation learning for robotic

manipulation. International Journal of Intelligent

Robotics and Applications, 3(4):362–369.

Halbert, D. (1984). Programming by example. PhD thesis,

University of California, Berkeley.

Ho, J. and Ermon, S. (2016). Generative adversarial im-

itation learning. In Advances in neural information

processing systems 29.

Hornik, K., Stinchcombe, M., and White, H. (1989). Multi-

layer feedforward networks are universal approxima-

tors. Elsevier.

Liu, Y., Liu, Q., Zhao, H., Pan, Z., and Liu, C. (2020).

Adaptive quantitative trading: An imitative deep re-

inforcement learning approach. In Proceedings of the

AAAI conference on artificial intelligence, volume 34,

pages 2128–2135.

Mnih, V., Badia, A. P., Mirza, M., Graves, A., Lillicrap, T.,

Harley, T., Silver, D., and Kavukcuoglu, K. (2016a).

Asynchronous methods for deep reinforcement learn-

ing. In International conference on machine learning,

pages 1928–1937.

Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A.,

Antonoglou, I., Wierstra, D., and Riedmiller, M.

(2016b). Playing Atari with deep reinforcement learning.

In International conference on machine learning,

pages 1928–1937.

Osa, T., Pajarinen, J., Neumann, G., Bagnell, J. A., Abbeel,

P., and Peters, J. (2018). An algorithmic perspec-

tive on imitation learning. Foundations and Trends

in Robotics.

Pan, S. J. and Yang, Q. (2009). A survey on transfer learn-

ing. IEEE Transactions on Knowledge and Data En-

gineering, 22(10):1345–1359.

Pomerleau, D. (1998). An autonomous land vehicle in a

neural network. In Advances in Neural Information

Processing Systems.

Ross, S., Gordon, G., and Bagnell, D. (2011). A reduc-

tion of imitation learning and structured prediction

to no-regret online learning. In Proceedings of the

fourteenth international conference on artificial intel-

ligence and statistics, pages 627–635. JMLR Work-

shop and Conference Proceedings.

Ruder, S., Peters, M. E., Swayamdipta, S., and Wolf, T.

(2019). Transfer learning in natural language process-

ing. In Proceedings of the 2019 Conference of the

North American Chapter of the Association for Com-

putational Linguistics: Tutorials, pages 15–18.

Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and

Klimov, O. (2020). Proximal policy optimization al-

gorithms. In Uncertainty in Artificial Intelligence,

pages 113–122.

Uchendu, I., Xiao, T., Lu, Y., Zhu, B., Yan, M., Simon,

J., and Bennice, M. (2022). Jump-start reinforcement

learning. arXiv preprint arXiv:2204.02372.

Wan, Z., Yang, R., Huang, M., Zeng, N., and Liu, X. (2021).

A review on transfer learning in EEG signal analysis.

Neurocomputing, 421:1–14.

Wang, J., Zhuang, Z., Wang, Y., and Zhao, H. (2022). Ad-

versarially robust imitation learning. In Conference on

Robot Learning, pages 320–331.

Wang, Y., He, H., and Tan, X. (2020). Truly proximal policy

optimization. In Uncertainty in Artificial Intelligence,

pages 113–122.

Watkins, C. J. and Dayan, P. (1992). Q-learning. Machine

Learning, 8(3):279–292.

Yu, C., Velu, A., Vinitsky, E., Wang, Y., Bayen, A., and

Wu, Y. (2021). The surprising effectiveness of PPO

in cooperative, multi-agent games. arXiv preprint

arXiv:2103.01955.

Zhang, S., Cao, Z., Sadigh, D., and Sui, Y. (2021).

Confidence-aware imitation learning from demonstra-

tions with varying optimality. In Advances in Neu-

ral Information Processing Systems 34, pages 12340–

12350.
