Investigating Color Illusions from the Perspective of Computational Color Constancy

Oguzhan Ulucan, Diclehan Ulucan and Marc Ebner

Institut für Mathematik und Informatik, Universität Greifswald,

Walther-Rathenau-Straße 47, 17489 Greifswald, Germany

Keywords: Computational Color Constancy, Color Assimilation Illusions, Color Illusion Perception.

Abstract:

Color constancy and color illusion perception are two phenomena occurring in the human visual system that can help us reveal unknown mechanisms of human perception. For decades, computer vision scientists have developed numerous color constancy methods, which estimate the reflectance of a surface by discounting the illuminant. However, color illusions have not been analyzed in detail in the field of computational color constancy, which we find surprising, since the relationship between the two phenomena is significant and may let us design more robust systems. We argue that any model that can reproduce our sensation of color illusions should also be able to provide pixel-wise estimates of the light source. In other words, we suggest that the analysis of color illusions helps us improve the performance of existing global color constancy methods and enables them to provide pixel-wise estimates for scenes illuminated by multiple light sources. In this study, we share the outcomes of an investigation in which we take several color constancy methods and modify them to reproduce the behavior of the human visual system on color illusions. We also show that parameters extracted purely from illusions are able to improve the performance of color constancy methods. A noteworthy outcome is that our strategy, based on the investigation of color illusions, outperforms state-of-the-art methods that are specifically designed to transform global color constancy algorithms into multi-illuminant algorithms.

1 INTRODUCTION

More than 20% of the human brain is devoted to visual processing (Sheth and Young, 2016). As stated by Semir Zeki (Zeki, 1993), color vision might be the simplest visual attribute to understand; hence, understanding color processing might be the key to unraveling how the brain works as a whole. Color illusion perception and color constancy are two phenomena related to color processing whose mechanisms are still not fully understood. These phenomena can help us reveal unknown mechanisms of the brain and let us design artificial systems that are one step closer to mimicking the human visual system.

An interesting fact about color processing is that, under some circumstances, the perceived color can be quite different from the actual physical reflectance of an object. The way we are fooled by color illusions is an example of this difference between the perceived color and the actual reflectance of an object. An example of a color illusion is shown in Fig. 1. We perceive the colors of the disks as bluish, pinkish, and yellowish, while all the disks have the same reflectance. The reason behind this illusion, called the color assimilation illusion, is that the perceived color of the target shifts towards that of its local neighbors.

Figure 1 (panels: Assimilation Illusion; Target Region): Color assimilation illusion (Bach, 2023). Although we perceive the disks as having different colors, they are in fact the same, as shown in the target image, where the inducers, i.e., the context, have been removed.

Another fascinating aspect of color processing is that the human visual system estimates the reflectance of a scene by discounting the illuminant. Hence, the perceived color of an object remains approximately constant regardless of the illumination conditions (Gegenfurtner, 1999; Brainard and Radonjic, 2004; Ebner, 2007; Hurlbert, 2007). This phenomenon is called color constancy. For years, researchers in the fields of neuroscience and computer vision have carried out numerous studies related to color constancy (Land, 1977; Buchsbaum, 1980; Brainard et al., 2006; Corney and Lotto, 2007; Van De Weijer et al., 2007; Ebner, 2011; Dixon and Shapiro, 2017; Qian et al., 2019; Afifi et al., 2021). While the former aim to understand human color constancy, the latter aim to estimate the illuminant of the scene.

Ulucan, O., Ulucan, D. and Ebner, M.

Investigating Color Illusions from the Perspective of Computational Color Constancy.

DOI: 10.5220/0012311600003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 3: VISAPP, pages 25-36

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The connection between color illusions and color constancy has been pointed out in both computational biology and computer vision (Marini and Rizzi, 2000; Corney and Lotto, 2007; Gomez-Villa et al., 2019; Ulucan et al., 2022a), but the two have never been investigated together in depth in the field of computational color constancy. As presented in computational biology studies, it is important both to reproduce the behavior of the human visual system on color illusions and to perform color constancy (Corney and Lotto, 2007). Yet, computational biology studies that aim to explain color illusion perception only manage to mimic the human visual system's response to color illusions and do not provide any detailed analysis of illuminant estimation (Marini and Rizzi, 1997; Marini and Rizzi, 2000), whereas computational color constancy studies only aim at estimating the illuminant. We find it rather surprising that, even though color constancy methods can estimate the illuminant, the analysis of color illusions, which could provide significant benefits, has been neglected.

In this study, using a learning-free approach, we attempt to show that there is indeed a relationship between color illusions and color constancy, in particular between color assimilation illusions and multi-illuminant color constancy, since the relationship between spatially close regions is important for both phenomena. We argue that, by analyzing color illusions from the perspective of computational color constancy, we can highlight the natural link between these two phenomena and use the outcomes of this investigation to modify global color constancy algorithms so that they provide pixel-wise estimates, and hence perform multi-illuminant color constancy. We further argue that the results of this investigation can be used to improve the performance of global color constancy algorithms. Furthermore, since color illusions and color constancy are two important phenomena that arise from processing in the human brain, investigating them together may help us design artificial systems that are one step closer to mimicking human visual perception (Bach and Poloschek, 2006; Gomez-Villa et al., 2019). It is important to note that, despite the numerous studies conducted in this field, the biological explanation for the link between color illusions and color constancy is still unknown. If we could explain this link biologically, as well as how the human visual system discounts the illuminant and is fooled by illusions, we would essentially have a correct model of human color processing; hence, we would be able to develop algorithms for both digital photography and computer vision applications that would satisfy consumers around the world (Ebner, 2009).

To the best of our knowledge, this is the first study in the field of computational color constancy that investigates the relationship between color assimilation illusions and color constancy in detail and stresses its importance.

Our contributions can be summarized as follows:

• We investigate color illusions from the perspective of computational color constancy algorithms.

• We introduce a simple yet effective approach, based on our findings from the investigation of color illusions, that transforms global color constancy algorithms into multi-illuminant color constancy methods, which do not require any information about the scene.

• We show that our approach improves the performance of existing color constancy methods.

This paper is organized as follows. In Sec. 2, we briefly explain the field of computational color constancy. In Sec. 3, we introduce our approach. In Sec. 4, we discuss the results for color assimilation illusions, and in Sec. 5, we provide the outcomes for both multi- and single-illuminant color constancy. In Sec. 6, we summarize our work.

2 BACKGROUND

Before introducing our proposed approach, we give a brief summary of the image formation model widely used in the field of color constancy and of several color constancy algorithms, since throughout the paper we investigate color illusions from the perspective of color constancy algorithms.

2.1 Theory of Color Image Formation

We begin to process visual information when the light entering the eye is measured by the photoreceptors in the retina, e.g., the cones, whereas artificial systems start to process visual information when an array of sensors measures the incident light. If the camera contains three different types of sensors, each type responds to light from a distinct part of the visible spectrum, i.e., short, middle, or long wavelengths. Let us suppose that the camera sensors capture every spatial location of the scene.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

Then, the measured energy of the signal at spatial location (x, y) can be formulated as follows:

$$ I(x, y) = \int_{\omega} E(x, y; \lambda)\, S_i(\lambda)\, d\lambda \tag{1} $$

where E(x, y; λ) is the irradiance reaching the sensors of the capturing device, S_i(λ) is the spectral sensitivity of the camera, i.e., the response of sensor i at wavelength λ, with i ∈ {long, middle, short}, λ is the wavelength, and the integral is taken over the visible spectrum.
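To make Eqn. 1 concrete, the integral can be approximated by a Riemann sum over sampled wavelengths. The sketch below is illustrative only: the 10 nm sampling, the 400-700 nm range, and the bell-shaped sensitivities produced by the hypothetical helper `gaussian_sensitivity` are assumptions, not measured camera data.

```python
import math

# Hypothetical sampling of the visible spectrum: 400-700 nm in 10 nm steps.
WAVELENGTHS = list(range(400, 701, 10))
D_LAMBDA = 10.0

def gaussian_sensitivity(peak_nm, width_nm):
    """Hypothetical bell-shaped sensor curve S_i(lambda); not real camera data."""
    return [math.exp(-((lam - peak_nm) ** 2) / (2 * width_nm ** 2)) for lam in WAVELENGTHS]

SENSORS = {
    "long": gaussian_sensitivity(600, 30),
    "middle": gaussian_sensitivity(540, 30),
    "short": gaussian_sensitivity(450, 30),
}

def sensor_response(irradiance, sensitivity):
    """Riemann-sum approximation of Eq. (1) at one pixel: sum of E * S_i * d(lambda)."""
    return sum(e * s for e, s in zip(irradiance, sensitivity)) * D_LAMBDA

# Equal-energy irradiance E(lambda) = 1 at a single pixel.
flat = [1.0] * len(WAVELENGTHS)
responses = {name: sensor_response(flat, s) for name, s in SENSORS.items()}
print({name: round(v, 1) for name, v in responses.items()})
```

The response is linear in E, so doubling the irradiance doubles every channel. In the continuous integral, a Dirac-delta sensitivity at λ_i would collapse the response to the single value E(x, y; λ_i), which is the narrow-band simplification discussed next.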

In color constancy studies, we mostly assume that the surface reflects light equally in all directions, i.e., it is a Lambertian surface, and that the scene is illuminated by a point light source L(x, y; λ). Thus, the irradiance reaching the sensors of the camera can be expressed as follows:

$$ E(x, y; \lambda) = G(x, y)\, R(x, y; \lambda)\, L(x, y; \lambda) \tag{2} $$

where R(x, y; λ) is the (shaded) reflectance, and G(x, y) is a scaling factor that can be expressed as cos α, where α is the angle between the surface normal vector and a vector pointing in the direction of the light source.

Consequently, an image captured by the camera is generally described by the Lambertian image formation model, which can be formulated as follows:

$$ I(x, y) = G(x, y) \int_{\omega} R(x, y; \lambda)\, L(x, y; \lambda)\, S_i(\lambda)\, d\lambda \tag{3} $$

The aim of color constancy is to estimate the illuminant L from an input image I that has a color cast. However, as Eqn. 3 shows, color constancy is an ill-posed problem: the measured values depend jointly on the unknown reflectance, the type of the sensors, and the illumination, none of which we can be sure of. Therefore, most algorithms relax the problem by assuming that the camera sensor responses are narrow-band, i.e., they can be approximated by Dirac delta functions, and that the scene is uniformly illuminated. Moreover, most algorithms do not consider the scene geometry G(x, y). Thus, the image can be modeled as the element-wise product of the reflectance R and the global illuminant L as follows:

$$ I(x, y) = R(x, y) \cdot L \tag{4} $$

It is worth pointing out that, even though these assumptions help tackle the ill-posed nature of color constancy when estimating the color vector of the light source, assuming a uniform geometry factor throughout the scene and a single light source illuminating the scene are strong assumptions, which are usually violated in the real world due to interreflections, shadows, and multiple light sources in the scene (Ershov et al., 2023).
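Under the simplifications leading to Eqn. 4, image formation reduces to a channel-wise product, and a known (or estimated) illuminant can be discounted by dividing each channel by it, i.e., a diagonal, von Kries-style correction. The following is a minimal sketch with a hypothetical 2 × 2 scene and an illustrative light source; images are plain lists of rows of (R, G, B) tuples, and the function names are our own.

```python
# Minimal sketch of Eq. (4): I(x, y) = R(x, y) * L with one global illuminant L,
# evaluated channel-wise (narrow-band sensors, uniform light, geometry ignored).

def render(reflectance, illuminant):
    """Form an image as the element-wise product of reflectance and a global illuminant."""
    return [[tuple(r * l for r, l in zip(px, illuminant)) for px in row]
            for row in reflectance]

def discount(image, estimate):
    """Divide each channel by the illuminant estimate (diagonal correction)."""
    return [[tuple(v / e for v, e in zip(px, estimate)) for px in row]
            for row in image]

# Hypothetical 2 x 2 scene (reflectances) and a warm light source (R, G, B).
R = [[(0.5, 0.5, 0.5), (0.8, 0.2, 0.1)],
     [(0.9, 0.9, 0.9), (0.1, 0.4, 0.7)]]
L = (1.0, 0.8, 0.5)

I = render(R, L)
recovered = discount(I, L)  # with a perfect estimate, R is recovered
print(I[0][0], recovered[0][0])
```

With an imperfect estimate, each recovered channel is off by exactly the ratio between the true and the estimated illuminant in that channel, which is why the quality of the illuminant estimate matters.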

2.2 Related Work

Over the decades, numerous global color constancy algorithms have been proposed. The white-patch Retinex and gray world algorithms are well-known methods inspired by the mechanisms of the human visual system, which might discount the illuminant of the scene based on the patch with the highest luminance or on the space-average color, respectively (Land, 1977; Buchsbaum, 1980). The white-patch Retinex method computes the maximum response of each image channel separately to estimate the illuminant of the scene, while the gray world algorithm averages the pixels of each channel independently to output an illuminant estimate. Owing to their simplicity and efficacy, these two methods have been modified and used in several other studies. For example, the shades of gray algorithm assumes that the mean of the pixels raised to a certain power is gray (Finlayson and Trezzi, 2004). The gray edge and weighted gray edge algorithms stress that the gradient features of the image are beneficial cues for estimating the illuminant of the scene (Van De Weijer et al., 2007; Gijsenij et al., 2009). The mean-shifted gray pixel method detects gray pixels and uses them for illumination estimation (Qian et al., 2018). The block-based color constancy method divides the image into non-overlapping blocks and transforms the task of illumination estimation into an optimization problem by making use of both the gray world and the white-patch Retinex assumptions (Ulucan et al., 2022b; Ulucan et al., 2023a; Ulucan et al., 2023b). The double-opponency-based color constancy algorithm is inspired by the mechanisms of the human visual system, i.e., physiological findings on color information processing (Gao et al., 2015). The principal component analysis-based color constancy method considers only the informative pixels, which are assumed to be the ones having the largest gradient in the data matrix, to estimate the color vector of the illuminant (Cheng et al., 2014). The local surface reflectance statistics algorithm is based on biological findings about the feedback modulation mechanism in the eye and on the linear image formation model (Gao et al., 2014). The biologically inspired color constancy method is based on the hierarchical structure of the perception mechanism of the human visual system (Ulucan et al., 2022a).

While most of the aforementioned global color constancy algorithms are traditional methods, there are also learning-based algorithms (Afifi and Brown, 2019; Laakom et al., 2019; Afifi and Brown, 2020; Afifi et al., 2021; Afifi et al., 2022; Domislović et al., 2022). As discussed in several studies, these algorithms outperform classical methods on well-known benchmarks. However, their effectiveness tends to decrease when they face images with different statistical distributions and/or images captured by cameras with unknown specifications (Gao et al., 2017; Qian et al., 2019; Ulucan et al., 2022b). This performance decline can be explained by the facts that (i) well-known benchmarks are mostly formed with cameras whose sensor response characteristics are similar, (ii) the illumination conditions in most datasets do not differ significantly, e.g., lights lying off or at the edges of the color temperature curve are seldom considered, and (iii) learning-based methods expect their training and test sets to be similar (Ulucan et al., 2022b; Buzzelli et al., 2023).

The single-light-source assumption enables us to estimate the illuminant of the scene; however, it is usually violated in the real world, since most scenes are not uniformly illuminated due to interreflections, multiple light sources, and shadows (Ebner, 2009; Gijsenij et al., 2011; Bleier et al., 2011). To address this, both traditional and learning-based multi-illuminant color constancy algorithms have been proposed. These methods output local estimates for each spatial location in the image. For instance, local space average color is one of the first such methods; it computes pixel-wise estimates to arrive at a color-constant descriptor of the scene (Ebner, 2003; Ebner, 2004). The patch-based methodology and the conditional random field-based algorithm propose strategies that can be applied to existing color constancy algorithms (Gijsenij et al., 2011; Beigpour et al., 2013). The retinal mechanism-inspired model mimics the color processing mechanisms at a particular level of the retina and outputs a white-balanced image without explicitly providing an illumination estimate (Zhang et al., 2016). The image regional color constancy weighing factors-based algorithm separates the image into multiple parts and uses the normalized average absolute difference of each part as a measure of confidence (Hussain and Akbari, 2018). The image texture-based algorithm utilizes texture to find pixels with adequate color variation and uses them to discount the illuminant of the scene (Hussain et al., 2019). The algorithm inspired by the human visual system makes use of bottom-up and top-down mechanisms to find the color of the light source (Gao et al., 2019). The grayness index-based color constancy algorithm finds gray pixels based on the dichromatic reflection model and uses them to obtain both global and local illumination estimates (Qian et al., 2019). The N-white balancing method determines the number of white points in the image to estimate the illuminant of the scene (Akazawa et al., 2022). The convolutional neural network (CNN)-based approach contains a detector that can determine the number of illuminants present in the scene (Bianco et al., 2017). The physics-driven, generative adversarial network (GAN)-based method models light source estimation as an image-to-image domain translation problem (Das et al., 2021).

The main drawback of many multi-illuminant color constancy algorithms is that the number of clusters/segments must be assigned (Wang et al., 2022); in other words, the number of illuminants must be provided to the algorithm before processing starts. We argue that in real-world scenarios, where the number of lights might be unknown, or for arbitrary images for which the number of illuminants is not provided, algorithms that depend on the number of light sources may not work effectively. Therefore, a multi-illuminant color constancy approach that can estimate the illuminants without knowing the number of lights is necessary.

3 PROPOSED APPROACH

Global color constancy algorithms estimate the color vector of a single light source that uniformly illuminates the scene. Therefore, they cannot be expected to reproduce our perception of color assimilation illusions without modification. Our method modifies global color constancy algorithms with a simple yet effective approach so that they provide pixel-wise estimates for the input image and thus reproduce our sensation of color assimilation illusions. Since locality is an important feature of both assimilation illusions and multi-illuminant color constancy, our motivation is that parameters extracted purely from the analysis of color illusions should also be beneficial for color constancy. Our strategy transforms global color constancy methods into algorithms that provide local estimates of the light sources illuminating the scene.

Figure 2 (panels: Input Image; Input Target; Sparse Image I_s; Estimates; Output Target): Reproduction of color assimilation illusions. As shown in the input target, the RGB values of the disks are the same, yet in the input image we perceive them as different. After we apply our method, the disks in the output target have colors closer to our perception.

In our method, we take the linearized input image I and divide it into non-overlapping blocks of β × β pixels, with β = 8 (the parameter selection is detailed in Sec. 4). We then apply a color constancy algorithm to each block to obtain the estimate for that block. Subsequently, rather than assigning the same estimate to all spatial locations in the block, we place the computed local estimate at the center pixel of the corresponding block, which yields a sparsely populated image I_s (Fig. 2). The reason behind this operation is to avoid sharp changes between adjacent blocks, i.e., to obtain smooth transitions between blocks. Afterward, to fill in the missing information in I_s, i.e., to obtain a dense image, we interpolate between adjacent center pixels. The interpolation is carried out by convolving I_s with a Gaussian kernel G (Eqn. 5); hence, a pixel-wise estimate is obtained for each spatial location.

$$ I_p(x, y) = I_s(x, y) * G(x, y; \sigma) \tag{5} $$

where I_p contains the pixel-wise estimates normalized to unit norm, ∗ denotes the convolution operation, and $G(x, y; \sigma) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right)$. The controlling parameter σ of G needs to be sufficiently large so that at least three estimates lie inside the area of support; hence, it is set to 24 (the selection of σ is discussed in Sec. 4).
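A minimal sketch of the block-wise procedure and Eqn. 5 follows, with gray world standing in for an arbitrary global estimator. Assumptions worth flagging: images are lists of rows of (R, G, B) tuples; for an even block size, the "center" is taken as the pixel at offset β // 2; because I_s is zero everywhere except at block centers, the convolution is evaluated directly as a Gaussian-weighted sum over those centers, which equals a zero-padded convolution with an untruncated kernel; and the constant 1/(2πσ²) is dropped because every pixel is normalized to unit norm afterwards. The helper names are hypothetical.

```python
import math

def gray_world_estimate(pixels):
    """Per-channel mean of a list of (R, G, B) tuples; any global estimator could be used."""
    return tuple(sum(p[c] for p in pixels) / len(pixels) for c in range(3))

def sparse_block_estimates(image, beta=8, estimator=gray_world_estimate):
    """Estimate each non-overlapping beta x beta block and store the result at the
    block centre, returning the sparse image I_s as {(cy, cx): (R, G, B)}."""
    h, w = len(image), len(image[0])
    sparse = {}
    for y0 in range(0, h, beta):
        for x0 in range(0, w, beta):
            bh, bw = min(beta, h - y0), min(beta, w - x0)  # border blocks may be smaller
            block = [image[y][x] for y in range(y0, y0 + bh) for x in range(x0, x0 + bw)]
            sparse[(y0 + bh // 2, x0 + bw // 2)] = estimator(block)
    return sparse

def gaussian_interpolate(sparse, h, w, sigma=24.0):
    """Dense pixel-wise estimates I_p: Gaussian-weighted sum over block centres
    (equivalent to convolving the zero-padded sparse image), unit-normalized."""
    dense = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = [0.0, 0.0, 0.0]
            for (cy, cx), est in sparse.items():
                g = math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
                for c in range(3):
                    acc[c] += g * est[c]
            n = math.sqrt(sum(a * a for a in acc)) or 1.0
            row.append(tuple(a / n for a in acc))
        dense.append(row)
    return dense

# Hypothetical two-illuminant scene: gray surfaces (0.5), reddish light on the
# left half and bluish light on the right half of a 16 x 32 image.
h, w = 16, 32
left, right = (1.0, 0.6, 0.3), (0.3, 0.6, 1.0)
img = [[tuple(0.5 * l for l in (left if x < w // 2 else right)) for x in range(w)]
       for _ in range(h)]
sparse = sparse_block_estimates(img, beta=8)
estimates = gaussian_interpolate(sparse, h, w, sigma=24.0)
print(estimates[0][0], estimates[0][w - 1])
```

In this toy scene the left edge keeps a reddish estimate and the right edge a bluish one, and the estimates change gradually across the boundary between the two lights instead of jumping at block edges.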

Consequently, our simple yet effective approach enables us to simulate the behavior of the human visual system on color assimilation illusions using computational color constancy algorithms, as shown in Fig. 2.

It is worth mentioning that we only modify traditional color constancy techniques because of the input size requirement of learning-based methods: neural network-based methods need a fixed input image size, whereas our approach operates on 8 × 8 blocks. During our experiments, we observed that upsampling the blocks does not yield satisfactory outcomes; thus, we do not apply our approach to neural network-based algorithms.

In the remainder of this section, we slightly adjust our approach for color constancy purposes. In doing so, we use the parameters extracted from our analysis of color assimilation illusions, i.e., the block size and the controlling parameter of the Gaussian kernel.

3.1 Application to Color Constancy

After simulating the behavior of the human visual system on color assimilation illusions, we analyze whether the proposed approach, relying on parameters extracted purely from color illusions, can transform global color constancy methods into multi-illuminant color constancy algorithms. We also investigate whether our method can improve the performance of existing color constancy methods under single-illuminant conditions.

It is known that not all pixels are beneficial for color constancy; e.g., the performance of algorithms tends to decrease when images contain dominant sky regions. Hence, instead of using all pixels in an image, choosing the most informative ones improves the performance of color constancy methods, as proposed in several studies (Finlayson and Hordley, 2001; Yang et al., 2015; Qian et al., 2018; Ulucan et al., 2023a). Therefore, to adapt our approach from color assimilation illusions to color constancy, we form a confidence map using the whitest pixels, e.g., the pixels with the highest luminance in the image. Using the whitest pixels in the scene is motivated both by biological findings, namely that our visual system might discount the illuminant by making use of the areas with the highest luminance rather than the darkest regions (Land and McCann, 1971; Linnell and Foster, 1997; Uchikawa et al., 2012; Morimoto et al., 2021), and by digital photography, where the illuminant can be determined more easily from achromatic areas, such as white regions in the scene, than from colored areas (Drew et al., 2012; Joze et al., 2012; Qian et al., 2019; Ono et al., 2022; Ulucan et al., 2023a).

To detect the whitest pixels, we compute the mean of each color channel separately and scale the input image according to this color vector. Then, we form a pixel-wise whiteness map W by computing the angular error between the scaled image I_temp and the white vector w = [1 1 1] at each pixel as follows:

$$ W(x, y) = \cos^{-1}\left( \frac{w \cdot I_{temp}(x, y)}{\lVert w \rVert \, \lVert I_{temp}(x, y) \rVert} \right) \tag{6} $$
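The whiteness map of Eqn. 6 can be sketched as below. The text says the image is scaled "according to" the channel means; dividing each channel by its mean is our assumption, as is returning π/2 for an all-zero pixel, for which the angle is undefined. Channel means are assumed to be non-zero. W is in radians, and smaller values indicate pixels that are closer to achromatic after scaling.

```python
import math

def whiteness_map(image):
    """Pixel-wise angular error (radians) between the mean-scaled image and w = [1, 1, 1]."""
    px = [p for row in image for p in row]
    means = [sum(p[c] for p in px) / len(px) for c in range(3)]  # assumed non-zero
    w_norm = math.sqrt(3.0)  # ||w|| for w = [1, 1, 1]
    W = []
    for row in image:
        out = []
        for p in row:
            t = [p[c] / means[c] for c in range(3)]  # I_temp(x, y): division is an assumption
            n = math.sqrt(sum(v * v for v in t))
            if n == 0.0:
                out.append(math.pi / 2)  # hypothetical convention for black pixels
                continue
            cos_a = sum(t) / (w_norm * n)  # w . I_temp is the sum of the channels
            out.append(math.acos(max(-1.0, min(1.0, cos_a))))  # clamp rounding errors
        W.append(out)
    return W

# Hypothetical 1 x 3 image whose channel means are (1, 1, 1); the middle pixel is
# achromatic after scaling, so its angular error is zero.
W = whiteness_map([[(2.0, 1.0, 1.0), (1.0, 1.0, 1.0), (0.0, 1.0, 1.0)]])
print([round(v, 4) for v in W[0]])
```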

Subsequently, we form the confidence map C by adaptively weighting W at each spatial location with a Gaussian curve (Eqn. 7), since the contribution of the brightest pixels to color constancy may not be equal throughout the scene. This approach is consistent with the biological finding that brighter regions have a greater effect on human color constancy than darker regions (Uchikawa et al., 2012).

$$ C(x, y) = \frac{1}{2\pi\sigma_W^2} \exp\left( -\frac{(W(x, y) - \mu_W)^2}{2\sigma_W^2} \right) \tag{7} $$


where µ_W and σ_W are the mean and the standard deviation of W, respectively.
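Eqn. 7 can be sketched as follows. We keep the normalization constant 1/(2πσ_W²) exactly as printed; using the population standard deviation for σ_W and returning uniform confidence for a constant map (where σ_W = 0) are our assumptions. Note that, as written, the weight peaks where W equals its mean µ_W.

```python
import math

def confidence_map(W):
    """Eq. (7): Gaussian weighting of the whiteness map W around its mean.
    The constant 1 / (2 * pi * sigma_W**2) follows the equation as printed."""
    vals = [v for row in W for v in row]
    mu = sum(vals) / len(vals)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))  # population std (assumption)
    if sigma == 0.0:
        return [[1.0 for _ in row] for row in W]  # hypothetical fallback for a constant map
    k = 1.0 / (2 * math.pi * sigma ** 2)
    return [[k * math.exp(-((v - mu) ** 2) / (2 * sigma ** 2)) for v in row] for row in W]

# Hypothetical 1 x 3 whiteness map (radians).
C = confidence_map([[0.0, 0.5, 1.0]])
print([round(c, 4) for c in C[0]])
```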

The pixel-wise estimates of the light source, L̂_est, are obtained by applying an interpolation similar to Eqn. 5 as follows:

$$ \hat{L}_{est}(x, y) = \left( I_s(x, y) \times C(x, y) \right) * G(x, y) \tag{8} $$

In global color constancy benchmarks, the ground-truth light source colors are provided as RGB triplets. Although our approach provides pixel-wise estimates, a global estimate of the light source can be obtained by averaging the pixels in I_s for each color channel individually.
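Eqn. 8 and the global estimate can be sketched together. Assumptions: I_s is represented as a dictionary from block-center coordinates to RGB estimates (as a block-wise pass would produce), C is a dense h × w confidence map, the pixel-wise output is normalized to unit length (the text states this only for Eqn. 5), and the global estimate averages the stored block estimates, which gives the same direction as averaging every pixel of I_s because the zeros only rescale the mean. The function names are hypothetical.

```python
import math

def _gauss(dx, dy, sigma):
    return math.exp(-(dx * dx + dy * dy) / (2 * sigma ** 2))

def confident_estimates(sparse, C, h, w, sigma=24.0):
    """Sketch of Eq. (8): weight each block estimate in I_s by the confidence at its
    centre, then Gaussian-interpolate (sum over centres = zero-padded convolution)."""
    weighted = {(cy, cx): tuple(C[cy][cx] * v for v in est) for (cy, cx), est in sparse.items()}
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = [0.0, 0.0, 0.0]
            for (cy, cx), est in weighted.items():
                g = _gauss(x - cx, y - cy, sigma)
                for c in range(3):
                    acc[c] += g * est[c]
            n = math.sqrt(sum(a * a for a in acc)) or 1.0  # unit norm (assumption)
            row.append(tuple(a / n for a in acc))
        out.append(row)
    return out

def global_estimate(sparse):
    """Channel-wise mean of the block estimates in I_s, normalized to unit length."""
    mean = [sum(est[c] for est in sparse.values()) / len(sparse) for c in range(3)]
    n = math.sqrt(sum(m * m for m in mean))
    return tuple(m / n for m in mean)

# Hypothetical 8 x 16 example with two block estimates; the second one is given
# zero confidence, so it does not contribute to the pixel-wise estimates.
sparse = {(4, 4): (1.0, 0.6, 0.3), (4, 12): (0.3, 0.6, 1.0)}
C = [[1.0] * 16 for _ in range(8)]
C[4][12] = 0.0
pixelwise = confident_estimates(sparse, C, 8, 16)
print(pixelwise[0][15], global_estimate(sparse))
```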

4 EXPERIMENTS ON COLOR ILLUSIONS

In this section, we explain the formation of the color illusion set and the extraction of the parameters. Subsequently, we discuss our outcomes on the reproduction of color illusions using various global color constancy methods.

4.1 Formation of the Color Illusion Set

The two most important features of color assimilation illusions that affect our perception are the inducer's frequency of occurrence and its thickness. As seen in Fig. 3, there is no illusion effect in the image in the first column, whereas an illusion sensation is observable in the image in the second column. When viewing the illusions, we recommend zooming in on the figure and fixating on each image individually. Zooming in is recommended because, when an image is viewed at a small size or from a distance, its inducer may appear to have a frequency sufficient to evoke an illusion sensation even though the image itself evokes no (or only a weak) illusion. Fixating is advised because our peripheral vision is locally disordered (Koenderink and van Doorn, 2000), i.e., blurred. This characteristic of peripheral vision can facilitate the perception of color assimilation illusions, i.e., even a very weak illusion effect can be perceived as a strong illusion sensation, since in color assimilation illusions the color of the target region shifts towards that of its local neighbors.

During the formation of our illusion set, we se-

lected color assimilation illusions with various shapes

and colors by considering the inducer’s frequency of

occurrence and its thickness. We do not include im-

ages that have no illusion effect, since it is not mean-

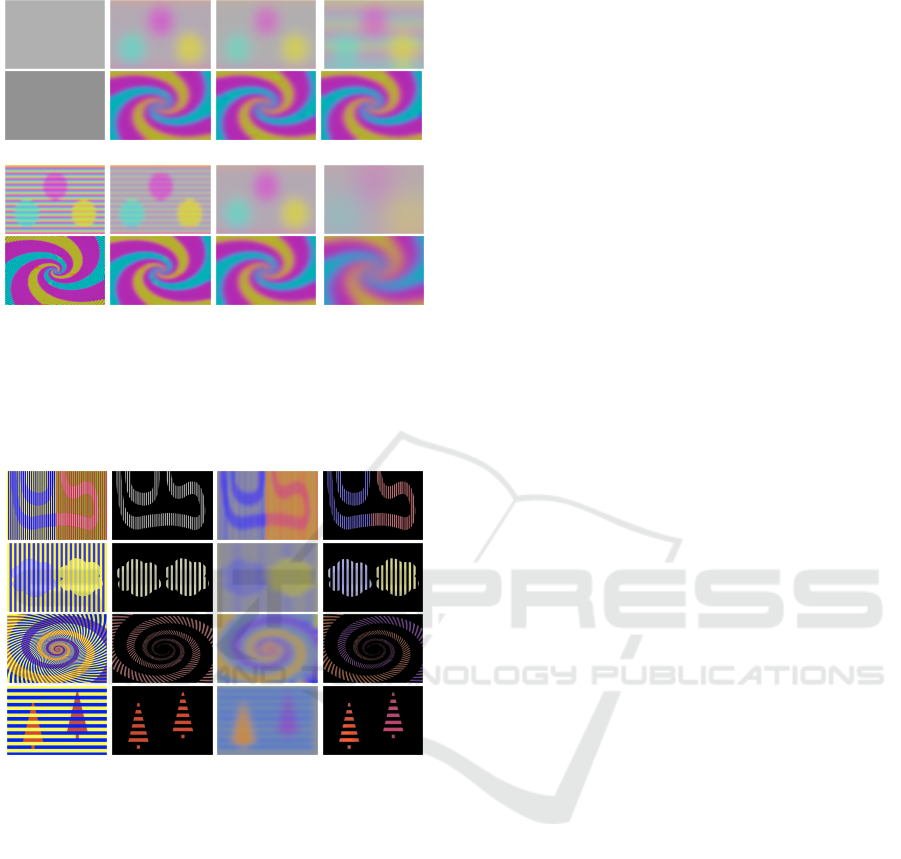

Figure 3: Evoking an illusion sensation highly depends on

the inducer’s characteristics. As given in the first column,

the image having a low inducer frequency and a large in-

ducer thickness does not evoke an illusion sensation. How-

ever, the illusion sensation is easily observable when we in-

crease the frequency and decrease the thickness of the in-

ducer as given in the second column.

Figure 4: Example assimilation illusions with different

shapes and colors used in algorithm design (Ulucan et al.,

2022a; Bach, 2023; Kitaoka, 2023).

ingful to reproduce images that do not cause an il-

lusion sensation. Thus, we create a set containing a

range of images that start to evoke an illusion sensa-

tion to images with a strong illusion effect (Fig. 4).

4.2 Discussion on Color Illusions

After forming our set, we extract the block size β and the controlling parameter σ of the Gaussian kernel by analyzing how computational color constancy algorithms reproduce distinct color assimilation illusions with various shapes, inducer frequencies, and colors. Since no error metric has been designed for this task, we carry out a visual investigation that takes the intensities of the pixels in the target regions into account. The lack of an objective quantitative evaluation method is not surprising, since color illusions are sensations, and sensations cannot be measured quantitatively. Distinct observers do not perceive illusions in exactly the same way, even if we only consider observers with normal vision, since sensory processing differs between individuals (Emery and Webster, 2019); e.g., even if distinct observers perceive a target as blue, the perceived shade of blue may differ between them. This is why studies mimicking illusions usually report the intensity change within the target image (Marini and Rizzi, 2000; Funt et al., 2004; Corney and Lotto, 2007).

Although we cannot measure sensations quantitatively, the closest alternative for obtaining quantitative measurements might be to conduct experiments with observers similar to color-matching experiments. In such experiments, observers might be asked to match the colors they perceive in the target with a test patch whose RGB triplet they can control. Ground-truth images could thus be obtained for illusions and used for evaluation. Nevertheless, performing such experiments and forming a dataset containing ground truths is beyond the scope of this work.

Figure 5 (panels: β = 2, β = 8, β = 16, β = 32; σ = 4, σ = 16, σ = 24, σ = 48): Examples of the parameter selection procedure with several parameter combinations on different illusions. Experiments are carried out with different parameter combinations, and the parameters that work best on average over all illusions are chosen. This analysis is performed visually, since there is no quantitative method for this task.

Figure 6 (panels: Input Image; Input Target; Estimates; Output Target): Results of the proposed approach with different methods. (Top to bottom) Results of gray world, white-patch Retinex, shades of gray, and gray edge.

The key point in selecting parameters is that they

have to be chosen so that they are able to mimic our

sensation on images having both weak and strong illu-

sion sensations. Thus, the inducer’s frequency range

is limited to the interval where we can create illusions.

As presented in Fig. 5, the best results are obtained with a block size of 8 × 8. Smaller blocks generally do not produce satisfactory results when our approach is applied with certain color constancy algorithms. This is not surprising: color constancy algorithms depend on image statistics, and applying them to a small number of pixels can violate their assumptions; e.g., the gray world method is only valid when a sufficient number of different colors is present in the scene (Ebner, 2009). On the other hand, with larger block sizes our sensation on color assimilation illusions cannot be accurately reproduced, i.e., shapes and textures degrade, because locality has to be preserved for color illusions (Ulucan et al., 2022a).
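The block-size trade-off can be illustrated with a minimal sketch. It assumes a linear RGB image stored as a NumPy array of shape (H, W, 3) and uses gray world as the global estimator; `gray_world` and `blockwise_estimates` are hypothetical names, not the authors' code. Every β × β block receives its own estimate, so a block that covers only one uniformly colored surface simply returns that surface's color, which is the gray world failure mode on too few distinct colors described above.

```python
import numpy as np

def gray_world(pixels):
    """Gray world estimate: mean RGB of the pixels, normalized to unit length."""
    est = pixels.reshape(-1, 3).mean(axis=0)
    return est / (np.linalg.norm(est) + 1e-12)

def blockwise_estimates(img, beta=8, estimator=gray_world):
    """Apply a global estimator to each non-overlapping beta x beta block.

    Returns an array of shape (ceil(H/beta), ceil(W/beta), 3);
    blocks on the bottom/right border may be smaller than beta x beta.
    """
    h, w, _ = img.shape
    nby, nbx = -(-h // beta), -(-w // beta)  # ceiling division
    est = np.zeros((nby, nbx, 3))
    for by in range(nby):
        for bx in range(nbx):
            block = img[by * beta:(by + 1) * beta, bx * beta:(bx + 1) * beta]
            est[by, bx] = estimator(block)
    return est

# A uniformly red surface under white light: each 8 x 8 block sees only red,
# so gray world attributes the surface color to the illuminant.
red_scene = np.zeros((16, 16, 3))
red_scene[..., 0] = 0.8
print(blockwise_estimates(red_scene, beta=8)[0, 0])  # approximately [1, 0, 0]
```

Larger blocks contain more distinct colors and therefore satisfy the gray world assumption more often, at the cost of mixing regions that should keep separate estimates.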

Similar to the block size, σ cannot be too large if locality is to be preserved. As with the block size, this controlling parameter is determined by visually analyzing the pixel-wise estimates rather than only the target regions, since besides reproducing the colors we perceive, it is also important to preserve the shapes of the target regions in the illusions (Fig. 5). Therefore, although more than one parameter may seem a proper choice in Fig. 5, we select the combination that reproduces the perceived colors as closely as possible without damaging the shapes across all color illusions in our set. Accordingly, we set σ to 24, i.e., three times β, so that at least three estimates fall inside the area of support.
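How β and σ interact can be sketched as follows, under stated assumptions: per-block estimates are upsampled to pixel resolution by nearest-neighbor replication and then blurred with a separable Gaussian of standard deviation σ pixels, truncated at 3σ with edge padding. The upsampling scheme, the padding, and the names `gaussian_kernel`, `blur_axis`, and `pixelwise_from_blocks` are illustrative assumptions rather than a description of the exact implementation. With β = 8 and σ = 24, one standard deviation spans 24 / 8 = 3 blocks along each axis, which matches the requirement that at least three estimates fall inside the area of support.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at 3 sigma and normalized to sum to 1."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur_axis(arr, kernel, axis):
    """Convolve every 1-D slice along `axis` with `kernel`, padding by edge replication."""
    r = len(kernel) // 2
    pad = [(0, 0)] * arr.ndim
    pad[axis] = (r, r)
    padded = np.pad(arr, pad, mode="edge")
    # 'valid' convolution of a slice of length n + 2r with a kernel of length 2r + 1
    # returns exactly n samples, so the output has the original size.
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)

def pixelwise_from_blocks(block_est, shape, beta=8, sigma=24.0):
    """Upsample (nby, nbx, 3) block estimates to `shape` and smooth them with a Gaussian."""
    h, w = shape
    up = np.repeat(np.repeat(block_est, beta, axis=0), beta, axis=1)[:h, :w]
    k = gaussian_kernel(sigma)
    smooth = blur_axis(blur_axis(up, k, axis=0), k, axis=1)
    # Renormalize each pixel's RGB estimate to unit length.
    return smooth / (np.linalg.norm(smooth, axis=2, keepdims=True) + 1e-12)
```

Increasing σ relative to β mixes estimates from more distant blocks, which is why σ cannot grow too large without eroding the locality that the illusions require.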

Fig. 6 presents the results of our approach with different global color constancy methods on color assimilation illusions. The examples show that, with our modification, computational color constancy algorithms respond to color illusions similarly to the human visual system. Our approach transforms these algorithms so that they provide pixel-wise estimates for illusions that are consistent with our perception.
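For reference, simplified forms of the four global estimators shown in Fig. 6 are sketched below. Each maps a set of RGB pixels to a single unit-norm illuminant estimate, so any of them could be applied to an individual block. The Minkowski exponent p = 6 and the omission of Gaussian pre-smoothing in gray edge are assumptions of this sketch; published implementations typically expose both as parameters.

```python
import numpy as np

def _normalize(v):
    return v / (np.linalg.norm(v) + 1e-12)

def white_patch(img):
    """White-patch Retinex: per-channel maximum."""
    return _normalize(img.reshape(-1, 3).max(axis=0))

def gray_world(img):
    """Gray world: per-channel mean."""
    return _normalize(img.reshape(-1, 3).mean(axis=0))

def shades_of_gray(img, p=6):
    """Shades of gray: per-channel Minkowski p-norm (p = 1 is gray world, p -> inf is white patch)."""
    x = img.reshape(-1, 3)
    return _normalize(np.mean(x ** p, axis=0) ** (1.0 / p))

def gray_edge(img, p=6):
    """1st-order gray edge, simplified without pre-smoothing:
    Minkowski p-norm of per-channel gradient magnitudes."""
    gy, gx = np.gradient(img, axis=(0, 1))
    mag = np.sqrt(gx ** 2 + gy ** 2).reshape(-1, 3)
    return _normalize(np.mean(mag ** p, axis=0) ** (1.0 / p))
```

Under a spatially uniform illuminant and achromatic surfaces, all four return the normalized illuminant exactly; they differ once the scene contains colored surfaces, which is where their underlying assumptions start to matter.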

5 EXPERIMENTS FOR COLOR CONSTANCY

In this section, we present our experimental setup and results on color constancy, and show that the parameters selected purely from color assimilation illusions yield the best statistical outcomes on a multi-illuminant color constancy benchmark.

5.1 Experimental Setup

5.1.1 Algorithms

We investigate the effect of our approach on both multi-illuminant and global color constancy. We compare our outcomes with the following algorithms: white-patch Retinex (Land, 1977), gray world (Buchsbaum, 1980), shades of gray (Finlayson and Trezzi, 2004), 1st order gray edge (Van De Weijer et al., 2007), weighted gray edge (Gijsenij et al., 2009), double-opponent cells based color constancy (Gao et al., 2015), PCA based color constancy (Cheng et al., 2014), color constancy with local surface reflectance estimation (Gao et al., 2014), mean shifted gray pixels (Qian et al., 2018), gray pixels (Qian et al., 2019), block-based color constancy (Ulucan et al., 2022b), biologically inspired color constancy (Ulucan et al., 2022a), color constancy convolutional autoencoder (Laakom et al., 2019), sensor-independent illumination estimation (Afifi and Brown, 2019), cross-camera convolutional color constancy (Afifi et al., 2021), local space average color (Ebner, 2003), the method of Gijsenij et al. (Gijsenij et al., 2011), conditional random fields (Beigpour et al., 2013), N-white balancing (Akazawa et al., 2022), visual mechanism based color constancy (Gao et al., 2019), retinal inspired color constancy (Zhang et al., 2016), color constancy weighting factors (Hussain and Akbari, 2018), color constancy adjustment based on texture of image (Hussain et al., 2019), GAN-based color constancy (Das et al., 2021), and CNN-based color constancy (Bianco et al., 2017). All traditional methods whose code is available are used without any modification or optimization, while the results of the remaining algorithms are taken from their publications.

5.1.2 Datasets

To benchmark our technique on images captured under multiple light sources, we choose the Multiple Illuminant and Multiple Object (MIMO) dataset (Beigpour et al., 2013), one of the most notable multi-illuminant datasets in computational color constancy, on which many algorithms have already been benchmarked (Buzzelli et al., 2023). The MIMO dataset contains scenes taken under controlled illumination conditions together with their pixel-wise ground truths. To test our approach on images captured under global illumination conditions, we use two color constancy datasets: the Recommended ColorChecker (Hemrit et al., 2018) and INTEL-TAU (Laakom et al., 2021) datasets. The Recommended ColorChecker dataset is a modified version of the Gehler-Shi dataset (Gehler et al., 2008) and contains indoor and outdoor scenes captured with two different cameras. The INTEL-TAU dataset is a recent color constancy benchmark containing both indoor and outdoor images captured with three different cameras. During the evaluation, images captured with different cameras are combined into a single set, since it is crucial to test the algorithms on images whose camera spectral characteristics are not known in advance.

Table 1: Analysis of the parameters on the MIMO dataset. The mean of the pixel-wise angular error between the estimates and the ground truth is provided. The best combination is highlighted. The parameter combinations that are selected from color illusions work better for color constancy.

| Block size β \ Scaling factor σ | 2 | 4 | 8 | 12 | 16 | 20 | 24 | 28 | 32 | 48 |
|---|---|---|---|---|---|---|---|---|---|---|
| 4 | 4.828 | 4.374 | 4.058 | 3.929 | 3.875 | 3.859 | 3.859 | 3.865 | 3.874 | 3.918 |
| 8 | 4.807 | 4.281 | 3.883 | 3.735 | 3.677 | 3.659 | **3.658** | 3.666 | 3.675 | 3.721 |
| 12 | 4.897 | 4.450 | 4.004 | 3.821 | 3.737 | 3.706 | 3.701 | 3.705 | 3.713 | 3.746 |
| 16 | 4.932 | 4.591 | 4.100 | 3.894 | 3.804 | 3.763 | 3.748 | 3.749 | 3.758 | 3.819 |
| 20 | 4.918 | 4.692 | 4.255 | 4.052 | 3.954 | 3.905 | 3.882 | 3.871 | 3.867 | 3.867 |
| 24 | 4.964 | 4.770 | 4.335 | 4.104 | 3.998 | 3.948 | 3.931 | 3.928 | 3.931 | 3.968 |
| 28 | 4.973 | 4.927 | 4.458 | 4.173 | 4.014 | 3.928 | 3.884 | 3.859 | 3.845 | 3.842 |
| 32 | 5.083 | 5.042 | 4.599 | 4.322 | 4.155 | 4.054 | 3.989 | 3.949 | 3.924 | 3.895 |
| 48 | 4.969 | 5.481 | 4.759 | 4.583 | 4.424 | 4.303 | 4.212 | 4.144 | 4.091 | 3.954 |

5.1.3 Error Metric

To quantitatively evaluate the modified versions of the color constancy algorithms, we use the angular error, a standard error metric in the field of color constancy. The angular error ($\varepsilon$) between the ground-truth light source ($L_{gt}$) and the estimated illuminant ($L_{est}$) is calculated as follows:

$$\varepsilon(L_{gt}, L_{est}) = \cos^{-1}\left(\frac{L_{gt} \cdot L_{est}}{\lVert L_{gt} \rVert \, \lVert L_{est} \rVert}\right). \qquad (9)$$
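Equation (9) translates directly into a short function. Reporting the error in degrees is an assumption of this sketch (it is the usual convention in the color constancy literature), the name `angular_error` is illustrative, and the clipping guards against floating-point values marginally outside [−1, 1]. Applied to arrays of shape (H, W, 3), the same function yields a pixel-wise error map that can be averaged.

```python
import numpy as np

def angular_error(l_gt, l_est):
    """Angular error in degrees between ground-truth and estimated illuminants, Eq. (9).

    Accepts single RGB triplets or arrays of shape (..., 3), e.g. pixel-wise maps.
    """
    l_gt = np.asarray(l_gt, dtype=float)
    l_est = np.asarray(l_est, dtype=float)
    dot = np.sum(l_gt * l_est, axis=-1)
    norms = np.linalg.norm(l_gt, axis=-1) * np.linalg.norm(l_est, axis=-1)
    cos = np.clip(dot / norms, -1.0, 1.0)  # rounding can push the ratio slightly above 1
    return np.degrees(np.arccos(cos))
```

Because the inputs are normalized inside the function, the metric depends only on the chromaticity direction of each estimate and not on its overall intensity.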

We report the mean and the median of the errors

for the multi-illuminant cases, while we report the

mean, the median, the best 25%, the worst 25%, and

the maximum of the angular error for the global cases.
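The summary statistics listed above can be computed as follows. Here the best and worst 25% are taken as the means of the lowest and highest quarter of the sorted errors, which is the common convention; how the quarter is rounded for sample sizes not divisible by four, and the name `summary_statistics`, are assumptions of this sketch. For the multi-illuminant case, each image's pixel-wise error map would presumably first be reduced to a per-image value before the mean and median are taken.

```python
import numpy as np

def summary_statistics(errors):
    """Mean, median, best 25%, worst 25% and maximum of a set of angular errors.

    Best/worst 25% are the means of the lowest/highest quarter of the sorted errors.
    """
    e = np.sort(np.asarray(errors, dtype=float))
    q = max(1, int(round(0.25 * len(e))))  # size of one quarter (at least one sample)
    return {
        "mean": e.mean(),
        "median": np.median(e),
        "best25": e[:q].mean(),
        "worst25": e[-q:].mean(),
        "max": e[-1],
    }

stats = summary_statistics([3, 1, 8, 5, 2, 7, 4, 6])
```

For the eight example errors, the best 25% averages the two smallest values (1 and 2) and the worst 25% the two largest (7 and 8).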

5.2 Discussion on Color Constancy

First, we analyze the parameters selected during the investigation of color illusions to show that they also give the best outcomes for multi-illuminant color constancy (Table 1). The best result on the MIMO dataset (3.658) is obtained with β = 8 and σ = 24, the same values used to reproduce the color illusions. That parameters obtained purely by analyzing color assimilation illusions also give the best results on a multi-illuminant color constancy benchmark underlines the link between color illusions and multi-illuminant color constancy, and suggests that, as we argue, these two phenomena should be investigated further together.
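The selection in Table 1 can be checked by encoding the table and locating its minimum. The values below are copied from Table 1; the variable names are illustrative.

```python
import numpy as np

sigmas = [2, 4, 8, 12, 16, 20, 24, 28, 32, 48]
betas = [4, 8, 12, 16, 20, 24, 28, 32, 48]
# Mean pixel-wise angular errors on MIMO from Table 1 (rows: beta, columns: sigma).
errors = np.array([
    [4.828, 4.374, 4.058, 3.929, 3.875, 3.859, 3.859, 3.865, 3.874, 3.918],
    [4.807, 4.281, 3.883, 3.735, 3.677, 3.659, 3.658, 3.666, 3.675, 3.721],
    [4.897, 4.450, 4.004, 3.821, 3.737, 3.706, 3.701, 3.705, 3.713, 3.746],
    [4.932, 4.591, 4.100, 3.894, 3.804, 3.763, 3.748, 3.749, 3.758, 3.819],
    [4.918, 4.692, 4.255, 4.052, 3.954, 3.905, 3.882, 3.871, 3.867, 3.867],
    [4.964, 4.770, 4.335, 4.104, 3.998, 3.948, 3.931, 3.928, 3.931, 3.968],
    [4.973, 4.927, 4.458, 4.173, 4.014, 3.928, 3.884, 3.859, 3.845, 3.842],
    [5.083, 5.042, 4.599, 4.322, 4.155, 4.054, 3.989, 3.949, 3.924, 3.895],
    [4.969, 5.481, 4.759, 4.583, 4.424, 4.303, 4.212, 4.144, 4.091, 3.954],
])
i, j = np.unravel_index(np.argmin(errors), errors.shape)
print(betas[i], sigmas[j], errors[i, j])  # 8 24 3.658
```

The minimum is shallow along σ for β = 8: σ = 20 gives 3.659 and σ = 28 gives 3.666, so the result is not sensitive to the exact choice of σ in that neighborhood.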

After we investigate the parameters obtained from

illusions, we analyze our method’s effectiveness on

color constancy benchmarks. The method we devel-

oped based on our observations on color assimila-

tion illusions is able to transform global color con-

stancy methods into algorithms, which can provide

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

32

Table 2: Statistical results on the MIMO dataset. For each

metric, the top three results are highlighted.

Real-World Laboratory

Algorithms Mean Median Mean Median

Single-Illuminant Algorithms

White-Patch Retinex 6.8 5.7 7.8 7.6

Gray World 5.3 4.3 3.5 2.9

Shades of Gray 6.2 3.7 4.9 4.6

1

st

order Gray Edge 8.0 4.7 4.3 4.1

Weighted Gray Edge 7.9 4.1 4.4 4.0

Double-Opponent Cells based Color Constancy 7.9 5.0 4.6 4.4

PCA based Color Constancy 7.7 3.5 4.1 3.8

Local Surface Reflectance Estimation 4.9 3.8 3.9 3.5

Mean Shifted Gray Pixels 5.8 5.0 13.3 12.6

Block-based Color Constancy 4.8 3.6 3.1 2.8

Biologically Inspired Color Constancy 5.0 4.3 4.2 4.1

Color Constancy Convolutional Autoencoder 12.4 12.3 13.9 14.1

Sensor-Independent Color Constancy 5.9 5.1 9.0 9.0

Cross-Camera Convolutional Color Constancy 11.9 13.0 7.0 7.1

Multi-Illuminant Algorithms

Local Space Average Color 4.9 4.2 2.7 2.5

Gijsenij et al. with White-Patch Retinex 4.2 3.8 5.1 4.2

Gijsenij et al. with Gray-World 4.4 4.3 6.4 5.9

Gijsenij et al. with 1

st

order Gray-Edge 9.1 9.2 4.8 4.2

Conditional Random Fields with White-Patch Retinex 4.1 3.3 3.0 2.8

Conditional Random Fields with Gray-World 3.7 3.4 3.1 2.8

Conditional Random Fields with 1

st

order Gray-Edge 4.0 3.4 2.7 2.6

N-White Balancing with White-Patch Retinex 4.1 3.4 2.6 2.2

N-White Balancing with Gray World 4.6 4.5 3.7 3.1

N-White Balancing with Shades of Gray 4.2 3.8 2.8 2.3

N-White Balancing with 1

st

order Gray Edge 4.7 3.6 2.5 2.2

Visual Mechanism based Color Constancy with Bottom-Up 5.0 4.0 3.7 3.4

Visual Mechanism based Color Constancy with Top-Down 3.8 2.9 2.8 2.8

Retinal Inspired Color Constancy 5.2 4.3 3.2 2.7

Color Constancy Weighting Factors 3.8 3.8 1.6 1.5

Color Constancy Adjustment based on Texture of Image 3.8 3.8 2.6 2.6

Gray Pixels with 2 clusters 3.7 3.3 3.0 2.5

Gray Pixels with 4 clusters 3.9 3.4 2.7 2.2

Gray Pixels with 6 clusters 3.9 3.4 2.6 2.1

CNNs-based Color Constancy 3.3 3.1 2.3 2.2

GAN-based Color Constancy 3.5 2.9 - -

Proposed w/ White-Patch Retinex 3.6 2.9 2.8 2.5

Proposed w/ Gray World 3.9 3.5 2.9 2.7

Proposed w/ Shades of Gray 3.7 2.9 2.9 2.6

Proposed w/ 1

st

order Gray Edge 3.9 3.3 2.8 2.5

Proposed w/ Weighted Gray Edge 3.8 3.3 2.8 2.5

Proposed w/ Double-Opponent Cells based Color Constancy 3.8 3.2 2.9 2.7

Table 3: Statistical results on global color constancy

datasets. The top three results are highlighted.

RECommended ColorChecker INTEL-TAU

Algorithms Mean Median B.25% W.25% Max. Mean Median B.25% W.25% Max.

White-Patch Retinex 10.2 9.1 1.6 20.4 50.5 11.0 13.2 1.8 19.4 43.2

Gray World 4.7 3.6 0.9 10.4 24.8 4.9 3.9 1.0 10.6 31.0

Shades of Gray 5.8 4.2 0.7 13.7 32.4 5.5 4.2 1.0 12.3 35.6

1

st

order Gray Edge 6.4 3.8 0.9 15.8 37.2 6.1 4.2 1.0 14.3 50.7

Weighted Gray Edge 6.1 3.3 0.7 15.5 33.2 6.0 3.6 0.8 14.9 38.9

Double-Opponent Cells based Color Constancy 7.2 4.2 0.7 18.0 48.5 7.2 4.7 0.8 17.0 49.4

PCA based Color Constancy 4.1 2.5 0.5 10.1 28.7 4.5 3.0 0.7 10.6 36.8

Local Surface Reflectance Estimation 4.7 3.7 1.5 9.7 23.5 4.2 3.4 1.0 8.6 31.4

Mean Shifted Gray Pixels 3.8 2.9 0.7 8.3 19.4 3.6 2.6 0.6 8.2 37.6

Gray Pixels 3.1 1.9 0.4 8.0 28.7 3.3 2.2 0.5 8.0 31.5

Biologically Inspired Color Constancy 4.4 3.3 0.8 9.8 23.4 4.1 3.1 0.7 9.4 31.5

Block-based Color Constancy 3.8 3.1 1.4 7.3 20.5 4.2 3.6 1.2 8.5 23.9

Color Constancy Convolutional Autoencoder 2.1 1.9 0.8 4.0 - 3.4 2.7 0.9 7.0 -

Sensor-Independent Color Constancy 2.7 1.9 0.5 6.5 - 3.4 2.4 0.7 7.8 -

Cross-Camera Convolutional Color Constancy 2.5 1.9 0.5 5.4 - 2.5 1.7 0.5 5.9 -

Proposed w/ White-Patch Retinex 3.7 3.2 1.1 7.5 17.9 3.9 3.0 0.9 8.2 31.2

Proposed w/ Gray World 4.2 3.5 0.9 8.7 19.5 4.2 3.2 0.8 9.2 32.0

Proposed w/ Shades of Gray 4.0 3.4 0.9 8.3 19.0 4.0 3.1 0.7 8.9 32.0

Proposed w/ 1

st

order Gray Edge 4.1 3.4 0.9 8.4 19.2 4.1 3.1 0.7 9.0 31.9

Proposed w/ Weighted Gray Edge 4.0 3.4 0.9 8.4 19.2 4.1 3.1 0.7 9.0 31.9

Proposed w/ Double-Opponent Cells based Color Constancy 4.0 3.4 1.0 8.3 19.0 4.0 3.0 0.7 8.8 31.6

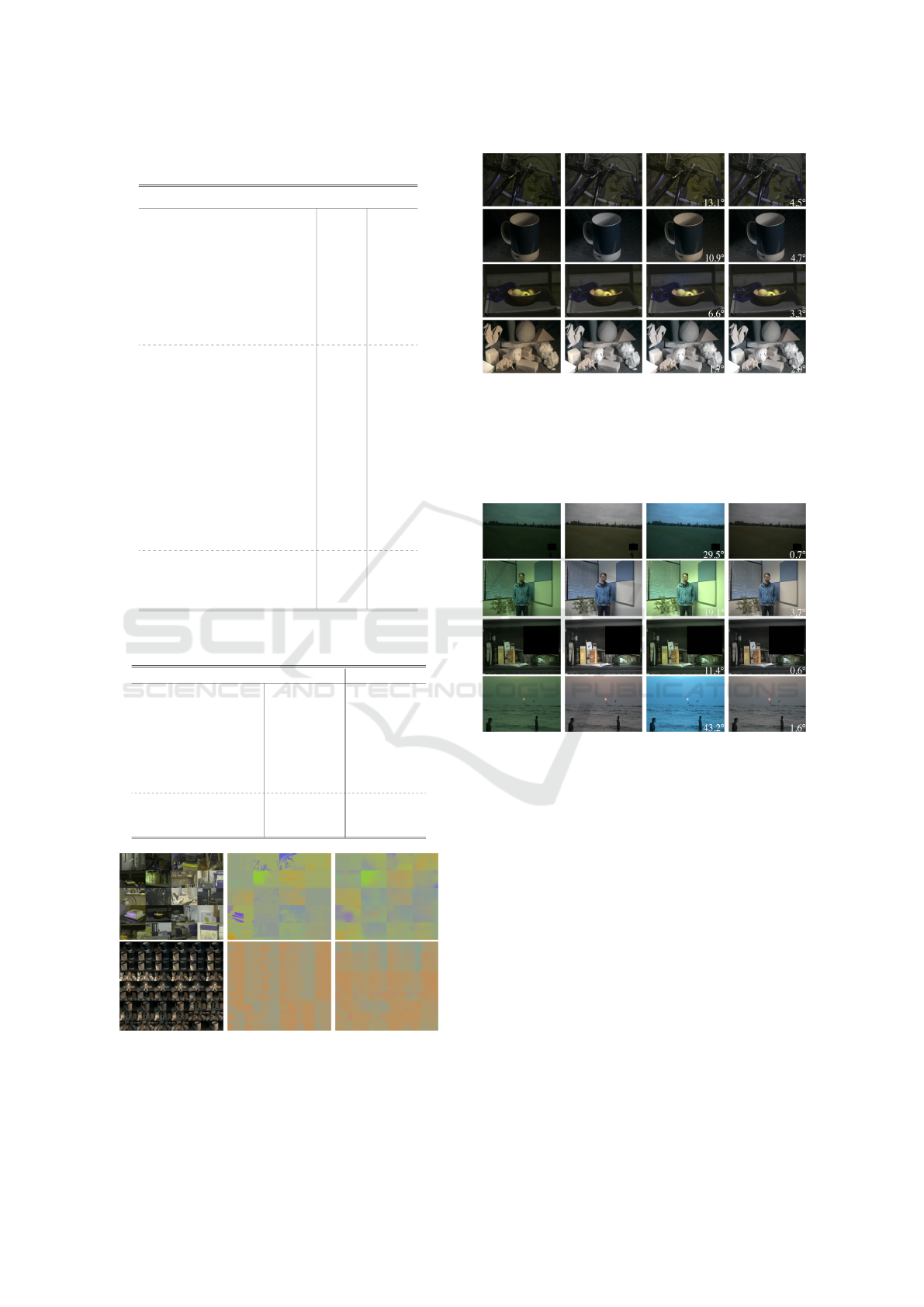

Figure 7: Results on MIMO dataset, the top row contains

scenes from the real-world set, and the bottom row shows

images from the laboratory set. (Left-to-right) The input

scenes, ground truths, and pixel-wise estimations via the

proposed approach with white-patch Retinex.

Figure 8: Random examples from the MIMO dataset. (First

two rows: Left-to-right) Input, ground truth, the result

of white-patch Retinex, and proposed method with white-

patch Retinex. (Last two rows: Left-to-right) Input, ground

truth, the result of gray pixels, and proposed method with

shades of gray. Errors are given at the bottom right corner

of the images.

Figure 9: Random worst case examples from global

datasets. (First two rows: Left-to-right) Input, ground

truth, the result of double-opponent cells based color con-

stancy and proposed method with double-opponent cells

based color constancy. (Last two rows: Left-to-right) In-

put, ground truth, the result of white-patch Retinex and pro-

posed method with white-patch Retinex. Errors are given at

the bottom right corner of the images.

pixel-wise illumination estimates (Table 2). As we

mentioned, our method does not rely on prior infor-

mation or utilize any learning-based techniques, i.e.,

clustering or segmentation methods. We see the in-

dependence of prior information and having no re-

quirement for a high amount of data as an advan-

tage of our method. Since as pointed out in several

color constancy studies we usually cannot have infor-

mation about the number of illuminants illuminating

the scene, and for multi-illuminant color constancy, it

is very challenging to form a large-scale dataset with

ground truth illumination (Gijsenij et al., 2011; Das

et al., 2021).


The results of our experiments support our argument that the relationship between color assimilation illusions and color constancy should be taken into account. A simple approach that reproduces our sensation on color assimilation illusions is able to output pixel-wise estimates for multi-illuminant color constancy (Fig. 7). The modified algorithms, using the parameters obtained from color illusions, produce very competitive results compared with methods designed specifically for multi-illuminant color constancy (Fig. 8), and they clearly outperform several of them; e.g., on the real-world set, the proposed approach with white-patch Retinex outperforms most of the algorithms specifically designed for multi-illuminant purposes. Another noteworthy outcome is that, on the real-world set, our approach improves existing algorithms more effectively on average than other similar strategies such as Gijsenij et al., conditional random fields, and N-white balancing (Table 2). For the laboratory set, our results are not the best but remain competitive. A likely reason lies in the block size and the controlling parameter of the Gaussian kernel of our approach: these parameters were determined from color assimilation illusions, which, like the real-world set of the MIMO dataset, contain frequent color changes, whereas laboratory images are less complex than real-world scenes (Fig. 7). We would like to emphasize that our learning-free approach produces results competitive with those of learning-based algorithms. It is also important to note that the GAN-based model, which has the second-best mean error on the real-world set of the MIMO dataset, uses illuminants from the MIMO dataset during training.

One might think that color assimilation illusions and global color constancy are not directly related, since global color constancy algorithms provide only a single RGB triplet rather than pixel-wise estimates. Yet, as we argued, our approach for reproducing the behavior of the human visual system on color illusions improves the outcomes of these algorithms, as presented in Table 3. The mean angular errors of the modified algorithms are competitive with the state-of-the-art. Furthermore, the errors for the worst cases decrease substantially, especially for algorithms that use the maximum intensities as illumination estimates of the scene (Fig. 9); e.g., for white-patch Retinex on the Recommended ColorChecker dataset, the worst-25% error drops from 20.4 to 7.5 and the maximum error from 50.5 to 17.9. Additionally, the lowest maximum error on the Recommended ColorChecker dataset is obtained via our approach. As pointed out in color constancy studies, it is important to improve the outcomes for the most difficult cases.

As a final note, even though we extract fixed, hand-selected parameters and use them to modify learning-free approaches, we are able to improve the accuracy of these methods substantially. This outcome might indicate that easily producible color assimilation illusions could be used as training data for learning-based color constancy models under mixed-illumination conditions. Since generated illusions are free from the sensor specifications of any capturing device, they might mitigate some of the current challenges of learning-based models and benchmarks, e.g., data bias due to camera sensor specifications and the type of illuminant (Ulucan et al., 2022b; Buzzelli et al., 2023). Considering the outcomes of this work, we may deduce that color assimilation illusions are valuable tools for color constancy, and hence the connection between these two phenomena should be investigated in further detail. This investigation might lead us to design more robust models that mimic the abilities of the human visual system.
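The suggestion that illusions are easy to produce can be made concrete with a minimal, hypothetical generator for a stripe-based color assimilation stimulus. The stripe layout, the colors, and the name `stripe_assimilation_illusion` are illustrative assumptions, not the stimuli used in this work; the `stripe` parameter sets the inducer frequency, which would need to stay within the range where the illusion is actually perceived.

```python
import numpy as np

def stripe_assimilation_illusion(height=128, width=256, stripe=4,
                                 target=(0.6, 0.6, 0.6),
                                 inducer_left=(0.9, 0.1, 0.1),
                                 inducer_right=(0.1, 0.1, 0.9)):
    """Hypothetical stripe-based color assimilation stimulus.

    Horizontal stripes of height `stripe` alternate between the target color
    and an inducer color; the left half uses `inducer_left`, the right half
    `inducer_right`. Target stripes have identical RGB values in both halves.
    Returns the RGB image and a boolean mask of the target pixels.
    """
    img = np.empty((height, width, 3))
    img[:, : width // 2] = inducer_left
    img[:, width // 2 :] = inducer_right
    target_rows = (np.arange(height) // stripe) % 2 == 0  # every other stripe
    img[target_rows] = target
    mask = np.zeros((height, width), dtype=bool)
    mask[target_rows] = True
    return img, mask

img, mask = stripe_assimilation_illusion()
```

Because the target pixels are physically identical in both halves, any difference between the halves in a pixel-wise estimate can only come from the surrounding inducers, and the returned mask marks exactly those target pixels.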

6 CONCLUSION

Color illusions and color constancy are two phenomena that can help us reveal the structure of the brain and design artificial systems that come one step closer to mimicking the human visual system. In this study, we investigated color illusions from the perspective of computational color constancy to find out what we can learn from the link between these two phenomena. We argued that an approach that reproduces the behavior of the human visual system on color illusions by utilizing global color constancy algorithms can not only transform global color constancy methods into multi-illuminant color constancy algorithms but also increase their performance on single-illuminant benchmarks. Accordingly, we developed a simple yet effective method that uses global color constancy algorithms to reproduce the behavior of the human visual system on color assimilation illusions. After investigating color illusions, we used our findings to test whether our argument holds. According to the experiments, our method transforms global color constancy algorithms into multi-illuminant color constancy algorithms that produce results competitive with the state-of-the-art without requiring any prior information about the scene. Moreover, the effectiveness of global color constancy algorithms modified by our approach increases substantially on single-illuminant color constancy benchmarks compared to the original versions of the algorithms, especially for the worst cases. From our findings, we suggest that these two phenomena should be investigated further together, taking more color illusions into account.


REFERENCES

Afifi, M., Barron, J. T., LeGendre, C., Tsai, Y.-T., and

Bleibel, F. (2021). Cross-camera convolutional color

constancy. In Int. Conf. Comput. Vision, pages 1981–

1990, Montreal, QC, Canada. IEEE/CVF.

Afifi, M. and Brown, M. S. (2019). Sensor-independent illu-

mination estimation for DNN models. In Brit. Mach.

Vision Conf., Cardiff, UK. BMVA Press.

Afifi, M. and Brown, M. S. (2020). Deep white-balance

editing. In Conf. Comput. Vision Pattern Recognit.,

pages 1397–1406, Seattle, WA, USA. IEEE/CVF.

Afifi, M., Brubaker, M. A., and Brown, M. S. (2022). Auto

white-balance correction for mixed-illuminant scenes.

In Winter Conf. Appl. Comput. Vision, pages 1210–

1219, Waikoloa, HI, USA. IEEE/CVF.

Akazawa, T., Kinoshita, Y., Shiota, S., and Kiya, H. (2022).

N-white balancing: White balancing for multiple il-

luminants including non-uniform illumination. IEEE

Access, 10:89051–89062.

Bach, M. (Last accessed: 18.02.2023). Color assimilation

illusions. michaelbach.de/ot.

Bach, M. and Poloschek, C. M. (2006). Optical illusions.

Adv. Clin. Neurosci. Rehabil., 6(2):20–21.

Beigpour, S., Riess, C., Van De Weijer, J., and An-

gelopoulou, E. (2013). Multi-illuminant estimation

with conditional random fields. IEEE Trans. Image

Process., 23:83–96.

Bianco, S., Cusano, C., and Schettini, R. (2017). Sin-

gle and multiple illuminant estimation using convolu-

tional neural networks. IEEE Trans. Image Process.,

26(9):4347–4362.

Bleier, M., Riess, C., Beigpour, S., Eibenberger, E., Angelopoulou, E., Tröger, T., and Kaup, A. (2011). Color constancy and non-uniform illumination: Can existing algorithms work? In IEEE Int. Conf. Comput. Vision Workshops, pages 774–781. IEEE.

Brainard, D. H., Longère, P., Delahunt, P. B., Freeman, W. T., Kraft, J. M., and Xiao, B. (2006). Bayesian model of human color constancy. J. Vision, 6(11):10–10.

Brainard, D. H. and Radonjic, A. (2004). Color constancy.

The Visual Neurosciences, 1:948–961.

Buchsbaum, G. (1980). A spatial processor model for object

colour perception. J. Franklin Inst., 310:1–26.

Buzzelli, M., Zini, S., Bianco, S., Ciocca, G., Schettini, R.,

and Tchobanou, M. K. (2023). Analysis of biases in

automatic white balance datasets and methods. Color

Res. Appl., 48(1):40–62.

Cheng, D., Prasad, D. K., and Brown, M. S. (2014). Illu-

minant estimation for color constancy: Why spatial-

domain methods work and the role of the color distri-

bution. J. Opt. Soc. America A, 31:1049–1058.

Corney, D. and Lotto, R. B. (2007). What are lightness

illusions and why do we see them? PLoS Comput.

Biol., 3(9):e180.

Das, P., Liu, Y., Karaoglu, S., and Gevers, T. (2021).

Generative models for multi-illumination color con-

stancy. In Conf. Comput. Vision Pattern Recognit.,

pages 1194–1203, Montreal, BC, Canada. IEEE/CVF.

Dixon, E. L. and Shapiro, A. G. (2017). Spatial filtering,

color constancy, and the color-changing dress. J. Vi-

sion, 17(3):7–7.

Domislović, I., Vršnak, D., Subašić, M., and Lončarić, S. (2022). One-net: Convolutional color constancy simplified. Pattern Recognit. Letters, 159:31–37.

Drew, M. S., Joze, H. R. V., and Finlayson, G. D. (2012).

Specularity, the zeta-image, and information-theoretic

illuminant estimation. In Workshops Demonstrations:

Eur. Conf. Comput. Vision, pages 411–420, Florence,

Italy. Springer.

Ebner, M. (2003). Combining white-patch retinex and the

gray world assumption to achieve color constancy for

multiple illuminants. In Joint Pattern Recognit. Symp.,

pages 60–67, Magdeburg, Germany. Springer.

Ebner, M. (2004). A parallel algorithm for color constancy.

J. Parallel Distrib. Comput., 64:79–88.

Ebner, M. (2007). Color Constancy, 1st ed. Wiley Publish-

ing, ISBN: 0470058299.

Ebner, M. (2009). Color constancy based on local space

average color. Mach. Vision Appl., 20(5):283–301.

Ebner, M. (2011). On the effect of scene motion on color

constancy. Biol. Cybern., 105(5):319–330.

Emery, K. J. and Webster, M. A. (2019). Individual dif-

ferences and their implications for color perception.

Current Opinion Behavioral Sciences, 30:28–33.

Ershov, E., Tesalin, V., Ermakov, I., and Brown, M. S.

(2023). Physically-plausible illumination distribu-

tion estimation. In Int. Conf. Comput. Vision, pages

12928–12936. IEEE/CVF.

Finlayson, G. D. and Hordley, S. D. (2001). Color con-

stancy at a pixel. J. Opt. Soc. America A, 18(2):253–

264.

Finlayson, G. D. and Trezzi, E. (2004). Shades of gray and

colour constancy. In Color and Imag. Conf., pages 37–

41, Scottsdale, AZ, USA. Society for Imaging Science

and Technology.

Funt, B. V., Ciurea, F., and McCann, J. J. (2004). Retinex

in matlab™. J. Electron. Imag., 13(1).

Gao, S., Han, W., Yang, K., Li, C., and Li, Y. (2014). Ef-

ficient color constancy with local surface reflectance

statistics. In Eur. Conf. Comput. Vision, pages 158–

173, Zurich, Switzerland. Springer.

Gao, S., Zhang, M., Li, C., and Li, Y. (2017). Improv-

ing color constancy by discounting the variation of

camera spectral sensitivity. J. Opt. Soc. America A,

34:1448–1462.

Gao, S.-B., Ren, Y.-Z., Zhang, M., and Li, Y.-J. (2019).

Combining bottom-up and top-down visual mecha-

nisms for color constancy under varying illumination.

IEEE Trans. Image Process., 28(9):4387–4400.

Gao, S.-B., Yang, K.-F., Li, C.-Y., and Li, Y.-J.

(2015). Color constancy using double-opponency.

IEEE Transactions Pattern Anal. Mach. Intell.,

37(10):1973–1985.

Gegenfurtner, K. R. (1999). Reflections on colour con-

stancy. Nature, 402(6764):855–856.

Gehler, P. V., Rother, C., Blake, A., Minka, T., and Sharp, T. (2008). Bayesian color constancy revisited. In Conf. Comput. Vision Pattern Recognit., pages 1–8, Anchorage, AK, USA. IEEE.

Gijsenij, A., Gevers, T., and Van De Weijer, J. (2009).

Physics-based edge evaluation for improved color

constancy. In Conf. Comput. Vision Pattern Recognit.,

pages 581–588, Miami, FL, USA. IEEE.

Gijsenij, A., Lu, R., and Gevers, T. (2011). Color constancy

for multiple light sources. IEEE Trans. Image Pro-

cess., 21(2):697–707.

Gomez-Villa, A., Martin, A., Vazquez-Corral, J., and Bertalmío, M. (2019). Convolutional neural networks can be deceived by visual illusions. In Conf. Comput. Vision Pattern Recognit., pages 12309–12317, Long Beach, CA, USA. IEEE/CVF.

Hemrit, G., Finlayson, G. D., Gijsenij, A., Gehler, P.,

Bianco, S., Funt, B., Drew, M., and Shi, L. (2018). Re-

habilitating the colorchecker dataset for illuminant es-

timation. In Color Imag. Conf., pages 350–353, Van-

couver, BC, Canada. Society for Imaging Science and

Technology.

Hurlbert, A. (2007). Colour constancy. Current Biology,

17(21):R906–R907.

Hussain, M. A. and Akbari, A. S. (2018). Color constancy

algorithm for mixed-illuminant scene images. IEEE

Access, 6:8964–8976.

Hussain, M. A., Akbari, A. S., and Halpin, E. A. (2019).

Color constancy for uniform and non-uniform illu-

minant using image texture. IEEE Access, 7:72964–

72978.

Joze, H. R. V., Drew, M. S., Finlayson, G. D., and Rey, P.

A. T. (2012). The role of bright pixels in illumination

estimation. In Color Imag. Conf., pages 41–46, Los

Angeles, CA, USA. Society for Imaging Science and

Technology.

Kitaoka, A. (Last accessed: 18.02.2023). Color illusions.

ritsumei.ac.jp/ akitaoka/index-e.html.

Koenderink, J. J. and van Doorn, A. J. (2000). Blur and

disorder. J. Visual Communication Image Representa-

tion, 11(2):237–244.

Laakom, F., Raitoharju, J., Iosifidis, A., Nikkanen, J., and

Gabbouj, M. (2019). Color constancy convolutional

autoencoder. In Symp. Ser. Comput. Intell., pages

1085–1090, Xiamen, China. IEEE.

Laakom, F., Raitoharju, J., Nikkanen, J., Iosifidis, A., and

Gabbouj, M. (2021). Intel-tau: A color constancy

dataset. IEEE Access, 9:39560–39567.

Land, E. H. (1977). The retinex theory of color vision. Sci-

entific Amer., 237:108–129.

Land, E. H. and McCann, J. J. (1971). Lightness and retinex

theory. J. Opt. Soc. America, 61(1):1–11.

Linnell, K. J. and Foster, D. H. (1997). Space-average scene

colour used to extract illuminant information. John

Dalton’s Colour Vision Legacy, pages 501–509.

Marini, D. and Rizzi, A. (1997). A computational approach

to color illusions. In Int. Conf. Image Anal. Process,

pages 62–69, Florence, Italy. Springer.

Marini, D. and Rizzi, A. (2000). A computational approach

to color adaptation effects. Image Vision Comput.,

18(13):1005–1014.

Morimoto, T., Kusuyama, T., Fukuda, K., and Uchikawa,

K. (2021). Human color constancy based on the ge-

ometry of color distributions. J. Vision, 21(3):7–7.

Ono, T., Kondo, Y., Sun, L., Kurita, T., and Moriuchi,

Y. (2022). Degree-of-linear-polarization-based color

constancy. In Conf. Comput. Vision Pattern Recog-

nit., pages 19740–19749, New Orleans, LA, USA.

IEEE/CVF.

Qian, Y., Kamarainen, J.-K., Nikkanen, J., and Matas, J.

(2019). On finding gray pixels. In Conf. Com-

put. Vision Pattern Recognit., pages 8062–8070, Long

Beach, CA, USA. IEEE/CVF.

Qian, Y., Pertuz, S., Nikkanen, J., Kämäräinen, J.-K., and Matas, J. (2018). Revisiting gray pixel for statistical illumination estimation. arXiv preprint arXiv:1803.08326.

Sheth, B. R. and Young, R. (2016). Two visual pathways

in primates based on sampling of space: exploitation

and exploration of visual information. Frontiers Inte-

grative Neuroscience, 10:37.

Uchikawa, K., Fukuda, K., Kitazawa, Y., and MacLeod,

D. I. (2012). Estimating illuminant color based on lu-

minance balance of surfaces. J. Opt. Soc. America A,

29(2):A133–A143.

Ulucan, O., Ulucan, D., and Ebner, M. (2022a). BIO-CC:

Biologically inspired color constancy. In Brit. Mach.

Vision Conf., London, UK. BMVA Press.

Ulucan, O., Ulucan, D., and Ebner, M. (2022b). Color

constancy beyond standard illuminants. In Int. Conf.

Image Process., pages 2826–2830, Bordeaux, France.

IEEE.

Ulucan, O., Ulucan, D., and Ebner, M. (2023a). Block-

based color constancy: The deviation of salient pixels.

In Int. Conf. Acoust. Speech Signal Process., pages 1–

5, Rhodes Island, Greece. IEEE.

Ulucan, O., Ulucan, D., and Ebner, M. (2023b). Multi-scale

color constancy based on salient varying local spatial

statistics. Vis. Comput., pages 1–17.

Van De Weijer, J., Gevers, T., and Gijsenij, A. (2007). Edge-

based color constancy. IEEE Trans. Image Process.,

16:2207–2214.

Wang, F., Wang, W., Wu, D., and Gao, G. (2022).

Color constancy via multi-scale region-weighed net-

work guided by semantics. Frontiers Neurorobotics,

16:841426.

Yang, K.-F., Gao, S.-B., and Li, Y.-J. (2015). Efficient illu-

minant estimation for color constancy using grey pix-

els. In Conf. Comput. Vision Pattern Recognit., pages

2254–2263, Boston, USA. IEEE/CVF.

Zeki, S. (1993). A Vision of the Brain. Blackwell Science,

ISBN: 0632030545.

Zhang, X.-S., Gao, S.-B., Li, R.-X., Du, X.-Y., Li, C.-Y.,

and Li, Y.-J. (2016). A retinal mechanism inspired

color constancy model. IEEE Trans. Image Process.,

25(3):1219–1232.
