3D Nuclei Segmentation by Combining GAN Based Image Synthesis and

Existing 3D Manual Annotations

Xareni Galindo

1,∗

, Thierno Barry

1,∗

, Pauline Guyot

2

, Charlotte Rivi

`

ere

2,3,4 a

, R

´

emi Galland

1 b

and Florian Levet

1,5 c

1

CNRS, Interdisciplinary Institute for Neuroscience, IINS, UMR 5297, University of Bordeaux, Bordeaux, France

2

Univ. Lyon, Universit

´

e Claude Bernard Lyon 1, CNRS, Institut Lumi

`

ere Mati

`

ere, UMR 5306, 69622, Villeurbanne, France

3

Institut Universitaire de France (IUF), France

4

Institut Convergence PLAsCAN, Centre de Canc

´

erologie de Lyon, INSERM U1052-CNRS, UMR5286, Univ. Lyon,

Universit

´

e Claude Bernard Lyon 1, Centre L

´

eon B

´

erard, Lyon, France

5

CNRS, INSERM, Bordeaux Imaging Center, BIC, UAR3420, US 4, University of Bordeaux, Bordeaux, France

fl

Keywords:

Bioimaging, Deep Learning, Miscrocopy, Nuclei, 3D, Image Processing, GAN.

Abstract:

Nuclei segmentation is an important task in cell biology analysis that requires accurate and reliable methods,

especially within complex low signal to noise ratio images with crowded cells populations. In this context,

deep learning-based methods such as Stardist have emerged as the best performing solutions for segmenting

nucleus. Unfortunately, the performances of such methods rely on the availability of vast libraries of ground

truth hand-annotated data-sets, which become especially tedious to create for 3D cell cultures in which nuclei

tend to overlap. In this work, we present a workflow to segment nuclei in 3D in such conditions when no

specific ground truth exists. It combines the use of a robust 2D segmentation method, Stardist 2D, which

have been trained on thousands of already available ground truth datasets, with the generation of pair of 3D

masks and synthetic fluorescence volumes through a conditional GAN. It allows to train a Stardist 3D model

with 3D ground truth masks and synthetic volumes that mimic our fluorescence ones. This strategy allows to

segment 3D data that have no available ground truth, alleviating the need to perform manual annotations, and

improving the results obtained by training Stardist with the original ground truth data.

1 INTRODUCTION

The popularity of 3D cell cultures, such as organoids

or spheroids, has recently exploded due to their abil-

ity to offer valuable models to study human biol-

ogy, far more physiologically relevant than 2D cul-

tures (Jensen and Teng, 2020; Kapalczynska et al.,

2018). Nowadays, automatically acquiring hundreds

of organoids in 3D has become a reality thanks to the

advances in microscopy systems (Beghin et al., 2022).

Life scientists have therefore access to distributions

of nuclei in 3D for a wide diversity of cell types and

growing conditions, a key feature forming the basis of

advanced quantitative analysis of important cell func-

tions. However, 3D cellular cultures inherently dis-

a

https://orcid.org/0000-0002-5048-5662

b

https://orcid.org/0000-0001-7117-3281

c

https://orcid.org/0000-0002-4009-6225

∗

These two authors contributed equally

play a large diversity of nuclei features, shapes and

arrangements. And 3D microscopy of such complex

samples most often leads to images with lower con-

trast and/or signal to noise ratio as compared to 2D

microscopy of single cellular layer, with overlapping

nuclei according to the achieved optical sectioning.

Hence, accurate and automated nuclei segmentation

in these conditions has turned out to be highly com-

plex. The bottleneck has therefore shifted from the

acquisition to the downstream analysis and quantifi-

cation steps.

Many computational solutions have been pro-

posed over the years that uses traditional image pro-

cessing methods to tackle this segmentation prob-

lem (Caicedo et al., 2019; Malpica et al., 1997; Li

et al., 2007), in 2D and 3D. However, they are usually

tailored for a specific application and do not general-

ize well, resulting in the necessity to adapt their pa-

rameters and ultimately preventing an automatic and

bias-free analysis.

Galindo, X., Barry, T., Guyot, P., Rivière, C., Galland, R. and Levet, F.

3D Nuclei Segmentation by Combining GAN Based Image Synthesis and Existing 3D Manual Annotations.

DOI: 10.5220/0012309200003657

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2024) - Volume 1, pages 265-272

ISBN: 978-989-758-688-0; ISSN: 2184-4305

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

265

In parallel, the last few years have witnessed the

rapid emergence of convolutional neural networks

(CNNs) as a method of choice for microscopy image

segmentation, achieving an accuracy unheard of for a

wide range of segmentation tasks. Among those, deep

learning-based nuclei segmentation has been particu-

larly active, with more than one hundred methods be-

ing developed since 2019 (Mougeot et al., 2022).

Supervised approaches, in which paired of im-

ages and masks are used for training, are the cur-

rent state of the art for nuclei segmentation, with

Stardist (Schmidt et al., 2018; Weigert et al., 2020)

and Cellpose (Stringer et al., 2021) having reached

a prominent position. Their adoption has been fa-

cilitated by their integration into several open-source

platforms, in which life scientists are able to directly

use several pre-trained models (Caicedo et al., 2019)

to segment their images. Unfortunately, these pre-

trained models are limited to the segmentation of nu-

clei in 2D, a fact directly related to the lack of avail-

able ground truth (GT) datasets in 3D. Determining

the organization of nuclei in 3D therefore requires life

scientists to annotate themselves their data, a daunting

and time-consuming task which turns out to be much

more challenging than in 2D due to the lower image

quality and more crowded cell populations.

Generating synthetic training datasets has there-

fore emerged as a potential solution to this problem

through the use of conditional Generative Adversar-

ial Networks (cGANs) (Goodfellow et al., 2014; Isola

et al., 2018; Zhu et al., 2017). cGANs learn a map-

ping from an observed source image x and a ran-

dom noise vector z, to a “transformed” target image

y, G : x, z → y. For nuclei segmentation, the use of

such generative networks aims to generate realistic

microscopy images (background, signal to noise ra-

tio, inhomogeneity, . . . ) of nuclei (target style) from

binary masks (source style) by training the cGAN

with paired or unpaired datasets (Baniukiewicz et al.,

2019; Fu et al., 2018; Wang et al., 2022). Never-

theless, GANs are notoriously known to be difficult

to train, with a large number of hyperparameters re-

quired to be tuned.

In this work, we present a workflow to segment

nuclei in 3D when no specific GT exists by leverag-

ing on cGANs to generate fluorescence synthetic vol-

umes of nuclei from 3D masks. While life scientists

start to be accustomed to using packaged deep learn-

ing methods for segmentation or classification, image

synthesis is still far from being accessible to regu-

lar users. We therefore made the choice to specif-

ically design our workflow to be accessible to non-

expert life-scientists, leveraging on already packaged

methods, both for the segmentation and the image

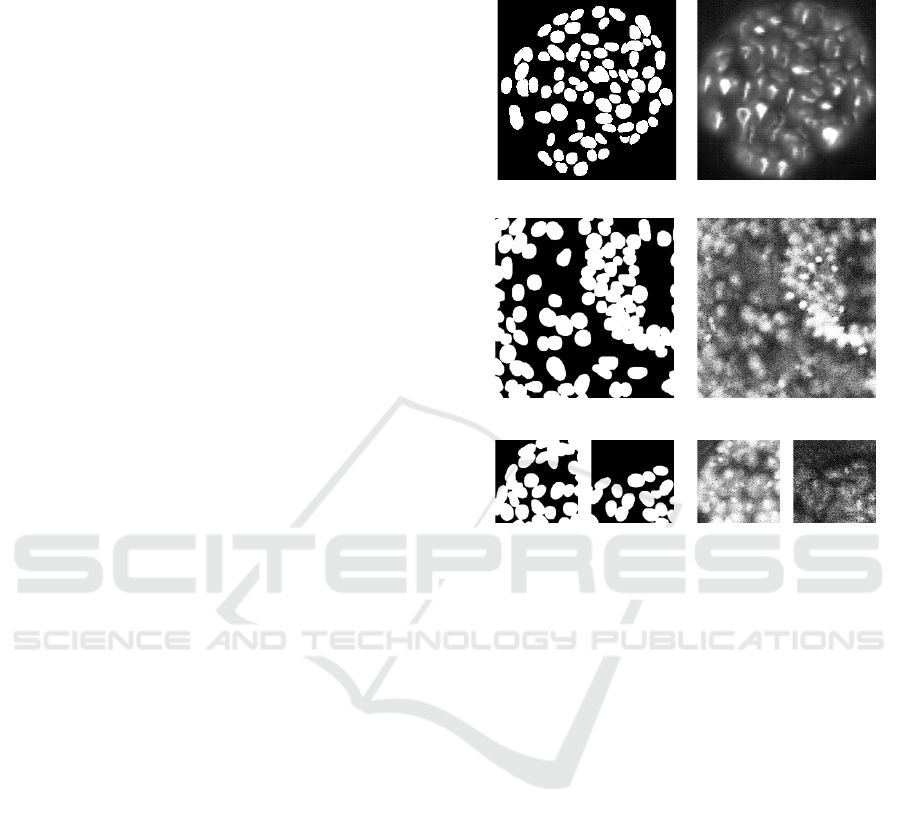

Binary mask

SpCycleGAN

s = 1024*1024

size = 512*512, crop = 128*128

size = 512*512, crop = 256*256

Figure 1: SpCycleGAN fails to generate realistic mi-

croscopy images even with different scaling and cropping.

generation, with the aim to avoid any tedious hand-

annotating steps. With that in mind, our workflow

first relies on the segmentation of the individualized

2D planes of our acquired volumes using the already

pre-trained, well established and now robust 2D seg-

mentation model Stardist 2D db2018 (Schmidt et al.,

2018). After modification of the instance masks by

applying a distance transform and a Gaussian filter,

we pair these transformed ‘GT’ masks with the flu-

orescent nuclei images to train a cGAN specifically

designed for biological images, that do not require

complex hyper-parameters tuning or specific crop-

ping data selection (Han et al., 2020). It allows to

generate 3D volumes of nuclei that resemble the flu-

orescent volumes we acquired, from existing 3D GT

masks that were typically created for a different cell

type or microscopy modality. Finally, those pairs of

GT mask volumes and synthetic fluorescent volumes

of nuclei are used to train a Stardist model in 3D ded-

icated to our 3D samples type and imaging modality.

Through this workflow, we demonstrate that us-

ing existing works to their full extent can (i) cir-

cumvent the requirement of tedious hand-annotating

steps for the segmentation of nuclei in 3D in com-

plex and crowded environment, and (ii) alleviate the

need to develop new deep learning architectures in an

BIOIMAGING 2024 - 11th International Conference on Bioimaging

266

already crowded field (Mougeot et al., 2022), while

also facilitating their usage by life scientists. We

applied this workflow on a couple of cell lines and

microscopy modalities and show that we managed

to have good qualitative segmentation results with-

out having to specifically hand annotate new volumes.

This approach greatly widens the scope of use of 3D

segmentation methods in the rapidly growing and di-

versifying field of 3D cell culture analysis, where

many imaging modalities are explored and cell types

with their own morphology characteristics used.

2 MOTIVATION

We recently acquired several oncospheres in 3D of

the colorectal cancer cell line HCT-116 expressing

the nucleus fluorescent label Fucci with a confocal

microscope, denoted as I

c

, that we aim to segment

while not having any GT mask for that cell culture

type and imaging modality. A couple of years ago

we trained a Stardist 3D model (Beghin et al., 2022),

Stardist

pre

3D

, by creating a GT dataset composed of 7

volumes that we manually annotated and that we will

denote as I

LS

GT

and M

LS

GT

for the images and masks, re-

spectively. These 7 volumes were composed of dif-

ferent 3D cell culture types (oncospheres and neu-

roectoderms), nuclear staining (DAPI and SOX) and

z-steps (500 nm and 1 µm). In addition, we acquired

them with the soSPIM imaging technology (Galland

et al., 2015), a single-objective light-sheet microscope

different from the confocal imaging modality used

to acquire the new oncospheres we aimed to seg-

ment. With this Stardist

pre

3D

model, we managed at

that time to automatically segment more than one

hundred oncospheres and neuroectoderms acquired

with the soSPIM imaging modality (Beghin et al.,

2022). However, applying Stardist

pre

3D

to I

c

gave poor

results, as it only managed to identify a portion of the

nuclei with an overall over-segmentation. This was

most probably due to the different cell morphology

and imaging modality of I

c

as compared to the GT

datasets used to train the model.

We therefore tried to generate synthetic volume

having the same style than I

c

with SpCycleGAN (Fu

et al., 2018), with the final objective of being able to

train a new Stardist 3D model more adapted to our

data. SpCycleGAN allows to generate synthetic vol-

umes from binary masks without GT by being built on

top of the unpaired image-to-image translation model

CycleGAN (Zhu et al., 2017). Being unpaired, the

network can use I

c

as target style without requir-

ing the corresponding nuclei segmentation as source.

Originally, the authors of (Fu et al., 2018; Wu et al.,

Figure 2: Nuclei segmentation with the 2D db2018 pre-

trained Stardist model results in sufficiently good results to

train a conditional GAN, scale bar = 20 µm.

2023) generated 3D nuclei masks by filling volumes

with binarized (deformed) ellipsoids even though it

could be limiting as it may not faithfully represent

the nuclei morphology. Having already a GT nuclei

masks, we therefore decided to train SpCycleGAN

with M

LS

GT

and I

c

to avoid this pitfall.

In our hand, SpCycleGAN failed to generate re-

alistic microscopy images (Fig. 1). Scrutinizing the

toy datasets available with the network, we tested dif-

ferent scaling and cropping of the data, as well as re-

moving any crops having void regions during train-

ing. Unfortunately, none of our tests were conclu-

sive. We hypothesize that this may be related to two

reasons. First, volumes composing I

c

exhibit a high

level of noise and an overall crowded and overlapping

nuclei population. SpCycleGAN may fail to transfer

all these features from binary masks. Second, SpCy-

cleGAN may require parameters fine-tuning to work

properly, but this goes against our objective of having

a more generic workflow that could be used by non-

experts.

3 METHOD

The proposed method is based upon the use of well es-

tablished and more robust deep-learning methods for

nuclei segmentation (Stardist (Schmidt et al., 2018;

Weigert et al., 2020)) and image synthesis (condi-

tional GANs in a slightly modified version (Han et al.,

2020)). Ours choices are motivated by the fact that

we wanted an as-simple-as-possible backbone. In this

manner, we intend to show that a simple approach fo-

cusing on data manipulation combined with existing

networks can perform qualitatively well. In addition,

we propose a workflow that could become generic

to enable the segmentation of a large variety of 3D

biological samples acquired upon different imaging

modalities.

3D Nuclei Segmentation by Combining GAN Based Image Synthesis and Existing 3D Manual Annotations

267

Figure 3: Manipulating instance masks to generate the FC-

GAN’s source style, scale bar = 5 µm. (a) After applying a

distance transform on the binarized instance masks, the FC-

GAN generates plausible images but with visible artefacts

related to the rough gradient of the transform (red arrows).

(b) Applying a Gaussian filter after the mask binarization

helps the FCGAN to learn a proper mapping between the

source and target style.

3.1 Fully-Conditional GAN

Contrary to natural images in which every local re-

gion contains relevant information, biological images

are composed of a mix of informative and void re-

gions. They are also inherently multiscale with both

the large-scale spatial organization of cells and their

individual morphology and texture being essential.

Since GANs have been originally developed for nat-

ural images, they tend to struggle to capture these in-

tertwined features and to fail to generate realistic void

regions.

We therefore based our synthesis method in the

fully-conditional GAN (FCGAN) architecture (Han

et al., 2020), an improvement of GAN focused on

modifications that allow to synthesize multi-scale bio-

logical images. This architecture consists of a genera-

tor built upon the cascaded refinement network (Chen

and Koltun, 2017) instead of the more traditional U-

Net (Ronneberger et al., 2015; Isola et al., 2018) ar-

chitecture as it is less prone to mode collapse. They

also added two modifications to the traditional GAN

architecture. First, they modified the input noise vec-

tor z to a noise ”image”, i.e. a 3D tensor with the

first two dimensions corresponding to the spatial po-

sitions. Instead of a noise vector that limits the size of

output images, modifying the noise image size allows

to output synthetized images of arbitrary sizes. Sec-

ond, they used a multi-scale discriminator. Having

a fixed size discriminator limits the quality and co-

herency of synthetized images to a micro (object) or

macro (global organization) level. Using a multi-scale

discriminator ensure the generator to produce images

both globally and locally accurate, a desired feature

Figure 4: Comparison of experimental confocal images

(scale bar = 20 µm) and synthetic images generated with

the FCGAN style transfer (scale bar = 10 µm).

when dealing with biological images that exhibit sev-

eral levels of organization. All these modifications

allow to directly train the FCGAN with the acquired

images, alleviating the need to fine-tune the parame-

ters and resize or crop the void regions.

3.2 Image Synthesis Based 3D Stardist

Training

In spite of its numerous advantages, FCGAN requires

however paired images for training, therefore com-

pelling us to provide corresponding mask and im-

age pairs. Fortunately, 2D pre-trained models of

well-established nuclei segmentation methods such as

Stardist (Schmidt et al., 2018) or Cellpose (Stringer

et al., 2021) have been trained with thousands of 2D

GT masks and achieve now a high degree of robust-

ness over a large variety of sample types. Conse-

quently, we can directly determine the nuclei spa-

tial distribution by segmenting each of the 2D frame

(1024 ∗ 1024) of the volumes of I

c

with the 2D pre-

trained db2018 Stardist

db

2D

model (Fig. 2) instead of

simulating them. These 2D segmentation will be de-

noted M

c

2D

.

For image generation through the FCGAN model,

binary and instance masks can lead to fuzzy results

because their gradient can be too rough during back-

propagation (Long et al., 2021) (Fig. 3(a)). We

therefore applied a distance transform to our instance

masks (Long et al., 2021) followed by an intensity

normalization and a Gaussian filter, which allows to

remove intensity stiff jumps and dependency to the

nuclei length, ultimately facilitating the style trans-

fer performed by the FCGAN model (Fig. 3(b)). Ap-

plying this process to each mask image of M

c

2D

, we

obtained a set of transformed mask images dt(M

c

2D

).

Consequently, pairing dt(M

c

2D

) (source style) with the

corresponding images from I

c

(target style), allowed

BIOIMAGING 2024 - 11th International Conference on Bioimaging

268

x

y

x

y

x

z

x

z

yy

(z = 50)

(y = 302)

(x = 512)

x

y

x

y

x

z

x

z

yy

(z = 50)

(y = 302)

(x = 512)

x

y

x

y

x

z

x

z

yy

(z = 50)

(y = 302)

(x = 512)

x

y

x

y

x

z

x

z

yy

(z = 50)

(y = 302)

(x = 512)

I

c

Stardist

3D

pre

Stardist

3D

c

Stardist

3D

bin

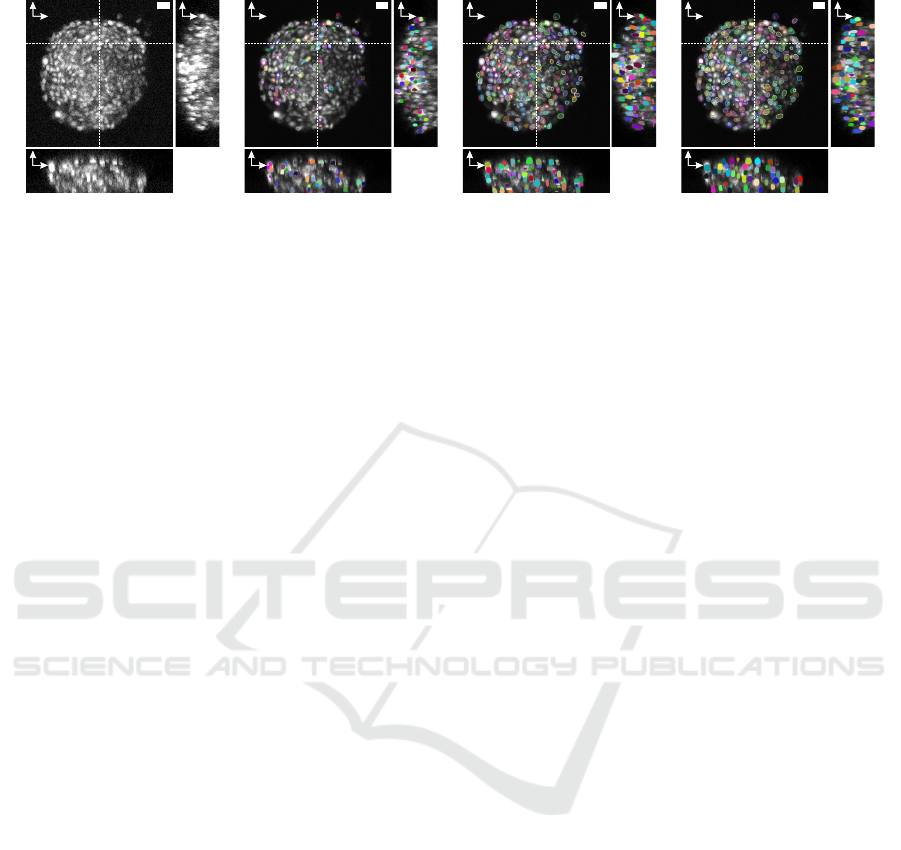

Figure 5: Comparison of segmentation obtained with Stardist

pre

3D

(Beghin et al., 2022), Stardist

bin

3D

and our Stardist

c

3D

model

trained with synthetic volumes.

us to train the FCGAN

c

model.

Finally, we generated I

c

synth

, synthetic confocal-

styled volumes (Fig 4) obtained by applying FCGAN

c

to the transformed masks dt(M

LS

GT

) of the 3D GT

dataset M

LS

GT

. This was carried out after ensuring that

the sizes of the masks composing dt(M

LS

GT

) were sim-

ilar to the sizes of the nuclei present in I

c

. We then

trained a 3D Stardist model called Stardist

c

3D

with the

paired I

c

synth

volumes and the corresponding 3D masks

M

LS

GT

.

4 RESULTS

To assess both qualitatively and quantitatively the seg-

mentation accuracy of our workflow, we compared it

to two baselines and used two different cell cultures

acquired with different microscopy modalities. In ad-

dition to I

c

, we thus acquired with a spinning-disc

confocal microscope 4 suspended S180-E-cad–GFP

cells (Fu et al., 2022) spheroids which nuclei were

stained with DAPI (denoted as I

SD

). Indeed, the vol-

umes composing I

c

are highly challenging because of

their crowded overlapping nuclei population and the

overall level of noise. This makes them unsuitable to

evaluate the segmentation accuracy of our workflow

other than qualitatively, since it is even difficult by

eye to determine the limits of each nucleus. On the

contrary, I

SD

were composed of a lower number of

cells over a shorter thickness (5-10 µm) ensuring to

have a sparser distribution of nuclei per volume (90

nuclei for the 4 volumes) easier to asses. On the side

of the segmentation, and in addition to Stardist

pre

3D

,

the second baseline was obtained by training a 3D

Stardist model Stardist

bin

3D

to learn how to reconstruct

3D instance masks from binarized masks, i.e. by pair-

ing M

LS

GT

with its binarization. 3D segmentation with

Stardist

bin

3D

were therefore done by applying the model

directly on the binarized 2D masks obtained with the

pre-trained Stardist

db

2D

model on I

c

and I

SD

.

4.1 Qualitative Assessment with I

c

Applying Stardist

pre

3D

(Beghin et al., 2022) gave poor

results with the volumes composing I

c

for two rea-

sons (Fig. 5). First, this model was trained with masks

smaller than the nuclei present in I

c

, resulting in over-

segmentation. Second, due to the different imaging

modality, I

c

exhibited more noise with its nuclei hav-

ing a different texture than the ones from I

LS

GT

. It re-

sulted with Stardist

pre

3D

missing a lot of nuclei when

applied to I

c

. On the other hand, Stardist

bin

3D

man-

aged to segment more nuclei than Stardist

pre

3D

since the

2D segmentation provided by Stardist

db

2D

managed to

identify more nuclei on a per frame basis. Neverthe-

less, results shows that Stardist

bin

3D

struggles to handle

isolated 2D masks, resulting in over-segmented small

nuclei (Fig. 6 and Fig. 5). On the contrary, the seg-

mentation provided by Stardist

c

3D

gave better qualita-

tive results than Stardist

pre

3D

and Stardist

bin

3D

(Fig. 5).

In particular, Stardist

c

3D

managed to segment nuclei

exhibiting a wide range of intensity values, from dim

to intense, while preventing over-segmentation at the

same time.

4.2 Quantitative Assessment with I

SD

Contrary to I

LS

GT

and I

c

that exhibited cells having a

bright and homogeneous texture, I

SD

is composed of

cells having a bright region surrounded by a dimer re-

gion which corresponds to the cell cytoplasm (Fig. 7-

left). Consequently, even if Stardist

pre

3D

managed to

identify more than 90% of the nuclei (Table 1), the

overall segmentation is imperfect. While the model

identified almost all the brightest nuclei (Fig. 7), ex-

plaining the high number of True Positives (TP), False

Positives (FP) results from the model separating the

bright and dim regions of a cell as 2 objects. False

Negatives (FN), on the other hand, mostly originates

from some nuclei exhibiting a different texture, for

which the model only identified a small part of the

membrane. Stardist

bin

3D

identified almost 99% of the

3D Nuclei Segmentation by Combining GAN Based Image Synthesis and Existing 3D Manual Annotations

269

Figure 6: Stardist

bin

3D

fails to properly handle isolated 2D

masks by over-segmenting them.

nuclei present in I

SD

(Table 1), thanks to Stardist

db

2D

performing well in segmenting the nuclei in this low

density nuclei distribution. Nevertheless, the seg-

mentation being done in a per-frame basis, it led to

the wrong identification of some cytoplasm as nu-

cleus, explaining the large number of FP. Finally,

we used our workflow to train a new Stardist 3D

model Stardist

SD

3D

with paired of synthetic volumes

I

SD

synth

(generated by transferring the style of I

SD

to the

transformed masks dt(M

LS

GT

)) and masks M

LS

GT

. Sim-

ilarly to Stardist

bin

3D

, Stardist

SD

3D

successfully detected

99% of the nuclei (Table 1). Nevertheless, directly ac-

counting the axial direction for segmentation, in com-

parison to merging 2D masks to 3D as in Stardist

bin

3D

,

ended up with a lower number of errors with only 3

FP resulting from over-segmented cells.

4.3 Discussion

Our workflow relies heavily on the FCGAN image

synthesis capabilities which are, in our context, much

more reliable than a CycleGAN thanks to the FC-

GAN’s multi-scale feature and paired training dataset.

We have shown that using the pre-trained Stardist

db

2D

model directly on the acquired microscopy images

and modifying the obtained instance masks was suffi-

cient to properly train a FCGAN model. On the con-

Table 1: Comparison of the segmentation accuracy between

Stardist

pre

3D

, Stardist

bin

3D

and Stardist

SD

3D

(Data 1, n = 33; Data

2, n = 23; Data 3, n = 11; Data 4, n = 23.

I

SD

Stardist

pre

3D

Stardist

bin

3D

Stardist

SD

3D

TP FP FN TP FP FN TP FP FN

Data 1 97% 15% 3% 100% 0% 0% 97% 9% 3%

Data 2 91% 13% 8% 100% 30% 0% 100% 0% 0%

Data 3 91% 27% 9% 100% 36% 0% 100% 0% 0%

Data 4 87% 9% 13% 96% 17% 4% 100% 0% 0%

Total 92% 14% 8% 99% 17% 1% 99% 3% 1%

Figure 7: Comparison of the segmentation provided by

Stardist

pre

3D

, Stardist

bin

3D

and Stardist

SD

3D

, scale bar = 5 µm.

Red arrows pinpoint regions with segmentation errors.

trary, the segmentation provided by Stardist

db

2D

was

not accurate enough to directly train a Stardist model

in 3D such as Stardist

bin

3D

. This discrepancy is related

to the fact that Stardist

db

2D

does not account for the ax-

ial direction, with several nuclei being therefore only

segmented over one or two frames. It leads to a nu-

clei over-segmentation by Stardist

bin

3D

, and therefore

a possibly larger number of FP and FN. In our work-

flow, Stardist

db

2D

is only used to learn the style transfer.

Creation of the synthetic volumes is done by apply-

ing the new style to dt(M

LS

GT

), a dataset composed of

hand annotated masks in which all the nucleus are ac-

curately identified. Since our models Stardist

c

3D

and

Stardist

SD

3D

are trained with these synthetic volumes,

they are mostly insensitive to the segmentation ac-

curacy of Stardist

db

2D

and are tailored to segment nu-

clei having the same texture as the acquisitions they

have been trained on, therefore outperforming both

Stardist

bin

3D

and Stardist

pre

3D

.

Importantly, our synthetic volumes I

c

synth

and I

SD

synth

are composed of stacked generated 2D frames that

does not guarantee intensity coherency in the axial di-

rection (Fig. 8). They also lack smooth transitions be-

tween some appearing and disappearing nuclei as one

would expect from a real acquisition. Nevertheless,

our results confirm precedent findings (Baniukiewicz

et al., 2019; Wu et al., 2023) for which training seg-

mentation networks with synthetic volumes allows to

achieve good performance.

As already explained by the authors of

Stardist (Schmidt et al., 2018; Weigert et al.,

2020) and Cellpose (Stringer et al., 2021), we want

to reinforce the fact that it is critical to train these

models with data that have objects of similar sizes

and features than the images we want to segment.

Training Stardist with synthetic volumes as proposed

in our workflow facilitates this process. It becomes

indeed sufficient to resize M

LS

GT

to generate images

with nuclei exhibiting the same sizes and textures

than the ones composing the acquired volumes we

BIOIMAGING 2024 - 11th International Conference on Bioimaging

270

Frame n

Frame n + 1

Instance masks

Synthetic images

Figure 8: As synthetic volumes are composed of stack gen-

erated frames, a lack of smooth transition between appear-

ing and disappearing nuclei is visible (red arrows), scale bar

= 10 µm.

want to segment.

Finally, the border detection of the cells compos-

ing I

SD

by Stardist

SD

3D

was not perfect and can be ex-

plained by two reasons (Fig. 7). First, the 3D seg-

mentation provided by Stardist is highly convex while

I

SD

exhibits cells with irregular and possibly concave

borders. Second, I

SD

is composed of volumes with

saturated intensity, resulting in a compressed dynam-

ics. This combined with the small number of volumes

prevented FCGAN to learn a perfect style mapping.

5 CONCLUSION

In this work, our objective was not to develop a

method achieving state of the art accuracy for seg-

menting nuclei in 3D. In the past years (Mougeot

et al., 2022), nuclei segmentation has seen the de-

velopment of hundreds of methods competing for

the leading position in term of segmentation accu-

racy. Unfortunately, most of these techniques are out

of reach for life science labs and imaging facilities

because they can be limited to one operating sys-

tem or do not provide source code, tutorial or toy

datasets (Mougeot et al., 2022). We therefore wanted

to show that it is also possible to achieve good qualita-

tive segmentation by using already established meth-

ods such as Stardist (Schmidt et al., 2018; Weigert

et al., 2020) that are widely used in labs and facilities.

Image synthesis with GANs is still, however, far from

being easily accessible to life scientists. We there-

fore focused on a GAN architecture designed for the

multi-scale organization of biological images. The

FCGAN of Han (Han et al., 2020) was therefore an

ideal choice as it allowed us to directly use our ac-

Figure 9: From the same mask image, two synthetic images

were generated with a different style.

quired microscopy images without cropping or fine-

tuning parameters of the model.

All things considered, our workflow resulted in

qualitatively good segmentation of microscopy vol-

umes for which no GT existed. We were able to

generate synthetic volumes having the style of dif-

ferent cell types and microscopy modalities from the

same set of 3D GT masks (Fig. 9). Pairing them to-

gether, we trained several Stardist models tailored for

each acquisition, managing to segment datasets with-

out spending weeks in annotating volumes. We also

quantitatively demonstrated that our workflow per-

formed better than a pre-trained Stardist 3D model on

a limited set of 4 volumes. In the future, we plan to

push further this quantification as wells as further test

its generalization capability by acquiring new datasets

having a higher complexity than the I

SD

dataset. We

also plan to test Omnipose (Cutler et al., 2022) in our

workflow, with the aim to better identify irregular cell

borders.

Another point to consider is that the image syn-

thesis provided by FCGAN will always be heavily

dependent on the quality of the nuclei 2D segmen-

tation. In our case, the pre-trained Stardist

db

2D

model

gave satisfactory segmentation for feeding the FC-

GAN model. Otherwise, it will be necessary to gener-

ate new 2D manual annotations, a task still a lot easier

than 3D annotations that can be accelerated by tech-

niques such as SAM (Kirillov et al., 2023).

Finally, we think that this workflow could be

improved by further manipulating the existing GT

masks. GANs require similar distributions of the ob-

jects morphology and spatial organization between

the source and target styles to generate realistic im-

ages (Liu et al., 2020). Similarly, Stardist would also

benefit from training with pairs having the same dis-

tributions that the volumes to segment. This requires

the ability to quantify the differences between these

distributions, as it would allow to modify the GT

masks to make them similar to the objects one would

want to segment.

3D Nuclei Segmentation by Combining GAN Based Image Synthesis and Existing 3D Manual Annotations

271

REFERENCES

Baniukiewicz, P., Lutton, E. J., Collier, S., and Bretschnei-

der, T. (2019). Generative adversarial networks for

augmenting training data of microscopic cell images.

Frontiers in Computer Science, 1.

Beghin, A., Grenci, G., Sahni, G., Guo, S., Rajendiran, H.,

Delaire, T., Mohamad Raffi, S. B., Blanc, D., de Mets,

R., Ong, H. T., Galindo, X., Monet, A., Acharya, V.,

Racine, V., Levet, F., Galland, R., Sibarita, J.-B., and

Viasnoff, V. (2022). Automated high-speed 3d imag-

ing of organoid cultures with multi-scale phenotypic

quantification. Nature Methods, 19(7):881–892.

Caicedo, J. C., Goodman, A., Karhohs, K. W., Cimini,

B. A., Ackerman, J., Haghighi, M., Heng, C., Becker,

T., Doan, M., McQuin, C., Rohban, M., Singh, S., and

Carpenter, A. E. (2019). Nucleus segmentation across

imaging experiments: the 2018 data science bowl. Na-

ture Methods, 16(12):1247–1253.

Chen, Q. and Koltun, V. (2017). Photographic image syn-

thesis with cascaded refinement networks.

Cutler, K. J., Stringer, C., Lo, T. W., Rappez, L., Strous-

trup, N., Brook Peterson, S., Wiggins, P. A., and

Mougous, J. D. (2022). Omnipose: a high-precision

morphology-independent solution for bacterial cell

segmentation. Nature Methods, 19(11):1438–1448.

Fu, C., Arora, A., Engl, W., Sheetz, M., and Viasnoff, V.

(2022). Cooperative regulation of adherens junction

expansion through epidermal growth factor receptor

activation. Journal of Cell Science, 135(4):jcs258929.

Fu, C., Lee, S., Ho, D. J., Han, S., Salama, P., Dunn, K. W.,

and Delp, E. J. (2018). Three dimensional fluores-

cence microscopy image synthesis and segmentation.

In 2018 IEEE/CVF Conference on Computer Vision

and Pattern Recognition Workshops (CVPRW), pages

2302–23028.

Galland, R., Grenci, G., Aravind, A., Viasnoff, V., Studer,

V., and Sibarita, J.-B. (2015). 3d high- and super-

resolution imaging using single-objective spim. Na-

ture Methods, 12(7):641–644.

Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B.,

Warde-Farley, D., Ozair, S., Courville, A., and Ben-

gio, Y. (2014). Generative adversarial networks.

Han, L., Murphy, R. F., and Ramanan, D. (2020). Learning

generative models of tissue organization with super-

vised gans.

Isola, P., Zhu, J.-Y., Zhou, T., and Efros, A. A. (2018).

Image-to-image translation with conditional adversar-

ial networks.

Jensen, C. and Teng, Y. (2020). Is it time to start transition-

ing from 2d to 3d cell culture? Frontiers in Molecular

Biosciences, 7.

Kapalczynska, M., Kolenda, T., Przybyla, W., Za-

jaczkowska, M., Teresiak, A., Filas, V., Ibbs, M., Bliz-

niak, R., Luczewski, L., and Lamperska, K. (2018). 2d

and 3d cell cultures – a comparison of different types

of cancer cell cultures. Archives of Medical Science,

14(4):910–919.

Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C.,

Gustafson, L., Xiao, T., Whitehead, S., Berg, A. C.,

Lo, W.-Y., Doll

´

ar, P., and Girshick, R. (2023). Seg-

ment anything.

Li, G., Liu, T., Tarokh, A., Nie, J., Guo, L., Mara, A., Hol-

ley, S., and Wong, S. T. (2007). 3d cell nuclei seg-

mentation based on gradient flow tracking. BMC Cell

Biology, 8(1):40.

Liu, Q., Gaeta, I. M., Millis, B. A., Tyska, M. J., and

Huo, Y. (2020). Gan based unsupervised segmenta-

tion: Should we match the exact number of objects.

Long, J., Yan, Z., Peng, L., and Li, T. (2021). The geometric

attention-aware network for lane detection in complex

road scenes. PLOS ONE, 16(7):1–15.

Malpica, N., de Sol

´

orzano, C. O., Vaquero, J. J., Santos,

A., Vallcorba, I., Garc

´

ıa-Sagredo, J. M., and del Pozo,

F. (1997). Applying watershed algorithms to the seg-

mentation of clustered nuclei. Cytometry, 28(4):289–

297.

Mougeot, G., Dubos, T., Chausse, F., P

´

ery, E., Grau-

mann, K., Tatout, C., Evans, D. E., and Desset, S.

(2022). Deep learning – promises for 3D nuclear

imaging: a guide for biologists. Journal of Cell Sci-

ence, 135(7):jcs258986.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-

net: Convolutional networks for biomedical image

segmentation. In Navab, N., Hornegger, J., Wells,

W. M., and Frangi, A. F., editors, Medical Image Com-

puting and Computer-Assisted Intervention – MICCAI

2015, pages 234–241, Cham. Springer International

Publishing.

Schmidt, U., Weigert, M., Broaddus, C., and Myers, G.

(2018). Cell detection with star-convex polygons.

In Frangi, A. F., Schnabel, J. A., Davatzikos, C.,

Alberola-L

´

opez, C., and Fichtinger, G., editors, Med-

ical Image Computing and Computer Assisted In-

tervention – MICCAI 2018, pages 265–273, Cham.

Springer International Publishing.

Stringer, C., Wang, T., Michaelos, M., and Pachitariu, M.

(2021). Cellpose: a generalist algorithm for cellular

segmentation. Nature Methods, 18(1):100–106.

Wang, J., Tabassum, N., Toma, T. T., Wang, Y., Gahlmann,

A., and Acton, S. T. (2022). 3D GAN image synthesis

and dataset quality assessment for bacterial biofilm.

Bioinformatics, 38(19):4598–4604.

Weigert, M., Schmidt, U., Haase, R., Sugawara, K., and

Myers, G. (2020). Star-convex polyhedra for 3d object

detection and segmentation in microscopy. In 2020

IEEE Winter Conference on Applications of Computer

Vision (WACV), pages 3655–3662, Los Alamitos, CA,

USA. IEEE Computer Society.

Wu, L., Chen, A., Salama, P., Winfree, S., Dunn, K. W.,

and Delp, E. J. (2023). Nisnet3d: three-dimensional

nuclear synthesis and instance segmentation for flu-

orescence microscopy images. Scientific Reports,

13(1):9533.

Zhu, J.-Y., Park, T., Isola, P., and Efros, A. A. (2017).

Unpaired image-to-image translation using cycle-

consistent adversarial networks. In Computer Vision

(ICCV), 2017 IEEE International Conference on.

BIOIMAGING 2024 - 11th International Conference on Bioimaging

272