Estimation of Package-Boundary Confidence for Object Recognition in Rainbow-SKU Depalletizing Automation

Kento Sekiya (1, a), Taiki Yano (1, b), Nobutaka Kimura (2, c) and Kiyoto Ito (1, d)

1 Research & Development Group, Hitachi, Ltd., Kokubunji, Tokyo, Japan

2 Research & Development Division, Hitachi America, Ltd., Holland, Michigan, U.S.A.

Keywords: Object Recognition, Robot, Depalletizing, Boundary Confidence.

Abstract:

We developed a reliable object recognition method for a rainbow-SKU depalletizing robot. Rainbow SKUs include various types of objects, such as boxes, bags, and bottles. Automating a depalletizing robot requires estimating the area of each object, but the boundaries between adjacent objects are difficult to detect. To solve this problem, we focus on differences in the shape of the boundaries and propose package-boundary confidence, which assesses whether a recognized boundary correctly corresponds to that of an object unit. The method classifies recognition results into four categories according to the objects' shape and calculates the package-boundary confidence for each category. In our experimental evaluation, the proposed method combined with slight displacement as an automatic recovery action achieved a recognition success rate of 99.0%, which is higher than that of a conventional object recognition method. Furthermore, we verified that the proposed method is applicable to a real-world depalletizing robot by combining package-boundary confidence with automatic recovery.

1 INTRODUCTION

Rainbow-SKU depalletizing is the process of picking up various types of objects from a loaded pallet. It is a strenuous manual task, so automating it with robots is highly desirable. Many researchers have proposed automated depalletizing systems that combine robot motion planning with image recognition (Nakamoto et al., 2016; Eto et al., 2019; Doliotis et al., 2016; Aleotti et al., 2021; Caccavale et al., 2020; Katsoulas and Kosmopoulos, 2001; Kimura et al., 2016).

To be applicable in warehouses, automated robots need to complete a series of picking tasks accurately and quickly. If a robot picks the wrong object, workers must perform a manual recovery, e.g., by remote control, which increases downtime. Incorrect picking has several causes, such as insufficient suction force of the robot hand and incorrect estimation of an object's position or pose. To address the hardware problem, robot hands that can grasp objects of various shapes have been developed (Tanaka et al., 2020; Fontanelli et al., 2020). On the software side, a function is needed to estimate object boundaries from images and point clouds. However, few methods have successfully estimated the areas of all types of objects in rainbow-SKU depalletizing. The reason is that object boundaries differ depending on the shape and material of the object, e.g., cardboard, bags, rolls of toilet paper, and shrink-wrapped packages containing multiple bottles or cans in transparent wrapping. Separating multiple objects placed adjacent to one another is also difficult because the boundary between them is missing. Without the boundary, multiple objects are recognized as one object, and the robot incorrectly picks multiple objects at the same time.

a https://orcid.org/0009-0007-1667-3081

b https://orcid.org/0000-0001-9433-0569

c https://orcid.org/0000-0001-5248-5108

d https://orcid.org/0000-0002-2243-5756

To estimate object boundaries with high accuracy,

we introduce package-boundary confidence, which

assesses whether the recognized boundary correctly

correspo nds to that of an object unit. When the con-

fidence is high, the robots pick the object, and wh en

the confidence is low, the robots do not pick the object

and switch to automatic recovery mode. In this study,

we use slight displacement as an automatic recovery,

which is to pick the edge of objec t and move it a short

distance. By doing this, the gap between multiple ob-

Sekiya, K., Yano, T., Kimura, N. and Ito, K.

Estimation of Package-Boundary Confidence for Object Recognition in Rainbow-SKU Depalletizing Automation.

DOI: 10.5220/0012307300003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 3: VISAPP, pages

309-316

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

309

Object with holes

Pack of bo les in

opaque wrapping

Pack of bo les in

transparent wrapping

General objects

High con dence

Low con dence

Surface

es ma on

Classi

ca on

Con

dence

calcula

on

Line detec on

Height gap detec

on

Con

dence result

Classi

ca on result

Surface result

Camera image

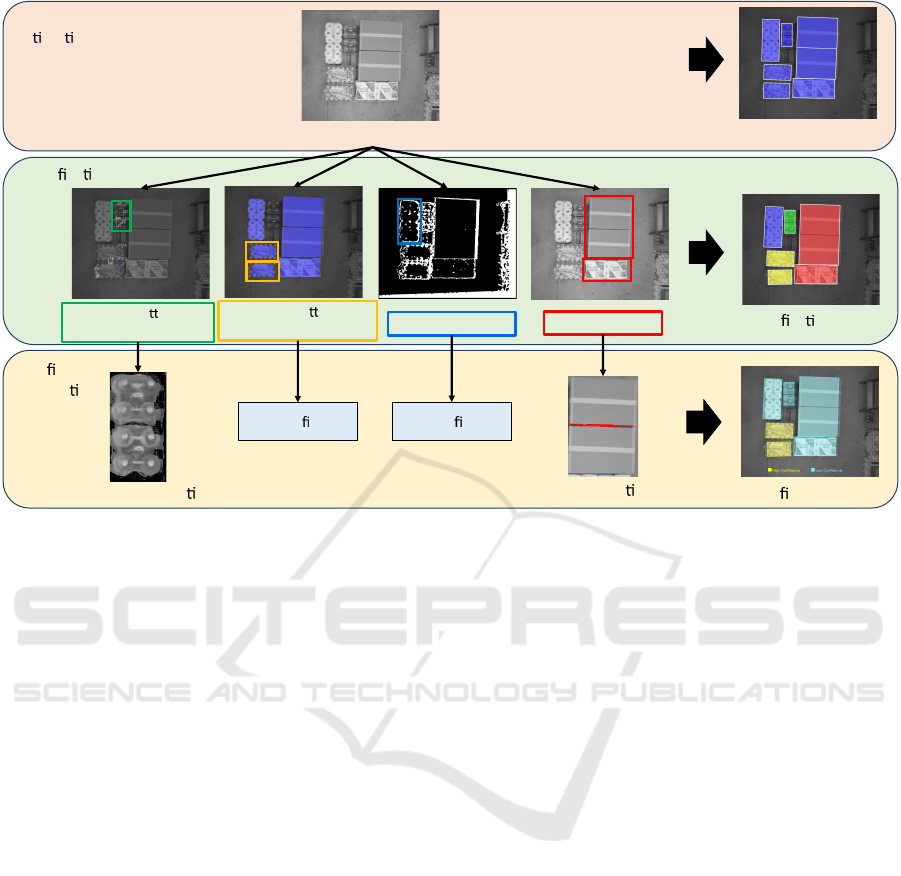

Figure 1: Overview of the proposed method, which consists of three steps: surface estimation, classification, and confidence

calculation. By classifying the results of surface esti mation into four categories depending on the objects’ shape and calculat-

ing confidence for each category, the proposed method can achieve reliable recognition for rainbow SKUs.

jects will be correctly recognized as distinct bound -

aries. Although slight displacement is quicker th an

manual rec overy, doing it too frequently causes the

throughput to deteriorate. Therefore, a recognition

method with both high accuracy and high throughput

is necessary.

In this paper, we propose a method for calculating package-boundary confidence. Robots need to recognize the boundaries of various types of objects. We therefore classify each object into one of four categories and change how the confidence is calculated depending on the object's boundaries. We conducted experiments simulating a rainbow-SKU depalletizing process with a 3D vision sensor. The proposed method achieved a success rate of 99.0%, which is higher than that of a conventional object recognition method. The frequency of slight displacement was 37.5%. These results show that the proposed method is applicable to a wide variety of objects in rainbow-SKU depalletizing.

2 RELATED WORK

In this section, we discuss conventional object recognition methods used for depalletizing.

2.1 Deep-Learning-Based Segmentation

Deep-learning-based segmentation has been used for depalletizing recognition (Girshick, 2015; Liu et al., 2016; Redmon et al., 2016; He et al., 2017). These methods estimate object areas and classify them into classes simultaneously. In recent years, deep-learning methods have been applied to object recognition for depalletizing (Buongiorno et al., 2022). However, no large dataset of rainbow-SKU objects exists, so deep-learning methods have been applied only to a limited variety of objects, such as cardboard boxes.

2.2 Edge-Based Boundary Detection

Conventionally, edge-based boundary detection has been widely used to estimate the regions of boxed objects (Katsoulas and Kosmopoulos, 2001; Naumann et al., 2020; Stein et al., 2014; Li et al., 2020). Edges are estimated from the luminance gradient or from the degree of change in the normal direction, and the boundary of each object is recognized from these edges. However, wrapping film often cannot be measured, so its edges are difficult to detect. There is therefore a risk of detecting each product inside a shrink-wrapped package as a separate object instead of detecting the entire package as one object. The proposed method extends this type of approach. It does not require a large dataset to train a model, and it can be applied to cardboard packages as well as shrink-wrapped objects in rainbow-SKU depalletizing.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

3 METHODS

3.1 Concept of Object Recognition

This section describes the concept of the proposed method for recognizing various objects. In rainbow-SKU depalletizing, the shape of object boundaries differs from object to object: a boundary may be a gap, a straight line, or part of an arc. Shrink-wrapped objects also have small gaps between the items they contain, and these gaps must be distinguished from the correct boundary. It is therefore difficult to evaluate recognized boundaries with a single, consistent criterion.

In the proposed method, we classify objects into four categories and calculate package-boundary confidence differently for each category. We chose the number of categories by taking into account the variety of rainbow SKUs in the warehouse. To recognize various objects, including packs of wrapped bottles, we adopted the object recognition architecture proposed in (Yano et al., 2023) as the base architecture in this research.

3.2 Classification

This section describes how objects are classified into the four categories. The previous method (Yano et al., 2023) estimates object surfaces from gray-scale images and point clouds. In the present study, we use information based on these object surfaces to classify each object into one of the following four categories: pack of bottles in transparent plastic wrapping, pack of bottles in opaque wrapping, object with holes, and general object.

A pack of bottles in transparent wrapping is defined as multiple bottles shrink-wrapped in transparent plastic. The bottle caps are recognized as small surfaces. These surfaces are too small to be recognized as a single object unit, so multiple surfaces are connected and recognized as one object unit. Objects recognized by connecting multiple surfaces are classified into this category.

Multiple bo ttles shrink wrapped in opaque plastic

are defined as a pack of bottles in opaque wrapping.

The opaque wra pping is recognized as a large surface

in the middle areas of the object, and the bottles are

recogn ized as small surfaces in the surrounding areas.

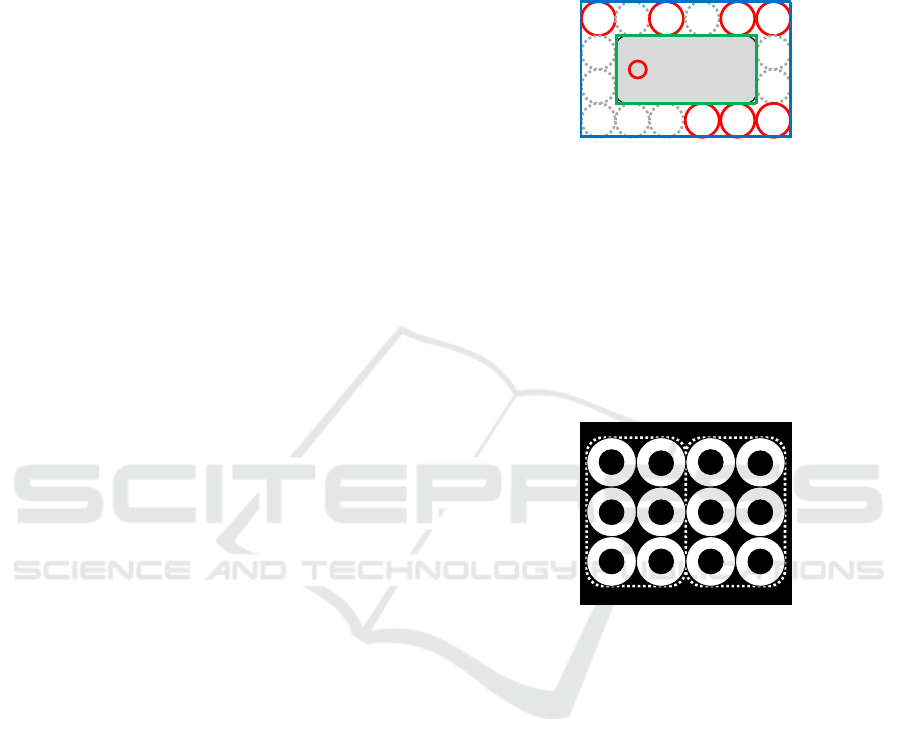

As shown in Figure 2, to detect bottle c aps, we detect

circles with Hough transformation for gray- scale im-

ages and calculate the ratio of circles in surrounding

areas to that in the middle areas (Yuen et al., 1990). If

the ratio of circles is high, the object is classified into

this category.

Figure 2: Circle detection for pack of bottles i n opaque

wrapping. Middle areas are internal green line and sur-

rounding areas are between green and blue lines.
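The circle-ratio test above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Circle centres are assumed to come from an upstream Hough circle detector (for example OpenCV's `cv2.HoughCircles`), and the recognized area is assumed to be an axis-aligned rectangle. The middle area is that rectangle shrunk by a hypothetical `band` margin. The "ratio" is expressed as the share of circles that fall in the surrounding band, which avoids dividing by zero when no circle lies in the middle. `Rect`, `band`, `min_ratio`, and `min_circles` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float]


@dataclass
class Rect:
    """Axis-aligned recognition area in image pixels (hypothetical representation)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, p: Point) -> bool:
        x, y = p
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1

    def shrink(self, margin: float) -> "Rect":
        return Rect(self.x0 + margin, self.y0 + margin, self.x1 - margin, self.y1 - margin)


def surrounding_circle_share(area: Rect, circle_centers: Iterable[Point], band: float) -> float:
    """Share of circles (bottle-cap candidates) that lie in the surrounding band.

    The middle area is `area` shrunk by `band` pixels (inside the green line in
    Figure 2); the surrounding area is the band between the two rectangles.
    """
    middle = area.shrink(band)
    n_middle = n_surround = 0
    for c in circle_centers:
        if not area.contains(c):
            continue  # circle belongs to a neighbouring object
        if middle.contains(c):
            n_middle += 1
        else:
            n_surround += 1
    total = n_middle + n_surround
    return n_surround / total if total else 0.0


def is_opaque_bottle_pack(area: Rect, circle_centers: Iterable[Point],
                          band: float = 40.0, min_ratio: float = 0.7,
                          min_circles: int = 4) -> bool:
    """Hypothetical decision rule: enough circles, mostly near the border."""
    inside = [c for c in circle_centers if area.contains(c)]
    if len(inside) < min_circles:
        return False
    return surrounding_circle_share(area, inside, band) >= min_ratio
```

For example, with a 400 × 300 area, five caps along the border and one cap in the centre give a share of 5/6, so the area is classified as an opaque bottle pack.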

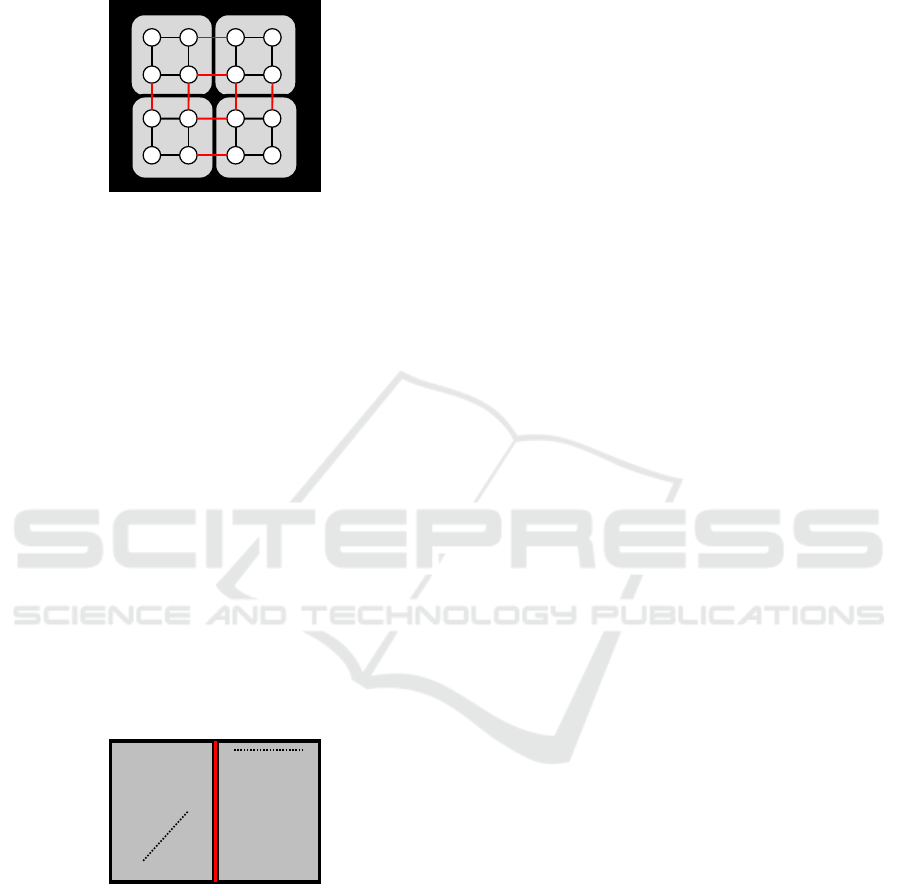

An object with holes is defined as an object that contains depth gaps inside its area. As shown in Figure 3, the depth data of such objects contain several holes. These holes correspond to tube holes (e.g., the cores of paper rolls) or to empty spaces between touching tubes. We generate depth images from point clouds and calculate the depth of the gap areas. If there are many gaps, the object is classified into this category.

Figure 3: Depth image of an object with holes. Black areas indicate tube holes or empty areas.
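A minimal sketch of this gap test follows. It makes several assumptions that are ours, not the paper's:

- the point cloud is an N×3 NumPy array with z pointing up;
- the depth image is a top-view height map on a regular grid, cropped to the recognized area;
- a gap cell is either empty or more than a hypothetical `gap_depth` below the median surface height;
- gaps are counted as 4-connected regions with a small flood fill, because the paper does not specify how gaps are counted.

`min_cells` and `min_regions` are also hypothetical thresholds.

```python
from collections import deque

import numpy as np


def height_map(points: np.ndarray, cell: float, x0: float, y0: float,
               shape: tuple) -> np.ndarray:
    """Rasterize an (N, 3) point cloud into a top-view height map.

    Each cell keeps the highest z value of the points falling into it;
    cells without points stay NaN (no measurement).
    """
    h = np.full(shape, np.nan)
    cols = ((points[:, 0] - x0) // cell).astype(int)
    rows = ((points[:, 1] - y0) // cell).astype(int)
    ok = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
    for r, c, z in zip(rows[ok], cols[ok], points[ok, 2]):
        if np.isnan(h[r, c]) or z > h[r, c]:
            h[r, c] = z
    return h


def count_gap_regions(h: np.ndarray, gap_depth: float, min_cells: int = 2) -> int:
    """Count 4-connected regions that are unmeasured or deeper than gap_depth."""
    surface = np.nanmedian(h)
    gap = np.isnan(h) | (h < surface - gap_depth)
    seen = np.zeros_like(gap, dtype=bool)
    regions = 0
    for r0, c0 in zip(*np.nonzero(gap)):
        if seen[r0, c0]:
            continue
        size, queue = 0, deque([(r0, c0)])
        seen[r0, c0] = True
        while queue:  # breadth-first flood fill of one gap region
            r, c = queue.popleft()
            size += 1
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if (0 <= rr < h.shape[0] and 0 <= cc < h.shape[1]
                        and gap[rr, cc] and not seen[rr, cc]):
                    seen[rr, cc] = True
                    queue.append((rr, cc))
        if size >= min_cells:  # ignore single-cell noise
            regions += 1
    return regions


def has_holes(h: np.ndarray, gap_depth: float = 30.0, min_regions: int = 3) -> bool:
    """Hypothetical rule: 'many gaps' means at least min_regions gap regions."""
    return count_gap_regions(h, gap_depth) >= min_regions
```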

Finally, objects which are not classified into the

previous three categories are defined as a general ob-

ject.
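The classification in this section can be summarized as a decision cascade. The sketch below is illustrative only. The three boolean inputs stand for the outcomes of the per-category tests described above (surface connection, circle ratio, and depth gaps). The order in which they are checked is an assumption; the text only states that objects matching none of the three tests are general objects.

```python
from enum import Enum


class Category(Enum):
    TRANSPARENT_PACK = "pack of bottles in transparent wrapping"
    OPAQUE_PACK = "pack of bottles in opaque wrapping"
    WITH_HOLES = "object with holes"
    GENERAL = "general object"


def classify(built_from_connected_surfaces: bool,
             high_surrounding_circle_ratio: bool,
             many_depth_gaps: bool) -> Category:
    """Assign one of the four categories; the checking order is an assumption."""
    if built_from_connected_surfaces:
        # Several small surfaces (e.g. bottle caps) were merged into one unit.
        return Category.TRANSPARENT_PACK
    if high_surrounding_circle_ratio:
        # Large middle surface with cap-like circles around it.
        return Category.OPAQUE_PACK
    if many_depth_gaps:
        # Tube holes or empty space between touching tubes.
        return Category.WITH_HOLES
    return Category.GENERAL
```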

3.3 Confidence Calculation

This section describes how package-boundary confidence is calculated for various objects. The calculation depends on the category into which the object has been classified.

3.3.1 Pack of Bottles in Transparent Wrapping

The boundaries of this type of object are the gaps between several connected areas. In Figure 4, four objects (i.e., packs of bottles) are placed adjacent to each other. The bottles are connected by graphs, but several object units are over-connected. In this situation, we calculate the depth of the gap areas along the graph edges. If there are large, deep gaps, the recognized area spans multiple object units and should be divided, so we set the confidence to low. If the graph has no large, deep gaps, we set the confidence to high.

Figure 4: Four objects (packs of bottles in transparent wrapping) placed adjacent to each other. Red graph edges indicate edges that cross large, deep gaps.
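A minimal sketch of this check follows. It assumes that the connected surfaces are graph nodes given as (row, col) pixels on a top-view height map, where larger values are higher. Each edge is sampled along the straight segment between its two nodes. A "large, deep gap" is taken to mean that the height along an edge drops more than a hypothetical `gap_depth` below the lower endpoint. The paper does not give a numeric confidence scale, so the 0/1 output only stands in for low/high.

```python
import numpy as np


def edge_gap_depth(h: np.ndarray, p: tuple, q: tuple, samples: int = 50) -> float:
    """Largest height drop along the segment p -> q ((row, col) pixel coordinates)."""
    rows = np.linspace(p[0], q[0], samples).round().astype(int)
    cols = np.linspace(p[1], q[1], samples).round().astype(int)
    profile = h[rows, cols]
    reference = min(h[p], h[q])  # height of the lower endpoint surface
    return float(reference - np.nanmin(profile))


def transparent_pack_confidence(h: np.ndarray, nodes: list, edges: list,
                                gap_depth: float = 40.0) -> float:
    """Return 0.0 (low) if any graph edge crosses a large deep gap, else 1.0 (high)."""
    for i, j in edges:
        if edge_gap_depth(h, nodes[i], nodes[j]) > gap_depth:
            return 0.0  # over-connected: the area spans several packs
    return 1.0
```

Sampling along the edge segment keeps the check local to the graph. A deep gap elsewhere in the recognized area does not lower the confidence unless an edge crosses it.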

3.3.2 Pack of Bottles in Opaque Wrapping

The boundaries of this type of object are the bottle caps in the surrounding area. The opaque wrapping consists of large surfaces, so the recognized areas of these surfaces can be detected reliably. When multiple objects are placed adjacent to each other, their large surfaces do not overlap because bottle caps lie between them. Therefore, the confidence is always set to high for this type of object.

3.3.3 Object with Holes

The boundaries of this type of object are deep gaps. However, correct boundaries and gaps inside an object both appear as similar deep gaps. It is therefore difficult to determine whether a recognized area is truly a single object unit or should be separated into multiple object units. For this reason, the confidence is always set to low for this type of object.
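The four per-category rules can be gathered into a single dispatch, with the two fixed rules of Sections 3.3.2 and 3.3.3 written as constants. In this sketch, the category keys and the numeric encoding of "high" and "low" (1.0 and 0.0) are hypothetical. The two data-dependent rules (Sections 3.3.1 and 3.3.4) are passed in as callables.

```python
from typing import Callable, Dict

HIGH, LOW = 1.0, 0.0  # assumed numeric encoding of "high" and "low" confidence


def confidence_for(category: str,
                   transparent_rule: Callable[[], float],
                   general_rule: Callable[[], float]) -> float:
    """Dispatch to the confidence rule of the object's category."""
    rules: Dict[str, Callable[[], float]] = {
        "transparent": transparent_rule,  # Section 3.3.1: depth of gaps on graph edges
        "opaque": lambda: HIGH,           # Section 3.3.2: always high
        "holes": lambda: LOW,             # Section 3.3.3: always low
        "general": general_rule,          # Section 3.3.4: count of dividing lines
    }
    return rules[category]()
```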

Figure 5: Line detection for a general object. Dotted lines are removed lines, and the red line is used for confidence calculation.

3.3.4 General Object

The boundaries of this type of object are straight lines. Objects of this type do not include bottles or paper rolls, and each unit is rectangular in depalletizing. When such objects are placed adjacent to each other, their boundaries can be detected as a pattern of straight lines even if there are no gaps between them. As shown in Figure 5, we detect lines in a gray-scale image and a depth image with the Hough transform (Duda and Hart, 1972). We then remove two kinds of detected lines: lines that are not perpendicular to the sides of the recognition area, and lines that lie near its sides. Neither kind is a boundary that divides the recognition area into multiple objects. We count the lines that remain in the recognition area. If many lines remain, the recognition area should be divided into multiple objects, and the confidence is set to low. If few lines remain, the confidence is set to high.
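The line-filtering step can be sketched as follows. This sketch makes several assumptions:

- line segments come from a Hough line detector run on the gray-scale and depth images (for example OpenCV's `cv2.HoughLinesP`, which returns segments as x1, y1, x2, y2);
- the recognition area is an axis-aligned rectangle;
- `angle_tol_deg`, `side_margin`, and `max_lines` are hypothetical tuning parameters.

A segment is kept only if it is perpendicular to one pair of sides, that is, roughly parallel to the other pair, and lies away from the sides it runs parallel to.

```python
import math
from typing import Iterable, List, Tuple

Segment = Tuple[float, float, float, float]  # x1, y1, x2, y2 in pixels
Area = Tuple[float, float, float, float]     # x0, y0, x1, y1 of the recognition area


def dividing_lines(segments: Iterable[Segment], area: Area,
                   angle_tol_deg: float = 10.0, side_margin: float = 15.0) -> List[Segment]:
    """Keep segments that could split the area into several box-shaped units."""
    x0, y0, x1, y1 = area
    kept = []
    for sx1, sy1, sx2, sy2 in segments:
        angle = math.degrees(math.atan2(sy2 - sy1, sx2 - sx1)) % 180.0
        mx, my = (sx1 + sx2) / 2.0, (sy1 + sy2) / 2.0
        if min(angle, 180.0 - angle) <= angle_tol_deg:
            # Horizontal: perpendicular to the left/right sides; must be away from top/bottom.
            if min(my - y0, y1 - my) > side_margin:
                kept.append((sx1, sy1, sx2, sy2))
        elif abs(angle - 90.0) <= angle_tol_deg:
            # Vertical: perpendicular to the top/bottom sides; must be away from left/right.
            if min(mx - x0, x1 - mx) > side_margin:
                kept.append((sx1, sy1, sx2, sy2))
        # Oblique segments are discarded: they are not perpendicular to any side.
    return kept


def general_object_confidence(segments: Iterable[Segment], area: Area,
                              max_lines: int = 1) -> float:
    """Low (0.0) if more than max_lines dividing lines remain, otherwise high (1.0)."""
    return 0.0 if len(dividing_lines(segments, area)) > max_lines else 1.0
```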

3.4 Two Parameter Sets for Various Objects

With the previous method (Yano et al., 2023), a single parameter set for object recognition could not correctly detect both large top surfaces and small top surfaces such as bottle caps. We therefore use two parameter sets.

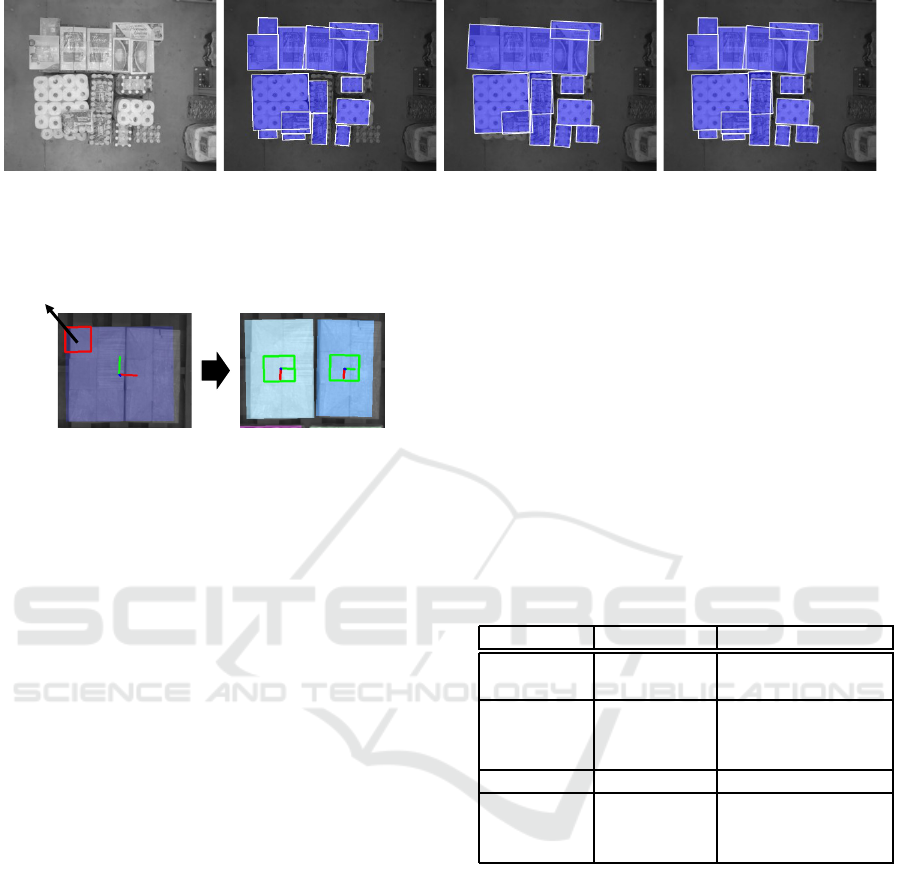

The first parameter set is tuned to detect even small and thin edges. Figure 6b shows the result with the first parameter set. The algorithm correctly divides boxes that are touching. However, it also detects many edges in complicated measured data, such as bottles in transparent wrapping, and divides them into many small surfaces. As a result, it fails to detect packs of bottles.

The second parameter set is tuned to ignore small and thin edges. Figure 6c shows that, with this parameter set, the algorithm successfully detects bottles in transparent wrapping. However, it fails to divide boxes that are touching because it ignores the relatively thin boundary between them. Based on this preliminary trial, we apply each parameter set individually and integrate the two results, as shown in Figure 6d.

3.5 Slight Displacement

This section describes how slight displacement is performed for automatic recovery. As mentioned in Section 1, package-boundary confidence serves two purposes: judging whether object recognition has succeeded, and switching the robot's motion. When the package-boundary confidence is low, slight displacement is performed so that adjacent objects have sufficient gaps between them (Figure 7). The object surfaces are then estimated again, and the second recognition attempt succeeds.
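The switch between direct picking and slight displacement can be sketched as a small control loop. The callables `recognize`, `pick`, and `slightly_displace` are hypothetical stand-ins for the vision and motion modules. The 0.5 threshold is the one used in Section 4.2. The loop allows one displacement per object by default, which mirrors the two-picking-action assumption in the time estimate of Section 4.3; the number of retries a real system allows is not specified.

```python
from typing import Callable, List, Tuple

THRESHOLD = 0.5  # confidence threshold used in Section 4.2

Detection = Tuple[str, float]  # (object id, package-boundary confidence)


def depalletize_step(recognize: Callable[[], List[Detection]],
                     pick: Callable[[str], None],
                     slightly_displace: Callable[[str], None],
                     max_displacements: int = 1) -> str:
    """Pick one object, using slight displacement as automatic recovery.

    Returns "picked" or "needs_manual_recovery".
    """
    for attempt in range(max_displacements + 1):
        detections = recognize()
        if not detections:
            return "needs_manual_recovery"
        obj_id, confidence = detections[0]
        if confidence >= THRESHOLD:
            pick(obj_id)  # boundary trusted: pick directly
            return "picked"
        if attempt < max_displacements:
            # Boundary uncertain: nudge the object to open a gap, then re-recognize.
            slightly_displace(obj_id)
    return "needs_manual_recovery"
```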


(a) Raw image (b) First parameter (c) Second parameter (d) Integrated results

Figure 6: Two parameter sets for surface estimation. With the first parameter set, objects are divided into many surfaces. With the second parameter set, multiple objects remain undivided.

Figure 7: Improvement of recognition by slight displacement.

4 EXPERIMENTS AND RESULTS

4.1 Experimental Setups

In the experiment, we collect datasets of gray-scale

images and point clouds using the vision system of

the depalletizer. The vision system is a TVS 4.0 vi-

sion sensor, a 3D vision head with two came ras and an

industrial projector, with a resolution of 1280 x 1024.

The height of the vision sensor from the floor surface

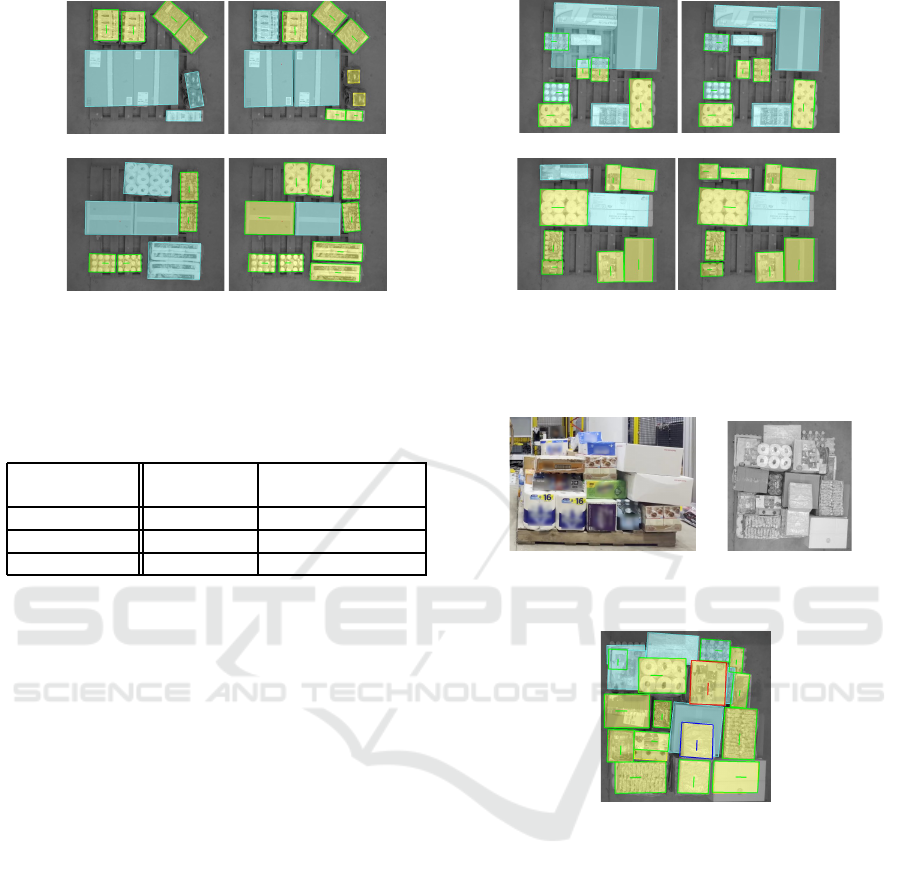

is 3,200 mm. The 32 types and eight grou ps of ob -

jects to be recognized are shown in Figure 8. In the

proposed method, these o bjects are classified into four

categories.

To evaluate the recognition rate of the proposed method, we selected five pairs of objects from the SKUs shown in Figure 8. We arranged them so that the objects in each pair were close to each other, as shown in Figure 9. In Figure 9a, both objects in a pair are the same SKU; in Figure 9b, they are different SKUs. We captured 300 images while changing the gap between paired objects to 0 mm, 10 mm, and 20 mm, and applied our technique to these images.

4.2 Definition of Successful Recognition

A recognition is defined as successful when the robot picks the correct object. Whether the robot avoids incorrect picking depends on two factors: whether the object area from surface estimation is correct, and whether the confidence reaches the threshold of 0.5 (Table 1).

If the object area is correct, the recognition is successful regardless of the confidence. When the confidence is high, the robot picks the object directly. When the confidence is low, the robot slightly displaces the object, and the second recognition attempt succeeds.

If the object area is incorrect, the result can be either a success or a failure. When the confidence is high, the robot picks the wrong object, and the recognition is a failure. When the confidence is low, the robot slightly displaces the object, which is counted as a success.

Table 1: Definition of success.

| Object area | Confidence | Result |
|---|---|---|
| Correct | ≥ 0.5 (high) | Success (direct picking) |
| Correct | < 0.5 (low) | Success (slight displacement) |
| Incorrect | ≥ 0.5 (high) | Failure |
| Incorrect | < 0.5 (low) | Success (slight displacement) |
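Table 1 reduces to a small decision function. This sketch uses hypothetical names. It also shows how the two quantities reported in Table 2, the success rate and the frequency of slight displacement, follow from per-trial outcomes, assuming that every low-confidence trial triggers one slight displacement.

```python
from collections import Counter
from typing import Iterable, Tuple


def trial_outcome(area_correct: bool, confidence: float, threshold: float = 0.5) -> str:
    """Classify one recognition trial following Table 1."""
    if confidence >= threshold:
        return "success (direct picking)" if area_correct else "failure"
    # Low confidence: the robot slightly displaces the object and retries, which
    # Table 1 counts as a success whether or not the first area was correct.
    return "success (slight displacement)"


def summarize(trials: Iterable[Tuple[bool, float]]) -> Tuple[float, float]:
    """Return (success rate, frequency of slight displacement) as fractions."""
    outcomes = [trial_outcome(correct, conf) for correct, conf in trials]
    counts = Counter(outcomes)
    n = len(outcomes)
    success_rate = (n - counts["failure"]) / n
    displacement_rate = counts["success (slight displacement)"] / n
    return success_rate, displacement_rate
```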

4.3 Results

Figure 10a and Figure 10b show example results for object pairs with the same SKUs and with different SKUs, respectively, placed close to each other. Each figure contains two object arrangements. For each arrangement, we show results for two conditions: a gap of 0 mm and a gap of 20 mm between the objects in a pair.

Table 2 shows the resulting success rates for the same and different SKUs. Table 3 compares the conventional methods with the proposed method. As the conventional methods, we used the surface estimation method (Yano et al., 2023), which does not consider package-boundary confidence. With this method, we used one of the two parameter sets shown in Figure 6b and Figure 6c in each experiment. A recognition was counted as a failure if the estimated area was incorrect.

[Figure 8 panels: (a) General object, (b) Object with holes, (c) Pack of bottles in transparent wrapping, (d) Pack of bottles in opaque wrapping. Object groups: A. Cardboard box, B. Branded box, C. Wrapped boxes, D. Plastic packaging, E. Wrapped rolls of toilet paper, F. Wrapped cans, G. Wrapped bottles (with label), H. Wrapped bottles (without label).]

Figure 8: Rainbow SKUs used in experiments. The 32 types and eight groups of objects are classified into four categories.

(a) Same SKUs (b) Different SKUs

Figure 9: Scenes used for evaluation of the proposed method.

Table 2: Resulting success rates and frequency of slight displacement.

| Condition of object pairs | Success rate | Frequency of slight displacement |
|---|---|---|
| Same SKUs with 0 mm gaps | 99.4% (523/526) | 67.9% |
| Same SKUs with 10 mm gaps | 99.4% (523/526) | 35.6% |
| Same SKUs with 20 mm gaps | 99.0% (521/526) | 28.0% |
| Different SKUs with 0 mm gaps | 98.5% (403/409) | 37.0% |
| Different SKUs with 10 mm gaps | 98.8% (404/409) | 25.8% |
| Different SKUs with 20 mm gaps | 98.8% (404/409) | 25.5% |
| Total | 99.0% (2778/2805) | 37.5% |

The proposed method achieved a high success rate for the 32 types of objects. Its total success rate was 99.0%, which is higher than those of the conventional methods (74.7% and 74.8%; Table 3). The total frequency of slight displacement was 37.5%.
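As a consistency check, the totals in Table 2 can be recomputed from the per-condition counts. The sketch assumes that the overall frequency of slight displacement is the trial-weighted average of the per-condition frequencies; the text does not state how it was aggregated. Under that assumption, the sketch reproduces the reported 99.0% and 37.5%.

```python
# (successes, trials, frequency of slight displacement) per condition, from Table 2.
TABLE2 = {
    ("same", 0): (523, 526, 0.679),
    ("same", 10): (523, 526, 0.356),
    ("same", 20): (521, 526, 0.280),
    ("different", 0): (403, 409, 0.370),
    ("different", 10): (404, 409, 0.258),
    ("different", 20): (404, 409, 0.255),
}


def table2_totals(rows=TABLE2):
    """Return (successes, trials, success rate, displacement frequency)."""
    successes = sum(s for s, _, _ in rows.values())
    trials = sum(n for _, n, _ in rows.values())
    # Assumption: the overall displacement frequency is weighted by trial count.
    displacement = sum(n * d for _, n, d in rows.values()) / trials
    return successes, trials, successes / trials, displacement


if __name__ == "__main__":
    s, n, rate, disp = table2_totals()
    print(f"success {s}/{n} = {rate:.1%}, slight displacement {disp:.1%}")
```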

Table 3 also shows the estimated increase in depalletizing time for each condition. After a task failure, human intervention is required for recovery, and this takes five times the duration of a successful operation. Slight displacement requires no human intervention, but two picking actions are performed for the object, so it is estimated to take twice the time. Equation (1) gives the estimated overall operation time for all objects, normalized by the time required when all picks succeed directly. The frequency of slight displacement is 0% for the conventional methods and 37.5% for the proposed method. Compared with the conventional methods, the estimated operation time is reduced by 35 percentage points, from 176% to 141%.

$$T = S \times 1 + (1 - S) \times 4 + D \times 1 \tag{1}$$

where:

T: Estimated operation time relative to the case in which all picks succeed directly [%] (the "increase of time" in Table 3)

S: Success rate [%]

D: Frequency of slight displacement [%]

S and D are substituted as fractions, so that 1 corresponds to 100%.
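Equation (1) can be evaluated directly with the values in Table 3. In this sketch, the rates are fractions rather than percentages, and the weights 1, 4, and 1 are taken verbatim from Eq. (1). With these inputs, it reproduces the estimated times in Table 3 to within rounding.

```python
def relative_operation_time(success_rate: float, displacement_rate: float) -> float:
    """Eq. (1) with rates as fractions; 1.0 means the all-success time (100 %)."""
    successes = success_rate * 1           # each successful object: one unit of time
    failures = (1 - success_rate) * 4      # failed objects, weighted by 4 as in Eq. (1)
    extra_picks = displacement_rate * 1    # one extra pick per slight displacement
    return successes + failures + extra_picks


if __name__ == "__main__":
    for name, s, d in [("1st parameter", 0.747, 0.0),
                       ("2nd parameter", 0.748, 0.0),
                       ("Proposed", 0.990, 0.375)]:
        print(f"{name}: {relative_operation_time(s, d):.1%}")
```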


[Figure 10 panels: (a) Same SKUs: Scene1 and Scene2, each with 0 mm and 20 mm gaps; (b) Different SKUs: Scene3 and Scene4, each with 0 mm and 20 mm gaps. Objects are labeled with their group letters A–H.]

Figure 10: Examples of confidence calculation results. Yellow and cyan objects have high and low confidence, respectively. A–H correspond to the eight groups in Figure 8.

Table 3: Comparison between the conventional methods and the proposed method.

| Method | Success rate | Increase of time (estimated) |
|---|---|---|
| 1st parameter | 74.7% | 176% |
| 2nd parameter | 74.8% | 176% |
| Proposed | 99.0% | 141% |

4.4 Discussion

The proposed method achieved a high success rate when two objects were placed adjacent to each other, as shown in Figure 9. In a real depalletizing environment, however, various objects are stacked on top of each other, as shown in Figure 11. Figure 12 shows the proposed method applied to the scene in Figure 11b. The top view shows that some objects were occluded, so their confidence could not be calculated. Depth information must therefore be considered when determining the order in which objects are picked. If objects are picked from highest to lowest, occluded objects are picked later. As the higher objects are picked, the objects below them are no longer occluded, which improves the accuracy of the confidence calculation. Hence, when combined with robot motion planning, the proposed method can be applied in real depalletizing environments.
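The highest-first rule can be sketched as a sort on top-surface height. The sort is re-run after every pick, so that newly unoccluded objects receive a fresh confidence before their turn. The `Recognized` record and its fields are hypothetical; in practice, the height would come from the point cloud, and an occluded object would have no confidence yet.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Recognized:
    name: str
    top_height_mm: float          # height of the object's top surface (from the point cloud)
    confidence: Optional[float]   # None when occlusion prevented the calculation


def picking_order(objects: List[Recognized]) -> List[Recognized]:
    """Sort from highest to lowest so that occluded, lower objects come last."""
    return sorted(objects, key=lambda o: o.top_height_mm, reverse=True)


def next_target(objects: List[Recognized]) -> Optional[Recognized]:
    """Highest object whose confidence could be computed; re-run after every pick."""
    for obj in picking_order(objects):
        if obj.confidence is not None:
            return obj
    return None
```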

The experiments also revealed a limitation of slight displacement. Slight displacement contributed to the high recognition success rate of 99.0%. However, its high frequency still reduced throughput, and high throughput is crucial for operating depalletizing robots in warehouses. Reducing the frequency of slight displacement remains an issue for future work.

(a) View from side (b) View from top

Figure 11: Arrangement of stacked objects.

Figure 12: Resulting scene of stacked objects.

5 CONCLUSION

We proposed a package-boundary confidence estimation method that enables reliable recognition of various objects in rainbow-SKU depalletizing. The proposed method focuses on the differences in the package boundary of each type of object. It classifies the results of surface estimation into four categories and calculates the package-boundary confidence with a different technique for each category.

In the experiment, the proposed method demonstrated a high success rate for 32 types of objects. Its total success rate was 99.0%, which is higher than that of the conventional method.

We also found that the proposed method is applicable when various objects are stacked. Introducing slight displacement into the depalletizer system is expected to reduce the frequency of manual recovery performed by workers.

Future work includes integrating boundary estimation with deep-learning methods to reduce the number of low-confidence results for objects whose area is correct. Although our method reduced incorrect picking, the frequency of slight displacement reduced the throughput of robot automation. We also aim to develop faster recovery methods by focusing on the causes of failed recognition.

ACKNOWLEDGEMENTS

We are grateful to Mr. Takaharu Matsui for his fruitful discussions. We also thank Mr. Koichi Kato for his assistance in implementing the software for the proposed method.

REFERENCES

Aleotti, J., Baldassarri, A., Bonfè, M., Carricato, M., Chiaravalli, D., Di Leva, R., Fantuzzi, C., Farsoni, S., Innero, G., Rizzini, D. L., Melchiorri, C., Monica, R., Palli, G., Rizzi, J., Sabattini, L., Sampietro, G., and Zaccaria, F. (2021). Toward future automatic warehouses: An autonomous depalletizing system based on mobile manipulation and 3D perception. Applied Sciences (Switzerland), 11(13).

Buongiorno, D., Caramia, D., Di Ruscio, L., Longo, N., Panicucci, S., Di Stefano, G., Bevilacqua, V., and Brunetti, A. (2022). Object detection for industrial applications: Training strategies for AI-based depalletizer. Applied Sciences, 12(22):11581.

Caccavale, R., Arpenti, P., Paduano, G., Fontanellli, A., Lippiello, V., Villani, L., and Siciliano, B. (2020). A flexible robotic depalletizing system for supermarket logistics. IEEE Robotics and Automation Letters, 5(3):4471–4476.

Doliotis, P., McMurrough, C. D., Criswell, A., Middleton, M. B., and Rajan, S. T. (2016). A 3D perception-based robotic manipulation system for automated truck unloading. IEEE International Conference on Automation Science and Engineering, 2016-Novem:262–267.

Duda, R. O. and Hart, P. E. (1972). Use of the Hough transformation to detect lines and curves in pictures. Communications of the ACM, 15(1):11–15.

Eto, H., Nakamoto, H., Sonoura, T., Tanaka, J., and Ogawa, A. (2019). Development of automated high-speed depalletizing system for complex stacking on roll box pallets. Journal of Advanced Mechanical Design, Systems and Manufacturing, 13(3):1–12.

Fontanelli, G. A., Paduano, G., Caccavale, R., Arpenti, P., Lippiello, V., Villani, L., and Siciliano, B. (2020). A reconfigurable gripper for robotic autonomous depalletizing in supermarket logistics. IEEE Robotics and Automation Letters, 5(3):4612–4617.

Girshick, R. (2015). Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, pages 1440–1448.

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, pages 2961–2969.

Katsoulas, D. K. and Kosmopoulos, D. I. (2001). An efficient depalletizing system based on 2D range imagery. Proceedings - IEEE International Conference on Robotics and Automation, 1:305–312.

Kimura, N., Ito, K., Fuji, T., Fujimoto, K., Esaki, K., Beniyama, F., and Moriya, T. (2016). Mobile dual-arm robot for automated order picking system in warehouse containing various kinds of products. IEEE/SICE International Symposium on System Integration, pages 332–338.

Li, J., Kang, J., Chen, Z., Cui, F., and Fan, Z. (2020). A workpiece localization method for robotic depalletizing based on region growing and PPHT. IEEE Access, 8:166365–166376.

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.-Y., and Berg, A. C. (2016). SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, Proceedings, Part I 14, pages 21–37. Springer.

Nakamoto, H., Eto, H., Sonoura, T., Tanaka, J., and Ogawa, A. (2016). High-speed and compact depalletizing robot capable of handling packages stacked complicatedly. IEEE International Conference on Intelligent Robots and Systems, pages 344–349.

Naumann, A., Dorr, L., Ole Salscheider, N., and Furmans, K. (2020). Refined Plane Segmentation for Cuboid-Shaped Objects by Leveraging Edge Detection. Proceedings - 19th IEEE International Conference on Machine Learning and Applications, pages 432–437.

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A. (2016). You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 779–788.

Stein, S. C., Schoeler, M., Papon, J., and Wörgötter, F. (2014). Object partitioning using local convexity. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, (June):304–311.

Tanaka, J., Ogawa, A., Nakamoto, H., Sonoura, T., and Eto, H. (2020). Suction pad unit using a bellows pneumatic actuator as a support mechanism for an end effector of depalletizing robots. ROBOMECH Journal, 7(1):1–30.

Yano, T., Kimura, N., and Ito, K. (2023). Surface-graph-based 6DoF object-pose estimation for shrink-wrapped items applicable to mixed depalletizing robots. In VISIGRAPP (5: VISAPP), pages 503–511.

Yuen, H., Princen, J., Illingworth, J., and Kittler, J. (1990). Comparative study of Hough transform methods for circle finding. Image and Vision Computing, 8(1):71–77.
