Uncertainty-Based Detection of Adversarial Attacks

in Semantic Segmentation

Kira Maag

1

and Asja Fischer

2

1

Technical University of Berlin, Germany

2

Ruhr University Bochum, Germany

fi

Keywords:

Deep Learning, Semantic Segmentation, Adversarial Attacks, Detection.

Abstract:

State-of-the-Art deep neural networks have proven to be highly powerful in a broad range of tasks, including

semantic image segmentation. However, these networks are vulnerable against adversarial attacks, i.e., non-

perceptible perturbations added to the input image causing incorrect predictions, which is hazardous in safety-

critical applications like automated driving. Adversarial examples and defense strategies are well studied for

the image classification task, while there has been limited research in the context of semantic segmentation.

First works however show that the segmentation outcome can be severely distorted by adversarial attacks. In

this work, we introduce an uncertainty-based approach for the detection of adversarial attacks in semantic

segmentation. We observe that uncertainty as for example captured by the entropy of the output distribution

behaves differently on clean and perturbed images and leverage this property to distinguish between the two

cases. Our method works in a light-weight and post-processing manner, i.e., we do not modify the model or

need knowledge of the process used for generating adversarial examples. In a thorough empirical analysis, we

demonstrate the ability of our approach to detect perturbed images across multiple types of adversarial attacks.

1 INTRODUCTION

In recent years, deep neural networks (DNNs)

have demonstrated outstanding performance and have

proven to be highly expressive in a broad range of

tasks, including semantic image segmentation (Chen

et al., 2018; Pan et al., 2022). Semantic segmentation

aims at segmenting objects in an image by assigning

each pixel to a fixed and predefined set of semantic

classes, providing comprehensive and precise infor-

mation about the given scene. However, DNNs are

vulnerable to adversarial attacks (Bar et al., 2021)

which is very hazardous in safety-related applica-

tions like automated driving. Adversarial attacks are

small perturbations added to the input image caus-

ing the DNN to perform incorrect predictions at test

time. The perturbations are not perceptible to hu-

mans, making the detection of these examples very

challenging, see for example Figure 1. This undesir-

able property of DNNs is a major security concern in

real world applications. Hence, developing efficient

strategies against adversarial attacks is of high impor-

tance. Such strategies can either increase the robust-

ness of DNNs making it more difficult to generate ad-

versarial examples (defense) or build on approaches

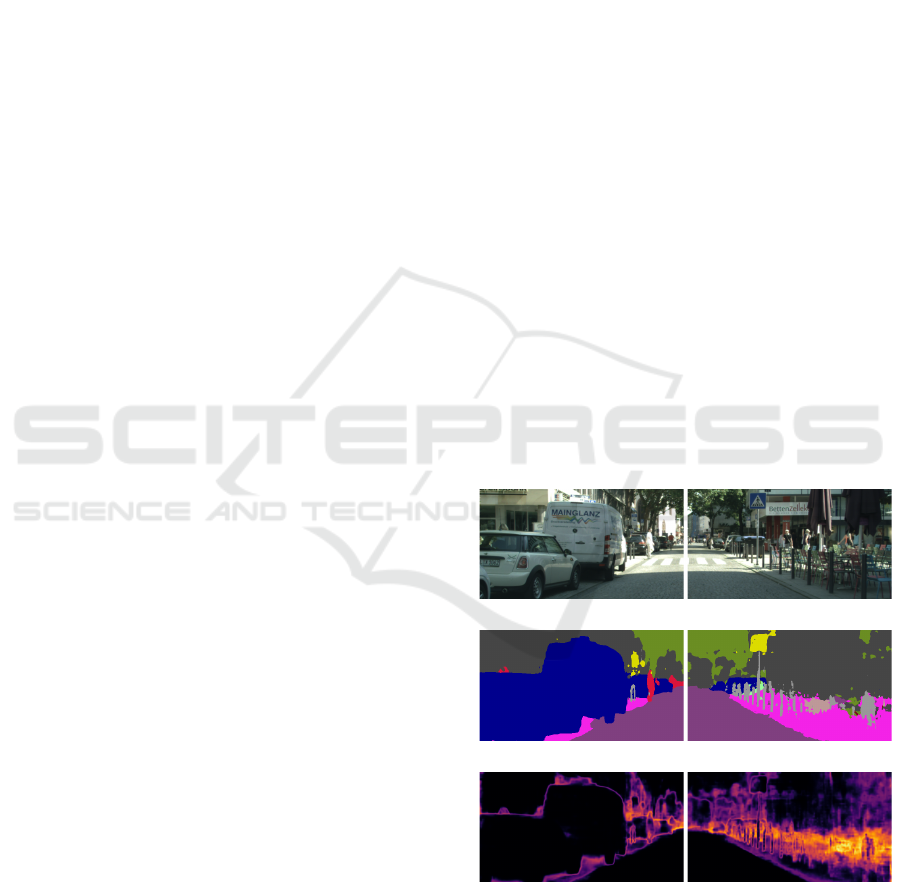

(a) Input image (perturbed half on right hand side)

(b) Semantic Segmentation prediction

(c) Entropy heatmap

Figure 1: Semantic segmentation prediction and entropy

heatmap for clean (left) and perturbed image (right) gen-

erated by a dynamic target attack for hiding pedestrians.

to detect adversarial attacks (detection).

Adversarial attacks have attracted much attention,

and numerous attacks as well as detection strategies

Maag, K. and Fischer, A.

Uncertainty-Based Detection of Adversarial Attacks in Semantic Segmentation.

DOI: 10.5220/0012303500003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 2: VISAPP, pages

37-46

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

37

have been proposed (Khamaiseh et al., 2022). How-

ever, adversarial examples have not been analyzed ex-

tensively beyond standard image classification mod-

els, often using small datasets such as MNIST (LeCun

and Cortes, 2010) or CIFAR10 (Krizhevsky, 2009).

The vulnerability of modern DNNs to attacks in more

complex tasks like semantic segmentation in the con-

text of real datasets from different domains has been

rather poorly explored. The attacks which have been

tested so far on semantic segmentation networks can

be divided roughly into three categories. The first ap-

proaches transfer common attacks from image clas-

sification to semantic segmentation, i.e., pixel-wise

classification (Agnihotri and Keuper, 2023; Gu et al.,

2022; Rony et al., 2022). Other works (Cisse et al.,

2017; Xie et al., 2017) have presented attacks specifi-

cally designed for the task of semantic segmentation,

either attacking the input in a way that leads to the

prediction of some predefined and image-unrelated

segmentation mask or the complete deletion of one

segmentation class (Metzen et al., 2017). Such at-

tacks are more difficult to detect than attacks that per-

turb each pixel independently. The third group of at-

tacks creates rectangular patches smaller than the in-

put size, which leads to an erroneous prediction of the

entire image (Nakka and Salzmann, 2020; Nesti et al.,

2022). Defense methods aim to be robust against

such attacks, i.e., to achieve high prediction accuracy

even on perturbed images. For semantic segmenta-

tion tasks, defense approaches are often robust against

only one type of attack (Arnab et al., 2018; Klingner

et al., 2020; Yatsura et al., 2022). In contrast, de-

tection methods aim at classifying an input as clean

or perturbed based on the output of the segmentation

model (Xiao et al., 2018).

In this paper, we present an uncertainty-based ap-

proach for detecting several kinds of adversarial at-

tacks on semantic image segmentation models. Un-

certainty information has already been exploited for

the detection of adversarial attacks on classification

DNNs, but has not been investigated in the context

of segmentation so far. In (Feinman et al., 2017) an

approximation to Bayesian inference (Monte-Carlo

Dropout) is proposed, which is widely employed to

estimate model uncertainty, for the detection of ad-

versarial attacks. The gradient-based approach intro-

duced in (Michel and Ewetz, 2022) generates salient

features which are used to train a detector. While both

methods require access to the inside of the model, our

approach can be applied as a post-processing step us-

ing only information of the network output. We con-

struct features per image based on uncertainty infor-

mation provided by the DNN such as the entropy of

the output distribution. In Figure 1 (c), the entropy

heatmaps for a clean (left) and a perturbed image

(right) are shown, indicating high uncertainties in the

attacked regions, motivating the use of uncertainty in-

formation to separate clean and perturbed images. On

the one hand, these features for clean and perturbed

inputs are fed into an one-class support vector ma-

chine that performs unsupervised novelty detection

(Weerasinghe et al., 2018). On the other hand, we

train a logistic regression model with clean and per-

turbed data for classification. The perturbed data used

during training is generated by only one kind of adver-

sarial attack method, while the detector is applied to

identify adversarial examples of other methods. Our

approach neither modifies the semantic segmentation

model nor requires knowledge of the process for gen-

erating adversarial examples. We only assume that

our post-processing model is kept private while the

attacker may have full access to the semantic segmen-

tation model.

In our tests, we employ state-of-the-art seman-

tic segmentation networks (Chen et al., 2018; Pan

et al., 2022) applied to the Cityscapes (Cordts et al.,

2016) as well as Pascal VOC2012 dataset (Evering-

ham et al., 2012) demonstrating our adversarial at-

tack detection performance. To this end, we con-

sider different types of attackers, from pixel-level

attacks designed for image classification (Goodfel-

low et al., 2015; Kurakin et al., 2017) to pixel-

wise attacks developed for semantic segmentation

(Metzen et al., 2017) and patch-based ones (Nesti

et al., 2022). The source code of our method

is publicly available at https://github.com/kmaag/

Adversarial-Attack-Detection-Uncertainty. Our con-

tributions are summarized as follows:

• We introduce a new uncertainty-based approach

for the detection of adversarial attacks for the se-

mantic image segmentation task. In a thorough

empirical analysis, we demonstrate the capabil-

ity of uncertainty measures to distinguish between

clean and perturbed images. Our approach serves

as a light-weight post-processing step, i.e., we do

not modify the model or need knowledge of the

process for generating adversarial examples.

• For the first time, we present a detection method

that was not designed for a specific adversarial at-

tack, rather has a high detection capability across

multiple types. We achieve averaged detection ac-

curacy values of up to 100% for different network

architectures and datasets.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

38

2 RELATED WORK

In this section, we discuss the related works on de-

fense and detection methods for the semantic seg-

mentation task. Defense methods aim to achieve high

prediction accuracy even on perturbed images, while

detection methods classify the model input as clean

or attacked image. A dynamic divide-and-conquer

strategy (Xu et al., 2021) and multi-task training

(Klingner et al., 2020), which extends supervised se-

mantic segmentation by a self-supervised monocular

depth estimation using unlabeled videos, are consid-

ered as adversarial training approaches enhancing the

robustness of the networks. Another kind of defense

strategy is input denoising to remove the perturba-

tion from the input without the necessity to re-train

the model. In (Bar et al., 2021) image quilting and

the non-local means algorithm are presented as input

transformation techniques. To denoise the perturba-

tion and restore the original image, a denoise autoen-

coder is used in (Cho et al., 2020). The demasked

smoothing technique, introduced in (Yatsura et al.,

2022), reconstructs masked regions of each image

based on the available information with an inpaint-

ing model defending against patch attacks. Another

possibility to increase the robustness of the model is

during inference. In (Arnab et al., 2018) is shown

how mean-field inference and multi-scale processing

naturally form an adversarial defense. The non-local

context encoder proposed in (He et al., 2019) mod-

els spatial dependencies and encodes global contexts

for strengthening feature activations. From all pyra-

mid features multi-scale information is fused to refine

the prediction and create segmentation. The presented

works up to now are defense methods improving the

robustness of the model. To the best of our knowl-

edge so far only one work focuses on detecting adver-

sarial attacks on segmentation models, i.e., the patch-

wise spatial consistency check which is introduced in

(Xiao et al., 2018).

The described defense approaches are created for

and tested only on a specific type of attack. The prob-

lem is that you assume a high model robustness, how-

ever, the defense method may perform poorly on new

unseen attacks and does not provide a statement about

this insecurity. Therefore, we present an uncertainty-

based detection approach which shows strong results

over several types of adversarial attacks. The pre-

sented detection approach (Xiao et al., 2018) is only

tested on perturbed images attacked in such a way

that a selected image is predicted. The spatial consis-

tency check randomly selects overlapping patches to

obtain pixel-wise confidence vectors. In contrast, we

use only information of the network output from one

inference and not from (computationally expensive)

multiple runs of the network. For these reasons, the

detection approach introduced in (Xiao et al., 2018)

cannot be considered as a suitable baseline.

Post-processing classification models as well as

simple output-based methods are used for false

positive detection (Maag et al., 2020; Maag and

Rottmann, 2023) and out-of-distribution segmenta-

tion (Maag et al., 2022; Hendrycks and Gimpel,

2016), but have not been investigated for adversarial

examples.

3 ADVERSARIAL ATTACKS

For the generation of adversarial examples, we dis-

tinguish between white and black box attacks. White

box attacks are created based on information of the

victim model, i.e., the adversarial attacker has access

to the full model, including its parameters, and knows

the loss function used for training. In contrast, black

box attackers have zero knowledge about the victim

model. The idea behind these type of attacks is trans-

ferability, i.e., an adversarial example generated from

another model works well with the victim one. The

attacks described in the following belong to the white

box setting and were proposed to attack semantic seg-

mentation models.

Attacks on Pixel-Wise Classification. The attacks

described in this paragraph were originally developed

for image classification and were (in a modified ver-

sion) applied to semantic segmentation. For semantic

segmentation, given an image x a neural network with

parameters w provides pixel-wise probability distri-

butions f (x;w)

i j

over a label space C = {y

1

,...,y

c

}

per spatial dimension (i, j). The single-step untar-

geted fast gradient sign method (FGSM, (Goodfellow

et al., 2015)) creates adversarial examples by adding

perturbations to the pixels of an image x with (one-hot

encoded) label y that leads to an increase of the loss L

(here cross entropy), that is

x

adv

i j

= x

i j

+ ε · sign(∇

x

L

i j

( f (x;w)

i j

,y

i j

)) , (1)

where ε is the magnitude of perturbation. The single-

step targeted attack with target label y

ll

instead de-

creases the loss for the target label and is given by

x

adv

i j

= x

i j

− ε · sign(∇

x

L

i j

( f (x;w)

i j

,y

ll

i j

)) . (2)

Following the convention, the least likely class pre-

dicted by the model is chosen as target class. This

attack is extended in (Kurakin et al., 2017) in an it-

erative manner (I-FGSM) to increase the perturbation

Uncertainty-Based Detection of Adversarial Attacks in Semantic Segmentation

39

strength

x

adv

i j,t+1

= (3)

clip

x,ε

x

adv

i j,t

+ α · sign(∇

x

adv

t

L

i j

( f (x

adv

t

;w)

i j

,y

i j

))

with x

adv

0

= x, step size α, and a clip function ensuring

that x

adv

t

∈ [x−ε,x+ε]. The targeted case (see eq. (2))

can be formulated analogously. Based on these at-

tacks, further methods for pixel-wise perturbations in

the classification context have been proposed such as

projected gradient descent (Madry et al., 2018; Bry-

niarski et al., 2022) and DeepFool (Moosavi-Dezfooli

et al., 2016). Some of these approaches have been fur-

ther developed and adapted to semantic segmentation

(Agnihotri and Keuper, 2023; Gu et al., 2022; Rony

et al., 2022).

Stationary Segmentation Mask Attacks. Another

type of attacks are so called stationary segmentation

mask methods (SSMM) where the pixels of a whole

image are iteratively attacked until most of the pixels

have been mis-classified into the target class (Cisse

et al., 2017; Xie et al., 2017). For each spatial dimen-

sion (i, j) ∈ I , the loss function per image x is given

by

L( f (x;w),y) =

1

|I |

∑

(i, j)∈I

L

i j

( f (x;w)

i j

,y

i j

). (4)

In (Metzen et al., 2017), the universal perturbation is

introduced to achieve real-time performance for the

attack at test time. To this end, training inputs D

train

=

{x

(k)

,y

(k),target

}

m

k=1

are generated where y

(k),target

de-

fines a fixed target segmentation. The universal noise

in iteration t + 1 is computed by

ξ

t+1

= clip

ε

ξ

t

(5)

−α · sign(

1

m

m

∑

k=1

∇

x

L( f (x

(k)

+ ξ

t

;w),y

(k),target

)

with ξ

0

= 0. The loss of pixels which are predicted as

belonging to the desired target class with a confidence

above a threshold τ are set to 0. At test time, this

noise is added to the input image and does not require

multiple calculations of the backward pass.

The dynamic nearest neighbor method (DNNM)

presented in (Metzen et al., 2017) aims to keep the

network’s segmentation unchanged but to remove a

desired target class. Let o be the object class be-

ing deleted and ˆy(x)

i j

= argmax

y∈C

f (x;w)

y

i j

the pre-

dicted class, where f (x; w)

y

i j

denotes the probability

the model assigns for the pixel at position (i, j) to

belong to class y, then I

o

= {(i, j)| ˆy(x)

i j

= o} and

I

¯o

= I \ I

o

. The target label is chosen by y

target

i j

=

ˆy(x)

i

′

j

′

with argmin

(i

′

, j

′

)∈I

¯o

(i

′

− i)

2

+ ( j

′

− j)

2

for all

(i, j) ∈ I

o

and y

target

i j

= ˆy(x)

i j

for all (i, j) ∈ I

¯o

. Since

the loss function described in eq. (4) weights all pix-

els equally though both objectives, i.e., hiding a ob-

ject class and being unobtrusive are not necessarily

equally important, a modified version of the loss func-

tion with weighting parameter ω is given by

L

ω

( f (x;w),y) =

1

|I |

(ω

∑

(i, j)∈I

o

L

i j

( f (x;w)

i j

,y

target

i j

)

+ (1 − ω)

∑

(i, j)∈I

¯o

L

i j

( f (x;w)

i j

,y

target

i j

)).

(6)

Note, the universal perturbation can also be computed

for the DNNM.

Patch-Based Attacks. The idea behind patch at-

tacks is that perturbing a small region of the im-

age causes prediction errors in a much larger re-

gion (Nakka and Salzmann, 2020). In (Nesti et al.,

2022), the expectation over transformation (EOT)-

based patch attack is introduced to create robust ad-

versarial examples, i.e., individual adversarial exam-

ples that are at the same time adversarial over a

range of transformations. Transformations occurring

in the real world are for instance angle and viewpoint

changes. These perturbations are modeled within the

optimization procedure and an extension of the pixel-

wise cross entropy loss is additionally presented in

(Nesti et al., 2022) to enable crafting strong patches

for the semantic segmentation setting.

4 DETECTION METHOD

Our method does not alter the semantic segmenta-

tion model, nor does it require knowledge of the ad-

versarial example generation process. While the at-

tacker may have full access to the semantic segmenta-

tion model, we only assume that our post-processing

model is kept secret or not attacked. Our approach

can be applied to any semantic segmentation network

serving as a post-processing step using only informa-

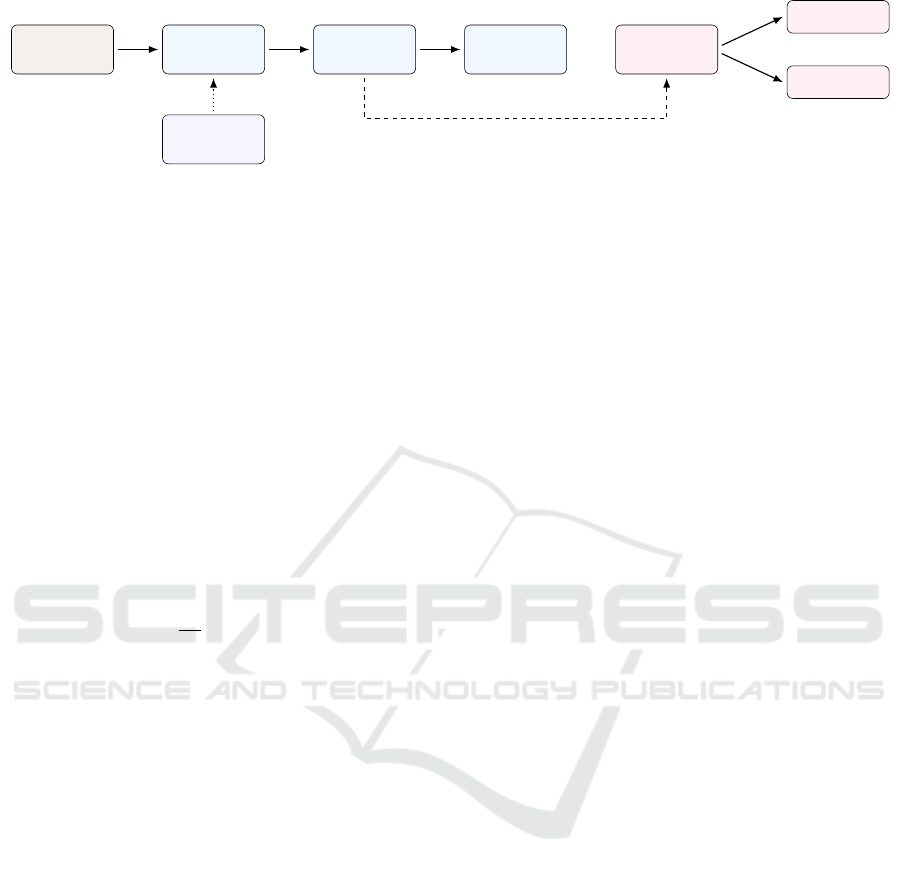

tion of the network output. In Figure 2 an overview

of our approach is given.

The degree of uncertainty in a semantic segmenta-

tion prediction is quantified by pixel-wise dispersion

measures like the entropy

E(x)

i j

= −

∑

y∈C

f (x;w)

y

i j

log f (x; w)

y

i j

, (7)

the variation ratio

V (x)

i j

= 1 − max

y∈C

f (x;w)

y

i j

, (8)

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

40

input image

x

segmentation

network f

softmax

probs f (x;w)

semantic seg-

mentation ˆy(x)

detector

p(x)

p(x) ≥ κ

p(x) < κ

clean

perturbed

adversarial

attacker

features

Figure 2: Schematic illustration of our detection method. The adversarial attacker can have full access to the semantic

segmentation model. Information from the network output is extracted to construct the features which serve as input to the

detector model classifying between clean and perturbed images.

or the probability margin

M(x)

i j

= V (x)

i j

+ max

y∈C \{ˆy(x)

i j

}

f (x;w)

y

i j

. (9)

The entropy heatmaps for a clean (left) and a per-

turbed image (right) are shown in Figure 1 (c) in-

dicating that higher uncertainties occur in the at-

tacked regions which motivates the use of uncer-

tainty information to separate clean and perturbed

data. To obtain uncertainty features per image from

these pixel-wise dispersion measures, we aggregate

them over a whole image by calculating the averages

¯

D = 1/|I |

∑

(i, j)∈I

D(x)

i j

where D ∈ {E,V,M}. More-

over, we obtain mean class probabilities for each class

y ∈ {1,...,C}

P(y|x) =

1

|I |

∑

(i, j)∈I

f (x;w)

y

i j

. (10)

The concatenation of this |C| + 3 features forms the

feature vectors used in the following. We compute

these image-wise features for a set of benign (and

adversarially changed) images, which are then used

to train classification models providing per image a

probability p(x) of being clean (and not perturbed).

We classify x as perturbed if p(x) < κ and as clean if

p(x) ≥ κ, where κ is a predefined detection threshold.

We explore different ways to construct such a classi-

fier.

First, we consider two basic outlier detection tech-

niques which only require benign data, i.e., an one-

class support vector machine (OCSVM, (Sch

¨

olkopf

et al., 1999)) and an approach for detecting out-

liers in a Gaussian distributed dataset learning an el-

lipse (Rousseeuw and Driessen, 1999). Second, we

consider the supervised logistic regression (LASSO,

(Tibshirani, 1996)) as classification model trained on

the features extracted for clean and perturbed images.

Importantly, we do not require knowledge of the ad-

versarial example generation process used by the at-

tacker, instead we use attacked data generated by any

(other) adversarial attack (cross-attack). While the

OCSVM and the ellipse approach are unsupervised

and outlier detection is a difficult task, the super-

vised cross-attack method has the advantage of hav-

ing already seen other types of perturbed data. Third,

we threshold only on the mean entropy

¯

E (which re-

quires only to choose the threshold value) proposing a

very basic uncertainty-based detector. Note, applying

our detection method is light-weight, i.e., the feature

computation is inexpensive and classification models

are trained in advance so that only one inference run

is added after semantic segmentation inference.

5 EXPERIMENTS

First, we present the experimental setting and then

evaluate our adversarial detection approach.

5.1 Experimental Setting

Datasets. We perform our tests on the Cityscapes

(Cordts et al., 2016) dataset for semantic segmen-

tation in street and on the Pascal VOC2012 (Ever-

ingham et al., 2012) (shorthand VOC) dataset of vi-

sual object classes in realistic scenes. The Cityscapes

dataset consists of 2,975 training and 500 validation

images of dense urban traffic in 18 and 3 different

German towns, respectively. The VOC dataset con-

tains 1,464 training and 1,449 validation images with

annotations for the various objects of categories per-

son, animal, vehicle and indoor.

Segmentation Networks. We consider the state-

of-the-art DeepLabv3+ network (Chen et al., 2018)

with Xception65 backbone (Chollet, 2017). Trained

on Cityscapes, we achieve a mean intersection over

union (mIoU) value of 78.93 on the validation set

and trained on VOC, a validation mIoU value of

88.39. Moreover, we use the BiSeNet (Yu et al., 2018)

trained on Cityscapes obtaining a validation mIoU of

70.32. We consider also two real-time models for the

Cityscapes dataset, the DDRNet (Pan et al., 2022)

achieving 77.8 mIoU and the HRNet (Wang et al.,

2021) with 70.5 mIoU.

Uncertainty-Based Detection of Adversarial Attacks in Semantic Segmentation

41

(a) Input image (b) Prediction (c) I-FGSM

4

(d) I-FGSM

ll

4

(e) Ground truth (f) SSMM (g) DNNM (h) Patch attack

Figure 3: Input image (a) with corresponding ground truth (e). Semantic segmentation prediction for clean (b) and perturbed

image generated by an untargeted (c) and a targeted FGSM attack (d) as well as by SSMM (f), DNNM (g) and patch attack

(h).

Adversarial Attacks. In many defense methods in

semantic segmentation, the adapted FGSM and I-

FGSM attack are employed (Arnab et al., 2018; Bar

et al., 2021; He et al., 2019; Klingner et al., 2020;

Xu et al., 2021). Thus, we study both attacks in

our experiments with the parameter setting presented

in (Kurakin et al., 2017). The magnitude of pertur-

bation is given by ε = {4,8,16}, the step size by

α = 1 and the number of iterations is computed as

n = min{ε + 4,⌊1.25ε⌋}. We denote the attack by

FGSM

#

ε

and the iterative one by I-FGSM

#

ε

, # ∈ { ,ll},

where the superscript discriminates between untar-

geted and targeted (here ll refers to ”least likely”). For

the re-implementation of SSMM and DNNM (Met-

zen et al., 2017), we use the parameters ε = 0.1 · 255,

α = 0.01 · 255, n = 60 and τ = 0.75. For SSMM, the

target image is chosen randomly for both datasets and

for DNNM, the class person is to be deleted for the

Cityscapes dataset. For the VOC dataset, the DNNM

attack makes no sense, since on the input images of-

ten only one object or several objects of the same class

are contained. For our experiments, we use a model

zoo

1

where we add the implementations of the adver-

sarial attacks FGSM, I-FGSM, SSMM and DNNM.

As we also use the pre-trained networks provided in

the repository, we run experiments for the Cityscapes

dataset on both models, DeepLabv3+ and HRNet, and

for VOC on the DeepLabv3+ network.

For the patch attack introduced in (Nesti et al.,

2022), we use the provided code with default param-

eters and consider two different segmentation mod-

els, BiSeNet and DDRNet, applied to the one tested

real world dataset (Cityscapes). Since we use the

cross-attack procedure (logistic regression) as detec-

tion model, i.e., we train the classifier on clean and

perturbed data attacked by an attack other than the

patch, we use the data obtained from the DeepLabv3+

1

https://github.com/LikeLy-Journey/SegmenTron

to train the detector and test on the DDRNet. For

the HRNet and the BiSeNet we proceed analogously,

since in each case the prediction performance (in

terms of mIoU) of both networks is similar.

As the Cityscapes dataset provides high-

resolution images (1024 × 2048 pixels) which require

a great amount of memory to run a full backward

pass for the computation of adversarial samples, we

re-scale image size for this dataset to 512 × 1024

when evaluating. In Figure 3, a selection of these

attacks is shown for the Cityscapes dataset and the

DeepLabv3+ network (or DDRNet for the patch

attack).

Evaluation Metrics. Our detection models provide

per image a probability p(x) of being clean (and

not perturbed). The image is then classified as at-

tacked if the probability exceeds a threshold κ for

which we tested 40 different values equally spaced

in [0,1]. The first evaluation metric we use is the

averaged detection accuracy (ADA) which is de-

fined as the proportion of images that are classi-

fied correctly. As this metric depends on a thresh-

old κ, we report the optimal ADA score obtained

by ADA

∗

= max

κ∈[0,1]

ADA(κ). Secondly, we com-

pute the area under the receiver operating character-

istic curve (AUROC) to obtain a threshold indepen-

dent metric. Lastly, we consider the true positive rate

while fixing the false positive rate on clean images to

5% (TPR

5%

).

5.2 Numerical Results

In the following, we study the attack success perfor-

mance and evaluate our adversarial attack detection

performance.

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

42

Table 1: APSR results for the semantic segmentation pre-

dictions on clean data.

DeepLabv3+ DDRNet HRNet BiSeNet

Cityscapes 6.84 4.00 5.48 5.26

VOC 2.92 - - -

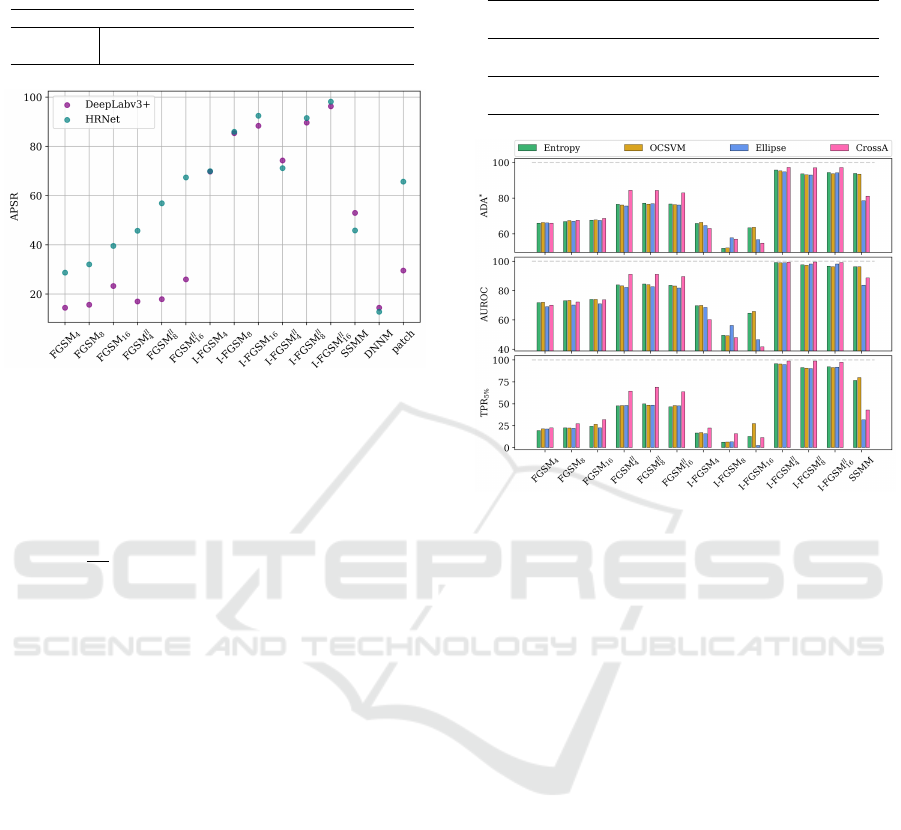

Figure 4: APSR results for the Cityscapes dataset and both

networks perturbed by different attacks.

Attack Performance. In order to access the per-

formance, i.e., the strength, of the attack generating

methods, we consider the attack pixel success rate

(APSR) (Rony et al., 2022) defined by

APSR =

1

|I |

∑

(i, j)∈I

argmax

y∈C

f (x

adv

;w)

y

i j

̸= y

GT

i j

, (11)

where y

GT

i j

denotes the true class of the pixel at loca-

tion (i, j). Note, this metric is the opposite of the ac-

curacy, as it focuses on falsely (and not correct) pre-

dicted pixels. If we replace x

adv

in eq. (11) by the

input image x, we obtain a measure how well the se-

mantic segmentation model performs on clean data.

The values for this measure for the different networks

and both datasets are given in Table 1. As expected,

we observe small APSR scores: all values are below

6.84%.

The APSR results for various attacks on the

Cityscapes dataset are shown in Figure 4. For all vari-

ations of the FGSM attack (untargeted vs. targeted,

non-iterative vs. iterative) the APSR increases with

larger magnitude of perturbation strength. Moreover,

targeted attacks lead to larger ASPR values than their

untargeted counterpart. The I-FGSM outperforms the

FGSM due to the iterative procedure of individual

perturbation steps. For the SSMM attack, the target

is a randomly chosen image from the dataset. The ex-

amples shown in Figure 3 (a) and (f) indicate, that

the correct and target classes of the clean and the

perturbed image coincide in several areas, such as

the street or the sky reflecting the nature of street

scenes. This may explain the relatively low ASPR

values around 50%. For the DNNM attack, the APSR

Table 2: APSR results for the VOC dataset and the

DeepLabv3+ network perturbed by different attacks.

FGSM

4

FGSM

ll

4

I-FGSM

4

I-FGSM

ll

4

SSMM

17.81 19.68 56.73 64.30 62.99

FGSM

8

FGSM

ll

8

I-FGSM

8

I-FGSM

ll

8

17.93 19.66 74.94 82.51

FGSM

16

FGSM

ll

16

I-FGSM

16

I-FGSM

ll

16

16.67 17.65 80.98 91.68

Figure 5: Detection performance results for the VOC

dataset and the DeepLabv3+ network.

scores are comparatively small since most parts of

the images are not perturbed but only one class is

to be deleted. We observe that the performance of

the patch attack has more or less impact depending

on the model. Comparing the two models, we find

that DeepLabv3+ is more robust against adversarial

attacks, as the APSR values are mostly smaller than

those of the HRNet network.

The results for the VOC dataset given in Ta-

ble 2 are qualitatively similar to the findings for the

Cityscapes dataset. However, the outcome for the

(targeted as well as untargeted) non-iterative FGSM

attack differs, i.e., the APSR scores are not increas-

ing with higher noise but stay at similar values. In

summary, for both datasets and the different network

architectures, most attacks achieve high APSR values

and greatly alter the prediction. Thus, the detection of

such attacks is extremely important.

Evaluation of Our Detection Method. The de-

fense approaches described above are created for and

tested only on a specific type of attacks. The sole pre-

sented detection approach (Xiao et al., 2018) is only

tested on stationary segmentation mask methods and

is computationally expensive due to the requirement

of multiple runs of the network. For this reason, nei-

ther this detection approach nor the defense methods

Uncertainty-Based Detection of Adversarial Attacks in Semantic Segmentation

43

Figure 6: Detection performance results for DeepLabv3+ (left) and the HRNet (right) trained on the Cityscapes dataset.

can be considered as suitable baselines.

In the following, we denote the single feature,

mean entropy based classification by Entropy, the or-

dinary one-class support vector machine by OCSVM,

the outlier detection method proposed in (Rousseeuw

and Driessen, 1999) by Ellipse and the logistic re-

gression model by CrossA. For training the regression

model, we chose data resulting from the iterative tar-

geted FGSM attack with a magnitude of noise of 2

as perturbed data (assuming that it might be advan-

tageous to have malicious data stemming from an at-

tack method with small perturbation strength, which

makes the attack harder to detect). The resulting clas-

sifier is then evaluated against all other attacks. Note,

the training of the detection models is light-weight

and no knowledge of the process for generating ad-

versarial examples is needed. For evaluating our de-

tection models, we use cross-validation with 5 runs.

The detection results for the VOC dataset are

shown in Figure 5 and for the Cityscapes dataset in

Figure 6. We observe comparatively lower detection

performance results for smaller perturbation magni-

tudes for the untargeted FGSM attacks which may

be explained by the fact that weaker attacks lead to a

change of prediction for a lower number of pixels and

are thus more difficult to detect. Targeted attacks are

better detected than untargeted attacks (with ADA

∗

values over 80% for all models). This could be due

to the procedure of picking the most unlikely class as

target which results in larger changes of the uncer-

tainty measures used as features. The detectors per-

form not that well on the adversarial examples result-

ing from the untargeted I-FGSM despite the strength

of the attack. An inspection of these examples shows

that during segmentation only a few classes are pre-

dicted (see Figure 3 (c)) with often low uncertainty for

large connected components which complicates the

distinction between clean and perturbed data. Inter-

esting are the high detection results of up to 96.89%

ADA

∗

values (obtained by CrossA for the Cityscapes

dataset and the DeepLabv3+ network) for the DNNM

attack as the perturbation targets only a few pixels,

(APSR values around 15%) and is therefore difficult

to detect. For the patch attack, it is noticeable that

the detection performance for the DeepLabv3+ net-

work on the Cityscapes dataset is low compared to the

HRNet which is explained by the higher disturbance

power of this attack on the HRNet, reflected in 36.11

percentage points higher APSR values. In general,

the detection capability for attacks on the HRNet is

stronger than for the DeepLabv3+, since the HRNet is

more easily to attack (see higher APSR values in Fig-

ure 4). Generally, our experiments highlight the high

potential of investigating uncertainty information for

successfully detecting adversarial segmentation at-

tacks. Already the basic method Entropy leads to

high accuracies often outperforming OCSVM and El-

lipse. However, across different attacks and datasets,

CrossA achieves ADA

∗

values of up to 100%. Thus,

our light-weight and uncertainty-based detection ap-

proach should be considered as baseline for future

methods.

6 CONCLUSION

In this work, we introduced a new uncertainty-based

approach for the detection of adversarial attacks on

semantic image segmentation tasks. We observed

that uncertainty information as given by the entropy

behaves differently on clean and perturbed images

and used this property to distinguish between the two

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

44

cases with very basic classification models. Our ap-

proach works in a light-weight and post-processing

manner, i.e., we do not modify the model nor need

knowledge of the process used by the attacker for gen-

erating adversarial examples. We achieve averaged

detection accuracy values of up to 100% for differ-

ent network architectures and datasets. Moreover, it

has to be pointed out, that our proposed detection ap-

proach is the first that was not designed for a specific

adversarial attack, but has a high detection capability

across multiple types. Given the high detection accu-

racy and the simplicity of the proposed approach, we

are convinced, that it should serve as simple baseline

for more elaborated but computationally more expen-

sive approaches developed in future.

ACKNOWLEDGEMENTS

This work is supported by the Ministry of Culture

and Science of the German state of North Rhine-

Westphalia as part of the KI-Starter research fund-

ing program and by the Deutsche Forschungsgemein-

schaft (DFG, German Research Foundation) under

Germany’s Excellence Strategy – EXC-2092 CASA

– 390781972.

REFERENCES

Agnihotri, S. and Keuper, M. (2023). Cospgd: a unified

white-box adversarial attack for pixel-wise prediction

tasks. 2, 4

Arnab, A., Miksik, O., and Torr, P. (2018). On the robust-

ness of semantic segmentation models to adversarial

attacks. In IEEE/CVF Conference on Computer Vi-

sion and Pattern Recognition (CVPR). 2, 3, 6

Bar, A., Lohdefink, J., Kapoor, N., Varghese, S., Huger, F.,

Schlicht, P., and Fingscheidt, T. (2021). The vulner-

ability of semantic segmentation networks to adver-

sarial attacks in autonomous driving: Enhancing ex-

tensive environment sensing. IEEE Signal Processing

Magazine. 1, 3, 6

Bryniarski, O., Hingun, N., Pachuca, P., Wang, V., and Car-

lini, N. (2022). Evading adversarial example detection

defenses with orthogonal projected gradient descent.

In International Conference on Learning Representa-

tions (ICLR). 4

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., and

Adam, H. (2018). Encoder-decoder with atrous sep-

arable convolution for semantic image segmentation.

In European Conference on Computer Vision (ECCV).

1, 2, 5

Cho, S., Jun, T. J., Oh, B., and Kim, D. (2020). Dapas

: Denoising autoencoder to prevent adversarial attack

in semantic segmentation. In International Joint Con-

ference on Neural Network (IJCNN). 3

Chollet, F. (2017). Xception: Deep learning with depthwise

separable convolutions. IEEE Conference on Com-

puter Vision and Pattern Recognition (CVPR). 5

Cisse, M., Adi, Y., Neverova, N., and Keshet, J. (2017).

Houdini: Fooling deep structured prediction models.

In Conference on Neural Information Processing Sys-

tems (NeurIPS). 2, 4

Cordts, M., Omran, M., Ramos, S., Rehfeld, T., Enzweiler,

M., Benenson, R., Franke, U., Roth, S., and Schiele,

B. (2016). The cityscapes dataset for semantic urban

scene understanding. In IEEE Conference on Com-

puter Vision and Pattern Recognition (CVPR). 2, 5

Everingham, M., Van Gool, L., Williams, C. K. I.,

Winn, J., and Zisserman, A. (2012). The

PASCAL Visual Object Classes Challenge

2012 (VOC2012) Results. http://www.pascal-

network.org/challenges/VOC/voc2012/workshop/

index.html. 2, 5

Feinman, R., Curtin, R. R., Shintre, S., and Gardner, A. B.

(2017). Detecting adversarial samples from artifacts.

2

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2015). Ex-

plaining and harnessing adversarial examples. In Ben-

gio, Y. and LeCun, Y., editors, International Confer-

ence on Learning Representations (ICLR). 2, 3

Gu, J., Zhao, H., Tresp, V., and Torr, P. (2022). Segpgd: An

effective and efficient adversarial attack for evaluating

and boosting segmentation robustness. In European

Conference on Computer Vision (ECCV). 2, 4

He, X., Yang, S., Li, G., Li, H., Chang, H., and Yu, Y.

(2019). Non-local context encoder: Robust biomedi-

cal image segmentation against adversarial attacks. In

AAAI Conference on Artificial Intelligence. 3, 6

Hendrycks, D. and Gimpel, K. (2016). A baseline for de-

tecting misclassified and out-of-distribution examples

in neural networks. 3

Khamaiseh, S. Y., Bagagem, D., Al-Alaj, A., Mancino,

M., and Alomari, H. W. (2022). Adversarial deep

learning: A survey on adversarial attacks and defense

mechanisms on image classification. IEEE Access. 2

Klingner, M., B

¨

ar, A., and Fingscheidt, T. (2020). Im-

proved noise and attack robustness for semantic seg-

mentation by using multi-task training with self-

supervised depth estimation. In IEEE/CVF Confer-

ence on Computer Vision and Pattern Recognition

Workshop (CVPRW). 2, 3, 6

Krizhevsky, A. (2009). Learning multiple layers of features

from tiny images. 2

Kurakin, A., Goodfellow, I. J., and Bengio, S. (2017). Ad-

versarial machine learning at scale. In International

Conference on Learning Representations (ICLR). 2,

3, 6

LeCun, Y. and Cortes, C. (2010). MNIST handwritten digit

database. 2

Maag, K., Chan, R., Uhlemeyer, S., Kowol, K., and

Gottschalk, H. (2022). Two video data sets for track-

ing and retrieval of out of distribution objects. In Asian

Conference on Computer Vision (ACCV), pages 3776–

3794. 3

Uncertainty-Based Detection of Adversarial Attacks in Semantic Segmentation

45

Maag, K. and Rottmann, M. (2023). False negative re-

duction in semantic segmentation under domain shift

using depth estimation. In International Joint Con-

ference on Computer Vision, Imaging and Computer

Graphics Theory and Applications. SCITEPRESS -

Science and Technology Publications. 3

Maag, K., Rottmann, M., and Gottschalk, H. (2020). Time-

dynamic estimates of the reliability of deep semantic

segmentation networks. In IEEE International Con-

ference on Tools with Artificial Intelligence (ICTAI).

3

Madry, A., Makelov, A., Schmidt, L., Tsipras, D., and

Vladu, A. (2018). Towards deep learning models re-

sistant to adversarial attacks. In International Confer-

ence on Learning Representations, (ICLR). 4

Metzen, J. H., Kumar, M. C., Brox, T., and Fischer, V.

(2017). Universal adversarial perturbations against

semantic image segmentation. In IEEE International

Conference on Computer Vision (ICCV). 2, 4, 6

Michel, A. and Ewetz, R. (2022). Gradient-based adver-

sarial attack detection via deep feature extraction. In

SoutheastCon. 2

Moosavi-Dezfooli, S.-M., Fawzi, A., and Frossard, P.

(2016). Deepfool: A simple and accurate method to

fool deep neural networks. In IEEE/CVF Conference

on Computer Vision and Pattern Recognition (CVPR).

4

Nakka, K. K. and Salzmann, M. (2020). Indirect local

attacks for context-aware semantic segmentation net-

works. In European Conference on Computer Vision

(ECCV). 2, 4

Nesti, F., Rossolini, G., Nair, S., Biondi, A., and Buttazzo,

G. C. (2022). Evaluating the robustness of seman-

tic segmentation for autonomous driving against real-

world adversarial patch attacks. In IEEE/CVF Win-

ter Conference on Applications of Computer Vision

(WACV). 2, 4, 6

Pan, H., Hong, Y., Sun, W., and Jia, Y. (2022). Deep dual-

resolution networks for real-time and accurate seman-

tic segmentation of traffic scenes. IEEE Transactions

on Intelligent Transportation Systems. 1, 2, 5

Rony, J., Pesquet, J.-C., and Ayed, I. B. (2022). Proxi-

mal splitting adversarial attacks for semantic segmen-

tation. 2, 4, 7

Rousseeuw, P. J. and Driessen, K. V. (1999). A fast algo-

rithm for the minimum covariance determinant esti-

mator. Technometrics. 5, 8

Sch

¨

olkopf, B., Williamson, R. C., Smola, A., Shawe-Taylor,

J., and Platt, J. (1999). Support vector method for

novelty detection. In Advances in Neural Information

Processing Systems. MIT Press. 5

Tibshirani, R. (1996). Regression shrinkage and selection

via the lasso. Journal of the Royal Statistical Society:

Series B. 5

Wang, J., Sun, K., Cheng, T., Jiang, B., Deng, C., Zhao,

Y., Liu, D., Mu, Y., Tan, M., Wang, X., Liu, W., and

Xiao, B. (2021). Deep high-resolution representation

learning for visual recognition. IEEE Transactions on

Pattern Analysis and Machine Intelligence. 5

Weerasinghe, P. S., Erfani, S. M., Alpcan, T., Leckie, C.,

and Kuijper, M. (2018). Unsupervised adversarial

anomaly detection using one-class support vector ma-

chines. International Symposium on Mathematical

Theory of Networks and Systems. 2

Xiao, C., Deng, R., Li, B., Yu, F., Liu, M., and Song, D.

(2018). Characterizing adversarial examples based on

spatial consistency information for semantic segmen-

tation. In European Conference on Computer Vision

(ECCV). 2, 3, 7

Xie, C., Wang, J., Zhang, Z., Zhou, Y., Xie, L., and Yuille,

A. L. (2017). Adversarial examples for semantic seg-

mentation and object detection. IEEE International

Conference on Computer Vision (ICCV). 2, 4

Xu, X., Zhao, H., and Jia, J. (2021). Dynamic divide-

and-conquer adversarial training for robust semantic

segmentation. In IEEE International Conference on

Computer Vision (ICCV). 3, 6

Yatsura, M., Sakmann, K., Hua, N. G., Hein, M., and Met-

zen, J. H. (2022). Certified defences against adversar-

ial patch attacks on semantic segmentation. 2, 3

Yu, C., Wang, J., Peng, C., Gao, C., Yu, G., and Sang, N.

(2018). Bisenet: Bilateral segmentation network for

real-time semantic segmentation. In European Con-

ference on Computer Vision. 5

VISAPP 2024 - 19th International Conference on Computer Vision Theory and Applications

46