AR Authoring: How to Reduce Errors from the Start?

Camille Truong-Allié¹ ᵃ, Martin Herbeth² and Alexis Paljic¹ ᵇ

¹ Centre de Robotique, Mines Paris - PSL, Paris, France

² Spectral TMS, Évry-Courcouronnes, France

Keywords:

Augmented Reality, Task Assistance, AR Authoring Interface, Knowledge Transmission.

Abstract:

Augmented Reality (AR) can be used to efficiently guide users through procedures by overlaying virtual content onto the real world. To facilitate the use of AR for creating procedures, multiple AR authoring tools have been introduced. However, they often assume that authors digitize the procedure perfectly the first time, which is rarely the case. We focus on how AR authoring tools can support authors during procedure formalization. We introduce three authoring methods: a video-based method, where a video is recorded before procedure digitization to improve procedure recency; an in-situ method, where the digitization is done in the procedure environment to improve context; and a baseline method, where AR authors digitize from memory. We assess the quality of the procedures resulting from these authoring methods with two simple yet underexplored metrics: the number of errors and the number of versions until the final procedure. We collected feedback from AR authors in a field study to validate the significance of these metrics. We found that participants’ performance was better with the video-based method, followed by the in-situ and then the baseline methods. The field study showed the advantages of the different methods depending on the use case and validated the importance of measuring digitization errors.

1 INTRODUCTION

Augmented Reality (AR), with its ability to overlay virtual content onto real-world elements, has already proven its usability and efficiency in instructing users about procedures they have to perform (Fite-Georgel, 2011). For example, Head Mounted Displays (HMDs) enable users to keep their hands free, so they can perform a task while following AR instructions, such as animated 3D models or videos, superimposed on the physical environment.

A procedure is typically a series of steps that need to be completed to achieve a goal. In an industrial context, a procedure is often a description of these steps made available to workers as digital documents or on paper. A well-written AR procedure should ensure workers’ safety and efficiency.

To help virtual content creators (AR authors) design these procedures, multiple AR authoring tools have been proposed. Figures 1 and 2 show an example of one authoring tool. Such tools enable an AR author with no programming skills to create software which provides AR instructions (Kearsley, 1982). It could be possible, for example, to type text and arrange pictures or 3D models in 3D space without code. Almost all works on AR authoring tools assume that AR authors know the procedure perfectly well, know how best to explain it, and digitize it correctly the first time; this is often not the case in daily practice. Rather, they more likely loop between authoring the content and reviewing it from the operator view (Scholtz and Maher, 2014; Gerbec et al., 2017). It is for instance common to forget a step, or to realize while following the AR-assisted procedure that another formulation would have been clearer.

a https://orcid.org/0000-0002-0973-7004

b https://orcid.org/0000-0002-3314-951X

In this work, we are interested in the authoring tool conditions that best help AR authors formalize procedures. We propose three methods that differ in the conditions under which AR authors digitize the procedure: a method in which they perform the procedure before digitizing it (video-based method, where AR authors first record themselves executing the procedure); a method in which the procedure is digitized at the procedure location (in-situ method); and a method where AR authors receive no support when digitizing the procedure (baseline method, where AR authors digitize the procedure off-site, from memory).

We start by reviewing the different authoring tools that we found in the literature, and how they have been evaluated. Then, we introduce the three above-mentioned authoring methods and compare them in a user study. Finally, we present conclusions, limitations and future work.

Truong-Allié, C., Herbeth, M. and Paljic, A.

AR Authoring: How to Reduce Errors from the Start?.

DOI: 10.5220/0012303200003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 408-418

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2 RELATED WORK

2.1 Difficulties in Authoring Tools

We are interested in authoring tools specifically de-

signed for procedural tasks, enabling the digitization

of AR procedures as step-by-step instructions. Exist-

ing AR authoring tools focus on easing AR content

creation, AR content placement, or procedure organi-

zation.

2.1.1 AR Content Creation

AR content creation can be time-consuming and require specific skills, for example to create complex 3D models (Gattullo et al., 2019). To help AR authors create AR content without the need for specific skills, three main strategies exist. 1. Some authoring tools propose a database of AR content (typically, 3D models) from which AR authors can pick the appropriate content (Knopfle et al., 2005; Blattgerste et al., 2019). 2. Some authoring tools automatically create the AR content with computer vision, from a capture of the AR author’s gestures or environment (Chidambaram et al., 2021; Pham et al., 2021), or of the product to assemble in the case of assembly (Zogopoulos et al., 2022). 3. Finally, some AR authoring tools rely on simple AR content which does not require advanced skills to create: video, picture, text, and simple 3D models like arrows (Lavric et al., 2021; Blattgerste et al., 2019).

2.1.2 AR Content Placement

Another difficulty when creating AR content is that it should be integrated into the real world. Manipulating the AR content and placing it accurately is not an easy task. Multiple works propose to help AR authors with AR content placement. It is possible to associate physical markers with virtual representations that serve as references for the placement of AR content. These representations represent either the virtual elements to display in AR directly (Zauner et al., 2003), or the real environment, in which the virtual content can be placed on a desktop application (Gimeno et al., 2013). It is also possible to place the virtual content with 2D interfaces on devices that AR authors may be familiar with: a computer, with positioning of the virtual content in a 2D or 3D graphical interface (Zauner et al., 2003; Haringer and Regenbrecht, 2002; Knopfle et al., 2005; Bégout et al., 2020), or a mobile phone, used in a joystick controller fashion (Blattgerste et al., 2019). Finally, some authoring tools automatically position the virtual content, based on author positioning in the real world (Chidambaram et al., 2021) or automatic scene analysis (Pham et al., 2021; Erkoyuncu et al., 2017).

2.1.3 Procedure Organization

Creating and placing the AR content is not enough to make AR procedures. AR authors need to organize the procedure’s structure so that the whole procedure is coherent. To help AR authors organize the procedure, some tools propose an automatic segmentation of the procedure into steps, and one tool proposes a bi-directional system which enables operators to correct errors AR authors could have made in the procedure.

In the context of assembly, it is possible to automatically detect the assembly steps, as they all have a similar structure that consists of adding parts to the assembly. Step detection can be done from a digital twin of the final product to assemble (Zogopoulos et al., 2022) or with computer vision (Bhattacharya and Winer, 2019; Funk et al., 2018). For tasks which are more complex than assembly, the Ajalon tool enables automatic step detection at the cost of a higher authoring tool complexity (Pham et al., 2021). This tool helps AR authors organize their procedure as a finite-state machine from which adaptive software is derived. The resulting software automatically detects the step the operator is performing, gives the corresponding instructions and indicates if the step is wrongly performed. Finally, ACARS (Authorable Context-aware Augmented Reality System) helps AR authors create AR procedures, not with automatic step segmentation, but with a bi-directional system that enables operators to point out errors that have been made in the procedure (Zhu et al., 2013). This tool consists of two parts: an offline authoring tool for AR authors, which enables them to create context-aware software from static rules, and an in-situ authoring tool for operators, in which they follow the AR instructions, interact with them and update them. The static rules make it possible to select the right content depending on the detected input context (e.g. choosing the level of detail depending on expertise level).

2.1.4 Conclusion

The related work shows that, while multiple works propose simple tools for AR content creation and placement, fewer address organizing this content in a coherent structure. Those that do are based on automatic step segmentation, which is still challenging and limited to simple tasks like assembly, or on specific applications that cannot be generalized. To the best of our knowledge, ACARS is the only tool that considers AR authors’ fallibility and enables operators to correct their errors. This feature is important yet underexplored in the literature. In this work, we focus on an earlier phase of the authoring process. We aim to prevent these errors from occurring in the first place, rather than simply correcting them afterward.

We are interested in how AR authoring tools can be designed to best help AR authors organize the procedure. We consider the moment when they formalize their procedure, and question how to organize the authoring tool around this moment. To do so, we propose tools which can improve memory recall. Recall is facilitated by practice, recency and context (what is present in the person’s focus of attention) (Budiu, 2014). We propose two authoring methods. One is a video-based authoring method, with which the AR author captures a first-person video of the procedure before formalizing it; it is designed to improve recall through recency. The other is an in-situ authoring method, where the AR author formalizes the procedure at the location of the procedure, enabling them to observe the procedure environment and, if desired, even perform the procedure; it is designed to improve recall through context.

2.2 Evaluation Methods

Our work focuses on how good the digitized procedure is. We therefore need to understand what a good procedure means. To answer this, we look at how non-AR procedures and AR authoring tools have been evaluated.

2.2.1 Non-AR Procedure Evaluation

Traditional paper procedures can be evaluated in

terms of risk of human error (Kirwan, 1997; Gertman

et al., 1992; Gertman et al., 2005) or complexity (Park

and Jung, 2007).

Human Reliability Analysis methods have been proposed to evaluate human error probabilities given a procedure. They all rely on an expert analysis of the procedure and/or its environment, leading to subjective measures and possible inconsistencies (Jang and Park, 2022), and to a time-consuming analysis for a large set of procedures. TACOM, which stands for TAsk COMplexity, is a measure which gives a score of task complexity from quantifiable measures (Park and Jung, 2007). The measures require knowledge about the task context and a time-consuming analysis. For example, for each step of the task, logic complexity and information complexity should be evaluated. To alleviate the time-consuming analysis required by Human Reliability Analysis methods and TACOM scores, machine learning and natural language processing-based algorithms have been proposed to evaluate procedure complexity based on procedure structure (Sasangohar et al., 2018; McDonald et al., 2023).

2.2.2 Authoring Tools Evaluation

Works on AR authoring tools propose different meth-

ods for their evaluation. The evaluations mainly con-

sider AR author experience, the quality of the result-

ing AR procedure by considering the operator expe-

rience when following it, and, if the authoring tool

has an automated part, its error rate. AR author ex-

perience is usually measured in terms of procedure

creation time, cognitive load and usability of the tool.

Operator experience is evaluated in terms of perfor-

mance (task completion time and error rate), cognitive

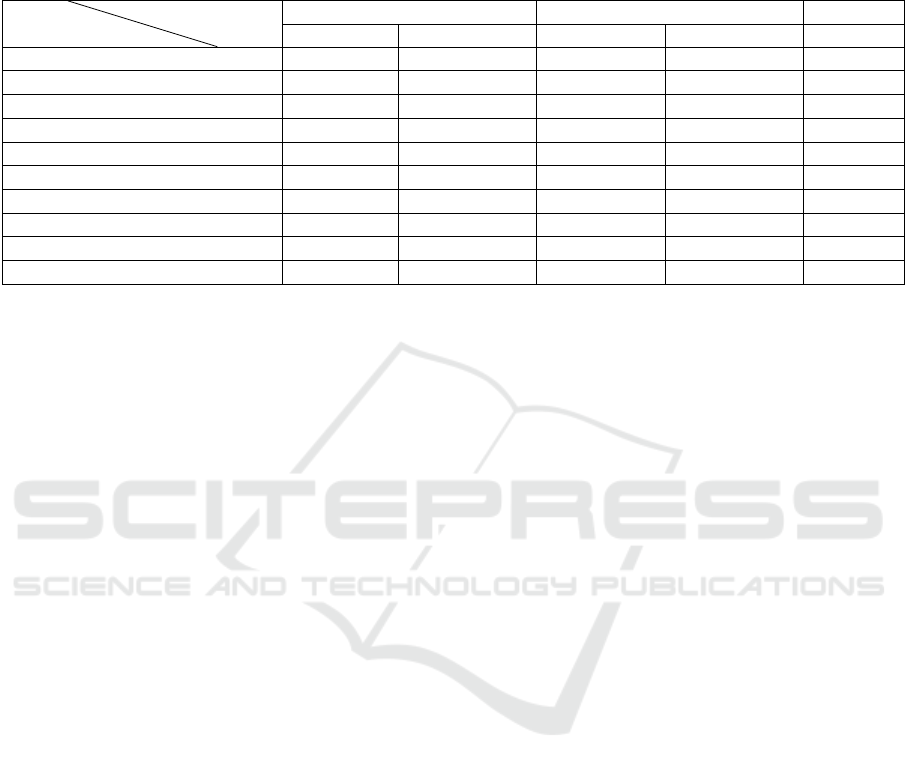

load and usability of the resulting procedure. Table

1 summarizes the different AR authoring tools eval-

uation methods used by the previous work. It only

includes the authoring tools that have been evaluated.

2.2.3 Conclusion

There is no straightforward method to evaluate the quality of a procedure organization, and the existing methods focus on whether a procedure is clear rather than correct.

The evaluation of traditional non-AR procedures aims to clarify an existing procedure which is already correct. It requires either complex analysis skills or machine learning analysis that can be difficult to implement. The evaluation of AR authoring tools indirectly assesses the resulting procedure quality through operators’ experience when following it. No metric directly related to the procedure quality is used. Finally, with the exception of ACARS, all the existing AR authoring tools start from a finalized procedure, where the only concern is to digitize it with AR. They do not consider the process in which the procedure is created and improved before reaching its final state. Zhu et al., with ACARS, propose a method to correct authoring errors, but they did not evaluate how this method improves the final procedure.

In this work, we propose two simple metrics to evaluate the quality of a digitized procedure organization: the number of authoring errors made by AR authors until they are satisfied with the final procedure, and the number of versions they make to achieve this final procedure. We used these metrics to compare three different authoring methods, and gathered AR authors’ feedback in a field study to validate the importance of these two metrics.

HUCAPP 2024 - 8th International Conference on Human Computer Interaction Theory and Applications

Table 1: Existing AR authoring tools and their evaluation: With AR author, operator or tool performances. TCT stands for Task Completion Time, RT for Reading Time, QMC for Quantity of Manually Created Content, ER for Error Rate, EP for Error in Positioning, CQ for Custom Questionnaire, SUS for System Usability Scale, UEQ for User Experience Questionnaire, NX for NASA-TLX, R for Recall, P for Precision, IoU for Intersection over Union (for object detection), TT for Training/Testing Time (for machine learning model).

Paper

Eval. AR author Operator Tool

Objective Subjective Objective Subjective Objective

(Blattgerste et al., 2019) SUS, UEQ TCT, ER SUS, NX

(Chidambaram et al., 2021) TCT CQ, SUS, NX TCT, ER CQ, SUS, NX

(Pham et al., 2021) TCT, QMC CQ P, R, IoU

(Erkoyuncu et al., 2017) TCT TCT

(Lavric et al., 2021) TCT, RT, ER CQ, SUS

(Gimeno et al., 2013) TCT, EP CQ TCT

(Bégout et al., 2020) TCT

(Bhattacharya and Winer, 2019) P, R, TT

(Funk et al., 2018) TCT NX TCT, ER NX

(Zhu et al., 2013) CQ

3 AUTHORING METHODS

The authoring methods described in this section are inspired by the idea that recall can be improved by recency and context. They are all based on the same two applications: a desktop application and an AR application.

3.1 Baseline

The baseline authoring method consists of alternating between the desktop application, in which AR authors set and describe all the elements that constitute the procedure, and the AR application on HMD, where AR authors can visualize the elements and place them in the real world (Spectral TMS, ).

The desktop application first lets AR authors write the whole procedure, step by step. Each step can have a title, a textual description, pictures, videos and 3D models attached to it. We chose these virtual content types as they are the most used and preferred ones in industry (Gattullo et al., 2020).

After writing the procedure, the AR authors can use the AR application to place the virtual content within the physical environment. If they notice errors during this step, they have to go back to the desktop application to correct them in a new version. Then, they use the AR application again to place any new virtual content and verify that no error is left, and so on.
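To make the structure of a digitized procedure concrete, the following Python sketch models a step with the content types listed above and keeps a snapshot of each earlier version. It is only an illustration: the class names `Step` and `Procedure`, the field names, the file-name strings and the `start_new_version` helper are all hypothetical and do not describe the actual data model of the authoring tool.

```python
from __future__ import annotations

from copy import deepcopy
from dataclasses import dataclass, field


@dataclass
class Step:
    """One procedure step with the content types offered on desktop."""
    title: str
    description: str = ""
    pictures: list[str] = field(default_factory=list)   # hypothetical file names
    videos: list[str] = field(default_factory=list)     # hypothetical file names
    models_3d: list[str] = field(default_factory=list)  # hypothetical file names


@dataclass
class Procedure:
    """Ordered steps, plus snapshots of the versions that preceded them."""
    steps: list[Step] = field(default_factory=list)
    history: list[list[Step]] = field(default_factory=list)

    def start_new_version(self) -> None:
        """Snapshot the current steps before correcting them on desktop."""
        self.history.append(deepcopy(self.steps))


# First version written on desktop, then reviewed in AR.
procedure = Procedure(steps=[Step("Insert a capsule"), Step("Press the button")])

# An omission is noticed during the AR review: open a new version and fix it.
procedure.start_new_version()
procedure.steps.insert(0, Step("Fill the water container"))
```

Keeping snapshots rather than overwriting the steps is what later allows consecutive versions to be compared.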

3.2 Video-Based Authoring

In the video-based authoring method, AR authors record themselves performing the task from a first-person perspective with an HMD. Then, they use the desktop authoring tool described in the baseline, with the video they just made as a support, to write a first version of the procedure. The authoring steps are then the same as the ones in the baseline method.

This method can be extended into realistic author-

ing tools, like Taqtile (Taqtile ), or Ajalon (Pham

et al., 2021), where the expert video is the main

medium to create the whole AR procedure. For ex-

ample, the video can be used for content creation and

placement with automatic object detection and proce-

dure organization can be made even easier with auto-

matic step segmentation.

3.3 In-situ Authoring

With the in-situ authoring method, AR authors are at the procedure location when writing the procedure on the desktop application. The steps are then the same as in the baseline, but carried out at the procedure location. This way, authors can look at the procedure elements and even perform the procedure to improve their recall.

This method can be extended into more complex authoring tools that rely on the in-situ location of the AR author, for example by making it possible to create virtual content on the spot, like pictures or videos (Lavric et al., 2021; Blattgerste et al., 2019).


4 EXPERIMENT

4.1 Objective

Each of the methods we proposed aims to represent a diverse range of authoring tools; we designed the methods and the corresponding authoring tool to be as generic as possible. We needed the three methods to be comparable, so that we could measure the effects of their characteristics alone: for the video-based method, the effect of adding video capture before digitization, and for the in-situ method, the effect of having the AR author physically present on-site.

The objective of this experiment is to capture the effect of these characteristics on participants’ procedure creation time, number of errors, number of versions until the final one, and cognitive load.

4.2 Variables

We measured procedure creation time, video capture included for the video-based authoring method. We used NASA-RTLX (Byers, 1989) to assess participants’ cognitive load at the end of the experiment. The score was calculated from linear scales from 1 to 100. We measured the number of errors and the number of versions before the final version of the procedure. A version corresponds to the state of the procedure after one loop between editing the procedure (mainly on the desktop application) and reviewing it (on the AR application). The number of errors between two consecutive versions is the number of changes made by the participant between the two versions. The total number of errors is the sum of all the changes between consecutive versions. This means that participants themselves decide whether there was an error in their procedure, by correcting it. We deemed this to be the most effective method for error measurement since the definition of an error is subjective and therefore tricky to evaluate. For instance, one person might perceive a step as too simple to mention, while another might view the omission of this same step as an error. By using the number of changes between two versions, we measured the number of self-detected errors, that is, what participants actually consider an error.
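The two metrics defined above can be computed mechanically once each version is stored. The sketch below is a minimal illustration under stated assumptions: a version is represented as a dictionary mapping a hypothetical step identifier to the step text, and a "change" is counted as one step added, removed or reworded. The text does not specify how individual changes were counted, so this counting rule and the function names are assumptions.

```python
from __future__ import annotations


def count_changes(previous: dict[str, str], current: dict[str, str]) -> int:
    """Count steps added, removed or reworded between two versions."""
    added = current.keys() - previous.keys()
    removed = previous.keys() - current.keys()
    modified = {
        key for key in previous.keys() & current.keys()
        if previous[key] != current[key]
    }
    return len(added) + len(removed) + len(modified)


def authoring_metrics(versions: list[dict[str, str]]) -> tuple[int, int]:
    """Return (versions before the final one, total self-detected errors)."""
    errors = sum(
        count_changes(older, newer)
        for older, newer in zip(versions, versions[1:])
    )
    return len(versions) - 1, errors


# Hypothetical history of one participant's coffee procedure.
versions = [
    {"s1": "Insert a capsule", "s2": "Press the coffee button"},
    {"s0": "Fill the water container",
     "s1": "Insert a capsule", "s2": "Press the coffee button"},
    {"s0": "Fill the water container",
     "s1": "Insert a new capsule", "s2": "Press the coffee button",
     "s3": "Empty the used-capsule container"},
]

print(authoring_metrics(versions))  # (2, 3)
```

In this example the participant adds one step in the second version, then rewords one step and adds another in the third, giving two versions before the final one and three self-detected errors.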

4.3 Participants

Thirty participants took part in the experiment, 7 women and 23 men. They came from diverse firms and from a computer science research lab. They were 29 years old on average (std 3). When asked to rate, from 0 to 4, their familiarity with AR and with the task to perform (making a coffee with a capsule machine), they rated on average their familiarity with AR at 1.4 (std 1.7) and with the coffee machine at 2.6 (std 1.5).

Some participants were not familiar with the coffee machine; they were consequently not assigned the baseline condition.

4.4 Experimental Setup

Procedure to Digitize. Participants’ task was to create a procedure explaining how to make a coffee with a capsule machine, using one of the three authoring methods (between-subjects study). This task was chosen because it is common enough that many people can be considered experts, but still complex enough for participants to make mistakes. For each participant, the coffee machine’s water container was empty (and needed to be filled) and its used-capsule container was overfilled (and needed to be emptied): those were the two main causes of errors.

Desktop Application. Because the experiment does not focus on virtual content creation, we provided participants with dummy templates for the virtual content they could use if needed: pictures, videos and 3D models. Those were dummy media with an icon representing what the actual media should have been: a camera for the picture, a video camera for the video and a cube in a reference frame for the 3D model (see what the templates look like in Figure 1). In addition to these virtual content types, participants could also indicate the location of physical elements by placing 3D spheres, as shown in Figure 2. The virtual content and physical element locations can be created by clicking on the “Add an equipment” and “Adding media” buttons.

AR Application. On the AR application, all the vir-

tual content can be placed in the physical environ-

ment; Figure 2 shows two examples of virtual content

for two different steps. The title and textual descrip-

tion are displayed on a dashboard that participants can

pin wherever they like. The AR part of the authoring

tool is not evaluated in the experiment and is the same

for all the authoring methods.

4.5 Procedure

Figure 1: The authoring tool desktop application, where it is possible to create procedure steps and describe them with a title, a text, real-world element locations, pictures, videos and 3D models.

Figure 2: Two examples of the virtual content that is displayed on the AR application. On the left are indications of real-world elements with orange spheres. On the right is a dummy picture that the participant placed as if it were the actual picture they wanted to use.

Participants were first shown how the authoring tool worked by creating a very simple procedure (drawing a smiley on a whiteboard). They created the steps on the desktop application, and then watched a tutorial video explaining how to use the AR application. After this, they carried out the actual experiment with one of the three authoring methods. They created their procedure on the desktop application, placed the virtual content and reviewed it on the AR application, corrected it on desktop in a new version if needed, and so on. They were asked to stop when satisfied. Finally, they answered the NASA-RTLX questionnaire and were free to leave comments about the experiment.
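For readers unfamiliar with the raw (unweighted) variant of NASA-TLX, its score is commonly taken as the plain average of the six subscale ratings, without the pairwise weighting step of the full questionnaire. The sketch below assumes this averaging and the 1 to 100 range mentioned in Section 4.2; the function name, the dictionary keys and the example ratings are hypothetical.

```python
# The six NASA-TLX subscales (keys are hypothetical identifiers).
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict) -> float:
    """Unweighted mean of the six subscale ratings, each in [1, 100]."""
    for name in SUBSCALES:
        if name not in ratings:
            raise ValueError(f"missing subscale: {name}")
        if not 1 <= ratings[name] <= 100:
            raise ValueError(f"rating out of range for {name}")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


# Hypothetical answers from one participant.
ratings = {
    "mental_demand": 40,
    "physical_demand": 10,
    "temporal_demand": 30,
    "performance": 20,
    "effort": 50,
    "frustration": 30,
}
score = raw_tlx(ratings)  # 30.0
```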

4.6 Data Analysis

When the assumptions for the one-way ANOVA were met (mainly normality and homoscedasticity), we used it to analyze the data; otherwise, the nonparametric Kruskal-Wallis test was used. The procedure creation time and NASA-RTLX data are continuous, normal (Shapiro-Wilk test p-values are respectively 0.7 and 0.9) and homoscedastic (Levene’s test p-values are 0.4 and 0.1). We could therefore perform one-way ANOVA for these variables. The number of versions and number of errors are both categorical data; we used the Kruskal-Wallis test.
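As an illustration of the nonparametric test mentioned above, the following sketch computes the Kruskal-Wallis H statistic with the usual tie correction, using only the Python standard library. It is restricted to three groups, for which the chi-square approximation has 2 degrees of freedom and the p-value reduces to exp(-H/2). The function name and the example counts are hypothetical and are not the study data; in practice a statistics package would normally be used.

```python
from __future__ import annotations

import math
from collections import Counter


def kruskal_wallis_3(groups: list[list[float]]) -> tuple[float, float]:
    """Return (H, p) for exactly three groups, with tie correction.

    p uses the chi-square approximation with 2 degrees of freedom,
    whose survival function is exp(-H / 2). The sketch assumes the
    observations are not all identical (else the correction is zero).
    """
    if len(groups) != 3:
        raise ValueError("this sketch only handles three groups")
    counts = Counter(value for group in groups for value in group)
    n = sum(counts.values())

    # Assign each distinct value the average of the ranks it occupies.
    rank_of = {}
    position = 0
    for value in sorted(counts):
        count = counts[value]
        rank_of[value] = position + (count + 1) / 2
        position += count

    rank_term = sum(
        sum(rank_of[value] for value in group) ** 2 / len(group)
        for group in groups
    )
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n).
    correction = 1 - sum(c**3 - c for c in counts.values()) / (n**3 - n)
    h /= correction
    return h, math.exp(-h / 2)


# Hypothetical numbers of versions per authoring method.
baseline = [3, 2, 4, 3]
video_based = [1, 2, 1, 2]
in_situ = [2, 3, 2, 2]
h_stat, p_value = kruskal_wallis_3([baseline, video_based, in_situ])
```

The test works on ranks rather than raw values, which is why it remains applicable to small counts such as the number of versions or errors.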

4.7 Results

4.7.1 Effect of Authoring Methods

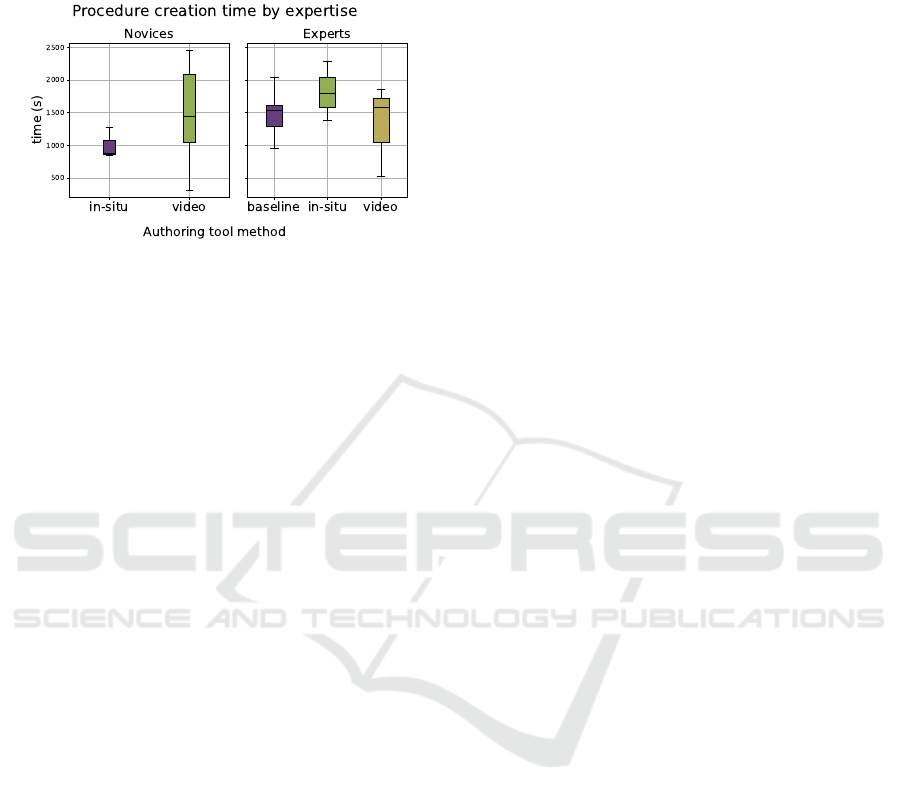

Participants performed best with the video-based au-

thoring method for all the metrics, as shown in Fig-

ure 3, although no statistically significant results were

found (see Table 2).

Figure 3: Boxplots of participants results between the dif-

ferent authoring methods. Procedure creation time is the

time taken by participants to reach the final version of the

procedure, video recording included for the video-based

method. NASA-RTLX assesses cognitive load (Byers,

1989). The number of versions is the number of versions

participants made before reaching the final procedure. The

number of errors is the number of errors they self-detected

within their procedure before reaching the final procedure.

Table 2: P-values of the Kruskal-Wallis tests for the effect of authoring method on procedure creation time, NASA-RTLX, number of versions and number of errors.

| Measure | P-value |
| --- | --- |
| Procedure creation time | 0.74 |
| NASA-RTLX score | 0.27 |
| Number of versions | 0.67 |
| Number of errors | 0.56 |

4.7.2 Effect of Expertise

In Section 4.7.1, we did not consider the effect of par-

ticipants’ expertise on their performances. Indeed, we

found in a prior analysis that adding participants’ ex-

pertise in the data modeling did not significantly im-

prove it. This result was obtained with the ANOVA

function from the R language, by comparing a model

with participants’ expertise and one without.
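For reference, when two nested linear models are compared, the standard test is an F-test on the reduction in residual sum of squares. The text does not state which model family was fitted, so the formula below is given under the assumption of ordinary linear models. Here RSS_0 and p_0 denote the residual sum of squares and number of parameters of the model without expertise, RSS_1 and p_1 those of the model with expertise, and n the number of observations; these symbols are introduced for illustration only.

```latex
F = \frac{(\mathrm{RSS}_0 - \mathrm{RSS}_1) / (p_1 - p_0)}
         {\mathrm{RSS}_1 / (n - p_1)}
```

Under the null hypothesis that expertise adds no explanatory power, F follows an F distribution with (p_1 - p_0, n - p_1) degrees of freedom; a large p-value means that the richer model is not a significant improvement.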

Yet, when separating participants into novices (coffee expertise under 3) and experts, different trends were observed between the two groups for the procedure creation time. This is the only variable for which two clearly different trends can be observed. In general, novices created the procedure faster with the in-situ method than with the video-based method, whereas experts created the procedure more slowly with the in-situ method than with the video-based and the baseline methods, as illustrated by Figure 4.

Figure 4: Boxplots of procedure creation times by author-

ing methods and participants’ expertise. Baseline was not

proposed to novices as they were not able to write a proce-

dure without a video or being in-situ.

4.8 Conclusion

Participants’ performance was best (although not significantly) on all the metrics with the video-based authoring method, followed by the in-situ authoring method and finally the baseline. Novices and experts show similar trends except for creation time, where novices are faster with the in-situ method than with the video-based method.

4.9 Discussion and Limitations

Participants had better results with the video-based method. Even with the video capture time included in the procedure creation time, participants were faster with the video-based than with the in-situ method. This suggests that requiring AR authors, even experts, to perform the procedure before digitizing it saves them time. This is probably due to the video forcing them to perform the task, while with the in-situ authoring method, they were free to do as they pleased. We indeed noticed that only a few participants actually performed the procedure with the in-situ method; most of them mimicked it or mentally reviewed the steps to perform by looking at the environment. This reflects what real use would be, as we told participants to do as they wanted.

By contrast, novices took more time with the video-based than with the in-situ method. This can be explained by the video recording being a learning moment for novices rather than a reminder. When recording the video, novices were only just discovering the task, which probably was not enough to build a mental version of the procedure to perform. Novices might have started to formalize it only while using the desktop application. And there, contrary to the in-situ condition, they did not have the procedure environment as a reminder. More work would be required to verify this hypothesis.

The data was slightly biased because no novice was assigned the baseline. This should have had only a limited impact on our results, as we showed that experts and novices shared similar trends most of the time. Additionally, what interests us most is the difference between video-based and in-situ, and novices were equally distributed between these conditions.

4.10 Future Works

Future work could improve the video-based and in-situ methods that we proposed. In this direction, it could be interesting to better understand the role of recall within the different methods. In our work, we did not specifically assess how recency and context affected recall. Investigating these factors could provide insights into why the video-based method outperformed the in-situ one and guide the development of AR authoring tools that more effectively exploit the benefits of recency and/or context. This could be done for instance by varying the degrees of context and recency provided to participants when digitizing a specific procedure.

It could also be interesting to analyze the evolution of participants’ knowledge during procedure digitization. This would make it possible to identify the moments when participants formalize the procedure, and to compare how fast participants acquire or recall knowledge in the different authoring conditions. Knowledge could be assessed subjectively, by having participants grade their confidence in their capacity to digitize the procedure from memory, or objectively, with questionnaires about the procedures.

In this experiment, we measured procedure qual-

ity in terms of errors that are self-detected by AR au-

thors and of number of versions they made before be-

ing satisfied with the procedure. These two metrics

have the advantage of being simple to measure and to

straightforwardly represent a limitation of the AR au-

thoring tool, but they do not measure how well a pro-

cedure is organized. It would be interesting to mea-

sure procedure complexity using the metrics and tools

detailed in Section 2.2.1.

Finally, in this work, we focused on recall, but other cognitive processes that help AR authors formalize a procedure could have been considered, e.g. mental imagery, logical reasoning or analytical thinking. They would have led to other possible designs. As an example, mental imagery involves creating mental representations of concepts, objects, or processes (Bronkhorst et al., 2020), and it has been shown that providing thematic content improves mental imagery and therefore problem-solving skills (Clement and Falmagne, 1986). Consequently, it could be interesting to design authoring tools that propose thematic content (e.g. elements useful for the procedure) to improve mental imagery and logical reasoning.

5 FIELD STUDY AT INDUSTRIAL SITES

5.1 Objectives

After the experiment described in Section 4, we performed a field study in which several AR authors from 7 different industrial sites and 3 firms were interviewed. The field study had two objectives. The first objective was related to the authoring methods: we wanted to make sure that they are viable and represent concrete uses of AR authoring tools. The second objective was related to the metrics we used to compare the authoring methods (number of errors and versions): we wanted to verify their actual relevance for assessing the quality of an authoring tool.

5.2 Setup

During the interviews, we asked AR authors how they digitize their procedures. We asked them about the context and use case for which they digitize a procedure, the existing materials that they use, and their digitization method. We then asked them about the video-based and in-situ methods: whether they find them interesting and under which conditions. Finally, we asked whether they needed multiple versions before reaching the final procedure. AR authors digitize their procedures using the two applications described in Section 3.1. To complete digitization, they need to create AR content (text, picture, video, or 3D model), organize their procedure into steps, and place the AR content. During the interviews, we focused on AR content creation and procedure organization.

5.3 Results

5.3.1 Authoring Process

Digitization Context. AR authors digitize proce-

dures in different contexts and for different reasons.

Two of them are procedure creators: when new prod-

ucts or machines are created, they design and digitize

the AR procedure to assemble or use them. Three

of them digitize existing procedures with the help of

already existing materials (but possibly out-of-date).

Finally, two of them digitize existing procedures but

without any prior material. The summary of how AR

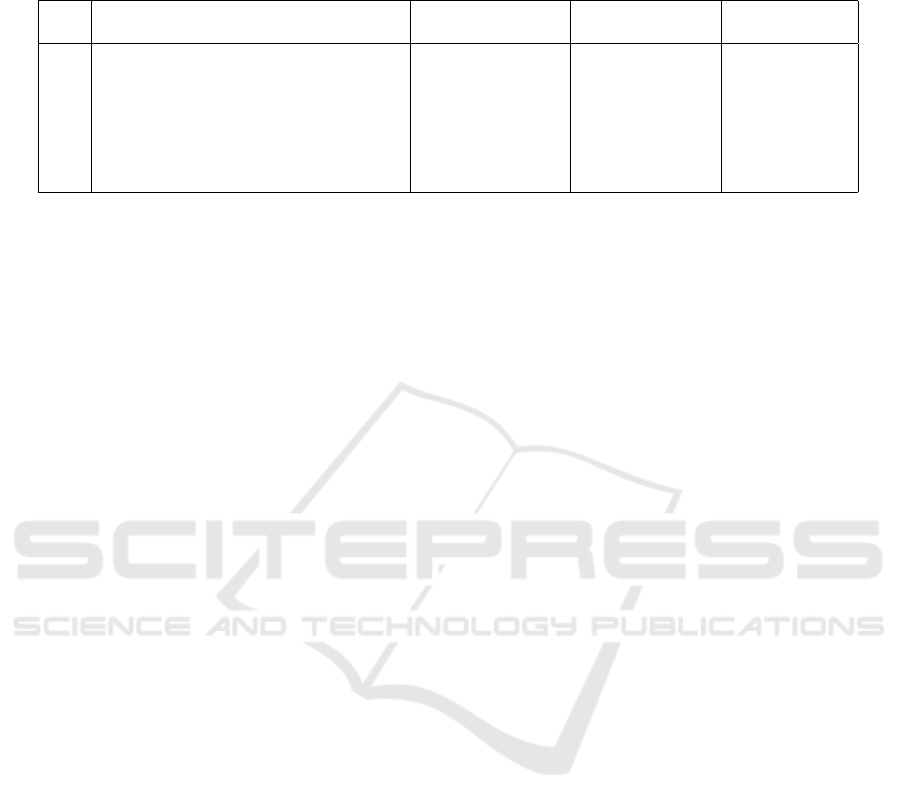

authors use the authoring tool is given by Table 3.

Expert Video Prior to Digitization. Three out of seven AR authors create an expert video prior to procedure digitization. They use it for two reasons: to make sure that they digitize an up-to-date procedure (the expert video is the most recent performance of the procedure), and to create AR content for the procedure, such as chunks of the expert video for each step or annotated screenshots.

AR Content Creation in-situ. Five out of seven AR authors go in-situ prior to and during procedure digitization to create visual assets, primarily pictures and videos.

5.3.2 Authoring Methods

All of the AR authors use the video-based method, the in-situ method, or both. Those using the expert video use it to limit digitization errors and to create AR content. Those going in-situ do so to create AR content.

Multiple AR authors mentioned that they would like to use the expert video to create all the virtual content because it would spare them from going in-situ. Yet, they often raised concerns about the expert video as a medium for content creation. They said that creating a high-quality expert video that can be used for media creation requires skill and time. Recording the expert video at the right angle is difficult. Sometimes, what needs to be captured is small and would require zooming. Using an HMD to record a video requires practice: often, the camera jitters, and AR authors need to rotate their head into an unnatural position to capture the right elements, which can also be hidden from the AR author’s point of view.

The creation of AR content in-situ can be time-consuming, often because AR authors need several attempts before being satisfied with the content. For example, they may realize while editing the expert video on a desktop that the expert forgot to wear safety equipment and that they need to record the video all over again.

5.3.3 Number of Errors and Versions Metrics

All of the AR authors mentioned a high number of errors and versions before reaching the final procedure. The number of versions is even higher when the AR authors create a procedure that does not exist yet: they have to write drafts that they heavily modify at each new version. The creation of media also induces multiple versions: AR authors first write the procedure, then go to its location to create media that they then have to edit. They regularly do this several times, for example to recapture unsatisfactory media.

Table 3: Summary of AR authors’ digitization methods gathered during a field study. Each industrial site uses AR for a specific use case. They can digitize the procedure from existing materials, record an expert’s video of the procedure before digitizing it, and create AR content (pictures, videos) at the location of the procedure (in-situ).

| Site | Use cases | Existing materials | Expert’s video prior to digitization | Media creation in-situ |
|---|---|---|---|---|
| 1 | Procedures for new products | ✕ | ✕ | ✓ |
| 2 | Procedures for new machines | ✕ | ✕ | ✓ |
| 3 | Quality control procedures | ✕ | ✕ | ✓ |
| 4 | Assembly procedures | ✕ | ✓ | ✕ |
| 5 | Assembly, quality control procedures | ✓ | ✓ | ✕ |
| 7 | Format shift, diagnoses procedures | ✓ | ✕ | ✓ |
| 6 | Digitization of spreadsheets procedures | ✓ | ✓ | ✓ |
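The counts reported in Section 5.3.1 can be cross-checked against Table 3. The following is a minimal sketch in Python that transcribes the table rows as printed; the `Site` record and its field names are illustrative assumptions, not part of the authoring tools used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    """One row of Table 3 (field names are illustrative, not from the paper)."""
    site_id: int
    use_case: str
    existing_materials: bool
    expert_video: bool
    media_in_situ: bool


# Rows transcribed from Table 3, in the order they are printed.
TABLE_3 = [
    Site(1, "Procedures for new products", False, False, True),
    Site(2, "Procedures for new machines", False, False, True),
    Site(3, "Quality control procedures", False, False, True),
    Site(4, "Assembly procedures", False, True, False),
    Site(5, "Assembly, quality control procedures", True, True, False),
    Site(7, "Format shift, diagnoses procedures", True, False, True),
    Site(6, "Digitization of spreadsheets procedures", True, True, True),
]


def count(rows, column):
    """Number of sites for which the given boolean column is checked."""
    return sum(1 for row in rows if getattr(row, column))


summary = {
    column: count(TABLE_3, column)
    for column in ("existing_materials", "expert_video", "media_in_situ")
}
print(summary)
```

The totals agree with the text: three of the seven sites rely on existing materials, three record an expert video prior to digitization, and five create media in-situ.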

5.4 Conclusion and Discussion

5.4.1 Authoring Methods

This field study validated the video as a tool that helps AR authors make fewer authoring mistakes, because it forces them to digitize with the up-to-date procedure in mind. It additionally highlighted the difficulty of creating high-quality AR content, and suggested two possibilities to ease the creation of content: either using the expert video as a base for other assets, or favouring in-situ authoring tools. The former has the drawback of relying on video creation, which requires skills, and the latter has the drawbacks of not effectively reducing authoring errors and, in industrial applications, of potentially disturbing production lines for an undetermined period.

5.4.2 Number of Errors and Versions Metrics

This field study highlighted that the number of versions is due both to digitization errors and to AR content creation. While the number of versions thus reflects yet another difficulty of authoring tools (media creation), it does not capture procedure quality on its own. The number of errors is a more precise metric to evaluate procedure quality.

This field study showed that both metrics are relevant and concern AR authors on a daily basis. We argue that they should be used in the design of authoring tools in two respects. The first is the evaluation of an AR authoring tool during its design: these metrics make it possible to assess the quality of the procedures resulting from the authoring tool. The second relates to the design of the authoring tool itself. This study showed that it is very unlikely that AR authors digitize their procedure in a single version. Not only can they make mistakes, but they may also want to improve the quality of the AR content, or the procedure may change over time and need to be updated. If they create a new procedure, they work iteratively until they are satisfied with it. We argue that AR authoring tools that enable smooth versioning and editing would improve AR authors’ experience. For example, future work could focus on making the editing of AR content simple, even for assets requiring high technical skills such as videos or 3D models.
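To make the distinction between the two metrics concrete, here is a minimal sketch of how an authoring tool could log why each new version was created and derive both metrics from that log. The `Version` record, the `Reason` categories, and the example log are hypothetical and chosen for illustration only; they are not taken from the tools or the data of this study.

```python
from dataclasses import dataclass
from enum import Enum


class Reason(Enum):
    """Hypothetical causes for creating a new version of a procedure."""
    INITIAL = "initial draft"
    ERROR_FIX = "self-detected digitization error"
    MEDIA_UPDATE = "AR content (media) re-creation"
    PROCEDURE_CHANGE = "procedure changed over time"


@dataclass(frozen=True)
class Version:
    number: int
    reason: Reason
    errors_fixed: int = 0  # errors the author corrected in this version


def number_of_versions(log):
    """Total number of versions until the author is satisfied."""
    return len(log)


def number_of_errors(log):
    """Total number of self-detected errors corrected across versions."""
    return sum(version.errors_fixed for version in log)


def versions_by_reason(log):
    """Count versions per cause, separating errors from media work."""
    counts = {}
    for version in log:
        counts[version.reason] = counts.get(version.reason, 0) + 1
    return counts


# Hypothetical log for one procedure (illustrative values only).
example_log = [
    Version(1, Reason.INITIAL),
    Version(2, Reason.ERROR_FIX, errors_fixed=2),
    Version(3, Reason.MEDIA_UPDATE),
    Version(4, Reason.ERROR_FIX, errors_fixed=1),
]

print(number_of_versions(example_log))
print(number_of_errors(example_log))
print(versions_by_reason(example_log))
```

Tagging each version with its cause keeps versions triggered by media creation apart from those triggered by digitization errors, which is the ambiguity of the number-of-versions metric discussed above.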

6 CONCLUSION

In this work, we studied how AR authoring conditions can improve the quality of the resulting procedure. To evaluate this quality, we measured the number of self-detected authoring errors and the number of versions until AR authors are satisfied with their procedure. We compared three authoring conditions: a video-based method, an in-situ method, and a baseline method. We found that the video-based method outperformed the other methods in terms of the two abovementioned metrics as well as in terms of procedure creation time and cognitive load. In addition, in a field study, we gathered AR authors’ feedback about how they digitize AR procedures and what they think of the abovementioned authoring methods. Depending on the use case for which AR is needed, they favoured a different authoring method. They all produced a high number of versions before being satisfied with their procedure, highlighting the relevance of the self-detected authoring errors and number of versions metrics.

ACKNOWLEDGEMENTS

The authors wish to thank all the participants of the experiment: thank you for your time, we hope you enjoyed it.

HUCAPP 2024 - 8th International Conference on Human Computer Interaction Theory and Applications


REFERENCES

Bégout, P., Duval, T., Kubicki, S., Charbonnier, B., and Bricard, E. (2020). Waat: A workstation ar authoring tool for industry 4.0. In Augmented Reality, Virtual Reality, and Computer Graphics: 7th International Conference, AVR 2020, Lecce, Italy, September 7–10, 2020, Proceedings, Part II 7, pages 304–320. Springer.

Bhattacharya, B. and Winer, E. H. (2019). Augmented reality via expert demonstration authoring (areda). Computers in Industry, 105:61–79.

Blattgerste, J., Renner, P., and Pfeiffer, T. (2019). Authorable augmented reality instructions for assistance and training in work environments. In Proceedings of the 18th International Conference on Mobile and Ubiquitous Multimedia, pages 1–11.

Bronkhorst, H., Roorda, G., Suhre, C., and Goedhart, M. (2020). Logical reasoning in formal and everyday reasoning tasks. International Journal of Science and Mathematics Education, 18:1673–1694.

Budiu, R. (2014). Memory recognition and recall in user interfaces. Nielsen Norman Group, 1.

Byers, J. C. (1989). Traditional and raw task load index (tlx) correlations: Are paired comparisons necessary? Advances in Industrial Ergonomics and Safety I. Taylor and Francis.

Chidambaram, S., Huang, H., He, F., Qian, X., Villanueva, A. M., Redick, T. S., Stuerzlinger, W., and Ramani, K. (2021). Processar: An augmented reality-based tool to create in-situ procedural 2d/3d ar instructions. In Designing Interactive Systems Conference 2021, DIS ’21, pages 234–249, New York, NY, USA. Association for Computing Machinery.

Clement, C. A. and Falmagne, R. J. (1986). Logical reasoning, world knowledge, and mental imagery: Interconnections in cognitive processes. Memory & Cognition, 14:299–307.

Erkoyuncu, J. A., del Amo, I. F., Dalle Mura, M., Roy, R., and Dini, G. (2017). Improving efficiency of industrial maintenance with context aware adaptive authoring in augmented reality. CIRP Annals, 66(1):465–468.

Fite-Georgel, P. (2011). Is there a reality in industrial augmented reality? In 2011 10th IEEE International Symposium on Mixed and Augmented Reality, pages 201–210.

Funk, M., Lischke, L., Mayer, S., Shirazi, A. S., and Schmidt, A. (2018). Teach me how! interactive assembly instructions using demonstration and in-situ projection. Assistive Augmentation, pages 49–73.

Gattullo, M., Evangelista, A., Uva, A. E., Fiorentino, M., and Gabbard, J. L. (2020). What, how, and why are visual assets used in industrial augmented reality? a systematic review and classification in maintenance, assembly, and training (from 1997 to 2019). IEEE transactions on visualization and computer graphics, 28(2):1443–1456.

Gattullo, M., Scurati, G. W., Evangelista, A., Ferrise, F., Fiorentino, M., and Uva, A. E. (2019). Informing the use of visual assets in industrial augmented reality. In International Conference of the Italian Association of Design Methods and Tools for Industrial Engineering, pages 106–117. Springer.

Gerbec, M., Balfe, N., Leva, M. C., Prast, S., and Demichela, M. (2017). Design of procedures for rare, new or complex processes: Part 1 – an iterative risk-based approach and case study. Safety Science, 100:195–202. SAFETY: Methods and applications for Total Safety Management.

Gertman, D., Blackman, H., Marble, J., Byers, J., Smith, C., et al. (2005). The spar-h human reliability analysis method. US Nuclear Regulatory Commission, 230(4):35.

Gertman, D. I., Blackman, H. S., Haney, L. N., Seidler, K. S., and Hahn, H. A. (1992). Intent: a method for estimating human error probabilities for decision-based errors. Reliability Engineering & System Safety, 35(2):127–136.

Gimeno, J., Morillo, P., Orduña, J., and Fernández, M. (2013). A new ar authoring tool using depth maps for industrial procedures. Computers in Industry, 64(9):1263–1271. Special Issue: 3D Imaging in Industry.

Haringer, M. and Regenbrecht, H. (2002). A pragmatic approach to augmented reality authoring. In Proceedings. International Symposium on Mixed and Augmented Reality, pages 237–245.

Jang, I. and Park, J. (2022). Determining the complexity level of proceduralized tasks in a digitalized main control room using the tacom measure. Nuclear Engineering and Technology, 54(11):4170–4180.

Kearsley, G. (1982). Authoring systems in computer based education. Commun. ACM, 25(7):429–437.

Kirwan, B. (1997). The development of a nuclear chemical plant human reliability management approach: Hrms and jhedi. Reliability Engineering & System Safety, 56(2):107–133.

Knopfle, C., Weidenhausen, J., Chauvigne, L., and Stock, I. (2005). Template based authoring for ar based service scenarios. In IEEE Proceedings. VR 2005. Virtual Reality, 2005., pages 237–240.

Lavric, T., Bricard, E., Preda, M., and Zaharia, T. (2021). Exploring low-cost visual assets for conveying assembly instructions in ar. In 2021 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), pages 1–6.

McDonald, A. D., Ade, N., and Peres, S. C. (2023). Predicting procedure step performance from operator and text features: A critical first step toward machine learning-driven procedure design. Human Factors, 65(5):701–717. PMID: 32988239.

Park, J. and Jung, W. (2007). A study on the development of a task complexity measure for emergency operating procedures of nuclear power plants. Reliability Engineering & System Safety, 92(8):1102–1116.

Pham, T. A., Wang, J., Iyengar, R., Xiao, Y., Pillai, P., Klatzky, R., and Satyanarayanan, M. (2021). Ajalon: Simplifying the authoring of wearable cognitive assistants. Software: Practice and Experience, 51(8):1773–1797.


Sasangohar, F., Peres, S. C., Williams, J. P., Smith, A., and Mannan, M. S. (2018). Investigating written procedures in process safety: Qualitative data analysis of interviews from high risk facilities. Process Safety and Environmental Protection, 113:30–39.

Scholtz, C. R. and Maher, S. T. (2014). Tips for the creation and application of effective operating procedures. Process Safety Progress, 33(4):350–354.

Spectral TMS. The technician’s augmented reality assistant. https://www.spectraltms.com/en/. Accessed: 2021-12-17.

Taqtile. Knowledge when and where you need it. https://taqtile.com/. Accessed: 2023-09-14.

Zauner, J., Haller, M., Brandl, A., and Hartman, W. (2003). Authoring of a mixed reality assembly instructor for hierarchical structures. In The Second IEEE and ACM International Symposium on Mixed and Augmented Reality, 2003. Proceedings., pages 237–246.

Zhu, J., Ong, S. K., and Nee, A. Y. (2013). An authorable context-aware augmented reality system to assist the maintenance technicians. The International Journal of Advanced Manufacturing Technology, 66:1699–1714.

Zogopoulos, V., Geurts, E., Gors, D., and Kauffmann, S. (2022). Authoring tool for automatic generation of augmented reality instruction sequence for manual operations. Procedia CIRP, 106:84–89. 9th CIRP Conference on Assembly Technology and Systems.
