Gaia: A Social Robot to Help Connect Humans and Plants

Christopher Xenophontos, Teressa Clark, Michael Seals, Cole A. Lampman, Iliyas Tursynbek and Mounia Ziat (a)

Bentley University, 175 Forest St, Waltham, MA, 02452, U.S.A.

Keywords:

Social Robots, Human-Plant Interaction, Multimodal Design, Arduino.

Abstract:

Interacting with plants has been shown to improve both physical and mental health outcomes, a benefit that became apparent to many during recent troubling times. Many plants, however, died because their owners were unaware of their needs. Gaia, a social robot-planter, was created to communicate a plant's needs to its owner in an easy and enjoyable way. The final prototype, a multimodal interface, was designed to join natural language messaging with an emotive digital face and “voice.” By anthropomorphizing the user's plant, this social robot aims to communicate the plant's needs effectively, build empathy, and create a stronger emotional bond between the plant and its owner, leading to better outcomes for both.

1 INTRODUCTION

One strategy many individuals use to improve and personalize their space is to incorporate more plants. House plants not only elevate a room visually; they also provide comfort and have potential health and psychological benefits (Elings, 2006; Sabra, 2016; Seow et al., 2022). Alongside the rise in popularity of plant caretaking, there has been a parallel rise in products designed to help individuals care for their newly acquired plants (Clark, 2022). Increasingly, these products turn to technology, often in the form of mobile applications, to solve common problems such as species identification, watering schedules, and troubleshooting when a plant's health begins to decline.

Although the growing popularity of plant caretaking as a hobby has boosted houseplant sales, it has not revolutionized the way humans interact with plants. Plants are living things, yet they are treated more like decorations or accessories to a space than like living companions. The relationship between a plant and its caretaker is driven entirely by the human, who invests the plant with as much meaning as they want. The plant cannot interact with or explicitly communicate with the caretaker. Instead, plants communicate their needs over days as their leaves and stalks droop and turn yellow. Once caretakers notice these changes in their plants' health, they must know what intervention to take; many owners have asked themselves, “Are these leaves turning yellow because of too little or too much water?”

(a) https://orcid.org/0000-0003-4620-7886

Because this communication is so unclear, plant owners can become stressed about the health of their plants while the plants endure subpar care conditions. Our device, Gaia, shown in Fig. 2a, transforms any houseplant into an evocative social robot (Breazeal, 2003) that gives the plant a way to communicate its needs to the caretaker instantly, using sensors to check the plant's condition and an audio interface to report it. Using soil moisture and sunlight sensors, which measure the moisture in the soil and the intensity of the light the plant is receiving, respectively, the robot describes the plant's needs to the user. Through screens, emoticons, and sounds, Gaia anthropomorphizes the plant, making it easier for humans to understand its needs. Additionally, giving the plant-robot the ability to express human-like emotions could increase the empathy caretakers feel for their plant, thereby making them more responsive (Urquiza-Haas and Kotrschal, 2015). Ultimately, the goal of Gaia is to make plant caretaking easier by allowing caretakers to interact with their plant in novel ways involving emotion-based prompts.

1.1 Benefits of Plant Caretaking

Human-plant relations precede any written history. Not only have we relied on plants to survive, but plants have also played an active role in human evolution, civilization, and urbanization. Humans have affected plant evolution in a similar manner: through cultivation and agriculture, we have developed a codependence with the plant kingdom (Van der Veen, 2014).

Xenophontos, C., Clark, T., Seals, M., Lampman, C., Tursynbek, I. and Ziat, M.

Gaia: A Social Robot to Help Connect Humans and Plants.

DOI: 10.5220/0012266400003660

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 400-407

ISBN: 978-989-758-679-8; ISSN: 2184-4321

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

As a result of this close relationship, humans can benefit from a wide range of interactions with plants, from simply being around them to actively gardening (Elings, 2006; Sabra, 2016). In passive interaction environments (e.g., plants in an office setting), being in the presence of vegetation has been shown to improve cognitive functioning, attention maintenance, creativity, and even happiness (Chien et al., 2015; Seow et al., 2022). In workplaces with abundant greenery, employees reported fewer negative health effects, such as headaches and tiredness, and recovered from stress more quickly (Elings, 2006). Additionally, successfully caring for a houseplant can provide a feeling of control in a world where daily stress leaves people feeling increasingly unmoored (Sabra, 2016).

Activities involving greater interaction, such as gardening, offer physical benefits, such as a lower risk of heart disease, in addition to robust psychological benefits. Regarding mental health, gardening has been found to increase self-esteem and can provide a sense of belonging and self-worth. Therapeutic horticulture, the use of gardening and cultivation to promote well-being, has been incorporated into social and clinical work as an alternative therapy. In therapeutic settings, gardening provides an environment where people can be mindful and intentional and, as a result, can become more aware of their surroundings, recognize stressful triggers, practice self-regulation, and have a healthy outlet for their frustration (Chien et al., 2015; Elings, 2006; Sabra, 2016).

1.2 Social Robot and Human-Plant Interaction

Relying on social robot principles of empathy and interaction, Gaia seeks to build a stronger bond between caretakers and their plants, making it easier and more fun to maintain the health of one's houseplants. In this context, our team uses the socially evocative definition of a social robot: an anthropomorphized robot that encourages humans to interact with it in a more natural way (Breazeal, 2003). Because of its interactive nature, a social robot could provide several psychological benefits. Robinson et al. suggest that interactions with social robots could decrease loneliness, and, more recently, a meta-analysis of the use of social robots with older adults found that such interactions could also lower levels of agitation and anxiety (Robinson et al., 2013; Pu et al., 2019). By turning the simple houseplant into a social robot, we seek to capture the health and wellness benefits of both interacting with a social robot and caring for a plant.

Gaia is not the first attempt to create a device allowing humans to interact with plants on a deeper level. Such existing devices form a subset of what are considered Living Media Interfaces (LMI). Merritt et al. define LMIs as “interfaces that incorporate living organisms and biological materials into artifacts to support interaction between humans and digital systems.” Key features of an LMI include digitally controlled input/output (I/O) communication, the ability to communicate a feeling, and the facilitation of human interaction (Merritt et al., 2020).

Other projects have sought to create interfaces using ‘I/O Plant’ design patterns that allow a plant to directly affect actuators (Kuribayashi et al., 2007b). With Plantio, researchers used sensors that measured a plant's biopotential, allowing the plant to “wake up” when it was stimulated (using LED lights; Kuribayashi et al., 2007a). More recently, researchers at the MIT Media Lab developed a device and framework for plant-based interfaces (Seow et al., 2022). By attaching sensor-actuated electrodes to a Mimosa pudica plant, the researchers were able to simulate natural impulses and provide a plant-based interface that displayed air-quality information by opening and closing the plant's leaves (Seow et al., 2022).

The closest work to our approach was carried out by Angelini et al. (Angelini et al., 2016), who designed three different augmented plants based on user personas. The difference is that we focused on the social features of the plant and its ability to trigger emotions, using a humanoid voice and emoticon faces while the user cares for the plant. Additionally, in human-plant interaction research (Chang et al., 2022), social aspects combining emotions and voice in one single system had not been investigated before this work.

Our design focus was to create a nurturing sys-

tem - as defined by Aspling et al. as “interfaces that

support the well-being of the plant through greater ex-

pression of need, anthropomorphization, and creating

emotional bonds with humans” (Aspling et al., 2016).

One example of a nurturing system is ‘My Green Pet’

that allows the measurement of the physical contact

children made with the plant (e.g., hitting, stroking,

tickling, etc.) and output audio and visual information

to help children identify the plant as a living organism

(Hwang et al., 2010). However, their design sought

to hide electric components to emphasize the organic

Gaia: A Social Robot to Help Connect Humans and Plants

401

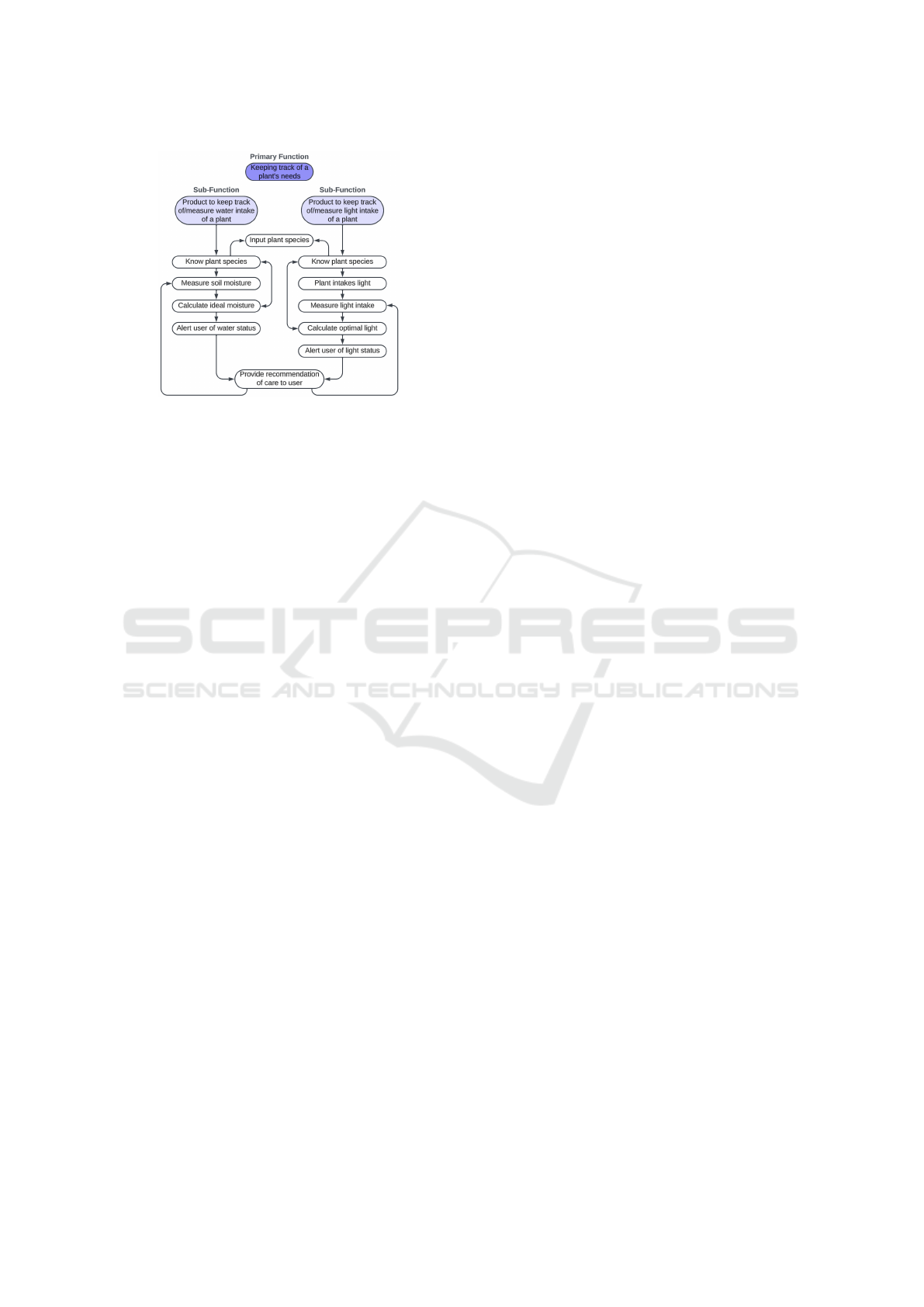

Figure 1: Functional analysis.

nature of the plant (Hwang et al., 2010). With Gaia,

we designed the device as a nurturing system where

the components would be visible while still creating

an emotional bond.

2 IDEATION & EARLY TESTING

2.1 Ideation

Ideation started with a storyboard depicting a user's journey: a person initially struggles to care for houseplants but finds success with an interactive plant. This plant not only self-assesses its needs but also communicates them effectively to the owner. The design prioritized making the user experience fun and exciting.

A functional analysis (Fig. 1) identified the device's main functions and user interaction considerations. This analysis informed a morphological chart listing each function with multiple solution options (Roozenburg and Eekels, 1995). From this chart, three top solutions were selected for further analysis: 1) Minimalist Wall Art, with the plant on an interactive wall; 2) Maximum Interaction, where the plant moves on wheels, like a robotic vacuum cleaner, with obstacle sensors for light-seeking; 3) Balanced Approach, keeping the plant in a pot but with enhanced human-plant interaction features.

Using the morphological chart, we created low-fidelity sketches for the device's initial prototype. This process involved selecting features, determining module size and placement, and conceptualizing the device's overall visual design. We also discussed user interaction and the physical interface. Initially, the design consisted of a separate stand on which the plant would be set, with water and light information surrounding the plant. Ultimately, we decided that separating the plant from the information felt too artificial. Attaching the information to the plant's pot made it feel as if the plant itself were communicating. Gaia aims to evoke the sense of caring for a robotic pet, akin to a Tamagotchi, that communicates its feelings and care needs back to the user.

2.2 Early Stage Prototype Testing

2.2.1 Research Questions

During the initial design of Gaia, once we understood the device's main functions and general structure, we had several usability questions to validate: 1) Can users correctly interpret the emoticons we chose to represent the plant's various states? 2) What is the best way for the plant to communicate its status on the LCD screen: should it display natural language phrases (e.g., “I need water”) or exact sensor readings (e.g., “Soil Moisture 25%”)? 3) Do users understand how to use the buttons on the device?

To answer these questions, we created a rapid paper prototype of the device using cardboard, LED button modules, and sticky notes (see Fig. 2b). A cardboard frame, complete with LED buttons, was made to simulate the device. The sticky notes on the cardboard prototype carried drawings of the various emoticons and status messages. During the test, when a participant interacted with the mock device, a researcher switched the sticky notes to reflect the correct status. All testing sessions were conducted in person.

2.2.2 Participants

For the initial test, we recruited four participants aged 23 to 42. Prior to the test, participants were asked to gauge their experience with caring for houseplants. Three participants owned multiple houseplants, and the fourth had owned plants in the past but did not currently. None of the participants described themselves as very confident in their ability to keep their plants alive; all had used Google to help them care for their plants, and two reported that they currently use mobile apps to track a watering schedule.

2.2.3 Task List

HUCAPP 2024 - 8th International Conference on Human Computer Interaction Theory and Applications

Figure 2: (a) Gaia in operation with a plant inside. (b) The cardboard prototype used in the initial testing; sticky notes act as interchangeable screen states. (c) Different faces and screen states tested in the usability session.

The usability test focused on assessing how well participants could use different kinds of information to inform plant care. The tasks consisted of checking the water and light levels of the plant, with one set displaying information as natural language (NL) phrases and the other set displaying exact sensor readings. All participants completed both sets of tasks, listed below:

1. Check the Water Level of the Plant. “If you wanted to check the current water levels of your plant, how would you do that?”
   (a) Show the user natural-phrase messages or percentage messages: “Based on this information, how would you care for your plant?”
2. Check the Light Levels of the Plant. “If you wanted to check the current light levels of your plant, how would you do that?”
   (a) Show the user natural-phrase messages or percentage messages: “Based on this information, how would you care for your plant?”
3. Verify the Different States of the Plant. “Imagine you walked by your interactive plant and saw this face on the screen. What would that make you think of?” (Default Happy State)
   (a) “How would you care for your plant after seeing that?”
   (b) “What about this face?” (Change to Sad State)
   (c) “How would you care for your plant after seeing that?”

2.2.4 Results

Given the small number of participants, these results should be interpreted with caution; however, we were able to identify high-level trends from the interactions we observed.

1. Interpretation of Emoticons. Participants consistently demonstrated an ability to interpret the emoticons representing the plant's various states. The emoticons were often the first element noticed, with participants quickly understanding the emotions conveyed before reading the LCD messages. This suggests that the chosen emoticons effectively communicated the plant's needs, in line with the intended design of Gaia.

2. Comparing NL Phrases vs. Exact Sensor Readings. Participants were highly confident when responding to NL phrases (e.g., “I need water”). They understood and acted on these messages clearly and consistently, indicating a preference for this mode of communication. Responses to exact sensor readings (e.g., “Soil Moisture 50%”) were mixed: some participants gave the plant a little water, while others waited to give the plant more water. Participants were less confident about the necessary care, indicating some ambiguity in interpreting these readings. This suggests that NL phrases are more effective in communicating plant needs to users.

These results led us to use NL phrases to display information to users. NL phrases improved participants' understanding of the plant's needs and care. The sunlight and soil moisture thresholds we set, coupled with corresponding messages, promoted a natural watering schedule that lets the soil dry out before a thorough watering instead of keeping it constantly semi-hydrated. Additionally, for participants, NL phrases added “personality” to the plants, making them seem more like companions than mere health-maintenance devices.


Figure 3: Electrical connections from the Arduino Uno to the modules.

3 GAIA PROTOTYPE

Gaia, controlled by an Arduino Uno and Grove Seeed modules, uses sensors to interact with plant owners. When the owner comes within 3 m, the PIR Motion Sensor activates, triggering the Sunlight and Capacitive Moisture Sensors to measure light and soil moisture. The plant's status is communicated via the RGB Matrix embedded in the shell, which displays faces indicating water and light levels. Owners can check these levels using two LED buttons: the blue one for water, to the left of the RGB Matrix, and the yellow one for sunlight, to its right. We chose these colors for their strong association with plant needs. Pressing a button displays a face, shows a message on the LCD RGB Backlight screen, and plays a sound reflecting the plant's ‘mood’, produced by an MP3 player module, a micro SD card, and a speaker. The outer shell, 3D-printed and shown in Fig. 3, houses the modules and includes a space for a flower pot, making plant placement easy.

3.1 Interface Design

3.1.1 3D Printed Plant Shell

The initial 3D-printed shell was circular and did not allow the Arduino modules to fit securely. We switched to a rectangular shell (17.5 cm (W) × 19.5 cm (L) × 16 cm (H)) to fit all Arduino modules comfortably and protect them from water and soil (Fig. 4). The outer rectangular shell was designed to fit any pot up to 6 inches in size. The pot slides in and out easily to allow for maintenance. The shell was printed with white PLA filament on a Creality Ender 3 3D printer. White was chosen so the shell would blend in with other planters the owner might have at home and so the lights would reflect off the white surface for better visibility. The LED buttons, RGB Matrix, and LCD RGB screen were placed at the front to represent the face of the plant, while the speaker was located at the back of the device.

Figure 4: The interior of the 3D-printed plant pot shell, created using the Creality Ender 3.

3.1.2 Sound Design

The sound design for Gaia has two primary goals: first, to reinforce the visual information presented about the plant's status, and second, to foster an emotional connection between the user and the plant. Ideally, the sound design would transform Gaia from a common house or decorative plant into something that is cared for and holds emotional value, which in turn drives more intentional care for the plant.

The sound design was modeled after something already familiar to people. Cute and friendly robots have existed in the media for decades and produce strong feelings in the people who interact with them (Dou et al., 2021). This allowed the design to tap into an existing mental model and resulted in a childlike, expressive voice that could convey and amplify both the negative and positive emotions desired.

Both positive and negative sounds were implemented. Negative audio feedback was meant to prompt negative feelings and motivate users to quickly resolve any issues in the plant's environment. Conversely, positive audio feedback was meant to provide a positive emotional reward that reinforces the behavior (Jolij and Meurs, 2011).

3.1.3 Visual Design

Figure 5: Examples of different screen states based on soil moisture and sunlight levels: (a) sufficient moisture level state with happy face and message; (b) insufficient sunlight state with sad face and message.

The insights gathered from the usability testing sessions were incorporated into the design of the RGB Matrix and LCD displays. Participants in the usability study responded better to NL messages (e.g., “I feel great, I don't need water”) than to sensor readouts (i.e., percentages). We therefore designed the LCD messages to sound conversational while remaining within the 32-character constraint of the LCD screen. The usability study also revealed that the ‘face’ on the RGB Matrix was a very salient feature that reinforced the message on the LCD screen.
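One way to check that a conversational message respects the 32-character limit is to word-wrap it onto the display. This sketch assumes the LCD is a 16 × 2 character module (16 columns by 2 rows, consistent with the 32-character constraint) and uses greedy word wrapping; `fit_to_lcd` is a hypothetical helper, not part of any Grove library.

```python
def fit_to_lcd(message: str, cols: int = 16, rows: int = 2) -> list[str]:
    """Greedily word-wrap a message onto a cols x rows character LCD.

    Raises ValueError if the message cannot fit without splitting a word.
    """
    lines: list[str] = []
    current = ""
    for word in message.split():
        if len(word) > cols:
            raise ValueError(f"word longer than one row: {word!r}")
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= cols:
            current = candidate      # word still fits on the current row
        else:
            lines.append(current)    # row is full; start a new one
            current = word
    if current:
        lines.append(current)
    if len(lines) > rows:
        raise ValueError(f"needs {len(lines)} rows, display has {rows}")
    return lines
```

For instance, `fit_to_lcd("A bit dry, need water tomorrow")` yields the rows `"A bit dry, need"` and `"water tomorrow"`. Word wrapping is a design choice here: it keeps words intact at the cost of sometimes leaving a row partly empty.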

3.2 Modes

3.2.1 Motion Sensor Mode - Plant Wakes Up

When no motion is detected, the plant is in a sleep state, showing only the message “zzzzzzzzz” on the LCD. As the plant owner walks past, the sensor triggers, and the owner can glance over and see the overall health of the plant, as shown in Fig. 5. The RGB Matrix displays a range of emotive faces depending on the water and light levels. If both water and light needs are met, it displays a happy face. If only one need is met, it shows a neutral face, communicating that the plant needs care soon. If both needs are unmet, it displays a crying face to show that the plant needs to be tended to. This ‘plant wake-up mode’ is intended to help users understand the overall health of the plant at a glance as they go about their day, and it can also serve as a reminder that the plant needs tending. In this mode, Gaia provides no sound or voice output.
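The glance-mode rule reduces to counting how many of the two needs are met. This Python sketch takes both needs as booleans; how Gaia decides that each need is “met” (for example, which moisture band counts as sufficient) is not specified in this section, so those inputs, and the name `overall_face`, are assumptions.

```python
def overall_face(water_ok: bool, light_ok: bool) -> str:
    """Map the number of met needs to the glance-mode face (hypothetical).

    2 needs met -> happy, 1 need met -> neutral, 0 needs met -> crying.
    """
    met = int(water_ok) + int(light_ok)
    return {2: "happy", 1: "neutral", 0: "crying"}[met]
```

Because the rule only counts met needs, it does not distinguish which need is missing; that detail is left to the button-triggered modes.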

3.2.2 Moisture Sensor Mode - Blue LED Button Pressed

When plant owners want to know the water level, they can walk over to the plant pot and press the blue LED button. Pressing the button triggers a reading of the soil moisture level, and the RGB Matrix then shows the plant's feeling based on that level. If the moisture level is ≥ 50%, a happy face with sunglasses appears, a happy sound plays, and the LCD displays “I feel great, I don't need water.” If soil moisture is between 15% and 50%, a happy face is displayed, accompanied by a contented sigh and the LCD message “A bit dry, need water tomorrow,” communicating that the plant will need tending tomorrow. If soil moisture is < 15%, the matrix shows a crying face, a sad sound plays, and the LCD displays “I'm totally dry, I need water!” The intention is that plant owners who see this last message will water the plant.
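The three moisture bands above map directly onto a threshold function. In this minimal Python sketch, the calibration constants `RAW_DRY` and `RAW_WET`, the linear mapping in `raw_to_percent`, and the face and sound labels are hypothetical, since the paper does not say how raw sensor values become percentages. It also assumes that wetter soil produces a lower raw reading, which is common for capacitive probes but must be checked for the actual sensor. The band boundaries follow the text: exactly 50% counts as “great” and exactly 15% as “a bit dry.”

```python
from typing import NamedTuple

# Hypothetical calibration points: raw ADC readings for dry and saturated soil.
RAW_DRY = 600
RAW_WET = 300


class Response(NamedTuple):
    face: str     # RGB Matrix face
    sound: str    # clip played by the MP3 module
    message: str  # LCD text


def raw_to_percent(raw: int, raw_dry: int = RAW_DRY, raw_wet: int = RAW_WET) -> float:
    """Linearly map a raw capacitive reading to 0-100 % moisture, clamped."""
    pct = (raw_dry - raw) / (raw_dry - raw_wet) * 100.0
    return max(0.0, min(100.0, pct))


def moisture_response(pct: float) -> Response:
    """Thresholds from the blue-button mode: >= 50 %, 15-50 %, < 15 %."""
    if pct >= 50:
        return Response("happy_sunglasses", "happy", "I feel great, I don't need water.")
    if pct >= 15:
        return Response("happy", "contented_sigh", "A bit dry, need water tomorrow")
    return Response("crying", "sad", "I'm totally dry, I need water!")
```

With these placeholder constants, a raw reading of 450 maps to 50% and therefore to the sunglasses face.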

3.2.3 Sunlight Sensor Mode - Yellow LED Button Pressed

When plant owners want to know how much sunlight their plant is receiving, they can walk over to the plant pot and press the yellow LED button. Pressing the button triggers a reading of the light level, and the RGB Matrix then shows the plant's feeling based on that level. If the light level is ≥ 50%, the matrix shows a happy face with sunglasses, the LCD displays “I am getting enough light!”, and the speaker plays a happy sound. If the light level is < 50%, the RGB Matrix shows an unhappy face, the LCD displays “I'm not getting enough sunlight,” and a sad sound plays. This 3D-printed prototype was tested in a second user study, described below.
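The sunlight mode uses a single 50% threshold. This sketch assumes a hypothetical `LIGHT_FULL_SCALE` constant to express a raw visible-light reading as a percentage, because the paper does not state how its light percentage is derived; the function names and labels are also illustrative.

```python
# Hypothetical reading treated as 100 % light; the real scale is not given.
LIGHT_FULL_SCALE = 1000.0


def light_percent(reading: float, full_scale: float = LIGHT_FULL_SCALE) -> float:
    """Express a raw light reading as a clamped 0-100 % value."""
    return max(0.0, min(100.0, reading / full_scale * 100.0))


def light_response(pct: float) -> tuple[str, str, str]:
    """Return (face, sound, LCD message) for the yellow-button 50 % threshold."""
    if pct >= 50:
        return ("happy_sunglasses", "happy", "I am getting enough light!")
    return ("unhappy", "sad", "I'm not getting enough sunlight")
```

A single threshold keeps the light feedback binary; a species-aware version would simply pass a different cutoff.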

4 USER STUDY

4.1 Participants

Eight participants (average age: 29.6 years, SD: 9; gender: 3 F, 4 M, 1 preferred not to say) were recruited for this study. All were Bentley University students and were compensated for their time.


4.2 Usability Test

Participants were instructed to interact with Gaia by responding to the plant's needs. They sat in a dim room so that they could modulate the light level with a nearby lamp and have a complete interaction experience in a single study session. They were provided with a bottle of water and a lamp, as described in the instructions: “This plant has essential needs for its growth. The plant pot that you see has buttons and displays. Please take some time to examine this plant pot and interact with it. Try to satisfy the plant's needs by following visual and audio prompts from the pot by using the water and the lamp provided.”

After the interaction with Gaia, they were asked to

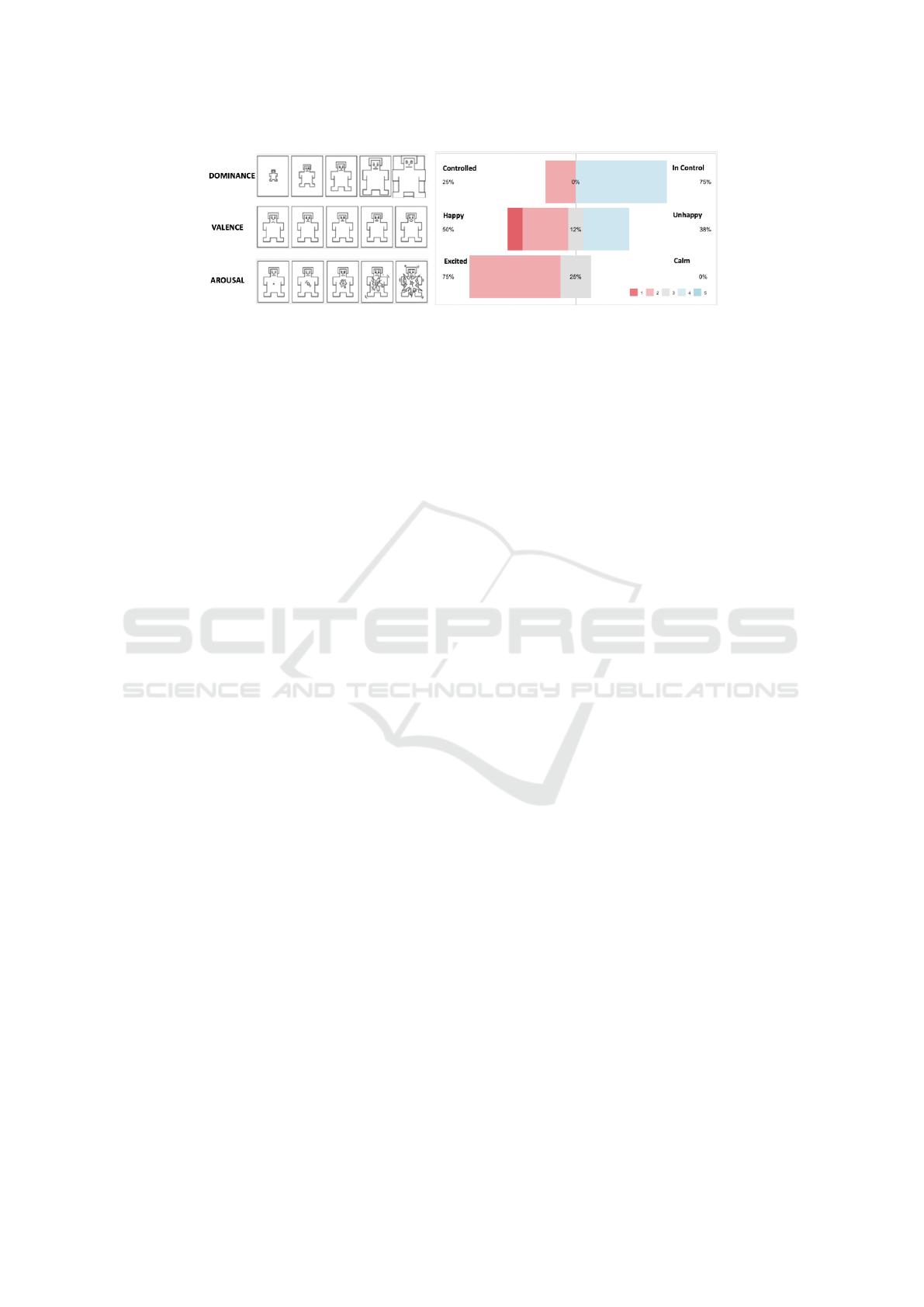

assess the voice using the Self-Assessment Manikin

(SAM) (Bradley and Lang, 1994) with a five-point

scale and evaluate the overall usability using the sys-

tem usability scale (SUS) (Brooke et al., 1996). The

SAM is a non-verbal pictorial scale that directly mea-

sures the valence, arousal, and dominance dimensions

associated with a person’s affective reaction to a wide

variety of stimuli, including visual, auditory, and tac-

tile (Ziat and Raisamo, 2017; Ziat et al., 2020). The

SUS is used to assess several usability attributes such

as effectiveness, efficacy, and satisfaction.

4.3 Results

All participants successfully interacted with the device buttons, indicating no issues in understanding their functionality. This supports the usability of the button interface in the prototype design. The mean SUS score of 77.2 (95% confidence interval [68, 87]), significantly (p < .05) above the average of 68, reflects a high level of usability and user satisfaction with Gaia. It is equivalent to a B on the Sauro/Lewis SUS grading curve, with a corresponding grade range from C to A+ (Lewis and Sauro, 2018).
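For readers unfamiliar with the SUS, the sketch below shows the standard scoring rule (Brooke et al., 1996), assuming the usual ten items rated 1 to 5: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the 0 to 40 sum is multiplied by 2.5 to give a 0 to 100 score. The function name `sus_score` and the example responses are hypothetical and are not the study's data.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one SUS questionnaire: ten ratings on a 1-5 scale.

    Odd-numbered items are positively worded and contribute (rating - 1);
    even-numbered items are negatively worded and contribute (5 - rating).
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


# Hypothetical respondents, only to show how a mean score is formed.
example = [[5, 1] * 5, [3] * 10]
mean_score = mean(sus_score(r) for r in example)
```

A respondent answering 3 on every item scores exactly 50, which is why the benchmark average of 68 sits noticeably above the scale's midpoint.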

The SAM results showed that 75% of participants felt in control and excited, with 50% reporting happiness (Fig. 6). Participant feedback indicated confusion over delayed responses; three participants expected immediate changes in the plant's state. For instance, two noted: “After watering, the hydration level showed happy, but it turned dry again after 10-20 sec,” and “The delayed feedback might lead to overwatering.”

Most participants liked the happy voice but found the unhappy/whining voice annoying or excessive. Yet the voice feature was viewed as a social icebreaker, with one participant noting its suitability for business or social settings with unfamiliar guests. Several participants were also intrigued by the device's potential to assist those struggling with plant care, with comments such as “Very interesting device, though not a plant person,” and “Seemed incredibly helpful for someone who has never really grown plants.”

5 CONCLUSION AND FUTURE RESEARCH

At present, Gaia embodies the foundational concept of transforming a living plant into a simple social robot, drawing from the broader context of LMIs as defined by Merritt et al. (Merritt et al., 2020). Given more resources and time, our ambition is to enhance Gaia in two critical areas: offering more sophisticated plant care and advancing its social robot features.

To help plant owners care for their plants, Gaia currently communicates a plant's needs by taking readings from either the soil moisture sensor or the sunlight sensor and comparing the values to a set of predetermined thresholds. This method of communication, while effective, does not account for the diverse requirements of different plant species. Each species has its own optimal care conditions: some prefer dry soil for long periods, while others need soil moisture kept above 50% at all times. In future versions, we aim to incorporate a species selector, addressing the species-specific considerations that have been notably absent in earlier projects (Kuribayashi et al., 2007a; Kuribayashi et al., 2007b; Seow et al., 2022). This functionality will enable Gaia to provide tailored care by adjusting sensor thresholds for individual plant species, ensuring optimal growth conditions. Furthermore, additional sensors, such as humidity and temperature sensors, could be added to Gaia to increase the level of plant care. These would work in conjunction with a species selector to ensure any plant could be given its optimal environment.
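A species selector amounts to replacing the fixed thresholds with a per-species profile. In this hypothetical Python sketch, `CareProfile`, `PROFILES`, and `moisture_state` are illustrative names, and the succulent and fern values are placeholders chosen only to show the mechanism, not care recommendations. The default profile reproduces the current prototype's 15% and 50% moisture and 50% light thresholds, and unknown species fall back to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CareProfile:
    """Per-species thresholds in percent (values below are illustrative)."""
    dry_below: float             # below this: "I'm totally dry" state
    great_at_or_above: float     # at or above this: "I feel great" state
    light_ok_at_or_above: float  # at or above this: enough light


# Default reproduces the fixed thresholds of the current prototype.
DEFAULT = CareProfile(dry_below=15, great_at_or_above=50, light_ok_at_or_above=50)

# Hypothetical placeholder entries; not horticultural guidance.
PROFILES: dict[str, CareProfile] = {
    "default": DEFAULT,
    "succulent": CareProfile(dry_below=5, great_at_or_above=20, light_ok_at_or_above=70),
    "fern": CareProfile(dry_below=40, great_at_or_above=60, light_ok_at_or_above=30),
}


def moisture_state(pct: float, species: str) -> str:
    """Classify soil moisture against the selected species' thresholds."""
    profile = PROFILES.get(species, DEFAULT)
    if pct >= profile.great_at_or_above:
        return "great"
    if pct >= profile.dry_below:
        return "bit_dry"
    return "totally_dry"
```

The same 30% reading then means “great” for the placeholder succulent profile but “totally dry” for the placeholder fern profile, which is exactly the variation a fixed threshold cannot express.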

Gaia's current iteration, with its emoticons and natural phrases, is just the beginning of what we envision for its social robot capabilities. Moving forward, we plan to integrate more immediate interaction features to address the delay issues identified in our user study, which were a source of the dissatisfaction some participants expressed because they expected an instantaneous interaction. This development will take cues from the augmented plants of Angelini et al. (Angelini et al., 2016) and the nurturing system approach defined by Aspling et al. (Aspling et al., 2016), further enhancing Gaia's ability to forge a strong emotional bond with users by giving it a stronger personality and more complex interactions. The introduction of a “Party Mode”, a function in which Gaia would play music and simulate a dance party, and a richer library of animations and sounds will enable Gaia to express more lifelike and nuanced states, surpassing the level of interaction observed in ‘My Green Pet’ (Hwang et al., 2010).

Figure 6: Left: SAM scale. Right: SAM results for the three affective dimensions: Dominance, Valence, and Arousal.

Another area for improvement is conducting usability studies with different age groups, such as children, to identify their specific needs and interaction patterns and improve Gaia's design accordingly. As we continue development, we aim not only to improve Gaia's functionality but also to deepen the connection it fosters between humans and plants, transforming it from a mere decorative object into a cherished companion.

REFERENCES

Angelini, L., Caon, M., Caparrotta, S., Khaled, O. A., and Mugellini, E. (2016). Multi-sensory emotiplant: multimodal interaction with augmented plants. In Conf. on Pervas. and Ubiqu. Computing, pages 1001–1009.

Aspling, F., Wang, J., and Juhlin, O. (2016). Plant-computer interaction, beauty and dissemination. In 3rd Inter. Conf. on Animal-Computer Interaction, pages 1–10.

Bradley, M. M. and Lang, P. J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. J. of behav. ther. and exp. psych., 25(1):49–59.

Breazeal, C. (2003). Toward sociable robots. Robotics and autonomous systems, 42(3-4):167–175.

Brooke, J. et al. (1996). SUS: a quick and dirty usability scale. Usability evaluation in industry, 189(194):4–7.

Chang, M., Shen, C., Maheshwari, A., Danielescu, A., and Yao, L. (2022). Patterns and opportunities for the design of human-plant interaction. In DIS, pages 925–948.

Chien, J. T., Guimbretière, F. V., Rahman, T., Gay, G., and Matthews, M. (2015). Biogotchi! an exploration of plant-based information displays. In 33rd annual ACM CHI: Extended Abstracts, pages 1139–1144.

Clark, C. (2022). Putting down roots: A growing millennial demographic interested in small plants. In Nursery & Garden Stores in the US. IBISWorld database.

Dou, X., Wu, C.-F., Lin, K.-C., Gan, S., and Tseng, T.-M. (2021). Effects of different types of social robot voices on affective evaluations in different application fields. International Journal of Social Robotics, 13:615–628.

Elings, M. (2006). People-plant interaction: the physiological, psychological and sociological effects of plants on people. In Farming for health, pages 43–55. Springer.

Hwang, S., Lee, K., and Yeo, W. (2010). My green pet: A current-based interactive plant for children. In Inter. Conf. on Inter. Des. and Children, pages 210–213.

Jolij, J. and Meurs, M. (2011). Music alters visual perception. PLOS ONE, 6(4):1.

Kuribayashi, S., Sakamoto, Y., Morihara, M., and Tanaka, H. (2007a). Plantio: an interactive pot to augment plants' expressions. In Internat. conf. on advances in computer entertainment technology, pages 139–142.

Kuribayashi, S., Sakamoto, Y., and Tanaka, H. (2007b). I/O plant: a tool kit for designing augmented human-plant interactions. In CHI'07 EA, pages 2537–2542.

Lewis, J. R. and Sauro, J. (2018). Item benchmarks for the system usability scale. J. of Usability Studies, 13(3).

Merritt, T., Hamidi, F., Alistar, M., and DeMenezes, M. (2020). Living media interfaces: a multi-perspective analysis of biological materials for interaction. Digital Creativity, 31(1):1–21.

Pu, L., Moyle, W., Jones, C., and Todorovic, M. (2019). The effectiveness of social robots for older adults: a systematic review and meta-analysis of randomized controlled studies. The Gerontologist, 59(1):e37–e51.

Robinson, H., MacDonald, B., Kerse, N., and Broadbent, E. (2013). The psychosocial effects of a companion robot: a randomized controlled trial. J. of the American Medical Directors Association, 14(9):661–667.

Roozenburg, N. F. and Eekels, J. (1995). Product design: fundamentals and methods. Wiley.

Sabra, C. (2016). Connecting to self and nature. Journal of Therapeutic Horticulture, 26(1):31–38.

Seow, O., Honnet, C., Perrault, S., and Ishii, H. (2022). Pudica: A framework for designing augmented human-flora interaction. In Aug. Humans 2022, pages 40–45.

Urquiza-Haas, E. G. and Kotrschal, K. (2015). The mind behind anthropomorphic thinking: attribution of mental states to other species. Animal Beh., 109:167–176.

Van der Veen, M. (2014). The materiality of plants: plant–people entanglements. World archaeology, 46(5):799–812.

Ziat, M., Chin, K., and Raisamo, R. (2020). Effects of visual locomotion and tactile stimuli duration on the emotional dimensions of the cutaneous rabbit illusion. In ACM ICMI 2020, pages 117–124.

Ziat, M. and Raisamo, R. (2017). The cutaneous-rabbit illusion: What if it is not a rabbit? In WorldHaptics, pages 540–545.
