Non-Invasive Load Recognition Model Based on CNN and Mixed Attention Mechanism

Chenchen Zhang¹, Yujun Song², Dong Wang³, Shifang Song², Xuesong Pan³,* and Lanzhou Liu¹

¹ Ocean University of China, Qingdao, China

² Qingdao Haier Air Conditioner Co., Ltd, Qingdao, China

³ Qingdao Haier Air Conditioner Co., Ltd, State Key Laboratory of Digital Household Appliances, Qingdao, China

Keywords: NILM, V-I_f Trajectories, Load Identification, Attention Mechanism.

Abstract: In recent years, deep learning has been widely applied in many fields, including load recognition. Machine learning methods such as SVM and K-means, as well as various neural network approaches, have shown promising results. However, because appliances of the same type can differ substantially from one another and each appliance may have multiple operating states, misidentifications often occur during load recognition. This paper therefore proposes a preprocessing method that transforms current-voltage data into V-I_f trajectories. It also presents a non-intrusive load recognition algorithm that combines a self-designed convolutional neural network (CNN), a hybrid attention mechanism (ECA-Net and the spatial attention mechanism, ECA-SAM), and a hybrid loss function (Center Loss and ArcFace, CA). Simulation experiments on the PLAID dataset demonstrate the effectiveness of this approach, which achieves an appliance identification accuracy of 99.28% and an F1-macro score of 0.9890.

1 INTRODUCTION

The concept of Non-Intrusive Load Monitoring (NILM) was first proposed by Professor Hart of the Massachusetts Institute of Technology (Hart, 1992). NILM aims to identify and monitor the individual electrical appliances in a household by analyzing the current and voltage waveforms in the power system. It can help households and businesses better understand their energy consumption, thereby improving energy efficiency and reducing energy costs. NILM can also be used in smart home systems and energy management systems to make energy management smarter and more efficient.

This study focuses on the load recognition module of NILM, with an emphasis on load identification methods. Using a series of deep learning techniques, it analyzes the usage patterns of common household appliances and accurately identifies their categories, which helps home users better understand their electricity consumption habits.

We propose a non-intrusive load recognition algorithm that incorporates a self-designed Convolutional Neural Network (CNN), a hybrid attention mechanism (ECA-Net and the spatial attention mechanism, ECA-SAM), and a hybrid loss function (Center Loss and ArcFace, CA). The algorithm is designed to strengthen the network's ability to extract load features while promoting intra-class compactness and inter-class separation, thereby improving load recognition.

2 RELATED WORK

Since the concept of non-intrusive load monitoring (NILM) was introduced, it has attracted significant attention from researchers both domestically and internationally, who have explored various methods to improve the effectiveness and practicality of NILM.

In 1995, Leeb et al. proposed a transient event detection algorithm for identifying loads (Leeb, 1995). In 2000, Cole et al. used current harmonics as load features and distinguished loads by computing the city-block and Hamming distances between their harmonics (Cole, 2000). In 2008, Suzuki et al. introduced an NILM method based on integer programming, formulating the detection problem as an integer quadratic programming problem to achieve non-intrusive appliance load monitoring (Suzuki, 2008).

Zhang, C., Song, Y., Wang, D., Song, S., Pan, X. and Liu, L.

Non-Invasive Load Recognition Model Based on CNN and Mixed Attention Mechanism.

DOI: 10.5220/0012274000003807

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 2nd International Seminar on Artificial Intelligence, Networking and Information Technology (ANIT 2023), pages 70-74

ISBN: 978-989-758-677-4

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

With the rapid development of deep learning, it has also been applied to NILM. In 2015, Kelly et al. first applied denoising autoencoder (DAE) models to the NILM problem and showed that they outperformed combinatorial optimization and factorial hidden Markov models (Kelly, 2015). In 2020, Lin et al. applied attention mechanisms to NILM and proposed two networks, MA-net and MAED-net: the former is based on multi-head attention, while the latter combines an encoder-decoder structure with multi-head attention (Lin, 2020).

3 METHOD

This section uses a hybrid attention mechanism to improve the performance of the convolutional neural network. Unlike CBAM, whose channel attention branch uses an SE-Net-style bottleneck, our design adopts the lighter ECA-Net channel attention mechanism in place of SE-Net.

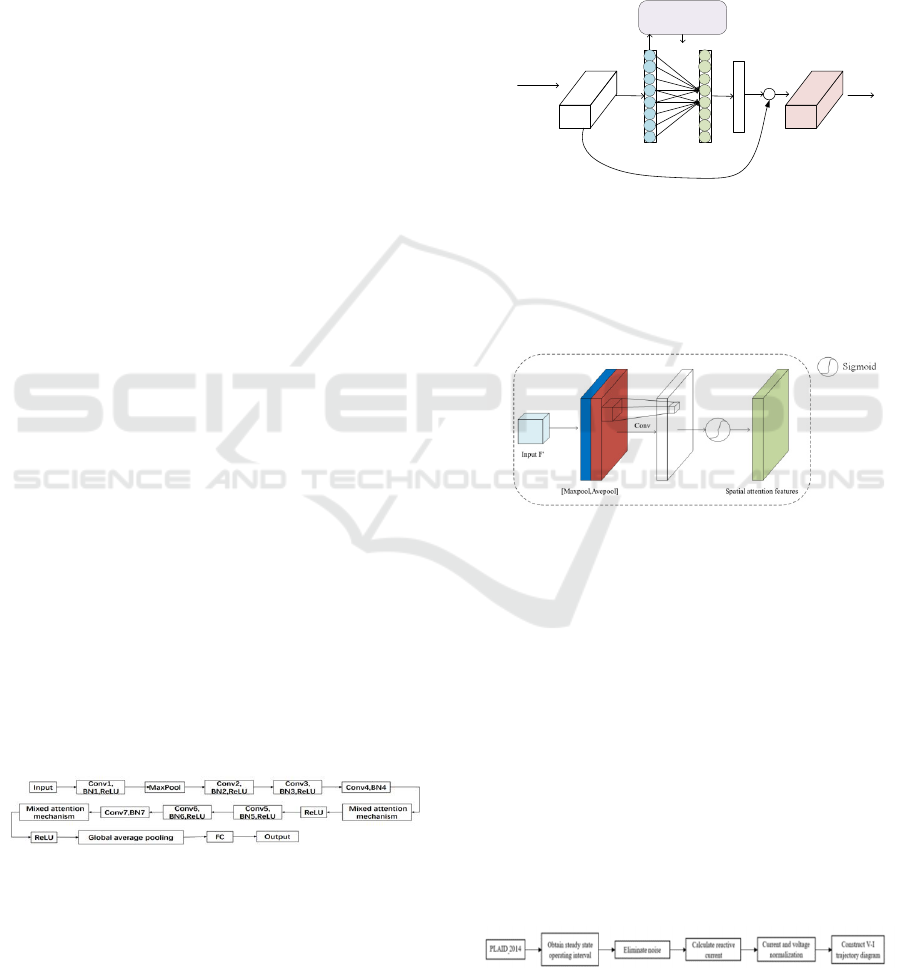

3.1 Network Architecture

In this study, the channel attention mechanism ECA-Net and the spatial attention mechanism SAM are incorporated into the network to build a non-intrusive load recognition model based on a CNN and hybrid attention, with the aim of enhancing the network's ability to extract load features. The network architecture is illustrated in Figure 1.

As shown in Figure 1, the channel attention mechanism and the spatial attention mechanism are added after the fourth and seventh convolutional layers, respectively, and the ECA-Net module is placed before the SAM module. This configuration defines the overall structure of the network model used in the experiments.

Figure 1: Network Architecture of Non-intrusive Load Recognition Model based on CNN and Hybrid Attention Mechanism.

3.2 Hybrid Attention Mechanism

ECA-Net, the channel attention mechanism: SE-Net reduces the channel dimension in its bottleneck, and this reduction discards channel detail. To address this issue, ECA-Net was introduced (Zhu, 2020). ECA-Net greatly reduces the number of parameters required by the channel attention mechanism while preserving the original channel dimension. As a result, it is more lightweight than SE-Net without sacrificing fine-grained channel information.

The structure of ECA-Net is depicted in Figure 2:

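[Figure 2 diagram: input X (C×H×W) → global average pooling (GAP, 1×1×C) → 1D convolution with adaptively selected kernel size k = ψ(C) (k = 5 in the example) → sigmoid σ (1×1×C) → channel-wise multiplication → output X̃ (C×H×W).]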

Figure 2: ECA-Net Architecture.
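As a rough illustration of Figure 2, the sketch below implements an ECA-Net forward pass in plain Python on a C×H×W feature map stored as nested lists. It follows the original ECA-Net design (global average pooling, a 1D convolution across neighbouring channels with kernel size k = ψ(C), a sigmoid, and channel-wise rescaling). The constants γ = 2 and b = 1 in ψ(C) are the ECA-Net defaults and are an assumption here, since this paper does not report them; the `weights` argument stands in for learned convolution parameters.

```python
import math
from typing import List

Matrix = List[List[float]]


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive kernel size k = psi(C): |(log2(C) + b) / gamma|, rounded to an odd value."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: List[Matrix], weights: List[float]) -> List[Matrix]:
    """Efficient channel attention on a C x H x W map; len(weights) is the kernel size k."""
    c, k = len(x), len(weights)
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel (1 x 1 x C).
    gap = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # 2) 1D convolution over neighbouring channels with zero padding (no dimensionality reduction).
    conv = []
    for i in range(c):
        s = 0.0
        for j in range(k):
            idx = i + j - pad
            if 0 <= idx < c:
                s += weights[j] * gap[idx]
        conv.append(s)
    # 3) Sigmoid turns each response into a channel weight in (0, 1).
    att = [1.0 / (1.0 + math.exp(-v)) for v in conv]
    # 4) Rescale every element of channel i by att[i]; the output keeps the input shape.
    return [[[att[i] * v for v in row] for row in x[i]] for i in range(c)]
```

With these defaults, ψ maps 64 channels to k = 3 and 256 channels to k = 5. Because the 1D convolution has only k weights, each module costs k parameters instead of the roughly 2C²/r of an SE-style bottleneck with reduction ratio r.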

The Spatial Attention Module (SAM) identifies where the most informative regions of the feature map lie, so that the network concentrates its processing on them. The structure of SAM is illustrated in Figure 3.

Figure 3: Spatial Attention SAM Architecture.
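The text does not spell out SAM's internals. Assuming it follows the spatial attention module of CBAM (Woo, 2018), which this paper also uses as a baseline, SAM pools across channels with both average and max pooling, convolves the resulting two-channel map with a k×k kernel (7×7 in CBAM), and applies a sigmoid to obtain one weight per spatial position. A plain-Python sketch of that computation follows; the 2×k×k `kernel` is a hypothetical stand-in for learned weights.

```python
import math
from typing import List

Matrix = List[List[float]]


def spatial_attention(x: List[Matrix], kernel: List[List[List[float]]]) -> List[Matrix]:
    """CBAM-style spatial attention on a C x H x W map; kernel has shape 2 x k x k."""
    h, w = len(x[0]), len(x[0][0])
    k = len(kernel[0])
    pad = k // 2
    # 1) Pool across channels at each pixel: an average map and a max map (2 x H x W).
    avg = [[sum(ch[r][c] for ch in x) / len(x) for c in range(w)] for r in range(h)]
    mx = [[max(ch[r][c] for ch in x) for c in range(w)] for r in range(h)]
    pooled = [avg, mx]
    # 2) k x k convolution with 'same' zero padding merges the two maps into one.
    att = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            s = 0.0
            for m in range(2):
                for dr in range(k):
                    for dc in range(k):
                        rr, cc = r + dr - pad, c + dc - pad
                        if 0 <= rr < h and 0 <= cc < w:
                            s += kernel[m][dr][dc] * pooled[m][rr][cc]
            # 3) Sigmoid gives one weight per spatial position.
            att[r][c] = 1.0 / (1.0 + math.exp(-s))
    # 4) Every channel is rescaled by the same spatial weight map.
    return [[[ch[r][c] * att[r][c] for c in range(w)] for r in range(h)] for ch in x]
```

Running ECA-Net first and SAM second, as in Section 3.1, means the spatial map is computed from features that have already been reweighted channel by channel.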

3.3 Constructing V-I Trajectory Diagram

The V-I trajectory is a widely used load characteristic in load recognition applications. V-I trajectories of different appliances differ mainly in the shape of their current. However, resistive appliances such as heaters and hair dryers produce similar V-I trajectories, which makes them difficult to tell apart. To address this, researchers proposed applying Fryze power theory to decompose the current and extract its reactive (non-active) component, thereby making V-I trajectories more distinguishable.
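Fryze's decomposition itself is short. Over one steady-state period, the active current is the part of i that is proportional to, and in phase with, v, scaled by the equivalent conductance G = P / V_rms²; the remainder i_f = i − G·v is the non-active (reactive) current. The sketch below assumes v and i are uniformly sampled over exactly one mains period; how the authors extract that period from PLAID is not described, and the two test signals are hypothetical.

```python
import math
from typing import List, Tuple


def fryze_decompose(v: List[float], i: List[float]) -> Tuple[List[float], List[float]]:
    """Split one period of current into Fryze active and non-active components."""
    n = len(v)
    p = sum(vk * ik for vk, ik in zip(v, i)) / n   # active power P: mean of v*i
    v_rms_sq = sum(vk * vk for vk in v) / n         # squared RMS voltage
    g = p / v_rms_sq                                # equivalent conductance G = P / V_rms^2
    i_active = [g * vk for vk in v]                 # proportional to and in phase with v
    i_f = [ik - ia for ik, ia in zip(i, i_active)]  # non-active (reactive) remainder
    return i_active, i_f


# Two synthetic one-period signals (hypothetical), 200 samples per period.
N = 200
v = [math.sin(2 * math.pi * k / N) for k in range(N)]
i_resistive = [0.5 * vk for vk in v]                           # in phase with v
i_shifted = [math.cos(2 * math.pi * k / N) for k in range(N)]  # 90 degrees out of phase
_, i_f_resistive = fryze_decompose(v, i_resistive)
_, i_f_shifted = fryze_decompose(v, i_shifted)
```

For the in-phase current, i_f is zero; for the quadrature current, P is zero, so essentially all of i ends up in i_f.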

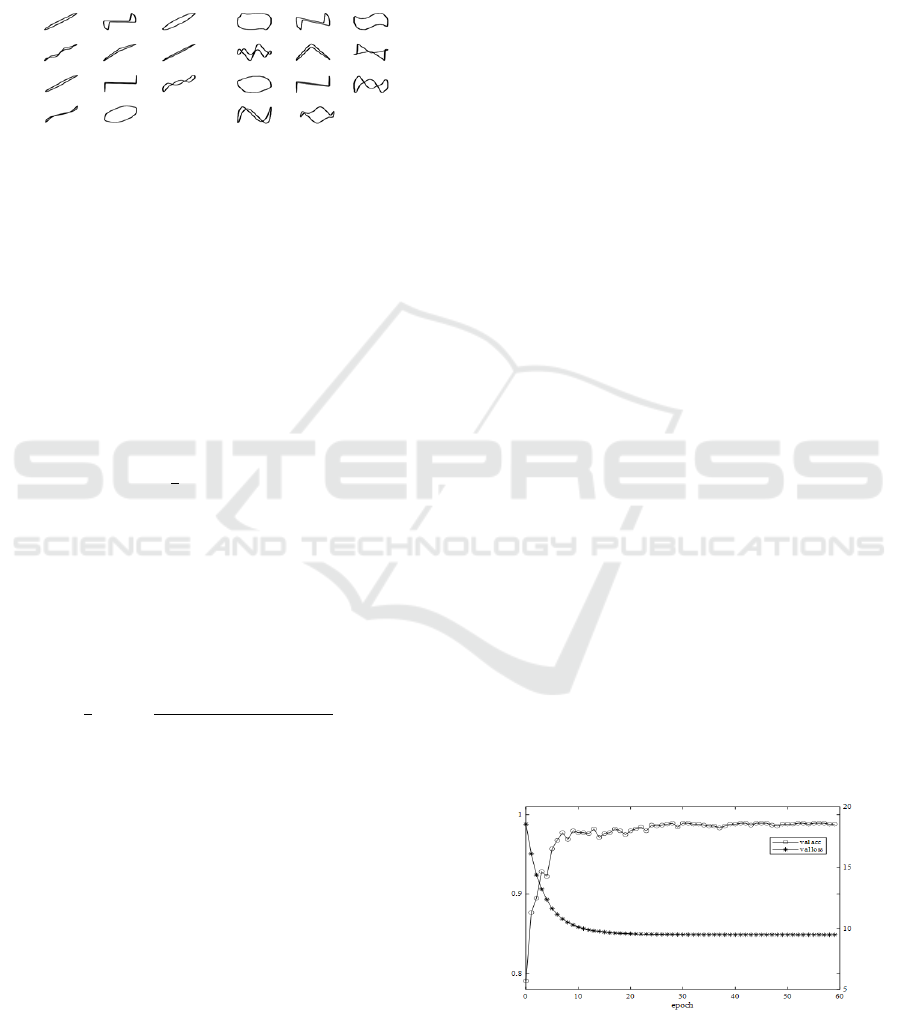

The construction process of the V-I_f trajectory is depicted in Figure 4:

Figure 4: V-I_f trajectory construction flowchart.
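The steps of the flowchart are not described in the text. One common way to turn a sampled trajectory into a CNN input, sketched below as an assumption rather than the authors' exact procedure, is to min-max normalize voltage and current separately, map each sample to a cell of an n×n grid, and mark the visited cells. The grid size of 32 is a hypothetical choice; for a V-I_f image, the current argument would be the Fryze non-active current instead of the raw current.

```python
from typing import List


def trajectory_image(v: List[float], i: List[float], size: int = 32) -> List[List[int]]:
    """Rasterize a (v, i) trajectory into a size x size binary image."""
    def to_pixels(x: List[float]) -> List[int]:
        lo, hi = min(x), max(x)
        span = (hi - lo) or 1.0  # a constant signal maps to a single row or column
        return [min(int((xk - lo) / span * size), size - 1) for xk in x]

    cols = to_pixels(v)  # voltage -> horizontal axis
    rows = to_pixels(i)  # current -> vertical axis
    img = [[0] * size for _ in range(size)]
    for r, c in zip(rows, cols):
        img[size - 1 - r][c] = 1  # flip rows so larger current appears higher in the image
    return img
```

Feeding in a current that is proportional to the voltage yields a single diagonal line, the characteristic V-I shape of a purely resistive load.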


Figure 5 shows the generated V-I and V-I_f trajectory images. From left to right, the appliances are: Air Conditioner, Fluorescent Lamp, Fan, Fridge, Hairdryer, Heater, Incandescent Light Bulb, Laptop, Microwave, Vacuum, and Washing Machine.

Figure 5: V-I and V-I_f trajectory diagrams.

Figure 5 shows that V-I_f trajectory images are more distinctive across appliances than V-I trajectory images, which makes V-I_f better suited as a load characteristic for load recognition.

3.4 Loss Function

Center Loss: The center loss function was proposed in 2016 (Wen, 2016). It reduces the intra-class distance by pulling samples of the same class toward a shared class center. The center loss is defined in (1):

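$$L_{center} = \frac{1}{2}\sum_{i=1}^{N}\left\lVert x_i - C_{y_i} \right\rVert_2^2 \tag{1}$$

where $x_i$ is the deep feature of the $i$-th sample in a mini-batch of size $N$, and $C_{y_i}$ represents the feature center of the $y_i$-th class.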
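A plain-Python sketch of (1), together with the per-batch center update described by Wen et al. (2016), in which each center moves toward the mean of the batch samples of its class. The update rate `alpha = 0.5` is a hypothetical value, since this paper does not report how its centers are updated; in a real model the features would come from the network's penultimate layer.

```python
from typing import Dict, List

Vector = List[float]


def center_loss(features: List[Vector], labels: List[int], centers: Dict[int, Vector]) -> float:
    """L_center = 1/2 * sum_i ||x_i - C_{y_i}||^2 over a mini-batch, Eq. (1)."""
    return 0.5 * sum(
        sum((xd - cd) ** 2 for xd, cd in zip(x, centers[y]))
        for x, y in zip(features, labels)
    )


def update_centers(features: List[Vector], labels: List[int],
                   centers: Dict[int, Vector], alpha: float = 0.5) -> Dict[int, Vector]:
    """Wen et al. style update: c_j <- c_j - alpha * sum_{y_i=j}(c_j - x_i) / (1 + n_j)."""
    new_centers = {}
    for j, c in centers.items():
        members = [x for x, y in zip(features, labels) if y == j]
        delta = [sum(cd - x[d] for x in members) / (1 + len(members)) for d, cd in enumerate(c)]
        new_centers[j] = [cd - alpha * dd for cd, dd in zip(c, delta)]
    return new_centers
```

After one update, the center of a class lies closer to its samples, so the loss for the same batch drops; this is the intra-class aggregation effect described above.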

ArcFace Loss: The ArcFace loss function improves on the softmax loss. It is a margin-based loss: after normalizing the feature vectors and the class weights, it adds the margin m directly to the angle between a feature and the weight of its true class, as shown in (2):

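$$L_{arcface} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{s\cos(\theta_{y_i}+m)}}{e^{s\cos(\theta_{y_i}+m)}+\sum_{j=1,\,j\neq y_i}^{n}e^{s\cos\theta_j}} \tag{2}$$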

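where $\theta_j$ is the angle between the normalized feature $x_i$ and the normalized weight $W_j$ of class $j$, $s$ is the feature scale, $n$ is the number of classes, and $N$ is the batch size. The range of $\theta_{y_i}$ is shown in (3):

$$\theta_{y_i} \in [0, \pi - m] \tag{3}$$

Here, $y_i$ represents the true class of sample $i$.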
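For a single sample, (2) reduces to a softmax cross-entropy over scaled cosine logits in which only the target logit receives the angular margin. The sketch below computes that per-sample term; the batch loss is its mean over N samples. The defaults s = 64 and m = 0.5 are the values used in the original ArcFace work and are only an assumption here, and the sketch omits the fallback that implementations typically add for when θ_{y_i} leaves the range in (3).

```python
import math
from typing import List


def arcface_loss(x: List[float], weights: List[List[float]], y: int,
                 s: float = 64.0, m: float = 0.5) -> float:
    """Per-sample ArcFace loss: additive angular margin m on the true-class angle."""
    def unit(vec: List[float]) -> List[float]:
        norm = math.sqrt(sum(t * t for t in vec))
        return [t / norm for t in vec]

    xn = unit(x)
    # cos(theta_j) between the normalized feature and each normalized class weight.
    cos = [sum(a * b for a, b in zip(xn, unit(w))) for w in weights]
    theta_y = math.acos(max(-1.0, min(1.0, cos[y])))  # assumed to stay in [0, pi - m]
    # The target logit uses cos(theta_y + m); the other classes keep cos(theta_j).
    logits = [s * math.cos(theta_y + m) if j == y else s * c for j, c in enumerate(cos)]
    # Numerically stable -log softmax of the target logit.
    mx = max(logits)
    log_sum = mx + math.log(sum(math.exp(z - mx) for z in logits))
    return log_sum - logits[y]
```

Setting m = 0 recovers a normalized softmax cross-entropy; any positive margin raises the loss for the same features, which pushes training toward larger angular gaps between classes.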

In this section, we combine the ArcFace loss function and the center loss function into a hybrid loss function that jointly guides the training of the network and improves the convergence speed of the model. The hybrid loss function (CA) is shown in (4):

$$L_{CA} = \lambda L_{arcface} + (1 - \lambda) L_{center} \tag{4}$$

Here, λ represents the hyperparameter that balances the center loss function and the ArcFace loss function.
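Equation (4) is a convex combination of the two losses. The snippet below evaluates it for hypothetical mini-batch values L_arcface = 2.0 and L_center = 0.5 with λ = 0.95, the value given in Section 4.3. In that setting the center term carries only a 5% weight, so the ArcFace term drives training and the center loss acts as a lighter regularizing term.

```python
def hybrid_loss(l_arcface: float, l_center: float, lam: float = 0.95) -> float:
    """L_CA = lam * L_arcface + (1 - lam) * L_center, as in Eq. (4)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * l_arcface + (1.0 - lam) * l_center


# Hypothetical loss values for one mini-batch: 0.95 * 2.0 + 0.05 * 0.5
total = hybrid_loss(2.0, 0.5)
```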

4 EXPERIMENT

4.1 Dataset

The PLAID dataset exhibits significant intra-class variation: loads of the same type come from different brands and operate under multiple conditions. This section therefore uses PLAID to construct V-I_f trajectory images for the experiments.

4.2 Evaluation Metrics

In this section, accuracy (ACC) and the macro-averaged F1 score (F1-macro) are used to evaluate the proposed non-intrusive load identification method based on CNN_ECA-SAM_CA. A bar chart is used for comparison to give a more intuitive view of model performance.
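For reference, the two metrics can be computed as follows. ACC is the fraction of correctly classified samples; F1-macro computes F1 = 2TP / (2TP + FP + FN) for each class and averages the per-class scores without weighting, so rare appliance classes count as much as common ones. This is an illustrative sketch, not the authors' evaluation code.

```python
from typing import List


def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_macro(y_true: List[int], y_pred: List[int]) -> float:
    """Unweighted mean of per-class F1 over every class seen in y_true or y_pred."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn  # guard against division by zero
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

For example, with true labels [0, 0, 1, 1, 2] and predictions [0, 1, 1, 1, 2], ACC is 0.8 while F1-macro is (2/3 + 4/5 + 1) / 3 ≈ 0.822, because each class contributes equally regardless of how many samples it has.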

4.3 Experiment Settings

During training, the batch size is set to 10 and the model is trained for 60 epochs. The initial learning rate is 0.001 and is decayed exponentially every 3 epochs with a decay rate of 0.9. The Adam algorithm is used as the optimizer. The hyperparameter λ in (4) is set to 0.95.
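The learning-rate schedule can be read as step-wise (staircase) exponential decay, lr(e) = 0.001 · 0.9^⌊e/3⌋ for epoch e. The sketch below assumes that reading and zero-based epoch indices over 60 epochs; the text does not say whether the decay is applied in discrete steps or continuously.

```python
def learning_rate(epoch: int, base_lr: float = 0.001,
                  decay_rate: float = 0.9, decay_every: int = 3) -> float:
    """Step-wise exponential decay: multiply by decay_rate once every decay_every epochs."""
    return base_lr * decay_rate ** (epoch // decay_every)


schedule = [learning_rate(e) for e in range(60)]
```

Under this reading the schedule matches PyTorch's `torch.optim.lr_scheduler.StepLR` with `step_size=3` and `gamma=0.9`, stepped once per epoch, and falls to roughly 1.35e-4 by epoch 59.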

5 RESULTS

Figure 6 shows the accuracy and loss of the proposed CNN_ECA-SAM_CA model on the validation set. The horizontal axis represents the training epoch, the left vertical axis the validation accuracy, and the right vertical axis the validation loss. Both metrics stabilize in the later stages of training.

Figure 6: Validation training graph of the CNN_ECA-SAM_CA model.

ANIT 2023 - The International Seminar on Artificial Intelligence, Networking and Information Technology


To validate the effectiveness and feasibility of the proposed load identification algorithm based on CNN_ECA-SAM_CA, we conduct ablation experiments on three models: CNN_ECA-SAM_AL, CNN_CBAM_CA, and CNN_ECA-SAM_CA. Here, ECA-SAM denotes the hybrid attention mechanism composed of ECA-Net and the spatial attention mechanism, AL denotes the ArcFace loss function, CBAM denotes the CBAM attention mechanism (Woo, 2018), and CA denotes the hybrid loss function composed of the center loss and ArcFace loss functions. These experiments aim to demonstrate the effectiveness of the proposed hybrid attention mechanism and hybrid loss function. In addition, comparative experiments are designed to show the advantage of V-I_f trajectories over V-I trajectories and to compare the proposed model with advanced load identification methods.

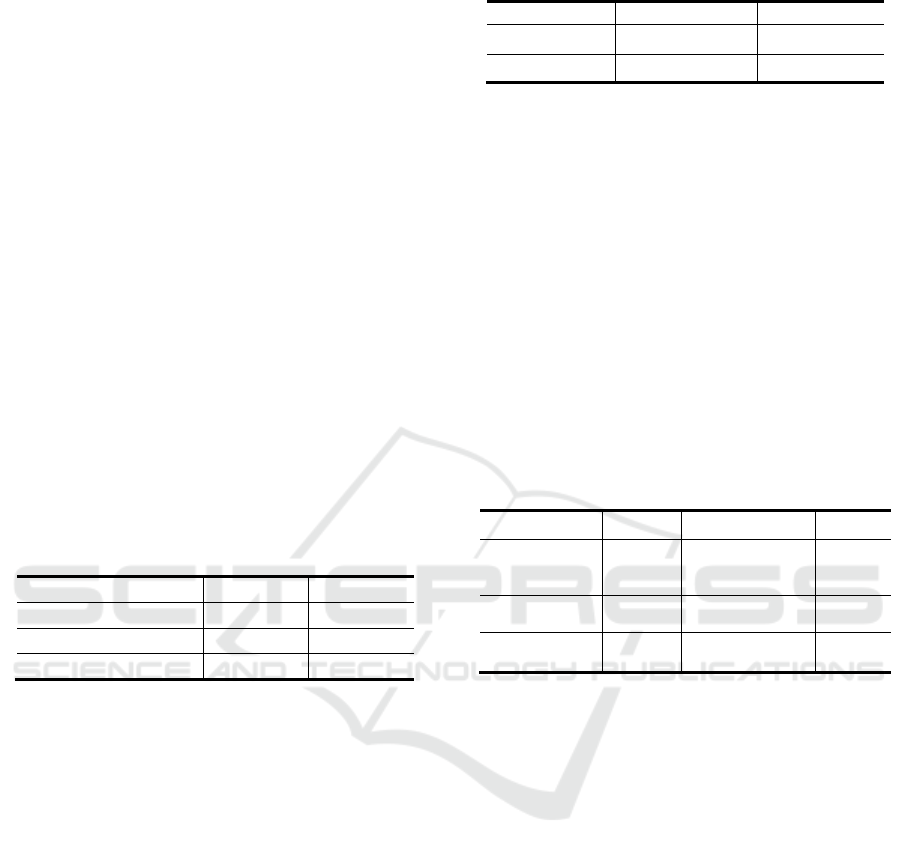

5.1 Experiment A

Ablation experiments are conducted to validate the effectiveness of the designed hybrid attention mechanism and hybrid loss function.

Table 1: Performance of three models on the PLAID dataset.

| Model | ACC | F1-macro |
|---|---|---|
| CNN_ECA-SAM_AL | 0.9892 | 0.9824 |
| CNN_CBAM_CA | 0.9892 | 0.9828 |
| CNN_ECA-SAM_CA | 0.9928 | 0.9890 |

Table 1 summarizes the results. The comparison between CNN_CBAM_CA and CNN_ECA-SAM_CA validates the proposed hybrid attention mechanism: CNN_CBAM_CA is lower than CNN_ECA-SAM_CA by 0.36 percentage points in accuracy and 0.62 percentage points in F1-macro, demonstrating the effectiveness of the hybrid attention mechanism for non-intrusive load identification. The comparison between CNN_ECA-SAM_AL and CNN_ECA-SAM_CA validates the proposed hybrid loss function: CNN_ECA-SAM_AL is lower than CNN_ECA-SAM_CA by 0.36 percentage points in accuracy and 0.66 percentage points in F1-macro, indicating the effectiveness of the hybrid loss function.

5.2 Experiment B

Comparative experiments are conducted to validate the effectiveness of the proposed V-I_f trajectories relative to V-I trajectories.

Table 2: Load identification results using V-I and V-I_f trajectories.

| Load Features | ACC | F1-macro |
|---|---|---|
| V-I | 0.9699 | 0.9530 |
| V-I_f | 0.9928 | 0.9890 |

Table 2 reports the accuracy and F1-macro of load identification with CNN_ECA-SAM_CA using V-I and V-I_f trajectories. Identification based on V-I_f trajectory images achieves higher accuracy (0.9928 vs. 0.9699) and F1-macro (0.9890 vs. 0.9530) than identification based on V-I trajectory images, indicating that V-I_f trajectories are better suited as features for load identification.

5.3 Experiment C

Table 3 compares the results of the proposed model with those of advanced load identification algorithms.

Table 3: Performance results of three models on the PLAID dataset.

| Literature | Method | Load feature | F1-macro |
|---|---|---|---|
| (De Baets, 2018) | CNN | V-I trajectory | 0.7760 |
| (Faustine, 2020) | CNN | Weighted recurrence graph | 0.8853 |
| This paper | CNN_ECA-SAM_CA | V-I_f trajectory | 0.9890 |

As Table 3 shows, the proposed model outperforms the methods of (De Baets, 2018) and (Faustine, 2020) in F1-macro, which verifies its effectiveness.

6 CONCLUSION

In this paper, we propose a non-intrusive load identification algorithm based on CNN_ECA-SAM_CA. It adds the ECA-Net attention mechanism and the spatial attention mechanism to a self-designed convolutional neural network and combines the ArcFace and center loss functions to achieve intra-class aggregation and inter-class dispersion. This mitigates the problem of large intra-class variation in load identification datasets. Simulation experiments on the PLAID dataset demonstrate that the method identifies appliances effectively and performs well on appliances that are easily confused with one another.

REFERENCES

Hart G W. Nonintrusive appliance load monitoring[J]. Proceedings of the IEEE, 1992, 80(12): 1870-1891.

Leeb S B, Shaw S R, Kirtley J L. Transient event detection in spectral envelope estimates for nonintrusive load monitoring[J]. IEEE Transactions on Power Delivery, 1995, 10(3): 1200-1210.

Cole A, Albicki A. Nonintrusive identification of electrical loads in a three-phase environment based on harmonic content[C]//Proceedings of the 17th IEEE Instrumentation and Measurement Technology Conference. IEEE, 2000, 1: 24-29.

Suzuki K, Inagaki S, Suzuki T, et al. Nonintrusive appliance load monitoring based on integer programming[C]//2008 SICE Annual Conference. IEEE, 2008: 2742-2747.

Kelly J, Knottenbelt W. Neural NILM: Deep neural networks applied to energy disaggregation[C]//Proceedings of the 2nd ACM International Conference on Embedded Systems for Energy-Efficient Built Environments. 2015: 55-64.

Lin N, Zhou B, Yang G, et al. Multi-head attention networks for nonintrusive load monitoring[C]//2020 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC). IEEE, 2020: 1-5.

B, Zhu P, et al. Supplementary material for ‘ECA-Net: Efficient channel attention for deep convolutional neural networks’[C]//Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, Seattle, WA, USA. 2020: 13-19.

Wen Y, Zhang K, Li Z, et al. A discriminative feature learning approach for deep face recognition[C]//European Conference on Computer Vision. Springer, Cham, 2016: 499-515.

Woo S, Park J, Lee J Y, et al. CBAM: Convolutional block attention module[C]//Proceedings of the European Conference on Computer Vision (ECCV). 2018: 3-19.

De Baets L, Ruyssinck J, Develder C, et al. Appliance classification using VI trajectories and convolutional neural networks[J]. Energy and Buildings, 2018, 158(PT.1): 32-36.

Faustine A, Pereira L. Improved appliance classification in non-intrusive load monitoring using weighted recurrence graph and convolutional neural networks[J]. Energies, 2020, 13.
