Investigating the Use of the Thunkable End-User Framework to Develop Haptic-Based Assistive Aids in the Orientation of Blind People

Alina Vozna (https://orcid.org/0009-0009-0179-6948), Giulio Galesi (https://orcid.org/0000-0001-6185-0795) and Barbara Leporini (https://orcid.org/0000-0003-2469-9648)

ISTI-CNR, Pisa, Italy

Keywords: Accessibility, Inclusive Tools, Accessible Tools, Inclusive Cultural Experience, Thunkable.

Abstract: Nowadays, mobile devices are essential tools for visiting cultural heritage sites, so it is very important to provide an inclusive cultural mobile experience for everyone. In this study, we investigate how to create accessible apps that enhance the experience of visually impaired people on outdoor cultural itineraries. In such a context, integrating specific features that improve accessibility for blind people may require advanced development skills. This study investigates whether a simple-to-use development environment, the Thunkable framework, which does not require specific technical competencies, can be exploited to easily develop an accessible app.

1 INTRODUCTION

The World Health Organization states that more than 2.2 billion people have visual impairments, of whom 1 billion have preventable or unaddressed problems (WHO, 2022). Visual impairment makes tasks that require spatial understanding challenging. Technical solutions now allow visually impaired people to perform everyday activities that were not previously possible (Jones et al., 2019; Wang and Yu, 2017), such as touchscreen interaction. Many applications also support orientation and mobility in both indoor and outdoor environments, but few help with cultural routes. Our study focuses on improving cultural guides for visually impaired visitors. We are developing tools, including an app, that provide tour information and accessibility details. This should improve the experience of visually impaired users and make them part of the tool's target audience.

A major barrier to the diffusion of accessibility is the high skill level required of the people who develop accessible digital solutions. Through a case study, we show how to create inclusive tools that are also easy to develop. Some design aspects related to the inclusion of accessible maps and haptic feedback are also considered in this study.

Blind and visually impaired people use screen readers with integrated speech synthesis to navigate websites or mobile applications. However, for the screen reader to work properly, the interface must be built so that all the information on the screen is read and recognized as faithfully as possible, without causing confusion or additional cognitive load. A focus group was conducted to gain insights from the blind and visually impaired community about digital tools that assist in visiting cultural routes. Participant feedback informed the development of an application to test the potential of the Thunkable framework (https://thunkable.com/#/) for creating accessible interfaces. Specifically, this paper investigates:

1) the Thunkable framework as an end-user development tool for developing an accessible app;

2) the implementation of haptic feedback as an assistive tool for exploring a digital map.


Vozna, A., Galesi, G. and Leporini, B.

Investigating the Use of the Thunkable End-User Framework to Develop Haptic-Based Assistive Aids in the Orientation of Blind People.

DOI: 10.5220/0012181900003584

In Proceedings of the 19th International Conference on Web Information Systems and Technologies (WEBIST 2023), pages 294-301

ISBN: 978-989-758-672-9; ISSN: 2184-3252

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 RELATED WORK

Smartphones and tablets are widely used by people of all ages, including people with disabilities. For visually impaired people in particular, they serve as assistive technologies that improve autonomy, safety, and social engagement, reducing isolation. In addition to the accessibility features built into mobile phones, there are apps tailored to assist blind users with various tasks, especially outside the home, such as navigating cities or shopping. These apps enable full use of the device through features such as voice commands and auditory feedback.

Bigham et al. (2010) developed VizWiz::LocateIt, a mobile phone app that addresses the challenge of handling excessive information in apps, which is often visually encoded through text and colors. This complexity poses usability issues for visually impaired users.

Innovative solutions include Blindhelper (Meliones and Filios, 2016), which aids navigation for blind people. It integrates a smartphone app with an embedded system for accurate pedestrian positioning via GPS, obstacle identification, and traffic light status detection. The app offers voice-guided navigation and user assistance.

Another notable application, BlindNavi (Chen et al., 2015), attempts to simplify navigation by combining all relevant information into a single application. This eliminates the need to switch between multiple apps while navigating. It uses technologies such as iBeacon to provide accurate navigation directions to visually impaired users. BlindRouteVision takes a different approach, using a smartphone app to improve pedestrian navigation for visually impaired people without requiring assistance. This application detects the status of traffic lights and identifies obstacles in the user's path to ensure safer navigation. Augmented reality has also made strides in helping visually impaired people. One mobile application uses augmented reality to suggest optimal routes while taking safety into account. It evaluates predefined parameters to discard routes that pose a potential risk to the mobility of visually impaired people (Medina-Sanchez et al., 2021). In public transportation, an app has been developed to address a common challenge for visually impaired people: knowing when to signal a stop on public transit. This app informs users of their location along the route, giving them more independence (Lima et al., 2018). Another application uses a camera and voice messages to identify objects in real time and report their distance and direction relative to the camera (Meenakshi et al., 2022).

Several factors must be considered when designing a website or application, such as alternative text, appropriate color contrast, and the use of markup instead of images to convey information. The W3C codifies these factors in its accessibility guidelines (W3C, 2023). The goal of this work is to design useful tools for the accessible use of itineraries and of cultural and other routes.

3 METHOD

In this work, we assessed the accessibility support of the Thunkable app development framework. Thunkable offers a 'Design' section for laying out the UI and a 'Blocks' section for programming the behavior of components. To test the prototype on a smartphone, the Thunkable Live application must be installed on the device. The main purpose of this work is to find out whether the framework enables developers to create accessible interfaces. Features such as alternative descriptions, labels for buttons and links, and haptic feedback were therefore considered in the design. Design specifications were gathered by involving potential end users, who described their expectations and preferences for the tools that should be available along the itinerary.

A prototype app was then designed and developed with the Thunkable framework. To make the itinerary as inclusive as possible, QR codes were used to make the traditional information panels along the itinerary usable by blind people. This is intended to enrich the user experience and to offer practical guidance to planners.

4 THE APP PROTOTYPE

4.1 User Requirements

To gather information from visually impaired and blind users about their preferences and expectations for the application, a series of remote focus groups was organized. Ten users participated: six blind and four visually impaired. The focus groups addressed three topics. The first was outdoor mobility, in the context of walking with a mobile navigator/orienteering aid. The second was orientation, meaning the ability to explore a digital map and how the user perceives it. The third was user experience, meaning how different technologies, from computers to mobile phones, are used in everyday environments.


Some specifications for interacting with the application were discussed with users. These include:

- a simple and direct navigation menu;
- a help button that is always available to assist the user in navigating;
- various search options (voice, keyboard input, and a drop-down menu).

To be accessible, the app must meet two conditions. First, correct labelling must allow the screen reader to read all icons, buttons, and text. Second, every user interface element must expose semantics, so that the user knows the function of each element and, especially, how to interact with it.

The preferences and expectations of blind users can be summarized as follows.

In terms of mobility, users mostly prefer vibration-based instructions, although these could be challenging for people unfamiliar with haptic technology. Instructions on how to use and adjust the vibrations could help. Preferences vary: some prefer continuous vibrations, while others opt for subtle audible cues. Warning signals for distances, stops, benches, crosswalks, and traffic lights are critical. Trail verification, which includes a star-based rating system (from 1 to 5) and customizable text input, is essential for wayfinding. Marking points of interest (POIs) with distinct vibrations or voice notifications is beneficial, as are recognizable vibrations when a POI is reached. Participants also showed great interest in testing Thunkable's custom haptic capabilities during map development.

The application is not intended only for blind people, so a questionnaire (via Google Forms) was also administered to understand whether and how certain functions and features are useful for sighted people. The questions covered:

- user characterization (gender, age, how often they hike);
- information that might be useful for viewing the trail and points of interest;
- the type of feedback preferred for detecting POIs;
- interest in wearing a device that provides more information.

The results showed an overlap in desired features between the two groups. For example, half (50%) of the sighted users expressed interest in timely information and in having content read aloud rather than reading it from the screen.

4.2 App Architecture

The app was designed to facilitate user interaction

and navigation through content with a simple and

clear layout. Our goal was to develop an accessible

mobile application that would allow visually

impaired users to interact via voice commands and

multi-touch. We designed the user interface with

2

https://www.airtable.com/

high-contrast background and foreground colors so

that visually impaired users could better identify

components on the screen. We developed the

application so that it could be used in conjunction

with the screen reader by adding a brief description of

the components that users could interact with.

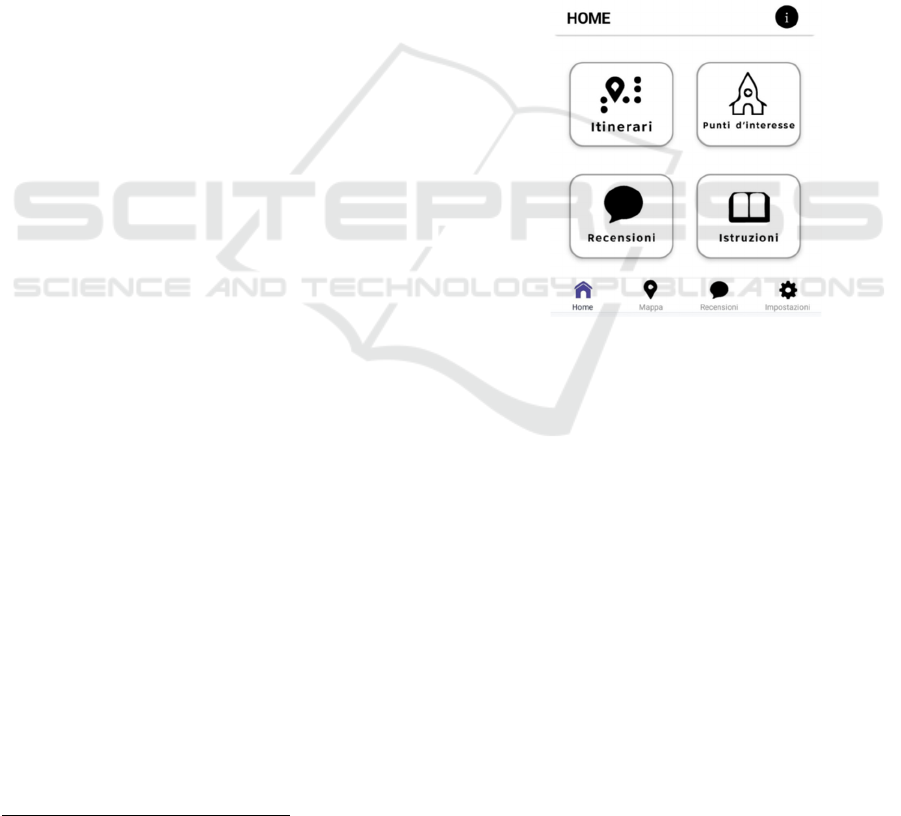

Homepage. On the home page, users will

immediately find the most important elements,

organised into four macro sections: Itineraries, Point

of Interest, Reviews and Instructions. A navigation

menu at the bottom of the app is very useful to

navigate within the different pages without getting

lost, with the possibility to return to the home page by

simply clicking on the left button. The menu is

divided into four main pages: Home, Map, Reviews

and Settings (see figure 1).

Figure 1: Screenshot of the home page.

Itineraries. In this section, the user can select an item

from a list of itineraries, with the possibility of

performing a voice search, entering the name in the

search box, or selecting by location: Toscana,

Liguria, Sardegna, Corsica, PACA (Provenza-Alpi-

Costa Azzurra). To implement the list, the 'Data

viewer list' component was added, which allows to

link a data source and select which data to display. In

the case of this project, it was decided to place all the

data in Airtable

2

, a spreadsheet that can be used to

create data tables in various formats. The information

to be displayed included the title of the trail, a subtitle

representing the region in which it is located, and an

image of the trail map. Each itinerary opens a

description page with information on name, length,

altitude, routes and navigation (for download).
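The search options described for the itinerary list boil down to a filter over the Airtable records. The following minimal Python sketch assumes that each record carries a `title` (trail name) and a `subtitle` (region), mirroring the fields shown in the Data Viewer List. The function name and the sample trails are hypothetical, and this is not the prototype's Blocks code.

```python
from typing import Optional

# Hypothetical records shaped like Airtable rows: 'title' is the trail name,
# 'subtitle' is the region shown under it in the Data Viewer List.
ITINERARIES = [
    {"title": "Sentiero delle Pievi", "subtitle": "Toscana"},
    {"title": "Sentiero del Mare", "subtitle": "Liguria"},
    {"title": "Via dei Colli", "subtitle": "Toscana"},
]


def filter_itineraries(records: list, query: str = "",
                       region: Optional[str] = None) -> list:
    """Keep records whose title contains `query` (case-insensitive) and,
    if `region` is given, whose subtitle equals that region.

    The same filter serves typed input and the text returned by voice search."""
    q = query.strip().casefold()
    return [
        rec for rec in records
        if (region is None or rec["subtitle"] == region)
        and (not q or q in rec["title"].casefold())
    ]
```

For example, `filter_itineraries(ITINERARIES, region="Toscana")` keeps the two Tuscan trails, and a query of `"  MARE "` matches only "Sentiero del Mare". The search box and the microphone can feed one function, so both search paths behave identically.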


Point of Interest. In this section, the user can choose from a list of places of interest, with the same search options plus selection by category: Church, Hermitage, Parish. The Data Viewer List was also used here to display the data from Airtable. Unlike the itinerary list, it shows an extra text alongside the title and subtitle, giving the POI category. The description page displays the name, pictures, five accessibility icons and five icons for the means of transport that can be used to reach the place, as illustrated in Figure 6.

Reviews. In the "Reviews" section (see Figure 2), the user can see all the comments left by other users. The preview highlights the name of the route and the reviewer's type of disability. Tapping a comment opens a page with the comment and the rating (stars received) for each category: Route, Difficulty, Services, Accessibility.

Figure 2: Screenshot of the Review page.
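Each review holds a 1 to 5 star rating for the four categories listed above. A per-route summary could simply average these ratings. The Python sketch below assumes a dict-based review format; the format and the sample data are hypothetical, not taken from the prototype or the study.

```python
from statistics import fmean

CATEGORIES = ("Route", "Difficulty", "Services", "Accessibility")


def average_ratings(reviews: list) -> dict:
    """Average the 1-5 star ratings per category across all reviews.

    Each review is assumed to look like {"route": ..., "ratings": {...}};
    categories that no review has rated are left out of the summary."""
    summary = {}
    for category in CATEGORIES:
        stars = [r["ratings"][category] for r in reviews if category in r["ratings"]]
        if any(not 1 <= s <= 5 for s in stars):
            raise ValueError(f"{category}: ratings must be between 1 and 5")
        if stars:
            summary[category] = round(fmean(stars), 1)
    return summary


# Hypothetical sample data, not results from the study.
reviews = [
    {"route": "Sentiero del Mare",
     "ratings": {"Route": 5, "Difficulty": 3, "Services": 4, "Accessibility": 4}},
    {"route": "Sentiero del Mare",
     "ratings": {"Route": 4, "Difficulty": 2, "Accessibility": 5}},
]
```

Missing categories are skipped rather than counted as zero. This way, a review that leaves "Services" unrated does not drag that category's average down.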

Instructions. Finally, the 'Instructions' button is designed to help users navigate each page. Besides this button on the home page, a small button in the upper right corner gives access to the instructions at any time. The tutorials are organized by page: the page titles are listed, and tapping a title displays its explanatory text. The buttons follow the app's color palette, i.e., white text on a purple background.
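Whether a color pair such as white on purple is "high contrast" can be checked with the WCAG contrast-ratio formula. The formula compares the relative luminances of the two colors as (L1 + 0.05) / (L2 + 0.05), with L1 the lighter one. WCAG level AA requires at least 4.5:1 for normal text and 3:1 for large text. The paper does not give the exact purple, so `#6A1B9A` below is a hypothetical stand-in. This Python sketch implements the WCAG definition and is not part of Thunkable.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel value to linear light."""
    c = channel / 255
    # WCAG 2.2 uses the threshold 0.04045; older versions cite 0.03928.
    # Both give identical results for 8-bit channel values.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG relative luminance of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter of the two colors."""
    l1, l2 = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG 2.x level AA: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

Black on white gives the maximum ratio of 21:1. White on the hypothetical purple above passes AA comfortably. Running such a check on the real palette would turn "high contrast" into a verifiable claim.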

4.3 Accessibility Features

Blind and visually impaired people use a screen reader with integrated speech synthesis to navigate web portals or mobile devices. For the screen reader to work properly, however, the app must be built so that all information on the screen is read and recognized as faithfully as possible. Following the W3C Web Content Accessibility Guidelines (WCAG) 2.2 allowed us to make the application suitable for visually impaired users and others. Based on users' preferences and expectations, the following accessibility features were implemented in the prototype app. This allowed us to test whether the Thunkable development environment supports the inclusion of such features.

Microphone. Searching with the microphone is a useful navigation option for all user categories. To enable it, the voice recognition component was integrated. This invisible component listens to the user's speech and returns a text value once the entire sentence has been spoken. It stops listening when the user stops speaking.

Figure 3: Microphone usage code.

As illustrated in Figure 3, the search box's input text ('Text's input text') is set to the value returned by the speech recognizer. As soon as the user finishes speaking, the text therefore appears in the search box. When the user taps the microphone once, a short vibration signals that it is a record button. If the button is held down instead, a longer vibration signals that recording is possible. Vibration alone is not enough to convey this information, so the feedback is combined with voice output for better understanding.
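The microphone behaviour pairs each gesture with a vibration and a spoken message, and it copies the recognizer's final text into the search box. Below is a minimal Python model of that logic. The durations, messages and function names are hypothetical; the paper only states that a tap gives a short vibration, that a long press gives a longer one, and that both come with voice output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    vibration_ms: int  # length of the vibration pulse
    speech: str        # message spoken together with the vibration


# Hypothetical values: short pulse on tap, longer pulse on long press.
MIC_FEEDBACK = {
    "tap": Feedback(60, "Microphone, record button."),
    "long_press": Feedback(300, "Recording available, speak now."),
}


def on_mic_gesture(gesture: str) -> Feedback:
    """Return the combined haptic and voice feedback for a gesture."""
    return MIC_FEEDBACK[gesture]


def on_speech_result(search_box: dict, recognized_text: str) -> None:
    """Copy the recognizer's final text into the search box (cf. Figure 3)."""
    search_box["text"] = recognized_text.strip()
```

Keeping the vibration and the spoken message in one record ensures that no gesture can produce a vibration without its voice counterpart, which is the redundancy the paragraph above calls for.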

Page Structure. Navigation between pages becomes difficult when information is arranged without a logical structure. Moreover, too much information on a single page causes so much confusion that users abandon the page in frustration. It is therefore important to arrange all elements and information in a simple, easy-to-understand way, to keep pages simple, and to move excess information elsewhere. Additional information can be revealed via buttons if the user wants to go deeper, which saves the time otherwise spent listening to it. Finally, on the itinerary page, the information about altitudes and elevations was restructured so that the screen reader can read it more easily, avoiding columns that are difficult for the screen reader to interpret.
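Instead of a multi-column table, which a screen reader may announce cell by cell without context, each row can become a self-contained sentence. The following minimal Python sketch uses hypothetical waypoint names, altitudes in metres, and an illustrative total-ascent summary line. It is not the prototype's implementation.

```python
def altitude_sentences(rows: list) -> list:
    """Turn (waypoint, altitude in metres) pairs into one sentence per row,
    followed by a summary of the total ascent between consecutive points."""
    lines = [f"{name}: altitude {alt} metres." for name, alt in rows]
    # Sum only the uphill segments between consecutive waypoints.
    ascent = sum(max(0, nxt - cur) for (_, cur), (_, nxt) in zip(rows, rows[1:]))
    lines.append(f"Total ascent: {ascent} metres.")
    return lines


# Hypothetical trail profile.
profile = [("Start", 120), ("Pieve", 310), ("Summit", 450)]
```

Each sentence repeats its waypoint name, so it still makes sense when a screen reader user jumps to it directly.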

Alternative Text/Description. According to the W3C guidelines, alternative text must be provided for images, videos, graphics and buttons. Thunkable offers no way to add alternative text to images, so all images were converted to buttons. A button can carry text that is not visible on the page but is recognized by the screen reader and read to users. For accessibility, each button has a text that describes its function and explains what it depicts.
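The images-to-buttons workaround lends itself to a simple audit rule: no bare images, and no buttons without a label. The following Python sketch applies that rule to a hypothetical component list. The dict format (`id`, `type`, `label`) is an assumption made for illustration, not a Thunkable export format.

```python
def accessibility_issues(components: list) -> list:
    """Flag components a screen reader could not announce meaningfully:
    bare images (no alternative text in Thunkable) and unlabelled buttons."""
    issues = []
    for comp in components:
        label = (comp.get("label") or "").strip()
        if comp["type"] == "Image":
            issues.append(f"{comp['id']}: image has no alternative text; "
                          "use a labelled button instead")
        elif comp["type"] == "Button" and not label:
            issues.append(f"{comp['id']}: button has no label for the screen reader")
    return issues


# Hypothetical screen description.
screen = [
    {"id": "trailMap", "type": "Image"},
    {"id": "trailMapButton", "type": "Button",
     "label": "Map of the trail from the village to the hermitage"},
    {"id": "playButton", "type": "Button", "label": "  "},
]
```

A whitespace-only label is treated as missing, because a screen reader would announce such a button without any meaningful name.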

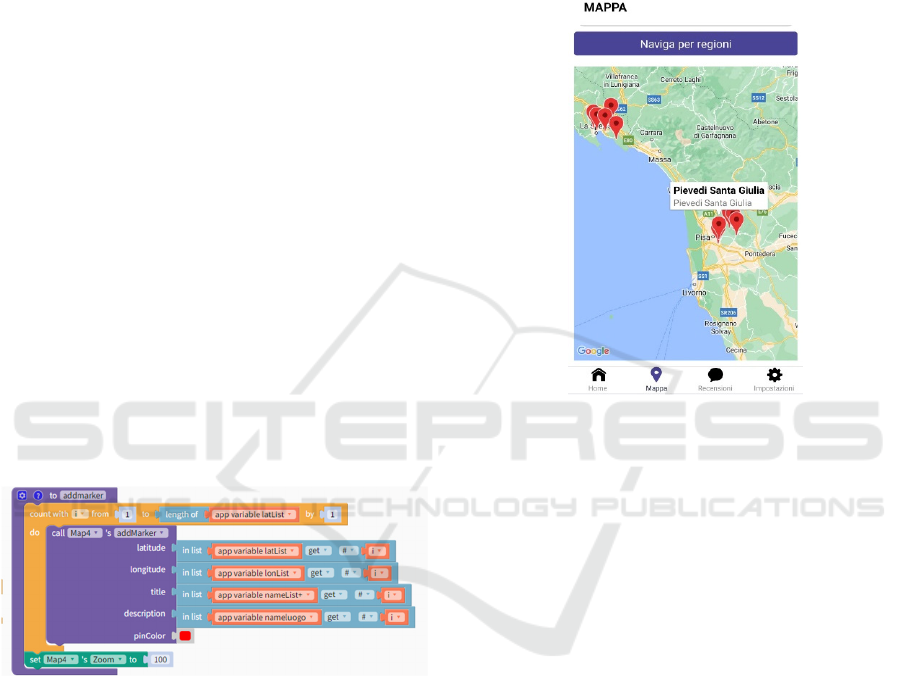

Digital Map. The biggest challenge was making the map accessible, as Thunkable does not provide the tools to do so. To make the map more user-friendly, the first step was a pop-up prompting the user to activate GPS. Once GPS is activated, the code starts the location sensor, which detects the user's current position and shows it on the map with a differently colored marker. The markers for all points of interest in the different locations are then displayed, as illustrated in Figure 4.

Figure 4: Example of code to add marker.
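The marker logic described above has two parts: one marker in a distinct color for the user's position, available only once GPS is on, and one marker per POI. It can be modelled in a few lines of Python. The colors, field names and sample POIs are hypothetical, and real marker creation happens through Thunkable's map component, whose properties the paper does not list.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Marker:
    lat: float
    lon: float
    title: str
    color: str


USER_COLOR = "blue"  # hypothetical: distinct color for the user's position
POI_COLOR = "red"    # hypothetical: shared color for all POI markers


def build_markers(user_pos: Optional[Tuple[float, float]], pois: list) -> list:
    """User marker first (only if GPS gave a position), then one per POI."""
    markers = []
    if user_pos is not None:
        markers.append(Marker(user_pos[0], user_pos[1], "Your position", USER_COLOR))
    markers.extend(Marker(p["lat"], p["lon"], p["name"], POI_COLOR) for p in pois)
    return markers


# Hypothetical POIs.
pois = [
    {"name": "Pieve A", "lat": 43.5, "lon": 10.5},
    {"name": "Eremo B", "lat": 44.1, "lon": 9.8},
]
```

If the user declines the GPS prompt, `build_markers(None, pois)` still shows the POIs, so the map remains usable without location access.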

As illustrated in Figure 5, the 'Navigate by Region' button lets the user select one of the six regions, which avoids moving around the screen and losing control of the map. When a region is selected, the map zooms in on it and shows its points of interest. Tapping a POI triggers a distinctive double vibration together with speech synthesis that announces its name (Paratore and Leporini, 2023).
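Zooming to a region amounts to computing a viewport that contains all of that region's POIs. Below is a minimal Python sketch that returns a center and a latitude/longitude span in degrees. The padding, the minimum span for a single POI and the sample coordinates are hypothetical. How the result maps onto Thunkable's map properties is not shown in the paper.

```python
def region_viewport(pois: list, region: str, padding: float = 0.1,
                    min_span: float = 0.01) -> tuple:
    """Return ((center_lat, center_lon), (lat_span, lon_span)) in degrees
    covering every POI of `region`, enlarged by `padding` on each side.

    Antimeridian wrap-around is ignored; it does not matter for these regions."""
    pts = [(p["lat"], p["lon"]) for p in pois if p["region"] == region]
    if not pts:
        raise ValueError(f"no points of interest in region {region!r}")
    lats = [lat for lat, _ in pts]
    lons = [lon for _, lon in pts]
    center = ((min(lats) + max(lats)) / 2, (min(lons) + max(lons)) / 2)
    # A region with a single POI would have zero span; fall back to min_span.
    lat_span = max((max(lats) - min(lats)) * (1 + 2 * padding), min_span)
    lon_span = max((max(lons) - min(lons)) * (1 + 2 * padding), min_span)
    return center, (lat_span, lon_span)


# Hypothetical POIs.
POIS = [
    {"name": "Pieve A", "lat": 43.0, "lon": 10.0, "region": "Toscana"},
    {"name": "Eremo B", "lat": 44.0, "lon": 11.0, "region": "Toscana"},
    {"name": "Chiesa C", "lat": 44.3, "lon": 8.9, "region": "Liguria"},
]
```

Selecting a region from a list replaces free panning, so a blind user never has to search the screen for the area of interest.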

Dark Screen Mode. Dark mode, or dark theme, uses negative contrast polarity: the text and background colors are inverted, giving light text on a dark background. It is an excellent aid for visually impaired people, because the dimmed screen helps them see and read content and reduces eye fatigue. The questionnaire administered to blind and visually impaired users showed that most visually impaired users prefer dark mode. Their main reason is that sunlight reflecting off the screen otherwise prevents them from viewing the content. This mode was therefore also included in the project.

Figure 5: Map showing the points of interest and the "Navigate by Region" button.

Haptic. Haptic feedback, often combined with sound, is critical for informing users about the outcome of their actions. This project focused on optimizing such feedback for assistive smartphone technologies, primarily through vibration and sound. The design mainly aimed to make interactions more effective, especially for blind users. To make vibration informative for blind users, a combination of adaptive vibration and audio feedback was considered. A specific example is the use of double vibration patterns with a short pause to distinguish interactions. These vibrations were complemented by clear explanations in the app's user interface to enhance usability. The study also explored integrating auditory cues with haptic feedback, as the two modalities can convey information synergistically. This hybrid approach is evident in voice search, where different vibrations combined with voice instructions improved users' understanding of the available actions.

Another example of audio support is the "play or pause" function on the page describing points of interest (POIs). This feature consists of two buttons, "Play" and "Stop", which let the user start the voice output of the description text or pause it if needed. These buttons were added because not all users rely exclusively on screen readers, so they serve the app's different user groups and their different preferences. The feature benefits not only visually impaired people but all users.
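A vibration pattern such as the "double vibration with a short pause" is often encoded as an alternating list of off/on durations in milliseconds. Android's waveform timings use this convention, with the first entry being the delay before the first pulse. The following Python sketch builds such lists and pairs the POI tap with its spoken name. The durations and the event mapping are hypothetical, and the sketch does not use Thunkable's own vibration block.

```python
def vibration_pattern(pulses: int, pulse_ms: int = 80, gap_ms: int = 120,
                      delay_ms: int = 0) -> list:
    """Android-style timings [off, on, off, on, ...] in milliseconds,
    starting with the delay before the first pulse."""
    if pulses < 1:
        raise ValueError("at least one pulse is required")
    timings = [delay_ms, pulse_ms]
    for _ in range(pulses - 1):
        timings += [gap_ms, pulse_ms]
    return timings


# Hypothetical mapping of interactions to patterns.
FEEDBACK = {
    "button_tap": vibration_pattern(1),  # single short pulse
    "poi_tap": vibration_pattern(2),     # double pulse with a short pause
}


def on_poi_tap(poi_name: str) -> tuple:
    """Pair the double vibration with the POI name to be spoken by TTS."""
    return FEEDBACK["poi_tap"], poi_name
```

With the default values, the POI pattern is `[0, 80, 120, 80]`: two 80 ms pulses separated by a 120 ms pause. The different pulse counts let users tell the two interactions apart without looking at the screen.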

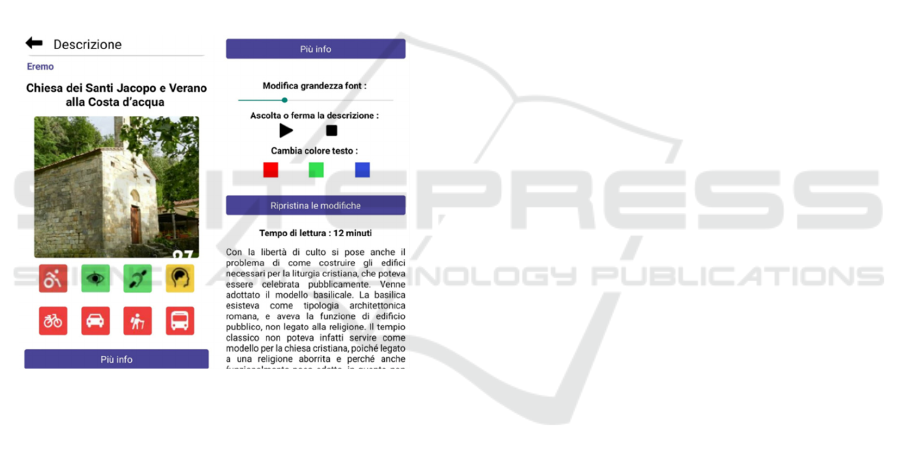

Customization. A button on the description page of every POI lets the user change the text size, which starts at 18 by default and goes up to 50. The description can also be read aloud using a text-to-speech function. Finally, the text color can be changed. This feature is very useful not only for visually impaired people but also for people who are color blind or have other visual impairments.

Figure 6: Screenshot of the description POI page.
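The text-size control has fixed bounds, from a default of 18 up to 50, so each press can be clamped to that range. The Python sketch below uses the paper's bounds. The step size is a hypothetical value, and the paper does not state the unit of the sizes.

```python
MIN_SIZE, MAX_SIZE = 18, 50  # bounds stated in the paper (default 18, max 50)
STEP = 4                     # hypothetical increment per button press


def next_text_size(current: int, step: int = STEP) -> int:
    """Change the text size by `step` (negative to shrink), clamped to the range."""
    return max(MIN_SIZE, min(MAX_SIZE, current + step))
```

Clamping means repeated presses at either end leave the size unchanged, rather than producing text that is unreadably small or too large to fit the layout.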

5 EVALUATION

5.1 User Testing

In collaboration with the Italian Association of the Blind (Unione Italiana Ciechi e Ipovedenti), a test was conducted to evaluate the use of the prototype by visually impaired people. During the tests, the difficulties users encountered were observed and analyzed to identify possible problems and find ways to improve the application and its functions.

An Android smartphone with the Thunkable Live app installed served as the test device. This made it possible to test live all the components described in the previous section.

Tasks. To evaluate usability, a series of goal-oriented tasks was defined for users to complete. For each task, the steps taken, the task duration, and whether the task was successfully accomplished were recorded. Six tasks were proposed to each user:

1. From the 'Map' section, navigate by choosing a region and find the POIs of the selected region.

2. On the 'POI' page, filter the search by selecting the "Church" category, choose one POI and read its description page.

3. Search for POIs via the search box.

4. On the 'Itineraries' page, search for a route via voice search, select the route and read the resulting page.

5. On an itinerary's description page, add a user review.

6. Read the reviews added by other users.

SUS (System Usability Scale) (Sauro, 2011). After completing the tasks, users filled in a short questionnaire, the System Usability Scale. The SUS is a 10-item questionnaire with five response options, where 1 indicates "strongly disagree" and 5 indicates "strongly agree". The items are as follows:

1. I think that I would like to use this system frequently.

2. I found the system unnecessarily complex.

3. I thought the system was easy to use.

4. I think that I would need the support of a technical person to be able to use this system.

5. I found the various functions in this system were well integrated.

6. I thought there was too much inconsistency in this system.

7. I would imagine that most people would learn to use this system very quickly.

8. I found the system very cumbersome to use.

9. I felt very confident using the system.

10. I needed to learn a lot of things before I could get going with this system.
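The standard SUS scoring rule converts each participant's ten answers into a score from 0 to 100. Odd-numbered (positively worded) items contribute the response minus 1. Even-numbered (negatively worded) items contribute 5 minus the response. The sum of these contributions is multiplied by 2.5. The Python sketch below implements that rule; the example responses in the test are hypothetical, not the participants' data.

```python
def sus_score(responses: list) -> float:
    """Score one SUS questionnaire: ten answers on a 1-5 scale -> 0-100."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def mean_sus(all_responses: list) -> float:
    """Average SUS score over several participants."""
    return sum(sus_score(r) for r in all_responses) / len(all_responses)
```

Answering 3 to every item yields 50. Sauro (2011) reports that the average SUS score across many studies is about 68, a common reference point for reading a "positive average".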

5.2 Participants

Only 5 users gave their consent to participate in the test: 4 blind and 1 visually impaired, aged 45 to 60. Four users were male and one female. Two of the blind participants (one male and one female) are very expert users, while the others consider themselves intermediate users.

5.3 Results

During the test, completion time was measured and the interaction with the product was analyzed. All tasks were completed smoothly, taking on average about five minutes for the whole set. Users showed no major difficulty in understanding how to use the interfaces, apart from a few cases, which were recorded to guide improvements to the application.

Limitations. The development of the app met several challenges that showed how complex it is to create an optimal user experience for people with visual impairments. One major hurdle was the map function, particularly the accurate selection of markers for navigation. This highlighted the need for precisely designed haptic feedback that distinguishes between different inputs.

Inconsistent wording on the rating page across devices was another drawback: the content appeared in different languages on different phones, which can create a language barrier for users. This underscores the importance of thorough cross-device testing to ensure consistency regardless of user location or device settings.

Screen reader accessibility issues were a further problem. Some screen readers had difficulty recognizing the microphone button for voice input, which hindered voice search. In addition, the 'Search' button gave no clear feedback on activation, potentially confusing users during searches. These gaps in auditory and tactile cues showed the need for more intuitive and complete feedback mechanisms.

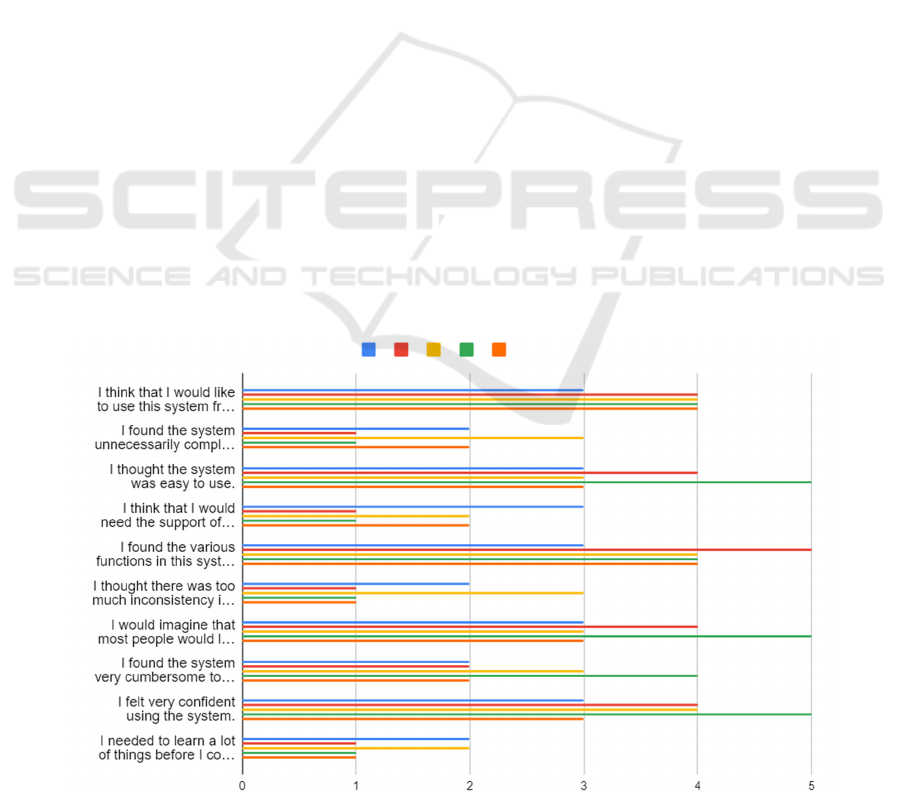

Concerning the SUS questionnaire, the results

collected so far show a positive average, where the

user did not find difficulties in using the system,

finding it simple and well organized. Figure 7 shows

the results of the SUS questionnaire.

6 CONCLUSIONS AND FUTURE

WORK

This work investigated the accessibility by design

offered by the Thunkable Framework. The study

found that creating an accessible app with Thunkable

is suitable for people without programming skills.

However, ensuring accessibility often requires

specific customization, as certain features are not

directly supported by the framework. In particular,

being compliant with accessibility principles such as

alternative image descriptions requires specific

solutions. This challenge, already noted in other

development environments such as App Inventor

(Leporini, 2020), underscores the importance of the

Figure 7: The results of the SUS questionnaire responses.

WEBIST 2023 - 19th International Conference on Web Information Systems and Technologies

300

contributions of this study. The key innovation lies in

the development of an app prototype using the

Thunkable framework. This app is designed to

facilitate navigation and exploration of outdoor

environments, with a focus on travel routes. The app

seamlessly provides information about itineraries and

points of interest along the routes and allows users to

post and access reviews from others. The app also

integrates a digital map for navigating different

regions. What makes the app special is the explicit

consideration of accessibility during development.

This includes the inclusion of alternative text, voice

dictation via the microphone, and the implementation

of haptic feedback, which enhances the user

experience when exploring the digital map.

The evaluation found that some adjustments are needed to address the limitations of the Thunkable platform. A recommendation for the future development of this project is therefore to improve accessibility through targeted design. It might be useful to give users an overview of each route in the form of a video presentation or photos of key points. It would also be a good option to let users select a language other than Italian.

During the evaluation, difficulties arose in the map design, so some information essential for good usability could not be included. In the future, the code could be extended to store points of interest and users' favourite routes.

The Thunkable platform is constantly being updated, and new features could overcome the limitations highlighted here, making it easier to exploit multimedia and multimodal functionalities to enhance inclusion. We also intend to conduct a new test with a larger number of participants shortly.

ACKNOWLEDGMENTS

This work was funded by the Italian Ministry of Research through the research project of national interest (PRIN) TIGHT (Tactile InteGration for Humans and arTificial systems).

REFERENCES

Bigham, J. P., Jayant, C., Miller, A., White, B., & Yeh, T. (2010). VizWiz::LocateIt - enabling blind people to locate objects in their environment. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition - Workshops (pp. 65-72). IEEE.

Chen, H.-E., Lin, Y.-Y., Chen, C.-H., & Wang, I.-F. (2015). BlindNavi: A Navigation App for the Visually Impaired Smartphone User. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (CHI EA '15) (pp. 19-24). Association for Computing Machinery, New York, NY, USA.

Jones, N., Bartlett, H. E., & Cooke, R. (2019). An analysis of the impact of visual impairment on activities of daily living and vision-related quality of life in a visually impaired adult population. British Journal of Visual Impairment, 37(1), 50-63.

Leporini, B., & Catanzaro, G. (2020). App Inventor as a Developing Tool to Increase the Accessibility and Readability of Information: A Case Study. In 9th International Conference on Software Development and Technologies for Enhancing Accessibility and Fighting Info-exclusion (pp. 71-75).

Lima, A., Mendes, D., & Paiva, S. (2018). Outdoor navigation systems to promote urban mobility to aid visually impaired people. Journal of Information Systems Engineering & Management, 3(2), 14.

Medina-Sanchez, E. H., Mikusova, M., & Callejas-Cuervo, M. (2021). An interactive model based on a mobile application and augmented reality as a tool to support safe and efficient mobility of people with visual limitations in sustainable urban environments. Sustainability, 13(17), 9973.

Meenakshi, R., Ponnusamy, R., Alghamdi, S., Khalaf, O. I., & Alotaibi, Y. (2022). Development of a Mobile App to Support the Mobility of Visually Impaired People. CMC-Computers, Materials & Continua, 73, 3473-3495.

Meliones, A., & Filios, C. (2016). Blindhelper: A pedestrian navigation system for blinds and visually impaired. In Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (pp. 1-4). ACM.

Paratore, M. T., & Leporini, B. (2023). Exploiting the haptic and audio channels to improve orientation and mobility apps for the visually impaired. Universal Access in the Information Society, 1-11.

Sauro, J. (2011). Measuring Usability with the System Usability Scale (SUS). https://measuringu.com/sus, last accessed 2023/06/20.

Theodorou, P., Tsiligkos, K., Meliones, A., & Filios, C. (2022). An Extended Usability and UX Evaluation of a Mobile Application for the Navigation of Individuals with Blindness and Visual Impairments Outdoors - An Evaluation Framework Based on Training. Sensors, 22, 4538.

W3C (2023). Web Content Accessibility Guidelines (WCAG) 2.2, W3C Candidate Recommendation Draft, 17 May 2023. https://www.w3.org/TR/WCAG22/

Wang, S., & Yu, J. (2017). Everyday information behaviour of the visually impaired in China. Information Research: An International Electronic Journal, 22(1), n1.

WHO (2022). World Health Organization. Report on Blindness and vision impairment. Retrieved from who.int.
