Integrating Unsupervised Clustering and Label-Specific Oversampling to Tackle Imbalanced Multi-Label Data

Payel Sadhukhan¹ᵃ, Arjun Pakrashi²ᵇ, Sarbani Palit³ᶜ and Brian Mac Namee²ᵈ

¹ Institute for Advancing Intelligence, TCG CREST, Kolkata, India

² School of Computer Science, University College, Dublin, Ireland

³ Computer Vision and Pattern Recognition Unit, Indian Statistical Institute, Kolkata, India

Keywords: Multi-Label, Imbalanced Learning, Unsupervised Clustering, Oversampling.

Abstract: There is often a mixture of very frequent labels and very infrequent labels in multi-label datasets. This variation in label frequency, a type of class imbalance, creates a significant challenge for building efficient multi-label classification algorithms. In this paper, we tackle this problem by proposing a minority class oversampling scheme, UCLSO, which integrates Unsupervised Clustering and Label-Specific data Oversampling. Clustering is performed to find the key distinct and locally connected regions of a multi-label dataset (irrespective of the label information). Next, for each label, we explore the distributions of minority points in the cluster sets. Only the intra-cluster minority points are used to generate the synthetic minority points. Despite having the same cluster set across all labels, we use the label-specific class information to obtain a variation in the distributions of the synthetic minority points (in congruence with the label-specific class memberships within the clusters) across the labels. The training dataset is augmented with the set of label-specific synthetic minority points, and classifiers are trained to predict the relevance of each label independently. Experiments using 12 multi-label datasets and several multi-label algorithms show the competency of the proposed method over other competing algorithms in the given context.

1 INTRODUCTION

In a multi-label dataset, a single datapoint is associated with more than one relevant label. This type of data is obtained naturally from real-world domains like text (Joachims, 1998; Godbole and Sarawagi, 2004), bioinformatics (Barutcuoglu et al., 2006), video (Qi et al., 2007), images (Boutell et al., 2004; Nasierding et al., 2009; Li et al., 2014) and music (Li and Ogihara, 2006). We denote a multi-label dataset as $D = \{(x_i, y_i), i = 1, 2, \ldots, n\}$. Here, $x_i$ is the $i$th input datapoint in $d$ dimensions, and $y_i = \{y_{i1}, y_{i2}, \ldots, y_{iq}\}$ is the corresponding label assignment for $x_i$ among the possible $q$ labels. $y_{ik}$ indicates if the $k$th label is applicable (or relevant) for the $i$th datapoint: $y_{ik} = 1$ denotes that the $k$th label is relevant to $x_i$, and $y_{ik} = 0$ denotes that the $k$th label is not applicable, or is irrelevant, to $x_i$. The target of multi-label learning is to build a model that can correctly predict all of the relevant labels for a datapoint $x_i$.

ᵃ https://orcid.org/0000-0001-7795-3385

ᵇ https://orcid.org/0000-0002-9605-6839

ᶜ https://orcid.org/0000-0002-4105-6452

ᵈ https://orcid.org/0000-0003-2518-0274

Multi-label datasets are often found to possess an imbalance in the representation of the different labels: some labels are relevant to a very large number of datapoints while other labels are only relevant to a few. The quantitative disproportion in the representation of different classes of a dataset is known as the class imbalance problem (Das et al., 2022). In a binary class-imbalanced dataset, the over-represented and the under-represented classes of the dataset are termed the majority class and the minority class respectively. Let the set of the majority points and the set of the minority points in an imbalanced dataset be denoted by $Maj$ and $Min$. The imbalance ratio quantifies the degree of disproportion in a dataset and is defined as follows.

$$\text{imbalance ratio} = \frac{|Maj|}{|Min|} \qquad (1)$$

Sadhukhan, P., Pakrashi, A., Palit, S. and Namee, B.
Integrating Unsupervised Clustering and Label-Specific Oversampling to Tackle Imbalanced Multi-Label Data.
DOI: 10.5220/0011901200003393
In Proceedings of the 15th International Conference on Agents and Artificial Intelligence (ICAART 2023) - Volume 2, pages 489-498
ISBN: 978-989-758-623-1; ISSN: 2184-433X
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

In a multi-label dataset, the number of labels, $q$, is greater than 1. We have a positive class and a negative class for each label. Often, the labels in a multi-label dataset have widely varying imbalance ratios, and this makes it challenging to build multi-label classification models that work well across all imbalance ratios.
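To make the per-label view of Equation (1) concrete, the following minimal sketch computes one imbalance ratio per label column. The function name `label_imbalance_ratios` and the toy label matrix are illustrative assumptions, not part of the original work.

```python
def label_imbalance_ratios(Y):
    """Return |Maj| / |Min| for every label column of a binary label matrix.

    Y is a list of rows, each row a list of q indicators (0 or 1).
    """
    n = len(Y)
    q = len(Y[0])
    ratios = []
    for k in range(q):
        positives = sum(row[k] for row in Y)
        negatives = n - positives
        # The smaller class is the minority, the larger one the majority.
        minority, majority = sorted((positives, negatives))
        # A label with an empty class has no finite ratio.
        ratios.append(majority / minority if minority > 0 else float("inf"))
    return ratios


# Hypothetical toy label matrix: 6 datapoints, 2 labels.
Y = [[1, 0], [0, 0], [0, 1], [0, 0], [0, 1], [0, 1]]
ratios = label_imbalance_ratios(Y)
print(ratios)
```

In this toy matrix the first label is relevant to one datapoint out of six, while the second label is perfectly balanced, so the two labels of the same dataset have very different imbalance ratios.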

Addressing label-specific imbalances to improve multi-label classification is an active field of research, and several methods have been proposed to address this problem (Zhang et al., 2020; Daniels and Metaxas, 2017; Liu and Tsoumakas, 2019; Pereira et al., 2020a). There is, however, room for significant improvement. Label-specific oversampling can be a solution to address the issue of varying label imbalances in multi-label datasets. In this light, we propose UCLSO, which integrates Unsupervised Clustering and Label-Specific data Oversampling. The essence of the UCLSO approach is to integrate i) label-invariant information about the proximity of points (obtained through clustering) and ii) label-specific information about the class memberships of the points, to address the issue of class imbalance in multi-label datasets. In this work, i) synthetic minority points are generated from local data clusters (obtained from unsupervised clustering of the feature space), and ii) the cardinality of the label-specific oversampled minority set obtained in a cluster depends on the cluster's share of label-specific minority data. In effect, the method oversamples the minority class by focusing on the cluster-specific distributions of the minority instances. The key highlights of our work are:

• We propose UCLSO, a new minority class over-

sampling method for multi-label datasets, which

generates synthetic minority datapoints specifi-

cally in the minority regions of the input space.

• UCLSO works towards preserving the intrinsic class distributions of the local clusters. The goal is to avoid generating synthetic minority instances in the majority region, or as outliers in the input space.

• UCLSO ensures that the number of synthetic mi-

nority points added in a region is in accordance

with the original minority density in that region.

• In UCLSO, the instances belonging to the clusters are the same for all the labels, but their label-specific class information varies. We integrate this label-specific class information (by virtue of memberships) and the physical proximity of the points (obtained through clustering) to generate the label-specific synthetic minority points.

• An empirical study involving 12 well-known,

real-world multi-label datasets and nine com-

peting methods illustrates the competency of

UCLSO in handling label-specific imbalance of

multi-label data over other competing methods.

The remainder of the paper is structured as follows.

Section 2 discusses the relevant existing work in the

multi-label domain. In Section 3 we first describe the

motivations of our approach and then present the steps

of the proposed UCLSO algorithm. The experiment

design is described in Section 4 and the results of the

experiments are discussed in Section 5. Finally, Sec-

tion 6 concludes the paper and discusses some direc-

tions for future work.

2 RELATED WORK

Existing multi-label classification methods are princi-

pally classified into two types: i) Problem transfor-

mation methods that modify the multi-label dataset

in different ways such that it can be used with existing multi-class classification algorithms (Tsoumakas et al., 2011; Fürnkranz et al., 2008; Read et al., 2011; Zhang and Zhou, 2013), and ii) Algorithm adaptation approaches that modify existing machine learning algorithms to directly handle multi-label datasets (Zhang and Zhou, 2007; Nam et al., 2014; Zhang and Zhou, 2006; Zhang and Zhou, 2013).

Multi-label algorithms can also be categorised

based on if and how they take label associations into

account, which allows algorithms to be categorised

as: i) first-order, ii) second-order or iii) higher-order

approaches based on the number of labels that are

considered together to train the models. First-order approaches do not consider any label association and learn a classifier for each label independently of all other labels (Zhang and Zhou, 2007; Tanaka et al., 2015; Zhang et al., 2018). In second-order methods, pair-wise label associations are explored to achieve enhanced learning of multi-label data (Park and Fürnkranz, 2007; Fürnkranz et al., 2008). Higher-order approaches consider associations between more than two labels (Boutell et al., 2004). A number of diverse techniques have facilitated higher-order label associations through interesting schemes including classifier chains (Cheng et al., 2010; Read et al., 2013), RAkEL (Tsoumakas et al., 2011), random graph ensembles (Su and Rousu, 2015), DMLkNN (Younes et al., 2008), IBLR-ML+ (Cheng and Hüllermeier, 2009), and Stacked-MLkNN (Pakrashi and Namee, 2017).

In recent years, data transformation has been a

popular choice for handling multi-label datasets. The

two principal ways of data transformation in multi-

label domain are: i) feature extraction or selection,

and ii) data oversampling or undersampling. One


of the earliest applications of feature extraction in

multi-label learning was through LIFT (Zhang and

Wu, 2015), which brought significant performance

improvements. Most feature selection or extraction

methods select a label-specific feature set for each la-

bel to improve the discerning capability of the label

specific classifiers. Subsequently, a number of differ-

ent feature selection and extraction approaches have

been proposed (Huang et al., 2018; Xu, 2018; Xu

et al., 2016; Li et al., 2017). In (Huang et al., 2019), label-specific features are generated and the authors also address the issue of missing labels.

Recently, the class imbalance problem in multi-label learning has received more interest from researchers. One common approach to handling imbalance is to balance the cardinalities of the relevant and irrelevant classes for each label. One way of achieving this is through the removal of points from the majority class of each label, for example using random undersampling (Tahir et al., 2012) or Tomek-link based undersampling (Pereira et al., 2020b). Another way to achieve this is by adding synthetic minority points to the minority class (Liu and Tsoumakas, 2019; Sadhukhan and Palit, 2019; Charte et al., 2015). Although these approaches have been shown to be effective, there is still a lot of room for improvement.

3 UNSUPERVISED CLUSTERING AND LABEL SPECIFIC DATA OVERSAMPLING (UCLSO)

In this section we discuss the motivation and

then present the proposed approach: Unsupervised

Clustering and Label-Specific data Oversampling

(UCLSO).

3.1 Motivation

Let us consider a two-dimensional toy dataset with

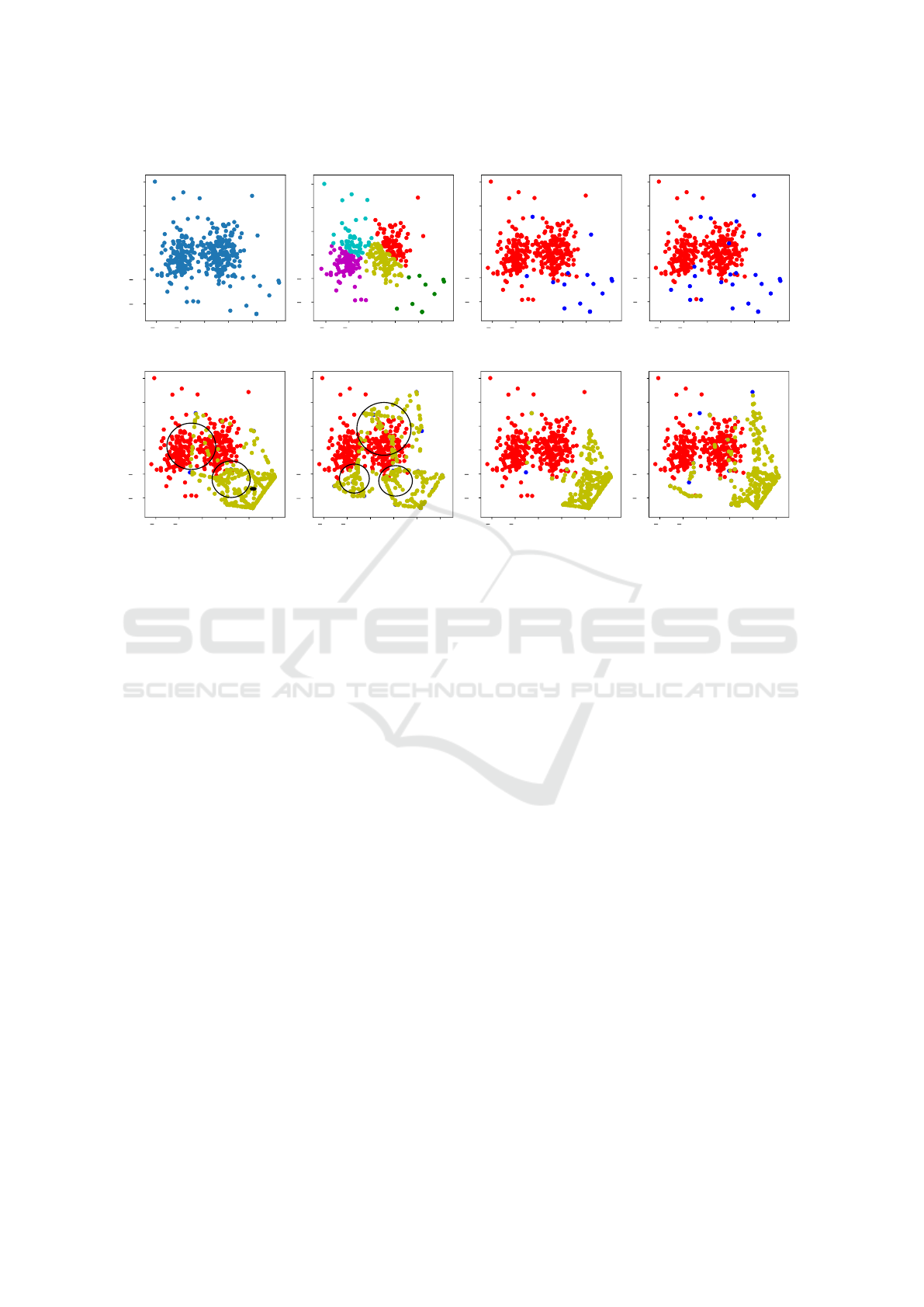

two labels (1 and 2) shown in Figure 1(a). The imbal-

ance ratios of labels 1 and 2 in this dataset are 24.7

and 14.4 respectively. Figure 1(b) shows 5 clusters in

this datatset which are found using k-means. In Fig-

ures 1 (c) and (d), we mark the points with respect to

their label-specific class memberships. The colours

red and blue indicate the majority and the minority

class points respectively. Data pre-processing via mi-

nority class oversampling is a popular choice to tackle

the issue of imbalance in imbalanced datasets (He and

Garcia, 2009). In a multi-label dataset, due to spa-

tial and quantitative variation of class-memberships

across the labels, we need a label-specific approach.

Figures 1 (e) and (f) show the label-specific SMOTE-

based (Chawla et al., 2002) oversampling (synthetic

points in yellow) for label 1 and label 2 respectively.

It can be seen that SMOTE oversamples the synthetic

minority points in the majority-populated regions on

a number of occasions for both labels 1 and 2 (high-

lighted by black circles in Figures 1 (e) and (f)).

In order to achieve an effective learning of a

dataset, we need to prevent the majority space en-

croachment during oversampling. We tackle this is-

sue by clustering (using k-means) the feature space.

Clustering the dataset will give us k localized sub-

spaces. Oversampling only within each cluster can

prevent the majority class encroachment.

This work is motivated by an effort to balance the

cardinalities of the minority and majority classes of

the labels without encroaching on the majority class

spaces, as well as an effort to preserve the underlying

distribution of the datapoints.

As indicated in Figures 1(e) and (f), a generic oversampling for all labels will not be fruitful, as different labels have different quantitative and spatial distributions of the minority points. There are two aspects we need to keep in mind. i) Where should we perform the oversampling? To answer this, we cluster the feature space in an unsupervised manner (only the feature attributes of the points are taken into account). ii) If there is more than one subspace in which to perform oversampling, how much should we oversample in each subspace? We look into the label-specific distribution of the minority points in the clusters to decide this. The degree of label-specific oversampling in a cluster should be proportional to its original minority class distribution for that label. Figures 1(g) and (h) show the oversampling on labels 1 and 2 through the proposed method UCLSO. The degree of encroachment in the majority class region is much less for UCLSO compared to SMOTE.

3.2 An Intuitive Description of UCLSO

• We add synthetic minority points to each

imbalanced label.

Our aim is to reduce the bias of the classifier towards the well-represented majority class and against the quantitatively scarce minority class. To achieve this, a synthetic minority set is generated for each label.

• What is the modus operandi of the proposed

oversampling?

We randomly select two existing minority points

and sample a synthetic minority point at a random

location on their direction vector.

Figure 1: A toy dataset illustrating the problems with oversampling, and how UCLSO addresses them. Panels: (a) Original dataset in 2 dimensions; (b) Clusters in the dataset; (c) Majority-minority configuration for label 1; (d) Majority-minority configuration for label 2; (e) SMOTE for label 1; (f) SMOTE for label 2; (g) Oversampling using UCLSO for label 1; (h) Oversampling using UCLSO for label 2.

• Where should we initiate the sampling so that we

are more confident of sampling in the minority

class region rather than encroaching the majority

space?

Adding a synthetic minority point at an arbitrary location cannot guarantee that it lands in the minority region of the input space rather than "encroaching" into the majority class. We can avoid regions of the input space where adding a synthetic minority point is equivalent to adding an outlier or placing it in a majority region. Intuitively, if the two original minority points involved in the oversampling lie within a small neighbourhood, we can be more confident of generating a "non-encroaching" synthetic minority point.

• How do we arrange for the two original minority

points to lie within a locality?

A common way of selecting two points within a locality or neighbourhood is to select the first point and then select the second from the first one's nearest neighbourhood. But, for a label with a high imbalance ratio and sparsely distributed minority points, the neighbours from the same class can lie far apart. In this case, it is highly likely that the neighbours encompass a significant volume of feature space and are not actually local (in spite of being neighbours). Generating a synthetic minority point on the direction vector of two such neighbours can place it at some arbitrary location, causing encroachment into the majority spaces.

To tackle this issue, we resort to clustering of the original points. We employ k-means clustering for this purpose and use it in an unsupervised manner. By unsupervised, we mean that the clustering process does not involve any class or label information of the points. Clustering is done solely on the basis of the Euclidean distances between the points.

After segregating the points into a pre-fixed num-

ber of clusters, we inspect the label-specific class-

memberships of the points in the clusters. We se-

lect two intra-cluster minority points from a clus-

ter and compute the synthetic minority point at

a random location on their direction vector. We

compute the synthetic minority points from the

original minority points lying in the k clusters.

The number of synthetic minority points gener-

ated from a cluster will depend on the share of

original minority points in the clusters.

Let us consider two clusters $C_1$ and $C_2$ with differing shares of minority instances for label $l$. Let the shares of $C_1$ and $C_2$ be denoted by $s_1$ and $s_2$ respectively such that $s_1 > s_2$. The total number of minority points, minority points in $C_1$ and minority points in $C_2$ are denoted by $n$, $n_1$ and $n_2$ respectively. The share of minority instances in a cluster does not depend on the total number of points in the cluster; rather, it depends on how many of the total original minority points lie in that cluster. We will oversample more points in $C_1$ than in $C_2$ because $C_1$ has more original minority points than $C_2$, and we have more confidence in adding a point to $C_1$ than to $C_2$ if we want to avoid encroaching on the majority class as much as possible. By similar logic, if a cluster has a zero share of minority instances for some label, we will not generate any synthetic minority point in that cluster, as it represents a majority region.

3.3 Approach: UCLSO

Algorithm 1: UCLSO.

procedure UCLSO($D$, $k$)    ▷ $D$: training dataset, $k$: number of clusters in k-means clustering
    $\{C_1, C_2, \ldots, C_k\} = \text{k-means}(\{x_i \mid 1 \le i \le n\}, k)$    ▷ Cluster the input space
    for $l \in 1, 2, \ldots, q$ do
        $S_l = \{\}$
        for $p \in 1, 2, \ldots, k$ do
            $n_{lp}$ = number of original minority points for label $l$ in $C_p$
            ▷ Find the synthetic minority instance shares of each cluster
            $min_l = \{x_i \mid y_{il} = 1\}$
            $maj_l = \{x_i \mid y_{il} = 0\}$
            $syn_{lp} = \left\lceil n_{lp} \times \frac{|maj_l| - |min_l|}{|min_l|} \right\rceil$
            ▷ Generate synthetic points
            for $j \in 1, 2, \ldots, syn_{lp}$ do
                $u_p$ is selected randomly from the minority points in $C_p$
                $v_p$ is a randomly selected nearest minority neighbour of $u_p$ in $C_p$
                $r \in (0, 1)$ selected randomly
                $s^{(l)}_{pj} = u_p + (v_p - u_p) \times r$    ▷ $j$th synthetic point for label $l$ from $C_p$
                $S_l = S_l \cup \{(s^{(l)}_{pj}, y^{(l)}_{pj} = 1)\}$
            end for
        end for
        $A_l = D \cup S_l$    ▷ Augment original data
    end for
    return $\{A_l \mid l = 1, 2, \ldots, q\}$    ▷ Per-label augmented synthetic datasets
end procedure

The main idea of this oversampling method is to gen-

erate the synthetic minority points in the minority

populated regions of the input space. We follow this

scheme to introduce more synthetic minority points in

the minority regions, thereby avoiding the introduc-

tion of the synthetic minority points in non-minority

regions. This should ideally improve the detection of

the minority points with respect to the majority points

— as the error optimization in the classifier modelling

phase will have equivalent contribution from both the

minority points and the majority points. This will in

turn help in mitigating the bias of the majority class

and learning a better decision boundary for an imbal-

anced label.

A common approach for generating synthetic minority datapoints is to select two points within a neighbourhood and then generate a synthetic point by interpolation at a random location on the direction vector connecting the two (Chawla et al., 2002). For a label with a high imbalance ratio and sparsely distributed minority points, the neighbours from the same class for this label can lie far apart. Consequently, the neighbourhood can encompass a large volume of feature space. Therefore, oversampling in the given manner may lead to the generation of synthetic minority points which end up in the majority region of the input space.

To tackle this issue, we partition the original points into $k$ clusters $\{C_1, C_2, \ldots, C_k\}$ on the basis of their Euclidean distances. We use the k-means algorithm to perform this clustering. Once we get the clusters, for each cluster $C_p$, we randomly select $u_p$, a minority point from the cluster, and $v_p$, a randomly chosen nearest minority neighbour of $u_p$ from the same cluster $C_p$. We compute the synthetic minority point by interpolation at a random location on the direction vector connecting $u_p$ and $v_p$. The synthetic point is computed as follows:

$$s^{(l)}_{pj} = u_p + (v_p - u_p) \times r \qquad (2)$$

where $s^{(l)}_{pj}$ is the $j$th synthetic datapoint generated in cluster $C_p$ for the label $l$, and $r \in (0, 1)$ is a random number sampled from the uniform distribution, which decides the location of the synthetic point between $u_p$ and $v_p$.

The number of synthetic minority points generated from a cluster is directly proportional to the share of original minority points in that cluster. Therefore, more synthetic minority points will be introduced in the clusters with more original minority points. This is because we are more confident about adding minority points in a region which originally had more minority points. The number of synthetic minority points to be added is computed as follows:

$$syn_{lp} = \left\lceil n_{lp} \times \frac{|maj_l| - |min_l|}{|min_l|} \right\rceil \qquad (3)$$

where $min_l$ and $maj_l$ are the sets of minority and majority datapoints for the label $l$ respectively. Here $n_{lp}$ is the number of original minority datapoints for label $l$ in cluster $C_p$. This way, the clusters which have more original minority points will be populated with more synthetic minority points.
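As a worked illustration of Equation (3), the sketch below allocates synthetic points across clusters for a single label. The function name `synthetic_counts` and the numbers (90 majority points, 10 minority points spread over five clusters) are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def synthetic_counts(minority_per_cluster, n_majority):
    """Number of synthetic minority points per cluster, following Equation (3)."""
    n_minority = sum(minority_per_cluster)
    surplus = n_majority - n_minority  # how many points the minority class lacks
    return [math.ceil(n_lp * surplus / n_minority) for n_lp in minority_per_cluster]


# Hypothetical label: 10 minority points distributed over k = 5 clusters.
counts = synthetic_counts([6, 3, 1, 0, 0], n_majority=90)
print(counts, sum(counts))
```

Because the per-cluster counts sum to roughly $|maj_l| - |min_l|$ (exactly so when no rounding is needed, as here), the augmented set for the label ends up approximately balanced, and clusters without minority points receive no synthetic points.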

Following the above steps, we obtain the synthetic minority set $S_l$ for the label $l$. The original training dataset $D$ is appended with $S_l$ to get an augmented dataset $A_l$ for each label $l$. This augmented training set, $A_l$, is used to train a binary classifier model for the corresponding label $l$. The above process is summarised in Algorithm 1.
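The procedure can be sketched in a few dozen lines of standard-library Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the tiny `kmeans` helper is a plain Lloyd's iteration standing in for whichever k-means implementation was actually used, the `n_neighbours` parameter (how many nearest minority neighbours are candidates for $v_p$) is a hypothetical choice the text does not specify, and clusters holding fewer than two minority points are skipped because interpolation needs a pair.

```python
import math
import random


def kmeans(X, k, rng, iters=20):
    """Plain Lloyd's k-means; returns one cluster index per point in X."""
    centres = [list(x) for x in rng.sample(X, k)]
    assign = [0] * len(X)
    for _ in range(iters):
        # Assignment step: nearest centre by Euclidean distance.
        assign = [min(range(k), key=lambda c: math.dist(x, centres[c])) for x in X]
        # Update step: move each centre to the mean of its members.
        for c in range(k):
            members = [x for x, a in zip(X, assign) if a == c]
            if members:  # an emptied cluster keeps its old centre
                centres[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign


def uclso(X, Y, k=5, n_neighbours=5, seed=0):
    """Return a list S where S[l] holds the synthetic minority points of label l."""
    rng = random.Random(seed)
    assign = kmeans(X, k, rng)  # label-independent clustering, done once
    S = []
    for l in range(len(Y[0])):
        # As in Algorithm 1, the relevant class (y = 1) is taken as the minority.
        minority = [i for i, y in enumerate(Y) if y[l] == 1]
        n_min, n_maj = len(minority), len(X) - len(minority)
        S_l = []
        for p in range(k):
            pts = [X[i] for i in minority if assign[i] == p]
            if len(pts) < 2:
                continue  # assumption: interpolation needs two minority points
            syn_lp = math.ceil(len(pts) * (n_maj - n_min) / n_min)  # Equation (3)
            for _ in range(syn_lp):
                u = rng.choice(pts)
                nearest = sorted((v for v in pts if v is not u),
                                 key=lambda v: math.dist(u, v))[:n_neighbours]
                v = rng.choice(nearest)
                r = rng.random()  # uniform in [0, 1)
                S_l.append([a + (b - a) * r for a, b in zip(u, v)])  # Equation (2)
        S.append(S_l)
    return S


# Hypothetical toy data: two well-separated blobs, one label whose six
# relevant points all lie in the first blob.
X = [[float(i % 5), float(i // 5)] for i in range(20)]
X += [[50.0 + i % 5, float(i // 5)] for i in range(20)]
Y = [[1] if i < 6 else [0] for i in range(40)]
S = uclso(X, Y, k=2)
print(len(S[0]))
```

Every synthetic point is a convex combination of two minority points from the same cluster, so it cannot fall outside the region spanned by that cluster's minority points. Each `S[l]`, labelled as relevant, would then be appended to the original data to form the augmented set $A_l$ for label $l$.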

4 EXPERIMENTS

We performed a set of experiments to evaluate the

effectiveness of the proposed UCLSO method. This

section describes the datasets, algorithms, experimen-

tal setup, and evaluation processes used for the exper-

iments.

Several well-known multi-label datasets were selected, which are listed in Table 1¹. Here, Instances, Inputs and Labels indicate the total number of datapoints, the number of predictor variables, and the number of potential labels respectively in each dataset. Type indicates if the input space is numeric or nominal. Distinct Labelsets indicates the number of unique combinations of labels. Cardinality is the average number of labels per datapoint, and Density is obtained by dividing Cardinality by the number of labels.
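These dataset statistics are simple to compute from a binary label matrix. The sketch below is illustrative only; the function names and the four-row toy matrix are assumptions introduced here.

```python
def label_cardinality(Y):
    """Average number of relevant labels per datapoint."""
    return sum(sum(row) for row in Y) / len(Y)


def label_density(Y):
    """Cardinality divided by the number of labels."""
    return label_cardinality(Y) / len(Y[0])


def distinct_labelsets(Y):
    """Number of unique label combinations that occur in the data."""
    return len({tuple(row) for row in Y})


# Hypothetical toy label matrix: 4 datapoints, 4 labels.
Y = [[1, 0, 1, 0], [0, 0, 1, 0], [1, 1, 1, 0], [0, 0, 0, 1]]
card = label_cardinality(Y)
dens = label_density(Y)
distinct = distinct_labelsets(Y)
print(card, dens, distinct)
```

As a sanity check against Table 1, the yeast dataset has a cardinality of 4.233 over 13 labels, which gives a density of about 0.326 and agrees with the reported 0.325 up to rounding.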

The datasets are modified as recommended in

(Zhang et al., 2020; He and Garcia, 2009). Labels

having a very high degree of imbalance (50 or greater)

or having too few positive samples (20 in this case)

are removed. For text datasets (medical, enron, rcv1,

bibtex), only the input space features with high degree

of document frequencies are retained.

To compare the performance of different ap-

proaches, we have selected the label-based macro-

averaged F-Score and label-based macro-averaged

AUC scores recommended in (Zhang et al., 2020).
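For reference, a label-based macro-averaged F-Score computes an F-Score for each label separately and then averages over labels, so rare labels count as much as frequent ones. The sketch below assumes the balanced F1 variant and scores a label as 0 when it has no true or predicted positives; both choices are assumptions made here, since the text does not spell them out.

```python
def macro_f_score(Y_true, Y_pred):
    """Label-based macro-averaged F1 over the q label columns."""
    q = len(Y_true[0])
    scores = []
    for k in range(q):
        pairs = [(t[k], p[k]) for t, p in zip(Y_true, Y_pred)]
        tp = sum(1 for t, p in pairs if t == 1 and p == 1)
        fp = sum(1 for t, p in pairs if t == 0 and p == 1)
        fn = sum(1 for t, p in pairs if t == 1 and p == 0)
        denom = 2 * tp + fp + fn
        # Assumption: an undefined F1 (no positives at all) is scored as 0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / q


# Hypothetical predictions for 4 datapoints and 2 labels.
Y_true = [[1, 0], [1, 1], [0, 0], [0, 1]]
Y_pred = [[1, 0], [0, 1], [0, 0], [0, 1]]
score = macro_f_score(Y_true, Y_pred)
print(score)
```

Here the first label has one true positive and one false negative (F1 = 2/3) and the second label is predicted perfectly (F1 = 1), so the macro average is 5/6.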

For the experiments evaluating the proposed algorithm we have performed a 10 × 2 fold cross-validation experiment. The experiment setup and environment were kept identical to those of Zhang et al. (2020). For clustering, the number of clusters was set to 5 for the k-means step of UCLSO. In the classification phase, a set of linear SVM classifiers is used, one for each label.

We compare the performance of UCLSO against several state-of-the-art multi-label classification algorithms: COCOA (Zhang et al., 2020), THRSEL (Pillai et al., 2013), IRUS (Tahir et al., 2012), SMOTE-EN (Chawla et al., 2002), RML (Petterson and Caetano, 2010), and binary relevance (BR), calibrated label ranking (CLR) (Fürnkranz et al., 2008), ensemble classifier chains (ECC) (Read et al., 2011) and RAkEL (Tsoumakas et al., 2011). We base our experiments on the experiment presented in Zhang et al. (2020), and extend the results of that paper by adding the performance of UCLSO.

¹ http://mulan.sourceforge.net/datasets-mlc.html

5 RESULTS

Tables 2 and 3 show the label-based macro-averaged F-Score and label-based macro-averaged AUC results respectively², along with the relative ranks in brackets (lower ranks are better) of the algorithms compared for each dataset. The last row of both tables indicates the average rank of each algorithm. The best values are highlighted in boldface.
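The ranking convention can be reproduced with a short sketch: within a dataset the highest score gets rank 1, and tied scores share the mean of the ranks they occupy. The helper name `rank_scores` is an assumption; the scores are the medical row of Table 2 and the rank list is the UCLSO column of the same table.

```python
def rank_scores(scores):
    """Rank scores so that the highest gets rank 1; ties share the mean rank."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next score equals the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    return ranks


# F-Scores on the medical dataset (Table 2), in column order:
# UCLSO, COCOA, THRSEL, IRUS, SMOTE-EN, RML, BR, CLR, ECC, RAkEL.
medical = [0.783, 0.759, 0.733, 0.537, 0.700, 0.707, 0.718, 0.724, 0.733, 0.672]
medical_ranks = rank_scores(medical)

# Ranks of UCLSO on the 12 datasets of Table 2, averaged as in the last row.
uclso_ranks = [1, 2, 1, 2, 1, 1, 1, 1, 1, 1, 2, 1]
uclso_avg_rank = sum(uclso_ranks) / len(uclso_ranks)
print(medical_ranks, uclso_avg_rank)
```

THRSEL and ECC tie at 0.733 on this dataset and therefore share rank 3.5, which matches the entries reported in Table 2.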

Also, to further analyse the differences between

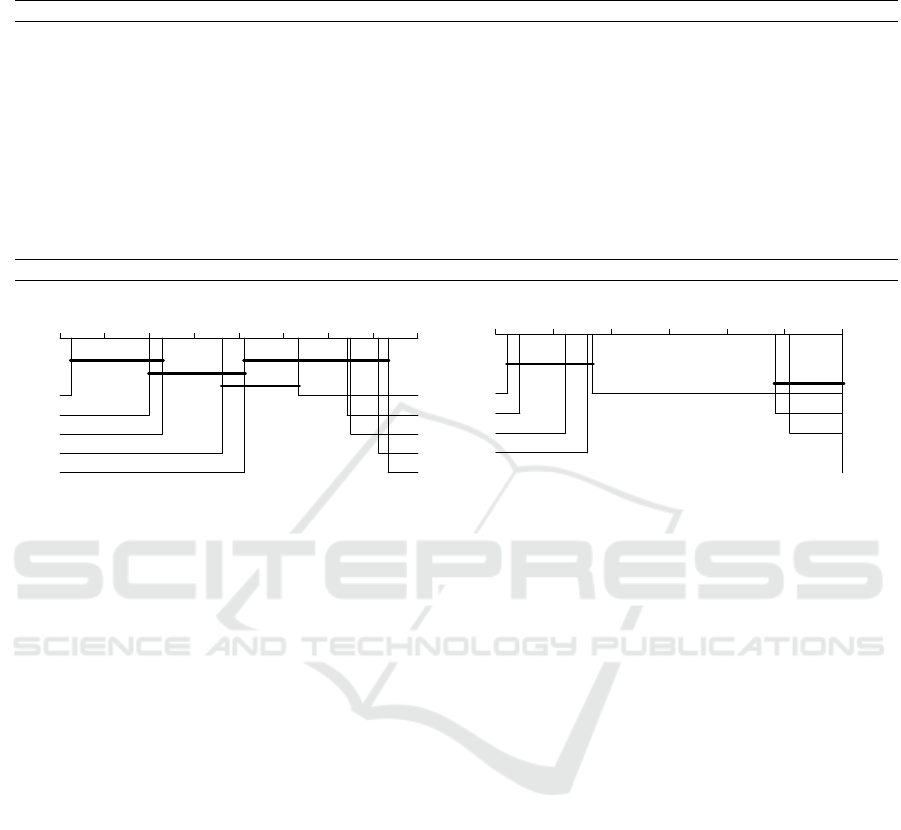

the algorithms, we performed a non-parametric sta-

tistical test for a multiple classifier comparison test.

Following (Garc

´

ıa et al., 2010), we have performed

a Friedman test with Finner p-value adjustments, and

the critical difference plots from the test results are

shown in Figure 2

3

.

Table 2 shows that the overall performance of the proposed UCLSO algorithm is better than that of all the other algorithms, attaining the best average rank of 1.25. The second best rank is attained by COCOA (avg. rank 3.00). UCLSO achieved much better performance than the other approaches for many datasets: it attained the top rank for nine of the datasets and the second rank on the remaining three. These results also show that methods that explicitly consider the label imbalance issue perform better than those that do not. The other algorithms which specifically address label imbalance attained the following order: RML (avg. rank 3.29), THRSEL (avg. rank 4.62), SMOTE-EN (avg. rank 5.12) and IRUS (avg. rank 6.33). The algorithms which do not consider label imbalance, namely BR (avg. rank 7.42), RAkEL (avg. rank 7.5), ECC (avg. rank 8.12), and CLR (avg. rank 8.33), all performed poorly.

² Note that Table 3 does not include results for RML (Petterson and Caetano, 2010), as the implementation does not provide prediction scores.

³ The full result tables are in the supplementary material: https://github.com/phoxis/uclso/blob/main/UCLSO Supplementary Material.pdf

Table 1: Description of datasets.

| Dataset | Instances | Inputs | Labels | Type | Cardinality | Density | Distinct Labelsets | Proportion of Distinct Labelsets | Imbalance Ratio (min) | Imbalance Ratio (max) | Imbalance Ratio (avg) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| yeast | 2417 | 103 | 13 | numeric | 4.233 | 0.325 | 189 | 0.078 | 1.328 | 12.500 | 2.778 |
| emotions | 593 | 72 | 6 | numeric | 1.869 | 0.311 | 27 | 0.046 | 1.247 | 3.003 | 2.146 |
| medical | 978 | 144 | 14 | numeric | 1.075 | 0.077 | 42 | 0.043 | 2.674 | 43.478 | 11.236 |
| cal500 | 502 | 68 | 124 | numeric | 25.058 | 0.202 | 502 | 1.000 | 1.040 | 24.390 | 3.846 |
| rcv1-s1 | 6000 | 472 | 42 | numeric | 2.458 | 0.059 | 574 | 0.096 | 3.342 | 49.000 | 24.966 |
| rcv1-s2 | 6000 | 472 | 39 | numeric | 2.170 | 0.056 | 489 | 0.082 | 3.216 | 47.780 | 26.370 |
| rcv1-s3 | 6000 | 472 | 39 | numeric | 2.150 | 0.055 | 488 | 0.081 | 3.205 | 49.000 | 26.647 |
| enron | 1702 | 50 | 24 | nominal | 3.113 | 0.130 | 547 | 0.321 | 1.000 | 43.478 | 5.348 |
| bibtex | 7395 | 183 | 26 | nominal | 0.934 | 0.036 | 377 | 0.051 | 6.097 | 47.974 | 32.245 |
| llog | 1460 | 100 | 18 | nominal | 0.851 | 0.047 | 109 | 0.075 | 7.538 | 46.097 | 24.981 |
| corel5k | 5000 | 499 | 44 | nominal | 2.241 | 0.050 | 1037 | 0.207 | 3.460 | 50.000 | 17.857 |
| slashdot | 3782 | 53 | 14 | nominal | 1.134 | 0.081 | 118 | 0.031 | 5.464 | 35.714 | 10.989 |

Table 2: Each cell indicates the averaged label-based macro-averaged F-Score (best score in bold) along with the relative rank of the corresponding algorithm in brackets. The last row indicates the overall average ranks.

| Dataset | UCLSO | COCOA | THRSEL | IRUS | SMOTE-EN | RML | BR | CLR | ECC | RAkEL |
|---|---|---|---|---|---|---|---|---|---|---|
| yeast | **0.505** (1) | 0.461 (3) | 0.427 (5) | 0.426 (6) | 0.436 (4) | 0.471 (2) | 0.409 (9) | 0.413 (8) | 0.389 (10) | 0.420 (7) |
| emotions | 0.658 (2) | **0.666** (1) | 0.560 (9) | 0.622 (5) | 0.575 (8) | 0.645 (3) | 0.550 (10) | 0.595 (7) | 0.638 (4) | 0.613 (6) |
| medical | **0.783** (1) | 0.759 (2) | 0.733 (3.5) | 0.537 (10) | 0.700 (8) | 0.707 (7) | 0.718 (6) | 0.724 (5) | 0.733 (3.5) | 0.672 (9) |
| cal500 | 0.273 (2) | 0.210 (5) | 0.252 (3) | **0.277** (1) | 0.235 (4) | 0.209 (6) | 0.169 (8) | 0.081 (10) | 0.092 (9) | 0.193 (7) |
| rcv1-s1 | **0.443** (1) | 0.364 (3) | 0.292 (5) | 0.252 (8) | 0.313 (4) | 0.387 (2) | 0.285 (6) | 0.227 (9) | 0.192 (10) | 0.272 (7) |
| rcv1-s2 | **0.432** (1) | 0.342 (3) | 0.275 (5) | 0.234 (8) | 0.305 (4) | 0.363 (2) | 0.272 (6) | 0.226 (9) | 0.173 (10) | 0.263 (7) |
| rcv1-s3 | **0.480** (1) | 0.339 (3) | 0.275 (5) | 0.225 (8) | 0.302 (4) | 0.371 (2) | 0.271 (6) | 0.211 (9) | 0.163 (10) | 0.257 (7) |
| enron | **0.352** (1) | 0.342 (2) | 0.291 (5) | 0.293 (4) | 0.266 (8) | 0.307 (3) | 0.246 (9) | 0.244 (10) | 0.268 (6) | 0.267 (7) |
| bibtex | **0.442** (1) | 0.318 (3) | 0.303 (4) | 0.253 (8) | 0.283 (5) | 0.326 (2) | 0.263 (7) | 0.265 (6) | 0.212 (10) | 0.252 (9) |
| llog | **0.181** (1) | 0.082 (6) | 0.096 (3) | 0.124 (2) | 0.095 (4.5) | 0.095 (4.5) | 0.031 (7) | 0.024 (8) | 0.022 (10) | 0.023 (9) |
| corel5k | 0.209 (2) | 0.196 (3) | 0.146 (4) | 0.105 (6) | 0.125 (5) | **0.215** (1) | 0.089 (7) | 0.049 (10) | 0.054 (9) | 0.084 (8) |
| slashdot | **0.443** (1) | 0.374 (2) | 0.355 (4) | 0.257 (10) | 0.366 (3) | 0.343 (5) | 0.291 (8) | 0.290 (9) | 0.304 (6) | 0.296 (7) |
| Avg. rank | 1.25 | 3.00 | 4.62 | 6.33 | 5.12 | 3.29 | 7.42 | 8.33 | 8.12 | 7.5 |

Multiple classifier comparison results in Figure 2 show that when UCLSO is compared with the other algorithms, except for COCOA and RML, the null hypothesis can be rejected at a significance level of α = 0.05. Therefore, based on the statistical test, UCLSO is significantly better than the other algorithms, except COCOA and RML.

Table 3 shows the label-based macro-averaged AUC scores. The proposed method UCLSO attained the second-best average rank of 2.42, very close to COCOA, which attained the best average rank of 2.21. Interestingly, UCLSO attained more first ranks (six) than COCOA (two). ECC performed better than UCLSO on six of the datasets, but better than COCOA on only three; COCOA outperformed ECC on the remaining nine. It is also interesting to note that ECC and CLR had higher rankings for the label-based macro-averaged AUC metric than for the macro-averaged F-Score, whereas simple BR still performed poorly. As ECC and CLR take label associations into consideration, in a binary relevance and a ranking fashion respectively, this helped improve their comparative performance. RAkEL, on the other hand, also takes label associations into account but is sensitive to the label subset size (value of k) and the specific label combinations, which can lead to an even higher degree of imbalance. The difference between the label-based macro-averaged AUC results and the F-Score results also indicates the importance of thresholding the predictions when deciding the relevance of a certain label.
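The last observation can be illustrated on a single label. The following Python sketch uses hypothetical relevance scores for one imbalanced label (two relevant and eight irrelevant points, not taken from the experiments), and the helper names `f1_at_threshold` and `auc` are illustrative only. It shows that the AUC depends only on the ordering of the scores, whereas the F-Score changes with the decision threshold.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F-Score of the relevant class when scores >= threshold are predicted relevant."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    if 2 * tp + fp + fn == 0:
        return 0.0
    return 2 * tp / (2 * tp + fp + fn)


def auc(y_true, scores):
    """AUC as the fraction of (relevant, irrelevant) pairs ordered correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores for one label with 2 relevant and 8 irrelevant points.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.40, 0.30, 0.35, 0.10, 0.05, 0.20, 0.15, 0.02, 0.08, 0.12]

auc_value = auc(y_true, scores)                      # threshold-free
f1_default = f1_at_threshold(y_true, scores, 0.5)    # default threshold
f1_tuned = f1_at_threshold(y_true, scores, 0.3)      # lower, label-specific threshold
```

With these scores the AUC is 0.9375, while the F-Score is 0 at a threshold of 0.5 and 0.8 at a threshold of 0.3. A method can therefore rank the points of a minority label well and still obtain a low F-Score if its threshold is poorly chosen.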

The multiple classifier comparison results in Figure 2 show that, when UCLSO is compared with the other methods, the null hypothesis could not be rejected for COCOA, ECC, CLR and IRUS in this case with a significance level of α = 0.05. However, UCLSO performed significantly better than RAkEL, SMOTE-EN, THRSEL and BR. Overall, the experiments demonstrate the effectiveness of the proposed method UCLSO, as it outperforms the compared state-of-the-art algorithms in almost all cases.
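The fractional ranks reported in Table 3 (for instance 8.5 for two methods tied at positions 8 and 9) and the average ranks in its last row can be reproduced along the following lines. This is a minimal Python sketch rather than the evaluation code used for the paper: the function names are hypothetical, and ties are detected by exact equality of the rounded scores printed in the table.

```python
def fractional_ranks(scores):
    """Rank scores in descending order (rank 1 = best); tied scores share the mean rank."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next score equals the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # mean of 1-based positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def average_ranks(score_rows):
    """Average the per-dataset ranks of each method over all datasets."""
    per_dataset = [fractional_ranks(row) for row in score_rows]
    return [sum(col) / len(per_dataset) for col in zip(*per_dataset)]


# AUC scores of the yeast and emotions rows of Table 3, in the column order
# UCLSO, COCOA, THRSEL, IRUS, SMOTE-EN, BR, CLR, ECC, RAkEL.
yeast = [0.666, 0.711, 0.576, 0.658, 0.582, 0.576, 0.650, 0.705, 0.641]
emotions = [0.819, 0.844, 0.687, 0.802, 0.698, 0.687, 0.796, 0.850, 0.797]

yeast_ranks = fractional_ranks(yeast)
two_dataset_average = average_ranks([yeast, emotions])
```

Here `yeast_ranks` matches the ranks printed in the yeast row of Table 3, with THRSEL and BR sharing rank 8.5. Passing all twelve rows to `average_ranks` gives the quantities summarised in the last row of the table.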

Table 3: Each cell shows the label-based macro-averaged AUC score, with the relative rank of the corresponding algorithm in brackets (rank 1 is the best score). The last row shows the average ranks.

Dataset     UCLSO      COCOA        THRSEL       IRUS         SMOTE-EN   BR           CLR          ECC          RAkEL
yeast       0.666 (3)  0.711 (1)    0.576 (8.5)  0.658 (4)    0.582 (7)  0.576 (8.5)  0.650 (5)    0.705 (2)    0.641 (6)
emotions    0.819 (3)  0.844 (2)    0.687 (8.5)  0.802 (4)    0.698 (7)  0.687 (8.5)  0.796 (6)    0.850 (1)    0.797 (5)
medical     0.967 (1)  0.964 (2)    0.869 (7.5)  0.955 (3.5)  0.873 (6)  0.869 (7.5)  0.955 (3.5)  0.952 (5)    0.856 (9)
cal500      0.550 (4)  0.558 (2)    0.509 (8.5)  0.545 (5)    0.512 (7)  0.509 (8.5)  0.561 (1)    0.557 (3)    0.528 (6)
rcv1-s1     0.919 (1)  0.889 (3)    0.643 (7.5)  0.882 (4)    0.626 (9)  0.643 (7.5)  0.891 (2)    0.881 (5)    0.728 (6)
rcv1-s2     0.912 (1)  0.882 (2.5)  0.640 (7.5)  0.880 (4)    0.622 (9)  0.640 (7.5)  0.882 (2.5)  0.874 (5)    0.721 (6)
rcv1-s3     0.956 (1)  0.880 (2)    0.633 (7.5)  0.872 (4.5)  0.628 (9)  0.633 (7.5)  0.877 (3)    0.872 (4.5)  0.718 (6)
enron       0.719 (5)  0.752 (1)    0.597 (8.5)  0.738 (3)    0.619 (7)  0.597 (8.5)  0.720 (4)    0.750 (2)    0.650 (6)
bibtex      0.844 (4)  0.877 (2)    0.673 (8.5)  0.894 (1)    0.706 (6)  0.673 (8.5)  0.811 (5)    0.873 (3)    0.696 (7)
llog        0.721 (1)  0.663 (4)    0.518 (7.5)  0.676 (2)    0.561 (6)  0.518 (7.5)  0.612 (5)    0.673 (3)    0.514 (9)
corel5k     0.695 (4)  0.718 (3)    0.559 (7.5)  0.687 (5)    0.596 (6)  0.559 (7.5)  0.740 (1)    0.723 (2)    0.552 (9)
slashdot    0.806 (1)  0.774 (2)    0.632 (8.5)  0.753 (4)    0.714 (6)  0.632 (8.5)  0.742 (5)    0.765 (3)    0.638 (7)
Avg. ranks  2.42       2.21         8.00         3.67         7.08       8.00         3.58         3.21         6.83

Figure 2: Critical difference plots for (a) the label-based macro-averaged F-Score and (b) the label-based macro-averaged AUC. The scale indicates the average ranks. Methods that are not connected by a horizontal line are significantly different with a significance level of α = 0.05.

6 CONCLUSION AND FUTURE WORK

In this work we have proposed an algorithm to address the class imbalance of labels in multi-label classification problems. The proposed algorithm, Unsupervised Clustering and Label-Specific data Oversampling (UCLSO), oversamples label-specific minority datapoints in a multi-label problem to balance the sizes of the majority and the minority classes of each label. The oversampling of the minority class for each label is done in such a way that more minority class samples are generated in regions (or clusters) where the density of minority points is high. This avoids the introduction of minority datapoints in majority regions of the input space. The number of samples introduced per cluster also depends on the share of the minority class for that cluster.
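The cluster-wise allocation described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the exact UCLSO procedure: we assume that the cluster assignments are already available from any clustering of the features, that the number of synthetic points per cluster is proportional to the cluster's share of the minority points, and that each synthetic point is a random interpolation between two minority points of the same cluster. The function name `oversample_label` and the toy data are hypothetical.

```python
import random


def oversample_label(X, y, clusters, seed=0):
    """Return synthetic minority points for one label.

    X: list of feature vectors; y: 0/1 relevance of this label for each point;
    clusters: cluster id of each point, computed once on the features only.
    """
    rng = random.Random(seed)
    minority = 1 if 2 * sum(y) < len(y) else 0
    n_min = sum(1 for v in y if v == minority)
    deficit = (len(y) - n_min) - n_min  # points needed to balance the two classes

    # Group the minority points of this label by cluster.
    by_cluster = {}
    for x, v, c in zip(X, y, clusters):
        if v == minority:
            by_cluster.setdefault(c, []).append(x)
    # Assumption: interpolation needs at least two minority points in a cluster.
    usable = {c: pts for c, pts in by_cluster.items() if len(pts) >= 2}
    total = sum(len(pts) for pts in usable.values())

    synthetic = []
    for c, pts in usable.items():
        # Assumed allocation rule: quota proportional to the cluster's share of
        # minority points (rounding means quotas may not sum exactly to deficit).
        quota = round(deficit * len(pts) / total)
        for _ in range(quota):
            a, b = rng.sample(pts, 2)  # two distinct intra-cluster minority points
            t = rng.random()
            synthetic.append([ai + t * (bi - ai) for ai, bi in zip(a, b)])
    return synthetic


# Toy data (hypothetical): cluster 0 holds 4 minority and 2 majority points,
# cluster 1 holds 2 minority and 10 majority points.
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 2.0], [2.0, 0.5],
     [10.0, 10.0], [11.0, 11.0]] + [[9.0 + 0.3 * i, 12.0] for i in range(10)]
y = [1, 1, 1, 1, 0, 0, 1, 1] + [0] * 10
clusters = [0] * 6 + [1] * 12

synthetic = oversample_label(X, y, clusters)
```

On this toy data the deficit of six minority points is split four to two between the clusters, matching their shares of the minority points, and every synthetic point lies between minority points of its own cluster. Because the clusters are shared by all labels, only `y` changes from one label to the next.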

An experiment with 12 well-known multi-label datasets and other state-of-the-art algorithms demonstrates the efficacy of UCLSO with respect to the label-based macro-averaged F-Score. UCLSO attained the best average rank, and its improvement over the existing approaches was statistically significant for all of them except COCOA and RML. This shows that UCLSO has successfully improved the classification of imbalanced multi-label data. In the future, we would like to incorporate imbalance-informed clustering to extend our scheme. Moreover, it would be interesting to amalgamate the oversampling technique with label-associated learning, another key component of multi-label data.

ICAART 2023 - 15th International Conference on Agents and Artificial Intelligence