Improving the Engagement of Participants by Interacting with Agents who Are Persuaded During Decision-Making

Yoshimasa Ohmoto (a) and Hikaru Nakaaki

Faculty of Informatics, Shizuoka University, Hamamatsu-shi, Shizuoka-ken, Japan

Keywords:

Human-Agent Interaction, Human Factors, Persuade, Engagement.

Abstract:

Collaborative task execution that leverages the respective strengths of humans and artificial agents can solve problems more effectively than either working separately. The important point is that humans have a mental attitude of being willing to transform their own opinions through active interaction with the agent. However, people often do not interact actively with agents because they consider them to be mere information providers. In this study, our idea was to increase participant engagement by having participants experience interactions in which the agent is persuaded by the participant in a decision-making task. We performed an experiment to analyze whether interaction with an agent that implements a persuasion interaction model could enhance the user’s sense of self-efficacy and engagement in the task. The participants’ behavior and the questionnaire results indicated that persuading the interaction partner generally improves engagement in the interaction. On the other hand, the results suggested that the prior experience of persuading the interaction partner, or of the partner agreeing, influenced subsequent engagement and the subjective evaluation of the interaction.

1 INTRODUCTION

We believe that the final form of the interface between humans and artifacts can be roughly classified into two types: “transparent,” in which the interface (and its accompanying artifacts) can be employed without concern for its presence, and “projective,” in which the presence of the interface plays a crucial role in addition to the functions it offers. The “transparent” interface is ultimately the one in which the absence of interaction (or of the perception of interaction) works best. Numerous common user-friendly interfaces and human-supporting artifacts are oriented this way. However, interfaces are often oriented toward a “projective” form in situations that require agents. For instance, it has been reported that in idea support situations, idea generation is facilitated by communication with others (Sannomiya, 2015; Chen et al., 2019). In decision-making situations, some studies have demonstrated that agents enhance users’ impressions of the decision-making process and their positive attitude toward decision-making by adding the agents’ subjective opinions to their proposals (Ohmoto et al., 2014).

(a) https://orcid.org/0000-0003-2962-6675

Traditional interactive systems often exist as tools that passively help humans perform a specific task and discover the decision-making factors in which users are interested (Raux et al., 2005; Misu et al., 2011). In human-agent interaction, the user must recognize that agents are more than just information providers. For example, when the agent is merely an information presentation interface, its recommendations and any opinions that oppose the user’s idea carry less weight with the user. Qiu and Benbasat (Qiu and Benbasat, 2009) proposed applying the perspective of social relationships between humans to the design of interfaces for software-based product recommendation agents, providing value beyond the functionality design and practical aspects of decision support systems. The results of their laboratory experiment show that employing humanoid embodiment and human voice-based communication significantly affects users’ perceptions of social presence, which in turn improves users’ trusting beliefs, perceptions of enjoyment, and their intentions to employ the agent as a decision aid.

However, for multiple entities to accomplish a task cooperatively by leveraging their respective strengths, it is crucial to accomplish the task while dynamically changing the roles and initiatives of both parties. Such interaction techniques include “Mixed-Initiative Interaction” (Allen et al., 1999). Gianni et al. (Gianni et al., 2011) noted that challenges remain in achieving mutually initiated interaction between humans and agents. Additionally, to achieve mixed-initiative interaction, each participant in the interaction must continuously interact with a certain degree of spontaneity. A state in which a participant acts spontaneously in this way is considered a state of high engagement with the tasks and interactions.

Ohmoto, Y. and Nakaaki, H.

Improving the Engagement of Participants by Interacting with Agents who Are Persuaded During Decision-Making.

DOI: 10.5220/0011803600003393

In Proceedings of the 15th International Conference on Agents and Artificial Intelligence (ICAART 2023) - Volume 3, pages 923-930

ISBN: 978-989-758-623-1; ISSN: 2184-433X

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

A possible way to enhance user engagement is to increase self-efficacy (Bandura, 1977). Self-efficacy is the state of knowing that one can achieve a certain goal. The higher the self-efficacy, the more proactive one is said to be in achieving a goal. Some studies aim to enhance human work motivation for monotonous and boring tasks by increasing self-efficacy (Fujino et al., 2007). In these studies, humans were required to convince a character agent of the task’s importance, and work motivation was demonstrated to improve for tasks after the agent was persuaded. This suggests that the awareness of trying to persuade the agent enhances human engagement, and that self-efficacy improves when the agent can be persuaded.

This study’s final goal is to develop a human-agent interaction model that can realize mixed-initiative interaction. To accomplish this, it is crucial to induce and maintain the engagement of the participants in the interaction. In this study, our idea was to increase participant engagement by having participants experience interactions in which the agent is persuaded by the participant in a decision-making task. We proposed a persuasion interaction model and examined whether interaction with an agent that implemented the model could enhance the user’s sense of self-efficacy and engagement.

2 INTERACTION MODEL FOR PERSUADED AGENTS

Numerous studies have analyzed ways to enhance engagement (e.g., Kotze, 2018). In these studies, having a sense that one’s actions affect one’s collaborative partners and the environment was considered to play a crucial role. When performing tasks that involve actual work, it is easier to feel that one has made an impact on others and the environment because the results of the work provide feedback. However, in tasks that focus on discussion and decision-making, it is challenging to obtain explicit feedback and to feel that one has changed others and the environment during the task. In this study, we hypothesized that having users experience interactions in which they persuade the agent in a decision-making task would make them feel, in a relatively explicit way, that they have changed others, which could enhance their engagement.

To persuade others, the persuader must actively interact with the person being persuaded. Making users aware that they must persuade the agent is therefore expected to prompt active involvement in tasks that require interaction with the agent. Additionally, since proactive decision-making can increase confidence in one’s abilities (Siebert et al., 2020), we expect that interactions that persuade the agent will increase self-efficacy.

2.1 Persuasion Interaction with Agents

The agent to be persuaded initially expresses an opinion that differs from the opinion of the interacting user in situations where decision-making is needed during the task. Through the human-agent interaction, the agent is encouraged by the user to change its opinion and finally adopts another opinion that conforms to the user’s intention. Here, this is called “persuasion.” Our preliminary experiments, using an agent fully controlled by the experimenter, confirmed that the persuasion interaction between the user and the agent to be persuaded often proceeds as follows:

1. Introduction Phase. The agent encourages the user to express his/her opinions and tries to clarify the user’s intentions and thoughts in decision-making situations. The user may express an ambiguous opinion. However, the agent infers the important factors of the user’s opinion as far as possible. After the user’s utterances, the agent expresses an opinion that is opposite to the user’s or does not immediately agree with the user.

2. Persuasion Phase. The agent encourages the user to argue against the agent’s opinion. First, the agent makes an utterance agreeing with part of the user’s opinion or incorporating a bit of the user’s argument. Then, depending on the content of the user’s response, the agent expresses reasons why the agent’s proposal is better. Based on the user’s objections to the agent’s opinion, the agent infers the factors that are important to the user and suggests points where it can compromise.

3. Agreement Phase. As the interaction progresses, the agent shows the user a gradual shift in the agent’s opinion from disagreeing to agreeing with the user. At the end of the persuasion interaction, the agent plainly agrees with the user’s opinion, indicating that the agent has changed its opinion in response to the user’s persuasion.


2.2 Implemented Interaction Model

For the user to feel that the agent has been persuaded by the user, the agent must change its response according to how the user interacts and the progress of the conversation. In this study, we categorized the attitudes and the responses of users and agents in their persuasion interactions. The corresponding agent responses were then determined according to the user’s attitude and the progress of the conversation. Table 1 summarizes the agent’s reaction corresponding to each user’s attitude and stage of the conversation.

Argument. Utterances that reinforce one’s opinion, such as expressing a position, showing an advantage, or expressing one’s thoughts, are considered “arguments.” This includes rebuttals but does not consider whether the content of the utterance is valid or not.

Pointed Out (User Only). Utterances in which the user denies the agent’s position or arguments, points out a fault, or the like are considered “pointed out.” A refutation of the agent’s previous utterance, however, is an “argument.”

Question. Utterances that ask for opinions or confirmation from others are considered “questions.” If the user asks a question that the agent can answer with “yes” or “no,” the agent always responds appropriately according to the content of the question.

Neutral. Utterances that do not amount to a denial of the other party, or that resemble thinking aloud or a monologue (e.g., “What should I do?”), are considered “neutral.” When a user makes an utterance that shows agreement with or understanding of the agent, it is also treated as a neutral utterance. In other words, the agent does not persuade the user.

Suggestion. Regardless of position, utterances that are necessary for the benefit of the whole are considered “suggestions,” for example, “We should decide about XX.” These are suggestions that require consideration regardless of the position of either the user or the agent.

Acceptance (Agent Only). Positive utterances such as agreeing with the user’s opinion or showing empathy are considered “acceptance.” The agent always makes an utterance of acceptance at the end of a conversation. This encourages the user’s willingness to persuade.

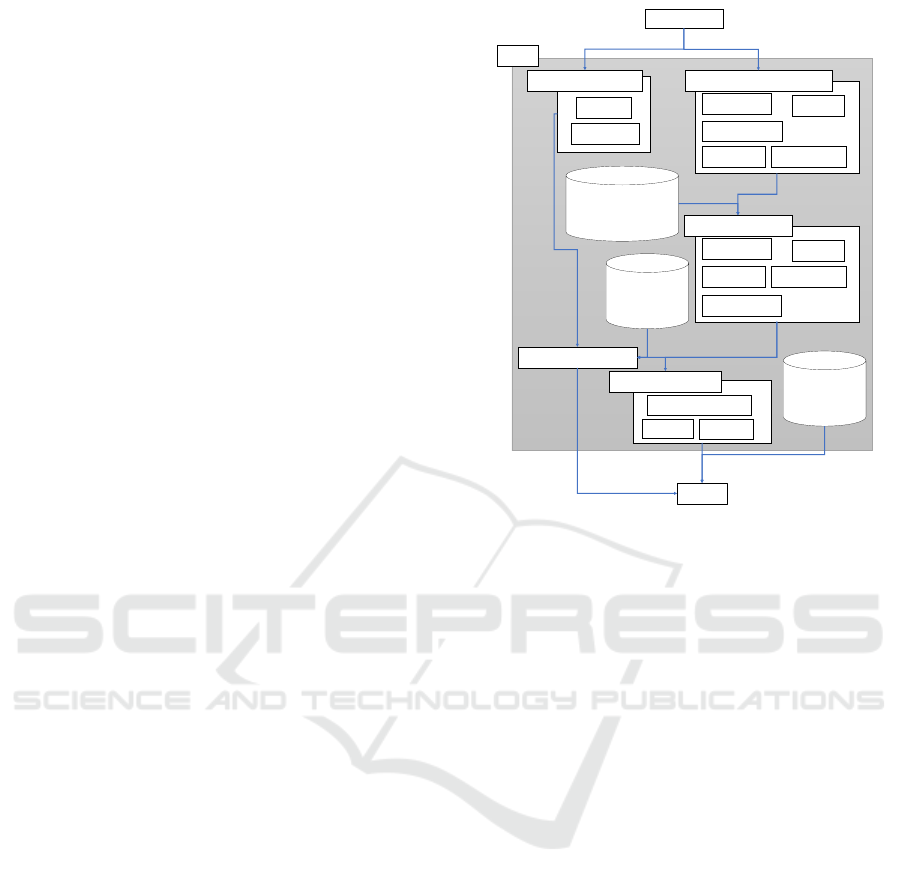

Figure 1: The architecture of an agent that implements the persuaded actions. (Components: voice input; attitude classification into Argument, Pointed out, Question, Neutral, and Suggestion; user information holding Position and Argument; agent reaction of Argument, Acceptance, Question, Neutral, or Suggestion, based on pre-defined correspondence rules; utterance database and behavior database; output utterance and output behavior, including facial expression, emotion, and gesture.)

Figure 1 shows the architecture of an agent that implements the above user attitudes and the agent’s responses to them as persuaded actions in a decision-making situation. The user information component retains the user’s position and arguments from the user’s utterances. The attitude classification component estimates and classifies the user’s attitude according to the user’s utterances and the conversation scenario. The agent reaction component determines the agent’s reaction according to the correspondence in Table 1. The agent’s behavior is then selected from a predefined database by integrating the agent’s reaction, the phase of the decision-making scene, and the content that has already appeared in the conversation.
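The correspondence in Table 1 between user attitude, conversation phase, and agent reaction can be sketched as a simple lookup. The following Python is a minimal illustration, not the authors' implementation: the names `PHASES`, `REACTIONS`, and `candidate_reactions` are hypothetical, and the middle column of Table 1 is assumed to refer to the second half of the persuasion phase. How the agent chooses between the two candidate reactions is not specified in the text, so the function returns both.

```python
# Candidate agent reactions per user attitude and phase, transcribed from Table 1.
# The phase keys are illustrative names, not identifiers from the original system.
PHASES = ("persuasion_first_half", "persuasion_second_half", "agreement")

REACTIONS = {
    "Argument":    (("Argument", "Suggestion"), ("Question", "Neutral"),    ("Neutral", "Acceptance")),
    "Pointed out": (("Argument", "Question"),   ("Question", "Neutral"),    ("Neutral", "Acceptance")),
    "Question":    (("Argument", "Suggestion"), ("Argument", "Acceptance"), ("Suggestion", "Acceptance")),
    "Neutral":     (("Argument", "Question"),   ("Suggestion", "Neutral"),  ("Neutral", "Acceptance")),
    "Suggestion":  (("Argument", "Question"),   ("Question", "Neutral"),    ("Neutral", "Acceptance")),
}


def candidate_reactions(attitude, phase):
    """Return the pair of candidate agent reactions for a classified user attitude."""
    if attitude not in REACTIONS:
        raise ValueError(f"unknown user attitude: {attitude!r}")
    if phase not in PHASES:
        raise ValueError(f"unknown phase: {phase!r}")
    return REACTIONS[attitude][PHASES.index(phase)]
```

For example, `candidate_reactions("Pointed out", "persuasion_first_half")` returns the pair `("Argument", "Question")`. Note that every attitude maps to a pair containing "Acceptance" in the agreement phase, which matches the description that the agent always ends with an utterance of acceptance.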

3 EXPERIMENT

An experiment was conducted employing an agent that implements the interaction model described above to test the hypothesis that the human persuasion of an agent increases self-efficacy and engagement in a task. In the experiment, participants conducted a decision-making task while conversing with an agent; each participant performed two different tasks and interacted with an agent with a different behavior model for each task. One behavioral model was the “persuaded agent,” which naturally conducted conversations in which it was persuaded in decision-making situations, and the other was the “agreeing agent,” which agreed to human suggestions. Participants’ conversations during the experiment were video-recorded and used for evaluation. Participants completed a questionnaire in each session and, at the end of the experiment, a questionnaire directly comparing the two sessions.

Table 1: The agent’s reaction corresponding to each user’s attitude and phase of the conversation.

| User’s attitude | Persuasion phase (first half) | Persuasion phase (second half) | Agreement phase |
| --- | --- | --- | --- |
| Argument | Argument, Suggestion | Question, Neutral | Neutral, Acceptance |
| Pointed out | Argument, Question | Question, Neutral | Neutral, Acceptance |
| Question | Argument, Suggestion | Argument, Acceptance | Suggestion, Acceptance |
| Neutral | Argument, Question | Suggestion, Neutral | Neutral, Acceptance |
| Suggestion | Argument, Question | Question, Neutral | Neutral, Acceptance |

3.1 Description of the Task

In the experiment, each participant performed both the “Persuaded condition,” employing the persuaded agent, and the “Agreeing condition,” using the agreeing agent. Participants were instructed to persuade the agents in a decision-making scene if necessary. The content of the decision-making situations in each session was different; however, the structure of the conversation was kept the same. In each session, there were two different decision-making scenes. Each session included two agents and a participant in the conversation; the participant was told that the two agents and the participant had equal positions. The appearance of each agent in each session was different.

3.1.1 Common

Common to both sessions was a scenario in which the participant worked with two agents to write an introductory article on a given tourist destination as a school assignment. In both conditions, the task followed the same flow: 1. ice-breaker, 2. first decision-making scene, 3. chatting scene with scene changes, 4. second decision-making scene. In each decision-making scene, the participants were instructed to express their ideas about a course of action and to reach a decision under which everyone, including the agents, would take the same action. In each decision-making scene, they expressed their opinions twice, and in each case, they were also encouraged to state the reasons for their opinions. The chatting scene provided, through conversation, a story connecting the preceding and following decision-making scenes. The content of the conversation in the chatting scene was unrelated to the course of action in the decision-making scenes.

Both the participants and agents were engaged in a voice conversation during the task. During the conversation, four options were displayed at the top of the screen to guide the participants’ statements and encourage them to keep their statements within a certain range. A similar system has been proposed by Tominaga et al. (Tominaga et al., 2021) and others. Since turn-taking is often not obvious in interactions with artificial agents, a “♢♢♢” icon was shown on the screen as an “interaction recommendation indicator” at the point when the participant was encouraged to interact with the agents. Participants could interact with the agents even when the indicator was not displayed. If the participant’s utterance agreed with an option, the option was shown in red to indicate that the choice had been made. Figure 2 is an example of the screen displayed in the experiment.

Figure 2: A screenshot of the experiment. (Labeled elements: guide options; interaction recommendation indicator.)

Conversation scenarios were prepared in advance, and both the timing of presenting the interaction recommendation indicator and the speech guide options presented to participants were determined according to these scenarios. The agents were set to be persuaded at the point when seven conversation turns had been performed with the agent, which was the same for all conditions.

Persuaded Condition. In the Persuaded condition, a scenario was set up in which the participants were to visit a museum, cover the event, and buy a souvenir to take home. In the first decision-making scene, the task was to decide which part of the museum to visit. In the second decision-making scene, the task was to decide which souvenir to buy. In this condition, one agent took a neutral position while the other agent took a position that conflicted with the participant’s opinion. The agent taking the neutral position was swapped in each decision-making scene.

Figure 3: The experimental setting. (Diagram labels: participant; immersive display; display of the agents’ behavior; interaction control PC with agent status and scenario status; WoZ environment with the experimenter, the monitoring PC, and the category of the participant’s voice input.)

Agreeing Condition. In the Agreeing condition, a scenario was set up in which the participants would conduct interviews at a garden park and then leave from there. In the first decision-making scene, the task was to decide the division of roles during the interview. In the second decision-making scene, the task was to decide on the public transportation to use to return home after the interview. In this condition, one agent took a neutral position while the other agent took a position agreeing with the participant’s opinion. The agent taking the neutral position was swapped in each decision-making scene.

3.2 Experimental Setting

Figure 3 illustrates the experimental setting. We employed the Immersive Collaborative Interaction Environment (ICIE) (Nishida et al., 2014) and Unity (http://unity3d.com/) to construct the virtual environment and the two agents. The ICIE employs a 360-degree immersive display consisting of eight 65-inch LCD monitors in portrait orientation arranged in an octagon. Participants in the experiment stood in the center of the displays and interacted by voice with the two agents on the displays. The participants’ utterances were transmitted to the operator through a microphone, and the operator controlled the agents according to the utterances based on predefined rules (Wizard of Oz: WoZ). The participant’s voice was recorded using microphones. The experimenter sat out of the participant’s sight and observed the participant’s behavior. The agents presented their suggestions and other content by voice.

3.3 Procedure

First, the participants were instructed about the experiment and practiced interacting with the agents. A session of the decision-making task was started after it was determined that the participant was sufficiently proficient in interacting with the agents. Two sessions were conducted in the entire experiment. After each session, the participant completed a questionnaire about their conversations during the task. Each session lasted around 30 minutes, and the entire experiment lasted about 1 hour.

The interaction during the experiment proceeded based on the participant’s utterances. The experimenter, acting as the WoZ operator, classified the utterances according to predetermined rules and entered the resulting category. The agents’ utterances were then selected according to predetermined rules based on the entered category. Based on the input from the WoZ operator, the decision-making task proceeded automatically.

Twenty-eight undergraduate students participated in the experiment (22 male and 6 female). The participants ranged in age from 19 to 24 years, with an average age of 20.9 years (standard deviation: 2.78). Fourteen of the participants first interacted with the agents in the Persuaded condition (P-first group). The remaining fourteen participants first interacted with the agents in the Agreeing condition (A-first group).

3.4 Result

Speech latency was measured as an index to infer the participant’s willingness to participate in the conversation in a decision-making task. Some researchers report that speech reaction time is related to the divergent/convergent phases in discussion (Ichino, 2011) and to the human mental state in social interactions with agents (Bechade et al., 2015; Ono et al., 2016). Based on the speech recorded in the video, speech latency was defined as the period between the display of the interaction recommendation indicator and the participant’s utterance. For utterances made when the interaction recommendation indicator was not displayed, the latency was defined as 0 if the agent was speaking; otherwise, it was defined as the time since the previous agent utterance.
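The latency definition above has three cases, which can be sketched as a small function. This is an illustrative Python sketch only: the function name, parameter names, and the use of seconds on a common clock are assumptions, and "the time since the previous agent utterance" is assumed here to be measured from the end of that utterance.

```python
def speech_latency(utterance_start, indicator_shown_at=None,
                   agent_speaking=False, previous_agent_utterance_end=None):
    """Latency in seconds for one participant utterance.

    All times are seconds on the same clock (for example, video time).
    """
    if indicator_shown_at is not None:
        # Indicator was displayed: time from its display to the utterance.
        return utterance_start - indicator_shown_at
    if agent_speaking:
        # No indicator, and the participant spoke while the agent was speaking.
        return 0.0
    if previous_agent_utterance_end is None:
        raise ValueError("need the end time of the previous agent utterance")
    # No indicator and the agent was silent: time since its previous utterance.
    return utterance_start - previous_agent_utterance_end
```

Averaging these values per participant and condition would give the quantities compared in the analysis of speech latency.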

The post-session questionnaire consisted of six questions regarding self-efficacy, engagement in the interaction, and satisfaction with the interactions during the session, each answered on a 7-point Likert scale. The questionnaire administered after all sessions consisted of three items that directly compared the two sessions, asking in which of the two sessions self-efficacy, engagement in the interaction, and satisfaction with the interactions were felt more strongly.


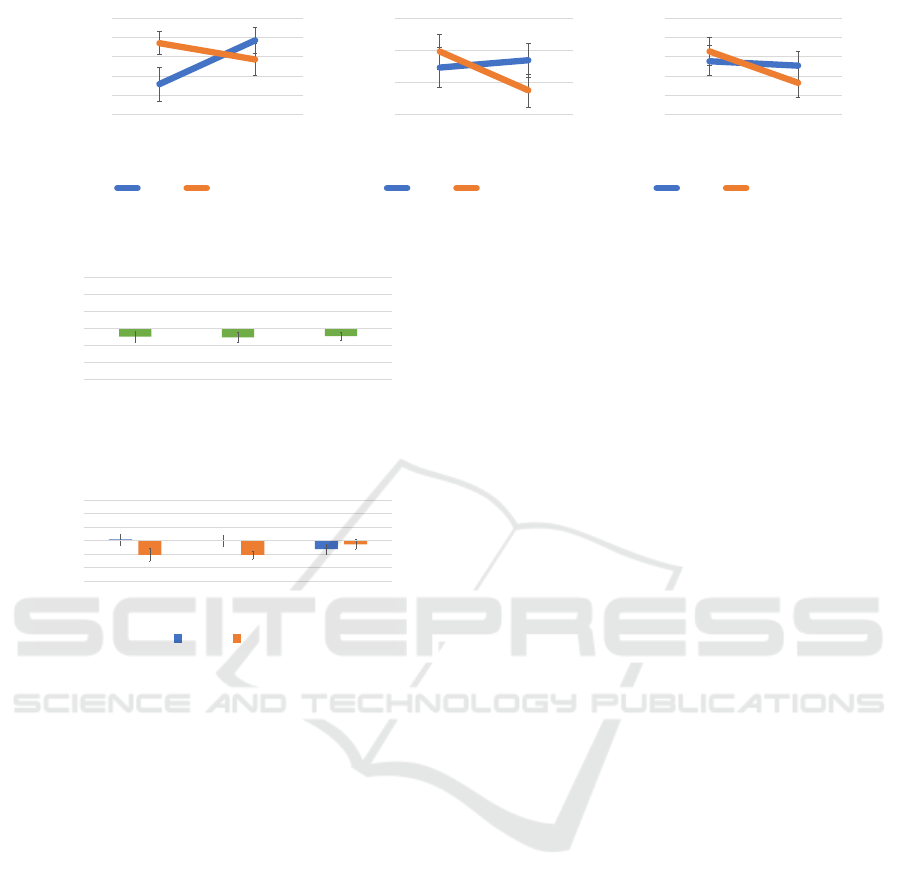

3.4.1 Speech Latency

Figure 4 shows the means and standard errors of speech latency. We performed a two-way analysis of variance (Group: P-first or A-first × Condition: Persuaded or Agreeing).

Figure 4: The speech latency between groups. (Axes: speech latency (s) by condition, Persuaded or Agreeing; series: P-first and A-first.)

Figure 5: The speech latency between scenes. (Axes: speech latency (s) by scene, first or second; series: Persuaded and Agreeing.)

The findings indicated a significant main effect of condition (F(1, 26) = 5.64, p = 0.025; Persuaded < Agreeing). The numerical difference is small. However, the fact that a significant difference was found even though explicit triggers for the participants’ utterances were provided suggests that, in the Persuaded condition, participants grasped their partner’s utterances well and quickly formulated their own opinions in response. Thus, the result suggests that participants’ engagement with the interaction was increased in the Persuaded condition.

We measured participants’ speech latency in each of the two decision-making scenes in one session to examine changes in participants’ engagement over time. Figure 5 shows the means and standard errors of the speech latency. We performed a two-way analysis of variance (Scene: first or second × Condition: Persuaded or Agreeing) on these data.

The findings revealed a marginally significant difference in Scene (F(1, 27) = 4.13, p = 0.052; first < second). In other words, in both the Persuaded and Agreeing conditions, the speech latency tended to be longer in the second scene. This suggests that engagement may decrease as participants come to understand the prepared scenarios.

3.4.2 Questionnaires for Each Session

Questions were asked regarding self-efficacy, engagement in the interaction, and satisfaction with the interaction during each session. All participants answered the self-efficacy question; however, one participant in the P-first group did not answer the questions on engagement and satisfaction, so this participant was excluded from the analyses of those two measures. Figure 6 shows the means and standard errors for each measure. We performed a two-way analysis of variance (Group: P-first or A-first × Condition: Persuaded or Agreeing) on these data.

Self-Efficacy.

The results demonstrated that the interaction was significant (F(1, 26) = 7.87, p = 0.0094). A simple main effect test revealed that in the P-first group, scores were significantly lower in the Persuaded condition than in the Agreeing condition (F(1, 26) = 8.32, p = 0.0078). Another simple main effect test demonstrated that in the Persuaded condition, there was a marginally significant difference between groups (F(1, 52) = 3.78, p = 0.058; P-first < A-first). This suggests that participants who had not yet experienced being agreed with by their partner were less likely to feel a sense of self-efficacy when persuading, whereas participants who had already experienced being agreed with by their partner did not experience a decrease in self-efficacy when persuading.

Engagement in the Interaction.

The results demonstrated a marginally significant difference in condition (F(1, 25) = 4.01, p = 0.056; Persuaded > Agreeing). However, since the interaction was significant (F(1, 25) = 8.65, p = 0.0069), simple main effects were tested. The findings demonstrated that in the A-first group, the scores in the Agreeing condition were significantly lower than those in the Persuaded condition (F(1, 25) = 12.22, p = 0.0018). This suggests that participants who had not yet experienced persuasion were less likely to become engaged when the other party simply agreed without persuasion. The results also indicate that participants who had already experienced persuasion did not show a significant decrease in engagement when the other party agreed without persuasion.

Satisfaction with the Interactions.

The results demonstrated a marginally significant difference in condition (F(1, 25) = 3.41, p = 0.077; Persuaded > Agreeing). This suggests that persuading the agent may enhance interaction satisfaction, although the impact is not large.

3.4.3 Session-to-Session Comparison Questionnaire

To make direct comparisons between sessions, we analyzed the participants’ answers to the questionnaire items regarding self-efficacy, engagement in the interaction, and satisfaction with the interactions. Each item was coded with the midpoint as the reference (0), with the Persuaded condition in the positive direction and the Agreeing condition in the negative direction (+3 to -3). Figure 7 shows the results. A one-sample Wilcoxon signed-rank test was conducted for each item with the null hypothesis that the mean is 0. The findings showed a marginally significant difference in engagement in the interaction (p = 0.057; Persuaded > Agreeing).

Figure 6: The results of questionnaires regarding self-efficacy, engagement, and satisfaction with the interaction. (Three panels: score of self-efficacy, score of engagement in the interaction, and score of satisfaction with the interactions, each by condition, Persuaded or Agreeing; series: P-first and A-first.)

Figure 7: The results of questionnaires of direct comparisons between sessions. (Axes: questionnaire scores from -3 to 3 for self-efficacy, engagement in the interaction, and satisfaction with the interactions.)

Figure 8: The results of questionnaires of direct comparisons between sessions in each group. (Axes: questionnaire scores from -3 to 3 for self-efficacy, engagement in the interaction, and satisfaction with the interactions; series: P-first and A-first.)

Since the questionnaires for each session sometimes showed different trends by group, the comparison results were also tabulated separately for each group. Figure 8 shows the results. A one-sample Wilcoxon signed-rank test was conducted in the same way for each group. The findings demonstrated that there were no significant differences in the P-first group, while in the A-first group there was a marginally significant difference in self-efficacy (p = 0.070) and a significant difference in engagement in the interaction (p = 0.011), both with Persuaded > Agreeing.
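For readers unfamiliar with the one-sample Wilcoxon signed-rank test used on the comparison scores, the following Python sketch shows one common way to compute an exact two-sided p-value for a small sample. It is not the authors' analysis code: the function names are hypothetical, zero scores are assumed to be dropped, tied absolute values are assumed to receive average ranks, and the text does not state which variant or software the authors used. Because it enumerates all sign assignments, it is only practical for small groups such as the fourteen participants per group here.

```python
from itertools import product


def average_ranks(values):
    """Return 1-based ranks of `values`, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def signed_rank_p(scores):
    """Exact two-sided p-value of the signed-rank test against a center of 0."""
    nonzero = [s for s in scores if s != 0]  # zero scores carry no sign
    if not nonzero:
        return 1.0
    ranks = average_ranks([abs(s) for s in nonzero])
    observed = sum(r for r, s in zip(ranks, nonzero) if s > 0)
    centre = sum(ranks) / 2  # expected positive-rank sum under the null
    extreme = 0
    # Under the null, each rank is positive or negative with equal probability.
    for signs in product((0, 1), repeat=len(ranks)):
        w_plus = sum(r for r, keep in zip(ranks, signs) if keep)
        if abs(w_plus - centre) >= abs(observed - centre):
            extreme += 1
    return extreme / 2 ** len(ranks)
```

Here a score list would hold one coded answer (+3 to -3) per participant for a single questionnaire item.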

These results suggest that, when comparing the two interaction experiences overall, persuading produces positive, albeit not large, impressions of engagement and satisfaction. Additionally, the results show that participants’ positive impressions of self-efficacy and engagement were stronger when participants persuaded the agents after they had experienced an interaction in which the others agreed with them. However, this may have been influenced by impressions from the session conducted second.

4 DISCUSSION

The participants’ behavior and the results of the questionnaire demonstrated that, overall, persuading the interaction partner enhances engagement in the interaction. However, the experience of persuading the interaction partner and the experience of the partner agreeing influenced the subsequent engagement and subjective evaluation of the interaction. This suggests that participants formed criteria for their interactions with others based on their prior experiences and assessed their own experiences based on these criteria. In other words, to enhance engagement in a particular situation, it is crucial to comprehensively design the series of interactions leading up to that situation.

The findings of the questionnaire after the two

interaction sessions revealed that self-efficacy was

lower when the agents were persuaded in the first ses-

sion. However, engagement tended to score higher

in the Persuaded condition. To analyze the relation-

ship between these ratings, we computed the corre-

lation coefficients among the self-efficacy, engagement,

and satisfaction questionnaire scores in the Persuaded

and Agreeing conditions separately. Although there

was a strong correlation between engagement and

satisfaction (Persuaded = 0.78, Agreeing = 0.73),

self-efficacy was not strongly correlated with engage-

ment (Persuaded = 0.29, Agreeing = 0.17) or with

satisfaction (Persuaded = 0.39, Agreeing = 0.33). This

suggests that self-efficacy has no direct effect on

engagement in the current experimental task. Interac-

tion factors that can directly influence engagement

must therefore be considered.
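The pairwise correlation analysis described above can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the Pearson coefficient (the text does not say which coefficient was computed), the helper names `pearson_r` and `correlation_table` are hypothetical, and the ratings are placeholders rather than the questionnaire data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


def correlation_table(scores):
    """Return r for every unordered pair of measures in one condition."""
    names = list(scores)
    return {
        (a, b): pearson_r(scores[a], scores[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }


# Hypothetical placeholder ratings for one condition (NOT the study's data).
persuaded = {
    "self_efficacy": [4, 5, 3, 6, 4, 5],
    "engagement": [5, 6, 4, 6, 5, 7],
    "satisfaction": [5, 6, 4, 7, 5, 6],
}

for pair, r in correlation_table(persuaded).items():
    print(pair, round(r, 2))
```

Running the same table once per condition (Persuaded and Agreeing) gives the three coefficients per condition that the paragraph compares.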

In this experimental task, since the interactions

proceeded according to a predetermined decision-

making scenario, participants might not have fully

understood the need for persuasion in their interac-

tions with the agent and in the task. It is also possible

that, although the participants were allowed to speak

freely, the displayed speech options guided the direc-

tion of the interaction, so that participants were not

sufficiently aware that they had successfully persuaded

the agent with their own opinions. We would like to

test this in a conversation setting that allows more flexibility.

5 CONCLUSION

The aim of this study was to investigate whether

the interaction with an agent that implemented the

proposed persuasion model could enhance the user’s

sense of self-efficacy and engagement in the task. We

conducted an experiment employing an agent that im-

plements the interaction model described above to test

the hypothesis that the human persuasion of an agent

increases self-efficacy and engagement in a task. As a

result, the participants’ behavior and the results of the

questionnaire demonstrated that, overall, persuading

the interaction partner enhances engagement in the in-

teraction. However, the experience of persuading the

interaction partner and the experience of the partner

agreeing influenced the subsequent engagement and

subjective evaluation of the interaction. We believe

that persuasion interaction plays an important role in

having intelligent agents recognized as independent en-

tities with their own opinions, rather than as entities

that merely accept human commands. In the future, we would like

to examine whether persuasion interaction can con-

tribute to improving the quality of collaborative deci-

sion making.

REFERENCES

Allen, J. E., Guinn, C. I., and Horvitz, E. (1999). Mixed-

initiative interaction. IEEE Intelligent Systems and

their Applications, 14(5):14–23.

Bandura, A. (1977). Self-efficacy: toward a unifying

theory of behavioral change. Psychological review,

84(2):191.

Bechade, L., Dubuisson Duplessis, G., Sehili, M., and Dev-

illers, L. (2015). Behavioral and emotional spoken

cues related to mental states in human-robot social in-

teraction. In Proceedings of the 2015 ACM on Interna-

tional Conference on Multimodal Interaction, pages

347–350. ACM.

Chen, T.-J., Subramanian, S. G., and Krishnamurthy, V. R.

(2019). Mini-map: Mixed-initiative mind-mapping

via contextual query expansion. In AIAA Scitech 2019

Forum, page 2347.

Fujino, H., Ishii, H., and Shimoda, H. (2007). Sugges-

tion of an education method with an affective interface

for promoting employees’ work motivation in a routine

work. IFAC Proceedings Volumes, 40(16):409–414.

Gianni, M., Papadakis, P., Pirri, F., and Pizzoli, M. (2011).

Awareness in mixed initiative planning. In 2011 AAAI

Fall Symposium Series.

Ichino, J. (2011). Discriminating divergent/convergent

phases of meeting using non-verbal speech patterns.

In ECSCW 2011: Proceedings of the 12th Euro-

pean Conference on Computer Supported Coopera-

tive Work, 24-28 September 2011, Aarhus Denmark,

pages 153–172. Springer.

Kotze, M. (2018). How job resources and personal

resources influence work engagement and burnout.

African Journal of Economic and Management Stud-

ies, 9(2):148–164.

Misu, T., Sugiura, K., Kawahara, T., Ohtake, K., Hori, C.,

Kashioka, H., Kawai, H., and Nakamura, S. (2011).

Modeling spoken decision support dialogue and opti-

mization of its dialogue strategy. ACM Transactions

on Speech and Language Processing (TSLP), 7(3):10.

Nishida, T., Nakazawa, A., Ohmoto, Y., and Mohammad, Y.

(2014). Conversational Informatics: A Data-Intensive

Approach with Emphasis on Nonverbal Communica-

tion. Springer.

Ohmoto, Y., Kataoka, M., and Nishida, T. (2014). The ef-

fect of convergent interaction using subjective opin-

ions in the decision-making process. In Proceedings

of the Annual Meeting of the Cognitive Science Soci-

ety, volume 36.

Ono, T., Ichijo, T., and Munekata, N. (2016). Emergence

of joint attention between two robots and human us-

ing communication activity caused by synchronous

behaviors. In Robot and Human Interactive Com-

munication (RO-MAN), 2016 25th IEEE International

Symposium on, pages 1187–1190. IEEE.

Qiu, L. and Benbasat, I. (2009). Evaluating anthropomor-

phic product recommendation agents: A social re-

lationship perspective to designing information sys-

tems. Journal of management information systems,

25(4):145–182.

Raux, A., Langner, B., Bohus, D., Black, A. W., and Es-

kenazi, M. (2005). Let’s go public! taking a spoken

dialog system to the real world. In Ninth European

Conference on Speech Communication and Technol-

ogy.

Sannomiya, M. (2015). The effect of backchannel utter-

ances as a strategy to facilitate idea generation in a

copresence situation: Relationship to thinking tasks.

Osaka Human Sciences, 1:189–203.

Siebert, J. U., Kunz, R. E., and Rolf, P. (2020). Effects of

proactive decision making on life satisfaction. Euro-

pean Journal of Operational Research, 280(3):1171–

1187.

Tominaga, Y., Tanaka, H., Matsubara, H., Ishiguro, H.,

and Ogawa, K. (2021). Report of the case of con-

structing dialogue design patterns for agent system

based on the standard dialogue structure. Transac-

tions of the Japanese Society for Artificial Intelli-

gence, 36(5):AG21–F.

ICAART 2023 - 15th International Conference on Agents and Artificial Intelligence
