Brazilian Banknote Recognition Based on CNN for Blind People

Odalisio L. S. Neto¹, Felipe G. Oliveira², João M. B. Cavalcanti¹ and José L. S. Pio¹

¹ Institute of Computing (ICOMP), Federal University of Amazonas (UFAM), Manaus, Amazonas, Brazil

² Institute of Exact Sciences and Technology (ICET), Federal University of Amazonas (UFAM),

Keywords: Banknote Recognition, Convolutional Neural Network, Visually Impaired People, Accessibility.

Abstract: This paper presents an approach based on computer vision techniques for the recognition of Brazilian banknotes. The methods for identifying banknotes proposed by the Brazilian Central Bank are unreliable because banknotes are heavily damaged, relative to their original state, during daily use. This damage directly affects the recognition ability of the visually impaired. The proposed approach takes into account the second family of the Brazilian currency, the Real (plural Reais), covering notes of 2, 5, 10, 20, 50 and 100 Reais. The proposed strategy is composed of two main steps: i) Image Pre-Processing; and ii) Banknote Classification. In the first step, the images of Brazilian banknotes, acquired by smartphone cameras, are processed with the bilateral filter to reduce noise while preserving edges. In the banknote classification step, a feature learning process is performed to represent the main features for banknote image classification, and a Convolutional Neural Network (CNN) is used to classify the note denomination (value). Experiments demonstrated the effectiveness and robustness of the proposed approach, which achieved an accuracy of 99.103% on the proposed dataset of 6365 images of real banknotes in different environments and illumination conditions.

1 INTRODUCTION

Banknotes are the most widely used payment method worldwide and still constitute an essential part of monetary transactions (Joshi et al., 2020). Banknote recognition is a non-trivial task for visually impaired and blind people. This difficulty makes people with visual impairment vulnerable to fraud. Moreover, it creates a barrier to daily consumption activities, such as purchases and banking tasks (Sousa et al., 2020).

Studies estimated that in 2015 there were 36 million blind people globally, while moderate and severe vision impairment affected 216.6 million people (Bourne et al., 2017). According to the Brazilian National Health Survey of 2019, 6.978 million Brazilians have severe vision impairment or blindness (IBGE, 2019).

Banking institutions around the world that are responsible for manufacturing banknotes provide some strategies to facilitate banknote recognition by the visually impaired, such as different sizes, coded tactile structures, braille markings, irregular edges or tangible features (Joshi et al., 2020; Ng et al., 2020). However, tactile analysis is considerably difficult because intense banknote turnover damages the notes relative to their original state, resulting in incorrect identification. Figure 1 presents an example of a Brazilian banknote with damage that affects tactile analysis.

Figure 1: Example of Brazilian banknote with damage in tactile marks.

This paper presents an approach to recognizing Brazilian banknotes and assisting people with visual impairment in financial operations. The banknote denomination (value) is represented and classified through the proposed CNN architecture, with a specific learning process for the banknote domain. Experiments in real-world scenarios demonstrate the scalability of the proposed approach and show that the obtained results are accurate and reliable.

Our main contribution is to provide a robust and efficient approach, based on Deep Learning, to classify Brazilian banknotes, assisting the visually impaired in daily monetary transactions. Based on the proposed approach, it is possible to embed the learning model into a mobile application to support visually impaired people in monetary operations. We also highlight the dataset used in this research work, consisting of Brazilian banknotes in different environments and luminosity conditions. Furthermore, it is important to mention the variety of images, including crumpled banknotes and real acquisition scenarios.

Neto, O., Oliveira, F., Cavalcanti, J. and Pio, J. Brazilian Banknote Recognition Based on CNN for Blind People. DOI: 10.5220/0011796200003417. In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 5: VISAPP, pages 846-853. ISBN: 978-989-758-634-7; ISSN: 2184-4321. Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

This paper is organized as follows. In Section 2 we present the state of the art regarding banknote recognition. Section 3 presents the proposed methodology. We describe the experiments and discuss the obtained results in Section 4. Finally, in Section 5, we provide concluding remarks and discuss paths for future investigation.

2 RELATED WORK

Banknote analysis is a popular research area in Pattern Recognition. It has been the focus of intense investigation to achieve accurate and reliable results, with different approaches reported in the literature (Huang and Gai, 2020; Tan et al., 2020; Pham et al., 2019; Yousry et al., 2018). Several state-of-the-art works perform banknote image acquisition using different camera settings, including high-resolution cameras (Dittimi et al., 2017), low-resolution cameras (Sousa et al., 2020) and smartphone cameras (Ng et al., 2020). Other research works employ sensors, including contact image sensors (Sanghun Lee et al., 2017) and banknote readers (Tan et al., 2020). In this work, we use smartphone cameras to collect our proposed image dataset.

There are several research works analyzing distinct currencies, including the US dollar, the Euro and the Brazilian real (Sousa et al., 2020). Other works tackle banknotes from countries such as China (Sun et al., 2018), Hong Kong (Ng et al., 2020) and India (Joshi et al., 2020), among many others (Baykal et al., 2018; Yousry et al., 2018; Tan et al., 2020; Kongprasert and Chongstitvatana, 2019; Huang and Gai, 2020; Pham et al., 2019; Dittimi et al., 2017).

In this paper we address banknotes from Brazil, aiming at robust and effective banknote recognition. Unlike the works mentioned earlier, our image dataset is composed of banknotes in real situations, including crumpled banknotes, blurred images and images captured in non-ideal lighting conditions.

Banknote classification techniques typically identify counterfeit (Laavanya and Vijayaraghavan, 2019), soiled (Sanghun Lee et al., 2017), worn (Tan et al., 2020) or old (Sun et al., 2018) banknotes, or the nationality (Khashman et al., 2018) or denomination (value) (Ng et al., 2020) of banknotes. In some approaches, banknote features are represented through size, color and text (Abburu et al., 2017), Histogram of Oriented Gradients (HOG) (Dittimi et al., 2017), Oriented FAST and Rotated BRIEF (ORB) (Yousry et al., 2018), Discrete Cosine Transform (DCT) (Tan et al., 2020) and Quaternion Wavelet Transform (QWT) (Huang and Gai, 2020). Different approaches treat the classification problem using Fuzzy logic (Tan et al., 2020), Support Vector Machine (SVM) (Kongprasert and Chongstitvatana, 2019), Random Forest (Sousa et al., 2020), Neural Networks (Khashman et al., 2018) and Hamming distance (Yousry et al., 2018). In the proposed strategy, we address the problem of recognizing the banknote denomination (value). Furthermore, we use a Deep Learning technique to learn better features to depict the banknote characteristics.

State-of-the-art approaches tackle banknote recognition using Deep Learning architectures, including CNNs fully trained on their own banknote datasets (Ng et al., 2020; Huang and Gai, 2020; Baykal et al., 2018), a YOLO-CNN model (Joshi et al., 2020), AlexNet tuned through transfer learning (Laavanya and Vijayaraghavan, 2019), a combination of AlexNet, VGG-11, VGG-16 and ResNet-18 (Pham et al., 2019) and DenseNet-121 tuned through transfer learning (Sun et al., 2018). We also define our feature representation learning and classification process using a CNN architecture. However, unlike the mentioned works, our approach tackles images acquired in real scenarios, with different environments and lighting conditions. Additionally, the proposed approach considers images in distinct capturing settings, varying the positional relation between the camera and the banknote.

The closest work to ours is that of Thomas and Meehan (2021), who created a CNN for banknote recognition, assisting visually impaired people to identify different banknote values. The authors used data augmentation techniques to simulate partial banknote images, resembling those a blind or visually impaired person would take. The resulting model achieved an average accuracy rate of 94%. The model had some difficulty in correctly identifying some banknotes, likely due to issues such as poor lighting in the images. Our approach uses images with varied lighting conditions, which may significantly improve the model's robustness to illumination changes.


3 METHODOLOGY

Our problem can be summarized as follows:

Problem (Brazilian Banknote Value Recognition). Let $I = \{i_1, i_2, \ldots, i_n\}$ be a series of images provided by a smartphone camera. Also let $V = \{v_1, v_2, \ldots, v_k\}$ be a series of previously known banknote labels (values). Our main goal is to correctly associate an unknown Brazilian banknote image ($i_j$) with the corresponding banknote label ($v_w$), representing its value.

In this paper, we propose an approach for the au-

tomatic recognition of Brazilian banknotes, based on

visual features and deep learning classification. The

proposed approach tackles the recognition problem of

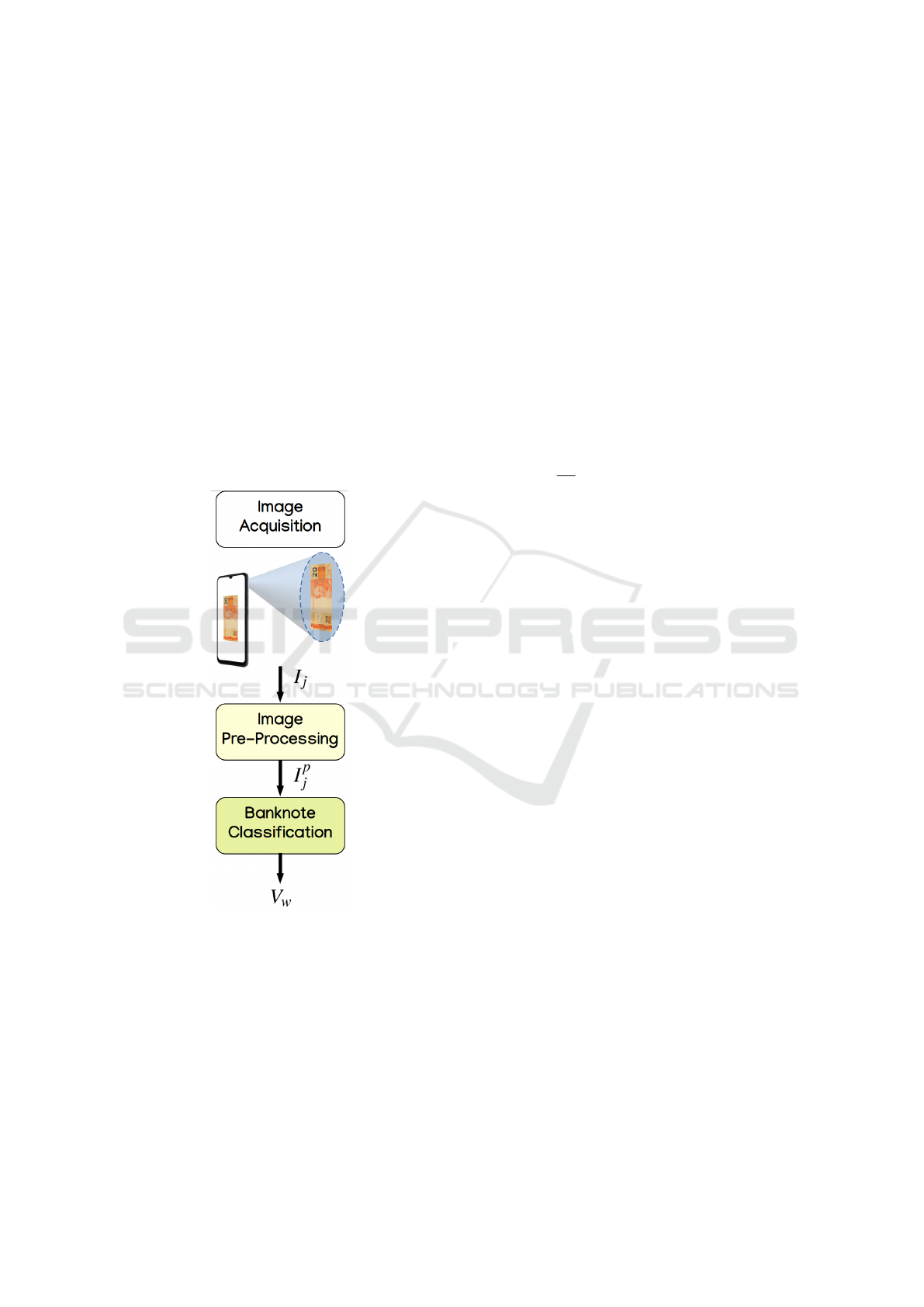

banknote denomination (value). An overview of the

proposed methodology is shown in Figure 2, whose

details will be presented in the next subsections.

Figure 2: Overview of the proposed approach for Brazilian

banknote recognition.

Figure 2 highlights the main steps to achieve Brazilian banknote classification. To reach this goal, images are acquired by the user and an image pre-processing stage is performed. Different visual aspects are then learned in a deep learning procedure, which captures the banknote features for an efficient classification.

3.1 Image Pre-Processing

In this methodology, the images (I) are initially acquired in the Red, Green and Blue (RGB) color model and then re-scaled to 640x480 pixels. After this, the raw images must be processed to highlight the main features that describe the banknote images.

To enhance the image quality for Brazilian banknote recognition, bilateral filtering is applied. The Bilateral Filter (BF) is a nonlinear weighted averaging filter in which the weights depend on both the spatial distance and the intensity distance with respect to the central pixel. The main feature of the bilateral filter is its ability to preserve edges while performing spatial smoothing. The filter is defined by the equation below:

$$BF(I)_p = \frac{1}{W_p} \sum_{q \in S} G_{\sigma_s}(\|p - q\|) \, G_{\sigma_r}(|I_p - I_q|) \, I_q \qquad (1)$$

where $p$ is a pixel location, $S$ is the spatial neighborhood of $p$, $q$ is every neighbor pixel in $S$, $G_{\sigma_s}$ is the spatial domain kernel and $G_{\sigma_r}$ is the intensity range kernel. $W_p$ is the normalization factor, as defined below:

$$W_p = \sum_{q \in S} G_{\sigma_s}(\|p - q\|) \, G_{\sigma_r}(|I_p - I_q|) \qquad (2)$$

In this stage, every banknote image ($I_j$) is smoothed, reducing noise while preserving the edge quality needed for the feature representation process; the result is the pre-processed image ($I^p_j$). In this sense, the main features of the banknote images are highlighted, improving the learning step for banknote classification.
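The bilateral filter of Eq. (1) and its normalization factor of Eq. (2) can be sketched directly in plain Python. This is a minimal illustration, not the authors' implementation: it works on a grayscale image stored as a list of rows, uses Gaussian functions for both kernels, and the window radius and the values of `sigma_s` and `sigma_r` are assumptions chosen only for the toy example, since the paper does not report them.

```python
import math


def gaussian(x, sigma):
    """Unnormalised Gaussian kernel G_sigma(x)."""
    return math.exp(-(x * x) / (2.0 * sigma * sigma))


def bilateral_filter(image, radius=2, sigma_s=2.0, sigma_r=25.0):
    """Apply Eq. (1)-(2) to a grayscale image given as a list of rows.

    radius, sigma_s and sigma_r are illustrative values (assumptions).
    """
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for py in range(height):
        for px in range(width):
            weighted_sum = 0.0
            w_p = 0.0  # normalisation factor W_p of Eq. (2)
            # S: square neighbourhood of p, clipped at the image border
            for qy in range(max(0, py - radius), min(height, py + radius + 1)):
                for qx in range(max(0, px - radius), min(width, px + radius + 1)):
                    spatial = gaussian(math.hypot(py - qy, px - qx), sigma_s)
                    intensity = gaussian(abs(image[py][px] - image[qy][qx]), sigma_r)
                    weight = spatial * intensity
                    weighted_sum += weight * image[qy][qx]
                    w_p += weight
            output[py][px] = weighted_sum / w_p
    return output


# Toy image: a vertical step edge (10 -> 200) with one slightly noisy pixel.
toy = [[10, 10, 10, 200, 200, 200] for _ in range(6)]
toy[2][1] = 18
smoothed = bilateral_filter(toy)
```

In the toy image, pixels across the step edge receive an almost zero range weight, so the edge stays sharp while the isolated noisy pixel is pulled towards its neighbours. A production pipeline would normally rely on an optimized library routine rather than this quadratic-time loop.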

3.2 Banknote Classification

From the pre-processed Brazilian banknote images ($I^p_j$), a feature learning process is applied. For this, a deep learning based approach is used to understand patterns and learn features in different conditions. A CNN architecture is proposed for the feature learning and banknote classification problem.

A CNN is a deep learning architecture widely used in computer vision applications. CNN models can learn and represent effective features for classification or regression in real problems. In the banknote classification context, the proposed CNN model, unlike classical approaches, can learn the features and their representation for the classification stage, even in different scenarios and domains.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications


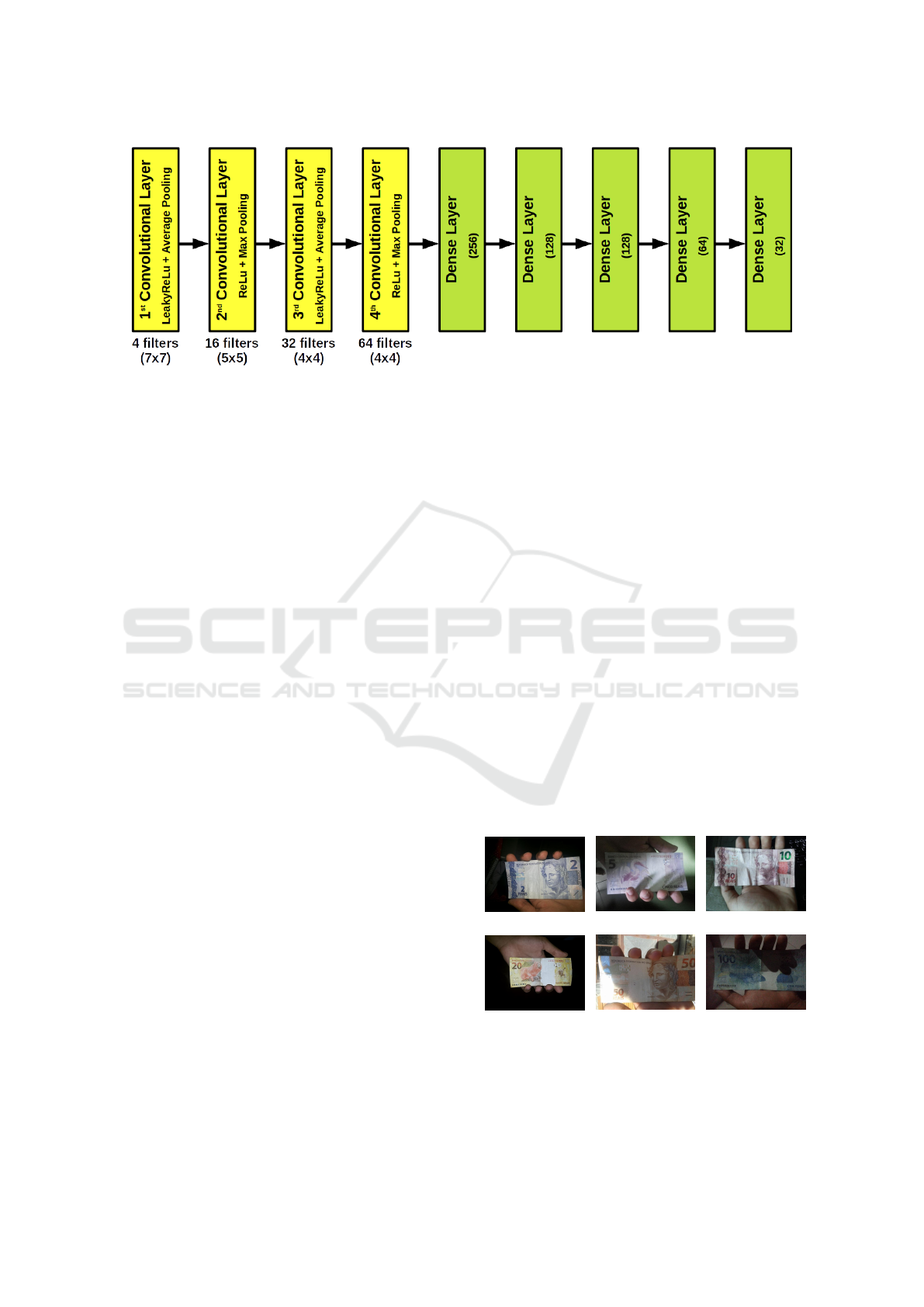

Figure 3: Proposed CNN architecture for Brazilian banknote recognition.

The proposed CNN model is composed of 4 convolutional layers. The first layer has 4 filters, the second 16 filters, the third 32 filters and the fourth 64 filters. The filter size is 7x7 in the first layer, 5x5 in the second layer and 4x4 in the third and fourth layers.

In the first and third convolutional layers, the LeakyReLU activation function is used, together with Average Pooling with a window size of 2x2. In the second and fourth convolutional layers, the ReLU activation function is used, together with Max Pooling with a window size of 2x2.

After the convolutional layers, the feature maps are flattened and passed to 5 fully-connected layers of sizes 256, 128, 128, 64 and 32, respectively. Dropout layers with a rate of 0.1 are used between consecutive fully-connected layers.
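To make the layer description concrete, the following sketch traces the feature-map sizes through the four convolution and pooling stages. The paper does not state the stride, the padding or the image orientation, so the sketch assumes stride 1, no padding ("valid" convolution), non-overlapping 2x2 pooling and an input of 480 rows by 640 columns; with other settings the numbers would differ.

```python
def conv_valid(size, kernel):
    """Output length of a stride-1 convolution without padding (assumption)."""
    return size - kernel + 1


def pool(size, window=2):
    """Output length of non-overlapping pooling with the given window."""
    return size // window


def feature_map_shapes(height=480, width=640):
    """Return (height, width, channels) after each conv + pooling stage."""
    # (number of filters, filter size) for the four convolutional layers
    layers = [(4, 7), (16, 5), (32, 4), (64, 4)]
    shapes = []
    h, w = height, width
    for filters, kernel in layers:
        h = pool(conv_valid(h, kernel))
        w = pool(conv_valid(w, kernel))
        shapes.append((h, w, filters))
    return shapes


shapes = feature_map_shapes()
final_h, final_w, final_c = shapes[-1]
flattened = final_h * final_w * final_c  # input size of the first dense layer

for index, shape in enumerate(shapes, start=1):
    print(f"after stage {index}: {shape}")
print("flattened features:", flattened)
```

Under these assumptions the last stage produces a 26 x 36 x 64 volume, that is 59904 values feeding the first fully-connected layer of size 256, which shows that most of the trainable weights would sit in that first dense layer.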

In the training stage, the Stochastic Gradient Descent (SGD) optimization algorithm is used, with a learning rate of 0.01 and momentum of 0.75. Training was performed for 20 epochs with a batch size of 20. The CNN model is used for Brazilian banknote classification due to the good results achieved in similar scenarios (Thomas and Meehan, 2021). It is also used because of its good feature representation learning, which captures distinct and complementary features for modeling different banknotes and environment conditions, unlike classical approaches.
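The update rule behind SGD with momentum can be illustrated on a toy objective. The sketch below uses the learning rate (0.01) and momentum (0.75) reported above, but the exact update formulation used by the authors is not stated, so the classical form (velocity update followed by a weight update) is an assumption, as is the quadratic toy loss.

```python
def sgd_momentum_step(weights, grads, velocity, lr=0.01, momentum=0.75):
    """One SGD-with-momentum update (classical formulation, assumed).

    v <- momentum * v - lr * grad
    w <- w + v
    """
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Toy objective f(w) = sum(w_i^2), whose gradient is 2 * w_i.
weights = [1.0, -2.0]
velocity = [0.0, 0.0]
for _ in range(200):
    grads = [2.0 * w for w in weights]
    weights, velocity = sgd_momentum_step(weights, grads, velocity)
```

On this toy loss the weights shrink towards zero; the momentum term reuses 75% of the previous step, which smooths the trajectory compared with plain SGD at the same learning rate.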

Figure 3 presents the proposed CNN architecture for Brazilian banknote value recognition. It also provides details about the deep-learning model, describing the convolutional and dense layers, filters, complementary functions and parameters involved.

4 EXPERIMENTS

In this section, we evaluate the proposed approach, comparing it with traditional methods. Furthermore, we evaluate the proposed approach on simulated noisy and blurry images.

The experimental setup is composed of two low-cost smartphone cameras, with 8.0 megapixels and artificial illumination (flash). For the processing stage, a Dell computer with an Intel Xeon™ Silver 4114 2.20GHz CPU and 128 GB of DDR4-2133 main memory is used to execute the proposed approach.

4.1 Image Acquisition

The image dataset was collected using smartphone cameras, depicting Brazilian banknotes in different real environments and illumination conditions. The proposed dataset is composed of 6 different banknote denominations, namely notes of 2, 5, 10, 20, 50 and 100 Reais, as can be observed in Figure 4.

Figure 4: Different banknote images from the proposed image dataset.

The image dataset consists of 6365 Brazilian banknote images, with variation in natural illumination and artificial illumination (with flash) conditions. Additionally, images of the front and back surfaces of each banknote were acquired.

For the image acquisition process, the smartphone camera must be positioned about 10 cm from the banknote. We suggest a region near the mid-forearm as a reference point for correctly positioning the image acquisition device, as can be seen in Figure 5.

Figure 5: Acquisition process of Brazilian banknote images.

4.2 Brazilian Banknote Classification Assessment

This experiment evaluates the accuracy of the proposed approach for Brazilian banknote classification. Different approaches are implemented and evaluated to address this classification problem. The approaches used for comparison are: i) Local Binary Pattern (LBP) and AdaBoost (AB) classifier; ii) LBP and Random Forest (RF) classifier; iii) HOG and AB classifier; and iv) HOG and RF classifier. These techniques were chosen due to the good results obtained in related approaches for banknote recognition (Dittimi et al., 2017; Sousa et al., 2020) and overall banknote analysis (Ayalew Tessfaw et al., 2018; Thomas and Meehan, 2021).

The AB classifier was configured with 100 estimators. The RF classifier was configured with 30 estimators and a maximum depth of 30. The parameter tuning for the proposed approach was performed by varying a set of parameters to maximize accuracy during the training and testing stages. Different scenarios were tested, changing the number of convolutional layers, the number of filters, the filter sizes, the number of fully-connected layers, the size of the fully-connected layers and the learning rate.

Because the 5-fold cross-validation protocol is applied, the classification model is trained on one set of input images and tested on a disjoint set. The dataset used in this process is composed of 6365 images of Brazilian banknotes, acquired manually by a visually impaired human operator.
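The 5-fold protocol can be sketched as follows. The paper does not describe how the folds were built or which standard deviation is reported, so the shuffling, the seed, the hypothetical helper names `k_fold_indices` and `summarise`, and the use of the sample standard deviation are all assumptions made for illustration.

```python
import random
import statistics


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffling is an assumption
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


def summarise(accuracies):
    """Mean and sample standard deviation over the folds."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# With the 6365 images of the dataset, each fold tests on 1273 images
# and trains on the remaining 5092.
splits = list(k_fold_indices(6365, k=5))
fold_sizes = [(len(train), len(test)) for train, test in splits]
```

A per-fold accuracy list produced by training and testing a classifier on each split would then be passed to `summarise` to obtain a "mean ± standard deviation" entry of the kind shown in the result tables.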

The achieved results show that the proposed CNN model outperforms the traditional techniques, as can be observed in Table 1. The combination of the LBP descriptor (depicting texture features) with the RF classifier (banknote value recognition) obtained the best results among the traditional techniques.

Table 1: Results for Brazilian banknote classification. This experiment presents the accuracy for the CNN (proposed) and for the HOG and LBP descriptors, the latter two combined with the Random Forest (RF) and AdaBoost (AB) classifiers.

| Method | Accuracy (%) |
|---|---|
| LBP + AB | 48.672 ± 1.226 |
| LBP + RF | 81.257 ± 1.301 |
| HOG + AB | 61.461 ± 0.670 |
| HOG + RF | 78.020 ± 0.985 |
| Our method | 99.103 ± 0.326 |

The proposed approach achieved accurate results in the Brazilian banknote classification process because different filters are learned during the value recognition process. The CNN allows patterns to be learned in different contexts and for different features. Thereby, some banknote conditions can be better represented and classified using the convolutional learning process.

4.3 Brazilian Banknote Evaluation: Noise Analysis

This experiment evaluates the robustness of the proposed approach for Brazilian banknote classification in the presence of noise. Two different types of noise are added to the banknote images: Salt and Pepper noise and Gaussian noise. All the traditional techniques used in the experiment described in the previous subsection and the proposed CNN model are evaluated. In this experiment, the added noise simulates possible problems in the image acquisition process in real-world situations and with different types of smartphone cameras.
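The two noise models can be simulated with a few lines of plain Python. This is a hedged sketch on a grayscale image stored as a list of rows, not the authors' procedure. For Salt and Pepper noise, the density is taken as the fraction of pixels replaced by black or white. The paper also reports a "noise density" for Gaussian noise without defining it; the sketch treats that value as the noise variance on a [0, 1] intensity scale, which is an assumption.

```python
import random


def add_salt_and_pepper(image, density, rng):
    """Replace roughly `density` of the pixels by 0 (pepper) or 255 (salt)."""
    noisy = [row[:] for row in image]
    for row in noisy:
        for x in range(len(row)):
            if rng.random() < density:
                row[x] = 255 if rng.random() < 0.5 else 0
    return noisy


def add_gaussian(image, variance, rng):
    """Add zero-mean Gaussian noise; `variance` is on a [0, 1] scale (assumed)."""
    sigma = (variance ** 0.5) * 255.0
    return [
        [min(255.0, max(0.0, pixel + rng.gauss(0.0, sigma))) for pixel in row]
        for row in image
    ]


rng = random.Random(42)  # fixed seed so the example is repeatable
clean = [[128] * 200 for _ in range(200)]
salt_pepper = add_salt_and_pepper(clean, 0.02, rng)
gaussian_noisy = add_gaussian(clean, 0.02, rng)

# Fraction of pixels actually altered by the Salt and Pepper model.
changed = sum(p != 128 for row in salt_pepper for p in row) / (200 * 200)
```

Applying such functions only to the test images, while training on clean images, reproduces the structure of the experiment described in this subsection.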

For this assessment, the classification model is trained on a set of images without noise and the testing stage uses another set of images with added noise. Figure 6 shows examples of Brazilian banknote images: Figure 6(a) shows a Brazilian banknote without noise, Figure 6(b) a banknote with Salt and Pepper noise, with 0.02 noise density, and Figure 6(c) a banknote with Gaussian noise, with 0.02 noise density.

Figure 6: Examples of Brazilian banknote images. (a) Banknote image without noise. (b) Banknote image with Salt and Pepper noise, with 0.02 noise density. (c) Banknote image with Gaussian noise, with 0.02 noise density.

Table 2: Results for robustness evaluation of Brazilian banknote classification in the presence of noise. In this experiment, all the considered methods are evaluated for Salt and Pepper noise. Values are accuracy (%) ± standard deviation.

| Method | Noise density 0.005 | Noise density 0.01 | Noise density 0.02 |
|---|---|---|---|
| LBP+AB | 44.446 ± 1.775 | 41.147 ± 0.988 | 36.009 ± 1.208 |
| LBP+RF | 65.750 ± 1.688 | 51.155 ± 1.242 | 36.434 ± 1.924 |
| HOG+AB | 46.771 ± 1.388 | 35.570 ± 1.836 | 27.133 ± 1.812 |
| HOG+RF | 63.378 ± 1.751 | 49.568 ± 1.018 | 35.617 ± 1.827 |
| Our method | 98.879 ± 0.291 | 98.975 ± 0.517 | 98.911 ± 0.747 |

Table 3: Results for robustness evaluation of Brazilian banknote classification in the presence of noise. In this experiment, all the considered methods are evaluated for Gaussian noise. Values are accuracy (%) ± standard deviation.

| Method | Noise density 0.005 | Noise density 0.01 | Noise density 0.02 |
|---|---|---|---|
| LBP+AB | 18.350 ± 0.825 | 17.753 ± 1.638 | 18.130 ± 0.939 |
| LBP+RF | 18.068 ± 0.917 | 18.382 ± 0.810 | 18.209 ± 0.756 |
| HOG+AB | 29.411 ± 0.616 | 24.195 ± 0.999 | 21.430 ± 0.480 |
| HOG+RF | 31.767 ± 0.686 | 25.232 ± 1.104 | 21.728 ± 1.313 |
| Our method | 98.783 ± 0.314 | 98.255 ± 0.527 | 98.015 ± 0.313 |

The results of this experiment are reported in Table 2 and Table 3. Table 2 shows the Brazilian banknote classification results for Salt and Pepper noise, and Table 3 shows the results for Gaussian noise, both using the accuracy and standard deviation measures. The results show that the proposed CNN model outperforms the other classification approaches even in the presence of noise, demonstrating the robustness of our approach.

4.4 Brazilian Banknote Evaluation: Blurring Analysis

This experiment evaluates the robustness of the proposed approach for Brazilian banknote classification with blurred images. For this, the Gaussian blurring technique is used to generate different degrees of blur in banknote images. In this experiment, Gaussian filters of sizes 13x13, 15x15, 17x17, 19x19 and 21x21 were used, simulating a blur effect in the image capturing process.
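The Gaussian kernels used for blurring can be generated as below. The paper gives only the kernel sizes, not the standard deviation, so the sketch derives sigma from the kernel size with the rule `0.3 * ((ksize - 1) * 0.5 - 1) + 0.8`, which is the default OpenCV applies when no sigma is supplied. Whether the authors used this default is an assumption.

```python
import math


def gaussian_kernel_1d(ksize, sigma=None):
    """Normalised 1-D Gaussian kernel of odd length `ksize`."""
    if sigma is None:
        # Size-derived default (assumed, mirrors OpenCV's rule for sigma <= 0).
        sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    centre = (ksize - 1) / 2.0
    weights = [
        math.exp(-((i - centre) ** 2) / (2.0 * sigma ** 2)) for i in range(ksize)
    ]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_kernel_2d(ksize, sigma=None):
    """Separable 2-D kernel built as the outer product of the 1-D kernel."""
    k = gaussian_kernel_1d(ksize, sigma)
    return [[a * b for b in k] for a in k]


# One kernel per filter size used in the blurring experiment.
kernels = {size: gaussian_kernel_2d(size) for size in (13, 15, 17, 19, 21)}
```

Because each kernel sums to one, convolving an image with it preserves the overall brightness; larger kernels spread the weight over more pixels and therefore produce stronger blur.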

All the traditional techniques used in the experiments described in the previous subsections and our proposed CNN model are evaluated. For this assessment, the classification model is trained on a set of images without blur and the testing stage uses another set of images with added blur. Figure 7 shows examples of blurred images of Brazilian banknotes. Figures 7(a), 7(b), 7(c), 7(d) and 7(e) show a Brazilian banknote blurred with 13x13, 15x15, 17x17, 19x19 and 21x21 filter sizes, respectively.

The results of this experiment show that the proposed CNN model performs better than the other methods even when the banknote image is blurred, as can be observed in Table 4, which reports the classification results for blurred Brazilian banknote images using the accuracy and standard deviation measures.

Figure 7: Examples of blurred images of Brazilian banknotes. Panels (a), (b), (c), (d) and (e) present blurring effects produced by Gaussian filters of sizes 13x13, 15x15, 17x17, 19x19 and 21x21, respectively.

Table 4: Results for robustness evaluation of Brazilian banknote classification in out-of-focus images. In this experiment, all the considered methods are evaluated using a low-pass Gaussian filter. Values are accuracy (%) ± standard deviation.

| Method | Kernel size 13 | Kernel size 15 | Kernel size 17 | Kernel size 19 | Kernel size 21 |
|---|---|---|---|---|---|
| LBP+AB | 15.727 ± 1.215 | 15.727 ± 1.440 | 16.041 ± 1.690 | 16.057 ± 1.757 | 15.837 ± 1.541 |
| LBP+RF | 16.292 ± 0.790 | 16.386 ± 0.714 | 16.057 ± 0.819 | 16.119 ± 1.063 | 16.182 ± 1.165 |
| HOG+AB | 62.577 ± 1.228 | 60.644 ± 1.164 | 59.230 ± 0.749 | 59.199 ± 1.351 | 58.445 ± 1.354 |
| HOG+RF | 76.198 ± 1.093 | 74.863 ± 0.749 | 74.187 ± 0.756 | 73.260 ± 0.844 | 72.333 ± 0.904 |
| Our method | 99.055 ± 0.211 | 98.767 ± 0.212 | 98.895 ± 0.484 | 98.639 ± 0.444 | 98.687 ± 0.767 |

In Figure 8, it is also possible to verify the performance of the proposed approach regarding the blurring problem in the image capturing process, highlighting the high accuracy of our method. Results show that the proposed CNN model outperforms the other classification approaches even on blurred banknote images, demonstrating the robustness of the proposed approach in real image capturing conditions.

Figure 8: Evaluation of Brazilian banknote classification methods under blurring impact.

5 CONCLUSION

In this paper we addressed the problem of Brazilian banknote classification, recognizing the note denomination (value), for visually impaired people. Unlike other state-of-the-art approaches, our method achieves high accuracy with images of banknotes in a real-world scenario, reproducing the manual banknote image acquisition through smartphone cameras.

Experiments involving different classification techniques have shown that the obtained Brazilian banknote classification results are reliable and accurate, considering the image dataset used. Additionally, the proposed approach is robust, even in the presence of noise, blurring and defocusing during image acquisition. It also demonstrates feasibility for real applications, since the experiments were carried out in real-world scenarios.

As future work, we intend to combine different classification methods to improve the current classification accuracy. We also plan to concentrate efforts on extending the banknote classification process to tackle banknotes from other countries. Furthermore, we intend to address deformed banknote images, reproducing crumpled banknotes.

ACKNOWLEDGEMENTS

This work was developed with support from Motorola, through the IMPACT-Lab R&D project, in the Institute of Computing (ICOMP) of the Federal University of Amazonas (UFAM).

REFERENCES

Abburu, V., Gupta, S., Rimitha, S. R., Mulimani, M., and Koolagudi, S. G. (2017). Currency recognition system using image processing. In 2017 Tenth International Conference on Contemporary Computing (IC3), pages 1–6.

Ayalew Tessfaw, E., Ramani, B., and Kebede Bahiru, T. (2018). Ethiopian banknote recognition and fake detection using support vector machine. In 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), pages 1354–1359.

Baykal, G., Demir, U., Shyti, I., and Ünal, G. (2018). Turkish lira banknotes classification using deep convolutional neural networks. In 2018 26th Signal Processing and Communications Applications Conference (SIU), pages 1–4.

Bourne, R. R. A., Flaxman, S. R., Braithwaite, T., Cicinelli, M. V., Das, A., Jonas, J. B., Keeffe, J., Kempen, J. H., Leasher, J., Limburg, H., Naidoo, K., Pesudovs, K., Resnikoff, S., Silvester, A., Stevens, G. A., Tahhan, N., Wong, T. Y., and Taylor, H. R. (2017). Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: a systematic review and meta-analysis. The Lancet Global Health, 5(9):e888–e897.

Dittimi, T. V., Hmood, A. K., and Suen, C. Y. (2017). Multi-class svm based gradient feature for banknote recognition. In 2017 IEEE International Conference on Industrial Technology (ICIT), pages 1030–1035.

Huang, X. and Gai, S. (2020). Banknote classification based on convolutional neural network in quaternion wavelet domain. IEEE Access, 8:162141–162148.

IBGE (2019). Pesquisa nacional de saúde 2019: percepção do estado de saúde, estilos de vida, doenças crônicas e saúde bucal; Brasil e grandes regiões. Coordenação de Trabalho e Rendimento. Rio de Janeiro: Instituto Brasileiro de Geografia e Estatística (IBGE).

Joshi, R. C., Yadav, S., and Dutta, M. K. (2020). YOLO-v3 based currency detection and recognition system for visually impaired persons. In 2020 International Conference on Contemporary Computing and Applications (IC3A). IEEE.

Khashman, A., Ahmed, W., and Mammadli, S. (2018). Banknote issuing country identification using image processing and neural networks. In 13th International Conference on Theory and Application of Fuzzy Systems and Soft Computing (ICAFS), pages 746–753. Springer International Publishing.

Kongprasert, T. and Chongstitvatana, P. (2019). Parameters learning of bps m7 banknote processing machine for banknote fitness classification. In 2019 23rd International Computer Science and Engineering Conference (ICSEC), pages 310–314.

Laavanya, M. and Vijayaraghavan, V. (2019). Real time fake currency note detection using deep learning. International Journal of Engineering and Advanced Technology, 9(1S5):95–98.

Ng, S.-C., Kwok, C.-P., Chung, S.-H., Leung, Y.-Y., and Pang, H.-S. (2020). An intelligent banknote recognition system by using machine learning with assistive technology for visually impaired people. In 2020 10th International Conference on Information Science and Technology (ICIST). IEEE.

Pham, T., Nguyen, D., Park, C., and Park, K. (2019). Deep learning-based multinational banknote type and fitness classification with the combined images by visible-light reflection and infrared-light transmission image sensors. Sensors, 19(4):792.

Sanghun Lee, Sangwook Baek, Euisun Choi, Yoonkil Baek, and Chulhee Lee (2017). Soiled banknote fitness determination based on morphology and otsu’s thresholding. In 2017 IEEE International Conference on Consumer Electronics (ICCE), pages 450–451.

Sousa, L. P., Veras, R. M. S., Vogado, L. H. S., Britto Neto, L. S., Silva, R. R. V., Araujo, F. H. D., and Medeiros, F. N. S. (2020). Banknote identification methodology for visually impaired people. In 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), pages 261–266.

Sun, W., Ma, Y., Yin, Z., and Gu, A. (2018). Banknote fitness classification based on convolutional neural network*. In 2018 IEEE International Conference on Information and Automation (ICIA), pages 1545–1549.

Tan, C. J., Wong, W. K., and Min, T. S. (2020). Malaysian banknote reader for visually impaired person. In 2020 IEEE Student Conference on Research and Development (SCOReD). IEEE.

Thomas, M. and Meehan, K. (2021). Banknote object detection for the visually impaired using a cnn. In 2021 32nd Irish Signals and Systems Conference (ISSC), pages 1–6. IEEE.

Yousry, A., Taha, M., and Selim, M. (2018). Currency recognition system for blind people using orb algorithm. International Arab Journal of e-Technology, 5:34–40.
