Cartesian Robot Controlling with Sense Gloves and Virtual Control Buttons: Development of a 3D Mixed Reality Application

Turhan Civelek^a and Arnulph Fuhrmann^b

Institute of Media and Imaging Technology, TH Köln, Cologne, Germany

Keywords:

Mixed Reality, Robotic Control, Force Feedback, Human-Robot Interaction, Arduino.

Abstract:

In this paper, we present a Cartesian robot controlled through a mixed reality interface that includes virtual buttons, virtual gloves, and a virtual drill. The mixed reality interface, into which virtual reality input/output devices and sense gloves have been integrated, enables control of the Cartesian robot. Data transfer between the mixed reality interface and the Cartesian robot is implemented via an Arduino kit. The Cartesian robot moves in 6 axes simultaneously with the movement of the sense gloves and the touch of the virtual buttons. This study aims to perform remote-controlled, task-oriented screwing and unscrewing of a bolt using a Cartesian robot with a mixed reality interface.

1 INTRODUCTION

Designing robots that interact with humans through

virtual, augmented, and mixed reality (MR) environ-

ments, designing augmented reality (AR) interfaces

that mediate this communication, and achieving optimal

design practices are among the most important

issues in the field of human-robot interaction (HRI).

Robotics studies are aimed at fully autonomous robots

that cooperate with humans. Although significant

work has been done in this field recently, the areas

where autonomous robots are used are limited (Xu

et al., 2022). In this case, robots controlled by remote

devices such as a joystick are used as a solution. Some

studies in the literature show that robots are controlled

by sensor data on screens and other input and output

devices used for video games (Tanaka et al., 2018;

Klamt et al., 2018).

While traditionally controlled robots have their advantages, MR, AR, and virtual reality (VR) offer a promising alternative: immersive interfaces that allow three-dimensional work and mediate communication between humans and robots (Gruenbaum et al., 1997; Sato and Sakane, 2000).

^a https://orcid.org/0000-0003-0487-5560

^b https://orcid.org/0000-0001-5118-5461

Programming and simulation of industrial robots in large-scale manufacturing facilities are time-consuming and costly. Therefore, the focus is on programming and simulation of MR-based collaborative robots to improve the experience of robot control (RC) and HRI. While augmented reality can be used for data visualization, MR is more comprehensive and can be used for interactivity (Gallala et al., 2019); it spans a one-dimensional continuum between real and virtual environments (Skarbez et al., 2021; Gallala et al., 2021). In particular, MR, AR, and VR interfaces have significant advantages over conventional techniques in terms of the user’s spatial awareness and immersion, and the improved performance, lower cost, and usability of the input/output (I/O) devices used with these interfaces encourage their use in RC and HRI (Stotko et al., 2019; Whitney et al., 2019). MR, AR, and VR interfaces also provide unique experiences that were not possible with previous technologies (LeMasurier et al., 2021; Hetrick et al., 2020).

Studies on HRI with MR, AR, and VR interfaces have focused on topics such as picking up and placing an object (Xu et al., 2022); novel control methods (Bustamante et al., 2021); eye-tracking interaction for object search and tracking, head-motion interaction for selection and manipulation, and deep-learning-based object recognition for initial position estimation (Park et al., 2021); object identification based on head pose (Higgins et al., 2022); analysis of user perception of robot motion during assembly of a gearbox (Höcherl et al., 2020); and picking up and placing an object with a robot using eye gaze and head movements (Park et al., 2022). The focus of these studies is on MR, AR, and VR interfaces, robotic arms, and HRI.

Civelek, T. and Fuhrmann, A.

Cartesian Robot Controlling with Sense Gloves and Virtual Control Buttons: Development of a 3D Mixed Reality Application.

DOI: 10.5220/0011787700003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 1: GRAPP, pages 242-249

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

In recent years, haptic devices have been used in

VR environments. Haptic or force feedback interfaces

used with force sensor systems allow users to touch,

grasp, feel, and manipulate virtual objects (Shor et al.,

2018). In addition, the user’s actual hand and finger

movements when touching, grasping, and lifting ob-

jects are mimicked by their virtual hand and fingers in

the virtual environment (Espinoza et al., 2020). These

devices allow people to interact with virtual objects in

VR or remote environments and transfer force to the

arm (Park et al., 2016). Force feedback is important

for effective and productive manipulations in confined

spaces. The operator can recognize more states of the

operation through force feedback, improving task per-

formance (Nakajima et al., 2014; Pacchierotti et al.,

2016; Li et al., 2016).

Cartesian robots are widely used in industry because of their high payload, speed, repeatability, and accurate positioning (Rui et al., 2017). To improve the accuracy and efficiency of Cartesian robot manipulation tasks, an HRI with non-contact electromagnetic force feedback has been proposed (Du et al., 2020), as has a machine-vision-based calibration method for automatic and intelligent motion control of Cartesian robots (Rui et al., 2017). Control of Cartesian robots with virtual interfaces has been proposed in some studies (Palmero et al., 2005; Martínez et al., 2021). However, studies on HRI with MR, AR, and VR interfaces mostly concentrate on robotic arms. More studies on MR interfaces for the control of Cartesian robots, which are widely used in industry, would help them to be used as profitably and efficiently as other robots. Adding haptic devices such as sense gloves to these interfaces enables more realistic immersion by integrating physical interaction with virtual objects.

In this context, we developed a Cartesian robot with stepper motors and motor control units that can move in six axes, together with a virtual environment in which this robot is controlled. The virtual environment includes virtual direction control buttons and virtual hands representing the sense gloves. The Unity 3D application development platform was used to develop the virtual environment. An Arduino unit was used for communication between the Cartesian robot and the virtual environment, and the Arduino software development platform was used to program it. The Cartesian robot moves in the six axes simultaneously with the user’s input, either when the virtual direction buttons on the control panel are touched or, without touching the buttons, when the sense gloves are moved. During touching, force and vibration are transferred to the user’s real hand through the sense gloves, which gives the user a more realistic feeling when interacting with virtual objects. With the designed system, bolts were screwed and unscrewed without touching the Cartesian robot.

This paper is organized as follows. The Related Works section reviews the literature on human-robot interaction and MR, AR, and VR. The Preliminary Study section describes the hardware and software tools of the designed Cartesian robot system, their intended use, and the design and implementation of the system. The Discussion and Conclusion section summarizes the paper and covers the limitations encountered during the design, the proposed solutions, and the eventual application areas.

2 RELATED WORKS

Recent advances in interactive handheld, MR, and VR

systems for controlling a robot significantly increase

the applicability of this approach. VR environments

have also been developed to visualize the trajectories

of mobile wheelchairs and robots (Chadalavada et al.,

2015; Schiavi et al., 2009). Developments in AR and

VR are advancing rapidly and are being usefully in-

tegrated into HRI (Andersen et al., 2016; Chakraborti

et al., 2017; Diaz et al., 2017). Alternative control

methods such as brain-computer interfaces for robots,

graphical predictive interfaces for RC, eye sensors for

eye control, VR simulators and simulations to im-

prove medical skills, and robots for teleoperation are

being used in various disciplines (Zhang and Hansen,

2019; Pérez et al., 2019; MacCraith et al., 2019; Wat-

son et al., 2016; Walker et al., 2019). In addition,

real-time RC requires a secure physical connection

between human and robotic operators and manipula-

tors (Schiavi et al., 2009). In the context of these de-

velopments, the design of appropriate interfaces for

HRI, delivery windows, manual guidance, collabo-

rative work, and control issues are at the forefront

(ANSI/RIA and ANSI, 2012). In the manufacturing

industry, designing and testing machines and their

interfaces is often a costly and lengthy process. Such

long processes can be overcome by designing and

actively using machine interfaces in VR environments

and combining these environments with the haptic ex-

periences of physical interfaces (Murcia-López and

Steed, 2018a; Ucar et al., 2017; van Deurzen et al.,

2021).

Haptic and force feedback during interaction with

virtual objects can be less distracting in environments

with rich visual and auditory data in many VR appli-

cations. Haptic and force feedback in VR applications

can greatly enhance user immersion and embedding

(Jones and Sarter, 2008; Zhou et al., 2020). The liter-

ature suggests that haptic applications with individual

finger force generation can play a role in education

as well as in industry and healthcare (Civelek et al.,

2014; Civelek and Fuhrmann, 2022; Murcia-López

and Steed, 2018b; Richards et al., 2019; Vergara et al.,

2019; Grajewski et al., 2015; Christensen et al., 2018;

Civelek and Vidinli, 2018).

Stepper motors are one of the preferred motor types for Cartesian robot motion. The stepper motor is a control motor widely used in model (Kołota and Stępień, 2017). The control system of a conventional stepper motor usually controls a single motor, but various control systems for multiple stepper motors have since been proposed (Wang et al., 2020; Chen et al., 2012; WANG et al., 2020; Zhang et al., 2021; Slater et al., 2010). These studies have enabled a control system to drive multiple stepper motors simultaneously and to control and adjust their operating state in real time.

3 PRELIMINARY STUDY

A cartesian robot and a virtual environment in which

the cartesian robot is controlled have been developed

for collaboration between machines and humans.

Figure 1: Interaction of virtual hand and virtual buttons dur-

ing implementation.

Figure 2: Interaction of the virtual hand with the drill during

execution.

As shown in Figures 1 and 2, the Cartesian robot is equipped with stepper motors, stepper motor controllers, a web camera, an Arduino unit, and appropriate tools for movement in 6 axes. The designed VR environment includes the virtual hands representing the sense gloves, virtual control buttons, and a virtual display. In addition, an HMD, HTC Vive trackers, and sense gloves are used to capture the real hand position and head movements and to immerse the user in the virtual environment.

3.1 Cartesian Robot Design

Cartesian robots are designed as linear industrial robots (i.e., they move in a straight line and do not rotate) and have three main control axes that are perpendicular to each other. They move in 6 axes on 3 rails, that is, in both directions along each rail. They are reliable and precise in 3D space. The Cartesian coordinate design is also suitable for horizontal movements and stacking boxes.

Figure 3: Top view of the designed cartesian robot. 1. Step-

per Motors, 2. Stepper Motor Control Units, 3. Webcam, 4.

Arduino, 5. HTC Vive Trackers, 6. Sense Gloves, 7. HMD.

Figure 4: A front view of the designed cartesian robot.

GRAPP 2023 - 18th International Conference on Computer Graphics Theory and Applications

As shown in Figures 3 and 4, a Cartesian robot that screws and unscrews a bolt was developed in this work. The frame of the system is designed to allow movements in 6 axes and is made of rigid aluminum because of its light weight. Next, the bolt, floating bearing block, and bracket parts were assembled, and the system was connected to the stepper motors via a claw coupling. Finally, cable connections were made between the motors and the motor controllers, and among the motors, the Arduino, and the computer. The robot was equipped with a camera to determine the boundaries of the movement, and the motor was placed in an area bounded by markings in two different colors. Placing it in the colored area makes it possible to determine the limits of the robot’s movements and to prevent the moving part of the robot from bumping against the fixed skeleton.

3.2 Design of the Virtual Environment

As shown in Figure 5, a virtual environment was designed for controlling the Cartesian robot. The virtual environment includes control buttons, virtual hands representing the sense gloves, a virtual screen to which the image of the colored surface is transferred, and a virtual drilling machine. When touched by the virtual hands, the control buttons send predefined data to a C program interface, which controls the Arduino through the serial interface and moves the Cartesian robot in six axes (right, left, front, back, up, and down).
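The mapping from the six direction buttons to robot motion can be sketched as a small lookup table. The paper does not specify the data that the buttons send, so the one-character codes, the enum and struct names, and the assignment of directions to axis indices below are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical identifiers for the six direction buttons named in the text. */
typedef enum {
    BTN_RIGHT, BTN_LEFT, BTN_FRONT, BTN_BACK, BTN_UP, BTN_DOWN, BTN_COUNT
} Button;

/* One motion command: the code sent over the serial link plus its meaning. */
typedef struct {
    char code;  /* assumed one-character command code */
    int axis;   /* 0 = right/left, 1 = front/back, 2 = up/down */
    int sign;   /* +1 or -1 along that axis */
} MotionCommand;

static const MotionCommand kCommands[BTN_COUNT] = {
    [BTN_RIGHT] = {'R', 0, +1},
    [BTN_LEFT]  = {'L', 0, -1},
    [BTN_FRONT] = {'F', 1, +1},
    [BTN_BACK]  = {'B', 1, -1},
    [BTN_UP]    = {'U', 2, +1},
    [BTN_DOWN]  = {'D', 2, -1},
};

/* Returns the command for a button id, or NULL if the id is out of range. */
const MotionCommand *command_for_button(int button) {
    if (button < 0 || button >= BTN_COUNT) {
        return NULL;
    }
    return &kCommands[button];
}

/* Applies a command to a three-element position, moving `steps` motor steps. */
void apply_command(const MotionCommand *cmd, long position[3], long steps) {
    assert(cmd != NULL && steps >= 0);
    position[cmd->axis] += cmd->sign * steps;
}
```

Keeping the table in one place means that the sender and the receiver can share the same codes, and that adding a seventh command only requires one new row.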

Figure 5: The virtual control panel.

The sense gloves were equipped with HTC Vive trackers that transmit their position from the real world to the virtual world. The position data from the trackers attached to the sense gloves is transmitted to the gloves in the virtual environment via base stations, allowing the virtual gloves to move simultaneously with the real hands. When the virtual drill is gripped by the virtual gloves, the data from the virtual hand, which contains the information required to move the Cartesian robot, is transmitted through the serial port to the C programming interface to drive the motors. When the button of the drill is touched with the index finger, the data needed to turn the motor to the right is transmitted; when the same button is touched with the middle finger, the data needed to turn it to the left is transmitted. The motor can therefore be rotated in two directions, clockwise or counterclockwise, to tighten or loosen the bolt. When the position of the bolt matches the position of the drill tip used to screw or unscrew it, touching the drill button screws or unscrews the bolt.

In order to ensure that the Cartesian robot does

not collide with its own skeleton during operation,

the motion boundaries are defined with colored paper.

These boundaries are transmitted to the virtual screen

via the webcam and help the user decide whether to

continue or stop the movement in one axis.

3.3 Implementation

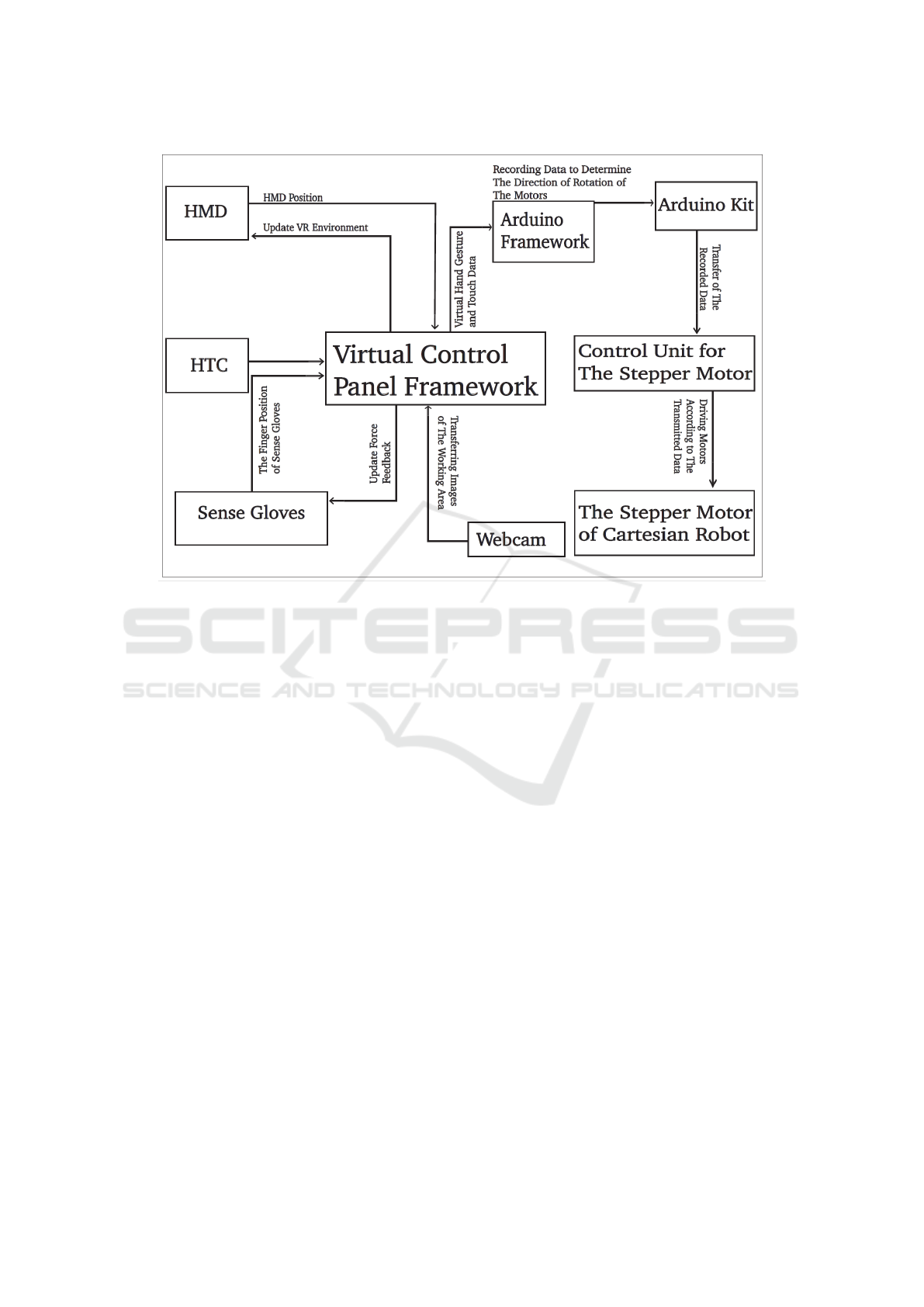

As shown in Figure 6, during the application, the HMD position, the position of the sense glove, and the finger positions of the sense glove are transmitted to the virtual control panel framework. The updated VR environment is transmitted to the HMD, and the updated force feedback during virtual touch is transmitted to the sense glove. The position data generated when the virtual buttons are touched and the position data of the hand movement after gripping the drill are transmitted to the Arduino framework. The motor rotation data defined according to the incoming data is recorded in the Arduino kit and transmitted through it to the motor control units, which drive the motors accordingly. The image of the surface on which the Cartesian robot is moving is transmitted to the virtual control panel via the webcam.

Figure 6: Input and output devices, virtual control panel framework, the Arduino framework, the Arduino, the stepper motor control unit, the stepper motors of the Cartesian robot and the events that take place between them.

The virtual environment was created using the C# programming language on the Unity 3D platform. Algorithms were coded for the simultaneous movement of the virtual hands representing the sense gloves, the transfer of the position information of the sense gloves to the virtual hands, the handling of the events that occur when the virtual buttons are touched with the virtual hands, the tracking of the position of the virtual hand when a virtual object is grasped and moved, and the transfer of the image of the robot’s working area to the virtual screen while the robot moves. In addition, the virtual environment is programmed to provide force feedback to the user’s hand via the sense glove motors when the virtual buttons are touched and when the drill is gripped. This increases the user’s perception of reality when grasping and touching objects and gives the user the feeling of being in a real world. The System.IO.Ports namespace of C# was used for the data flow, and the data flow between Unity and the Arduino goes through the serial port COM4.

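using System.IO.Ports;

// Serial link to the Arduino: COM4, 115200 baud, no parity, 8 data bits, 1 stop bit.
public SerialPort serial = new SerialPort("COM4", 115200, Parity.None, 8, StopBits.One);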

The routines that receive the data sent by Unity on the Arduino and, depending on the received data, send the necessary commands to the control units of the motors that move the robot are coded in the C programming language.
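The receiving side can be sketched in plain C as two steps: collect serial bytes into a complete command string, then decode that string into a motor and a direction. This is a minimal sketch, not the authors' firmware. The newline framing, the command names ("RIGHT", "CW", and so on), the buffer size, and the motor numbering are assumptions, since the paper does not list them.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define CMD_LINE_CAP 16  /* assumed maximum command length, including '\0' */

/* Collects serial bytes until a newline completes one command string. */
typedef struct {
    char buf[CMD_LINE_CAP];
    size_t len;
    int overflow;  /* set when the current line is too long and must be dropped */
} LineReader;

/* Feeds one received byte. Returns 1 and writes the finished command to `out`
 * when a newline arrives; returns 0 while the line is incomplete or dropped. */
int line_reader_feed(LineReader *r, char byte, char out[CMD_LINE_CAP]) {
    assert(r != NULL && out != NULL);
    if (byte == '\r') {
        return 0;  /* tolerate CRLF line endings */
    }
    if (byte == '\n') {
        int ok = !r->overflow && r->len > 0;
        if (ok) {
            memcpy(out, r->buf, r->len);
            out[r->len] = '\0';
        }
        r->len = 0;
        r->overflow = 0;
        return ok;
    }
    if (r->len < CMD_LINE_CAP - 1) {
        r->buf[r->len++] = byte;
    } else {
        r->overflow = 1;
    }
    return 0;
}

/* What the firmware should do: which motor to turn and in which direction. */
typedef struct {
    int motor;      /* 0..2 = linear axes, 3 = motor holding the bolt tip */
    int direction;  /* +1 or -1 */
} MotorAction;

/* Decodes a command string. Returns 1 on success, 0 for unknown commands. */
int decode_command(const char *line, MotorAction *action) {
    static const struct { const char *name; MotorAction action; } table[] = {
        {"RIGHT", {0, +1}}, {"LEFT", {0, -1}},
        {"FRONT", {1, +1}}, {"BACK", {1, -1}},
        {"UP",    {2, +1}}, {"DOWN", {2, -1}},
        {"CW",    {3, +1}}, {"CCW",  {3, -1}},
    };
    assert(line != NULL && action != NULL);
    for (size_t i = 0; i < sizeof table / sizeof table[0]; i++) {
        if (strcmp(line, table[i].name) == 0) {
            *action = table[i].action;
            return 1;
        }
    }
    return 0;
}
```

On the board, the bytes would come from the serial receive buffer, and a decoded action would typically set a direction pin and pulse a step pin on the matching stepper driver. That hardware-specific part is left out here so that the logic can be checked on a desktop compiler.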

Events that occur when a button is touched with the virtual hand are transmitted as string data to the Arduino program via the serial port. If the virtual drill is gripped with the virtual hand, the end position of the virtual hand is compared with its start position, and string data is sent to the Arduino program depending on whether the end position is greater or smaller than the start position. The Arduino interprets the incoming data and sends information to the stepper motor controllers depending on the type of data received. Based on this information, the direction of rotation of the stepper motors is determined. When the bolt and the drill meet at the same position, the motor to which the bolt mounting tip is attached is rotated and the bolt is screwed or unscrewed.
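The comparison of the start and end positions of the virtual hand can be illustrated with a short sketch. It is written in C for consistency with the firmware description, although in the described system this logic would run on the Unity side. The axis convention, the command strings, and the dead zone that ignores very small hand movements are assumptions and are not taken from the paper.

```c
#include <assert.h>
#include <stddef.h>

/* A 3D position; axes assumed as x = right, y = up, z = front. */
typedef struct { float x, y, z; } Vec3;

/* Picks the command for one axis, or NULL if the hand barely moved. */
static const char *axis_command(float start, float end, float dead_zone,
                                const char *if_greater, const char *if_smaller) {
    float delta = end - start;
    if (delta > dead_zone)  return if_greater;
    if (delta < -dead_zone) return if_smaller;
    return NULL;
}

/* Compares end and start positions and writes up to three commands to `out`.
 * Returns how many commands were written. */
int hand_commands(Vec3 start, Vec3 end, float dead_zone, const char *out[3]) {
    assert(out != NULL && dead_zone >= 0.0f);
    const char *candidates[3] = {
        axis_command(start.x, end.x, dead_zone, "RIGHT", "LEFT"),
        axis_command(start.y, end.y, dead_zone, "UP", "DOWN"),
        axis_command(start.z, end.z, dead_zone, "FRONT", "BACK"),
    };
    int count = 0;
    for (int i = 0; i < 3; i++) {
        if (candidates[i] != NULL) {
            out[count++] = candidates[i];
        }
    }
    return count;
}
```

A dead zone of this kind is one way to keep tracker jitter from producing motor commands while the hand is held still; whether the original system uses one is not stated.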

4 DISCUSSION AND CONCLUSION

Mixed reality robot control supported by sense gloves, applied here to a Cartesian robot, combines communication and interaction between input and output hardware, the virtual environment, controls, and machine components with the experience of performing a task without touching the machine. During this interaction, motors on the sense gloves transmit force to the hand, and the image of the workspace where the task is being performed is transferred to the virtual screen, giving the user a more realistic sense of presence. The image is shown on a two-dimensional virtual screen. To remove the bolt, the positions of the bolt and the drill bit are matched using these images, either by pressing the virtual control buttons with the virtual hand or by moving the drill with the virtual hand.

During the application, the machine was moved in six axes simultaneously with the user’s input, both by touching the buttons with the fingers of the virtual hand and by moving the gripped drill with the virtual hand. When the position of the tip of the drill coincided with the position of the bolt, the bolt was screwed and unscrewed.

In the literature, studies on the development of mixed reality robot control interfaces have mainly focused on robotic arms. We believe that this work on the remote control of Cartesian robots will help improve MR control interfaces for Cartesian robots. In addition, because it uses cost-efficient hardware and software tools, the designed system is reproducible and can be configured by the user.

There are several ways to improve the implementation. For example, more powerful motors could be used to improve performance. To increase realism, the workspace of the Cartesian robot could be virtualized in 3D and transferred to the virtual environment, and the motion boundaries could be determined by encoding. In future studies in this area, tasks such as assembly and disassembly of machine parts, machine repair, construction and maintenance, and virtual surgical procedures could be performed through virtual environments and force feedback devices without being present in the environments where these processes take place.

REFERENCES

Andersen, R. S., Madsen, O., Moeslund, T. B., and Amor,

H. B. (2016). Projecting robot intentions into human

environments. In 2016 25th IEEE International Sym-

posium on Robot and Human Interactive Communica-

tion (RO-MAN), pages 294–301.

ANSI/RIA and ANSI (2012). American national stan-

dard for industrial robots and robot systems –

safety requirements. In American National Standard

for Industrial Robots and Robot Systems – Safety

Requirements. American National Standards Insti-

tute/Robotics Industry Association.

Bustamante, S., Peters, J., Schölkopf, B., Grosse-Wentrup,

M., and Jayaram, V. (2021). Armsym: A vir-

tual human–robot interaction laboratory for assistive

robotics. IEEE Transactions on Human-Machine Sys-

tems, 51(6):568–577.

Chadalavada, R. T., Andreasson, H., Krug, R., and Lilien-

thal, A. J. (2015). That’s on my mind! robot to human

intention communication through on-board projection

on shared floor space. In 2015 European Conference

on Mobile Robots (ECMR), pages 1–6.

Chakraborti, T., Sreedharan, S., Kulkarni, A., and Kamb-

hampati, S. (2017). Alternative modes of interaction

in proximal human-in-the-loop operation of robots.

CoRR, abs/1703.08930.

Chen, H., Duan, Z., Lian, X., and Zhang, X. (2012). The

design of stepper motor system based on can bus.

In 2012 2nd International Conference on Consumer

Electronics, Communications and Networks (CEC-

Net), pages 3262–3265.

Christensen, N. H., Hjermitslev, O. G., Stjernholm, N. H.,

Falk, F., Nikolov, A., Kraus, M., Poulsen, J., and

Petersson, J. (2018). Feasibility of team training in

virtual reality for robot-assisted minimally invasive

surgery. In Proceedings of the Virtual Reality Inter-

national Conference - Laval Virtual, VRIC ’18, New

York, NY, USA. Association for Computing Machin-

ery.

Civelek, T. and Fuhrmann, A. (2022). Virtual reality learn-

ing environment with haptic gloves. In 2022 3rd

International Conference on Education Development

and Studies, ICEDS’22, page 32–36, New York, NY,

USA. Association for Computing Machinery.

Civelek, T., Ucar, E., Ustunel, H., and Aydın, M. (2014).

Effects of a haptic augmented simulation on k-12

students’ achievement and their attitudes towards

physics. Eurasia Journal of Mathematics, Science and

Technology Education, 10:565–574.

Civelek, T. and Vidinli, B. (2018). Resection of benign

tumor in tibia with a high speed burr by haptic de-

vices in virtual reality environments. In Proceedings

of the Virtual Reality International Conference - Laval

Virtual. International Institute of Informatics and Sys-

temics.

Diaz, C., Walker, M., Szafir, D. A., and Szafir, D. (2017).

Designing for depth perceptions in augmented reality.

In 2017 IEEE International Symposium on Mixed and

Augmented Reality (ISMAR), pages 111–122.

Du, G., Zhang, B., Li, C., Gao, B., and Liu, P. X. (2020).

Natural human–machine interface with gesture track-

ing and cartesian platform for contactless electromag-

netic force feedback. IEEE Transactions on Industrial

Informatics, 16(11):6868–6879.

Espinoza, J., Malubay, S. A., Alipio, M., Abad, A., Asares,

V., Joven, M. J., and Domingo, G. F. (2020). De-

sign and evaluation of a human-controlled haptic-

based robotic hand for object grasping and lifting.

In 2020 11th IEEE Annual Information Technology,

Electronics and Mobile Communication Conference

(IEMCON), pages 0068–0075.

Gallala, A., Hichri, B., and Plapper, P. (2019). Survey: The

evolution of the usage of augmented reality in industry

4.0. IOP Conference Series: Materials Science and

Engineering, 521:012017.

Gallala, A., Hichri, B., and Plapper, P. (2021). Human-robot

interaction using mixed reality. In 2021 International

Conference on Electrical, Computer and Energy Tech-

nologies (ICECET), pages 1–6.

Grajewski, D., Górski, F., Hamrol, A., and Zawadzki, P.

(2015). Immersive and haptic educational simulations

of assembly workplace conditions. Procedia Com-

puter Science, 75:359–368.

Gruenbaum, P. E., McNeely, W. A., Sowizral, H. A., Over-

man, T. L., and Knutson, B. W. (1997). Implementa-

tion of Dynamic Robotic Graphics For a Virtual Con-

trol Panel. Presence: Teleoperators and Virtual Envi-

ronments, 6(1):118–126.

Hetrick, R., Amerson, N., Kim, B., Rosen, E., Visser, E.

J. d., and Phillips, E. (2020). Comparing virtual real-

ity interfaces for the teleoperation of robots. In 2020

Systems and Information Engineering Design Sympo-

sium (SIEDS), pages 1–7.

Higgins, P., Barron, R., and Matuszek, C. (2022). Head

pose for object deixis in vr-based human-robot in-

teraction. In 2022 31st IEEE International Confer-

ence on Robot and Human Interactive Communica-

tion (RO-MAN), pages 610–617.

Höcherl, J., Adam, A., Schlegl, T., and Wrede, B. (2020).

Human-robot assembly: Methodical design and as-

sessment of an immersive virtual environment for

real-world significance. In 2020 25th IEEE Interna-

tional Conference on Emerging Technologies and Fac-

tory Automation (ETFA), volume 1, pages 549–556.

Jones, L. and Sarter, N. (2008). Tactile displays: Guid-

ance for their design and application. Human factors,

50:90–111.

Klamt, T., Rodriguez, D., Schwarz, M., Lenz, C.,

Pavlichenko, D., Droeschel, D., and Behnke, S.

(2018). Supervised autonomous locomotion and ma-

nipulation for disaster response with a centaur-like

robot. In 2018 IEEE/RSJ International Conference on

Intelligent Robots and Systems (IROS), pages 1–8.

Kołota, J. and Stępień, S. (2017). Analysis of 3d model

of reluctance stepper motor with a novel construction.

In 2017 22 International Conference on Methods and

Models in Automation and Robotics (MMAR), pages

955–958.

LeMasurier, G., Allspaw, J., and Yanco, H. A. (2021).

Semi-autonomous planning and visualization in vir-

tual reality. CoRR, abs/2104.11827.

Li, W., Gao, H., Ding, L., and Tavakoli, M. (2016). Tri-

lateral predictor-mediated teleoperation of a wheeled

mobile robot with slippage. IEEE Robotics and Au-

tomation Letters, 1(2):738–745.

MacCraith, E., Forde, J., and Davis, N. (2019). Robotic

simulation training for urological trainees: a compre-

hensive review on cost, merits and challenges. Journal

of Robotic Surgery, 13.

Martínez, M. A. G., Sanchez, I. Y., and Chávez, F. M.

(2021). Development of automated virtual cnc router

for application in a remote mechatronics laboratory.

In 2021 International Conference on Electrical, Com-

puter, Communications and Mechatronics Engineer-

ing (ICECCME), pages 1–6.

Murcia-López, M. and Steed, A. (2018a). A comparison

of virtual and physical training transfer of bimanual

assembly tasks. IEEE Transactions on Visualization

and Computer Graphics, 24(4):1574–1583.

Murcia-López, M. and Steed, A. (2018b). A comparison

of virtual and physical training transfer of bimanual

assembly tasks. IEEE Transactions on Visualization

and Computer Graphics, 24(4):1574–1583.

Nakajima, Y., Nozaki, T., and Ohnishi, K. (2014). Heartbeat

synchronization with haptic feedback for telesurgical

robot. IEEE Transactions on Industrial Electronics,

61(7):3753–3764.

Pacchierotti, C., Prattichizzo, D., and Kuchenbecker, K. J.

(2016). Cutaneous feedback of fingertip deformation

and vibration for palpation in robotic surgery. IEEE

Transactions on Biomedical Engineering, 63(2):278–

287.

Palmero, D., Perez, A., Ortiz, M., Amaro, F., and Hen-

riquez, F. (2005). Object recognition and sorting by

using a virtual cartesian robot with artificial vision. In

15th International Conference on Electronics, Com-

munications and Computers (CONIELECOMP’05),

pages 201–206.

Park, K.-B., Choi, S. H., Lee, J. Y., Ghasemi, Y., Mo-

hammed, M., and Jeong, H. (2021). Hands-free hu-

man–robot interaction using multimodal gestures and

deep learning in wearable mixed reality. IEEE Access,

9:55448–55464.

Park, K.-B., Choi, S. H., Moon, H., Lee, J. Y., Ghasemi, Y.,

and Jeong, H. (2022). Indirect robot manipulation us-

ing eye gazing and head movement for future of work

in mixed reality. In 2022 IEEE Conference on Virtual

Reality and 3D User Interfaces Abstracts and Work-

shops (VRW), pages 483–484.

Park, T. M., Won, S. Y., Lee, S. R., and Sziebig, G. (2016).

Force feedback based gripper control on a robotic

arm. In 2016 IEEE 20th Jubilee International Confer-

ence on Intelligent Engineering Systems (INES), pages

107–112.

Pérez, L., Diez, E., Usamentiaga, R., and García, D. F.

(2019). Industrial robot control and operator training

using virtual reality interfaces. Computers in Industry,

109:114–120.

Richards, K., Mahalanobis, N., Kim, K., Schubert, R., Lee,

M., Daher, S., Norouzi, N., Hochreiter, J., Bruder,

G., and Welch, G. (2019). Analysis of peripheral vi-

sion and vibrotactile feedback during proximal search

tasks with dynamic virtual entities in augmented real-

ity. In Symposium on Spatial User Interaction, SUI

’19, New York, NY, USA. Association for Computing

Machinery.

Rui, W., Huawei, Q., Yuyang, S., and Jianliang, L. (2017).

Calibration of cartesian robot based on machine vi-

sion. In 2017 IEEE 3rd Information Technology

and Mechatronics Engineering Conference (ITOEC),

pages 1103–1108.

Sato, S. and Sakane, S. (2000). A human-robot interface

using an interactive hand pointer that projects a mark

in the real work space. In Proceedings 2000 ICRA.

Millennium Conference. IEEE International Confer-

ence on Robotics and Automation. Symposia Proceed-

ings (Cat. No.00CH37065), volume 1, pages 589–595

vol.1.

Schiavi, R., Bicchi, A., and Flacco, F. (2009). Integration of

active and passive compliance control for safe human-

robot coexistence. In 2009 IEEE International Con-

ference on Robotics and Automation, pages 259–264.

Shor, D., Zaaijer, B., Ahsmann, L., Immerzeel, S., Weetzel,

M., Eikelenboom, D., Hartcher-O’Brien, J., and As-

chenbrenner, D. (2018). Designing haptics: Compar-

ing two virtual reality gloves with respect to realism,

performance and comfort. In 2018 IEEE International

Symposium on Mixed and Augmented Reality Adjunct

(ISMAR-Adjunct), pages 318–323.

GRAPP 2023 - 18th International Conference on Computer Graphics Theory and Applications

Skarbez, R., Smith, M., and Whitton, M. (2021). Revisiting

Milgram and Kishino’s reality-virtuality continuum.

Frontiers in Virtual Reality, 2.

Slater, M., Spanlang, B., and Corominas, D. (2010). Sim-

ulating virtual environments within virtual environ-

ments as the basis for a psychophysics of presence.

ACM Trans. Graph., 29.

Stotko, P., Krumpen, S., Schwarz, M., Lenz, C., Behnke,

S., Klein, R., and Weinmann, M. (2019). A VR system

for immersive teleoperation and live exploration

with a mobile robot. In 2019 IEEE/RSJ International

Conference on Intelligent Robots and Systems (IROS),

pages 3630–3637.

Tanaka, M., Nakajima, M., Suzuki, Y., and Tanaka, K.

(2018). Development and control of articulated mo-

bile robot for climbing steep stairs. IEEE/ASME

Transactions on Mechatronics, 23(2):531–541.

Ucar, E., Ustunel, H., Civelek, T., and Umut, I. (2017).

Effects of using a force feedback haptic augmented

simulation on the attitudes of the gifted students to-

wards studying chemical bonds in virtual reality en-

vironment. Behaviour & Information Technology,

36(5):540–547.

van Deurzen, B., Goorts, P., De Weyer, T., Vanacken, D.,

and Luyten, K. (2021). HapticPanel: An open system

to render haptic interfaces in virtual reality for man-

ufacturing industry. In Proceedings of the 27th ACM

Symposium on Virtual Reality Software and Technol-

ogy, VRST ’21, New York, NY, USA. Association for

Computing Machinery.

Vergara, D., Extremera, J., Rubio, M., and Dávila, L.

(2019). Meaningful learning through virtual reality

learning environments: A case study in materials en-

gineering. Applied Sciences, 9:1–14.

Walker, M. E., Hedayati, H., and Szafir, D. (2019). Robot

teleoperation with augmented reality virtual surro-

gates. In 2019 14th ACM/IEEE International Confer-

ence on Human-Robot Interaction (HRI), pages 202–

210.

Wang, X., Lu, S., and Zhang, S. (2020). Rotating angle es-

timation for hybrid stepper motors with application to

bearing fault diagnosis. IEEE Transactions on Instru-

mentation and Measurement, 69(8):5556–5568.

Wang, Y., Xu, J., Liu, Q., Gou, Y., Li, B., and Zhang,

Y. (2020). Design of CAN bus control motor based on

STM32. In 2020 International Conference on Artificial

Intelligence and Computer Engineering (ICAICE),

pages 37–40.

Watson, G. S., Papelis, Y. E., and Hicks, K. C. (2016).

Simulation-based environment for the eye-tracking

control of tele-operated mobile robots. In Proceed-

ings of the Modeling and Simulation of Complexity in

Intelligent, Adaptive and Autonomous Systems 2016

(MSCIAAS 2016) and Space Simulation for Planetary

Space Exploration (SPACE 2016), MSCIAAS ’16,

San Diego, CA, USA. Society for Computer Simula-

tion International.

Whitney, D., Rosen, E., Phillips, E., Konidaris, G., and

Tellex, S. (2019). Comparing Robot Grasping Teleop-

eration Across Desktop and Virtual Reality with ROS

Reality, pages 335–350. Springer International Pub-

lishing.

Xu, S., Moore, S., and Cosgun, A. (2022). Shared-control

robotic manipulation in virtual reality. In 2022 In-

ternational Congress on Human-Computer Interac-

tion, Optimization and Robotic Applications (HORA),

pages 1–6.

Zhang, G. and Hansen, J. P. (2019). A virtual reality simu-

lator for training gaze control of wheeled tele-robots.

In 25th ACM Symposium on Virtual Reality Software

and Technology, VRST ’19, New York, NY, USA. As-

sociation for Computing Machinery.

Zhang, L., Peng, H., Tang, L., Cao, X., and Yue, X.-G.

(2021). A control system of multiple stepmotors based

on STM32 and CAN bus. In Proceedings of the 2020 2nd

International Conference on Big Data and Artificial

Intelligence, ISBDAI ’20, pages 624–629, New York,

NY, USA. Association for Computing Machinery.

Zhou, R., Wu, Y., and Sareen, H. (2020). HexTouch:

Affective robot touch for complementary interactions to

companion agents in virtual reality. In 26th ACM

Symposium on Virtual Reality Software and Technol-

ogy, VRST ’20, New York, NY, USA. Association for

Computing Machinery.
