Using a 1D Pose-Descriptor on the Finger-Level to Reduce the

Dimensions in Hand Posture Estimation

Amin Dadgar and Guido Brunnett

Computer Science, Chemnitz University of Technology, Straße der Nationen 62, 09111, Chemnitz, Germany

Keywords:

One-D Finger Pose-Descriptor, Distance-Based Descriptor, Anatomy-Based Dimensionality Reduction,

Temporal a-Priori, Hand Posture Estimation, Single RGB Camera, Virtual Hand Models, Computer Vision.

Abstract:

We claim there is a simple measure to characterize all postures of every finger in human hands, each with

a single and unique value. To that, we illustrate the sum of distances of fingers’ (movable) joints/nodes (or

of the finger’s tip) to a locally fixed reference point on that hand (e.g., wrist joint) equals a unique value for

each finger’s posture. We support our hypothesis by presenting numerical justification based on the kinematic

skeleton of a human hand for four fingers and by providing evidence on two virtual hand models (which

closely resemble the structure of human hands) for thumbs. The employment of this descriptor reduces the

dimensionality of the finger’s space from 16 to 5 (e.g., one degree of freedom for each finger). To demonstrate

the advantages of employing this measure for finger pose estimation, we utilize it as a temporal a-priori in the

analysis-by-synthesis framework to constrain the posture space in searching and estimating the optimum pose

of fingers more efficiently. In a set of experiments, we show the benefits of employing this descriptor in time

complexity, latency, and accuracy of the pose estimation of our virtual hand.

1 INTRODUCTION

Three-D hand pose estimation systems aim at detect-

ing the joint configuration of human hands in 3D

space. These systems are essential requirements for

disciplines such as human behavior understanding,

human-computer interaction, and augmented reality.

However, the high degrees of freedom of fingers (16

out of total 28 DoF of hands) is cumbersome for a

fast and/or accurate performance. Therefore, it is ad-

vantageous to discover the feasibility of reducing this

high dimensionality by exploiting the hands’ inherent

kinematic/anatomic properties.

The main idea of our work is to investigate

whether a finger pose could be uniquely described

as a distance between the keypoints on fingers and a

locally fixed reference point on the hands (e.g., the

wrist joint, palm center, etc.). Such a relation would

simplify the representation of the fingers’ postures to

merely five numbers and thus drastically reduce the

dimensionality of the problem.

Similar approaches have been suggested in two-

D (Liao et al., 2012) though the authors failed to

generalize them to three-D. There were endeavors on

three-D (Shimizume et al., 2019) but their generaliza-

tions to all postures and orientations remained chal-

lenging. Thus in those works, simplifying the prob-

lem, by modeling the camera-hand distance, assum-

ing stretched outward fingers, and strictly fixating the

hand orientation, seems to the expected trend.

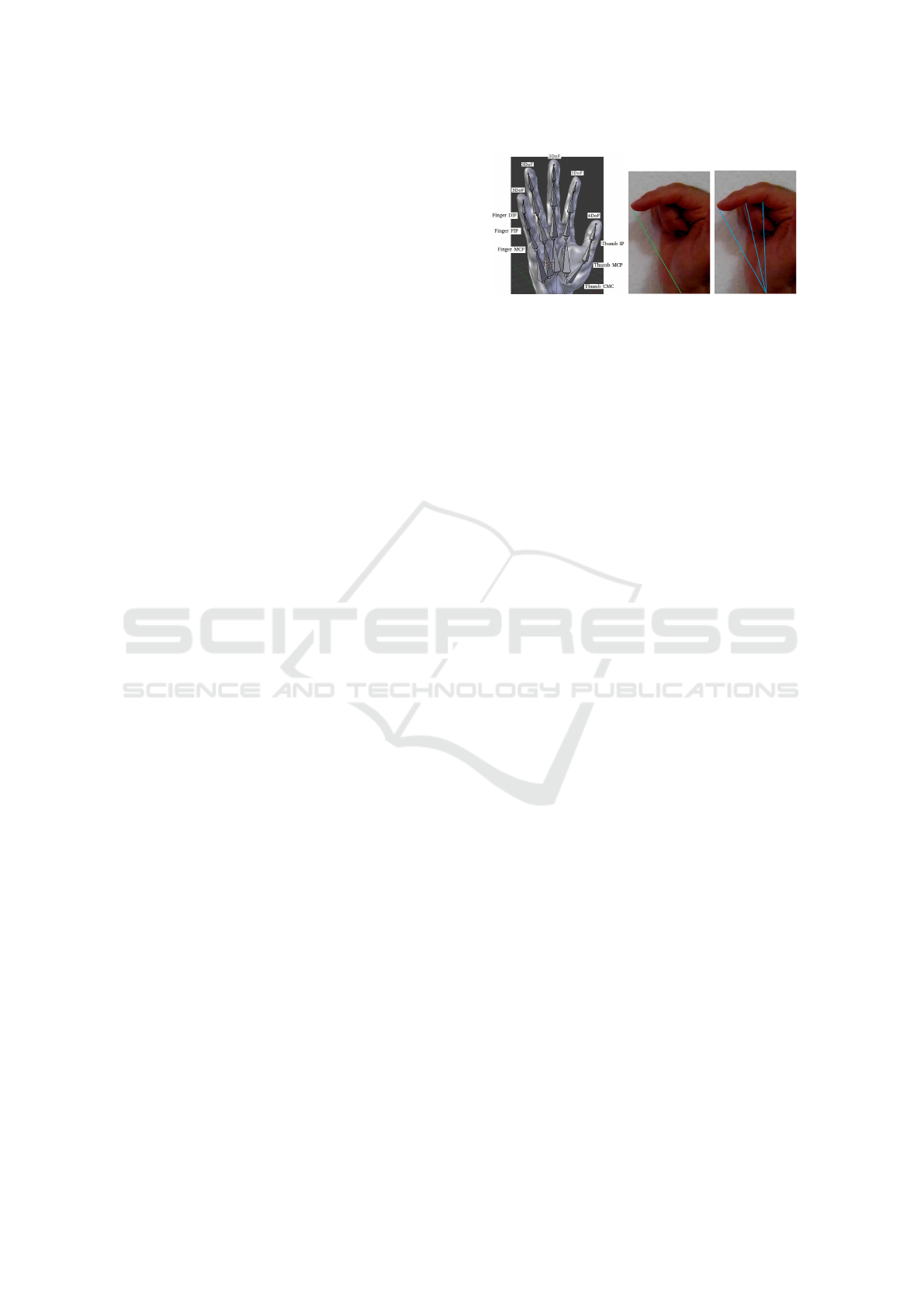

In our investigation, by employing two artificial

hand models, we were able to observe two different

yet highly related pose-descriptors (Eq1, 2). First,

the distance of the fingers or thumb’s tip to a refer-

ence point (wrist) is always a unique value for differ-

ent poses, Fig1-middle. Second, the sum of distances

of fingers/thumb (movable) joints/nodes to that refer-

ence point is also a unique value, Fig1-right.

After validating the uniqueness of the pose-

descriptors for both models, we extended our investi-

gation to justify our findings based on the kinematic

relations of the human hand. In the Section 3, we

numerically justify, given a range of the allowable ro-

tation for joints, if one merely selects the place of the

reference point carefully, the uniqueness of these dis-

tances will be maintained. We label the suitable spot

of reference points in Fig3 as Re f

J

.

In brief, we require the following components

to compute and assess the uniqueness of our pose-

descriptors: A) Positions of moving nodes of fingers

(e.g., two inter-phalangeal joins and the tip), and the

thumb’s (i.e., two phalangeal joints and the tip) as

Dadgar, A. and Brunnett, G.

Using a 1D Pose-Descriptor on the Finger-Level to Reduce the Dimensions in Hand Posture Estimation.

DOI: 10.5220/0011781000003411

In Proceedings of the 12th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2023), pages 501-508

ISBN: 978-989-758-626-2; ISSN: 2184-4313

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

501

seen in Fig1-top. B) Appropriate reference point that

always remains locally static with respect to fingers

(e.g., the wrist joint). C) Plausible rotation range of

each movable node. This descriptor is independent

of camera distance to the hands, hand orientation (or

camera view-point), and fingers pose.

We employ this pose-descriptor to reduce the high

degrees of freedom of fingers to five (one-D for each

finger). Additionally, we will incorporate this as a-

priori in five one-D temporal models (one model for

each finger) and achieve a real-time estimation of the

finger poses of a virtual hand in the costly synthesis-

by-analysis framework on CPUs.

2 LITERATURE REVIEW

For accurate and real-time estimation of the hand’s

three-D postures, researchers consider a wide range

of approaches (Zhang et al., 2020; Zhao et al., 2013).

To review the fore works regarding global relation-

ships between the fingers and a point on the hands,

however, we focus on a specific type of posture es-

timation that detects fingertips position. As one of

the earliest works in finding some global features on

fingers, (Hardenberg, 2001) demonstrated a circular-

diametric relationship between the fingers on two-D.

Then he tried to find the fingertip’s position based on

those relationships.

There are several distance-based approaches to

detecting fingertips in two-D. The work by (Dung and

Mizukawa, 2010) suggested a distance-based method

in connected component labeling (Paralic, 2012) fash-

ion to extract the two-D fingertips, hand region, and

palm center on images. However, their approach

works only if the fingers are wide open. A more

advanced strategy proposed by (Liao et al., 2012)

addresses more challenging hand postures such as

closed-fingers poses. They employed distance trans-

formation to filter fingers and remain with merely the

palm area. However, they used a simplified two-D

hand model with strongly local geometric constraints.

These approaches have two main drawbacks that

are intrinsic to their two-D characteristics. First, they

rely on local properties that are assumed will remain

unchanged. However, these two-D properties inher-

ently are against the innate three-D global invariabil-

ity (unless the hand faces forward, with a specified

distance to the camera and a fixed orientation). Sec-

ond, their extensions to other scenarios and applica-

tions are not straightforward.

To alleviate the problems above, several three-D

distance-based approaches were proposed. For in-

stance, amid the depth sensors’ era, (Raheja et al.,

Figure 1: Left: CMC carpal-metacarpal joint, MCP

metacarpal- phalangeal joint, PIP proximal inter-phalangeal

joint, DIP distal interphalangeal joint, IP inter-phalangeal

joint. Both of these values, Middle: the distance of fingers’

tip to a local reference point (e.g., wrist joint) and Right:

The sum of the distances of 3 movable fingers’ nodes, on a

hand gives us a unique value for each finger posture.

2011) proposed a method for fingertips and centers

of palms detection using KINECT. However, they as-

sumed that fingertips are closer to the camera than the

other hand’s parts, which imposes brute constraints on

the orientation of hands.

By incorporating monocular sensors and propos-

ing a novel finger constraint, (Yamamoto et al., 2012;

Shimizume et al., 2019) estimated hand postures us-

ing detected fingertip positions with inverse kinemat-

ics (IK). However, there is a major issue in their ap-

proach. In IK, there are relations from fingertips to

the joints’ configuration (not the reverse). Therefore,

in practice, one can not meaningfully reduce the high

complexity (or DoF) of fingers. For example, an effi-

cient temporal model can not be introduced on the IK.

Thus the system has to estimate hand postures from

fingertip positions by solving IK for a high DoF prob-

lem. That novel constraint was employed to identify

the touch state of the fingers’ tips. However, the ex-

tension of the approach for every posture of the hand

seems infeasible.

To the best of our knowledge, we propose a novel

distance-based measure to describe the poses of each

finger. Our descriptor is in three-D and is view-point

and camera-distance independent. Therefore, it can

assist one in designing finger pose estimation systems

with merely five DoF.

3 METHODOLOGY

Our pose-descriptor for each finer is a one-

dimensional number that characterizes the postures of

that finger. To compute this descriptor we require the

distance of fingertips (and movable finger joints) to a

locally fixed point on the hands (e.g., the wrist joint).

In this section, we demonstrate how we calculate this

descriptor and justify its uniqueness within plausible

ranges of fingers kinematics (degrees). Additionally,

ICPRAM 2023 - 12th International Conference on Pattern Recognition Applications and Methods

502

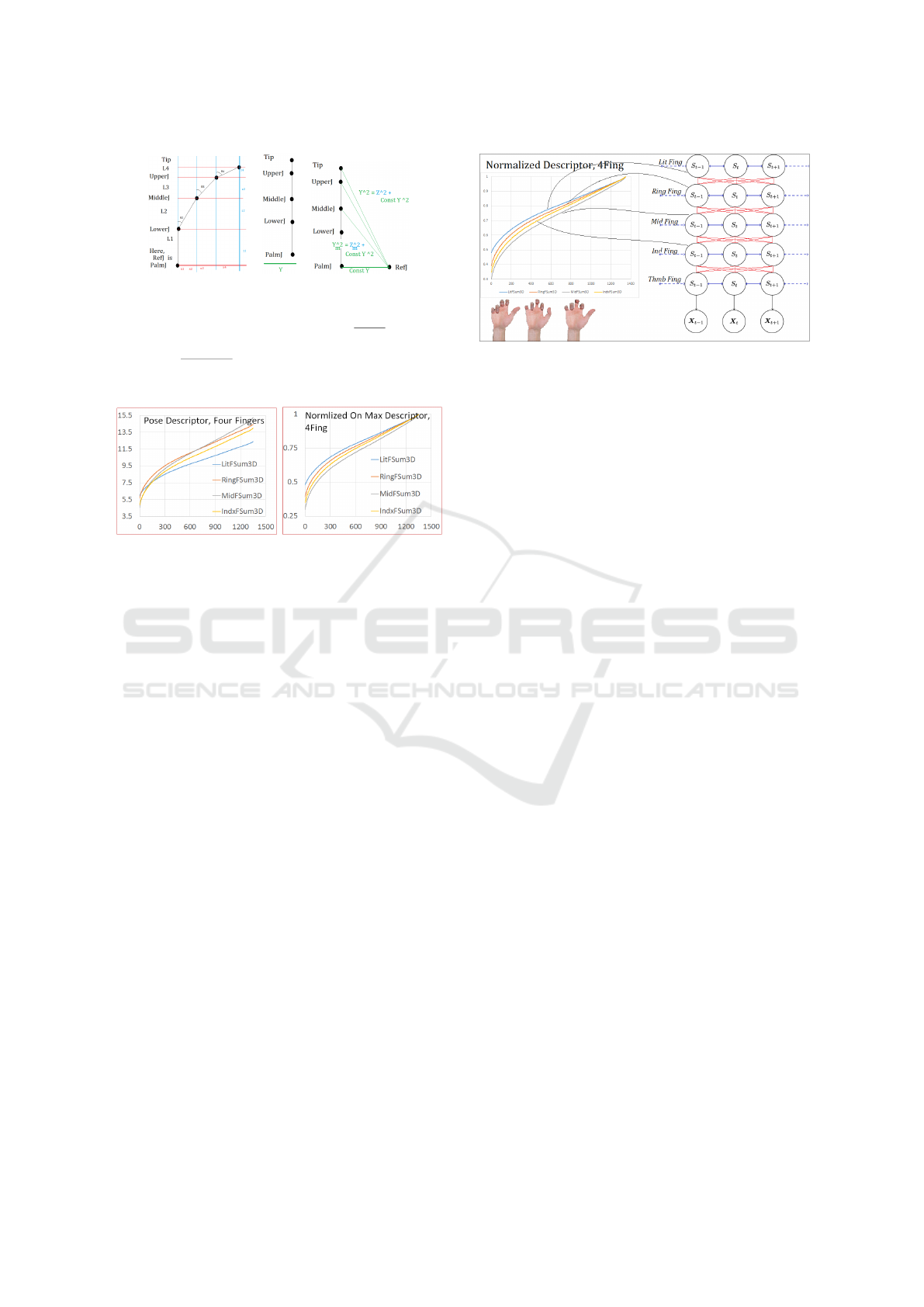

Figure 2: The sorted pose-descriptor Type

1

(Eq1) and

Type

2

(Eq2), for middle finger & thumb. The four fin-

gers have the same number of DoF and a similar descriptor

pattern. We illustrate the Mid-Res database consisting of

≈1400 poses for the finger and 3600 poses for the thumb.

we show how to incorporate it in a temporal model

as a-priori and design a search engine to estimate the

poses of a virtual hand in real-time.

Calculation of a Novel Pose-Descriptor. The basis

of our calculations is a data structure that comprises

the positions of all finger joints and tips. Considering

21 joints in hands, we define 7 limbs Lt, Rn, Md, Id,

T h, Wr, and Fr which stand for little, ring, middle,

index, thumb, wrist, and forearm, respectively. Ad-

ditionally, each finger has a tip t node and u, m, l, p

joints which stand for upper, middle, lower, and palm

(note the thumb has no middle joint).

By choosing the wrist as the Re f point, the finger-

tips distances to the wrist are calculated as follows,

and call it the pose-descriptor Type

1

:

Descriptor

1

(Fin

i

) =D(Tip(Fin

i

),W r)

where, Fin

i

={Lt,Rn,Md,Id,T h}

(1)

We also define the Type

2

descriptor as the sum of

the movable nodes’ distances to that Re f point.

Descriptor

2

(Fin

i

) =

∑

Node=t,u,m

D(Node(Fin

i

),W r)

where, Fin

i

={Lt,Rn,Md,Id}

Descriptor

2

(T h) =

∑

Node=t,u,l

D(Node(T h),W r)

(2)

In this equation, all fingers have the joint’s indexes of

t, u, m, and the thumb joint’s indexes are t, u, l.

In our experiments, we investigate the advantages

of the second type of this pose-descriptor. However,

as the following paragraphs will clarify, both descrip-

tors are unique. Thus in some applications, where the

position of other joints is not known, the first type

would work just as fine.

Pose-Descriptor Uniqueness: Justification & Evi-

dence. To employ this metric as a pose-descriptor,

we justify it has a unique value for each finger’s most

(or ideally all) poses for an excessively large num-

ber of finger posture set (e.g., high-resolution pose

database). To simplify the investigation, as seen in

Fig3, we begin with the hand kinematics for pose-

descriptor Type

2

. Also, we initially consider the dis-

tance of the fingers moving nodes to the palm joint of

each finger, PalmJ (not the wrist joint), to eliminate

the calculations on the Y -axis (using Eq3). Next, we

set the ranges for the finger joints’ angles. For the

four angles of the fingers, θ

1

, θ

2

, θ

3

, and θ

4

we ob-

serve the ranges of [0

◦

, 0

◦

] (e.g., L

1

is a fixed part),

[0

◦

, 90

◦

], [0

◦

, 120

◦

], and [0

◦

, 45

◦

], respectively.

Z =Z

T

+ Z

U

+ Z

M

= (z

1

+ z

2

+ z

3

+ z

4

)+

(z

1

+ z

2

+ z

3

) + (z

1

+ z

2

) = 3z

1

+ 3z

2

+ 2z

3

+ z

4

=3L

1

cos(θ

1

) + 3L

2

cos(θ

2

) + 2L

3

cos(θ

3

) + L

4

cos(θ

4

)

X =X

T

+ X

U

+ X

M

= (x

1

+ x

2

+ x

3

+ x

4

)+

(x

1

+ x

2

+ x

3

) + (x

1

+ x

2

) = 3x

1

+ 3x

2

+ 2x

3

+ x

4

=3L

1

sin(θ

1

) + 3L

2

sin(θ

2

) + 2L

3

sin(θ

3

) + L

4

sin(θ

4

)

(3)

Then considering T heta

i

s, we have a function on

the hyperspace in which we can form a parametric

line (with parameter t) that lies on the intersection of

this function and an arbitrary plane. Now, for every

value of t and T heta

i

, if the derivative of this line is

non-zero, we can conclude Eq3 is injective (See ap-

pendix for developing this derivative). To perform a

thorough numerical justification, we also investigate

the influence of the bones’ length and calculate the

derivative for different length values. To that, we nor-

malize L

i

s w.r.t L

2

(the lowest ‘moving’ limb on each

finger) and alter the values of others (L

i

> 0). Fi-

nally, we calculate this derivative for any two points

on the high-resolution database of 1-degree-step for

each joint (e.g. 486K poses).

In our investigations, the smallest value the deriva-

tive gets mostly is in the order of 10

−9

and rarely to

10

−16

. Considering the precision of python as 10

−28

,

we can conclude these smallest values are non-zero

(even with L

2

= L

3

= L

4

= 1 only if L

1

̸= L

2

). Thus,

Eq3 is unique for all poses of fingers in our exces-

sively high-resolution database, and in the uniqueness

property of the descriptor, the fixed part’s length has

the most significant role. One can select PalmJ in a

spot where the condition of L

1

̸= L

2

satisfies.

The next step is to incorporate the Y -axis dis-

tance into the computation, and to show the pose-

descriptor stays unique if the reference point is

not on the z-axis of the fingers. As demon-

strated in Fig3-right, Y

2

T

= Z

2

T

+ConstY

2

, Y

2

U

= Z

2

U

+ConstY

2

,

and Y

2

M

= Z

2

M

+ConstY

2

. Therefore, the total distance on Y-

axis is Y

2

= Z

2

T

+ Z

2

U

+ Z

2

M

+ 3 ×ConstY

2

. However, one term

of this equation is constant, and the Z terms are the

previously calculated Z-distance. Therefore, we con-

clude that our justification is extendable to an arbi-

trary Re f J on the Y -axis. The same conclusion is

derivable for Type

1

descriptor by conducting a sim-

ilar analysis. Because by a closer look at the Eq3,

Using a 1D Pose-Descriptor on the Finger-Level to Reduce the Dimensions in Hand Posture Estimation

503

Figure 3: The kinematics formulation to calculate pose-

descriptor Type

2

: The left image shows the side view of

a finger, to find Z and X values. The middle image demon-

strates the case when Y = 0 and D

PalmJoint

=

2

p

X

2

+ Z

2

. The right

image illustrates that the incorporation of the Y-distance

(D

PalmJoint

=

2

p

X

2

+Y

2

+ Z

2

) into the formula for pose-descriptor

Type

2

and how no new variable enters the equation.

Figure 4: The actual and normalized pose-descriptor Type

2

values of four fingers. Thumb has a different number of

poses thus its visualization with other fingers is not possible.

Type

1

is a special case of Type

2

where the z

i

and x

i

(i ∈ {1, 2, 3, 4}) coefficients equal one.

Finally, we extend the uniqueness assessment to

the thumbs. Thumbs, similar to fingers, have three

moving nodes. However, unlike other fingers, there

is no inherent fixed part in the thumbs’ bone kine-

matics. Nevertheless, by defining the Re f J some-

where below its PalmJ, we can assume a fixed limb

for it. Therefore, a similar hierarchical structure of

fingers is imitable for thumbs. However, a more sig-

nificant discrepancy here is, fingers have three de-

grees of freedom while thumbs have four with en-

tirely distinct ranges of degrees ([−20, 90], [−10, 10],

[−30, 10], [−20, 20]) and complex motion’s structure.

Arguing a similar kinematic formulations for thumbs

(in the appendix) exceeds the limits for this paper.

Therefore to justify the uniqueness of thumbs, we

employ two synthetic hand models with appropriate

rigging and skinning. After using the defined pose

representation and setting the virtual camera parame-

ters, we compute the joints’ three-D position. Then,

we select a Low

Res

, Mid

Res

, and High

Res

database for

each joint (e.g., less than 10

◦

-step, more than < 10

◦

-

step, 4

◦

-step, respectively). These resolutions lead to

a database of 300+, 3400+, and > 32500 postures for

the thumb on one of the hand model (for the second

model, these numbers are slightly less as the degree

ranges for that model is different). Then, we calculate

the pose-descriptor Type

1

and Type

2

, as explained

Figure 5: Various poses of all five fingers can relate together

using five one-dimensional temporal model. Thumb has a

different number of poses thus with could not visualize it’s

sorted pose-descriptor with other fingers.

in the previous subsection, and sort the values. Simi-

lar to the fingers, we witness unique descriptor values

for all different resolutions of thumb postures for both

types of descriptors and on both models (See Fig2 for

Type

1

, and for Type

2

on the first model).

In real-life applications (and our experiments), the

number of considered poses is usually much smaller

than the considered ones. Therefore, those large pos-

ture sets provide a high level of confidence about the

uniqueness of our proposed descriptor.

One-D Temporal Model. Initially, we sought to il-

lustrate the advantages of the pose-descriptor in the

mere estimation of the finger poses (without consider-

ing the time complexity). Conducting a set of experi-

ments, we achieved accurate pose estimations for one

finger where the gradient descent utterly failed. How-

ever, the time complexity of the approach was high,

thus we altered the roadmap and considered real-time

performance as a crucial criterion in our evaluations.

To that, we utilize our descriptor in a one-D tem-

poral Markovian model. As seen in Fig4, the model

uses the previous and current states to estimate the

next state. The figure visualizes all four fingers’ one-

dimensional pose-descriptor. Thumb has a different

number of poses thus, simultaneous visualization of

its descriptor in that figure is not possible.

Hands, as high DoF objects, require high dimen-

sional (and complex) temporal model. However, with

our pose-descriptor we can employ five 1D models

to enhance the time-complexity considerably (Fig5).

If n is the number of states and h is the number of

searched fingers, the search algorithm will have O(n

h

)

complexity. In a mid-resolution pose database, with-

out the temporal model and considering merely the

generate-and-test search strategy (GAT ), n will be

1500(for four fingers) or 3500 (for thumbs). How-

ever, if we employ the temporal model, n will be 3,

which leads to considerable time improvements.

ICPRAM 2023 - 12th International Conference on Pattern Recognition Applications and Methods

504

Figure 6: GAT

Dir

2st

(left) and GAT

Dir

1st

(right) to constraint

the temporal model of five fingers based on the previous

movement direction (open/closing) of fingers motion.

Further Improvement of Search Time. For find-

ing the optimal pose, our approach is to compare

the contours of the projected 3D model (into the 2D

plane, S) to the contour of the input image (I) using

Chamfer Distance (CD). However, CD is a costly pro-

cedure, because for each point on I, CD computes its

distance to all points in S to find the minimum (Eq4)

CD =

1

|I|

∑

i∈(0,I)

Min

s∈(0,S)

[d(I

i

, S

s

)]

(4)

However, our S and I are the ‘sorted’ (ordered)

contour points’ coordinates, so we can modify and

speed up the Chamfer computation (Eq5): By solely

calculating the distance around the neighborhood (nn)

of the previously found point. That reduces the time

complexity from O(n

2

) to O(h ∗n). For the first point

on I, we consider the entire points of S (initialization).

In our experiments, nn is usually 5.

CD =

1

|I|

∑

i∈(0,I)

Min

s∈(s−nn,s+nn)

[d(I

i

, S

s

)]

(5)

Real-Time Performance. Though these improve-

ments considerably enhanced the time complexity, the

performance is not real-time. Because in the tempo-

ral model, the GAT algorithm has O(n

h

) complexity

where, thanks to our one-D temporal model, n = 3.

That is, at each instance of time (S

t

), there are for-

ward, backward, and self transitions states each tem-

poral model could undergo (Fig5). By considering

five fingers, the overall complexity will amount to

n

h

= 3

5

= 243 poses to find the solution for one frame.

If we constrain them only to the left-to-right di-

rection (as in Fig6-left, for example), at S

t

, the tran-

sition can not go backward. So coming from S

t−1

, it

can either remain on S

t

or go forward. That is, there

are only two possible states each finger can under-

take (GAT

Dir

2st

), equating the total number of com-

plexity to n

h

= 2

5

= 48. A similar strategy exists for

Fig6-right. If merely forward transition is possible

(GAT

Dir

1st

), the number of poses the algorithm would

search equals to one: n

h

= 1

5

= 1.

Three Motion Patterns. With these considerations,

real-time estimation even on CPUs is feasible. Now,

we need application-specific scenarios to allow the

search, at each time step, to eliminate one or two

states (out of three possibilities). To that, we intro-

Figure 7: Three motion patterns of the little finger. We use

them to design application-dependent scenarios. These pat-

terns can also be very useful to analyze and model the time

series of fingers’ motion.

duce three motion patterns we observed in the fin-

gers/thumb which assist us designing our scenarios.

Four fingers have similar movement patterns but

have different ones compared to the thumbs. As

shown in Fig7 (using our Descriptor Type

1

for the lit-

tle finger), there are three possible paths the four fin-

gers can undertake to move between open and close

states. First, is freestyle motion, denoted as path

1

, in

which all the finger’s joints move together. Second,

hook-like motion (path

2

) in which, during the clos-

ing, the lowest joint of the fingers moves at the final

phase, but first, other joints close until their limits.

Third, pinch-like motion (path

3

), in which during the

closing, the lowest joint closes at first until its limit,

then the other joints close together.

For the thumb with upper, lower, and palm joints,

the path

1

is the freestyle, in which all the joints

close together. For the path

2

, (stretching motion), the

thumb initially approaches the index finger by mov-

ing the lower joint. Then by moving the palm joint,

the tip accosts the other fingers’ parent joint. Next, by

moving the upper joint, it closes the upper part, and

finally, it ends the motion by returning the palm joint

to its resting position. The path

3

, (wiping motion),

starts by moving its lower joint to approach the index

finger (similar to the second path). Then, it closes the

upper joint while being close to the index finger. Fi-

nally, it brings the lower joint to its resting position.

4 EXPERIMENT

We test the efficacy of our descriptor in finding the op-

timal solution in three experiments on a virtual hand

as input. We use the path

1

to design application-

specific scenarios with considerations from Table 1.

We employ four metrics to evaluate the perfor-

mance. First is the time complexity, indicated by fps.

Second is the initial and total latency caused by the

initialization or unexpected costs in the middle of the

estimation. Third is the average 3D Joint Position er-

Using a 1D Pose-Descriptor on the Finger-Level to Reduce the Dimensions in Hand Posture Estimation

505

Table 1: Eleven Variables could be considered in designing

the scenarios that contain fingers’ motion.

Exp Variables

Do the fingers move A/Synchronously?

Do they move orderly: Adjacent/Apart? Is the order Right-to-Left?

If failed, do we Srch Adj Fings Only? Is fingers’ Motion Full-Cycle?

If not, do they Conserve their Direction? Is there Hand-lvl Open-Closing?

Do we consider Previous Direction? If yes, is it Initialized?

No. of Prtl-Cycled Finger’s Dynamics? 1, 2, or 3?

No. of End-Cycled Fing’s Dynamics? 1, 2, or 3?

ror (JPos3D) calculated as the normalized sum of esti-

mated joints distances from the ground truth. Last is

the Accuracy (Acc). We attend to highly constrained

cases. Therefore, we indicate the amount of accuracy

only if it is not 100%. The search algorithm knows

the previous state of fingers in the sequence.

To animate the fingers, we employ a similar hi-

erarchical hand database as proposed by (Dadgar

and Brunnett, 2018). They Define their hierarchical

database on various layers of complexity. That en-

ables us to animate hand limbs individually with a

specific emphasize on the layer of interest (e.g., fin-

gers). Its finger’s layer (e.g., L

4

) is further parti-

tionable into five sublayers (one for each finger) with

modifiable step degree (e.g., resolution). These prop-

erties makes the database a suitable choice to exam-

ine the uniqueness of our pose-descriptor on different

resolutions, refine it with different paths, and consider

various variables to design specific scenarios.

We create a sequence of postures for each

finger based on the temporal evolution of each

finger specified for every experiment using a

Viterbi-like algorithm (Viterbi, 1967). Re-

turning S = {S

n

|n = 1,2, 3, 4, 5} sequences (where

1 ≡ Little, 2 ≡ Ring,3 ≡ Middle, 4 ≡ Index, 5 ≡ T humb) of

Q = {q

1

, q

2

, ..., q

m

} states, where m ≈ 2000 is a usual

practice in this work. After selecting a specific global

orientation, we retrieve the input sequences. Finally,

the OpenCV’s contour extraction method (Bradski,

2000) is applied to the input frames to extract the

contours when searching for the optimal posture. All

experiments employ the previous direction for the

search. An elaborated version of the definitions and

their evaluations are in the following subsections:

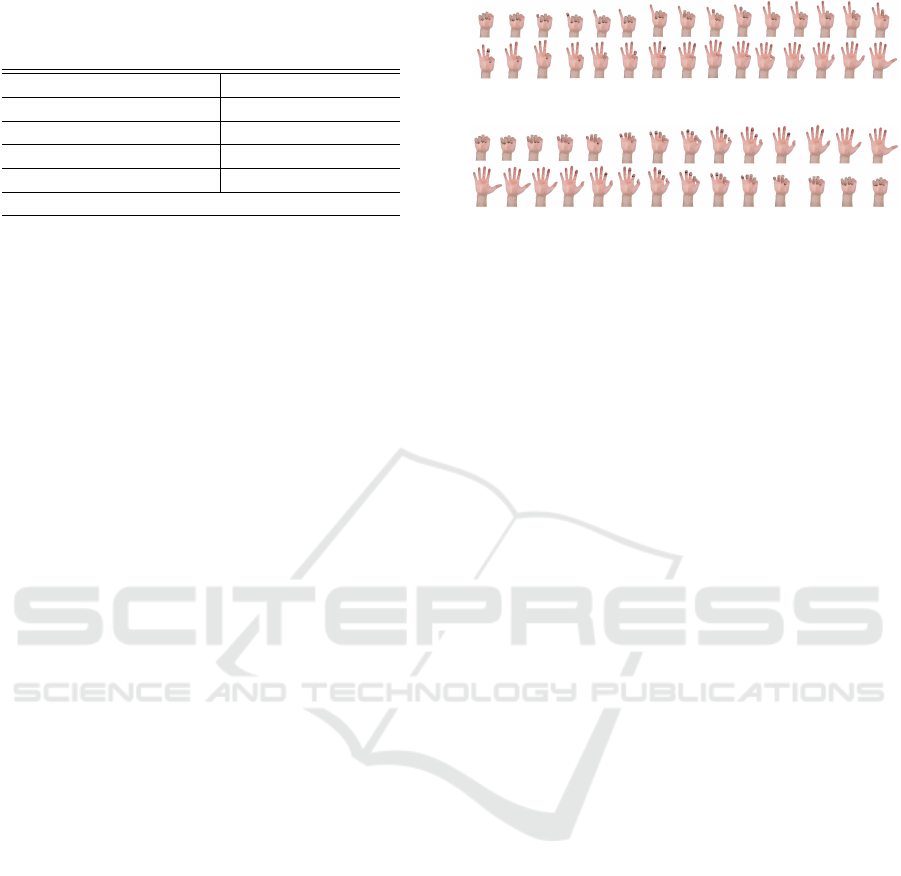

Experiment1. In this experiment, we consider all dif-

ferent digit combinations of fingers, including their

transitions (see Fig8). The fingers start at closed-pose,

and one by one (thus asynchronously), from the lit-

tle finger, each of them opens and stays in the open

pose (thus full-cycled on the finger level and having

hand-level cycles) until all fingers open from right to

left (therefore orderly or adjacent). After one-by-one

closing, the next opening cycle starts from the next

Figure 8: A sample of inputs for experiment one.

Figure 9: A sample of inputs for experiment two.

(e.g., ring) finger. For this scenario, considering the

path

1

where each finger has 21 possible poses from

close to open, we have 2100 poses. For the first frame,

we do not use the initialization (so the number of dy-

namics is 2

5

). Nevertheless, for the end poses, at

open or close cycles, the re-initialization information

is known (thus, the number of possible dynamics for

each finger is 1).

Experiment2. For this experiment, the collective free

motion of fingers from close-posture to open (and re-

verse) is under investigation (see Fig9). Here, other

fingers do not have to wait at their end states for one

finger to reach its closed or opened states (thus there is

no hand-level cycle). Identical to the previous exper-

iment, the hand starts in the closed pose and not one-

by-one (yet still asynchronously) opens from the little

finger. Each finger opens till the end (thus full-cycled)

until all fingers get opened from right to left (therefore

adjacent). Considering the 21 poses of path

1

, we have

1840 frames. Similarly, for the first frame we do not

employ the initialization (thus the number of dynam-

ics is 2

5

). For the end poses, at each cycles, also, the

re-initialization is known.

Experiment3. Here, we consider collective free mo-

tion (so there is no hand-level cycle) from close-

posture to open (and reverse). Analogous to the previ-

ous experiment, the hand starts in the closed pose and

asynchronously opens its fingers from the little one.

However, unlike in two other experiments, each finger

does not fully open until the end (thus partial-cycled)

until all fingers reach their end-pose from right to left

(therefore adjacent). For this scenario, considering

the path

1

, we have 2230 poses. We do not use the

initialization for the first frame. However, the number

of dynamics for that initial frame is 3

5

since the mo-

tion starts not at the beginning of the cycle. Here, for

the end cycles, at open or close postures, the initial-

ization information is available (thus, the number of

possible dynamics for each finger is 1).

Evaluation. Using our pose-descriptor in the one-D

temporal model enables us to achieve a real-time esti-

mation, as shown in Table 2. In all experiments, aver-

age output frame rates are above 31 fps. These output

ICPRAM 2023 - 12th International Conference on Pattern Recognition Applications and Methods

506

Table 2: The results of our three experiments indicate that

our pose-descriptor can assist one to estimate the poses of

all five fingers in real-time. The latency of the search is also

within toleration if the frame rate of the input video is less

than or equal to 31.

Exp Descriptor Type

1

Descriptor Type

2

I

f ps

O

f ps

L

Int

L

Ttl

O

f ps

L

Int

L

Ttl

1 25 32.5 62 142 32.5 50 156

2 25 32.4 52 173 31.1 44 302

3 24 31 440 515 32 294 359

1 32 32.5 632 1825 32.5 857 1791

2 31 32.4 237 971 31.1 1130 1734

3 31 31 2194 2202 32 1208 1474

frame rates are suitable to estimate the poses when

the input videos have an fps of 25 or lower. There-

fore, we can conclude our pose-descriptor provides

an appropriate tool for real-time applications. The

initialization of the first frame is the primary cause

of the increase in the total latency (L

Ttl

). The latency

increases slightly during the rest of ≈ 2000 frames.

That is usually the result of cost variations in contour

extraction and Chamfer comparison caused by the al-

terable shape of the hand. However, because of much

higher average output frame rates (compared to input

fps), such increases in latency have marginal effects

on the overall performance. The third experiment has

a worse initial latency (L

Init

). For, the third experi-

ment’s motions do not start at the full-cycled of close-

postures. At those partial-cycled poses, there are three

(previous, current, and the next) states for each finger

to search (3

5

= 243). Whereas at full-cycled postures,

there are solely two states (current and next). That

leads to 2

5

= 32 searches, and results in experiments

one and two have better latency.

In rows four, five, and six of the table, we eval-

uate these experiments, this time with a higher input

frame rate. As expected, the average output frame

rates of the searches remain unchanged compared to

the first set of experiments. However, the latency is

different, and that affects the performance. Though

the L

Init

is worse, it is L

Ttl

that experiences the highest

diminution and affects the performance by large mar-

gins. When we increase the frame rates of the input

video, the search algorithm finds the correct answer

for each frame as before. However, a slight variation

in the shapes of the contours and, thus, the estima-

tion cost causes the search stays behind the video’s

current frames, and the L

Ttl

drastically worsens. The

primary purpose of the experiments was to demon-

strate the suitability of our descriptor in estimating

the finger poses in real-time applications, even when

merely CPU resources are available. The conclusion

section elaborates on the JPose3D and Acc metrics we

achieved during the experimentation.

5 CONCLUSION

We proposed a simple pose-descriptor that character-

izes the postures at finger level. We showed this de-

scriptor has unique values for different finger poses,

reducing the fingers’ DoF to 5 and eliminates the ne-

cessity of constraining the problem (as needed in re-

lated works). We incorporated this pose-descriptor

into a temporal model and with further modifications

could achieve real-time performance on CPUs. To

share more insights about the JPose3D and Acc, we

briefly touch on other conducted experiments using

the GAT paradigm, with various image scales and fin-

gers’ combinations.

To start systematically, we defined five categories

of finger combinations: Cat

1

means merely the little

finger is under the search. Cat

2

means solely the lit-

tle and the ring fingers are the subjects of estimation.

Cat

3

means we estimate the little, ring, and middle

fingers pose. Cat

4

means we search the poses of the

little, ring, middle, and index fingers. Finally, Cat

5

means we search all five fingers.

Beginning with GAT search and 100%-

scale, we achieved Acc = 100% and JPose3D = 0, on

Cat

1

. As we proceeded to Cat

5

, the results

shows a slight decrements on the accuracy:

Cat

2

(Acc = 100%,JPose3D = 0), Cat

3

(Acc ≈ 100%,JPose3D = 0.0003),

Cat

4

(Acc ≈ 96%,JPose3D = 0.001), Cat

5

(Acc ≈ 95%,JPos3D = 0.0008).

However, the low average f ps of the search was

a significant obstacle since we were aiming for

real-time results: Cat

1

(Out

f ps

= 1.23), Cat

2

(Out

f ps

= 0.41),

Cat

3

(Out

f ps

= 0.14), Cat

4

(Out

f ps

= 0.045), and Cat

5

(Out

f ps

= 0.015).

Continuing with the GAT search, we down

scaled the input and searched images. The low-

est scale which led to fast yet accurate (stable) re-

sults was the 10%-scale. For example, on Cat

1

with

that scale, we faced a slight decrease in accuracy

Cat

1

(Acc = 97%,JPose3D = 0.003). However, the average f ps

gain was considerable (Out

f ps

= 25). A similar pattern

was observable in all categories insofar that for Cat

5

,

we achieved the accuracy of Cat

5

(Acc ≈ 80%,JPose3D = 0.02)

and the average (Out

f ps

= 0.3). Though having an accept-

able accuracy, the time complexity for Cat

5

was still

far from being real-time. However, the enhanced time

complexity motivated us to modify the CD.

Proceeding to GAT

Dir

1st

search, we once consid-

ered various scales and coupled them with our mod-

ified Chamfer distance computation and reached the

time complexity as high as Cat

5

(Out

f ps

= 42) on 10%-

scale. However, without the scaling down, the aver-

age frame rate was around 32 for that specific experi-

ment (not much difference). Thus we did not include

scaling down the images in our experiment section to

avoid the plethora of information.

Using a 1D Pose-Descriptor on the Finger-Level to Reduce the Dimensions in Hand Posture Estimation

507

Applications. The applications of our pose-

descriptor can fork in several directions. First, as a

consequence of reducing the finger’s DoF, one could

build a new motion capture system with fewer sensors

(e.g., markers, haptics), wires, and circuitry. Second,

our descriptor assists in constructing a training set that

is as diverse as possible images in machine learning)

to let the nets generalize better. Finally, one can ben-

efit from our one-dimensional descriptor for studying

and modeling of sign languages. We touched on this

briefly and experienced the convenience of designing

synthetic sign gestures with our descriptor.

ACKNOWLEDGMENTS

This project was funded by the Deutsche Forschungs-

gemeinschaft CRC 1410 / project ID 416228727.

REFERENCES

Bradski, G. (2000). The OpenCV Lib. Dobb Sftwr Tl s Jrnl.

Dadgar, A. and Brunnett, G. (2018). Multi-Forest Classifi-

cation and Layered Exhaustive Search Using a Fully

Hierarchical Hand Posture / Gesture Database. In VIS-

APP, Funchal.

Dung, L. and Mizukawa, M. (2010). Fast fingertips posi-

tioning based on distance-based feature pixels. ICCE.

Hardenberg, C. V. (2001). Bare-Hand Human-Computer

Interaction.

Liao, Y., Zhou, Y., Zhou, H., and Liang, Z. (2012). Finger-

tips detection algorithm based on skin colour filtering

and distance transformation. Quality Sftwr Int Conf.

Paralic, M. (2012). Fast connected component labeling in

binary images. 35th Intern Conf on TSP.

Raheja, J. L., Chaudhary, A., and Singal, K. (2011). Track-

ing of fingertips and centers of palm using KINECT.

CIMSim, 3rd Intern Conf.

Shimizume, T., Umezawa, T., and Osawa, N. (2019). Esti-

mation of the Distance Between Fingertips Using Sil-

houette and Texture Information of Dorsal of Hand,

volume 11844 LNCS. Springer International.

Viterbi, A. (1967). Error bounds for convolutional codes

and an asymptotically optimum decoding algorithm.

IEEE Trans on Informtin Theory, 13(2):260–269.

Yamamoto, S., Funahashi, K., and Iwahori, Y. (2012). A

study for vision based data glove considering hidden

fingertip with self-occlusion. 13th Int Conf on Soft-

ware Eng, AI, Netwrk, & Parallel/Distrb Compt.

Zhang, F., Bazarevsky, V., Vakunov, A., Tkachenka, A.,

Sung, G., Chang, C.-L., and Grundmann, M. (2020).

MediaPipe Hands: On-device Real-time Hand Track-

ing.

Zhao, W., Zhang, J., Min, J., and Chai, J. (2013). Robust re-

altime physics-based motion control for human grasp-

ing. ACM Trans on Graphics, 32(6).

APPENDIX: UNIQUENESS

According to Fig3, we have z

1

= L

1

× cos(θ

1

),

z

2

= L

2

× cos(θ

2

), z

3

= L

3

× cos(θ

3

), z

4

= L

4

× cos(θ

4

),

x

1

= L

1

× sin(θ

1

), x

2

= L

2

× sin(θ

2

), x

3

= L

3

× sin(θ

3

),

x

4

= L

4

× sin(θ

4

). Therefore, descriptor Type

2

will be:

D

2

= F(θ

1

, θ

2

, θ

3

, θ

4

) = X

2

+ Z

2

= (x

1

+ x

2

+ x

3

+ x

4

)

2

+ (z

1

+ z

2

+ z

3

+ z

4

)

2

= L

2

1

sin

2

(θ

1

) + L

2

2

sin

2

(θ

2

) + L

2

3

sin

2

(θ

3

) + L

2

4

sin

2

(θ

4

)+

L

2

1

cos

2

(θ

1

) + L

2

2

cos

2

(θ

2

) + L

2

3

cos

2

(θ

3

) + L

2

4

cos

2

(θ

4

) + 2 × [

L

1

L

2

sin(θ

1

)sin(θ

2

) + L

1

L

3

sin(θ

1

)sin(θ

3

) + L

1

L

4

sin(θ

1

)sin(θ

4

)+

L

2

L

3

sin(θ

2

)sin(θ

3

) + L

2

L

4

sin(θ

2

)sin(θ

4

) + L

3

L

4

sin(θ

3

)sin(θ

4

)+

L

1

L

2

cos(θ

1

)cos(θ

2

) + L

1

L

3

cos(θ

1

)cos(θ

3

) + L

1

L

4

cos(θ

1

)cos(θ

4

)+

L

2

L

3

cos(θ

2

)cos(θ

3

) + L

2

L

4

cos(θ

2

)cos(θ

4

) + L

3

L

4

cos(θ

3

)cos(θ

4

)]

Using cos(x − y) = cosxcosy + sinxsiny and

considering θ

1

is always zero, we can simplify

the kinematic function F(θ

2

, θ

3

, θ

4

) as following:

F = L

2

1

+ L

2

2

+ L

2

3

+ L

2

4

+ 2 × [L

1

L

2

cos(θ

2

) + L

1

L

3

cos(θ

3

)+

L

1

L

4

cos(θ

4

) + L

2

L

3

cos(θ

3

− θ

2

) + L

2

L

4

cos(θ

4

− θ

2

) + L

3

L

4

cos(θ

4

− θ

3

)]

A Line Connecting Two Points. To show

that F(θ) is unique/injective in a given

interval (e.g., (θ

2

0

, θ

3

0

, θ

4

0

) ̸= (θ

2

1

, θ

3

1

, θ

4

1

)

with

F(θ

2

0

, θ

3

0

, θ

4

0

) ̸= F(θ

2

1

, θ

3

1

, θ

4

1

)), we connect these two

points with a line, and represent it in a vector form:

l (t) =

θ

2

0

, θ

3

0

, θ

4

0

+t

θ

2

1

− θ

2

0

, θ

3

1

− θ

3

0

, θ

4

1

− θ

4

0

, t ∈ ℜ

In terms of F(θ), this line has the following form:

F(t) =F

θ

2

0

+ (θ

2

1

− θ

2

0

)t, θ

3

0

+ (θ

3

1

− θ

3

0

)t, θ

4

0

+ (θ

4

1

− θ

4

0

)t

With the following components:

F

θ

2

(t) =L

2

1

+ L

2

2

+ L

2

3

+ L

2

4

+ 2 × L

1

L

2

cos(θ

2

0

+ (θ

2

1

− θ

2

0

)t)+

2 × L

2

L

3

cos(θ

3

0

− θ

2

0

+ (θ

3

1

− θ

2

1

)t − (θ

3

0

− θ

2

0

)t)+

2 × L

2

L

4

cos(θ

4

0

− θ

2

0

+ (θ

4

1

− θ

2

1

)t − (θ

4

0

− θ

2

0

)t)

F

θ

3

(t) =L

2

1

+ L

2

2

+ L

2

3

+ L

2

4

+ 2 × L

1

L

3

cos(θ

3

0

+ (θ

3

1

− θ

3

0

)t)+

2 × L

2

L

3

cos(θ

3

0

− θ

2

0

+ (θ

3

1

− θ

2

1

)t − (θ

3

0

− θ

2

0

)t)+

2 × L

3

L

4

cos(θ

4

0

− θ

3

0

+ (θ

4

1

− θ

3

1

)t − (θ

4

0

− θ

3

0

)t)

F

θ

4

(t) =L

2

1

+ L

2

2

+ L

2

3

+ L

2

4

+ 2 × L

1

L

4

cos(θ

4

0

+ (θ

4

1

− θ

4

0

)t)+

2 × L

2

L

4

cos(θ

4

0

− θ

2

0

+ (θ

4

1

− θ

2

1

)t − (θ

4

0

− θ

2

0

)t)+

2 × L

3

L

4

cos(θ

4

0

− θ

3

0

+ (θ

4

1

− θ

3

1

)t − (θ

4

0

− θ

3

0

)t)

(6)

If the derivative of this function is non-zero for each t

and any pair of points, then F is injective. We have

F

′

(t) =(θ

2

1

− θ

2

0

)F

θ

2

(t) + (θ

3

1

− θ

3

0

)F

θ

3

(t) + (θ

4

1

− θ

4

0

)F

θ

4

(t)

(7)

Also, for human hands, one can realize the following:

θ

2

= [0, 90

◦

] , θ

3

= θ

2

+ [0, 120

◦

] = [0, 210

◦

] =⇒ θ

3

− θ

2

= [0, 120

◦

]

θ

4

=θ

3

+ [0, 45

◦

] = θ

2

+ [0, 120

◦

] + [0, 45

◦

] = θ

2

+ [0, 165

◦

] =

[0, 255

◦

] =⇒ θ

4

− θ

2

= [0, 165

◦

] , θ

4

− θ

3

= [0, 45

◦

]

Now, by numerically populating T heta

i

s (combi-

nation of 2 from 486K, C

2

486K

), L

i

s, and t, we check if

the F(θ) is injective.

ICPRAM 2023 - 12th International Conference on Pattern Recognition Applications and Methods

508