Sentiment-Based Engagement Strategies for Intuitive Human-Robot Interaction

Thorsten Hempel (a), Laslo Dinges (b) and Ayoub Al-Hamadi (c)

Neuro-Information Technology, Faculty of Electrical Engineering and Information Technology,

Otto von Guericke University, Magdeburg, Germany

Keywords:

Human-Robot Interaction, Approaching Strategy, Sentiment Estimation, Emotion Detection, Anticipating

Human Behaviors, Approaching People, Productive Teaming.

Abstract:

Emotion expressions serve as important communicative signals and are crucial cues in intuitive interactions

between humans. Hence, it is essential to include these fundamentals in robotic behavior strategies when inter-

acting with humans to promote mutual understanding and to reduce misjudgements. We tackle this challenge

by detecting and using the emotional state and attention for a sentiment analysis of potential human inter-

action partners to select well-adjusted engagement strategies. This way, we pave the way for more intuitive

human-robot interactions, as the robot’s action conforms to the person’s mood and expectation. We propose

four different engagement strategies with implicit and explicit communication techniques that we implement

on a mobile robot platform for initial experiments.

1 INTRODUCTION

Once utopian, robots are increasingly moving from industrial and laboratory settings into the real world in order to assist humans in everyday tasks. In this process, human-robot interaction has become a central point of interest in robotic research that investigates the manifold challenges in performing interactive and collaborative tasks. As a general principle, the robot needs to be equipped with the necessary skills to enable intuitive interactions with arbitrary human interaction partners, regardless of the corresponding task, the human’s intention and communication behavior. To this end, a fundamental objective is the anticipation of appropriate strategies to proactively approach or evade people based on the situation-specific context, such as the person’s mood, attitude (Müller-Abdelrazeq et al., 2019; Elprama et al., 2016) and resentment towards robots (Naneva et al., 2020; Miller et al., 2021). First, it requires the determination of people’s interest in interacting at all (Satake et al., 2009; Finke et al., 2005). Thereupon, the robot has to find an appropriate strategy for either engaging people to establish an interaction or avoiding a confrontation if desired. In order to reach these underlying decisions, the robot has to cope with a series of sub-tasks: estimating people’s focus of attention (Dini et al., 2017), predicting their mental state, and carrying out a (dis-)engagement strategy (Avelino et al., 2021).

(a) https://orcid.org/0000-0002-3621-7194
(b) https://orcid.org/0000-0003-0364-8067
(c) https://orcid.org/0000-0002-3632-2402

Estimating the willingness to interact is particularly challenging, as this mental state tends to provide only vague social signals. As an alternative approach, many methods (Oertel et al., 2020) target the detection of engagement in order to determine whether a person has already approached the robot and is waiting for the robot’s reaction. This state expresses itself more clearly, e.g., in voice commands (Foster et al., 2017), gestures and proxemics, but it undermines the proactive approach and neglects situations where the human partner is in need of help, but too uncertain or not aware of the robot.

In this work, we close the gap between the lack of

proactive behavior and advanced mental state predic-

tions by introducing a sentiment-based engagement

strategy.

By combining the focus of attention and emotions,

we derive a sentiment analysis that allows us to select

fine-grained behavior patterns that exceed current bi-

nary engagement and disengagement strategies. We

implement our approach on a mobile robot platform

and execute our strategies using explicit and implicit

embodied communication. Our models are trained on minimum sized networks in order to perform our method on mobile systems with limited computational resources.

Hempel, T., Dinges, L. and Al-Hamadi, A.
Sentiment-Based Engagement Strategies for Intuitive Human-Robot Interaction.
DOI: 10.5220/0011772900003417
In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 4: VISAPP, pages 680-686
ISBN: 978-989-758-634-7; ISSN: 2184-4321
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 RELATED WORK

In recent years, proxemic rules have been a popular tool to draw conclusions about the different states of human-robot interactions (Mumm and Mutlu, 2011) and how spatial zones can be leveraged to improve them (Syrdal et al., 2007). (Repiso et al., 2018) predict appropriate encounter points to achieve a natural engagement with a group of people. Similarly, (Satake et al., 2009) proactively approach detected people to offer help. (Fischer et al., 2021) verbally greet people and dynamically adapt the voice volume based on the distance to the target person. Following (Kendon, 1990), (Carvalho et al., 2021) apply Kendon’s greeting model to approach people while tracking the human mental states of the interaction using multiple features, such as gaze, facial expressions, and gestures. Yet, all of these methods neglect whether the human counterpart is actually interested and ready to initiate an interaction. This is problematic, as in the case of a negative attitude, the robot’s interaction efforts can be perceived as rude and annoying (Bröhl et al., 2019). An initial attempt to address this was presented by (Kato et al., 2015), who only approach and offer help if they detect signs of attention towards the robot. But this still does not incorporate the actual mental attitude of the human counterpart towards the interaction itself.

The recent advances in the area of deep learning opened up new possibilities for the visual analysis of humans, ranging from general detection tasks to the estimation of facial micro expressions. This allows the aggregation of additional relevant information, such as emotions (Chuah and Yu, 2021; Spezialetti et al., 2020), to better understand the behavior and intentions of the human interaction partner. To the best of our knowledge, we are the first to leverage this advancement by predicting sentiment states based on visual emotion estimation to improve interaction strategies for robots. We summarize our main contributions as follows:

• We propose an image-based sentiment analysis to gauge the current interaction preference of potential human interaction partners.

• We propose four different behavior strategies (Engage, Attract, Ignore, Avoid) that comprise a number of different explicit and implicit communication modalities.

• We design ultra lightweight models to implement our method and integrate it on a mobile robot platform.

• We conduct initial experiments in laboratory settings for an early evaluation.

Figure 1: The TIAGo robot platform with the key operations used in our method (labeled in the figure: Yaw/Tilt Head, Rotate Base, Lifting Torso, Speech).

3 METHOD

This section explains each step of our proposed

method for sentiment-based interaction behavior.

The sentiment analysis is based on two main fea-

tures: head pose and emotion estimation. The head

pose estimation is a leading indicator to gauge the cur-

rent visual focus of attention of the person. The emo-

tion estimation predicts the current emotional state of

the person. Together, it allows the assumption about

a person that a) is seeking for interaction, b) is in-

decisive about it or c) doesn’t want to engage with

the robot at all. Determining each sentiment state en-

ables to perform a dedicated robot behavior that cor-

responds with the expected reaction from the person

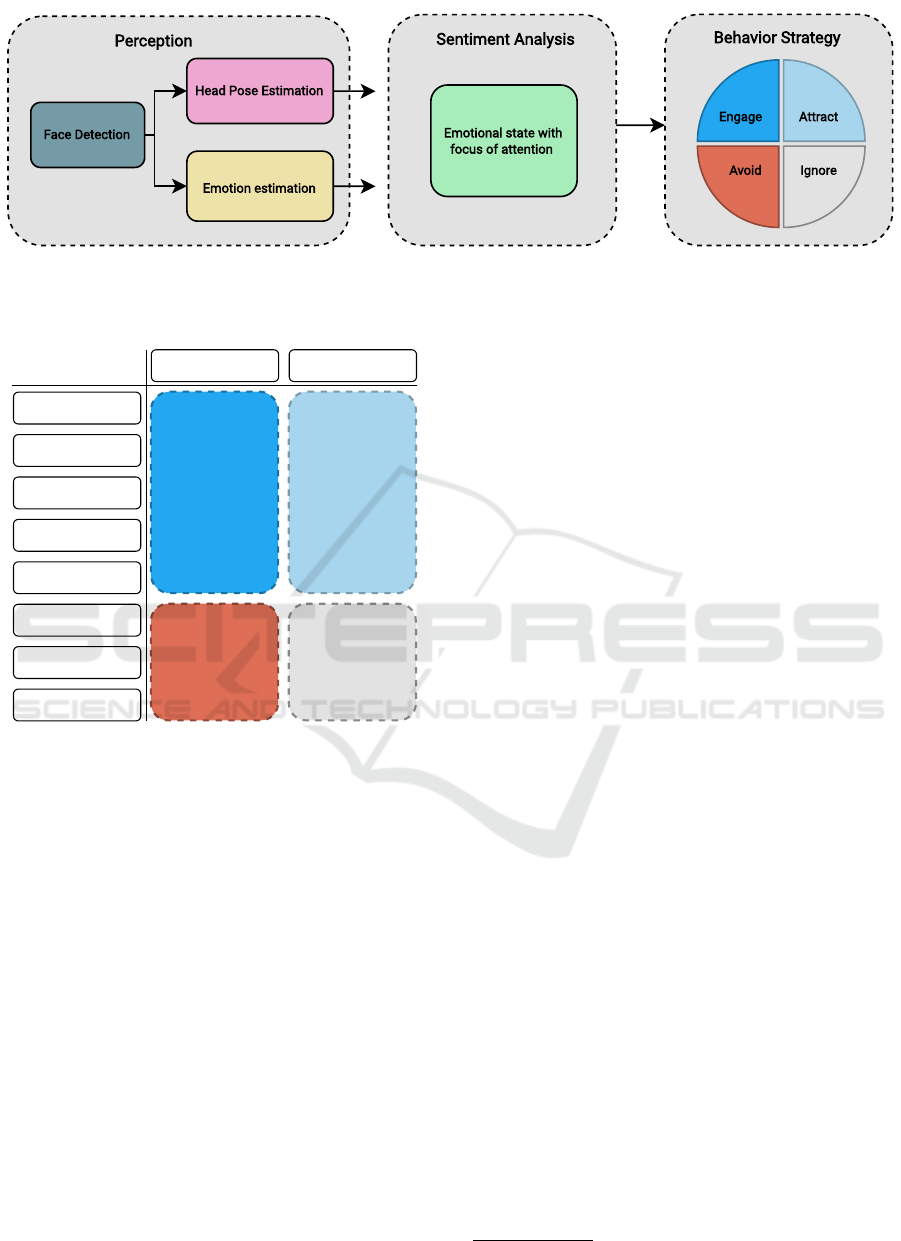

and, thus, improves an intuitive interaction. Figure 2

illustrates an overview of our proposed system. In the

following subsection, we will give details about each

component of the system and its interplay.

3.1 Robot Platform

We use the TIAGo robot from PAL Robotics, a mobile service robot for indoor environments. It is equipped with an RGB-D camera mounted inside its head, which can be yawed and pitched to dynamically perceive the environment. For grasping and moving objects, the robot has a manipulator arm with a five-finger gripper. The torso can be lifted to adjust the robot’s height, and integrated speakers allow speech output. These abilities allow the combination of explicit and implicit communication modalities that we use in our method.

• Head Following: The robot’s head aims at and fol-

lows a moving person as an implicit signal of at-

tentiveness without actively approaching.

• Body Following: If a person exceeds the range of the robot’s moving head, the entire robot base rotates in order to keep track of the person. This behavior communicates an attention commitment and is therefore an even stronger signal than head following.

• Torso Lifting: Lifting the torso is a rather subtle communication cue, as it is perceived similarly to the social convention of standing up when greeting someone.

• Speech: Approaching people with speech represents an explicit communication strategy that commits them more strongly to a reaction. Hence, speech should only be used if the approached person is highly expected to welcome an interaction.
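The relation between head following and body following can be sketched as a simple split of the target bearing: the head covers what it can reach, and the base rotates by the remainder. This is only a minimal illustration. The function name, the sign convention, and the pan limit of 75 degrees are hypothetical and not taken from the TIAGo specification or from our implementation.

```python
from dataclasses import dataclass

# Hypothetical pan limit of the head joint in degrees. The real limit
# depends on the robot and is not stated in the text.
HEAD_PAN_LIMIT_DEG = 75.0


@dataclass
class FollowCommand:
    head_pan_deg: float       # pan angle requested from the head joint
    base_rotation_deg: float  # additional rotation requested from the base


def follow_target(bearing_deg: float,
                  limit_deg: float = HEAD_PAN_LIMIT_DEG) -> FollowCommand:
    """Split a target bearing into head pan and, if needed, base rotation.

    bearing_deg is the horizontal angle of the person relative to the
    robot base (0 = straight ahead); the sign convention is an assumption.
    """
    # Head following: clamp the bearing to the reachable head range.
    head = max(-limit_deg, min(limit_deg, bearing_deg))
    # Body following: the base takes over whatever the head cannot cover.
    base = bearing_deg - head
    return FollowCommand(head_pan_deg=head, base_rotation_deg=base)
```

With these assumed values, a person at a bearing of 30 degrees is tracked by the head alone, while a bearing of 100 degrees additionally requests a base rotation of 25 degrees.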

3.2 Visual Attention

Typical indicators for gauging human visual attention are the gaze and, more coarsely, the head pose. As gaze estimation depends on the eyes, which occupy only a small part of a face image, it is prone to errors. Therefore, we estimate the visual attention of the surrounding persons based on a head pose prediction algorithm. First, we locate faces in the image stream using an ultra-light SSD face detector. Then, the face crops are further processed by 6DRepNet (Hempel et al., 2022), which uses a rotation representation to directly regress the yaw, pitch, and roll angles of the faces. To ensure real-time processing capabilities, we replace the original 6DRepNet backbone with the efficient MobileNetV3-Small (Howard et al., 2019). The new head pose prediction model maintains 90% of the accuracy of the original model, but is downsized to only one tenth of its parameter count.
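As a rough illustration of how a head pose can be turned into a binary attention cue, the sketch below maps a 6D rotation vector to an orthonormal frame with the Gram-Schmidt process, which is the usual way to decode a 6D rotation representation, and then checks how far the head's forward axis deviates from the frontal direction. The axis convention (third column is the forward axis, identity means looking at the camera) and the 20 degree threshold are assumptions made for this example, not values from our system.

```python
import math
from typing import List, Sequence

Vec = List[float]


def _normalize(v: Sequence[float]) -> Vec:
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec:
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def rotation_from_6d(x: Sequence[float]) -> List[Vec]:
    """Return the three orthonormal column vectors [b1, b2, b3]."""
    a1, a2 = x[:3], x[3:6]
    b1 = _normalize(a1)
    # Remove the component of a2 along b1, then normalize the rest.
    proj = _dot(b1, a2)
    b2 = _normalize([a - proj * b for a, b in zip(a2, b1)])
    # The third axis completes a right-handed orthonormal frame.
    b3 = _cross(b1, b2)
    return [b1, b2, b3]


def is_facing(columns: List[Vec], max_angle_deg: float = 20.0) -> bool:
    """True if the forward axis is within max_angle_deg of frontal.

    Assumption: columns[2] is the head's forward axis and the identity
    rotation corresponds to looking straight at the camera.
    """
    forward_z = max(-1.0, min(1.0, columns[2][2]))
    return math.degrees(math.acos(forward_z)) <= max_angle_deg
```

Using the forward axis instead of separate yaw and pitch thresholds ignores roll, which does not change where the person is looking.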

3.3 Emotion Estimation

In order to assess the attitude towards robots as well

as differentiated subjective states such as dislike or

willingness to cooperate, basic emotions can be used

as indicating features. Naturally, these are frequently

communicated by facial expressions. Hence, au-

tomatic facial expression recognition is an essen-

tial method to generate features for Human-Robot-

Collaboration and attention prediction.

Previous facial expression recognition methods adhere to the established pipeline of face detection, landmark extraction, and action unit (AU) detection. Then, emotions are classified using these AUs as feature vectors (Wegrzyn et al., 2017; Vinkemeier et al., 2018; Werner et al., 2017). However, end-to-end learning on a single but more comprehensive and better generalizing database often outperforms such traditional approaches. The AffectNet database (Mollahosseini et al., 2017), for example, contains about 500k in-the-wild samples for neutral and the basic emotions happiness, disgust, fear, surprise, anger, and sadness. Furthermore, it is the only database that also includes ground truth for valence and arousal (VA), which are, in contrast to the basic emotion classes, continuous labels ∈ [−1, 1] that can be used for regression tasks. VA is less intuitively interpretable by humans, but it allows differentiation between facial expressions of varying degrees. This is particularly useful for capturing modest but long-term changes in expressed emotion. In addition, a multitask network (simultaneous training of classification and regression) also improved the accuracy of the classification. Furthermore, valence summarizes essential information about several emotions in one parameter (negative, neutral, positive), which may facilitate the development of heuristic behavior rules for some scenarios.

In our former work, we proposed a multitask

network based on YOLOv3 (Dinges et al., 2021).

For the current paper, we compared EfficientNetV2-

S, ResNet18, and MobileNetV3-Large. Despite the

poorer converging training loss, MobileNet, which is

optimized for limited hardware, achieved almost the

same accuracy on the test set as EfficientNet (devia-

tion < 1%). In addition, we used a weighted random

sampler to handle the imbalanced dataset and re-train

the network on the training and test set to generate

an application model with increased robustness to un-

seen data.
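A weighted random sampler for an imbalanced dataset can be sketched as follows. The text only names the sampler, so the inverse class frequency weighting used here is an assumption, and the helper names are hypothetical. The sketch uses only the Python standard library rather than the data loading utilities of a particular deep learning framework.

```python
import random
from collections import Counter
from typing import List, Sequence


def inverse_frequency_weights(labels: Sequence[str]) -> List[float]:
    """Give each sample the weight 1 / (number of samples in its class)."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


def sample_indices(labels: Sequence[str], num_samples: int,
                   seed: int = 0) -> List[int]:
    """Draw sample indices with replacement so classes appear equally often."""
    rng = random.Random(seed)
    weights = inverse_frequency_weights(labels)
    return rng.choices(range(len(labels)), weights=weights, k=num_samples)


# Example: a 90/10 class imbalance is sampled roughly 50/50.
labels = ["happy"] * 90 + ["fear"] * 10
batch = sample_indices(labels, num_samples=10000)
fear_share = sum(labels[i] == "fear" for i in batch) / len(batch)
```

With this weighting, every class carries the same total sampling mass, so rare expressions such as fear are seen about as often during training as frequent ones.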

3.4 Sentiment Analysis and Approaching Strategy

Figure 2: Method overview of our proposed system. Detected faces are used to estimate the head pose and the corresponding emotions based on facial expressions. Both pieces of information are used to determine the emotional state with the corresponding visual focus of the person’s attention that indicates the sentiment and allows a fine-tuned behavior strategy. (Labeled in the figure: Perception with Face Detection, Head Pose Estimation, and Emotion Estimation; Sentiment Analysis of the emotional state with focus of attention; Behavior Strategy with Engage, Attract, Avoid, and Ignore.)

Figure 3: Behavior strategies based on the emotional and attentive state. (Labeled in the figure: emotions Happy, Uncertain, Surprise, Neutral, Sad, Fear, Disgust, Anger; attention Facing or Averted; ENGAGE: lift torso, follow with head and base, and approach by speech; ATTRACT: lift torso and follow with head; AVOID: look down and avoid attraction; IGNORE: follow with head.)

Detecting emotional expressions combined with the visual focus of attention makes it possible to perceive important communicative signals. As in human-human interaction, you may be more likely to greet a stranger who smiles at you but avoid eye contact if that stranger is scowling. We transfer these principles to determine states of sentiment based on the combination of the different emotions and visual attention. If a person shows visual signs of fear or anger while being attentive towards the robot, this indicates a negative attitude towards the robot. In this case, a passive robot behavior without hectic movements can help to defuse the situation and to calm the person. If a person is afraid but not attentive towards the robot, the reason for the fear might not be the robot. Executing a more active robot behavior to show awareness could therefore be a more suitable strategy. Hence, our sentiment analysis allows us to break down the common hard binary engagement strategy into soft behaviors. Instead of choosing between engaging and not engaging, we choose between four different behaviors: Engage, Attract, Avoid, Ignore.

• Engage: The robot extends its torso to signal awareness of the person’s presence. Additionally, it greets the person verbally to actively initiate an interaction. This strategy is selected when the person’s attention is directed at the robot with a clearly positive emotional attitude.

• Attract: A positive emotional attitude without attention towards the robot leads to a more passive behavior. The goal is to signal the offer of interaction and help without actively committing to an interaction. This is done by extending the torso to show awareness and facing the person, but without a verbal greeting. This way, a non-verbal offer of support (especially in case of uncertainty and sadness) is subtly given that can be easily accepted or ignored.

• Avoid: The avoiding strategy is executed when a person showing strong negative emotions (Fear, Disgust, Anger) is facing the robot. In this case, the robot avoids eye contact (keeps the face outside the camera center) and takes a passive part. Especially in case of fear, the goal is to increase the feeling of safety by avoiding unexpected motions.

• Ignore: If the negative emotions are not directed at the robot, the robot mostly ignores the person, only following them with its head to capture changes in emotional or attention states.

Figure 3 shows all combinations of emotional and attention states with the corresponding behavior strategy.
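The four strategies can be read as a lookup on two inputs, the estimated emotion and whether the person is facing the robot. The sketch below is one possible encoding. The grouping of Fear, Disgust, and Anger as negative follows the text, whereas placing all remaining labels of Figure 3 in the non-negative group is an assumption, and all identifiers are hypothetical.

```python
from enum import Enum
from typing import Dict, List


class Strategy(Enum):
    ENGAGE = "engage"
    ATTRACT = "attract"
    AVOID = "avoid"
    IGNORE = "ignore"


# Strong negative emotions named in the text.
NEGATIVE = {"fear", "disgust", "anger"}
# Assumption: all remaining labels of Figure 3 form the non-negative group.
NON_NEGATIVE = {"happy", "surprise", "neutral", "uncertain", "sad"}

# Communication modalities per strategy, as listed in Figure 3.
ACTIONS: Dict[Strategy, List[str]] = {
    Strategy.ENGAGE: ["lift torso", "follow with head and base",
                      "approach by speech"],
    Strategy.ATTRACT: ["lift torso", "follow with head"],
    Strategy.AVOID: ["look down", "avoid attraction"],
    Strategy.IGNORE: ["follow with head"],
}


def select_strategy(emotion: str, facing: bool) -> Strategy:
    """Map an emotion label and the attention state to a behavior strategy."""
    label = emotion.lower()
    if label in NEGATIVE:
        return Strategy.AVOID if facing else Strategy.IGNORE
    if label in NON_NEGATIVE:
        return Strategy.ENGAGE if facing else Strategy.ATTRACT
    raise ValueError(f"unknown emotion label: {emotion!r}")


# Example: a smiling person who looks at the robot is greeted verbally.
chosen = select_strategy("Happy", facing=True)
planned_actions = ACTIONS[chosen]
```

Keeping the rule as an explicit table makes it easy to adjust single cells, for example if sadness while facing the robot should lead to Attract instead of Engage.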

4 IMPLEMENTATION

We used the ROS middleware (https://www.ros.org/) to implement our proposed solution on our robot platform, as this also simplifies the integration into other ROS-based robot platforms. Face detection, head pose estimation, and emotion estimation are executed in separate nodes that forward the necessary information to perform the sentiment analysis and the action controls. While the latter is performed on the robot itself, the inference of the neural networks is outsourced to a separate notebook that is mounted on the shoulder of the robot. This ensures the complete mobility of the robot, while exploiting additional computational resources. The notebook has a Quadro RTX 4000 GPU that processes all models in parallel with the following inference times:

• Face Detection: 6.7 ms

• Head Pose Estimation: 1.4 ms

• Emotion Estimation: 6.3 ms

The visual perception can therefore be performed in real time and is able to continuously track people in the surroundings.

Figure 4: Sentiment analysis in a laboratory setting. The person is tracked using a face detector, followed by head pose and emotion estimation. As the person shows attentiveness and happiness, a positive sentiment towards the robot is expected and a proactive initiation strategy is selected.
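The real-time claim can be checked with a short back-of-the-envelope calculation on the reported inference times. The sketch assumes one face per frame and ignores camera rate, image transfer, and message passing overhead, none of which are quantified in the text. The pipelined variant further assumes that head pose and emotion estimation both wait for the face crop and then run concurrently.

```python
# Inference times reported above, in milliseconds.
FACE_DETECTION_MS = 6.7
HEAD_POSE_MS = 1.4
EMOTION_MS = 6.3


def sequential_latency_ms() -> float:
    """Worst case: all three models run one after another."""
    return FACE_DETECTION_MS + HEAD_POSE_MS + EMOTION_MS


def pipelined_latency_ms() -> float:
    """Assumption: both estimators start after detection and run in parallel."""
    return FACE_DETECTION_MS + max(HEAD_POSE_MS, EMOTION_MS)


def frames_per_second(latency_ms: float) -> float:
    """Upper bound on the frame rate for a given per-frame latency."""
    return 1000.0 / latency_ms


sequential_fps = frames_per_second(sequential_latency_ms())
pipelined_fps = frames_per_second(pipelined_latency_ms())
```

Even the sequential worst case of about 14.4 ms per frame corresponds to roughly 69 frames per second, which is above the frame rate of typical RGB-D cameras under these assumptions.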

5 EXPERIMENT

We conducted an initial experiment in a laboratory setting to test our implemented method on the TIAGo robot platform. The objective of the experiment was to gain experience with the models’ performance in real application scenarios. Figure 4 shows a snapshot from one of our tests. The robot platform detects a face in the environment and predicts the head pose and emotional state. The person’s focus of attention is on the robot (indicated by the green arrow) and the person expresses happiness, so the robot initiates an interaction by following the engage strategy.

While the models provide very robust predictions, the implementation is currently entirely based on single-shot detections. Yet, over the duration of face tracking, facial expressions change intermittently and short distractions cause the head pose to oscillate occasionally. This leads to abrupt changes of states within short time periods. Hence, to improve the robustness of the sentiment analysis, conclusions about the current state should incorporate the predictions from multiple time frames.
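One simple way to incorporate predictions from multiple time frames is a sliding-window majority vote with hysteresis, sketched below. This is an illustrative assumption rather than the approach implemented in our system. The class name and the window length are hypothetical.

```python
from collections import Counter, deque
from typing import Deque, Hashable, Optional


class MajorityVoteFilter:
    """Stabilize per-frame predictions over a sliding window of frames."""

    def __init__(self, window: int = 15) -> None:
        self._history: Deque[Hashable] = deque(maxlen=window)
        self._current: Optional[Hashable] = None

    def update(self, prediction: Hashable) -> Hashable:
        """Add one per-frame prediction and return the smoothed state."""
        self._history.append(prediction)
        label, count = Counter(self._history).most_common(1)[0]
        # Switch only when a label holds a strict majority of the window;
        # otherwise keep the previously reported state.
        if self._current is None or count > len(self._history) / 2:
            self._current = label
        return self._current


# Example: a single outlier frame does not change the reported state.
smoother = MajorityVoteFilter(window=5)
frames = ["engage", "engage", "avoid", "engage", "engage"]
smoothed = [smoother.update(f) for f in frames]
```

The window length trades robustness against reaction time: a longer window suppresses more flicker but delays the switch to a new state.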

6 CONCLUSION

In this paper, we present a sentiment-based methodology to improve the intuitiveness and reasoning in human-robot interactions. Our method focuses on the visual perception of mental states and the focus of attention to derive a sentiment analysis for suitable interaction initiation strategies. We also take into account the case where people are not interested in an engagement at all, which has been mainly neglected in the recent literature. To implement our method, we trained lightweight perception models that we integrated on a mobile robot platform. Finally, we conducted initial experiments on this platform to analyze the performance of our models and the overall system.

In the future, we will extend our work by embedding our sentiment analysis in a temporal-probabilistic framework to improve the robustness in real-world scenarios. Here, we will also incorporate the valence and arousal predictions to evaluate the emotion’s intensity and transitions between emotional states. Further enhancements include using the robot’s mobility to actively engage and disengage people, and adding interaction modalities such as following people by command, getting out of the way of passing people, or approaching a place that offers a better overview of the surroundings.

Finally, we will conduct a study with test subjects

to evaluate our approach in terms of intuitiveness and

sympathy.

ACKNOWLEDGEMENTS

This work is funded and supported by the Federal Ministry of Education and Research of Germany (BMBF) (AutoKoWAT-3DMAt under grant Nr. 13N16336) and the German Research Foundation (DFG) under grants Al 638/13-1, Al 638/14-1 and Al 638/15-1.

REFERENCES

Avelino, J., Garcia-Marques, L., Ventura, R., and Bernardino, A. (2021). Break the ice: a survey on socially aware engagement for human–robot first encounters. International Journal of Social Robotics, 13:1851–1877.

Bröhl, C., Nelles, J., Brandl, C., Mertens, A., and Nitsch, V. (2019). Human-robot collaboration acceptance model: Development and comparison for Germany, Japan, China and the USA. International Journal of Social Robotics, 11:709–726.

Carvalho, M., Avelino, J., Bernardino, A., Ventura, R.

M. M., and Moreno, P. (2021). Human-robot greet-

ing: tracking human greeting mental states and acting

accordingly. 2021 IEEE/RSJ International Confer-

ence on Intelligent Robots and Systems (IROS), pages

1935–1941.

Chuah, S. H.-W. and Yu, J. (2021). The future of ser-

vice: The power of emotion in human-robot interac-

tion. Journal of Retailing and Consumer Services,

61:102551.

Dinges, L., Al-Hamadi, A., Hempel, T., and Al Aghbari,

Z. (2021). Using facial action recognition to evaluate

user perception in aggravated hrc scenarios. In 2021

12th International Symposium on Image and Signal

Processing and Analysis (ISPA), pages 195–199.

Dini, A., Murko, C., Yahyanejad, S., Augsdörfer, U., Hofbaur, M., and Paletta, L. (2017). Measurement and prediction of situation awareness in human-robot interaction based on a framework of probabilistic attention. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 4354–4361.

Elprama, S., El Makrini, I., Vanderborght, B., and Jacobs,

A. (2016). Acceptance of collaborative robots by fac-

tory workers: a pilot study on the role of social cues

of anthropomorphic robots.

Finke, M., Koay, K. L., Dautenhahn, K., Nehaniv, C., Wal-

ters, M., and Saunders, J. (2005). Hey, i’m over here

- how can a robot attract people’s attention? In RO-

MAN 2005. IEEE International Workshop on Robot

and Human Interactive Communication, 2005., pages

7–12.

Fischer, K., Naik, L., Langedijk, R. M., Baumann, T., Jelínek, M., and Palinko, O. (2021). Initiating human-robot interactions using incremental speech adaptation. In Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, HRI ’21 Companion, pages 421–425, New York, NY, USA. Association for Computing Machinery.

Foster, M. E., Gaschler, A., and Giuliani, M. (2017). Au-

tomatically classifying user engagement for dynamic

multi-party human–robot interaction. International

Journal of Social Robotics, 9.

Hempel, T., Abdelrahman, A. A., and Al-Hamadi, A.

(2022). 6d rotation representation for unconstrained

head pose estimation. In 2022 IEEE International

Conference on Image Processing (ICIP), pages 2496–

2500.

Howard, A., Sandler, M., Chu, G., Chen, L.-C., Chen, B.,

Tan, M., Wang, W., Zhu, Y., Pang, R., Vasudevan, V.,

Le, Q. V., and Adam, H. (2019). Searching for mo-

bilenetv3. In Proceedings of the IEEE/CVF Interna-

tional Conference on Computer Vision (ICCV).

Kato, Y., Kanda, T., and Ishiguro, H. (2015). May i

help you? - design of human-like polite approach-

ing behavior-. In 2015 10th ACM/IEEE International

Conference on Human-Robot Interaction (HRI), pages

35–42.

Kendon, A. (1990). Conducting Interaction: Patterns of Be-

havior in Focused Encounters. Cambridge University

Press, Cambridge, U.K.

Miller, L., Kraus, J., Babel, F., and Baumann, M. (2021).

More than a feeling—interrelation of trust layers in

human-robot interaction and the role of user disposi-

tions and state anxiety. Frontiers in Psychology, 12.

Mollahosseini, A., Hasani, B., and Mahoor, M. H. (2017).

Affectnet: A database for facial expression, valence,

and arousal computing in the wild. IEEE Transactions

on Affective Computing, 10:18–31.

Müller-Abdelrazeq, S. L., Schönefeld, K., Haberstroh, M.,

and Hees, F. (2019). Interacting with Collaborative

Robots—A Study on Attitudes and Acceptance in In-

dustrial Contexts, pages 101–117. Springer Interna-

tional Publishing, Cham.

Mumm, J. and Mutlu, B. (2011). Human-robot proxemics:

Physical and psychological distancing in human-robot

interaction. In 2011 6th ACM/IEEE International

Conference on Human-Robot Interaction (HRI), pages

331–338.

Naneva, S., Gou, M. S., Webb, T. L., and Prescott, T. J.

(2020). A systematic review of attitudes, anxiety, ac-

ceptance, and trust towards social robots. Interna-

tional Journal of Social Robotics, pages 1–23.

Oertel, C., Castellano, G., Chetouani, M., Nasir, J., Obaid,

M., Pelachaud, C., and Peters, C. (2020). Engagement

in human-agent interaction: An overview. Frontiers in

Robotics and AI, 7.

Repiso, E., Garrell, A., and Sanfeliu, A. (2018). Robot

approaching and engaging people in a human-robot

companion framework. In 2018 IEEE/RSJ Interna-

tional Conference on Intelligent Robots and Systems

(IROS), pages 8200–8205.

Satake, S., Kanda, T., Glas, D. F., Imai, M., Ishiguro, H.,

and Hagita, N. (2009). How to approach humans?-

strategies for social robots to initiate interaction. In

2009 4th ACM/IEEE International Conference on

Human-Robot Interaction (HRI), pages 109–116.

Spezialetti, M., Placidi, G., and Rossi, S. (2020). Emotion

recognition for human-robot interaction: Recent ad-

vances and future perspectives. Frontiers in Robotics

and AI, 7.

Syrdal, D. S., Lee Koay, K., Walters, M. L., and Dauten-

hahn, K. (2007). A personalized robot companion?

- the role of individual differences on spatial prefer-

ences in hri scenarios. In RO-MAN 2007 - The 16th

IEEE International Symposium on Robot and Human

Interactive Communication, pages 1143–1148.

Vinkemeier, D., Valstar, M. F., and Gratch, J. (2018). Pre-

dicting folds in poker using action unit detectors and

decision trees. In FG, pages 504–511.

Wegrzyn, M., Vogt, M., Kireclioglu, B., Schneider, J., and Kissler, J. (2017). Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLOS ONE, 12(5):e0177239.

Werner, P., Handrich, S., and Al-Hamadi, A. (2017). Fa-

cial action unit intensity estimation and feature rel-

evance visualization with random regression forests.

In 2017 Seventh International Conference on Affective

Computing and Intelligent Interaction (ACII), volume

2018-Janua, pages 401–406. IEEE.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications
