Multi-View Video Synthesis Through Progressive Synthesis and Refinement

Mohamed Ilyes Lakhal (1, a), Oswald Lanz (2, b) and Andrea Cavallaro (1, c)

(1) Queen Mary University of London, London, U.K.

(2) Free University of Bozen-Bolzano, Bolzano, Italy

Keywords: Multi-View Video Synthesis, Generative Models, Temporal Consistency.

Abstract: Multi-view video synthesis aims to reproduce a video as seen from a targeted viewpoint. This paper proposes to tackle this problem using a multi-stage framework that progressively adds details to the synthesized frames and refines wrong pixels from previous predictions. First, we reconstruct the foreground and the background by using a 3D mesh. To do so, we leverage the one-to-one correspondence of rendered mesh faces between the input and the target view. Then, the predicted frames are defined with a recurrence formula that corrects wrong pixels and adds high-frequency details. Results on the NTU RGB+D dataset show the effectiveness of the proposed approach against frame-based and video-based state-of-the-art models.

1 INTRODUCTION

Multi-view video synthesis aims to synthesize a video of a person performing an action as seen from a target view, given an input-view video (see Fig. 1).

Approaches to the multi-view synthesis problem can be classified into computer-graphics methods (Zhang et al., 2017) and learning-based methods (Ma et al., 2017). The computer-graphics methods synthesize a detailed body from an arbitrary viewpoint; however, the computation time needed to obtain the body representation and the sensitivity to unseen clothing make them hard to apply to in-the-wild data. Learning-based methods use generative models such as Generative Adversarial Networks (GANs) (Goodfellow et al., 2014) to synthesize the person from a target view. Recent advances in human-mesh recovery (Kanazawa et al., 2019) have allowed the combination of expressive cues (3D mesh) and GANs (Liu et al., 2019) to synthesize a person seen from a target view.

In this paper, we propose a pipeline that consists of iterative steps (or layers) of synthesis. Each layer is a GAN. The first (or reconstruction) layer synthesizes the low frequencies (i.e. the overall structure of the novel-view scene and of the human body). The network uses a mask containing information about the visibility of the input view onto the target view. The mask is obtained from the 3D body mesh and separates the background, the visible body part, and the occluded body part. The mask is shown to be useful for the network to focus on each of the three parts individually in the synthesis. Then, the refinement layer is an iterative module that takes the synthesis from the previous layer and recursively synthesizes and refines it.

Figure 1: Multi-view video synthesis. Given a video of a scene with a person acting (e.g. throwing), captured from a fixed camera, the goal is to synthesize a video from a target view as it would be captured by another fixed camera from a different viewpoint. Panel labels: Input view; Target view; Synthesized action on the target view; Time.

(a) https://orcid.org/0000-0003-4432-9740

(b) https://orcid.org/0000-0003-4793-4276

(c) https://orcid.org/0000-0001-5086-7858

Lakhal, M., Lanz, O. and Cavallaro, A. Multi-View Video Synthesis Through Progressive Synthesis and Refinement. DOI: 10.5220/0011759400003417. In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 5: VISAPP, pages 230-238. ISBN: 978-989-758-634-7; ISSN: 2184-4321. Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

2 BACKGROUND

Scene synthesis methods focus on reconstructing a novel view from neighboring regions of the captured scene. These methods use synchronized multi-view images to infer the 3D geometry in order to reconstruct the scene (Chaurasia et al., 2013; Niklaus et al., 2019; Wiles et al., 2020). The novel view is then obtained by re-projection onto the image plane of the desired camera view. However, most of these methods assume that the scene contains static objects, which makes the synthesis stable (i.e. less jitter), or are adapted to indoor scenes (Shin et al., 2019).

Novel-view synthesis of articulated objects covers human bodies (Kundu et al., 2020) and human faces (Wu et al., 2020). Methods estimating the 3D human body shape from videos can be categorized into template-based (Vlasic et al., 2008), model-based (Bogo et al., 2015), free-form (Guo et al., 2017) and learning-based reconstruction (Saito et al., 2019). These methods require a 3D mesh for each human body and clothing item, which makes them impractical in some scenarios (e.g. in-the-wild applications).

Synthesizing human body motion has attracted a lot of attention lately (Ma et al., 2017; Liu et al., 2019; Dong et al., 2018; Wang et al., 2019). Successful approaches are related to the pose-guided view synthesis problem (Ma et al., 2017). The synthesis generator combines the input image of the person in a given pose with a target pose. The more descriptive the target pose is (i.e. the better it describes the human body in a representation space other than RGB), the better the synthesis (Liu et al., 2019; Dong et al., 2018). In contrast, the multi-view synthesis problem focuses on synthesizing the same pose of the human body as well as keeping temporal consistency across the frames (Lakhal et al., 2019; Lakhal et al., 2020).

The modeling of the generator can benefit from

progressively synthesizing and refining. Methods

in the literature can be divided into two cate-

gories, feature-block recurrence or model recurrence.

Feature-block recurrence (Men et al., 2020; Zhu et al.,

2019) progressively learns to transfer the pose in the

feature space at each block of the generator model.

On the other hand, model recurrence (Karras et al.,

2018a; Shaham et al., 2019) progressively refines the

prediction using a generator initialized at each step.

By doing so, the network needs to learn the data dis-

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

+

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

<latexit sha1_base64="AWIQUmfbHSBXDwD7ImzIoMzPydA=">AAAB7nicbVBNT8JAEJ3iF+IX6tFLI5h4Ii0XPRK9eMREwAQast1uYcN2t9mdmpCGH+HFg8Z49fd489+4QA8KvmSSl/dmMjMvTAU36HnfTmljc2t7p7xb2ds/ODyqHp90jco0ZR2qhNKPITFMcMk6yFGwx1QzkoSC9cLJ7dzvPTFtuJIPOE1ZkJCR5DGnBK3Uqw9UpLA+rNa8hreAu078gtSgQHtY/RpEimYJk0gFMabveykGOdHIqWCzyiAzLCV0Qkasb6kkCTNBvjh35l5YJXJjpW1JdBfq74mcJMZMk9B2JgTHZtWbi/95/Qzj6yDnMs2QSbpcFGfCReXOf3cjrhlFMbWEUM3trS4dE00o2oQqNgR/9eV10m02fK/h3zdrrZsijjKcwTlcgg9X0II7aEMHKEzgGV7hzUmdF+fd+Vi2lpxi5hT+wPn8AaSxjxg=</latexit>

ˆx

j

k−1

d

j

x

i

DE

α

k

1 −α

k

Eq. 1

ˆx

j

k

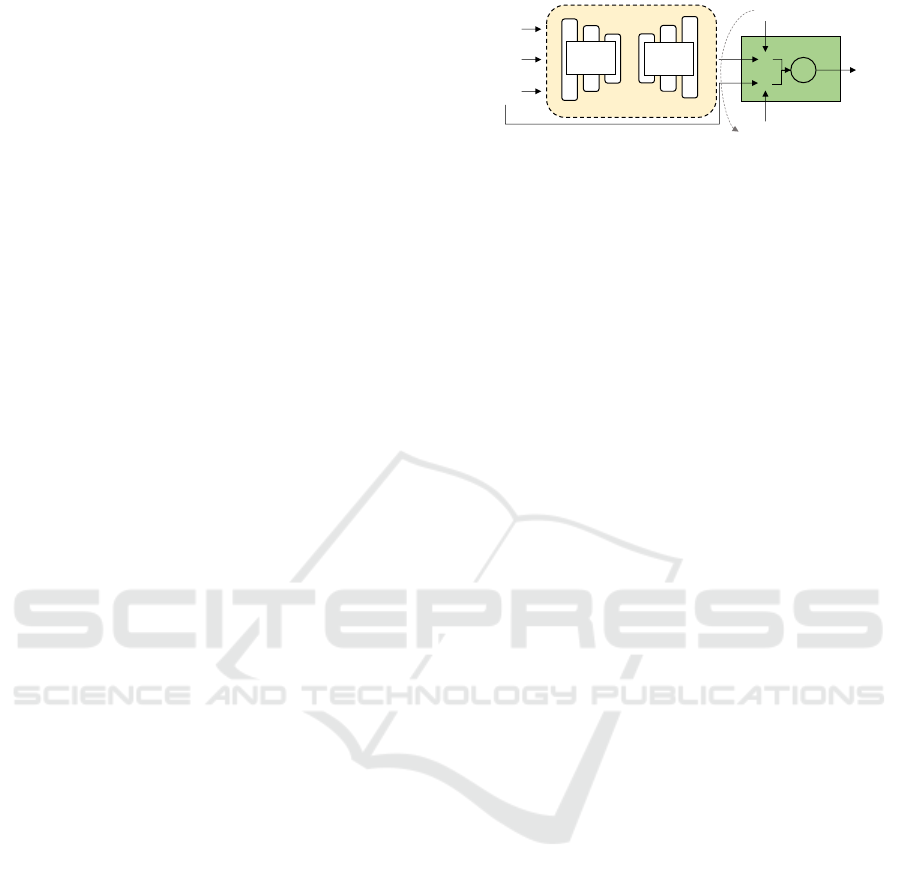

Figure 2: Overview of the proposed PSR-Net. The model

is composed of K generators (called layers). At each layer

k ∈ {2, ..., K} the generator G

k

i→j

takes the input view

video x

i

, target depth d

j

and the synthesis from the pre-

vious stage k −1 which will guide the synthesis to produce

ˆx

j

k

. The video is obtained with Eq. 1 from the synthesis of

the layer k −1 and k. KEY – α

k

: weighting factor.

tribution by starting from a good initialization at the

previous step.

3 MODEL

This section presents our proposed Progressive Synthesis and Refinement Network (PSR-Net) model. We provide insights on key choices and we detail the architecture of each stage of the proposed pipeline.

The idea of our PSR-Net is inspired by the success of progressive GANs (Shaham et al., 2019; Karras et al., 2018b), where the image is gradually synthesized and refined. The pipeline presented in Fig. 2 is composed of $K$ generators (also called layers), not necessarily of the same architecture, that gradually synthesize the video using an iterative recurrence formula:

$$\hat{x}^j_k = \begin{cases} G^{k=1}_{i\to j}(x^i, \hat{x}^j_0, d^j) & \text{if } k = 1 \\ \alpha_k \, G^{k}_{i\to j}(x^i, \hat{x}^j_{k-1}, d^j) + (1 - \alpha_k)\, \hat{x}^j_{k-1} & \text{if } k \geq 2 \end{cases} \tag{1}$$

where $\hat{x}^j_0$ is the initial target-view estimate, $d^j$ is the target-view depth, and $x^i$ (resp. $x^j$) is the input (resp. target) view video. The weighting factor $\alpha_k \in [0,1]$ is a hyper-parameter and $k \in \{1, \dots, K\}$ is the layer index.
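The recurrence of Eq. 1 can be illustrated with a minimal Python sketch. It is not the PSR-Net implementation: videos are reduced to flat lists of pixel values, and the generators are hypothetical callables standing in for the trained networks $G^{k}_{i\to j}$. The function name `progressive_synthesis` and the toy generator are assumptions made for this example only.

```python
def progressive_synthesis(x_in, x0, depth, generators, alphas):
    """Apply the recurrence of Eq. 1 with K generator callables.

    generators[k - 1] stands in for G^k and alphas[k - 1] for alpha_k;
    alphas[0] is ignored because the first layer is not blended.
    Videos are flat lists of floats to keep the sketch dependency-free.
    """
    estimate = generators[0](x_in, x0, depth)            # case k = 1
    for gen, alpha in zip(generators[1:], alphas[1:]):   # case k >= 2
        refined = gen(x_in, estimate, depth)
        estimate = [alpha * r + (1.0 - alpha) * e
                    for r, e in zip(refined, estimate)]
    return estimate


def toy_generator(x_in, previous, depth):
    # Hypothetical stand-in for a trained generator: brightens the estimate.
    return [p + 1.0 for p in previous]


result = progressive_synthesis(
    x_in=[0.0, 0.0], x0=[0.0, 2.0], depth=[1.0, 1.0],
    generators=[toy_generator, toy_generator], alphas=[1.0, 0.5],
)
print(result)
```

With $\alpha_k = 1$ a layer fully replaces the previous estimate, and with $\alpha_k = 0$ it leaves the estimate unchanged, so the factor controls how much each refinement layer is trusted.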

3.1 Reconstruction Layer $G^{k=1}_{i\to j}$

We aim to synthesize the foreground and background guided by the initial foreground estimate $\hat{x}^j_0$. We first estimate the 3D mesh using an off-the-shelf estimator such as the method proposed in (Kanazawa et al., 2019). Then, we synthesize the foreground and the background with dedicated decoders using the mask obtained from the projection of the 3D mesh on the target view.

Visibility Map. Let $F^i \in \mathbb{R}^{T \times W \times H}$ be the projection of a 3D human body mesh onto the image plane of view $i$ capturing the scene of the action, where $T$, $W$, and $H$ represent the number of frames, width, and height of the video stream. In practice, the 3D human body mesh can be represented with the SMPL (Loper et al., 2015) model and estimated using an off-the-shelf human-mesh recovery method (Kanazawa et al., 2019). Alg. 1 describes the process to obtain the visibility map. Using the one-to-one correspondence between the projected mesh $F^i$ (resp. $F^j$) of the input (resp. target) view, we build the visibility map $M_{i\to j} \in \{1,2,3\}^{T \times w \times h}$, where the labels 1, 2 and 3 represent background, visible and occluded, respectively. Instead of processing the mesh independently along the temporal axis, we leverage the information available about the faces $F^i$ and $F^j$. We consider a mesh index (or face) $f \in F^j_t$ occluded if $f \notin F^i$ for all $t \in \{1, \dots, T\}$ (see Fig. 3).

Algorithm 1: Visibility map.

Input: $F^i$; $F^j$; $k$ ($k$-NN) value; $k$-NN face (Step I & II (Lakhal et al., 2020))
Output: Visibility map $M_{i\to j}$
$M_{i\to j} \leftarrow 1^{T \times w \times h}$
for $(t, u_x, u_y) \in \{[1..T], [1..w], [1..h]\}$ do
    if $k$-NN face$(F^j[t, u_x, u_y], k)$ then
        $M_{i\to j}[t, u_x, u_y] \leftarrow 2$
    else
        $M_{i\to j}[t, u_x, u_y] \leftarrow 3$
    end
end
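The labelling rule can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' code: the $k$-NN face lookup of Alg. 1 is omitted, the rendered face indices are stored in nested lists indexed `[t][row][col]`, and a hypothetical sentinel `NO_FACE = -1` marks pixels that the projected mesh does not cover. Those pixels keep the background label, which is an assumption about how Alg. 1 treats pixels without a face.

```python
BACKGROUND, VISIBLE, OCCLUDED = 1, 2, 3
NO_FACE = -1  # assumed sentinel: pixel not covered by the projected mesh


def visibility_map(faces_in, faces_tgt):
    """Label each target-view pixel as background, visible or occluded.

    faces_in / faces_tgt hold, per pixel, the index of the mesh face rendered
    there (or NO_FACE). A target face counts as visible if it appears in the
    input view in at least one frame of the clip, and as occluded otherwise.
    """
    # Dictionary step of Fig. 3, reduced to a set of face indices seen in view i.
    seen = {f for frame in faces_in for row in frame for f in row if f != NO_FACE}

    def label(face):
        if face == NO_FACE:
            return BACKGROUND
        return VISIBLE if face in seen else OCCLUDED

    return [[[label(f) for f in row] for row in frame] for frame in faces_tgt]


# Two frames of 2x2 pixels; faces 4, 5 and 6 are seen from the input view.
faces_in = [[[-1, 4], [5, -1]], [[-1, 4], [6, -1]]]
faces_tgt = [[[-1, 4], [9, 6]], [[7, -1], [5, 5]]]
vis = visibility_map(faces_in, faces_tgt)
print(vis)
```

Collecting the seen faces over the whole clip, rather than frame by frame, mirrors the remark above that the mesh is not processed independently along the temporal axis.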

Network Architecture. We adopt a context-based generator with separate encoders $E_{*}$ for each of the inputs $x^i$, $\hat{x}^j_0$, and $d^j$. Then, each part that the visibility mask $M_{i\to j}$ represents (i.e. background, visible and occluded) is decoded using a separate decoder $D_{*}$ and $M_{i\to j}$ (see Fig. 4(d)). Let $\mathbf{k}_{i\to j} = \{(u_x, u_y) \in \mathbb{N}^{w \times h} \mid M_{i\to j}[t, u_x, u_y] = k;\ 1 \leq t \leq T\}$ denote the map with the label $k \in \{1, 2, 3\}$. The novel-view video is obtained as:

$$\tilde{x}^j_1 = \hat{x}^j_b \odot \mathbf{1}_{i\to j} + \hat{x}^j_v \odot \mathbf{2}_{i\to j} + \hat{x}^j_o \odot \mathbf{3}_{i\to j}, \tag{2}$$

where $\hat{x}^j_b$ (resp. $\hat{x}^j_v$, and $\hat{x}^j_o$) is the synthesis of the background (resp. visible, and occluded) part and is obtained using the decoder $D_b$ (resp. $D_v$, and $D_o$). We also add an explicit temporal constraint using the estimated optical flow $O^j$ obtained from the target-view mesh and a warping function $\mathcal{W}$ as defined in (Jaderberg et al., 2015), such that:

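$$\hat{x}^j_1 = \begin{cases} \tilde{x}^j_{1,t} & \text{if } t = 1 \\ \tilde{x}^j_{1,t} + \zeta \cdot \mathcal{W}(\tilde{x}^j_{1,t-1}, O^j_{t+1\to t}) & \text{if } t \in [2..T], \end{cases} \tag{3}$$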

x

i

x

j

M

i→j

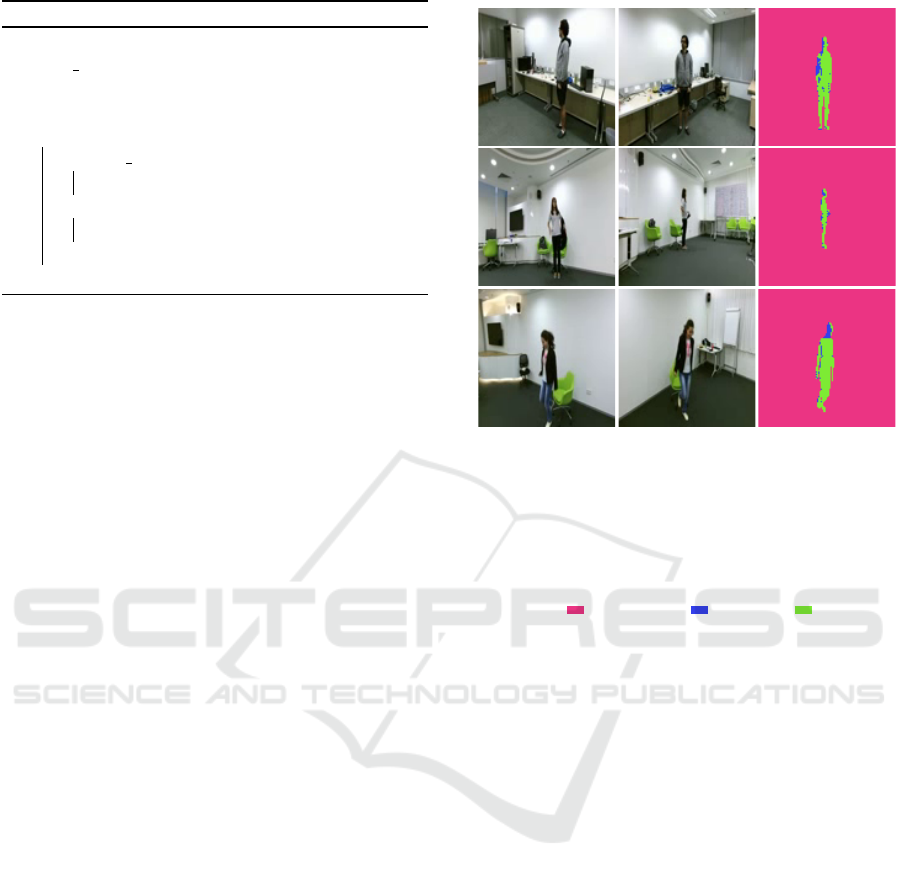

Figure 3: Visibility map. Using the correspondence be-

tween the projected mesh onto the input view and of the tar-

get view, we determine the visibility map M

i→j

(see Alg. 1).

We build a dictionary using the projected mesh in the in-

put view by keeping track whether a face index is present

along with its neighboring visible faces. Then, using the re-

sulted dictionary, we assign to each face index in the target-

view mesh the visibility value. Legend – x

i

: input-view; x

j

:

target-view; background; occluded; visible.

where $\zeta$ controls the importance of the warped previously synthesized frame relative to the synthesis of the current time frame produced by the generator. The factor $\zeta$ is set empirically to 0.1, as suggested by Lakhal et al. (2020).
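The mask-based composition of Eq. 2 and the temporal term of Eq. 3 can be sketched together in Python. The sketch makes several assumptions: frames are flat lists of pixel values, the visibility mask stores the labels 1, 2, 3 per pixel, and the warping function is passed in as a callable. The `identity_warp` placeholder and the function names are hypothetical; a real warp would resample the previous frame along the optical flow, which is treated here as an opaque argument.

```python
def compose(background, visible, occluded, mask):
    """Eq. 2 for one frame: pick, per pixel, the branch selected by the mask.

    Selecting by label is equivalent to summing the three branches, each
    multiplied element-wise by its binary indicator map.
    """
    branches = {1: background, 2: visible, 3: occluded}
    return [branches[label][p] for p, label in enumerate(mask)]


def temporal_blend(frames, flows, warp, zeta=0.1):
    """Eq. 3: add the warped previous composed frame, scaled by zeta.

    flows[t] is the flow passed to the warp when building frame t;
    flows[0] is unused because the first frame is kept unchanged.
    """
    blended = [list(frames[0])]
    for t in range(1, len(frames)):
        warped = warp(frames[t - 1], flows[t])
        blended.append([cur + zeta * w for cur, w in zip(frames[t], warped)])
    return blended


def identity_warp(frame, flow):
    # Placeholder only: a real warp would resample `frame` along `flow`.
    return frame


bg, vis_part, occ = [0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 2.0, 2.0]
frame0 = compose(bg, vis_part, occ, mask=[1, 2, 3])
frame1 = compose(bg, vis_part, occ, mask=[2, 2, 1])
video = temporal_blend([frame0, frame1], [None, None], identity_warp)
print(video)
```

As in Eq. 3, the warp is applied to the composed frame $\tilde{x}^j_{1,t-1}$ and not to the already blended output, so the temporal term does not accumulate over time.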

To train the generator we use a Huber loss $\mathcal{L}_r$ (Huber, 1964) as reconstruction loss. We also employ a temporal perceptual loss $\mathcal{L}_p$ (Lakhal et al., 2019) that penalises the prediction in the spatio-temporal feature space of a function $\Phi$ called the perceptual network. We employ an adversarial loss (Goodfellow et al., 2014), denoted as $\mathcal{L}_a$, in order to enforce high-frequency constraints. The training loss for the reconstruction layer is given as:

$$\mathcal{L}_{k=1} = \mathcal{L}_r + 0.01\,(\mathcal{L}_p + \mathcal{L}_a). \tag{4}$$
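The weighting in Eq. 4 can be made concrete with a small Python sketch. It computes a mean Huber loss over flat lists of pixel values and combines it with perceptual and adversarial terms that are passed in as plain numbers. The threshold `delta=1.0` is an assumption, since the text does not state the value used, and the function names are chosen for this example.

```python
def huber(pred, target, delta=1.0):
    """Mean Huber loss: quadratic for small residuals, linear for large ones.

    delta is an assumed threshold; the paper does not state its value.
    """
    total = 0.0
    for p, t in zip(pred, target):
        r = abs(p - t)
        if r <= delta:
            total += 0.5 * r * r
        else:
            total += delta * (r - 0.5 * delta)
    return total / len(pred)


def reconstruction_layer_loss(l_r, l_p, l_a, weight=0.01):
    """Eq. 4: reconstruction term plus down-weighted perceptual and adversarial terms."""
    return l_r + weight * (l_p + l_a)


l_r = huber([0.5, 3.0], [0.0, 0.0])
total = reconstruction_layer_loss(l_r, l_p=2.0, l_a=3.0)
print(l_r, total)
```

With the 0.01 weight, the reconstruction term dominates the objective, while the perceptual and adversarial terms act as small corrections.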

3.2 Recurrence Layer $G^{k}_{i\to j}$

The role of this layer is to recursively refine the synthesized video by adding high-frequency details. We give equal priority to all pixels and, therefore, choose to predict $\hat{x}^j_k$ using one decoder.

Network Architecture. The synthesized videos $\{\hat{x}^j_u\}_{u=1}^{k}$ with $k \in \{2, \dots, K\}$ approximate the novel-view video progressively better. Therefore, we use

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications

232

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

+

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

<latexit sha1_base64="GyhvOmYJNyv55T+MPYdIeuMzy+U=">AAAB73icbVDLTgJBEOzFF+IL9ehlIph4Irtc9Ej04hETeSSwIbPDLEyYnVnnYUI2/IQXDxrj1d/x5t84wB4UrKSTSlV3uruilDNtfP/bK2xsbm3vFHdLe/sHh0fl45O2llYR2iKSS9WNsKacCdoyzHDaTRXFScRpJ5rczv3OE1WaSfFgpikNEzwSLGYEGyd1q32Zcqurg3LFr/kLoHUS5KQCOZqD8ld/KIlNqDCEY617gZ+aMMPKMMLprNS3mqaYTPCI9hwVOKE6zBb3ztCFU4YolsqVMGih/p7IcKL1NIlcZ4LNWK96c/E/r2dNfB1mTKTWUEGWi2LLkZFo/jwaMkWJ4VNHMFHM3YrIGCtMjIuo5EIIVl9eJ+16LfBrwX290rjJ4yjCGZzDJQRwBQ24gya0gACHZ3iFN+/Re/HevY9la8HLZ07hD7zPH4n2j58=</latexit>

E

E

D

D

D

D

D

D

D

ˆx

j

ˆx

j

Eq. 2Eq. 2

M

i→j

M

i→j

x

i

p

j

ˆx

j

b

ˆx

j

f

ˆx

j

b

ˆx

j

o

ˆx

j

v

ˆx

j

b

ˆx

j

o

ˆx

j

v

ˆx

j

(a) (b)

(c) (d)

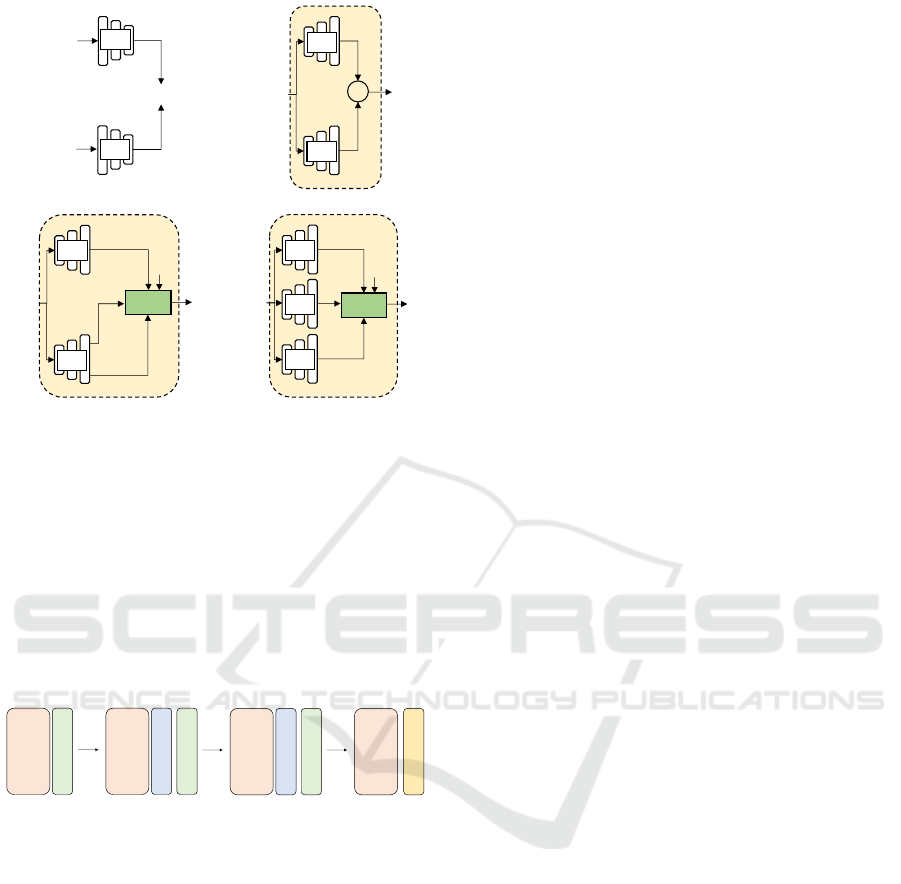

Figure 4: Reconstruction layer variants. (a) Feature en-

coding: the input view video x

i

and the target view prior

p

j

are encoded using separate encoders and are merged to-

gether using a convolution operation; (b) context-based ap-

proach: we use separated decoders D for each of the fore-

ground and the background; (c) proposed (common head):

the visible and occluded regions are synthesized using sep-

arated heads (i.e. final deconvolution operation) but they

share the same decoder; (d) proposed (separate heads):

each information from the map M

i→j

is synthesized on a

separated branch. KEY – p

j

: target modality e.g. depth;

ˆx

j

f

: foreground; ˆx

j

b

: background; ˆx

j

o

: occluded; ˆx

j

v

: visible;

⊕: concatenation.

Layer labels recovered from the figure, in order: conv3D (in=3, out=64, kernel=(4,4,4), stride=(1,2,2), padding=(0,1,1)); LeakyReLU (.2); conv3D (128) (in=64, out=128, kernel=(4,4,4), stride=(1,2,2), padding=(0,1,1)); LeakyReLU

(.2)

BatchNorm3d

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

conv

3D

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

(256)

(in=128, out=256,

kernel=(4,4,4),

stride=(1, 2, 2),

padding=(1, 0, 1))

LeakyReLU

<latexit sha1_base64="zg87HjZaVb2t26PTROgBsmYCOSE=">AAAB/XicbVDJTgJBEO3BDXEbl5uXjmDiicxw0SPRiwcOaBwgAUJ6mgI69CzprjHihPgrXjxojFf/w5t/Y7McFHxJJS/vVaWqnh9LodFxvq3Myura+kZ2M7e1vbO7Z+8f1HSUKA4ej2SkGj7TIEUIHgqU0IgVsMCXUPeHVxO/fg9Kiyi8w1EM7YD1Q9ETnKGROvZRoYXwgIhpBdhwdAsVb1zo2Hmn6ExBl4k7J3kyR7Vjf7W6EU8CCJFLpnXTdWJsp0yh4BLGuVaiIWZ8yPrQNDRkAeh2Or1+TE+N0qW9SJkKkU7V3xMpC7QeBb7pDBgO9KI3Ef/zmgn2LtqpCOMEIeSzRb1EUozoJAraFQo4ypEhjCthbqV8wBTjaALLmRDcxZeXSa1UdJ2ie1PKly/ncWTJMTkhZ8Ql56RMrkmVeISTR/JMXsmb9WS9WO/Wx6w1Y81nDskfWJ8/MbaVCA==</latexit>

<latexit sha1_base64="zg87HjZaVb2t26PTROgBsmYCOSE=">AAAB/XicbVDJTgJBEO3BDXEbl5uXjmDiicxw0SPRiwcOaBwgAUJ6mgI69CzprjHihPgrXjxojFf/w5t/Y7McFHxJJS/vVaWqnh9LodFxvq3Myura+kZ2M7e1vbO7Z+8f1HSUKA4ej2SkGj7TIEUIHgqU0IgVsMCXUPeHVxO/fg9Kiyi8w1EM7YD1Q9ETnKGROvZRoYXwgIhpBdhwdAsVb1zo2Hmn6ExBl4k7J3kyR7Vjf7W6EU8CCJFLpnXTdWJsp0yh4BLGuVaiIWZ8yPrQNDRkAeh2Or1+TE+N0qW9SJkKkU7V3xMpC7QeBb7pDBgO9KI3Ef/zmgn2LtqpCOMEIeSzRb1EUozoJAraFQo4ypEhjCthbqV8wBTjaALLmRDcxZeXSa1UdJ2ie1PKly/ncWTJMTkhZ8Ql56RMrkmVeISTR/JMXsmb9WS9WO/Wx6w1Y81nDskfWJ8/MbaVCA==</latexit>

<latexit sha1_base64="zg87HjZaVb2t26PTROgBsmYCOSE=">AAAB/XicbVDJTgJBEO3BDXEbl5uXjmDiicxw0SPRiwcOaBwgAUJ6mgI69CzprjHihPgrXjxojFf/w5t/Y7McFHxJJS/vVaWqnh9LodFxvq3Myura+kZ2M7e1vbO7Z+8f1HSUKA4ej2SkGj7TIEUIHgqU0IgVsMCXUPeHVxO/fg9Kiyi8w1EM7YD1Q9ETnKGROvZRoYXwgIhpBdhwdAsVb1zo2Hmn6ExBl4k7J3kyR7Vjf7W6EU8CCJFLpnXTdWJsp0yh4BLGuVaiIWZ8yPrQNDRkAeh2Or1+TE+N0qW9SJkKkU7V3xMpC7QeBb7pDBgO9KI3Ef/zmgn2LtqpCOMEIeSzRb1EUozoJAraFQo4ypEhjCthbqV8wBTjaALLmRDcxZeXSa1UdJ2ie1PKly/ncWTJMTkhZ8Ql56RMrkmVeISTR/JMXsmb9WS9WO/Wx6w1Y81nDskfWJ8/MbaVCA==</latexit>

<latexit sha1_base64="zg87HjZaVb2t26PTROgBsmYCOSE=">AAAB/XicbVDJTgJBEO3BDXEbl5uXjmDiicxw0SPRiwcOaBwgAUJ6mgI69CzprjHihPgrXjxojFf/w5t/Y7McFHxJJS/vVaWqnh9LodFxvq3Myura+kZ2M7e1vbO7Z+8f1HSUKA4ej2SkGj7TIEUIHgqU0IgVsMCXUPeHVxO/fg9Kiyi8w1EM7YD1Q9ETnKGROvZRoYXwgIhpBdhwdAsVb1zo2Hmn6ExBl4k7J3kyR7Vjf7W6EU8CCJFLpnXTdWJsp0yh4BLGuVaiIWZ8yPrQNDRkAeh2Or1+TE+N0qW9SJkKkU7V3xMpC7QeBb7pDBgO9KI3Ef/zmgn2LtqpCOMEIeSzRb1EUozoJAraFQo4ypEhjCthbqV8wBTjaALLmRDcxZeXSa1UdJ2ie1PKly/ncWTJMTkhZ8Ql56RMrkmVeISTR/JMXsmb9WS9WO/Wx6w1Y81nDskfWJ8/MbaVCA==</latexit>

(.2)

BatchNorm3d

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

<latexit sha1_base64="eyOhP7ThCAL5t9FwpBi8BbVjXhw=">AAAB/3icbVA9SwNBEN2LXzF+nQo2NoeJYBXuYqFliI2VRDAfkBxhb2+TLNnbPXbnxHCm8K/YWChi69+w89+4Sa7QxAcDj/dmmJkXxJxpcN1vK7eyura+kd8sbG3v7O7Z+wdNLRNFaINILlU7wJpyJmgDGHDajhXFUcBpKxhdTf3WPVWaSXEH45j6ER4I1mcEg5F69lGpC/QBANIaBjK8kSo6Dyelnl10y+4MzjLxMlJEGeo9+6sbSpJEVADhWOuO58bgp1gBI5xOCt1E0xiTER7QjqECR1T76ez+iXNqlNDpS2VKgDNTf0+kONJ6HAWmM8Iw1IveVPzP6yTQv/RTJuIEqCDzRf2EOyCdaRhOyBQlwMeGYKKYudUhQ6wwARNZwYTgLb68TJqVsueWvdtKsVrL4sijY3SCzpCHLlAVXaM6aiCCHtEzekVv1pP1Yr1bH/PWnJXNHKI/sD5/ALmKleM=</latexit>

conv

3D

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

<latexit sha1_base64="m/h48J0g7vOiQF4P6ZOaYqLm/1U=">AAAB/XicbVC7TsNAEDyHVwgv8+hoLBIkqsgOBZQRUFAGiTykxLLOl3Nyyvls3a0jgmXxKzQUIETLf9DxN1wSF5Aw0kqjmV3t7vgxZwps+9sorKyurW8UN0tb2zu7e+b+QUtFiSS0SSIeyY6PFeVM0CYw4LQTS4pDn9O2P7qe+u0xlYpF4h4mMXVDPBAsYASDljzzqNID+gAAKYnEOPPS85us4pllu2rPYC0TJydllKPhmV+9fkSSkAogHCvVdewY3BRLYITTrNRLFI0xGeEB7WoqcEiVm86uz6xTrfStIJK6BFgz9fdEikOlJqGvO0MMQ7XoTcX/vG4CwaWbMhEnQAWZLwoSbkFkTaOw+kxSAnyiCSaS6VstMsQSE9CBlXQIzuLLy6RVqzp21bmrletXeRxFdIxO0Bly0AWqo1vUQE1E0CN6Rq/ozXgyXox342PeWjDymUP0B8bnD4XHlT4=</latexit>

(in=256, out=1,

kernel=(1,1,3),

stride=(2, 2, 2),

padding=(0, 0, 0))

Sigmoid

<latexit sha1_base64="P7EFOzqbPdmxYWJoFiKL9/lbIi0=">AAAB+3icbVBNT8JAEN3iF+JXxaOXjWDiibRc9Ej04hGjgAk0ZLvdwobtttmdGkjTv+LFg8Z49Y9489+4QA8KvmSSl/dmMjPPTwTX4DjfVmljc2t7p7xb2ds/ODyyj6tdHaeKsg6NRawefaKZ4JJ1gINgj4liJPIF6/mTm7nfe2JK81g+wCxhXkRGkoecEjDS0K7WB8CmAJDd81EU8yCvD+2a03AWwOvELUgNFWgP7a9BENM0YhKoIFr3XScBLyMKOBUsrwxSzRJCJ2TE+oZKEjHtZYvbc3xulACHsTIlAS/U3xMZibSeRb7pjAiM9ao3F//z+imEV17GZZICk3S5KEwFhhjPg8ABV4yCmBlCqOLmVkzHRBEKJq6KCcFdfXmddJsN12m4d81a67qIo4xO0Rm6QC66RC10i9qogyiaomf0it6s3Hqx3q2PZWvJKmZO0B9Ynz8UuJRy</latexit>

<latexit sha1_base64="P7EFOzqbPdmxYWJoFiKL9/lbIi0=">AAAB+3icbVBNT8JAEN3iF+JXxaOXjWDiibRc9Ej04hGjgAk0ZLvdwobtttmdGkjTv+LFg8Z49Y9489+4QA8KvmSSl/dmMjPPTwTX4DjfVmljc2t7p7xb2ds/ODyyj6tdHaeKsg6NRawefaKZ4JJ1gINgj4liJPIF6/mTm7nfe2JK81g+wCxhXkRGkoecEjDS0K7WB8CmAJDd81EU8yCvD+2a03AWwOvELUgNFWgP7a9BENM0YhKoIFr3XScBLyMKOBUsrwxSzRJCJ2TE+oZKEjHtZYvbc3xulACHsTIlAS/U3xMZibSeRb7pjAiM9ao3F//z+imEV17GZZICk3S5KEwFhhjPg8ABV4yCmBlCqOLmVkzHRBEKJq6KCcFdfXmddJsN12m4d81a67qIo4xO0Rm6QC66RC10i9qogyiaomf0it6s3Hqx3q2PZWvJKmZO0B9Ynz8UuJRy</latexit>

<latexit sha1_base64="P7EFOzqbPdmxYWJoFiKL9/lbIi0=">AAAB+3icbVBNT8JAEN3iF+JXxaOXjWDiibRc9Ej04hGjgAk0ZLvdwobtttmdGkjTv+LFg8Z49Y9489+4QA8KvmSSl/dmMjPPTwTX4DjfVmljc2t7p7xb2ds/ODyyj6tdHaeKsg6NRawefaKZ4JJ1gINgj4liJPIF6/mTm7nfe2JK81g+wCxhXkRGkoecEjDS0K7WB8CmAJDd81EU8yCvD+2a03AWwOvELUgNFWgP7a9BENM0YhKoIFr3XScBLyMKOBUsrwxSzRJCJ2TE+oZKEjHtZYvbc3xulACHsTIlAS/U3xMZibSeRb7pjAiM9ao3F//z+imEV17GZZICk3S5KEwFhhjPg8ABV4yCmBlCqOLmVkzHRBEKJq6KCcFdfXmddJsN12m4d81a67qIo4xO0Rm6QC66RC10i9qogyiaomf0it6s3Hqx3q2PZWvJKmZO0B9Ynz8UuJRy</latexit>

<latexit sha1_base64="P7EFOzqbPdmxYWJoFiKL9/lbIi0=">AAAB+3icbVBNT8JAEN3iF+JXxaOXjWDiibRc9Ej04hGjgAk0ZLvdwobtttmdGkjTv+LFg8Z49Y9489+4QA8KvmSSl/dmMjPPTwTX4DjfVmljc2t7p7xb2ds/ODyyj6tdHaeKsg6NRawefaKZ4JJ1gINgj4liJPIF6/mTm7nfe2JK81g+wCxhXkRGkoecEjDS0K7WB8CmAJDd81EU8yCvD+2a03AWwOvELUgNFWgP7a9BENM0YhKoIFr3XScBLyMKOBUsrwxSzRJCJ2TE+oZKEjHtZYvbc3xulACHsTIlAS/U3xMZibSeRb7pjAiM9ao3F//z+imEV17GZZICk3S5KEwFhhjPg8ABV4yCmBlCqOLmVkzHRBEKJq6KCcFdfXmddJsN12m4d81a67qIo4xO0Rm6QC66RC10i9qogyiaomf0it6s3Hqx3q2PZWvJKmZO0B9Ynz8UuJRy</latexit>

Figure 5: Detailed architecture of the temporal discrimina-

tor D

t

.

two encoders: one encoder $E_v$ (parameterized by $\theta_v$) for the videos $x^i$ and $\hat{x}^j_{k-1}$, and another encoder $E_d$ for the target depth. As motivated above, we use one decoder $D_k$ to synthesize the video. To account for temporal consistency and encourage smooth video synthesis, we add a video-based discriminator to the adversarial training. We use a discriminator architecture similar to the one proposed in (Tulyakov et al., 2018) (see Fig. 5). The total loss to train the model is given by:

$$\mathcal{L}_k = \mathcal{L}_r + 0.01\,\mathcal{L}_a. \tag{5}$$
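As a rough check on the layer settings listed for Fig. 5 and on Eq. 5, the sketch below computes the output size of each listed 3D convolution with the standard formula floor((n + 2p - k) / s) + 1 (no dilation) and evaluates the weighted loss. The input size (8 frames of 32×32 features) and the loss values are illustrative assumptions, not values from the paper, and the helper names are hypothetical.

```python
# Sketch only: shape arithmetic for the conv3D layers listed for Fig. 5
# and the weighted sum of Eq. 5. Input size and loss values are assumed.

def conv_out(size, kernel, stride, padding):
    """Output length of one convolution axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def conv3d_out(shape, kernel, stride, padding):
    """Apply conv_out independently to the (T, H, W) axes."""
    return tuple(conv_out(n, k, s, p)
                 for n, k, s, p in zip(shape, kernel, stride, padding))


# (out_channels, kernel, stride, padding) as read from the figure.
LAYERS = [
    (128, (4, 4, 4), (1, 2, 2), (0, 1, 1)),
    (256, (4, 4, 4), (1, 2, 2), (1, 0, 1)),
    (1,   (1, 1, 3), (2, 2, 2), (0, 0, 0)),
]


def trace_shapes(shape):
    """Return the (channels, T, H, W) shape after each listed layer."""
    shapes = []
    for channels, kernel, stride, padding in LAYERS:
        shape = conv3d_out(shape, kernel, stride, padding)
        shapes.append((channels,) + shape)
    return shapes


def total_loss(l_r, l_a, adv_weight=0.01):
    """Eq. 5: reconstruction loss plus down-weighted adversarial loss."""
    return l_r + adv_weight * l_a


if __name__ == "__main__":
    for s in trace_shapes((8, 32, 32)):  # assumed (T, H, W) of the 64-channel input
        print(s)
    print(total_loss(0.25, 0.7))         # assumed loss values
```

The small adversarial weight means the reconstruction term dominates the objective, with the temporal discriminator acting as a regulariser on the synthesized video.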

4 EXPERIMENTS

This section describes the evaluation setup along with quantitative and qualitative results against state-of-the-art methods to assess the effectiveness of our proposed model. We also present a detailed ablation study to justify the architectural choices that we make in the model.

4.1 Experimental Setup

Dataset. We use NTU RGB+D (Shahroudy et al., 2016), a synchronised multi-view action recognition dataset where each action is captured from 3 views. We use the following evaluation measures:

• Frame-Based Evaluation. We assess the per-frame synthesis quality of each model using Structural Similarity (SSIM) and measure the error sensitivity using Peak Signal-to-Noise Ratio (PSNR) (Zhou Wang et al., 2004).

• Fréchet Video Distance (FVD) (Unterthiner et al., 2019). FVD is an extension of the Fréchet Inception Distance (FID) (Heusel et al., 2017) that is specifically designed for videos. It quantifies the quality and the diversity of samples generated from a parametric model with respect to the ground truth.

• Pose Estimation. We use the pose estimator proposed in (Raaj et al., 2019) and report the $L_2$ error and the Percentage of Correct Keypoints (PCK) (Yang and Ramanan, 2013), along with the precision, recall, and F1 of the estimations.
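To make two of the measures above concrete, the following minimal sketch computes PSNR from the mean squared error and PCK as the fraction of keypoints within a distance threshold. The flat-list pixel representation, the 8-bit peak value, and the absolute pixel threshold for PCK are assumptions made for illustration; the paper's evaluation code may normalise differently.

```python
import math


def psnr(pred, target, max_val=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_val ** 2 / mse)


def pck(pred_kpts, gt_kpts, threshold):
    """Fraction of keypoints whose Euclidean error is at most `threshold`."""
    if len(pred_kpts) != len(gt_kpts) or not gt_kpts:
        raise ValueError("keypoint lists must be non-empty and of equal length")
    hits = sum(
        1
        for (px, py), (gx, gy) in zip(pred_kpts, gt_kpts)
        if math.hypot(px - gx, py - gy) <= threshold
    )
    return hits / len(gt_kpts)


if __name__ == "__main__":
    print(psnr([10, 20, 30], [12, 18, 33]))
    print(pck([(0, 0), (3, 4)], [(0, 0), (0, 0)], threshold=4))
```

A masked variant such as M-PSNR could be obtained by restricting the pixel lists to the foreground mask before calling `psnr`, although the exact masking used in the paper is not specified in this section.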

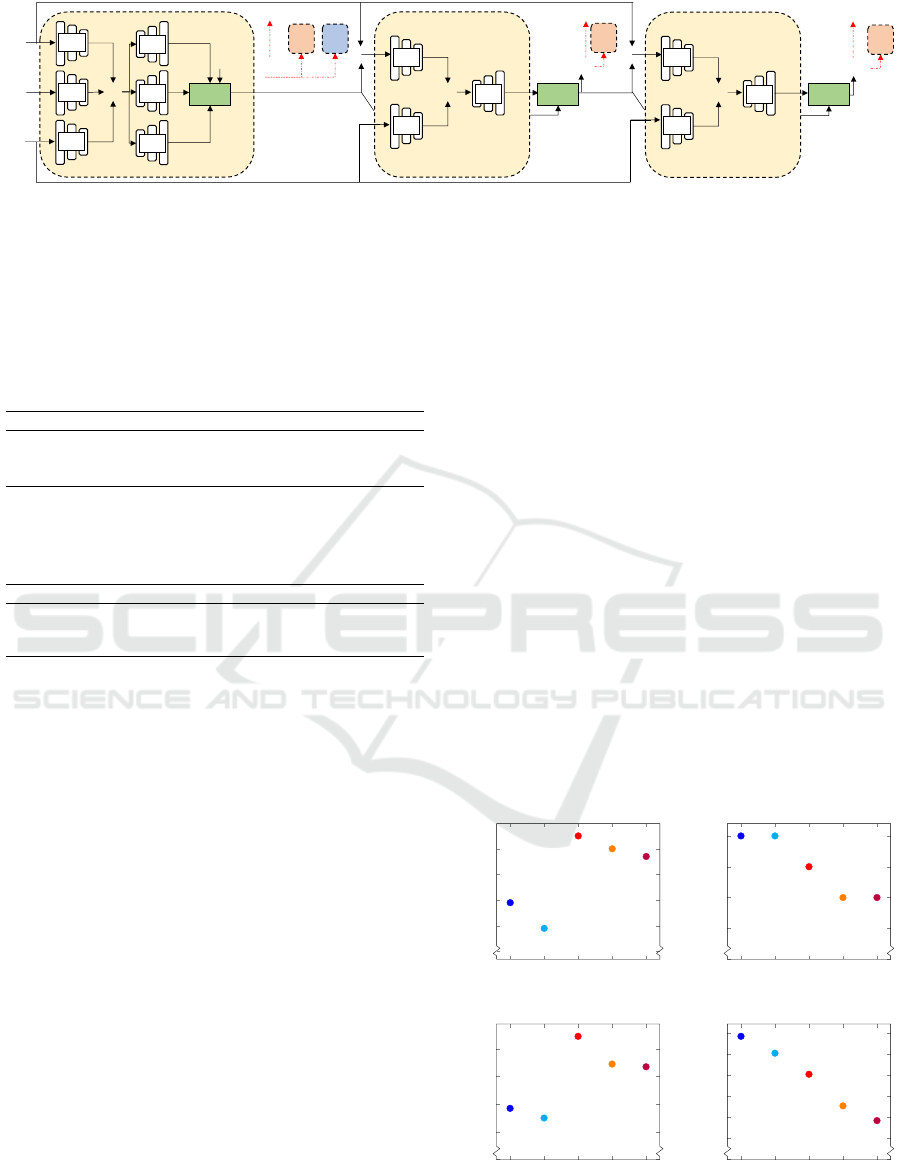

Implementation Details. We train PSR-Net with $K = 3$ layers (see Fig. 6). We use a 6-layer ResNet (Zhu et al., 2017) with 3D convolutions as the base for each of the three generators. We train all the model parameters with the Adam optimizer (Kingma and Ba, 2015) with the hyperparameters $(\alpha_1, \alpha_2) = (0.5, 0.999)$. The learning rate is set to $2 \cdot 10^{-5}$.
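For reference, a single Adam update with these settings can be sketched on a scalar parameter as below. The sketch assumes that $(\alpha_1, \alpha_2)$ denote Adam's two exponential decay rates (usually written $\beta_1, \beta_2$) and uses $\epsilon = 10^{-8}$, which is not stated in the text; the function name and state layout are hypothetical.

```python
import math


def adam_step(param, grad, state, lr=2e-5, beta1=0.5, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter.

    `state` holds the step count `t` and the moment estimates `m`, `v`.
    eps is an assumed value; the decay rates and lr follow the text.
    """
    state["t"] += 1
    # Exponential moving averages of the gradient and its square.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    # Bias correction for the zero initialisation of m and v.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


if __name__ == "__main__":
    state = {"t": 0, "m": 0.0, "v": 0.0}
    w = 1.0
    for g in (0.3, 0.1, -0.2):  # made-up gradients
        w = adam_step(w, g, state)
        print(w)
```

With a first decay rate of 0.5 the first-moment average forgets old gradients quickly, a setting commonly used when training GANs.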

4.2 Reconstruction Layer

The focus of the reconstruction layer is to accurately reconstruct the foreground (i.e. motion) in the target-view. We investigate different model architectures and highlight the effectiveness of the computed visibility mask $M_{i\to j}$ in the foreground reconstruction (see Fig. 4). We present three models (Context-based, Common head, Separate heads) that take $x^i$, $\hat{x}^j_0$, and $d^j$ and process them with separate encoders. Then, the feature representations from the encoders are concatenated and fed to a convolution layer with the following configurations:

Figure 6: Proposed PSR-Net model. Detailed architecture of the proposed PSR-Net with $K = 3$ layers. KEY – $\Phi$: spatiotemporal perceptual network (Lakhal et al., 2019); $E$ (resp. $D$): encoder (resp. decoder) network, we omit the weights $\theta$ for clarity; →: information flow; ⊕: concatenation. Diagram labels: inputs $x^i$, $\hat{x}^j_0$, $d^j$; mask $M_{i\to j}$; $\hat{x}^j_v$, $\hat{x}^j_o$, $\hat{x}^j_b$; generators $G^{k=1}_{i\to j}$, $G^{k=2}_{i\to j}$, $G^{k=3}_{i\to j}$ with outputs $\hat{x}^j_1$, $\hat{x}^j_2$, $\hat{x}^j_3$; discriminator $D_t$; losses $\mathcal{L}_r$, $\mathcal{L}_a$, $\mathcal{L}_p$.

Table 1: Results of the model ablation of the first layer $G^{k=1}_{i\to j}$. We investigate the synthesis performance with the different decoding strategies. The focus of this network is foreground synthesis; hence, background synthesis is not a primary model evaluation measure. KEY – M: mask.

| Decoders | SSIM | M-SSIM | PSNR | M-PSNR |
|---|---|---|---|---|
| Context-based | .811 | .981 | 23.32 | 32.31 |
| Common head | .795 | .980 | 23.16 | 32.21 |
| Separate heads | .801 | .981 | 23.13 | 32.38 |

Table 2: Model ablation of the second stage $G^{k=2}_{i\to j}$. We investigate different encoding strategies. KEY – M: mask.

| Encoders | SSIM | M-SSIM | PSNR | M-PSNR |
|---|---|---|---|---|
| Separate encoders | .801 | .978 | 23.12 | 31.57 |
| Concat all | .791 | .978 | 22.97 | 31.64 |
| Concat videos | .811 | .978 | 23.25 | 31.41 |

• Context-Based: we only synthesize the foreground and the background with the dedicated decoders (see Fig. 4(a)).

• Common Head: in the decoder of the foreground branch, we output two convolution heads that correspond to the visible and occluded parts from the input view (see Fig. 4(b)).

• Separate Heads: we exploit the information available in the visibility mask $M_{i\to j}$ and output three branches with separate weights to synthesize the background, visible, and occluded parts, respectively (see Fig. 4(c)).

Results show that the proposed model using separate decoders (heads) with the map $M_{i\to j}$ produces better foreground synthesis than the two other models, with the highest M-PSNR (32.38) and an M-SSIM of .981 (see Tab. 1). Therefore, we keep this model as the default for $G^{k=1}_{i\to j}$.

4.3 Recurrence Layer

The aim of the following ablations is to set a base model for the recurrence layers, i.e. $G^{k}_{i\to j}$, $k \geq 2$. We compare the following strategies to show the importance of the input encoding at this stage.

• Separate Encoders. Each input $x^i$, $\hat{x}^j_{k-1}$, and $d^j$ has a separate encoder: $E_x$, $E_{k-1}$, and $E_d$, respectively.

• Concat All. We concatenate all the inputs $x^i$, $\hat{x}^j_{k-1}$, $d^j$ and then apply one encoder, $E_v$.

• Concat Videos. The videos $x^i$ and $\hat{x}^j_{k-1}$ are concatenated and encoded with a single encoder, $E_v$, and the depth is encoded with $E_d$.
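The three strategies differ only in how the inputs are grouped before encoding, which can be summarised by the number of input channels each encoder receives. The sketch below assumes 3-channel RGB videos and a 1-channel depth map with concatenation along the channel axis; these channel counts and the function name are assumptions, not details given in the text.

```python
VIDEO_CH = 3  # assumed RGB channels for x^i and x_hat^j_{k-1}
DEPTH_CH = 1  # assumed single-channel depth d^j


def encoder_inputs(strategy):
    """Return {encoder name: input channels} for one encoding strategy."""
    if strategy == "separate":
        # One encoder per input.
        return {"E_x": VIDEO_CH, "E_k-1": VIDEO_CH, "E_d": DEPTH_CH}
    if strategy == "concat_all":
        # Both videos and the depth share a single encoder.
        return {"E_v": 2 * VIDEO_CH + DEPTH_CH}
    if strategy == "concat_videos":
        # Videos share an encoder; depth keeps its own.
        return {"E_v": 2 * VIDEO_CH, "E_d": DEPTH_CH}
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    for name in ("separate", "concat_all", "concat_videos"):
        print(name, encoder_inputs(name))
```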

Tab. 2 reports the scores using the three strategies above. Results show that combining the videos $x^i$ and $\hat{x}^j_{k-1}$ with a single encoder provides better refinement, with an SSIM of .811 and a PSNR of 23.25. The model using three encoders does not generalize as well as the one that uses $E_v$ and $E_d$. This is because, at this stage, the model uses the prediction from the reconstruction layer (it does not learn from scratch).

To avoid an extensive hyper-parameter search, we fix the weighting factor $\alpha_k$ at each stage $k \in \{1, \dots, K\}$ for all layers. Fig. 7 reports the sensitivity of the model $G^{k=1}_{i \to j}$ with different values $\alpha \in \{.1, .3, .5, .7, .9\}$. We can see that $\alpha = .5$ obtains the best value. This suggests that the synthesis of the previous layer is as important as the synthesis in the $k$-th layer. We also note the effectiveness of the proposed weighting scheme compared to conventional synthesis (without weighting): the concat videos model produces an overall SSIM of .811, whereas adding the weighting factor as defined in Eq. 1 with $\alpha_k = .5$ produces an overall SSIM of .835. For the rest of the paper, we fix concat videos with $\alpha_k = .5$ as the default model for $G^{l=k}_{i \to j}$, $k \geq 2$.

Figure 7: Sensitivity analysis with different $\alpha$ values using the recurrence layer $G^{k=2}_{i \to j}$ (higher score is better). Panels: (a) SSIM, (b) M-SSIM, (c) PSNR, (d) M-PSNR, each plotted against $\alpha \in \{.1, .3, .5, .7, .9\}$. KEY – M: mask.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications
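To make the role of the weighting factor concrete, the sketch below blends each stage's output with the previous prediction. Eq. 1 is not reproduced in this section, so the convex combination used here is an assumed form suggested by the remark that $\alpha = .5$ makes both syntheses equally important; `progressive_refine` and the callable `refiners` are hypothetical names standing in for the recurrence networks.

```python
import numpy as np


def progressive_refine(x_hat_1, refiners, alpha=0.5):
    """Apply refinement stages k = 2..K with a fixed weighting factor.

    x_hat_1: first-stage prediction (any NumPy array).
    refiners: list of callables, one per stage k >= 2, each mapping the
              previous prediction to a new candidate (stand-ins for G^k).
    alpha: weight of the current stage; (1 - alpha) weights the previous one.
    Assumed update: x_hat_k = alpha * G^k(x_hat_{k-1}) + (1 - alpha) * x_hat_{k-1}.
    """
    x_hat = np.asarray(x_hat_1, dtype=np.float64)
    history = [x_hat]
    for refine in refiners:
        candidate = refine(x_hat)
        x_hat = alpha * candidate + (1.0 - alpha) * x_hat
        history.append(x_hat)
    return history
```

Under this assumed form, $\alpha = 1$ discards the previous prediction entirely (the unweighted case), while smaller values keep more of the earlier synthesis.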

4.4 State-of-the-Art Comparison

Model Performance. After each layer, the model enhances the synthesis reconstruction (see Tab. 3). This is because the network $G^{k}_{i \to j}$, $k \geq 2$, focuses on correcting misclassified pixels and refining the videos. However, we notice a slight drop in the foreground synthesis (from .981 to .979 M-SSIM), as we no longer use the visibility mask $M_{i \to j}$ with the reconstruction loss. The reason is that we assume the role of the recurrence layer is to refine the synthesis, not to produce the temporal motion in the novel view. Fig. 10 depicts two examples showing the idea of progressively refining the results and adding high-frequency details; e.g. in the first row, after the novel-view video is synthesized, the recurrence networks ($G^{k=2}_{i \to j}$ and $G^{k=3}_{i \to j}$) focus on correcting the lighting of the room from a wrong prediction in $G^{k=1}_{i \to j}$.

We further investigate challenging synthesis cases

to better understand the model behaviour. Fig. 9

shows that, in some cases, the synthesis quality de-

creases from its initial prediction. We can see that in

these cases, the network fails to rectify the artifacts.

Model Comparison. Tab. 3 compares the proposed PSR-Net with $K = 3$ against the video-based methods VDNet (Lakhal et al., 2019) and GTNet (Lakhal et al., 2020) and the frame-based methods PG$^2$ (Ma et al., 2017) and PATN (Zhu et al., 2019). We clearly see the advantage of the video-based models over the frame-based ones. This is because the video-based models enforce explicit temporal consistency across the synthesis. Also, the proposed model outperforms the other video-based models with an SSIM score of .841 and a PSNR score of 24.01. Fig. 8 highlights the per-view SSIM scores of each model. We can see that the performance gradually improves after each iteration. We also note that the overall scores obtained are consistent across views and that the proposed method performs well in the challenging case of view 3 → 2. Since we explicitly avoid using a context-based approach after the first stage, it is expected that GTNet produces better foreground synthesis than $G^{k=3}_{i \to j}$ with an M-SSIM (resp. M-PSNR)

Figure 8: Per-view SSIM comparison between the proposed PSR-Net and VDNet (Lakhal et al., 2019) and GTNet (Lakhal et al., 2020). Panels: (a) state-of-the-art comparison (VDNet, GTNet, PSR-Net); (b) PSR-Net performance with $K = 3$ layers ($G^{k=1}_{i \to j}$, $G^{k=2}_{i \to j}$, $G^{k=3}_{i \to j}$). KEY – i → j: synthesis from view i to j.

.400

.436

.678

.485