Predicting Eye Gaze Location on Websites

Ciheng Zhang¹, Decky Aspandi² and Steffen Staab²,³

¹ Institute of Industrial Automation and Software Engineering, University of Stuttgart, Stuttgart, Germany

² Institute for Parallel and Distributed Systems, University of Stuttgart, Stuttgart, Germany

³ Web and Internet Science, University of Southampton, Southampton, U.K.

Keywords:

Eye-Gaze Saliency, Image Translation, Visual Attention.

Abstract:

The World Wide Web, with websites and webpages as its main interface, facilitates the dissemination of important information. Hence it is crucial to optimize webpage design for better user interaction, which is primarily done by

analyzing users’ behavior, especially users’ eye-gaze locations on the webpage. However, gathering these data

is still considered to be labor and time intensive. In this work, we enable the development of automatic eye-gaze estimation given webpage screenshots as input by curating a unified dataset that consists of webpage screenshots, eye-gaze heatmaps and website layout information in the form of image and text masks. Our curated dataset allows us to propose a deep learning-based model that leverages both webpage screenshot and

content information (image and text spatial location), which are then combined through attention mechanism

for effective eye-gaze prediction. In our experiment, we show benefits of careful fine-tuning using our unified

dataset to improve accuracy of eye-gaze predictions. We further observe the capability of our model to focus

on targeted areas (images and text) to achieve accurate eye-gaze area predictions. Finally, comparison with

other alternatives shows state-of-the-art result of our approach, establishing a benchmark for webpage based

eye-gaze prediction task.

1 INTRODUCTION

Analysis of user behavior during interaction with a website (and its webpages) is important for evaluating the website's overall quality. This information can be used to create more optimized webpages for better interactions; as such, there is a need to characterize these behaviors. Some of the characteristics include users' tendency to focus on certain

areas (e.g. upper left corner) during browsing (Shen

and Zhao, 2014). Other important characteristics can

be defined from users’ eye-gaze data, which normally

represents visual attention of the users during website

interaction. This data is usually acquired from hu-

man pupil-retinal location and movement relative to

the object of interest that allows one to pinpoint exact

webpage area where users focus during their interactions and its relation to the overall webpage structure. Although this information is crucial to create a more optimized website for better interactions, acquiring these eye-gaze data from every user

during their browsing duration is difficult, given the

complexity of the acquisition setting. Thus there is

currently a need for an automatic approach to predict

these eye-gaze locations given an observation of the

webpages.

In the computer vision field, the saliency prediction task, which allows estimation of people's attention given an image, has been extensively investigated. The main task is to estimate a saliency map, which highlights the first location (or region) where observers' eyes focus, with photographs of natural scenes (Zhang et al., 2018; Kroner et al., 2020) commonly used as input. Several

methods have been developed so far to solve this task,

including machine learning (Hou and Zhang, 2008;

Li et al., 2012) and recently deep learning based ap-

proaches (Kroner et al., 2020) with quite high accu-

racy achieved. With this progress, there is an oppor-

tunity to adopt these automatic, visual based saliency

predictors for eye-gaze predictions task, by represent-

ing input observation in a form of webpage screen-

shot. This enables adaptation of task objective for

eye-gaze heatmap prediction, in lieu of saliency map,

with both predicted maps representing area where

most people (or in our case users) attend.

The challenges however are differences between

saliency and eye-gaze prediction tasks in terms of

content, structure and layout of input images. For

Zhang, C., Aspandi, D. and Staab, S.

Predicting Eye Gaze Location on Websites.

DOI: 10.5220/0011747300003417

In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2023) - Volume 4: VISAPP, pages 121-132

ISBN: 978-989-758-634-7; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)


instance, there is not any concept of depth on web-

page screenshot as opposed to natural images, an as-

pect that is highly utilized for general visual saliency

estimation. In addition, high contrast and varied col-

ored areas on natural image are commonly regarded

as saliency area, which may not be the case for web-

page screenshots, given that user eye-gaze locations

are highly dependent on the type of interactions dur-

ing website browsing. This problem can be recti-

fied by the use of a data-driven approach (Pan and

Yang, 2010), which can be made feasible through

fine-tuning using a specialized dataset on a particu-

lar task. However, this attempt is currently hampered

by the lack of such dataset.

In this work, we curate a generalized dataset of

eye-gaze prediction from webpage screenshots to en-

able effective training for machine and deep learning

approaches. Using this dataset, we propose a deep

learning based method that incorporates image and

textual locations of webpage through mask modali-

ties and combines them with attention fusion mecha-

nism. We then evaluate the impact of transfer learn-

ing, including comparison with other alternatives, to

establish a benchmark for this task. Specifically, the

contributions of this work are:

1. The establishment of a unified benchmark dataset

for eye-gaze detection from webpage screenshots,

derived from website and user interactions data.

2. A novel deep learning-based and multi-modal

eye-gaze detector with internal attention that

leverages characteristics of input contents and im-

portance of each stream of modality for accurate

eye-gaze predictions.

3. Benchmark results of automatic eye-gaze location

estimators and our state-of-the-art results for eye-

gaze detection task given webpage screenshot in-

puts.

2 RELATED WORK

An early example of work analyzing website content is that of Shen and Zhao (Shen and Zhao, 2014), where three types of webpages are analyzed:

Text-based, Pictorial-based, and Mixed (combination

of Text and Pictorial) websites. The authors show that

some attention characteristics of users during their

interactions with webpage exist, with main finding

that users usually pay more attention to some relevant

parts of websites (such as left-top corner of a website)

and their tendency to focus on areas where large im-

ages are present. Furthermore, on the websites from

‘Text’ category, their preference to focus on certain

parts of websites (middle left and bottom left regions)

is perceived. Lastly, they propose multi-kernel ma-

chine learning inferences for eye-gaze heatmap pre-

dictions (which represent visual attention of users) for

their developed website eye-gaze dataset of Fixations

in Webpage Images dataset (FiWI).

In the computer vision field, the task of locating (or predicting) users' attention on natural images is commonly called saliency prediction. This task is commonly solved by utilising machine learning techniques, given their automation capability. An early example of this method is Incremental Coding Length (ICL) (Hou and Zhang, 2008), which aims to predict the activation of saliency locations by measuring the perspective entropy gain of each input feature (several image patches) as a linear combination of sparse coding basis functions. Another algorithm, Context-Aware Saliency Detection (CASD), capitalises on the concept of dominant objects as additional context to improve its prediction (Goferman et al., 2011). Furthermore, Houx et al. (Houx et al., 2012) propose an approach that aims to solve the figure-ground separation problem for prediction. They use a binary and holistic image descriptor, the Image Signature, which is defined as the sign function of the Discrete Cosine Transform (DCT) of an image, as additional input. Subsequently, the Hypercomplex Fourier Transform (HFT) (Li et al., 2012) is used to transform the input image to the frequency domain to estimate the saliency map. One relevant work utilizes a deep learning based method, given the accurate estimations of such methods and their ability to leverage huge (and increasingly available) datasets. This approach is based on an Encoder and Decoder structure with a pre-trained VGG network to predict saliency maps (Kroner et al., 2020). Finally, in recent years, several researchers have extended the saliency approach to work with image and depth input. One example is the work of Zhou et al. (Zhou et al., 2021), which uses a Hierarchical Multimodal Fusion Network to process both RGB (Red, Green and Blue) colour images and depth maps (one channel) as an additional input modality, which results in more accurate gaze map predictions.

Even though all of the described methods work for general visual saliency predictions, their capability to predict users' eye-gaze locations on webpages has not yet been investigated, given the lack of available datasets. Thus in this work, we propose to create a specialized webpage based eye-gaze prediction dataset to allow for the development of automatic eye-gaze prediction from webpage screenshot inputs, and then utilize it to develop our deep learning based eye-gaze location predictor.

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications


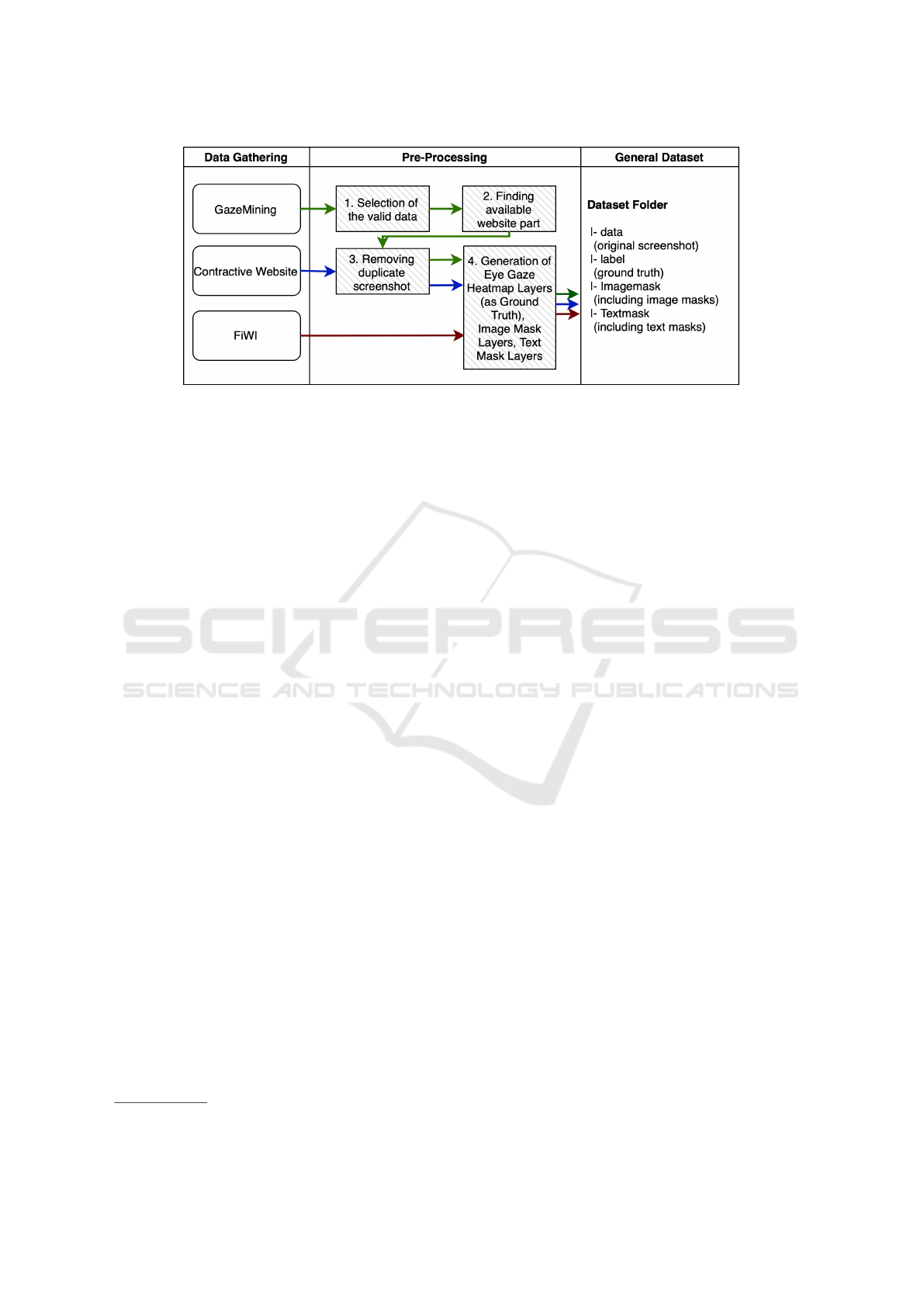

Figure 1: The flow of dataset generation.

3 METHODOLOGY

3.1 Dataset Gathering and Processing

In this work, we search, process and curate a gen-

eralized eye-gaze dataset from available datasets in

literature. Here, we focus on three publicly avail-

able user-website interaction datasets (where eye-

gaze and webpage screenshots are present): GazeMi-

ning (Menges, 2020), Contrastive Website (Aspandi

et al., 2022a), and Fixations in Webpage Images

(FiWI) dataset (Shen and Zhao, 2014). In this sec-

tion, we provide a short description of datasets, and

proceed with description of pre-processing algorithm

that we conduct to produce our unified dataset. The processed dataset is available¹ upon request.

• GazeMining is a dataset of video and interac-

tion recordings on dynamic webpages (Menges,

2020). In this work, the authors focused on web-

sites which contain constantly changing scenes

(thus dynamic). The data was collected in March 2019 from four participants who interacted with

12 different websites.

• Contrastive Website dataset is a recent website interaction-based dataset, where participants were asked to visit two sets of websites (four websites each) and perform the respective tasks (flight planning, route search, news searching and online shopping), resulting in more than 160 sessions (Aspandi et al., 2022a).

• FiWI dataset (Shen and Zhao, 2014) is a dataset that focuses on website-based saliency prediction. However, it is small in terms of webpage screenshot availability (only 149 images) compared to the two previous datasets, which prevents its use for larger scale evaluations (this is especially true for a deep learning model). Therefore the FiWI dataset is mostly (and commonly) utilized for comparative evaluation with other models, but not for model training.

¹ https://doi.org/10.18419/darus-3251

Figure 1 shows the flow of our dataset curation,

data pre-processing and the results of the generalized

dataset. The first step of pre-processing is the elimination of empty screenshots (which exist in small quantities in the GazeMining dataset), i.e. entries where no screenshot is present, which can occur due to rapid sampling during acquisition. Secondly, due to the dynamic nature of the observed websites, the webpages' static parts (which consist of all rendered webpage elements that remain unchanged during interaction time) are removed (or blackened) in the original datasets, which requires us to find and collect only the recorded screenshot, thus removing irrelevant observations (black parts). Thirdly,

we remove the duplicate webpage screenshots of both

datasets for efficiency and generate respective loca-

tions of Image and Text as independent layers (called

Image and Text Mask, which we will detail in Sec-

tion 3.2.1). Lastly, eye-gaze heatmap layers are gen-

erated (as ground truth) with respect to duration of

each observed eye-gaze. This pipeline is applied to

all datasets, with the exception of Contrastive Web-

site, where first and second steps are skipped, and for

FiWI where only last step is necessary. In the end, our

unified dataset includes four sub-folders (data, label,

Imagemask, TextMask) with a total of 3119 screen-

shot examples (1546 samples or 49.6% from GazeMi-

ning, 1424 instances or 45.7% from Contrastive Web-

site dataset and 149 examples or 4.7% from FiWI)

along with the associated ground truths.
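The last pre-processing step (heatmaps generated with respect to the duration of each observed eye-gaze) can be sketched as follows. This is only an illustrative sketch, not the authors' pipeline: the function name `gaze_heatmap`, the `(x, y, duration)` fixation format, the Gaussian spread `sigma` and the peak normalization are all assumptions introduced here.

```python
import numpy as np

def gaze_heatmap(fixations, height, width, sigma=25.0):
    """Build a duration-weighted heatmap from (x, y, duration) fixations.

    Hypothetical helper: the Gaussian kernel and sigma are assumptions,
    the source only states that heatmaps depend on gaze duration.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    heatmap = np.zeros((height, width), dtype=np.float64)
    for x, y, duration in fixations:
        # Each fixation adds a Gaussian blob scaled by how long it lasted.
        dist_sq = (xs - x) ** 2 + (ys - y) ** 2
        heatmap += duration * np.exp(-dist_sq / (2.0 * sigma ** 2))
    peak = heatmap.max()
    # Scale to [0, 1] so heatmaps of different screenshots are comparable.
    return heatmap / peak if peak > 0 else heatmap

# Two fixations: a short one at (x=10, y=20) and a long one at (x=40, y=30).
heatmap = gaze_heatmap([(10, 20, 0.5), (40, 30, 2.0)], 64, 64, sigma=3.0)
```

In this toy call the longer fixation dominates the map, which is the behaviour the duration weighting is meant to capture.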


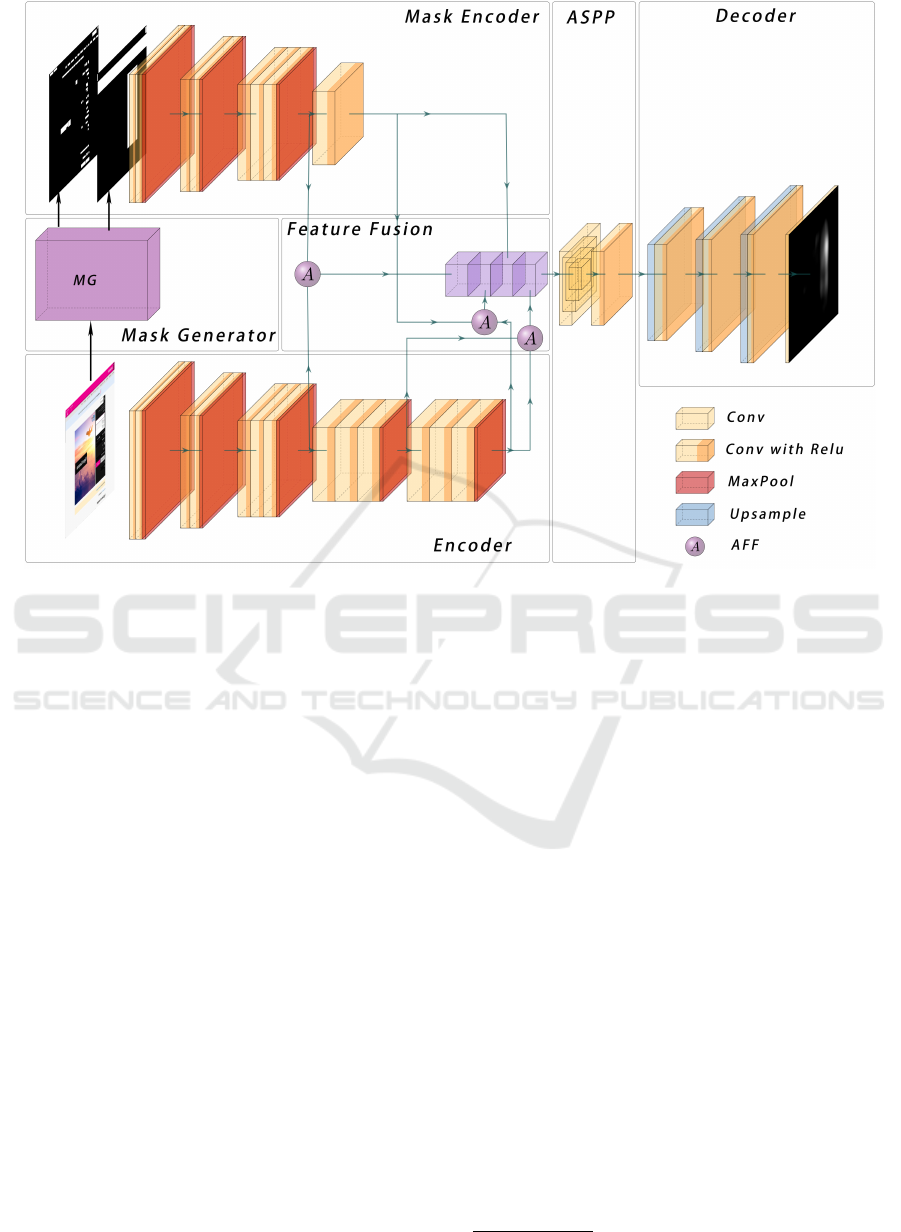

Figure 2: Structure of Multi Mask Input Attentional Network.

3.2 Multi Mask Input Attentional

Network (MMIAN) for Eye Gaze

Prediction

Our pre-processed datasets allow us to propose a deep learning based model that benefits from a sizable number of observations (Rodriguez-Diaz et al., 2021). Here we propose a Multi Mask Input Attentional Network (MMIAN) that aims to predict eye-gaze locations given a webpage screenshot as input. Specifically, given input webpage screenshot images $X_{i..n}$, with $X$ as a 2D matrix of a webpage screenshot image and $n$ as the batch size, MMIAN estimates eye-gaze locations $\hat{Y}_{i..n}$, where each $\hat{Y}$ is a 2D matrix of an eye-gaze heatmap, with each cell value representing the probability of an eye-gaze location. The structure of MMIAN follows an encoder-decoder scheme that is

inspired by Multi-scale Information Network (MSI-

Model) (Kroner et al., 2020) consisting of several

modules: input and Mask Encoder, Mask Generator,

Attentional Feature Fusion, Atrous Spatial Pyramid

Pooling (ASPP) Module and Decoder. In this work,

we further propose several important modifications:

1. Incorporation of several input masks to include

webpage content information of textual and im-

ages spatial location.

2. Addition of attention mechanisms for effective fu-

sion of input modalities.

Overall architecture of MMIAN can be seen in

Figure 2. Specifically, an input webpage screenshot

image is passed into mask generator to produce an

image mask and text mask. Then both masks are con-

catenated and fed into Mask Encoder to extract rele-

vant features, while input image is simultaneously fed

into input Encoder. Feature Fusion module then fuses

extracted mask features and input features with sev-

eral attentional modules and combines them in a con-

joint block. This feature block is then fed into ASPP

part to enlarge field of views, capturing different reso-

lution views from the input, thus producing richer fea-

ture representations. These features are then passed

through series of up-sampling layers (Decoder) cre-

ating an eye-gaze saliency map as a final result. The

implementation code of our model is available on our

repository².

3.2.1 Input Masks Generator

It has been observed that webpage layout (which in

principle is arrangement of images and texts) is an

important aspect of webpage (Shen and Zhao, 2014).

To benefit from this information, we propose to in-

² https://github.com/ZackCHZhang/WebToGaze


(a) Input (b) Text Mask (c) Image Mask

Figure 3: One case of input and text mask generation. Figure (a) is an example of webpage screenshot, Figure (b) and (c) are

the generated text mask and image mask respectively.

corporate spatial locations of both image and text on

websites with mask representations: image and text

masks. These masks are represented in the form of matrices, where each cell is activated in the presence of an image or text, respectively, in the webpage screenshot.

• Image Mask Generation: For image location recognition, we use the method from Xie et al. (Xie et al., 2020), which produces bounding-box locations of the images within an input screenshot as a binary map (i.e. the map value is set to 1 where an image is present, otherwise 0).

• Text Mask Generation: We use one of the available optical character recognition (OCR) methods, Efficient and Accurate Scene Text detection (EAST) (Zhou et al., 2017), to locate the text in the webpage screenshot input. Similar to the Image Mask, this process generates the corresponding Text Mask in binary map format.
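As a rough illustration of the binary map format described in the two items above, the sketch below rasterizes bounding boxes into a 0/1 mask and stacks the image and text masks into one array. It assumes the detectors have already returned boxes as `(x_min, y_min, x_max, y_max)` pixel tuples; the helper name `boxes_to_mask`, that box format and the toy coordinates are hypothetical and are not taken from the cited detectors.

```python
import numpy as np

def boxes_to_mask(boxes, height, width):
    """Rasterize (x_min, y_min, x_max, y_max) boxes into a binary mask."""
    mask = np.zeros((height, width), dtype=np.uint8)
    for x_min, y_min, x_max, y_max in boxes:
        # Clip each box to the screenshot bounds before filling it.
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(width, x_max), min(height, y_max)
        if x1 > x0 and y1 > y0:
            mask[y0:y1, x0:x1] = 1  # 1 where content is present, else 0
    return mask

# Toy 8x10 "screenshot" with one image box and one text box.
image_mask = boxes_to_mask([(2, 1, 6, 4)], height=8, width=10)
text_mask = boxes_to_mask([(0, 5, 10, 7)], height=8, width=10)

# Concatenate both masks along a channel axis before encoding.
mask_input = np.stack([image_mask, text_mask], axis=0)  # shape (2, 8, 10)
```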

One example of generated masks from our curated dataset can be seen in Figure 3. We can see that both masks contain quite accurate locations of both Image and Text on an input webpage screenshot. Some mild imperfections occur (i.e. some buttons are recognized as images, certain images are not fully detected due to their small size, and some text within images is included in the text mask), but in general the locations of both image and text on input webpage screenshots are properly recognized. These masks are then concatenated to be fed into the Mask Encoder part.

3.2.2 Input and Mask Encoder

The Encoder part is a series of Convolutional Neural Network layers that aims to extract visual features from the input matrix, which is the original web screenshot image for the Input Encoder and the concatenated generated masks for the Mask Encoder. Both Encoders are based on the VGG16 (Simonyan and Zisserman, 2014) architecture with the last fully connected layer removed, and a further reduction of convolution layers (five convolutional layers with ReLU and two max-pooling layers) applied for the Mask Encoder. The Input and Mask Encoders then produce Screenshot Features and MaskFeatures, respectively, to be combined through the Multi-Modal Attentional Fusion module.

3.2.3 Multi-Modal Attentional Fusion

We employ multi-modal inputs (that consists of web-

page screenshot and generated masks) to benefit from

additional stream of information (Zhou et al., 2021;

Aspandi et al., 2020). Moreover, we introduce atten-

tional fusion mechanism for more effective fusion of

these modalities, as opposed to simple fusion opera-

tions of summation or concatenation (Aspandi et al.,

2022b), and further solving the problems of inconsis-

tent input semantics and scales (Dai et al., 2021). An

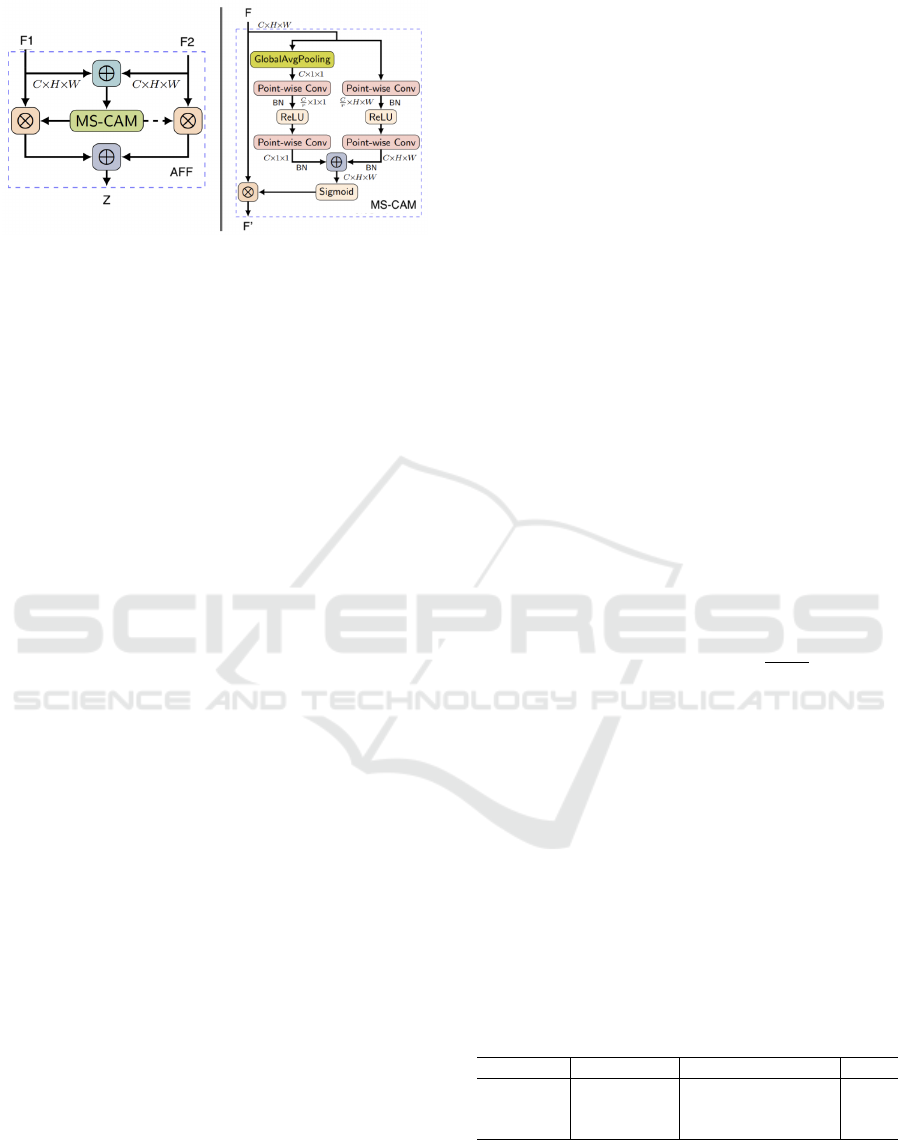

example of attentional feature fusion block (AFF) can

be seen in Figure 4 where it receives two streams of

inputs (denoted as F1 and F2) and initially fuses these

input features through matrix addition (denoted as

L

). The result from addition of internal Multi-scale

Channel Attention module (that aggregates channel

context by a series of point-wise convolutions) and

a sigmoid function generates a fusion weight. This

fusion weight and its another counterpart (where it

is negated by one) are multiplied (denoted as

N

) by

each of original inputs respectively, and then added

together (

L

symbol) to produce their weighted av-

erage as fused feature of Z. This fusion process is

applied to the received MaskFeatures and Screenshot

Features three times, to allow for observation of dif-

ferent scales of respective features (these operations

are marked as circled A in Figure 2). The resultants

of the fused features are then concatenated to a con-

joint block for subsequent pipelines.
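The fusion rule described above (a sigmoid weight computed from the summed inputs, then a weighted average of the two streams) can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions rather than the released implementation: batch normalization is omitted, the channel attention is reduced to one local and one global branch of two point-wise convolutions each, and all names, shapes and random weights are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def pointwise_conv(x, weight):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def ms_cam(x, local_w, global_w):
    """Simplified multi-scale channel attention (assumed form, no batch norm)."""
    # Local branch keeps the spatial resolution.
    local = pointwise_conv(np.maximum(pointwise_conv(x, local_w[0]), 0.0), local_w[1])
    # Global branch works on the spatially averaged features, shape (C, 1, 1).
    pooled = x.mean(axis=(1, 2), keepdims=True)
    glob = pointwise_conv(np.maximum(pointwise_conv(pooled, global_w[0]), 0.0), global_w[1])
    return local + glob  # the global term broadcasts over H and W

def aff(f1, f2, local_w, global_w):
    """Fuse two feature maps: Z = w * F1 + (1 - w) * F2."""
    weight = sigmoid(ms_cam(f1 + f2, local_w, global_w))
    return weight * f1 + (1.0 - weight) * f2

rng = np.random.default_rng(0)
channels, reduction = 8, 4
local_w = (rng.normal(size=(channels // reduction, channels)),
           rng.normal(size=(channels, channels // reduction)))
global_w = (rng.normal(size=(channels // reduction, channels)),
            rng.normal(size=(channels, channels // reduction)))
f1 = rng.normal(size=(channels, 16, 16))  # e.g. Screenshot Features
f2 = rng.normal(size=(channels, 16, 16))  # e.g. MaskFeatures
z = aff(f1, f2, local_w, global_w)
```

Because the weight lies in (0, 1), every element of the fused output sits between the corresponding elements of the two inputs, which is what makes the result a weighted average rather than a plain sum.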

3.2.4 Atrous Spatial Pyramid Pooling Module

(ASPP)

ASPP module is made by superimposing different

atrous convolutions (Chen et al., 2017) filters to ob-

tain features from larger receptive fields. This is

beneficial given that it is common for webpage layouts to be modularized into a variety of different sub-layouts. Increasing the receptive fields (or field-of-view) of the internal convolutional layers (where the results from several filters are combined together) thus enables us to capture such layout configurations. In our ASPP module, we use dilation rates of 4, 8, and 12, with the number of 1 × 1 filters set to 256 to ensure kernel size compatibility. This module is applied to the conjoint block input, and the subsequent features are obtained for the decoding operation.

Figure 4: Left is Attentional Feature Fusion block, right is Multi-scale Channel Attention module (Dai et al., 2021).
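To make the effect of the dilation rates concrete, the short sketch below computes the effective extent of a single dilated kernel using the standard relation k_eff = k + (k - 1)(d - 1). The 3 × 3 base kernel is an assumption made here for illustration, since the text does not state the size of the atrous kernels.

```python
def effective_kernel_size(kernel_size, dilation):
    """Spatial extent covered by one dilated (atrous) convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)

# Dilation rates from the text; the 3x3 base kernel is an assumption.
extents = [effective_kernel_size(3, rate) for rate in (4, 8, 12)]
print(extents)  # [9, 17, 25]
```

Under this assumption, the three branches would see neighbourhoods of 9, 17 and 25 pixels per side in the feature map without adding parameters, which is how stacking them covers sub-layouts of different sizes.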

3.2.5 Output Decoder

Decoder part consists of a series of convolution layers

with up-sampling to decode input features, generating

final prediction of eye-gaze heatmap location (in the

form of a 2D tensor and in binary mode). Specifically, a number of up-sampling blocks are used, each consisting of a bilinear scaling operation (which doubles the spatial size of its input tensor) and a subsequent convolutional layer with kernel size 3 × 3. With

this module, we provide the features generated from

ASPP as input features and final eye-gaze heatmap

predictions are obtained. Finally, we convert binary

heatmap to gray-scale format, to conform to the origi-

nal scale of eye-gaze heatmap from ground-truth map.

3.3 Training Procedure

To evaluate the impact of training models on webpage screenshot based eye-gaze prediction datasets, we first perform the training process using an original saliency prediction model, Kroner's Model (MSI-Model) (Kroner et al., 2020). This model has been pre-trained on a general visual saliency dataset (SALICON) (Jiang et al., 2015); we call this pre-trained model P-MSI, and it serves as the baseline. Given this pre-trained model, we perform fine-tuning using our pre-processed datasets (GazeMining and Contrastive Website dataset), producing FT-MSI-GM and FT-MSI-CW respectively, and evaluate the observed accuracy gains. We fine-tune the model instead of training from scratch to maximize the potential accuracy obtained: we empirically found the overall accuracy of the latter to be substantially lower.

Additionally, we propose to evaluate the impact

of training using a combined dataset (i.e. we com-

bine examples of both of GazeMining and Contrastive

Website) by further training MSI-Model with this

merged dataset, that we name as FT-MSI-CMB. The

training is conducted until convergence and no fur-

ther accuracy improvement is perceived. Afterward,

we proceed to training stage of our proposed model of

MMIAN by first transferring Encoder and Decoder’s

weight from best performing models of the previous

step, and initialized weights of both Mask Encoder

and ASPP by zero-mean uniform distribution.

All of the training is conducted by minimizing the Kullback-Leibler divergence (KLD) loss function as shown in Equation 1, with $Y$ indicating the ground truth, $\hat{Y}$ the prediction, and $\varepsilon$ a regularization constant that guarantees the denominator is not zero. With this loss, the estimation of saliency maps can be regarded as a probability distribution prediction task, as formulated by Jetley et al. (Jetley et al., 2016). The output of the estimator is normalized to be non-negative, with the KLD value used to measure the level of difference between predictions and ground truth. Finally, the Adam optimizer (Kingma and Ba, 2014) with a learning rate of $10^{-4}$ is used for overall optimization.

$$D_{KL}(\hat{Y} \,\|\, Y) = \sum_{i} Y_i \ln\left(\varepsilon + \frac{Y_i}{\varepsilon + \hat{Y}_i}\right) \tag{1}$$
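A minimal NumPy sketch of Equation 1 is given below. It assumes that both maps are first rescaled to sum to one so that they can be read as probability distributions, and it uses an illustrative value for the regularization constant; neither the rescaling step nor the value of `eps` is specified in the text, and the function name `kld_loss` is hypothetical.

```python
import numpy as np

def kld_loss(pred, target, eps=1e-7):
    """KL divergence between a predicted and a ground-truth heatmap (Equation 1)."""
    # Assumption: rescale both non-negative maps to sum to one.
    pred = pred / (pred.sum() + eps)
    target = target / (target.sum() + eps)
    # eps keeps the denominator non-zero and the logarithm finite.
    return float(np.sum(target * np.log(eps + target / (eps + pred))))

uniform = np.full((4, 4), 1.0)
peaked = np.zeros((4, 4))
peaked[0, 0] = 1.0

loss_same = kld_loss(uniform, uniform)  # close to zero
loss_diff = kld_loss(uniform, peaked)   # clearly positive
```

The loss is close to zero when the prediction matches the ground truth and grows as probability mass is placed away from the fixated regions.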

3.4 Experiment Setting

3.4.1 Dataset Experiment Setting

In this experiment, all three pre-processed datasets (cf. Section 3.1) are utilized, with the numbers of training, validation and test instances shown in Table 1.

Specifically, both GazeMining and Contrastive Web-

site datasets are divided with 60%, 20% and 20% of

samples for training, validation set, and test set re-

spectively. Whereas for FiWI dataset (Shen and Zhao,

2014), all samples (of 149 images) are used for test-

ing.

Table 1: Training, validation and test set for each dataset.

| Splits | GazeMining | Contrastive Website | FiWI |
|---|---|---|---|
| Training | 928 | 868 | - |
| Validation | 309 | 278 | - |
| Test | 309 | 278 | 149 |

3.4.2 Quantitative Metrics

We use three quantitative metrics, Area under Receiver Operating Characteristic curve (AUC), Normalized Scanpath Saliency (NSS), and Pearson's Correlation Coefficient (CC), to judge the quality of the models' predictions.

• NSS is commonly used for general saliency prediction tasks as a direct correspondence measure between predicted saliency maps and ground truth, and is computed as the average normalized saliency at fixated locations (Peters et al., 2005). Furthermore, NSS is sensitive to false positives, relative differences in saliency across the evaluated image, and general monotonic transformations (Bylinskii et al., 2018). With $Y^B$ as a binary map of true fixation locations, $N$ indicating the total pixel number and $i$ indexing each pixel, the NSS value can be calculated as shown below:

$$\mathrm{NSS}(\hat{Y}, Y^B) = \frac{1}{N} \sum_{i} \hat{Y}_i \times Y^B_i \tag{2}$$

• AUC-J evaluates predicted eye-gaze heatmaps as

a classification task, where each prediction pixel

is evaluated through a binary classification setting.

Here, a certain threshold value is used to decide

whether it is deemed to be correctly predicted as

eye-gaze locations, thus emphasizing frequency

of true positive. We use the method described by Judd et al. (Judd et al., 2009) to select all required thresholds.

• CC metric aims to evaluate level of linear rela-

tionship between two input distributions. In eye-

gaze location prediction task, both generated eye-

gaze location map and ground truth are treated as

random variables (Le Meur et al., 2007), and level

value is calculated with both of these map inputs

following equation 3. Thus, with operator σ as

covariance matrix, CC value can be calculated as:

$$\mathrm{CC}(\hat{Y}, Y) = \frac{\sigma(\hat{Y}, Y)}{\sigma(Y) \times \sigma(\hat{Y})} \tag{3}$$
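The NSS and CC metrics above can be sketched in a few lines of NumPy. The NSS version follows the common convention of z-scoring the prediction and averaging over the fixated pixels, so its normalizing constant is the number of fixations; this is an assumption that may differ from the exact normalization used in the experiments. The function names and the small stabilizing constants are likewise illustrative.

```python
import numpy as np

def nss(pred, fixations):
    """Normalized Scanpath Saliency: mean z-scored saliency at fixated pixels."""
    z = (pred - pred.mean()) / (pred.std() + 1e-12)
    return float(z[fixations.astype(bool)].mean())

def cc(pred, target):
    """Pearson's correlation coefficient between two maps (Equation 3)."""
    p = pred - pred.mean()
    t = target - target.mean()
    # Covariance divided by the product of the standard deviations.
    return float((p * t).sum() / (np.sqrt((p ** 2).sum() * (t ** 2).sum()) + 1e-12))

pred = np.zeros((4, 4))
pred[1, 2] = 1.0
fixations = np.zeros((4, 4), dtype=np.uint8)
fixations[1, 2] = 1

nss_value = nss(pred, fixations)          # prediction peaks exactly at the fixation
cc_value = cc(pred, 2.0 * pred + 3.0)     # linearly related maps
```

A prediction that peaks at the fixated pixel yields a large positive NSS, and CC is unaffected by positive linear rescaling of either map.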

4 EXPERIMENT RESULT

In this section, we first present results of both base-

line and our proposed approach using part of our pre-

processed dataset (GazeMining and Contrastive Web-

site), as outlined in Section 3. Then, we provide a

comparison of best results of our approach with al-

ternative saliency prediction models using full set of

our pre-processed dataset, establishing a benchmark

for eye-gaze prediction task given webpage screen-

shot inputs.

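Table 2: Results of fine-tuned models on both preprocessed GazeMining and Contrastive Website datasets.

| No. | Models | GazeMining NSS | GazeMining AUC-J | GazeMining CC | Contrastive Website NSS | Contrastive Website AUC-J | Contrastive Website CC |
|---|---|---|---|---|---|---|---|
| 1. | P-MSI | 1.037 | 0.699 | 0.130 | 0.839 | 0.683 | 0.109 |
| 2. | FT-MSI-GM | 1.398 | 0.753 | 0.170 | 0.717 | 0.651 | 0.093 |
| 3. | FT-MSI-CW | 0.695 | 0.655 | 0.081 | 2.364 | 0.777 | 0.277 |
| 4. | FT-MSI-CMB | 1.447 | 0.752 | 0.170 | 2.269 | 0.789 | 0.269 |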

4.1 Impact of Fine-Tuning Using

Independent and Combined Dataset

Table 2 shows results of four alternative models that

are trained using different datasets. Here we can see

that pre-trained MSI (P-MSI) model performs worse

in comparison to other models, which suggests its in-

ability to generalize to eye-gaze saliency prediction

task. We can further observe that when fine-tuning is

applied, improvement from original P-MSI is notice-

able, especially when it is tested on similar dataset

used for fine-tuning. This can be seen with higher

values achieved on all metrics of FT-MSI-GM and FT-

MSI-CW compared to P-MSI on GazeMining dataset

and Contrastive Website dataset respectively.

When the model is trained using the combined dataset, however, the accuracy improvement is mostly lower. This is indicated by the generally lower or equal quantitative values achieved by FT-MSI-CMB as opposed to its counterparts (FT-MSI-GM and FT-MSI-CW) that are trained separately, with the exceptions of NSS on GazeMining (1.447 versus 1.398) and AUC-J on Contrastive Website (0.789 versus 0.777) in Table 2. This phenomenon may come from the difficulty of the estimator in learning from these two datasets, which are quite distinct, and also from the nature of the tasks executed by the users on each dataset.

Figure 5 presents visual prediction examples

for baseline model (P-MSI) and best performing

model (FT-MSI-CW) for Contrastive Website Dataset

(where the example is originated). The screenshot ex-

ample is a part of route search task, where user has to

enter vehicle information to estimate fuel consump-

tion. Thus it is natural for users to focus on dialog

box (displayed in the center of the webpage screen-

shot) resulting in users’ fixations being located within

this area.

By evaluating the predicted eye-gaze heatmaps of the baseline model P-MSI, we can see that even though it manages to predict parts of the ground-truth (eye-gaze) locations, it also falsely predicts other less relevant locations as eye-gaze locations (i.e. advertisement part). These results

come from its tendency to detect high color contrast

as area of interest, which is common in natural image

datasets (SALICON (Jiang et al., 2015)). However,

this leads to higher occurrences of false positive, thus

reducing its accuracy. Our fine-tuned model however,

manages to reduce existing inaccuracies by absorbing


(a) Input+GT (b) P-MSI (c) FT-MSI-CW

Figure 5: Comparison between pre-trained results from P-MSI (baseline) and FT-MSI-CW (Fine-tuned). Figure (a) shows

webpage screenshot with ground truth (eye-gaze heatmap). Figure (b) and (c) show predictions from P-MSI and FT-MSI-CW.

the characteristics of dataset during fine-tuning,

adapting model to this specific eye-gaze prediction

task. In this example, we see that the predictions of

fine-tuned models are more precise, especially on

dialog box and they exclude some of high contrast

area that are normally recognized as saliency area

(e.g. advertisement locations).

From the experimental result, it can be concluded

that saliency detection model trained on natural image

dataset does not work well for direct application to

web screenshot scenario. This can be mitigated by performing careful fine-tuning using only a dedicated dataset. Given this result, we use FT-MSI-GM and FT-MSI-CW as the base for MMIAN optimization, and for comparison in the next section.

4.2 Impact of Multi-Modal Masks and

Attention-Based Fusion

Table 3 presents results of our proposed MMIAN

model and best results of fine-tuned MSI-Model from

previous section. From this result, it can be seen that MMIAN produces higher values across the quantitative metrics overall, outperforming the results from the fine-tuned MSI-Model. The gains on all of these metrics together suggest that the estimation results of MMIAN are more accurate compared to the best FT-MSI results (from FT-MSI-GM and FT-MSI-CW), with improvements on both true positive (as judged by AUC-J) and false positive instances (as evaluated by NSS and CC scores).

To analyze the impact of the image and text masks and of the attention mechanism more comprehensively, we first show the locations of the detected images and texts on the webpage screenshot input, which provides a semantic explanation of their relevance for MMIAN to produce accurate predictions. We then implement Gradient-weighted Class Activation Mapping (Grad-CAM) (Selvaraju et al., 2017) by propagating the product of the error value and the gradient to each convolutional layer. This lets us inspect the activations of the convolutional kernels with respect to the image input, which indicate the parts of the webpage screenshot that are most prominent for the prediction. Figure 6 shows an example of the original webpage screenshot input with image and text locations (drawn as bounding boxes), the associated eye-gaze ground truth, the generated Grad-CAM heatmaps, and the predictions of the fine-tuned MSI model and MMIAN.
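The core Grad-CAM computation for a single convolutional layer can be sketched as follows. This is the standard formulation of Selvaraju et al. (2017), shown with NumPy arrays standing in for the activations and gradients that a deep learning framework would provide; the error-weighted variant described above may differ in detail, and the function name and toy inputs are assumptions.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one layer.

    activations, gradients: arrays of shape (K, H, W), i.e. K feature maps
    and the gradient of the target score with respect to each of them.
    """
    weights = gradients.mean(axis=(1, 2))                    # (K,) pooled gradients
    cam = np.tensordot(weights, activations, axes=(0, 0))    # (H, W) weighted sum
    cam = np.maximum(cam, 0.0)                               # keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam                   # scale to [0, 1]


# Toy input: channel 0 supports the prediction, channel 1 opposes it.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.array([np.full((2, 2), 2.0), np.full((2, 2), -1.0)])
cam = grad_cam(acts, grads)
```

The resulting map has the spatial size of the chosen layer and would be upsampled to the screenshot resolution before being overlaid as a heatmap.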

In Figure 6, we first see that the prediction of MMIAN is more accurate than that of FT-MSI, with larger and correctly identified eye-gaze areas. This is especially noticeable in areas where both image and text are present. For example, there are areas containing a button (which is recognized as an image) and a product description, which in this case are indeed the locations the user looks at. This higher accuracy can be attributed to the use of image and text masks during learning, which are combined through the attention mechanism to guide the learning process to prioritize these areas. The effect is also apparent in the Grad-CAM heatmaps of FT-MSI and MMIAN: those of MMIAN are more concentrated on image and text areas simultaneously (indicating where the model’s attention is) than those produced by FT-MSI. Lastly, we notice more frequent activations in the Grad-CAM heatmaps of MMIAN than in those of FT-MSI, which may suggest that the larger perceptual field of view of MMIAN helps it produce more accurate eye-gaze estimates overall.
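One simple way to quantify how strongly a heatmap concentrates on image and text areas is the fraction of its total mass that falls inside the corresponding mask. The sketch below is only an illustration of that idea and is not a measure reported in this work; the function names and the (x0, y0, x1, y1) pixel box format are assumptions.

```python
import numpy as np


def boxes_to_mask(shape, boxes):
    """Rasterize (x0, y0, x1, y1) boxes into a boolean mask of the given (H, W)."""
    mask = np.zeros(shape, dtype=bool)
    for x0, y0, x1, y1 in boxes:
        mask[y0:y1, x0:x1] = True
    return mask


def mass_inside(heatmap, mask):
    """Fraction of the heatmap's total value located inside the mask."""
    total = heatmap.sum()
    return float(heatmap[mask].sum() / total) if total > 0 else 0.0


# Toy example: a uniform 4x4 heatmap and one 2x2 box.
heatmap = np.ones((4, 4))
mask = boxes_to_mask(heatmap.shape, [(0, 0, 2, 2)])
ratio = mass_inside(heatmap, mask)
```

A Grad-CAM map that is concentrated on detected text and image regions would yield a ratio well above the area fraction covered by the mask.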

4.3 State-of-the-Art Comparison

We compare the best predictions of our approach against seven other available general visual saliency models:

1. Context-Aware Saliency Detection (CASD) (Goferman et al., 2011).

2. Discrete Cosine Transform (DCTS) (Houx et al., 2012).

3. Hypercomplex Fourier Transform (HFT) (Li et al., 2012).

4. Incremental Coding Length (ICL) (Hou and Zhang, 2008).

VISAPP 2023 - 18th International Conference on Computer Vision Theory and Applications


Figure 6: Example of prediction results of MMIAN and the fine-tuned MSI model (FT-MSI). The first column, (a) and (d), shows the webpage screenshot with outlined text (light green box) and image (purple box) areas, along with the eye-gaze heatmap ground truth. The second column, (b) and (e), shows the predicted results from the FT-MSI and MMIAN models, and the third column, (c) and (f), shows the respective Grad-CAM heatmaps.

5. RARE (Riche et al., 2012).

6. SeoMilanfar (Seo and Milanfar, 2009).

7. Spectral Residual (SR) (Hou and Zhang, 2007).

We additionally include one specialized eye-gaze location estimator, Multiple Kernel Learning (MKL) (Shen and Zhao, 2014).

Table 4 shows the results of all evaluated approaches. MMIAN achieves state-of-the-art results and outperforms the alternatives, including the MKL eye-gaze predictor, by a large margin on the FiWI dataset (note that no training is involved in the FiWI evaluation). This result is mainly due to the large differences in task characteristics between general visual saliency prediction (for which the first seven models are trained) and webpage screenshot based eye-gaze estimation (in which MKL and MMIAN are specialized). It highlights the inability of visual saliency based models to generalize to this specific task. Furthermore, our approach performs better than MKL on FiWI, demonstrating the effectiveness of our overall approach for the eye-gaze estimation task.

Table 3: Quantitative results of both fine-tuned MSI models and MMIAN model.

No.  Models   GazeMining             Contrastive Website
              NSS    AUC-J  CC       NSS    AUC-J  CC
1.   FT-MSI   1.398  0.753  0.170    2.364  0.777  0.277
2.   MMIAN    1.579  0.764  0.184    2.487  0.787  0.292
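To put the differences in Table 3 into perspective, the relative gain of MMIAN over FT-MSI can be computed per metric. The snippet below is a small worked calculation on the values copied from the table; the dictionary layout and the helper name are assumptions made for this example.

```python
# Values copied from Table 3; tuple order is (NSS, AUC-J, CC).
table3 = {
    "GazeMining": {"FT-MSI": (1.398, 0.753, 0.170), "MMIAN": (1.579, 0.764, 0.184)},
    "Contrastive Website": {"FT-MSI": (2.364, 0.777, 0.277), "MMIAN": (2.487, 0.787, 0.292)},
}
metrics = ("NSS", "AUC-J", "CC")


def relative_gain(base, new):
    """Relative improvement of `new` over `base`, in percent."""
    return 100.0 * (new - base) / base


gains = {
    dataset: {
        m: relative_gain(b, n)
        for m, b, n in zip(metrics, rows["FT-MSI"], rows["MMIAN"])
    }
    for dataset, rows in table3.items()
}

for dataset, per_metric in gains.items():
    summary = ", ".join(f"{m}: {g:+.1f}%" for m, g in per_metric.items())
    print(f"{dataset}: {summary}")
```

On these numbers the NSS gain is roughly 13% on GazeMining and roughly 5% on Contrastive Website, while the AUC-J gains are between 1% and 2%.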

Figure 7 shows example predictions of MMIAN and the four other best performing models on FiWI (excluding MKL, for which no implementation is available). Most of the alternative models mark a large area of the webpage screenshot as potential eye-gaze locations. This leads to largely false positive predictions, given the mismatch between the predictions and the actual eye-gaze locations of the users of these webpages (i.e. most areas are falsely identified as eye-gaze locations). In contrast, our approach produces more precise estimates, as seen in the more refined (and accurate) predicted eye-gaze areas, which explains the large margin in NSS between our model and the others in Table 4. This is mainly due to the tendency of visual saliency models to focus on the pure appearance of the webpage screenshot (i.e. high-contrast images), whereas MMIAN has been conditioned on the specific characteristics of our pre-processed eye-gaze dataset (which inherently contains the eye-gaze characteristics of users for a given webpage screenshot). Notably, our model also correctly predicts eye-gaze locations on relevant areas, such as text (third row), images (fourth row) and their combinations (first, second and fifth rows), in comparison with the other approaches. Our model’s predictions are consistently more accurate than the alternatives, demonstrating the effectiveness of our approach.
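The link between wide predicted areas and low NSS can be illustrated with a toy example. The sketch below compares a focused and a diffuse binary prediction that both cover the same fixations; the map size, region sizes and function name are assumptions chosen purely for illustration.

```python
import numpy as np


def nss(saliency, fixations):
    """Mean z-scored saliency at fixated pixels."""
    z = (saliency - saliency.mean()) / (saliency.std() + 1e-12)
    return float(z[fixations].mean())


h, w = 20, 20
fixations = np.zeros((h, w), dtype=bool)
fixations[9:11, 9:11] = True          # four fixated pixels in the center

focused = np.zeros((h, w))
focused[8:12, 8:12] = 1.0             # 16-pixel region around the fixations

diffuse = np.zeros((h, w))
diffuse[2:18, 2:18] = 1.0             # 256-pixel region, mostly false positives

nss_focused = nss(focused, fixations)
nss_diffuse = nss(diffuse, fixations)
print(nss_focused, nss_diffuse)
```

Both maps cover every fixation, yet the diffuse map scores far lower because z-scoring raises the mean and spreads the variance over many non-fixated pixels.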


Table 4: Comparison of existing saliency predictors evaluated on test sets of our full pre-processed dataset. The boldface indicates best results, red color implies second-best results, and third-best results are marked by blue coloured fonts.

No.  Models                                 GazeMining             Contrastive Website    FiWI
                                            NSS    AUC    CC       NSS    AUC    CC       NSS    AUC    CC
1.   CASD (Goferman et al., 2011)           0.567  0.653  0.064    0.419  0.614  0.053    0.680  0.732  0.233
2.   DCTS (Houx et al., 2012)               0.479  0.618  0.053    0.256  0.552  0.035    0.541  0.671  0.195
3.   HFT (Li et al., 2012)                  0.707  0.644  0.088    0.534  0.593  0.067    0.740  0.737  0.251
4.   ICL (Hou and Zhang, 2008)              0.485  0.518  0.057    0.192  0.490  0.030    0.444  0.618  0.162
5.   RARE (Riche et al., 2012)              0.632  0.653  0.072    0.382  0.589  0.052    0.850  0.758  0.280
6.   SeoMilanfar (Seo and Milanfar, 2009)   0.393  0.584  0.038    0.350  0.571  0.044    0.445  0.651  0.163
7.   SR (Hou and Zhang, 2007)               0.566  0.639  0.062    0.510  0.612  0.062    0.635  0.714  0.216
8.   MKL (Shen and Zhao, 2014)              -      -      -        -      -      -        1.200  0.702  0.382
9.   MMIAN (proposed)                       1.579  0.764  0.184    2.487  0.787  0.292    1.385  0.786  0.397
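The FiWI columns of Table 4 can also be ranked programmatically. The snippet below sorts the models by their FiWI NSS score, using values copied from the table; ranking by NSS alone is an assumption made for this example, since the table does not define a single overall ranking.

```python
# FiWI NSS values copied from Table 4.
fiwi_nss = {
    "CASD": 0.680, "DCTS": 0.541, "HFT": 0.740, "ICL": 0.444, "RARE": 0.850,
    "SeoMilanfar": 0.445, "SR": 0.635, "MKL": 1.200, "MMIAN": 1.385,
}

# Models ordered from best to worst NSS on FiWI.
ranking = sorted(fiwi_nss, key=fiwi_nss.get, reverse=True)

# Best four general saliency baselines, leaving out the two webpage-specific models.
top_baselines = [m for m in ranking if m not in ("MMIAN", "MKL")][:4]

print(ranking)
print(top_baselines)
```

Under this criterion the two webpage-specific models lead the ranking, followed by RARE, HFT, CASD and SR.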

Figure 7: Five examples of screenshot inputs from the FiWI dataset (with the respective ground-truth eye-gaze heatmaps overlaid) and predictions from five saliency predictors (including ours).

5 CONCLUSION

In this work, we enable the development of automatic eye-gaze estimation from webpage screenshot inputs, to support the improvement of webpage layout and hence of the respective user interaction. We do this by developing a unified eye-gaze dataset from three available website and user interaction-based datasets: GazeMining, Contrastive Website and FiWI. We pre-process each dataset to produce the data needed for the eye-gaze prediction task, such as webpage screenshots and the corresponding eye-gaze heatmaps as ground truth. In addition, we generate image and text locations from the webpages (in the form of masks), which can be used for training and modeling. Given our unified eye-gaze dataset, we then propose a novel deep learning based, multi-modal attentional network for eye-gaze prediction that benefits from the characteristics of the dataset. Our proposed approach leverages the spatial locations of text and images (in the form of masks), which are further fused through attention mechanisms to enhance the prediction results.

In our analysis of the impact of fine-tuning on our pre-processed dataset, and of the effects of training on the combined dataset, we found that the prediction results indeed improve when careful fine-tuning is conducted. We then evaluated the predictions of our full approach (MMIAN) with respect to the ground truth, the existing text and image locations in the webpage screenshot, and part of the Grad-CAM activations. Here we observe accurate predictions from our approach, especially on the text and image areas that users look at, with large and wide Grad-CAM activations concentrated on these locations.


This observation demonstrates the benefit of using both image and text masks as input in combination with the attention mechanism.

To assess how competitive our proposed approach is, we compare it with other saliency prediction alternatives, establishing a benchmark for the eye-gaze prediction task. In this comparison, our model achieves state-of-the-art results, with high scores across the quantitative metrics and lower false positive rates than the other approaches. Visual analysis further confirms these findings: our approach produces more accurate predictions of eye-gaze locations on relevant website areas, including where text and images are present. The results suggest the superiority of our approach in capturing user behavior. In future work, we will incorporate other user behavior characteristics (e.g. mouse trajectory) and user identity information (if accessible), such as age and location, as additional modalities to further improve prediction accuracy.

ACKNOWLEDGEMENT

This work is funded by the UDeco project of the German BMBF-KMU Innovativ (01IS20030B).

REFERENCES

Aspandi, D., Doosdal, S., Ülger, V., Gillich, L., and Staab, S. (2022a). User interaction analysis through contrasting websites experience. arXiv preprint arXiv:2201.03638.

Aspandi, D., Mallol-Ragolta, A., Schuller, B., and Binefa, X. (2020). Latent-based adversarial neural networks for facial affect estimations. In 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), pages 606–610. IEEE.

Aspandi, D., Sukno, F., Schuller, B. W., and Binefa, X. (2022b). Audio-visual gated-sequenced neural networks for affect recognition. IEEE Transactions on Affective Computing.

Bylinskii, Z., Judd, T., Oliva, A., Torralba, A., and Durand, F. (2018). What do different evaluation metrics tell us about saliency models? IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(3):740–757.

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L. (2017). Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(4):834–848.

Dai, Y., Gieseke, F., Oehmcke, S., Wu, Y., and Barnard, K. (2021). Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 3560–3569.

Goferman, S., Zelnik-Manor, L., and Tal, A. (2011). Context-aware saliency detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34(10):1915–1926.

Hou, X. and Zhang, L. (2007). Saliency detection: A spectral residual approach. In 2007 IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE.

Hou, X. and Zhang, L. (2008). Dynamic visual attention: Searching for coding length increments. Advances in Neural Information Processing Systems, 21.

Houx, D., Harel, J., and Koch, C. (2012). Image signature: highlighting sparse salient regions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34(1):194–201.

Jetley, S., Murray, N., and Vig, E. (2016). End-to-end saliency mapping via probability distribution prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5753–5761.

Jiang, M., Huang, S., Duan, J., and Zhao, Q. (2015). Salicon: Saliency in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1072–1080.

Judd, T., Ehinger, K., Durand, F., and Torralba, A. (2009). Learning to predict where humans look. In 2009 IEEE 12th International Conference on Computer Vision, pages 2106–2113. IEEE.

Kingma, D. P. and Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Kroner, A., Senden, M., Driessens, K., and Goebel, R. (2020). Contextual encoder–decoder network for visual saliency prediction. Neural Networks, 129:261–270.

Le Meur, O., Le Callet, P., and Barba, D. (2007). Predicting visual fixations on video based on low-level visual features. Vision Research, 47(19):2483–2498.

Li, J., Levine, M. D., An, X., Xu, X., and He, H. (2012). Visual saliency based on scale-space analysis in the frequency domain. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(4):996–1010.

Menges, R. (2020). Gazemining: A dataset of video and interaction recordings on dynamic web pages. Labels of visual change, segmentation of videos into stimulus shots, and discovery of visual stimuli.

Pan, S. J. and Yang, Q. (2010). A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22(10):1345–1359.

Peters, R. J., Iyer, A., Itti, L., and Koch, C. (2005). Components of bottom-up gaze allocation in natural images. Vision Research, 45(18):2397–2416.

Riche, N., Mancas, M., Gosselin, B., and Dutoit, T. (2012). Rare: A new bottom-up saliency model. In 2012 19th IEEE International Conference on Image Processing, pages 641–644. IEEE.

Rodriguez-Diaz, N., Aspandi, D., Sukno, F. M., and Binefa, X. (2021). Machine learning-based lie detector applied to a novel annotated game dataset. Future Internet, 14(1):2.


Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2017). Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 618–626.

Seo, H. J. and Milanfar, P. (2009). Static and space-time visual saliency detection by self-resemblance. Journal of Vision, 9(12):15–15.

Shen, C. and Zhao, Q. (2014). Webpage saliency. In European Conference on Computer Vision, pages 33–46. Springer.

Simonyan, K. and Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Xie, M., Feng, S., Xing, Z., Chen, J., and Chen, C. (2020). UIED: A Hybrid Tool for GUI Element Detection, page 1655–1659. Association for Computing Machinery, New York, NY, USA.

Zhang, D., Fu, H., Han, J., Borji, A., and Li, X. (2018). A review of co-saliency detection algorithms: Fundamentals, applications, and challenges. ACM Transactions on Intelligent Systems and Technology (TIST), 9(4):1–31.

Zhou, W., Liu, W., Lei, J., Luo, T., and Yu, L. (2021). Deep binocular fixation prediction using a hierarchical multimodal fusion network. IEEE Transactions on Cognitive and Developmental Systems, pages 1–1.

Zhou, X., Yao, C., Wen, H., Wang, Y., Zhou, S., He, W., and Liang, J. (2017). East: An efficient and accurate scene text detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).
